University of Palermo

Researchers at Zurich University of Applied Science developed an optimized and secured multimedia streaming protocol for participatory sensing environments, building upon SRTP. This implementation effectively applies AES-CTR encryption and HMAC-SHA-1-256 for data confidentiality and integrity, demonstrating only a ~2 FPS difference compared to unsecured streaming and achieving delays as low as 0.5-2.5 milliseconds with JPEG encoding.

07 Oct 2025

This work aims to investigate the use of a recently proposed, attention-free, scalable state-space model (SSM), Mamba, for the speech enhancement (SE) task. In particular, we employ Mamba to deploy different regression-based SE models (SEMamba) with different configurations, namely basic, advanced, causal, and non-causal. Furthermore, loss functions either based on signal-level distances or metric-oriented are considered. Experimental evidence shows that SEMamba attains a competitive PESQ of 3.55 on the VoiceBank-DEMAND dataset with the advanced, non-causal configuration. A new state-of-the-art PESQ of 3.69 is also reported when SEMamba is combined with Perceptual Contrast Stretching (PCS). Compared against Transformed-based equivalent SE solutions, a noticeable FLOPs reduction up to ~12% is observed with the advanced non-causal configurations. Finally, SEMamba can be used as a pre-processing step before automatic speech recognition (ASR), showing competitive performance against recent SE solutions.

27 May 2025

Meetings are a valuable yet challenging scenario for speech applications due

to complex acoustic conditions. This paper summarizes the outcomes of the MISP

2025 Challenge, hosted at Interspeech 2025, which focuses on multi-modal,

multi-device meeting transcription by incorporating video modality alongside

audio. The tasks include Audio-Visual Speaker Diarization (AVSD), Audio-Visual

Speech Recognition (AVSR), and Audio-Visual Diarization and Recognition (AVDR).

We present the challenge's objectives, tasks, dataset, baseline systems, and

solutions proposed by participants. The best-performing systems achieved

significant improvements over the baseline: the top AVSD model achieved a

Diarization Error Rate (DER) of 8.09%, improving by 7.43%; the top AVSR system

achieved a Character Error Rate (CER) of 9.48%, improving by 10.62%; and the

best AVDR system achieved a concatenated minimum-permutation Character Error

Rate (cpCER) of 11.56%, improving by 72.49%.

The increasing interest in developing Artificial Intelligence applications in the medical domain, suffers from the lack of high-quality data set, mainly due to privacy-related issues. In addition, the recent increase in Vision Language Models (VLM) leads to the need for multimodal medical data sets, where clinical reports and findings are attached to the corresponding medical scans. This paper illustrates the entire workflow for building the MedPix 2.0 data set. Starting with the well-known multimodal data set MedPix\textsuperscript{\textregistered}, mainly used by physicians, nurses, and healthcare students for Continuing Medical Education purposes, a semi-automatic pipeline was developed to extract visual and textual data followed by a manual curing procedure in which noisy samples were removed, thus creating a MongoDB database. Along with the data set, we developed a Graphical User Interface aimed at navigating efficiently the MongoDB instance and obtaining the raw data that can be easily used for training and/or fine-tuning VLMs. To enforce this point, in this work, we first recall DR-Minerva, a Retrieve Augmented Generation-based VLM model trained upon MedPix 2.0. DR-Minerva predicts the body part and the modality used to scan its input image. We also propose the extension of DR-Minerva with a Knowledge Graph that uses Llama 3.1 Instruct 8B, and leverages MedPix 2.0. The resulting architecture can be queried in a end-to-end manner, as a medical decision support system. MedPix 2.0 is available on GitHub.

This work investigates speech enhancement (SE) from the perspective of language models (LMs). We propose a novel method that leverages Direct Preference Optimization (DPO) to improve the perceptual quality of enhanced speech. Using UTMOS, a neural MOS prediction model, as a proxy for human ratings, our approach guides optimization toward perceptually preferred outputs. This differs from existing LM-based SE methods that focus on maximizing the likelihood of clean speech tokens, which may misalign with human perception and degrade quality despite low prediction error. Experiments on the 2020 Deep Noise Suppression Challenge test sets demonstrate that applying DPO to a pretrained LM-based SE model yields consistent improvements across various speech quality metrics, with relative gains of up to 56%. To our knowledge, this is the first application of DPO to SE and the first to incorporate proxy perceptual feedback into LM-based SE training, pointing to a promising direction for perceptually aligned SE.

Recent studies have successfully shown that large language models (LLMs) can be successfully used for generative error correction (GER) on top of the automatic speech recognition (ASR) output. Specifically, an LLM is utilized to carry out a direct mapping from the N-best hypotheses list generated by an ASR system to the predicted output transcription. However, despite its effectiveness, GER introduces extra data uncertainty since the LLM is trained without taking into account acoustic information available in the speech signal. In this work, we aim to overcome such a limitation by infusing acoustic information before generating the predicted transcription through a novel late fusion solution termed Uncertainty-Aware Dynamic Fusion (UADF). UADF is a multimodal fusion approach implemented into an auto-regressive decoding process and works in two stages: (i) It first analyzes and calibrates the token-level LLM decision, and (ii) it then dynamically assimilates the information from the acoustic modality. Experimental evidence collected from various ASR tasks shows that UADF surpasses existing fusion mechanisms in several ways. It yields significant improvements in word error rate (WER) while mitigating data uncertainty issues in LLM and addressing the poor generalization relied with sole modality during fusion. We also demonstrate that UADF seamlessly adapts to audio-visual speech recognition.

28 Sep 2025

This work presents HDA-SELD, a unified framework that combines hierarchical cross-modal distillation (HCMD) and multi-level data augmentation to address low-resource audio-visual (AV) sound event localization and detection (SELD). An audio-only SELD model acts as the teacher, transferring knowledge to an AV student model through both output responses and intermediate feature representations. To enhance learning, data augmentation is applied by mixing features randomly selected from multiple network layers and associated loss functions tailored to the SELD task. Extensive experiments on the DCASE 2023 and 2024 Challenge SELD datasets show that the proposed method significantly improves AV SELD performance, yielding relative gains of 21%-38% in the overall metric over the baselines. Notably, our proposed HDA-SELD achieves results comparable to or better than teacher models trained on much larger datasets, surpassing state-of-the-art methods on both DCASE 2023 and 2024 Challenge SELD tasks.

This work investigates two strategies for zero-shot non-intrusive speech assessment leveraging large language models. First, we explore the audio analysis capabilities of GPT-4o. Second, we propose GPT-Whisper, which uses Whisper as an audio-to-text module and evaluates the naturalness of text via targeted prompt engineering. We evaluate the assessment metrics predicted by GPT-4o and GPT-Whisper, examining their correlation with human-based quality and intelligibility assessments and the character error rate (CER) of automatic speech recognition. Experimental results show that GPT-4o alone is less effective for audio analysis, while GPT-Whisper achieves higher prediction accuracy, has moderate correlation with speech quality and intelligibility, and has higher correlation with CER. Compared to SpeechLMScore and DNSMOS, GPT-Whisper excels in intelligibility metrics, but performs slightly worse than SpeechLMScore in quality estimation. Furthermore, GPT-Whisper outperforms supervised non-intrusive models MOS-SSL and MTI-Net in Spearman's rank correlation for CER of Whisper. These findings validate GPT-Whisper's potential for zero-shot speech assessment without requiring additional training data.

Given recent advances in generative AI technology, a key question is how large language models (LLMs) can enhance acoustic modeling tasks using text decoding results from a frozen, pretrained automatic speech recognition (ASR) model. To explore new capabilities in language modeling for speech processing, we introduce the generative speech transcription error correction (GenSEC) challenge. This challenge comprises three post-ASR language modeling tasks: (i) post-ASR transcription correction, (ii) speaker tagging, and (iii) emotion recognition. These tasks aim to emulate future LLM-based agents handling voice-based interfaces while remaining accessible to a broad audience by utilizing open pretrained language models or agent-based APIs. We also discuss insights from baseline evaluations, as well as lessons learned for designing future evaluations.

22 Oct 2025

This work develops a rigorous numerical framework for solving time-dependent Optimal Control Problems (OCPs) governed by partial differential equations, with a particular focus on biomedical applications. The approach deals with adjoint-based Lagrangian methodology, which enables efficient gradient computation and systematic derivation of optimality conditions for both distributed and concentrated control formulations. The proposed framework is first verified using a time-dependent advection-diffusion problem endowed with a manufactured solution to assess accuracy and convergence properties. Subsequently, two representative applications involving drug delivery are investigated: (i) a light-triggered drug delivery system for targeted cancer therapy and (ii) a catheter-based drug delivery system in a patient-specific coronary artery. Numerical experiments not only demonstrate the accuracy of the approach, but also its flexibility and robustness in handling complex geometries, heterogeneous parameters, and realistic boundary conditions, highlighting its potential for the optimal design and control of complex biomedical systems.

21 Jan 2025

The present study attempts to investigate the demographic dividend phenomenon in China. For this goal, a socio-economic approach has been used to analyze the topic from 1995 to 2019. In contrast to common belief, the outcomes revealed that China is still benefiting from a demographic dividend. However, due to the accelerated population aging trend and the increasing share of government expenditure on the public pension system, the window opportunity has yet to close. Furthermore, concerning the absolute value of estimated coefficients, the employment rate of young people in the age bundle of [15, 24] has the highest impact on decreasing government expenditure.

In this article we present SHARP, an original approach for obtaining human

activity recognition (HAR) through the use of commercial IEEE 802.11 (Wi-Fi)

devices. SHARP grants the possibility to discern the activities of different

persons, across different time-spans and environments. To achieve this, we

devise a new technique to clean and process the channel frequency response

(CFR) phase of the Wi-Fi channel, obtaining an estimate of the Doppler shift at

a radio monitor device. The Doppler shift reveals the presence of moving

scatterers in the environment, while not being affected by

(environment-specific) static objects. SHARP is trained on data collected as a

person performs seven different activities in a single environment. It is then

tested on different setups, to assess its performance as the person, the day

and/or the environment change with respect to those considered at training

time. In the worst-case scenario, it reaches an average accuracy higher than

95%, validating the effectiveness of the extracted Doppler information, used in

conjunction with a learning algorithm based on a neural network, in recognizing

human activities in a subject and environment independent way. The collected

CFR dataset and the code are publicly available for replicability and

benchmarking purposes.

In the last years, several machine learning-based techniques have been proposed to monitor human movements from Wi-Fi channel readings. However, the development of domain-adaptive algorithms that robustly work across different environments is still an open problem, whose solution requires large datasets characterized by strong domain diversity, in terms of environments, persons and Wi-Fi hardware. To date, the few public datasets available are mostly obsolete - as obtained via Wi-Fi devices operating on 20 or 40 MHz bands - and contain little or no domain diversity, thus dramatically limiting the advancements in the design of sensing algorithms. The present contribution aims to fill this gap by providing a dataset of IEEE 802.11ac channel measurements over an 80 MHz bandwidth channel featuring notable domain diversity, through measurement campaigns that involved thirteen subjects across different environments, days, and with different hardware. Novel experimental data is provided by blocking the direct path between the transmitter and the monitor, and collecting measurements in a semi-anechoic chamber (no multi-path fading). Overall, the dataset - available on IEEE DataPort [1] - contains more than thirteen hours of channel state information readings (23.6 GB), allowing researchers to test activity/identity recognition and people counting algorithms.

01 Feb 2014

In this first paper, we briefly retrace some historical pathways of modern

physics of 20th Century. In particular, we have considered some moments of

cosmic ray physics and, above all, the early theoretical and experimental bases

which will lead to the first exact measurements of the anomalous magnetic

moment of the muon, one of the main high precision tests of QED.

Shaping the structure of light with flat optical devices has driven significant advancements in our fundamental understanding of light and light-matter interactions, and enabled a broad range of applications, from image processing and microscopy to optical communication, quantum information processing, and the manipulation of microparticles. Yet, pushing the boundaries of structured light beyond the linear optical regime remains an open challenge. Nonlinear optical interactions, such as wave mixing in nonlinear flat optics, offer a powerful platform to unlock new degrees of freedom and functionalities for generating and detecting structured light. In this study, we experimentally demonstrate the non-trivial structuring of third-harmonic light enabled by the addition of total angular momentum projection in a nonlinear, isotropic flat optics element -- a single thin film of amorphous silicon. We identify the total angular momentum projection and helicity as the most critical properties for analyzing the experimental results. The theoretical model we propose, supported by numerical simulations, offers quantitative predictions for light structuring through nonlinear wave mixing under various pumping conditions, including vectorial and non-paraxial pump light. Notably, we reveal that the shape of third-harmonic light is highly sensitive to the polarization state of the pump. Our findings demonstrate that harnessing the addition of total angular momentum projection in nonlinear wave mixing can be a powerful strategy for generating and detecting precisely controlled structured light.

We first review how we can store a run-length compressed suffix array (RLCSA)

for a text T of length n over an alphabet of size σ whose

Burrows-Wheeler Transform (BWT) consists of r runs in $O \left(

\rule{0ex}{2ex} r \log (n / r) + r \log \sigma + \sigma \right)$ bits such that

later, given character a and the suffix array interval for P, we can find

the suffix-array (SA) interval for aP in O(logra+loglogn) time,

where ra is the number of runs of copies of a in the BWT. We then show how

to modify the RLCSA such that we find the SA interval for aP in only $O

(\log r_a)$ time, without increasing its asymptotic space bound. Our key idea

is applying a result by Nishimoto and Tabei (ICALP 2021) and then replacing

rank queries on sparse bitvectors by a constant number of select queries. We

also review two-level indexing and discuss how our faster RLCSA may be useful

in improving it. Finally, we briefly discuss how two-level indexing may speed

up a recent heuristic for finding maximal exact matches of a pattern with

respect to an indexed text.

This paper investigates the dynamic interdependencies between the European

insurance sector and key financial markets-equity, bond, and banking-by

extending the Generalized Forecast Error Variance Decomposition framework to a

broad set of performance and risk indicators. Our empirical analysis, based on

a comprehensive dataset spanning January 2000 to October 2024, shows that the

insurance market is not a passive receiver of external shocks but an active

contributor in the propagation of systemic risk, particularly during periods of

financial stress such as the subprime crisis, the European sovereign debt

crisis, and the COVID-19 pandemic. Significant heterogeneity is observed across

subsectors, with diversified multiline insurers and reinsurance playing key

roles in shock transmission. Moreover, our granular company-level analysis

reveals clusters of systemically central insurance companies, underscoring the

presence of a core group that consistently exhibits high interconnectivity and

influence in risk propagation.

10 May 2023

Digital Transformation (DT) is the process of integrating digital

technologies and solutions into the activities of an organization, whether

public or private. This paper focuses on the DT of public sector organizations,

where the targets of innovative digital solutions are either the citizens or

the administrative bodies or both. This paper is a guided tour for Computer

Scientists, as the digital transformation of the public sector involves more

than just the use of technology. While technological innovation is a crucial

component of any digital transformation, it is not sufficient on its own.

Instead, DT requires a cultural, organizational, and technological shift in the

way public sector organizations operate and relate to their users, creating the

capabilities within the organization to take full advantage of any opportunity

in the fastest, best, and most innovative manner in the ways they operate and

relate to the citizens. Our tutorial is based on the results of a survey that

we performed as an analysis of scientific literature available in some digital

libraries well known to Computer Scientists. Such tutorial let us to identify

four key pillars that sustain a successful DT: (open) data, ICT technologies,

digital skills of citizens and public administrators, and agile processes for

developing new digital services and products. The tutorial discusses the

interaction of these pillars and highlights the importance of data as the first

and foremost pillar of any DT. We have developed a conceptual map in the form

of a graph model to show some basic relationships among these pillars. We

discuss the relationships among the four pillars aiming at avoiding the

potential negative bias that may arise from a rendering of DT restricted to

technology only. We also provide illustrative examples and highlight relevant

trends emerging from the current state of the art.

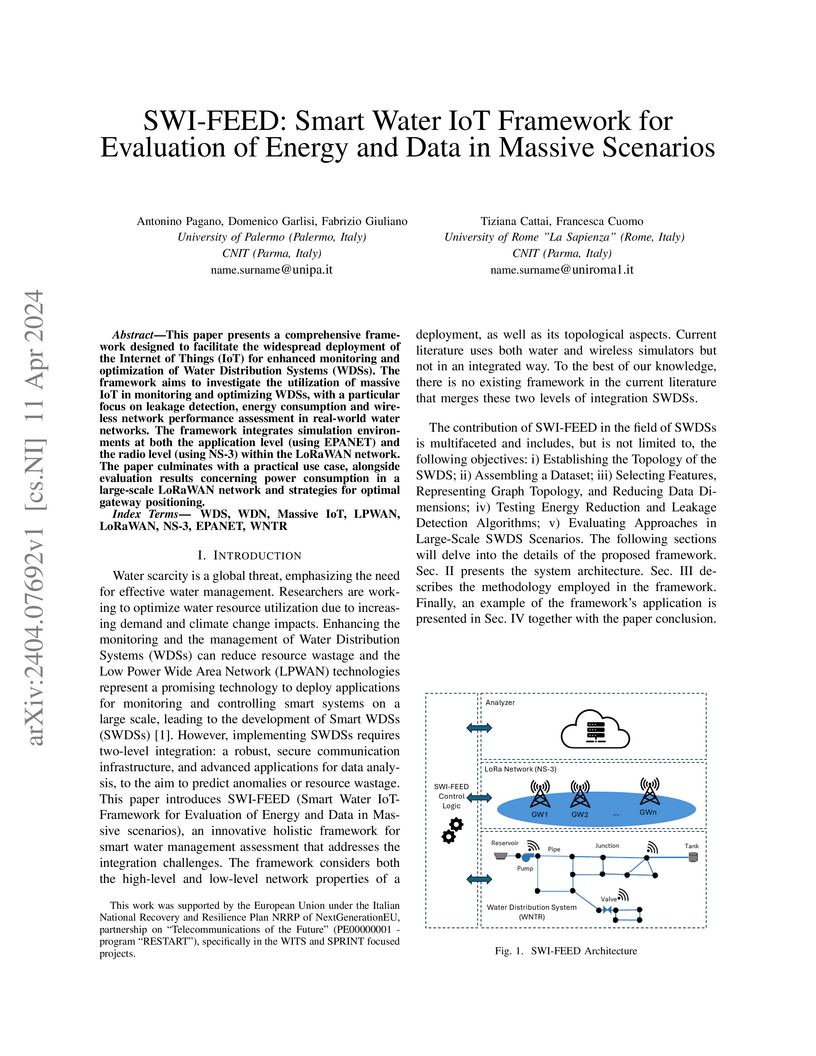

This paper presents a comprehensive framework designed to facilitate the

widespread deployment of the Internet of Things (IoT) for enhanced monitoring

and optimization of Water Distribution Systems (WDSs). The framework aims to

investigate the utilization of massive IoT in monitoring and optimizing WDSs,

with a particular focus on leakage detection, energy consumption and wireless

network performance assessment in real-world water networks. The framework

integrates simulation environments at both the application level (using EPANET)

and the radio level (using NS-3) within the LoRaWAN network. The paper

culminates with a practical use case, alongside evaluation results concerning

power consumption in a large-scale LoRaWAN network and strategies for optimal

gateway positioning.

05 Jan 2025

Benford's law, or the law of the first significant digit, has been subjected to numerous studies due to its unique applications in financial fields, especially accounting and auditing. However, studies that addressed the law's establishment in the stock markets generally concluded that stock prices do not comply with the underlying distribution. The present research, emphasizing data randomness as the underlying assumption of Benford's law, has conducted an empirical investigation of the Warsaw Stock Exchange. The outcomes demonstrated that since stock prices are not distributed randomly, the law cannot be held in the stock market. Besides, the Chi-square goodness-of-fit test also supported the obtained results. Moreover, it is discussed that the lack of randomness originated from market inefficiency. In other words, violating the efficient market hypothesis has caused the time series non-randomness and the failure to establish Benford's law.

There are no more papers matching your filters at the moment.