Vienna University of Economics and Business

15 Sep 2025

We study constrained bi-matrix games, with a particular focus on low-rank games. Our main contribution is a framework that reduces low-rank games to smaller, equivalent constrained games, along with a necessary and sufficient condition for when such reductions exist. Building on this framework, we present three approaches for computing the set of extremal Nash equilibria, based on vertex enumeration, polyhedral calculus, and vector linear programming. Numerical case studies demonstrate the effectiveness of the proposed reduction and solution methods.

We present a novel deep generative semi-supervised framework for credit card fraud detection, formulated as a time series classification task. As financial transaction data streams grow in scale and complexity, traditional methods often require large labeled datasets and struggle with time series of irregular sampling frequencies and varying sequence lengths. To address these challenges, we extend conditional Generative Adversarial Networks (GANs) for targeted data augmentation, integrate Bayesian inference to obtain predictive distributions and quantify uncertainty, and leverage log-signatures for robust feature encoding of transaction histories. We introduce a novel Wasserstein distance-based loss to align generated and real unlabeled samples while simultaneously maximizing classification accuracy on labeled data. Our approach is evaluated on the BankSim dataset, a widely used simulator for credit card transaction data, under varying proportions of labeled samples, demonstrating consistent improvements over benchmarks in both global statistical and domain-specific metrics. These findings highlight the effectiveness of GAN-driven semi-supervised learning with log-signatures for irregularly sampled time series and emphasize the importance of uncertainty-aware predictions.

The complexity of black-box algorithms can lead to various challenges, including the introduction of biases. These biases present immediate risks in the algorithms' application. It was, for instance, shown that neural networks can deduce racial information solely from a patient's X-ray scan, a task beyond the capability of medical experts. If this fact is not known to the medical expert, automatic decision-making based on this algorithm could lead to prescribing a treatment (purely) based on racial information. While current methodologies allow for the "orthogonalization" or "normalization" of neural networks with respect to such information, existing approaches are grounded in linear models. Our paper advances the discourse by introducing corrections for non-linearities such as ReLU activations. Our approach also encompasses scalar and tensor-valued predictions, facilitating its integration into neural network architectures. Through extensive experiments, we validate our method's effectiveness in safeguarding sensitive data in generalized linear models, normalizing convolutional neural networks for metadata, and rectifying pre-existing embeddings for undesired attributes.

Neural network representations of simple models, such as linear regression, are increasingly being studied to better understand the underlying principles of deep learning algorithms. However, neural representations of distributional regression models, such as the Cox model, have received little attention so far. We close this gap by proposing a framework for distributional regression using inverse flow transformations (DRIFT), which includes neural representations of the aforementioned models. We empirically demonstrate that the neural representations of models in DRIFT can serve as a substitute for their classical statistical counterparts in several applications involving continuous, ordered, time-series, and survival outcomes. We confirm that models in DRIFT empirically match the performance of several statistical methods in terms of estimation of partial effects, prediction, and aleatoric uncertainty quantification. DRIFT covers both interpretable statistical models and flexible neural networks, opening up new avenues in both statistical modeling and deep learning.

02 Apr 2024

Macroeconomic data is characterized by a limited number of observations (small T), many time series (big K), and temporal dependence. Neural networks, by contrast, are designed for datasets with millions of observations and covariates. In this paper, we develop Bayesian neural networks (BNNs) that are well-suited for handling datasets commonly used for macroeconomic analysis in policy institutions. Our approach avoids extensive specification searches through a novel mixture specification for the activation function that appropriately selects the form of nonlinearities. Shrinkage priors are used to prune the network and force irrelevant neurons to zero. To cope with heteroskedasticity, the BNN is augmented with a stochastic volatility model for the error term. We illustrate how the model can be used in a policy institution in two ways. First, we show that our different BNNs produce precise density forecasts, typically better than those from other machine learning methods. Second, we showcase how our model can be used to recover nonlinearities in the reaction of macroeconomic aggregates to financial shocks.

Ontology engineering (OE) in large projects poses a number of challenges arising from the heterogeneous backgrounds of the various stakeholders, domain experts, and their complex interactions with ontology designers. This multi-party interaction often creates systematic ambiguities and biases from the elicitation of ontology requirements, which directly affect design and evaluation and may jeopardise the target reuse. Meanwhile, current OE methodologies strongly rely on manual activities (e.g., interviews, discussion pages). After collecting evidence on the most crucial OE activities, we introduce OntoChat, a framework for conversational ontology engineering that supports requirement elicitation, analysis, and testing. By interacting with a conversational agent, users can steer the creation of user stories and the extraction of competency questions, while receiving computational support to analyse the overall requirements and test early versions of the resulting ontologies. We evaluate OntoChat by replicating the engineering of the Music Meta Ontology, and collecting preliminary metrics on the effectiveness of each component from users. We release all code at this https URL.

Our society increasingly benefits from Artificial Intelligence (AI). Unfortunately, more and more evidence shows that AI is also used for offensive purposes. Prior works have revealed various examples of use cases in which the deployment of AI can lead to violation of security and privacy objectives. No extant work, however, has been able to draw a holistic picture of the offensive potential of AI. In this SoK paper we seek to lay the ground for a systematic analysis of the heterogeneous capabilities of offensive AI. In particular, we (i) account for AI risks to both humans and systems while (ii) consolidating and distilling knowledge from academic literature, expert opinions, industrial venues, as well as laypeople, all of which are valuable sources of information on offensive AI.

To enable alignment of such diverse sources of knowledge, we devise a common set of criteria reflecting essential technological factors related to offensive AI. With the help of such criteria, we systematically analyze: 95 research papers; 38 InfoSec briefings (from, e.g., BlackHat); the responses of a user study (N=549) entailing individuals with diverse backgrounds and expertise; and the opinion of 12 experts. Our contributions not only reveal concerning ways (some of which were overlooked by prior work) in which AI can be offensively used today, but also represent a foothold to address this threat in the years to come.

Active infrared thermography (AIRT) is a widely adopted non-destructive testing (NDT) technique for detecting subsurface anomalies in industrial components. Due to the high dimensionality of AIRT data, current approaches employ non-linear autoencoders (AEs) for dimensionality reduction. However, the latent space learned by AIRT AEs lacks structure, limiting their effectiveness in downstream defect characterization tasks. To address this limitation, this paper proposes a principal component analysis guided (PCA-guided) autoencoding framework for structured dimensionality reduction that captures intricate, non-linear features in thermographic signals while enforcing a structured latent space. A novel loss function, the PCA distillation loss, is introduced to guide AIRT AEs to align the latent representation with structured PCA components while still capturing these non-linear patterns. To evaluate the utility of the learned, structured latent space, we propose a neural network-based evaluation metric that assesses its suitability for defect characterization. Experimental results show that the proposed PCA-guided AE outperforms state-of-the-art dimensionality reduction methods on PVC, CFRP, and PLA samples in terms of contrast, signal-to-noise ratio (SNR), and neural network-based metrics.

22 Jan 2025

Many existing shrinkage approaches for time-varying parameter (TVP) models assume constant innovation variances across time points, inducing sparsity by shrinking these variances toward zero. However, this assumption falls short when states exhibit large jumps or structural changes, as often seen in empirical time series analysis. To address this, we propose the dynamic triple gamma prior, a stochastic process that induces time-dependent shrinkage by modeling dependence among innovations while retaining a well-known triple gamma marginal distribution. This framework encompasses various special and limiting cases, including the horseshoe shrinkage prior, making it highly flexible. We derive key properties of the dynamic triple gamma that highlight its dynamic shrinkage behavior and develop an efficient Markov chain Monte Carlo algorithm for posterior sampling. The proposed approach is evaluated through sparse covariance modeling and forecasting of the returns of the EURO STOXX 50 index, demonstrating favorable forecasting performance.

In the digital age, data frequently crosses organizational and jurisdictional boundaries, making effective governance essential. Usage control policies have emerged as a key paradigm for regulating data usage, safeguarding privacy, protecting intellectual property, and ensuring compliance with regulations. A central mechanism for usage control is the handling of obligations, which arise as a side effect of using and sharing data. Effective monitoring of obligations requires capturing usage traces and accounting for temporal aspects such as start times and deadlines, as obligations may evolve over time into different states, such as fulfilled, violated, or expired. While several solutions have been proposed for obligation monitoring, they often lack formal semantics or provide limited support for reasoning over obligation states. To address these limitations, we extend GUCON, a policy framework grounded in the formal semantics of SPARQL graph patterns, to explicitly model the temporal aspects of an obligation. This extension enables the expression of temporal obligations and supports continuous monitoring of their evolving states based on usage traces stored in temporal knowledge graphs. We demonstrate how this extended model can be represented using RDF-star and SPARQL-star and propose an Obligation State Manager that monitors obligation states and assesses their compliance with respect to usage traces. Finally, we evaluate both the extended model and its prototype implementation.

We propose a novel variational autoencoder (VAE) architecture that employs a spherical Cauchy (spCauchy) latent distribution. Unlike traditional Gaussian latent spaces or the widely used von Mises-Fisher (vMF) distribution, spCauchy provides a more natural hyperspherical representation of latent variables, better capturing directional data while maintaining flexibility. Its heavy-tailed nature prevents over-regularization, ensuring efficient latent space utilization while offering a more expressive representation. Additionally, spCauchy circumvents the numerical instabilities inherent to vMF, which arise from computing normalization constants involving Bessel functions. Instead, it enables a fully differentiable and efficient reparameterization trick via Möbius transformations, allowing for stable and scalable training. The KL divergence can be computed through a rapidly converging power series, eliminating concerns of underflow or overflow associated with evaluation of ratios of hypergeometric functions. These properties make spCauchy a compelling alternative for VAEs, offering both theoretical advantages and practical efficiency in high-dimensional generative modeling.

Conversational interfaces are likely to become a more efficient, intuitive, and engaging way for human-computer interaction than today's text or touch-based interfaces. Current research efforts concerning conversational interfaces focus primarily on question answering functionality, thereby neglecting support for search activities beyond targeted information lookup. Users engage in exploratory search when they are unfamiliar with the domain of their goal, unsure about the ways to achieve their goals, or unsure about their goals in the first place. Exploratory search is often supported by approaches from information visualization. However, such approaches cannot be directly translated to the setting of conversational search.

In this paper we investigate the affordances of interactive storytelling as a tool to enable exploratory search within the framework of a conversational interface. Interactive storytelling provides a way to navigate a document collection at the pace and in the order a user prefers. In our vision, interactive storytelling is to be coupled with a dialogue-based system that provides verbal explanations and responsive design. We discuss challenges and sketch the research agenda required to bring this vision to life.

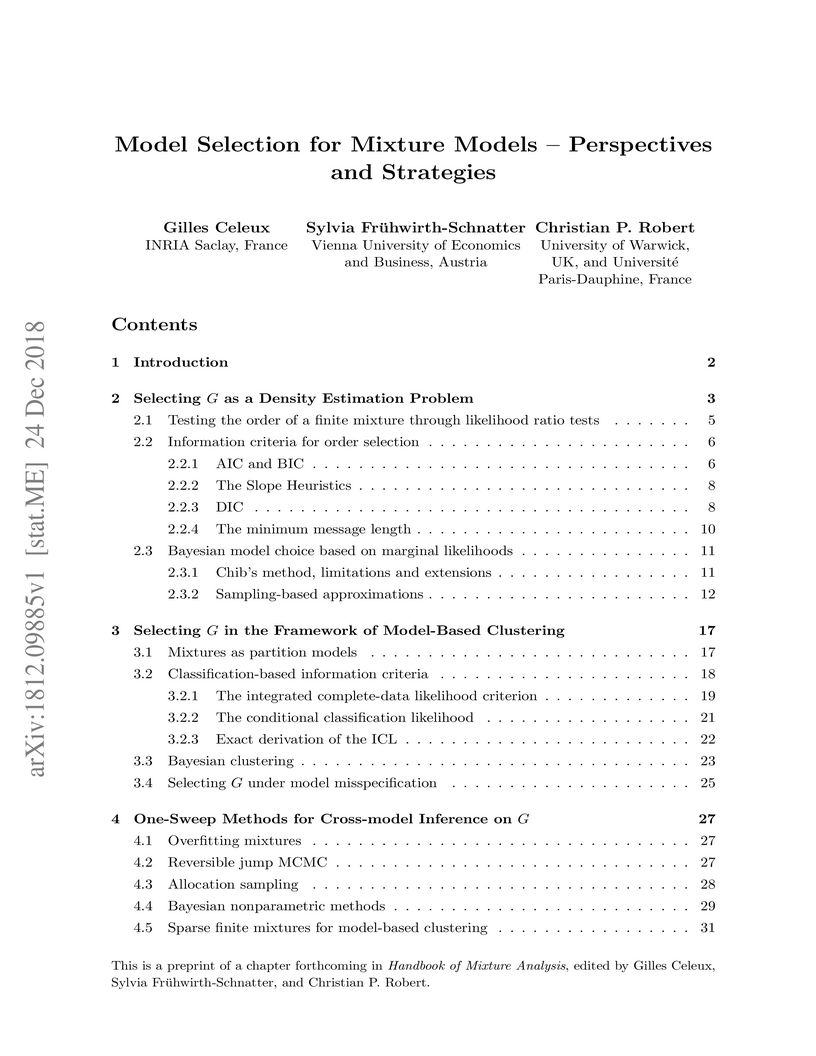

24 Dec 2018

Determining the number G of components in a finite mixture distribution is an important and difficult inference issue. This is a most important question, because statistical inference about the resulting model is highly sensitive to the value of G. Selecting an erroneous value of G may produce a poor density estimate. This is also a most difficult question from a theoretical perspective as it relates to unidentifiability issues of the mixture model. This is further a most relevant question from a practical viewpoint since the meaning of the number of components G is strongly related to the modelling purpose of a mixture distribution. We distinguish in this chapter between selecting G as a density estimation problem in Section 2 and selecting G in a model-based clustering framework in Section 3. Both sections discuss frequentist as well as Bayesian approaches. We present here some of the Bayesian solutions to the different interpretations of picking the "right" number of components in a mixture, before concluding on the ill-posed nature of the question.

Leibniz University Hannover, Ghent University, KU Leuven, TU Dresden, King’s College London, Politecnico di Milano, University of Liverpool, University of Bologna, Inria, Duke University, TU Berlin, TU Wien, Universidade do Porto, Maastricht University, Universidad de Chile, The University of Western Australia, National Research Council, Vienna University of Economics and Business, Linköping University, University of Stavanger, University of Mannheim, INESC TEC, Vrije Universiteit Amsterdam, Università degli Studi di Cagliari, University of Bari, Robert Bosch GmbH, Nantes University, University of Montpellier, Université de Caen Normandie, FU Berlin, Politecnico di Bari, Free University of Bozen-Bolzano, Ragn-Sells AB, LASIGE, Deutsches Forschungszentrum für Künstliche Intelligenz GmbH (DFKI), University of Venice, Technische Informationsbibliothek, ISTC-CNR, Cefriel, FIZ Karlsruhe, University of Bremen, German Federal Institute for Risk Assessment, Karlsruhe Institute of Technology, University Innsbruck, Open University, University of Mauritius, Université Grenoble Alpes, Università degli Studi di Genova, University of Illinois Urbana-Champaign, Università degli Studi di Padova, RWTH Aachen University, Università degli Studi di Milano, Université de Namur, Norwegian University of Science and Technology

The International Semantic Web Research School (ISWS) is a week-long intensive program designed to immerse participants in the field. This document reports a collaborative effort performed by ten teams of students, each guided by a senior researcher as their mentor, attending ISWS 2023. Each team provided a different perspective on the topic of creative AI, substantiated by a set of research questions as the main subject of their investigation. The 2023 edition of ISWS focused on the intersection of Semantic Web technologies and Creative AI. A key area of focus was the potential of LLMs as support tools for knowledge engineering. Participants also delved into the multifaceted applications of LLMs, including legal aspects of creative content production, humans in the loop, decentralised approaches to multimodal generative AI models, nanopublications and AI for personal scientific knowledge graphs, commonsense knowledge in automatic story and narrative completion, generative AI for art critique, prompt engineering, automatic music composition, commonsense prototyping and conceptual blending, and elicitation of tacit knowledge. As Large Language Models and semantic technologies continue to evolve, new exciting prospects are emerging: a future where the boundaries between creative expression and factual knowledge become increasingly permeable, leading to a world of knowledge that is both informative and inspiring.

31 Jul 2019

The financial crisis showed the importance of measuring, allocating and regulating systemic risk. Recently, systemic risk measures that can be decomposed into an aggregation function and a scalar measure of risk have received a lot of attention. In this framework, capital allocations are added after aggregation and can represent bailout costs. More recently, a framework has been introduced in which institutions are supplied with capital allocations before aggregation. This yields an interpretation that is particularly useful for regulatory purposes. In each framework, the set of all feasible capital allocations leads to a multivariate risk measure. In this paper, we present dual representations for scalar systemic risk measures as well as for the corresponding multivariate risk measures concerning capital allocations. Our results cover both frameworks: aggregating after allocating and allocating after aggregating. As examples, we consider the aggregation mechanisms of the Eisenberg-Noe model as well as those of the resource allocation and network flow models.

The digitization of healthcare presents numerous challenges, including the complexity of biological systems, vast data generation, and the need for personalized treatment plans. Traditional computational methods often fall short, leading to delayed and sometimes ineffective diagnoses and treatments. Quantum Computing (QC) and Quantum Machine Learning (QML) offer transformative advancements with the potential to revolutionize medicine. This paper summarizes areas where QC promises unprecedented computational power, enabling faster, more accurate diagnostics, personalized treatments, and enhanced drug discovery processes. However, integrating quantum technologies into precision medicine also presents challenges, including errors in algorithms and high costs. We show that mathematically based techniques for specifying, developing, and verifying software (formal methods) can enhance the reliability and correctness of QC. By providing a rigorous mathematical framework, formal methods help to specify, develop, and verify systems with high precision. In genomic data analysis, formal specification languages can (1) precisely define the behavior and properties of quantum algorithms designed to identify genetic markers associated with diseases. Model checking tools can systematically explore all possible states of the algorithm to (2) ensure it behaves correctly under all conditions, while theorem proving techniques provide (3) mathematical proof that the algorithm meets its specified properties, ensuring accuracy and reliability. Additionally, formal optimization techniques can (4) enhance the efficiency and performance of quantum algorithms by reducing resource usage, such as the number of qubits and gate operations. Therefore, we posit that formal methods can significantly contribute to enabling QC to realize its full potential as a game changer in precision medicine.

15 May 2025

Accelerating climate-tech innovation in the formative stage of the technology life cycle is crucial to meeting climate policy goals. During this period, competing technologies are often undergoing major technical improvements within a nascent value chain. We analyze this formative stage for 14 climate-tech sectors using a dataset of 4,172 North American firms receiving 12,929 early-stage private investments between 2006 and 2021. Investments in these firms reveal that commercialization occurs in five distinct product clusters across the value chain. Only 15% of firms develop end products (i.e., downstream products bought by consumers), while 59% support these end products through components, manufacturing processes, or optimization products, and 26% develop business services. Detailed analysis of the temporal evolution of investments reveals the driving forces behind the technologies that commercialize, such as innovation spillovers, coalescence around a dominant design, and flexible regulatory frameworks. We identify three patterns of innovation: emerging innovation (e.g., agriculture), characterized by recent growth in private investments across most product clusters and spillover from other sectors; ongoing innovation (e.g., energy storage), characterized by multiple waves of investments in evolving products; and maturing innovation (e.g., energy efficiency), characterized by a dominant end product with a significant share of investments in optimization and services. Understanding the development of nascent value chains can inform policy design to best support scaling of climate-tech by identifying underfunded elements in the value chain and supporting development of a full value chain rather than only end products.

Large language models are deep learning models with a large number of parameters. The models have made noticeable progress on a large number of tasks, which allows them to serve as valuable and versatile tools for a diverse range of applications. Their capabilities also offer opportunities for business process management; however, these opportunities have not yet been systematically investigated. In this paper, we address this research problem by foregrounding various management tasks of the BPM lifecycle. We investigate six research directions highlighting problems that need to be addressed when using large language models, including usage guidelines for practitioners.

16 Feb 2022

Event sequence data is increasingly available. Many business operations are supported by information systems that record transactions, events, state changes, message exchanges, and so forth. This observation is equally valid for various industries, including production, logistics, healthcare, financial services, and education, to name but a few. The variety of application areas explains why techniques for event sequence data analysis have been developed rather independently in different fields of computer science. Most prominent are contributions from information visualization and from process mining. So far, the contributions from these two fields have neither been compared nor have they been mapped to an integrated framework. In this paper, we develop the Event Sequence Visualization framework (ESeVis) that gives due credit to the traditions of both fields. Our mapping study provides an integrated perspective on both fields and identifies potential for synergies for future research.

05 Feb 2025

We develop a framework to holistically test for and monitor the impact of different types of events affecting a country's housing market, yet originating from housing-external sources. We classify events along three dimensions leading to testable hypotheses: prices versus quantities, supply versus demand, and immediate versus gradually evolving. These dimensions translate into guidance about which data type, statistical measure and testing strategy should be used. To perform such tests, suitable statistical models are needed, which we implement as a hierarchical hedonic price model and a complementary count model. These models are amended by regime and contextual variables as suggested by our classification strategy. We apply this framework to the Austrian real estate market together with three disruptive events triggered by, respectively, the COVID-19 pandemic, a policy tightening mortgage lending standards, and the cost-of-living crisis that came along with increased financing costs. The tests yield the expected results and thereby resolve some housing market puzzles. Deviating from the prior classification exercise would have left some developments undetected. Further, adopting our framework consistently when performing empirical research on residential real estate would lead to more comparable research results and would thereby allow researchers to draw meta-conclusions from the bulk of studies available across time and space.
