Wilfrid Laurier University

A comprehensive survey analyzes Reinforcement Learning algorithms from foundational methods to advanced Deep Reinforcement Learning techniques, providing detailed insights into their strengths and weaknesses as observed in specific research papers across diverse application domains. It aims to bridge the gap between theoretical understanding and practical deployment by offering guidance on algorithm selection and implementation challenges.

Majid Ghasemi and Dariush Ebrahimi from Wilfrid Laurier University provide a structured and accessible overview of Reinforcement Learning fundamentals, categorizing core methodologies and essential algorithms while curating resources for beginners to navigate the field's complexities.

06 Aug 2025

TURA (Tool-Augmented Unified Retrieval Agent), developed by Baidu Inc., is an agentic framework that unifies static web content retrieval with dynamic tool-use for AI search. It overcomes limitations of traditional RAG systems to provide robust, real-time answers for complex queries, demonstrating an 8.9% increase in session success rate during online A/B testing.

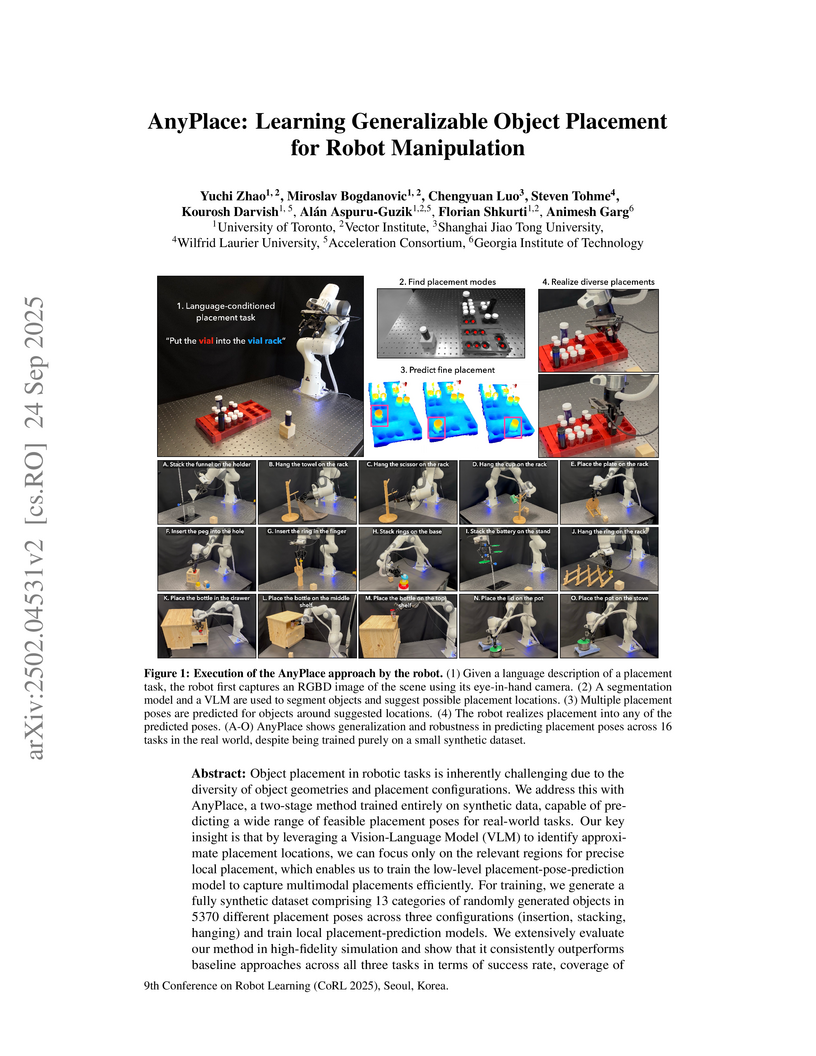

Object placement in robotic tasks is inherently challenging due to the diversity of object geometries and placement configurations. To address this, we propose AnyPlace, a two-stage method trained entirely on synthetic data, capable of predicting a wide range of feasible placement poses for real-world tasks. Our key insight is that by leveraging a Vision-Language Model (VLM) to identify rough placement locations, we focus only on the relevant regions for local placement, which enables us to train the low-level placement-pose-prediction model to capture diverse placements efficiently. For training, we generate a fully synthetic dataset of randomly generated objects in different placement configurations (insertion, stacking, hanging) and train local placement-prediction models. We conduct extensive evaluations in simulation, demonstrating that our method outperforms baselines in terms of success rate, coverage of possible placement modes, and precision. In real-world experiments, we show how our approach directly transfers models trained purely on synthetic data to the real world, where it successfully performs placements in scenarios where other models struggle -- such as with varying object geometries, diverse placement modes, and achieving high precision for fine placement. More at: this https URL.

3D Gaussian Splatting (3DGS) struggles in few-shot scenarios, where its standard adaptive density control (ADC) can lead to overfitting and bloated reconstructions. While state-of-the-art methods like FSGS improve quality, they often do so by significantly increasing the primitive count. This paper presents a framework that revises the core 3DGS optimization to prioritize efficiency. We replace the standard positional gradient heuristic with a novel densification trigger that uses the opacity gradient as a lightweight proxy for rendering error. We find this aggressive densification is only effective when paired with a more conservative pruning schedule, which prevents destructive optimization cycles. Combined with a standard depth-correlation loss for geometric guidance, our framework demonstrates a fundamental improvement in efficiency. On the 3-view LLFF dataset, our model is over 40% more compact (32k vs. 57k primitives) than FSGS, and on the Mip-NeRF 360 dataset, it achieves a reduction of approximately 70%. This dramatic gain in compactness is achieved with a modest trade-off in reconstruction metrics, establishing a new state-of-the-art on the quality-vs-efficiency Pareto frontier for few-shot view synthesis.

Researchers from Beijing University of Posts and Telecommunications and Baidu Inc. provide a comprehensive survey on Graph Foundation Models (GFMs) for recommendation systems. The paper proposes a tripartite taxonomy to categorize GFM approaches based on how Graph Neural Networks (GNNs) and Large Language Models (LLMs) synergize, and identifies key challenges and future directions in this emerging field.

Effective and interpretable classification of medical images is a challenge in computer-aided diagnosis, especially in resource-limited clinical settings. This study introduces spline-based Kolmogorov-Arnold Networks (KANs) for accurate medical image classification with limited, diverse datasets. The models include SBTAYLOR-KAN, integrating B-splines with Taylor series; SBRBF-KAN, combining B-splines with Radial Basis Functions; and SBWAVELET-KAN, embedding B-splines in Morlet wavelet transforms. These approaches leverage spline-based function approximation to capture both local and global nonlinearities. The models were evaluated on brain MRI, chest X-rays, tuberculosis X-rays, and skin lesion images without preprocessing, demonstrating the ability to learn directly from raw data. Extensive experiments, including cross-dataset validation and data reduction analysis, showed strong generalization and stability. SBTAYLOR-KAN achieved up to 98.93% accuracy, with a balanced F1-score, maintaining over 86% accuracy using only 30% of the training data across three datasets. Despite class imbalance in the skin cancer dataset, experiments on both imbalanced and balanced versions showed SBTAYLOR-KAN outperforming other models, achieving 68.22% accuracy. Unlike traditional CNNs, which require millions of parameters (e.g., ResNet50 with 24.18M), SBTAYLOR-KAN achieves comparable performance with just 2,872 trainable parameters, making it more suitable for constrained medical environments. Gradient-weighted Class Activation Mapping (Grad-CAM) was used for interpretability, highlighting relevant regions in medical images. This framework provides a lightweight, interpretable, and generalizable solution for medical image classification, addressing the challenges of limited datasets and data-scarce scenarios in clinical AI applications.

Collaborative Filtering (CF) has emerged as fundamental paradigms for parameterizing users and items into latent representation space, with their correlative patterns from interaction data. Among various CF techniques, the development of GNN-based recommender systems, e.g., PinSage and LightGCN, has offered the state-of-the-art performance. However, two key challenges have not been well explored in existing solutions: i) The over-smoothing effect with deeper graph-based CF architecture, may cause the indistinguishable user representations and degradation of recommendation results. ii) The supervision signals (i.e., user-item interactions) are usually scarce and skewed distributed in reality, which limits the representation power of CF paradigms. To tackle these challenges, we propose a new self-supervised recommendation framework Hypergraph Contrastive Collaborative Filtering (HCCF) to jointly capture local and global collaborative relations with a hypergraph-enhanced cross-view contrastive learning architecture. In particular, the designed hypergraph structure learning enhances the discrimination ability of GNN-based CF paradigm, so as to comprehensively capture the complex high-order dependencies among users. Additionally, our HCCF model effectively integrates the hypergraph structure encoding with self-supervised learning to reinforce the representation quality of recommender systems, based on the hypergraph-enhanced self-discrimination. Extensive experiments on three benchmark datasets demonstrate the superiority of our model over various state-of-the-art recommendation methods, and the robustness against sparse user interaction data. Our model implementation codes are available at this https URL.

Query reformulation is a well-known problem in Information Retrieval (IR) aimed at enhancing single search successful completion rate by automatically modifying user's input query. Recent methods leverage Large Language Models (LLMs) to improve query reformulation, but often generate limited and redundant expansions, potentially constraining their effectiveness in capturing diverse intents. In this paper, we propose GenCRF: a Generative Clustering and Reformulation Framework to capture diverse intentions adaptively based on multiple differentiated, well-generated queries in the retrieval phase for the first time. GenCRF leverages LLMs to generate variable queries from the initial query using customized prompts, then clusters them into groups to distinctly represent diverse intents. Furthermore, the framework explores to combine diverse intents query with innovative weighted aggregation strategies to optimize retrieval performance and crucially integrates a novel Query Evaluation Rewarding Model (QERM) to refine the process through feedback loops. Empirical experiments on the BEIR benchmark demonstrate that GenCRF achieves state-of-the-art performance, surpassing previous query reformulation SOTAs by up to 12% on nDCG@10. These techniques can be adapted to various LLMs, significantly boosting retriever performance and advancing the field of Information Retrieval.

Recent studies show that graph neural networks (GNNs) are prevalent to model high-order relationships for collaborative filtering (CF). Towards this research line, graph contrastive learning (GCL) has exhibited powerful performance in addressing the supervision label shortage issue by learning augmented user and item representations. While many of them show their effectiveness, two key questions still remain unexplored: i) Most existing GCL-based CF models are still limited by ignoring the fact that user-item interaction behaviors are often driven by diverse latent intent factors (e.g., shopping for family party, preferred color or brand of products); ii) Their introduced non-adaptive augmentation techniques are vulnerable to noisy information, which raises concerns about the model's robustness and the risk of incorporating misleading self-supervised signals. In light of these limitations, we propose a Disentangled Contrastive Collaborative Filtering framework (DCCF) to realize intent disentanglement with self-supervised augmentation in an adaptive fashion. With the learned disentangled representations with global context, our DCCF is able to not only distill finer-grained latent factors from the entangled self-supervision signals but also alleviate the augmentation-induced noise. Finally, the cross-view contrastive learning task is introduced to enable adaptive augmentation with our parameterized interaction mask generator. Experiments on various public datasets demonstrate the superiority of our method compared to existing solutions. Our model implementation is released at the link this https URL.

Researchers from Ontario Tech University, Wilfrid Laurier University, Brock University, and Michigan State University developed a novel Pareto-optimal ranking method for Multi-objective Optimization algorithms. This method leverages the concept of Pareto optimality to comprehensively compare algorithms across multiple performance indicators, providing a more robust evaluation than traditional single-metric approaches.

This study demonstrates that Large Language Models (LLMs) can transcribe historical handwritten documents with significantly higher accuracy than specialized Handwritten Text Recognition (HTR) software, while being faster and more cost-effective. We introduce an open-source software tool called Transcription Pearl that leverages these capabilities to automatically transcribe and correct batches of handwritten documents using commercially available multimodal LLMs from OpenAI, Anthropic, and Google. In tests on a diverse corpus of 18th/19th century English language handwritten documents, LLMs achieved Character Error Rates (CER) of 5.7 to 7% and Word Error Rates (WER) of 8.9 to 15.9%, improvements of 14% and 32% respectively over specialized state-of-the-art HTR software like Transkribus. Most significantly, when LLMs were then used to correct those transcriptions as well as texts generated by conventional HTR software, they achieved near-human levels of accuracy, that is CERs as low as 1.8% and WERs of 3.5%. The LLMs also completed these tasks 50 times faster and at approximately 1/50th the cost of proprietary HTR programs. These results demonstrate that when LLMs are incorporated into software tools like Transcription Pearl, they provide an accessible, fast, and highly accurate method for mass transcription of historical handwritten documents, significantly streamlining the digitization process.

A long road trip is fun for drivers. However, a long drive for days can be tedious for a driver to accommodate stringent deadlines to reach distant destinations. Such a scenario forces drivers to drive extra miles, utilizing extra hours daily without sufficient rest and breaks. Once a driver undergoes such a scenario, it occasionally triggers drowsiness during driving. Drowsiness in driving can be life-threatening to any individual and can affect other drivers' safety; therefore, a real-time detection system is needed. To identify fatigued facial characteristics in drivers and trigger the alarm immediately, this research develops a real-time driver drowsiness detection system utilizing deep convolutional neural networks (DCNNs) and this http URL proposed and implemented model takes real- time facial images of a driver using a live camera and utilizes a Python-based library named OpenCV to examine the facial images for facial landmarks like sufficient eye openings and yawn-like mouth movements. The DCNNs framework then gathers the data and utilizes a per-trained model to detect the drowsiness of a driver using facial landmarks. If the driver is identified as drowsy, the system issues a continuous alert in real time, embedded in the Smart Car this http URL potentially saving innocent lives on the roadways, the proposed technique offers a non-invasive, inexpensive, and cost-effective way to identify drowsiness. Our proposed and implemented DCNNs embedded drowsiness detection model successfully react with NTHU-DDD dataset and Yawn-Eye-Dataset with drowsiness detection classification accuracy of 99.6% and 97% respectively.

In this article we demonstrate how to solve a variety of problems and puzzles

using the built-in SAT solver of the computer algebra system Maple. Once the

problems have been encoded into Boolean logic, solutions can be found (or shown

to not exist) automatically, without the need to implement any search

algorithm. In particular, we describe how to solve the n-queens problem, how

to generate and solve Sudoku puzzles, how to solve logic puzzles like the

Einstein riddle, how to solve the 15-puzzle, how to solve the maximum clique

problem, and finding Graeco-Latin squares.

10 Sep 2019

In this paper, we study the geometries given by commuting pairs of

generalized endomorphisms A∈End(T⊕T∗) with the

property that their product defines a generalized metric. There are four types

of such commuting pairs: generalized K\"ahler (GK), generalized para-K\"ahler

(GpK), generalized chiral and generalized anti-K\"ahler geometries. We show

that GpK geometry is equivalent to a pair of para-Hermitian structures and we

derive the integrability conditions in terms of these. From the physics point

of view, this is the geometry of 2D (2,2) twisted supersymmetric sigma

models. The generalized chiral structures are equivalent to a pair of tangent

bundle product structures that also appear in physics applications of 2D

sigma models. We show that the case when the two product structures

anti-commute corresponds to Born geometry. Lastly, the generalized

anti-K\"ahler structures are equivalent to a pair of anti-Hermitian structures

(sometimes called Hermitian with Norden metric). The generalized chiral and

anti-K\"ahler geometries do not have isotropic eigenbundles and therefore do

not admit the usual description of integrability in terms of the Dorfman

bracket. We therefore use an alternative definition of integrability in terms

of the generalized Bismut connection of the corresponding metric, which for GK

and GpK commuting pairs recovers the usual integrability conditions and can

also be used to define the integrability of generalized chiral and

anti-K\"ahler structures. In addition, it allows for a weakening of the

integrability condition, which has various applications in physics.

Alzheimer's disease (AD) is a complex neurodegenerative disorder

characterized by the accumulation of amyloid-beta (Aβ) and phosphorylated

tau (p-tau) proteins, leading to cognitive decline measured by the Alzheimer's

Disease Assessment Scale (ADAS) score. In this study, we develop and analyze a

system of ordinary differential equation models to describe the interactions

between Aβ, p-tau, and ADAS score, providing a mechanistic understanding

of disease progression. To ensure accurate model calibration, we employ

Bayesian inference and Physics-Informed Neural Networks (PINNs) for parameter

estimation based on Alzheimer's Disease Neuroimaging Initiative data. The

data-driven Bayesian approach enables uncertainty quantification, improving

confidence in model predictions, while the PINN framework leverages neural

networks to capture complex dynamics directly from data. Furthermore, we

implement an optimal control strategy to assess the efficacy of an anti-tau

therapeutic intervention aimed at reducing p-tau levels and mitigating

cognitive decline. Our data-driven solutions indicate that while optimal drug

administration effectively decreases p-tau concentration, its impact on

cognitive decline, as reflected in the ADAS score, remains limited. These

findings suggest that targeting p-tau alone may not be sufficient for

significant cognitive improvement, highlighting the need for multi-target

therapeutic strategies. The integration of mechanistic modelling, advanced

parameter estimation, and control-based therapeutic optimization provides a

comprehensive framework for improving treatment strategies for AD.

17 Aug 2022

This paper presents a comprehensive survey of methods which can be utilized

to search for solutions to systems of nonlinear equations (SNEs). Our

objectives with this survey are to synthesize pertinent literature in this

field by presenting a thorough description and analysis of the known methods

capable of finding one or many solutions to SNEs, and to assist interested

readers seeking to identify solution techniques which are well suited for

solving the various classes of SNEs which one may encounter in real world

applications.

To accomplish these objectives, we present a multi-part survey. In part one,

we focus on root-finding approaches which can be used to search for solutions

to a SNE without transforming it into an optimization problem. In part two, we

will introduce the various transformations which have been utilized to

transform a SNE into an optimization problem, and we discuss optimization

algorithms which can then be used to search for solutions. In part three, we

will present a robust quantitative comparative analysis of methods capable of

searching for solutions to SNEs.

22 Jun 2025

University College London

University College London Zhejiang UniversityTilburg UniversityUniversity of LiverpoolUniversity of ZagrebBergische Universität WuppertalUniversity of VeronaTechnical University of DarmstadtFriedrich-Schiller-Universität JenaWilfrid Laurier University

Zhejiang UniversityTilburg UniversityUniversity of LiverpoolUniversity of ZagrebBergische Universität WuppertalUniversity of VeronaTechnical University of DarmstadtFriedrich-Schiller-Universität JenaWilfrid Laurier University Chalmers University of Technology

Chalmers University of Technology University of GroningenUniversity of BathUniversity of Southern DenmarkUniversity of AdelaideUniversity of LisbonIMT AtlantiqueRobert Gordon UniversityWageningen University and ResearchLoughborough UniversityCopenhagen Business SchoolBerlin School of Economics and LawUniversity of the West of EnglandErasmus UniversityTexas Christian UniversityCentral Queensland UniversityThe University of Sydney Business SchoolKühne Logistics UniversityKEDGE Business SchoolUniversity of Exeter Business SchoolUniversity of Sussex Business SchoolMaryville University of Saint LouisRabdan AcademyUniversity of Southampton Business SchoolKoc

UniversityUniversity of Naples

“Federico II”

University of GroningenUniversity of BathUniversity of Southern DenmarkUniversity of AdelaideUniversity of LisbonIMT AtlantiqueRobert Gordon UniversityWageningen University and ResearchLoughborough UniversityCopenhagen Business SchoolBerlin School of Economics and LawUniversity of the West of EnglandErasmus UniversityTexas Christian UniversityCentral Queensland UniversityThe University of Sydney Business SchoolKühne Logistics UniversityKEDGE Business SchoolUniversity of Exeter Business SchoolUniversity of Sussex Business SchoolMaryville University of Saint LouisRabdan AcademyUniversity of Southampton Business SchoolKoc

UniversityUniversity of Naples

“Federico II”Operations and Supply Chain Management (OSCM) has continually evolved, incorporating a broad array of strategies, frameworks, and technologies to address complex challenges across industries. This encyclopedic article provides a comprehensive overview of contemporary strategies, tools, methods, principles, and best practices that define the field's cutting-edge advancements. It also explores the diverse environments where OSCM principles have been effectively implemented. The article is meant to be read in a nonlinear fashion. It should be used as a point of reference or first-port-of-call for a diverse pool of readers: academics, researchers, students, and practitioners.

A supervised feature selection method selects an appropriate but concise set of features to differentiate classes, which is highly expensive for large-scale datasets. Therefore, feature selection should aim at both minimizing the number of selected features and maximizing the accuracy of classification, or any other task. However, this crucial task is computationally highly demanding on many real-world datasets and requires a very efficient algorithm to reach a set of optimal features with a limited number of fitness evaluations. For this purpose, we have proposed the binary multi-objective coordinate search (MOCS) algorithm to solve large-scale feature selection problems. To the best of our knowledge, the proposed algorithm in this paper is the first multi-objective coordinate search algorithm. In this method, we generate new individuals by flipping a variable of the candidate solutions on the Pareto front. This enables us to investigate the effectiveness of each feature in the corresponding subset. In fact, this strategy can play the role of crossover and mutation operators to generate distinct subsets of features. The reported results indicate the significant superiority of our method over NSGA-II, on five real-world large-scale datasets, particularly when the computing budget is limited. Moreover, this simple hyper-parameter-free algorithm can solve feature selection much faster and more efficiently than NSGA-II.

Micro-ultrasound (micro-US) is a promising imaging technique for cancer detection and computer-assisted visualization. This study investigates prostate capsule segmentation using deep learning techniques from micro-US images, addressing the challenges posed by the ambiguous boundaries of the prostate capsule. Existing methods often struggle in such cases, motivating the development of a tailored approach. This study introduces an adaptive focal loss function that dynamically emphasizes both hard and easy regions, taking into account their respective difficulty levels and annotation variability. The proposed methodology has two primary strategies: integrating a standard focal loss function as a baseline to design an adaptive focal loss function for proper prostate capsule segmentation. The focal loss baseline provides a robust foundation, incorporating class balancing and focusing on examples that are difficult to classify. The adaptive focal loss offers additional flexibility, addressing the fuzzy region of the prostate capsule and annotation variability by dilating the hard regions identified through discrepancies between expert and non-expert annotations. The proposed method dynamically adjusts the segmentation model's weights better to identify the fuzzy regions of the prostate capsule. The proposed adaptive focal loss function demonstrates superior performance, achieving a mean dice coefficient (DSC) of 0.940 and a mean Hausdorff distance (HD) of 1.949 mm in the testing dataset. These results highlight the effectiveness of integrating advanced loss functions and adaptive techniques into deep learning models. This enhances the accuracy of prostate capsule segmentation in micro-US images, offering the potential to improve clinical decision-making in prostate cancer diagnosis and treatment planning.

There are no more papers matching your filters at the moment.