One of the main challenges in optimal scaling of large language models (LLMs) is the prohibitive cost of hyperparameter tuning, particularly learning rate η and batch size B. While techniques like μP (Yang et al., 2022) provide scaling rules for optimal η transfer in the infinite model size limit, the optimal scaling behavior in the infinite data size limit remains unknown. We fill in this gap by observing for the first time an intricate dependence of optimal η scaling on the pretraining token budget T, B and its relation to the critical batch size Bcrit, which we measure to evolve as Bcrit ∝ T. Furthermore, we show that the optimal batch size is positively correlated with Bcrit: keeping it fixed becomes suboptimal over time even if learning rate is scaled optimally. Surprisingly, our results demonstrate that the observed optimal η and B dynamics are preserved with μP model scaling, challenging the conventional view of Bcrit dependence solely on loss value. Complementing optimality, we examine the sensitivity of loss to changes in learning rate, where we find the sensitivity to decrease with increase of T and to remain constant with μP model scaling. We hope our results make the first step towards a unified picture of the joint optimal data and model scaling.

09 Jan 2025

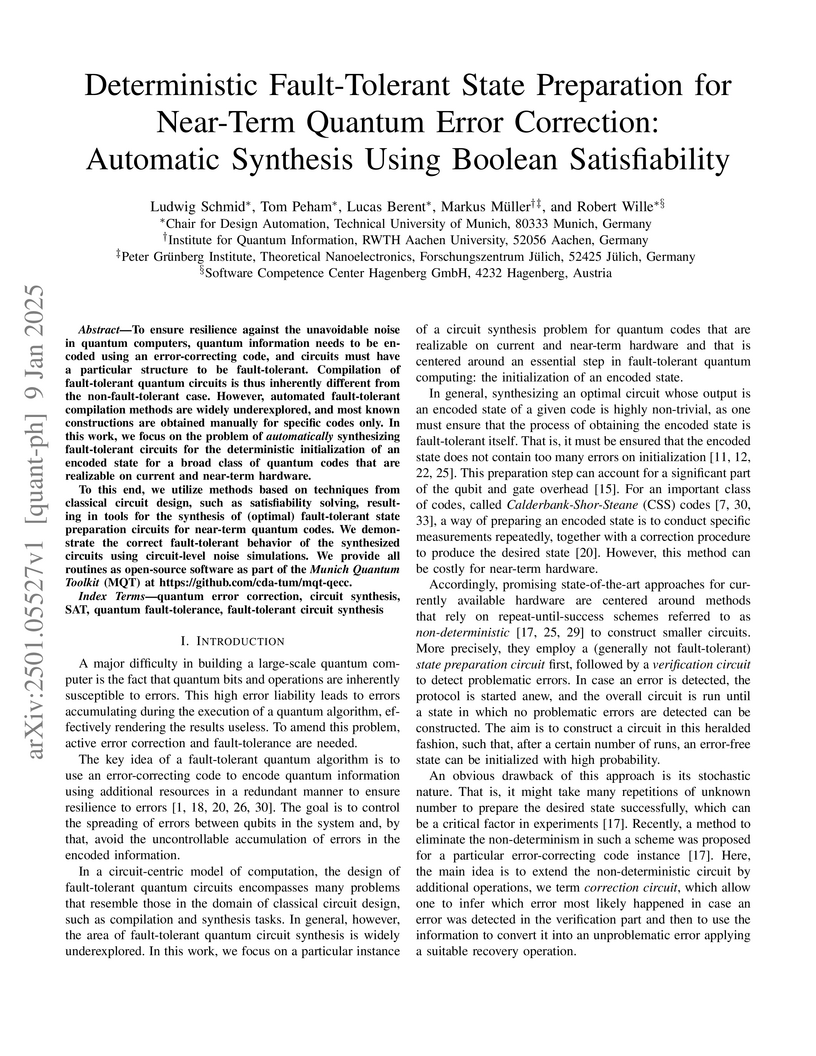

To ensure resilience against the unavoidable noise in quantum computers, quantum information needs to be encoded using an error-correcting code, and circuits must have a particular structure to be fault-tolerant. Compilation of fault-tolerant quantum circuits is thus inherently different from the non-fault-tolerant case. However, automated fault-tolerant compilation methods are widely underexplored, and most known constructions are obtained manually for specific codes only. In this work, we focus on the problem of automatically synthesizing fault-tolerant circuits for the deterministic initialization of an encoded state for a broad class of quantum codes that are realizable on current and near-term hardware.

To this end, we utilize methods based on techniques from classical circuit design, such as satisfiability solving, resulting in tools for the synthesis of (optimal) fault-tolerant state preparation circuits for near-term quantum codes. We demonstrate the correct fault-tolerant behavior of the synthesized circuits using circuit-level noise simulations. We provide all routines as open-source software as part of the Munich Quantum Toolkit (MQT) at this https URL.

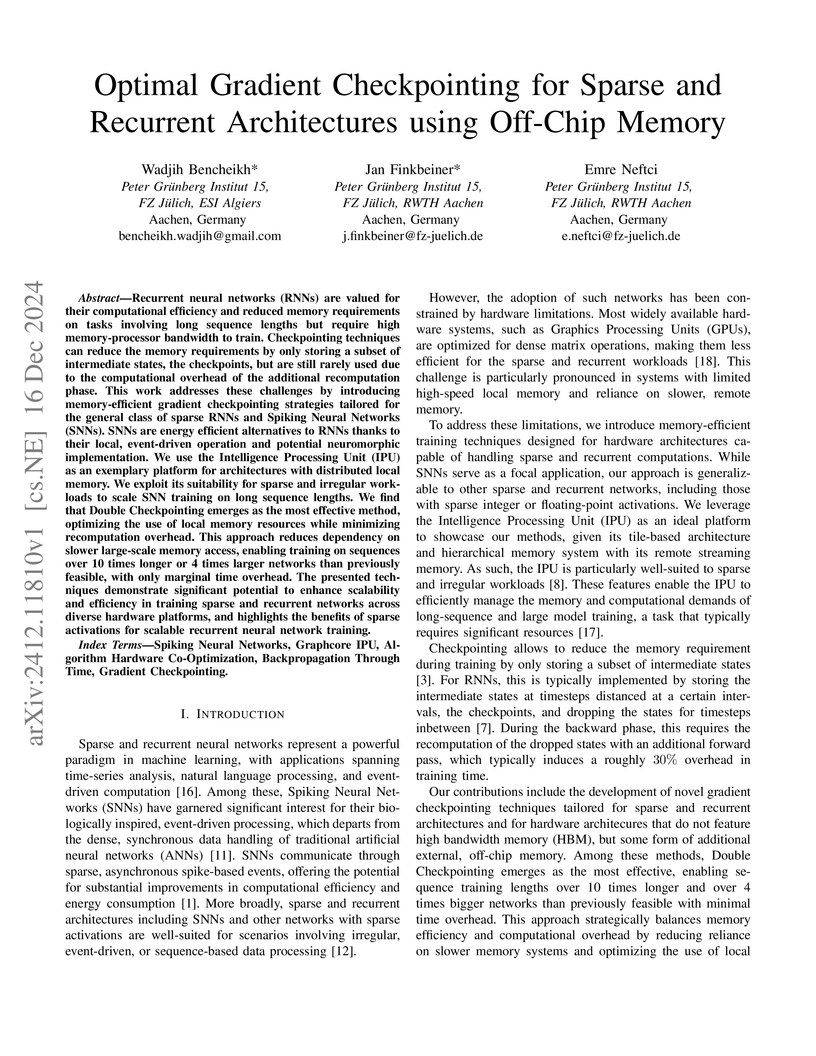

Recurrent neural networks (RNNs) are valued for their computational efficiency and reduced memory requirements on tasks involving long sequence lengths but require high memory-processor bandwidth to train. Checkpointing techniques can reduce the memory requirements by only storing a subset of intermediate states, the checkpoints, but are still rarely used due to the computational overhead of the additional recomputation phase. This work addresses these challenges by introducing memory-efficient gradient checkpointing strategies tailored for the general class of sparse RNNs and Spiking Neural Networks (SNNs). SNNs are energy-efficient alternatives to RNNs thanks to their local, event-driven operation and potential neuromorphic implementation. We use the Intelligence Processing Unit (IPU) as an exemplary platform for architectures with distributed local memory. We exploit its suitability for sparse and irregular workloads to scale SNN training on long sequence lengths. We find that Double Checkpointing emerges as the most effective method, optimizing the use of local memory resources while minimizing recomputation overhead. This approach reduces dependency on slower large-scale memory access, enabling training on sequences over 10 times longer or 4 times larger networks than previously feasible, with only marginal time overhead. The presented techniques demonstrate significant potential to enhance scalability and efficiency in training sparse and recurrent networks across diverse hardware platforms, and highlight the benefits of sparse activations for scalable recurrent neural network training.

16 Jan 2025

Graph partitioning has many applications in power systems, from decentralized state estimation to parallel simulation. Focusing on parallel simulation, optimal grid partitioning minimizes the idle time caused by different simulation times for the sub-networks and their components and reduces the overhead required to simulate the cuts. Partitioning a graph into two parts such that, for example, the cut is minimal and the subgraphs have equal size is an NP-hard problem. In this paper we show how optimal partitioning of a graph can be obtained using quantum annealing (QA). We show how to map the requirements for optimal splitting to a quadratic unconstrained binary optimization (QUBO) formulation and test the proposed formulation using a current D-Wave QPU. We show that the necessity to find an embedding of the QUBO on current D-Wave QPUs limits the problem size to under 200 buses and notably affects the time-to-solution. We finally discuss the implications for near-term implementation of QA in combination with traditional CPU- or GPU-based simulation.
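
The mapping from "minimal cut with equal-size subgraphs" to a QUBO can be illustrated with a minimal sketch. It uses the textbook balanced-bisection formulation, which is an assumption here and not necessarily the formulation of the paper: a cut term that counts edges crossing the partition plus a penalty `alpha * (sum(x) - n/2)**2`. The function names, the weight `alpha`, and the toy graph are hypothetical, and a brute-force search stands in for the annealer.

```python
from itertools import product

def bisection_qubo(n, edges, alpha):
    """Upper-triangular QUBO {(i, j): coeff} for balanced graph bisection.

    Objective: cut(x) + alpha * (sum(x) - n/2)**2, with the constant dropped.
    """
    Q = {}

    def add(i, j, value):
        key = (min(i, j), max(i, j))
        Q[key] = Q.get(key, 0.0) + value

    # Cut term: an edge (i, j) contributes x_i + x_j - 2 x_i x_j,
    # which is 1 if the endpoints are in different parts and 0 otherwise.
    for i, j in edges:
        add(i, i, 1.0)
        add(j, j, 1.0)
        add(i, j, -2.0)

    # Balance term, expanded using x_i**2 == x_i for binary variables.
    for i in range(n):
        add(i, i, alpha * (1 - n))
        for j in range(i + 1, n):
            add(i, j, 2.0 * alpha)
    return Q

def energy(Q, x):
    """QUBO energy of a binary assignment x."""
    return sum(c * x[i] * x[j] for (i, j), c in Q.items())

def cut_size(edges, x):
    """Number of edges whose endpoints lie in different parts."""
    return sum(1 for i, j in edges if x[i] != x[j])

def brute_force(Q, n):
    """Exhaustive minimisation; only feasible for very small n."""
    return min(product((0, 1), repeat=n), key=lambda x: energy(Q, x))

# Toy graph: two triangles joined by a single edge.
edges = [(0, 1), (1, 2), (0, 2), (3, 4), (4, 5), (3, 5), (2, 3)]
Q = bisection_qubo(6, edges, alpha=1.0)
best = brute_force(Q, 6)
print(best, cut_size(edges, best))
```

On real hardware the dictionary `Q` would additionally have to be embedded onto the QPU topology, which is the step the abstract identifies as the limiting factor.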

27 Mar 2025

Understanding the physical nature of the D-Wave annealers remains a subject of active investigation. In this study, we analyze the sampling behavior of these systems and explore whether their results can be replicated using quantum and Markovian models. Employing the standard and the fast annealing protocols, we observe that the D-Wave annealers sample states with frequencies matching the Gibbs distribution for sufficiently long annealing times. Using Bloch equation simulations for single-qubit problems and Lindblad and Markovian master equations for two-qubit systems, we compare experimental data with theoretical predictions. Our results provide insights into the role of quantum mechanics in these devices.
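
To make the comparison with the Gibbs distribution concrete, the following sketch computes Gibbs probabilities for a small Ising problem and measures how far a set of sample counts deviates from them. It is illustrative only: the fields `h`, the coupling `J`, the inverse temperature `beta`, and the sample counts are made-up values, and the total variation distance is one possible choice of comparison metric, not necessarily the one used in the study.

```python
import math
from itertools import product

def ising_energy(s, h, J):
    """Energy of spin configuration s (entries +1/-1) with fields h and couplings J."""
    e = sum(h[i] * s[i] for i in range(len(s)))
    e += sum(c * s[i] * s[j] for (i, j), c in J.items())
    return e

def gibbs_distribution(h, J, beta):
    """Exact Gibbs probabilities p(s) = exp(-beta * E(s)) / Z over all spin states."""
    states = list(product((-1, 1), repeat=len(h)))
    weights = [math.exp(-beta * ising_energy(s, h, J)) for s in states]
    Z = sum(weights)
    return {s: w / Z for s, w in zip(states, weights)}

def total_variation(p, counts):
    """Total variation distance between p and empirical frequencies from counts."""
    n = sum(counts.values())
    return 0.5 * sum(abs(p[s] - counts.get(s, 0) / n) for s in p)

# Hypothetical two-qubit problem with a ferromagnetic coupling.
h = [0.1, 0.0]
J = {(0, 1): -1.0}
p = gibbs_distribution(h, J, beta=1.0)

# Made-up sample counts standing in for annealer output.
counts = {(-1, -1): 520, (1, 1): 430, (-1, 1): 30, (1, -1): 20}
print(total_variation(p, counts))
```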

One-dimensional (1D) topological superconductivity is a state of matter that is not found in nature. However, it can be realised, for example, by inducing superconductivity into the quantum spin Hall edge state of a two-dimensional topological insulator. Because topological superconductors are proposed to host Majorana zero modes, they have been suggested as a platform for topological quantum computing. Yet, conclusive proof of 1D topological superconductivity has remained elusive. Here, we employ low-temperature scanning tunnelling microscopy to show 1D topological superconductivity in a van der Waals heterostructure by directly probing its superconducting properties, instead of relying on the observation of Majorana zero modes at its boundary. We realise this by placing the two-dimensional topological insulator monolayer WTe2 on the superconductor NbSe2. We find that the superconducting topological edge state is robust against magnetic fields, a hallmark of its triplet pairing. Its topological protection is underpinned by a lateral self-proximity effect, which is resilient against disorder in the monolayer edge. By creating this exotic state in a van der Waals heterostructure, we provide an adaptable platform for the future realization of Majorana bound states. Finally, our results more generally demonstrate the power of Abrikosov vortices as effective experimental probes for superconductivity in nanostructures.

26 May 2023

Tohoku University, Academia Sinica, University of Cambridge, Ghent University, University College London, CEA, University of Vienna, The Faraday Institution, Paul Scherrer Institut, Technical University of Denmark, McMaster University, Helmholtz-Zentrum Dresden-Rossendorf, Technical University of Vienna, École Polytechnique Fédérale de Lausanne, Central Michigan University, University of Paderborn, SINTEF, Hasselt University, VASP Software GmbH, Swiss Federal Laboratories for Materials Science and Technology, OCAS NV/ArcelorMittal Global R&D Gent, ePotentia, HPE HPC EMEA Research Lab, Sigma2 AS, Université catholique de Louvain, Institut de Ciencia de Materials de Barcelona, Forschungszentrum Jülich

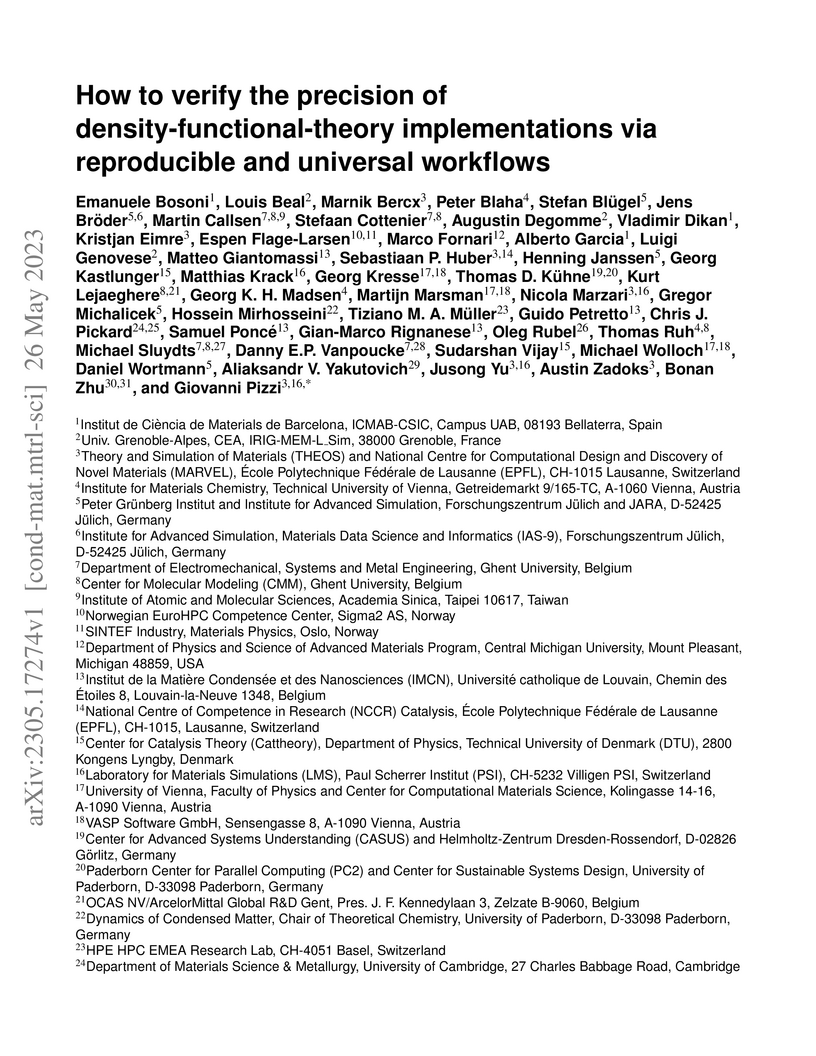

In the past decades many density-functional theory methods and codes adopting periodic boundary conditions have been developed and are now extensively used in condensed matter physics and materials science research. Only in 2016, however, their precision (i.e., to which extent properties computed with different codes agree among each other) was systematically assessed on elemental crystals: a first crucial step to evaluate the reliability of such computations. We discuss here general recommendations for verification studies aiming at further testing precision and transferability of density-functional-theory computational approaches and codes. We illustrate such recommendations using a greatly expanded protocol covering the whole periodic table from Z=1 to 96 and characterizing 10 prototypical cubic compounds for each element: 4 unaries and 6 oxides, spanning a wide range of coordination numbers and oxidation states. The primary outcome is a reference dataset of 960 equations of state cross-checked between two all-electron codes, then used to verify and improve nine pseudopotential-based approaches. Such effort is facilitated by deploying AiiDA common workflows that perform automatic input parameter selection, provide identical input/output interfaces across codes, and ensure full reproducibility. Finally, we discuss the extent to which the current results for total energies can be reused for different goals (e.g., obtaining formation energies).

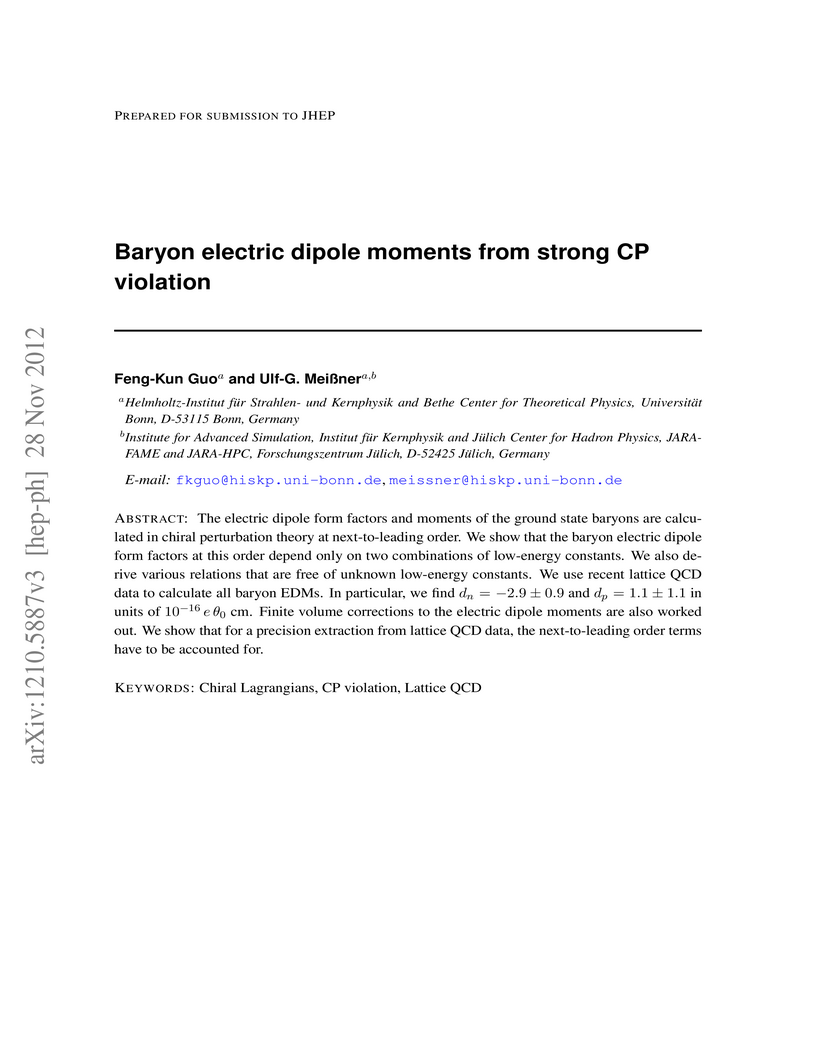

The electric dipole form factors and moments of the ground state baryons are calculated in chiral perturbation theory at next-to-leading order. We show that the baryon electric dipole form factors at this order depend only on two combinations of low-energy constants. We also derive various relations that are free of unknown low-energy constants. We use recent lattice QCD data to calculate all baryon EDMs. In particular, we find $d_n = -2.9 \pm 0.9$ and $d_p = 1.1 \pm 1.1$ in units of $10^{-16}\, e\, \theta_0$ cm. Finite volume corrections to the moments are also worked out. We show that for a precision extraction from lattice QCD data, the next-to-leading order terms have to be accounted for.

03 May 2021

We currently observe a disconcerting phenomenon in machine learning studies in psychiatry: While we would expect larger samples to yield better results due to the availability of more data, larger machine learning studies consistently show much weaker performance than the numerous small-scale studies. Here, we systematically investigated this effect focusing on one of the most heavily studied questions in the field, namely the classification of patients suffering from major depressive disorder (MDD) and healthy controls (HC) based on neuroimaging data. Drawing upon structural magnetic resonance imaging (MRI) data from a balanced sample of N=1,868 MDD patients and HC from our recent international Predictive Analytics Competition (PAC), we first trained and tested a classification model on the full dataset, which yielded an accuracy of 61%. Next, we mimicked the process by which researchers would draw samples of various sizes (N=4 to N=150) from the population and showed a strong risk of misestimation. Specifically, for small sample sizes (N=20), we observe accuracies of up to 95%. For medium sample sizes (N=100), accuracies of up to 75% were found. Importantly, further investigation showed that sufficiently large test sets effectively protect against performance misestimation whereas larger datasets per se do not. While these results question the validity of a substantial part of the current literature, we outline the relatively low-cost remedy of larger test sets, which is readily available in most cases.
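
The role of test-set size can be illustrated with a small simulation. This is a deliberately simplified sketch, not the procedure of the study: it assumes a classifier whose true accuracy is fixed at 61% and models only the sampling noise of the test set, ignoring any effect of training-set size. The function name, the number of simulated studies, and the seed are hypothetical choices.

```python
import random

def observed_accuracies(true_acc, n_test, n_studies, seed=0):
    """Simulate n_studies independent test sets of size n_test.

    Each test case is classified correctly with probability true_acc;
    the returned list holds the accuracy observed in each simulated study.
    """
    rng = random.Random(seed)
    return [
        sum(rng.random() < true_acc for _ in range(n_test)) / n_test
        for _ in range(n_studies)
    ]

# Spread of observed accuracy for small, medium and large test sets.
for n_test in (20, 100, 1000):
    accs = observed_accuracies(0.61, n_test, n_studies=1000)
    print(n_test, min(accs), max(accs))
```

Under these assumptions, the range between the lowest and highest observed accuracy narrows as the test set grows, which is the sense in which larger test sets protect against misestimation.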

The ever-increasing availability of supercomputing resources has led computer-based materials science into a new era of high-throughput calculations. Recently, Pizzi et al. [Comp. Mat. Sci. 111, 218 (2016)] introduced the AiiDA framework, which provides a way to automate calculations while storing the full provenance of complex workflows in a database. We present the development of the AiiDA-KKR plugin, which makes it possible to perform a large number of ab initio impurity embedding calculations based on the relativistic full-potential Korringa-Kohn-Rostoker Green function method. The capabilities of the AiiDA-KKR plugin are demonstrated with the calculation of several thousand impurities embedded into the prototypical topological insulator Sb2Te3. The results are collected in the JuDiT database, which we use to investigate chemical trends as well as Fermi level and layer dependence of physical properties of impurities. This includes the study of spin moments, the impurity's tendency to form in-gap states, and its effect on the charge doping of the host crystal. These properties depend on the detailed electronic structure of the impurity embedded into the host crystal, which highlights the need for ab initio calculations in order to get accurate predictions.

Michigan State University, Sun Yat-Sen University, South China Normal University, Institute for Basic Science, Mississippi State University, Graduate School of China Academy of Engineering Physics, Tbilisi State University, Gaziantep Islam Science and Technology University, CEA Paris-Saclay, Universität Bonn, Ruhr-Universität Bochum, Helmholtz-Institut für Strahlen- und Kernphysik, Forschungszentrum Jülich

Ab initio calculations play an essential role in our fundamental understanding of quantum many-body systems across many subfields, from strongly correlated fermions to quantum chemistry and from atomic and molecular systems to nuclear physics. One of the primary challenges is to perform accurate calculations for systems where the interactions may be complicated and difficult for the chosen computational method to handle. Here we address the problem by introducing a new approach called wavefunction matching. Wavefunction matching transforms the interaction between particles so that the wavefunctions up to some finite range match that of an easily computable interaction. This allows for calculations of systems that would otherwise be impossible due to problems such as Monte Carlo sign cancellations. We apply the method to lattice Monte Carlo simulations of light nuclei, medium-mass nuclei, neutron matter, and nuclear matter. We use high-fidelity chiral effective field theory interactions and find good agreement with empirical data. These results are accompanied by new insights on the nuclear interactions that may help to resolve long-standing challenges in accurately reproducing nuclear binding energies, charge radii, and nuclear matter saturation in ab initio calculations.

Increasing HPC cluster sizes and large-scale simulations that produce petabytes of data per run create massive IO and storage challenges for analysis. Deep learning-based techniques, in particular, make use of these amounts of domain data to extract patterns that help build scientific understanding. Here, we demonstrate a streaming workflow in which simulation data is streamed directly to a machine-learning (ML) framework, circumventing the file system bottleneck. Data is transformed in transit, asynchronously to the simulation and the training of the model. With the presented workflow, data operations can be performed in common and easy-to-use programming languages, freeing the application user from adapting the application output routines. As a proof-of-concept we consider a GPU-accelerated particle-in-cell (PIConGPU) simulation of the Kelvin-Helmholtz instability (KHI). We employ experience replay to avoid catastrophic forgetting in learning from this non-steady process in a continual manner. We detail challenges addressed while porting and scaling to the Frontier exascale system.

Researchers at FZ Jülich and RWTH Aachen University developed a custom Automatic Differentiation pipeline, leveraging Graphax, to enable efficient, online gradient-based synaptic plasticity in Spiking Neural Networks. This method demonstrates memory usage constant with sequence length and reduced computational time compared to Backpropagation Through Time, while maintaining comparable classification accuracy on benchmarks.

23 May 2025

Mapping quantum approximate optimization algorithm (QAOA) circuits with non-trivial connectivity in fixed-layout quantum platforms such as superconducting-based quantum processing units (QPUs) requires a process of transpilation to match the quantum circuit to the given layout. This step is critical for reducing error rates when running on noisy QPUs. Two methodologies that reduce the resources required for such transpilation are the SWAP network and parity twine chains (PTC). These approaches reduce the two-qubit gate count and depth needed to represent fully connected circuits. In this work, a simulated annealing-based method is introduced that reduces the PTC and SWAP network encoding requirements in QAOA circuits with non-fully connected two-qubit gates. This method is benchmarked against various transpilers and demonstrates that, beyond specific connectivity thresholds, it achieves significant reductions in both two-qubit gate count and circuit depth, surpassing the performance of the Qiskit transpiler at its highest optimization level. For example, for a 120-qubit QAOA instance with 25% connectivity, our method achieves an 85% reduction in depth and a 28% reduction in two-qubit gates. Finally, the practical impact of PTC encoding is validated by benchmarking QAOA on the ibm_fez device, showing improved performance up to 20 qubits, compared to a 15-qubit limit when using SWAP networks.

By introducing an additional operator into the action and using the Feynman-Hellmann theorem we describe a method to determine both the quark line connected and disconnected terms of matrix elements. As an illustration of the method we calculate the gluon contribution (chromo-electric and chromo-magnetic components) to the nucleon mass.
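
As a reminder of the underlying idea, the Feynman-Hellmann relation can be sketched as follows. The notation is an assumption made for illustration (the symbols $\lambda$, $\mathcal{O}$, $E_N$ and the unit normalisation of the nucleon state are not taken from the abstract), and normalisation factors depend on the conventions chosen.

```latex
% Modify the action by a source term with coupling \lambda:
S \;\longrightarrow\; S(\lambda) = S + \lambda \sum_x \mathcal{O}(x)

% The nucleon energy E_N(\lambda) then responds linearly at small \lambda,
% and its slope gives the matrix element (for unit-normalised states):
\left. \frac{\partial E_N(\lambda)}{\partial \lambda} \right|_{\lambda = 0}
  = \langle N | \mathcal{O} | N \rangle
```

In practice this would mean measuring $E_N$ at several small values of $\lambda$ and extracting the slope, so that connected and disconnected contributions enter through the modified action rather than through explicit three-point functions.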

13 Jul 2018

Electrons which are slowly moving through chiral magnetic textures can effectively be described as if they were influenced by electromagnetic fields emerging from the real-space topology. This adiabatic viewpoint has been very successful in predicting physical properties of chiral magnets. Here, based on a rigorous quantum-mechanical approach, we unravel the emergence of chiral and topological orbital magnetism in one- and two-dimensional spin systems. We uncover that the quantized orbital magnetism in the adiabatic limit can be understood as a Landau-Peierls response to the emergent magnetic field. Our central result is that the spin-orbit interaction in interfacial skyrmions and domain walls can be used to tune the orbital magnetism over orders of magnitude by merging the real-space topology with the topology in reciprocal space. Our findings point out the route to experimental engineering of orbital properties of chiral spin systems, thereby paving the way to the field of chiral orbitronics.

04 Oct 2021

We report on the implementation of the Bogoliubov-de Gennes method into the JuKKR code [https://jukkr.fz-juelich.de], an implementation of the relativistic all-electron, full-potential Korringa-Kohn-Rostoker Green function method, which allows a material-specific description of inhomogeneous superconductors and heterostructures on the basis of density functional theory. We describe the formalism and report on calculations for the s-wave superconductor Nb, a potential component of the materials platform enabling the realization of the Majorana zero modes in the field of topological quantum computing. We compare the properties of the superconducting state both in the bulk and for (110) surfaces. We compare slab calculations for different thicknesses and comment on the importance of spin-orbit coupling, the effect of surface relaxations and the influence of a softening of phonon modes on the surface for the resulting superconducting gap.

Interfacing a topological insulator (TI) with an s-wave superconductor (SC) is a promising material platform that offers the possibility to realize a topological superconductor through which Majorana-based topologically protected qubits can be engineered. In our computational study of the prototypical SC/TI interface between Nb and Bi2Te3, we identify the benefits and possible bottlenecks of this potential Majorana material platform. Bringing Nb in contact with the TI film induces charge doping from the SC to the TI, which shifts the Fermi level into the TI conduction band. For thick TI films, this results in band bending leading to the population of trivial TI quantum-well states at the interface. In the superconducting state, we uncover that the topological surface state experiences a sizable superconducting gap-opening at the SC/TI interface, which is furthermore robust against fluctuations of the Fermi energy. We also show that the trivial interface state is only marginally proximitized, potentially obstructing the realization of Majorana-based qubits in this material platform.

30 May 2023

Combinatorial optimization problems are one of the target applications of current quantum technology, mainly because of their industrial relevance, the difficulty of solving large instances of them classically, and their equivalence to Ising Hamiltonians using the quadratic unconstrained binary optimization (QUBO) formulation. Many of these applications have inequality constraints, usually encoded as penalization terms in the QUBO formulation using additional variables known as slack variables. The slack variables have two disadvantages: (i) these variables extend the search space of optimal and suboptimal solutions, and (ii) the variables add extra qubits and connections to the quantum algorithm. Recently, a new method known as unbalanced penalization has been presented to avoid using slack variables. This method offers a trade-off between additional slack variables to ensure that the optimal solution is given by the ground state of the Ising Hamiltonian, and using an unbalanced heuristic function to penalize the region where the inequality constraint is violated with the only certainty that the optimal solution will be in the vicinity of the ground state. This work tests the unbalanced penalization method using real quantum hardware on D-Wave Advantage for the traveling salesman problem (TSP). The results show that the unbalanced penalization method outperforms the solutions found using slack variables and sets a new record for the largest TSP solved with quantum technology.
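
The difference between the two encodings can be sketched for a single inequality constraint `sum(a_i * x_i) <= b`. This is a minimal illustration under stated assumptions: the slack encoding uses a binary-expanded slack variable, and the unbalanced penalty is taken to have the quadratic form `-lam1 * h + lam2 * h**2` with `h = b - sum(a_i * x_i)`. The function names, the coefficients `a`, the bound `b`, and the weights `lam1`, `lam2` are hypothetical and not values from the paper.

```python
def slack_penalty(a, b, x, s_bits):
    """Slack encoding: (sum(a_i x_i) + s - b)**2, with s given by binary slack bits."""
    s = sum(bit << k for k, bit in enumerate(s_bits))
    lhs = sum(ai * xi for ai, xi in zip(a, x))
    return (lhs + s - b) ** 2

def unbalanced_penalty(a, b, x, lam1, lam2):
    """Unbalanced penalty -lam1 * h + lam2 * h**2 with h = b - sum(a_i x_i).

    h >= 0 means the constraint holds; no slack bits are needed, but the
    penalty is asymmetric rather than exactly zero on all feasible points.
    """
    h = b - sum(ai * xi for ai, xi in zip(a, x))
    return -lam1 * h + lam2 * h ** 2

a, b = [2, 3, 4], 5

# Slack encoding: a feasible x reaches zero penalty for a suitable slack value.
print(slack_penalty(a, b, x=(1, 0, 0), s_bits=(1, 1, 0)))  # lhs=2, s=3

# Unbalanced penalty: violating by one unit costs more than satisfying by one unit.
satisfied = unbalanced_penalty(a, b, x=(0, 0, 1), lam1=1.0, lam2=0.5)  # h = +1
violated = unbalanced_penalty(a, b, x=(1, 0, 1), lam1=1.0, lam2=0.5)   # h = -1
print(satisfied, violated)
```

The slack version needs three extra binary variables for this constraint, while the unbalanced version adds none; the price is that its penalty only steers the optimum towards the feasible region instead of guaranteeing it.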

20 May 2024

Resource allocation of wide-area internet networks is inherently a combinatorial optimization problem that, if solved quickly, could provide near real-time adaptive control of internet-protocol traffic, ensuring increased network efficacy and robustness while minimizing energy requirements coming from power-hungry transceivers. In recent works we demonstrated how such a problem could be cast as a quadratic unconstrained binary optimization (QUBO) problem that can be embedded onto the D-Wave Advantage™ quantum annealer system, demonstrating proof of principle. Our initial studies left open the possibility for improvement of D-Wave solutions via judicious choices of system run parameters. Here we report on our investigations for optimizing these system parameters, and how we incorporate machine learning (ML) techniques to further improve on the quality of solutions. In particular, we use the Hamming distance to investigate correlations between various system-run parameters and solution vectors. We then apply a decision tree neural network (NN) to learn these correlations, with the goal of using the neural network to provide further guesses to solution vectors. We successfully implement this NN in a simple integer linear programming (ILP) example, demonstrating how the NN can fully map out the solution space that was not captured by D-Wave. We find, however, that for the 3-node network problem the NN is not able to improve the quality of the solution space.
