École Normale Supérieure Paris-Saclay

02 Jun 2025

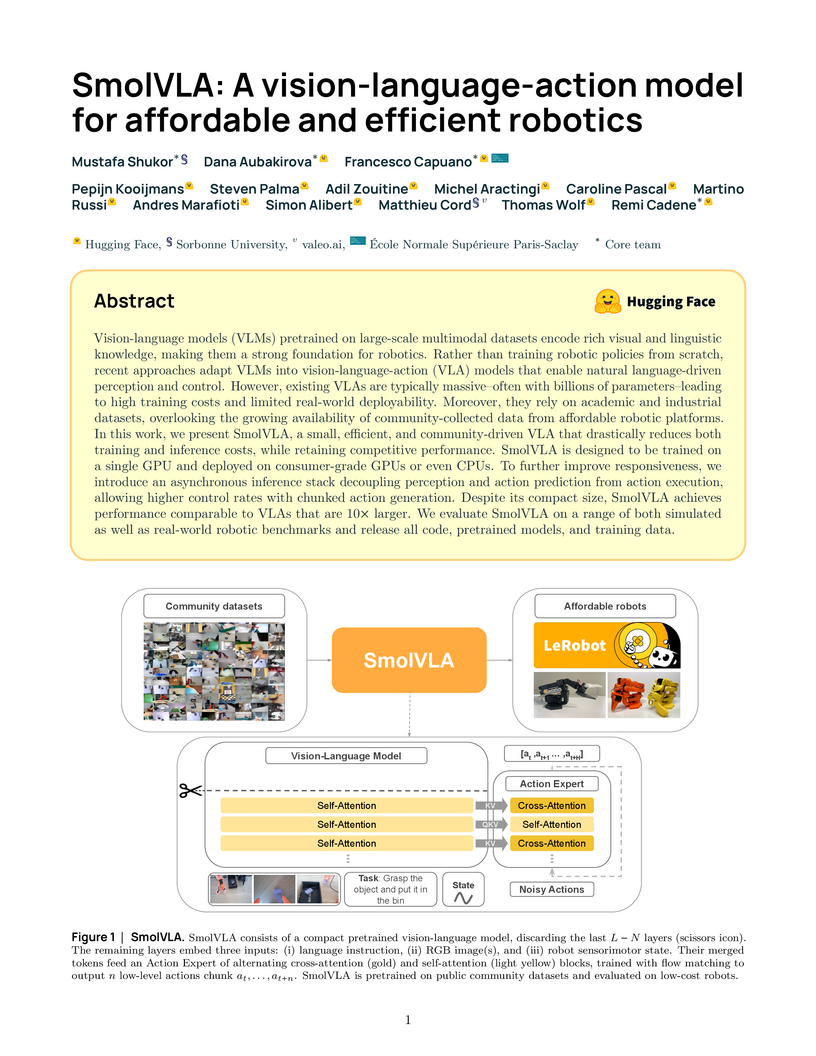

Hugging Face researchers develop SmolVLA, a 450-million parameter vision-language-action model that achieves competitive robotic manipulation performance using 7x less memory and 40% faster training than larger VLA models like π0 (3.3B parameters), demonstrating 78.3% success rate on real-world SO-100 tasks compared to 61.7% for π0 and introducing an asynchronous inference stack that enables 30% faster task completion (9.7s vs 13.75s) by decoupling action prediction from execution, while being trained exclusively on community-contributed datasets totaling fewer than 30,000 episodes and deployable on consumer-grade hardware including CPUs.

A collaborative team led by MIT researchers introduces the first comprehensive index of deployed AI agent systems, documenting 67 real-world implementations across a structured 33-field framework while revealing significant gaps in safety practices - only 19.4% disclose formal safety policies and less than 10% report external safety evaluations.

17 Sep 2025

We provide series expansions for the tempered stable densities and for the price of European-style contracts in the exponential Lévy model driven by the tempered stable process. These formulas recover several popular option pricing models, and become particularly simple in some specific cases such as bilateral Gamma process and one-sided TS process. When compared to traditional Fourier pricing, our method has the advantage of being hyperparameter free. We also provide a detailed numerical analysis and show that our technique is competitive with state-of-the-art pricing methods.

Deep neural networks perform remarkably well on image classification tasks but remain vulnerable to carefully crafted adversarial perturbations. This work revisits linear dimensionality reduction as a simple, data-adapted defense. We empirically compare standard Principal Component Analysis (PCA) with its sparse variant (SPCA) as front-end feature extractors for downstream classifiers, and we complement these experiments with a theoretical analysis. On the theory side, we derive exact robustness certificates for linear heads applied to SPCA features: for both ℓ∞ and ℓ2 threat models (binary and multiclass), the certified radius grows as the dual norms of W⊤u shrink, where W is the projection and u the head weights. We further show that for general (non-linear) heads, sparsity reduces operator-norm bounds through a Lipschitz composition argument, predicting lower input sensitivity. Empirically, with a small non-linear network after the projection, SPCA consistently degrades more gracefully than PCA under strong white-box and black-box attacks while maintaining competitive clean accuracy. Taken together, the theory identifies the mechanism (sparser projections reduce adversarial leverage) and the experiments verify that this benefit persists beyond the linear setting. Our code is available at this https URL.

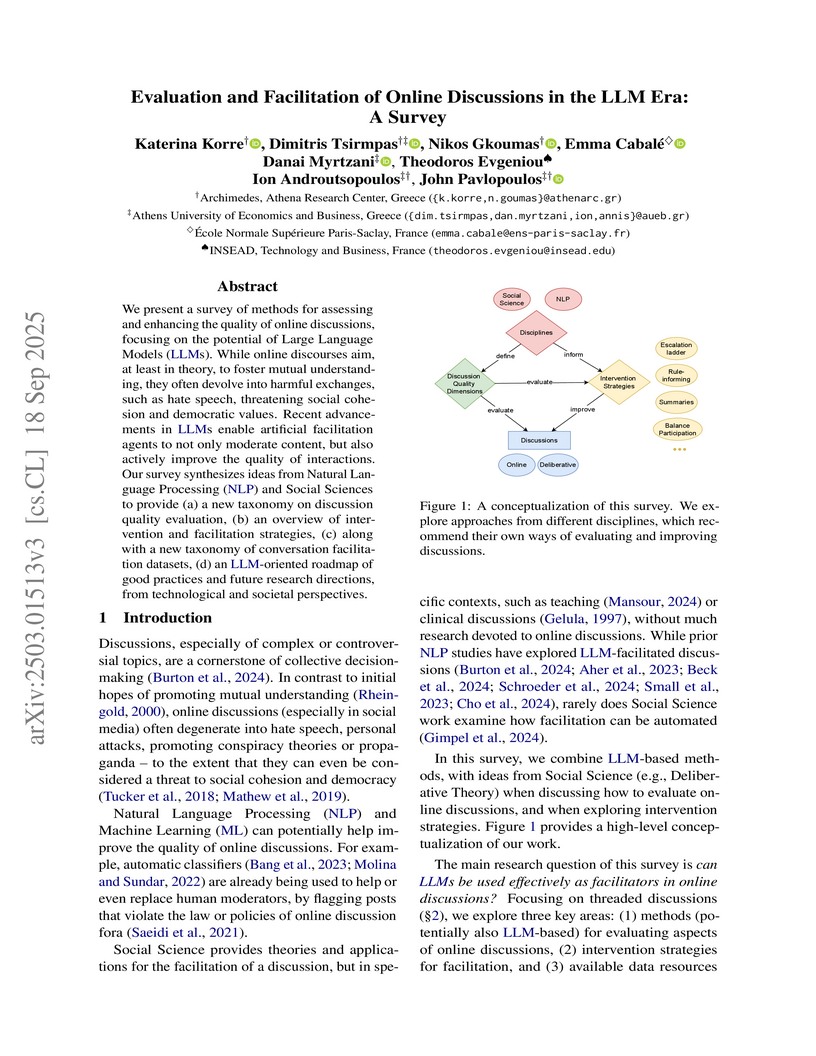

We present a survey of methods for assessing and enhancing the quality of online discussions, focusing on the potential of LLMs. While online discourses aim, at least in theory, to foster mutual understanding, they often devolve into harmful exchanges, such as hate speech, threatening social cohesion and democratic values. Recent advancements in LLMs enable artificial facilitation agents to not only moderate content, but also actively improve the quality of interactions. Our survey synthesizes ideas from NLP and Social Sciences to provide (a) a new taxonomy on discussion quality evaluation, (b) an overview of intervention and facilitation strategies, (c) along with a new taxonomy of conversation facilitation datasets, (d) an LLM-oriented roadmap of good practices and future research directions, from technological and societal perspectives.

Mechanistic interpretability research requires reliable tools for analyzing transformer internals across diverse architectures. Current approaches face a fundamental tradeoff: custom implementations like TransformerLens ensure consistent interfaces but require coding a manual adaptation for each architecture, introducing numerical mismatch with the original models, while direct HuggingFace access through NNsight preserves exact behavior but lacks standardization across models. To bridge this gap, we develop nnterp, a lightweight wrapper around NNsight that provides a unified interface for transformer analysis while preserving original HuggingFace implementations. Through automatic module renaming and comprehensive validation testing, nnterp enables researchers to write intervention code once and deploy it across 50+ model variants spanning 16 architecture families. The library includes built-in implementations of common interpretability methods (logit lens, patchscope, activation steering) and provides direct access to attention probabilities for models that support it. By packaging validation tests with the library, researchers can verify compatibility with custom models locally. nnterp bridges the gap between correctness and usability in mechanistic interpretability tooling.

We study static tidal Love numbers (TLNs) of a static and spherically symmetric black hole for odd-parity metric perturbations. We describe black hole perturbations using the effective field theory (EFT), formulated on an arbitrary background with a timelike scalar profile in the context of scalar-tensor theories. In particular, we obtain a static solution for the generalized Regge-Wheeler equation order by order in a modified-gravity parameter and extract the TLNs uniquely by analytic continuation of the multipole index ℓ to non-integer values. For a stealth Schwarzschild black hole, the TLNs are vanishing as in the case of Schwarzschild solution in general relativity. We also study the case of Hayward black hole as an example of non-stealth background, where we find that the TLNs are non-zero (or there is a logarithmic running). This result suggests that our EFT allows for non-vanishing TLNs and can in principle leave a detectable imprint on gravitational waves from inspiralling binary systems, which opens a new window for testing gravity in the strong-field regime.

Researchers introduced and formalized the use of non-Gaussian noise distributions, such as Mixture of Gaussians and Gamma distributions, within Denoising Diffusion Probabilistic Models. This approach significantly improved generation quality and efficiency across image and speech synthesis tasks, with Mixture of Gaussians achieving an FID of 31.21 compared to 299.71 for Gaussian DDPMs with 10 inference steps on CelebA.

Since the seminal work of Venkatakrishnan et al. in 2013, Plug & Play (PnP) methods have become ubiquitous in Bayesian imaging. These methods derive Minimum Mean Square Error (MMSE) or Maximum A Posteriori (MAP) estimators for inverse problems in imaging by combining an explicit likelihood function with a prior that is implicitly defined by an image denoising algorithm. The PnP algorithms proposed in the literature mainly differ in the iterative schemes they use for optimisation or for sampling. In the case of optimisation schemes, some recent works guarantee the convergence to a fixed point, albeit not necessarily a MAP estimate. In the case of sampling schemes, to the best of our knowledge, there is no known proof of convergence. There also remain important open questions regarding whether the underlying Bayesian models and estimators are well defined, well-posed, and have the basic regularity properties required to support these numerical schemes. To address these limitations, this paper develops theory, methods, and provably convergent algorithms for performing Bayesian inference with PnP priors. We introduce two algorithms: 1) PnP-ULA (Unadjusted Langevin Algorithm) for Monte Carlo sampling and MMSE inference; and 2) PnP-SGD (Stochastic Gradient Descent) for MAP inference. Using recent results on the quantitative convergence of Markov chains, we establish detailed convergence guarantees for these two algorithms under realistic assumptions on the denoising operators used, with special attention to denoisers based on deep neural networks. We also show that these algorithms approximately target a decision-theoretically optimal Bayesian model that is well-posed. The proposed algorithms are demonstrated on several canonical problems such as image deblurring, inpainting, and denoising, where they are used for point estimation as well as for uncertainty visualisation and quantification.

Generative diffusion processes are an emerging and effective tool for image and speech generation. In the existing methods, the underlying noise distribution of the diffusion process is Gaussian noise. However, fitting distributions with more degrees of freedom could improve the performance of such generative models. In this work, we investigate other types of noise distribution for the diffusion process. Specifically, we introduce the Denoising Diffusion Gamma Model (DDGM) and show that noise from Gamma distribution provides improved results for image and speech generation. Our approach preserves the ability to efficiently sample state in the training diffusion process while using Gamma noise.

This paper proposes a thorough theoretical analysis of Stochastic Gradient Descent (SGD) with non-increasing step sizes. First, we show that the recursion defining SGD can be provably approximated by solutions of a time inhomogeneous Stochastic Differential Equation (SDE) using an appropriate coupling. In the specific case of a batch noise we refine our results using recent advances in Stein's method. Then, motivated by recent analyses of deterministic and stochastic optimization methods by their continuous counterpart, we study the long-time behavior of the continuous processes at hand and establish non-asymptotic bounds. To that purpose, we develop new comparison techniques which are of independent interest. Adapting these techniques to the discrete setting, we show that the same results hold for the corresponding SGD sequences. In our analysis, we notably improve non-asymptotic bounds in the convex setting for SGD under weaker assumptions than the ones considered in previous works. Finally, we also establish finite-time convergence results under various conditions, including relaxations of the famous Łojasiewicz inequality, which can be applied to a class of non-convex functions.

25 Feb 2021

The classical approach to measure the expressive power of deep neural

networks with piecewise linear activations is based on counting their maximum

number of linear regions. This complexity measure is quite relevant to

understand general properties of the expressivity of neural networks such as

the benefit of depth over width. Nevertheless, it appears limited when it comes

to comparing the expressivity of different network architectures. This lack

becomes particularly prominent when considering permutation-invariant networks,

due to the symmetrical redundancy among the linear regions. To tackle this, we

propose a refined definition of piecewise linear function complexity: instead

of counting the number of linear regions directly, we first introduce an

equivalence relation among the linear functions composing a piecewise linear

function and then count those linear functions relative to that equivalence

relation. Our new complexity measure can clearly distinguish between the two

aforementioned models, is consistent with the classical measure, and increases

exponentially with depth.

Annotating musical beats is a very long and tedious process. In order to combat this problem, we present a new self-supervised learning pretext task for beat tracking and downbeat estimation. This task makes use of Spleeter, an audio source separation model, to separate a song's drums from the rest of its signal. The first set of signals are used as positives, and by extension negatives, for contrastive learning pre-training. The drum-less signals, on the other hand, are used as anchors. When pre-training a fully-convolutional and recurrent model using this pretext task, an onset function is learned. In some cases, this function is found to be mapped to periodic elements in a song. We find that pre-trained models outperform randomly initialized models when a beat tracking training set is extremely small (less than 10 examples). When this is not the case, pre-training leads to a learning speed-up that causes the model to overfit to the training set. More generally, this work defines new perspectives in the realm of musical self-supervised learning. It is notably one of the first works to use audio source separation as a fundamental component of self-supervision.

\emph{Focused sequent calculi} are a refinement of sequent calculi, where additional side-conditions on the applicability of inference rules force the implementation of a proof search strategy. Focused cut-free proofs exhibit a special normal form that is used for defining identity of sequent calculi proofs. We introduce a novel focused display calculus this http URL and a fully polarized algebraic semantics this http URL for Lambek-Grishin logic by generalizing the theory of \emph{multi-type calculi} and their algebraic semantics with \emph{heterogenous consequence relations}. The calculus this http URL has \emph{strong focalization} and it is \emph{sound and complete} w.r.t. this http URL. This completeness result is in a sense stronger than completeness with respect to standard polarized algebraic semantics (see e.g. the phase semantics of Bastenhof for Lambek-Grishin logic or Hamano and Takemura for linear logic), insofar we do not need to quotient over proofs with consecutive applications of shifts over the same formula. We plan to investigate the connections, if any, between this completeness result and the notion of \emph{full completeness} introduced by Abramsky et al. We also show a number of additional results. this http URL is sound and complete w.r.t. LG-algebras: this amounts to a semantic proof of the so-called \emph{completeness of focusing}, given that the standard (display) sequent calculus for Lambek-Grishin logic is complete w.r.t. LG-algebras. this http URL and the focused calculus this http URL of Moortgat and Moot are equivalent with respect to proofs, indeed there is an effective translation from this http URL-derivations to this http URL-derivations and vice versa: this provides the link with operational semantics, given that every this http URL-derivation is in a Curry-Howard correspondence with a directional λμμ-term.

Recent progress in \emph{Geometric Deep Learning} (GDL) has shown its potential to provide powerful data-driven models. This gives momentum to explore new methods for learning physical systems governed by \emph{Partial Differential Equations} (PDEs) from Graph-Mesh data. However, despite the efforts and recent achievements, several research directions remain unexplored and progress is still far from satisfying the physical requirements of real-world phenomena. One of the major impediments is the absence of benchmarking datasets and common physics evaluation protocols. In this paper, we propose a 2-D graph-mesh dataset to study the airflow over airfoils at high Reynolds regime (from 106 and beyond). We also introduce metrics on the stress forces over the airfoil in order to evaluate GDL models on important physical quantities. Moreover, we provide extensive GDL baselines.

This paper considers a new family of variational distributions motivated by

Sklar's theorem. This family is based on new copula-like densities on the

hypercube with non-uniform marginals which can be sampled efficiently, i.e.

with a complexity linear in the dimension of state space. Then, the proposed

variational densities that we suggest can be seen as arising from these

copula-like densities used as base distributions on the hypercube with Gaussian

quantile functions and sparse rotation matrices as normalizing flows. The

latter correspond to a rotation of the marginals with complexity $\mathcal{O}(d

\log d)$. We provide some empirical evidence that such a variational family can

also approximate non-Gaussian posteriors and can be beneficial compared to

Gaussian approximations. Our method performs largely comparably to

state-of-the-art variational approximations on standard regression and

classification benchmarks for Bayesian Neural Networks.

Language use reveals information about who we are and how we feel1-3. One of the pioneers in text analysis, Walter Weintraub, manually counted which types of words people used in medical interviews and showed that the frequency of first-person singular pronouns (i.e., I, me, my) was a reliable indicator of depression, with depressed people using I more often than people who are not depressed4. Several studies have demonstrated that language use also differs between truthful and deceptive statements5-7, but not all differences are consistent across people and contexts, making prediction difficult8. Here we show how well linguistic deception detection performs at the individual level by developing a model tailored to a single individual: the current US president. Using tweets fact-checked by an independent third party (Washington Post), we found substantial linguistic differences between factually correct and incorrect tweets and developed a quantitative model based on these differences. Next, we predicted whether out-of-sample tweets were either factually correct or incorrect and achieved a 73% overall accuracy. Our results demonstrate the power of linguistic analysis in real-world deception research when applied at the individual level and provide evidence that factually incorrect tweets are not random mistakes of the sender.

12 Jul 2025

High Power Laser (HPL) systems operate in the attoseconds regime -- the shortest timescale ever created by humanity. HPL systems are instrumental in high-energy physics, leveraging ultra-short impulse durations to yield extremely high intensities, which are essential for both practical applications and theoretical advancements in light-matter interactions. Traditionally, the parameters regulating HPL optical performance have been manually tuned by human experts, or optimized using black-box methods that can be computationally demanding. Critically, black box methods rely on stationarity assumptions overlooking complex dynamics in high-energy physics and day-to-day changes in real-world experimental settings, and thus need to be often restarted. Deep Reinforcement Learning (DRL) offers a promising alternative by enabling sequential decision making in non-static settings. This work explores the feasibility of applying DRL to HPL systems, extending the current research by (1) learning a control policy relying solely on non-destructive image observations obtained from readily available diagnostic devices, and (2) retaining performance when the underlying dynamics vary. We evaluate our method across various test dynamics, and observe that DRL effectively enables cross-domain adaptability, coping with dynamics' fluctuations while achieving 90\% of the target intensity in test environments.

29 Feb 2024

The recent developments of quantum computing present potential novel pathways for quantum chemistry, as the increased computational power of quantum computers could be harnessed to naturally encode and solve electronic structure problems. Theoretically exact quantum algorithms for chemistry have been proposed (e.g. Quantum Phase Estimation) but the limited capabilities of current noisy intermediate scale quantum devices (NISQ) motivated the development of less demanding hybrid algorithms. In this context, the Variational Quantum Eigensolver (VQE) algorithm was successfully introduced as an effective method to compute the ground state energy of small molecules. The current study investigates the Folded Spectrum (FS) method as an extension to the VQE algorithm for the computation of molecular excited states. It provides the possibility of directly computing excited states around a selected target energy, using the same ansatz as for the ground state calculation. Inspired by the variance-based methods from the Quantum Monte Carlo literature, the FS method minimizes the energy variance, thus requiring a computationally expensive squared Hamiltonian. We alleviate this potentially poor scaling by employing a Pauli grouping procedure, identifying sets of commuting Pauli strings that can be evaluated simultaneously. This allows for a significant reduction of the computational cost. We apply the FS-VQE method to small molecules (H2,LiH), obtaining all electronic excited states with chemical accuracy on ideal quantum simulators.

30 Nov 2025

Owing to their exceptional chemical and electronic tunability, metal-organic frameworks can be designed to develop magnetic ground states making a range of applications feasible, from magnetic gas separation to the implementation of lightweight, rare-earth free permanent magnets. However, the typically weak exchange interactions mediated by the diamagnetic organic ligands result in ordering temperatures confined to the cryogenic limit. The itinerant magnetic ground state realized in the chromium-based framework Cr(tri)2(CF3SO3)0.33 (Htri, 1H-1,2,3-triazole) is a remarkable exception to this trend, showing a robust ferromagnetic behavior almost at ambient conditions. Here, we use dc SQUID magnetometry, nuclear magnetic resonance, and ferromagnetic resonance to study the magnetic state realized in this material. We highlight several thermally-activated relaxation mechanisms for the nuclear magnetization due to the tendency of electrons towards localization at low temperatures as well as the rotational dynamics of the charge-balancing triflate ions confined within the pores. Most interestingly, we report the development within the paramagnetic regime of mesoscopic magnetic correlated clusters whose slow dynamics in the MHz range are tracked by the nuclear moments, in agreement with the highly unconventional nature of the magnetic transition detected by dc SQUID magnetometry. We discuss the similarity between the clustered phase in the paramagnetic phase and the magnetoelectronic phase segregation leading to colossal magnetoresistance in manganites and cobaltites. These results demonstrate that high-temperature magnetic metal-organic frameworks can serve as a versatile platform for exploring correlated electron phenomena in low-density, chemically tunable materials.

There are no more papers matching your filters at the moment.