École de Technologie Supérieure (ETS)University of Quebec

While image generation techniques are now capable of producing high-quality images that respect prompts which span multiple sentences, the task of text-guided image editing remains a challenge. Even edit requests that consist of only a few words often fail to be executed correctly. We explore three strategies to enhance performance on a wide range of image editing tasks: supervised fine-tuning (SFT), reinforcement learning (RL), and Chain-of-Thought (CoT) reasoning. In order to study all these components in one consistent framework, we adopt an autoregressive multimodal model that processes textual and visual tokens in a unified manner. We find RL combined with a large multi-modal LLM verifier to be the most effective of these strategies. As a result, we release EARL: Editing with Autoregression and RL, a strong RL-based image editing model that performs competitively on a diverse range of edits compared to strong baselines, despite using much less training data. Thus, EARL pushes the frontier of autoregressive multimodal models on image editing. We release our code, training data, and trained models at this https URL.

WebMMU introduces a multimodal, multilingual benchmark that unifies web question answering, mockup-to-code generation, and web code editing tasks using expert-annotated, real-world data across 20 domains and four languages. Evaluations reveal current MLLMs struggle significantly with multi-step reasoning, precise element grounding, layout fidelity in code generation, and producing functionally correct code edits, particularly in non-English languages.

The new SysMLv2 adds mechanisms for the built-in specification of domain-specific concepts and language extensions. This feature promises to facilitate the creation of Domain-Specific Languages (DSLs) and interfacing with existing system descriptions and technical designs. In this paper, we review these features and evaluate SysMLv2's capabilities using concrete use cases. We develop DarTwin DSL, a DSL that formalizes the existing DarTwin notation for Digital Twin (DT) evolution, through SysMLv2, thereby supposedly enabling the wide application of DarTwin's evolution templates using any SysMLv2 tool. We demonstrate DarTwin DSL, but also point out limitations in the currently available tooling of SysMLv2 in terms of graphical notation capabilities. This work contributes to the growing field of Model-Driven Engineering (MDE) for DTs and combines it with the release of SysMLv2, thus integrating a systematic approach with DT evolution management in systems engineering.

We present a novel longitudinal multimodal corpus of physiological and

behavioral data collected from direct clinical providers in a hospital

workplace. We designed the study to investigate the use of off-the-shelf

wearable and environmental sensors to understand individual-specific constructs

such as job performance, interpersonal interaction, and well-being of hospital

workers over time in their natural day-to-day job settings. We collected

behavioral and physiological data from n=212 participants through

Internet-of-Things Bluetooth data hubs, wearable sensors (including a

wristband, a biometrics-tracking garment, a smartphone, and an audio-feature

recorder), together with a battery of surveys to assess personality traits,

behavioral states, job performance, and well-being over time. Besides the

default use of the data set, we envision several novel research opportunities

and potential applications, including multi-modal and multi-task behavioral

modeling, authentication through biometrics, and privacy-aware and

privacy-preserving machine learning.

Objective. This paper presents an overview of generalizable and explainable

artificial intelligence (XAI) in deep learning (DL) for medical imaging, aimed

at addressing the urgent need for transparency and explainability in clinical

applications.

Methodology. We propose to use four CNNs in three medical datasets (brain

tumor, skin cancer, and chest x-ray) for medical image classification tasks. In

addition, we perform paired t-tests to show the significance of the differences

observed between different methods. Furthermore, we propose to combine ResNet50

with five common XAI techniques to obtain explainable results for model

prediction, aiming at improving model transparency. We also involve a

quantitative metric (confidence increase) to evaluate the usefulness of XAI

techniques.

Key findings. The experimental results indicate that ResNet50 can achieve

feasible accuracy and F1 score in all datasets (e.g., 86.31\% accuracy in skin

cancer). Furthermore, the findings show that while certain XAI methods, such as

XgradCAM, effectively highlight relevant abnormal regions in medical images,

others, like EigenGradCAM, may perform less effectively in specific scenarios.

In addition, XgradCAM indicates higher confidence increase (e.g., 0.12 in

glioma tumor) compared to GradCAM++ (0.09) and LayerCAM (0.08).

Implications. Based on the experimental results and recent advancements, we

outline future research directions to enhance the robustness and

generalizability of DL models in the field of biomedical imaging.

This paper reviews pioneering works in microphone array processing and

multichannel speech enhancement, highlighting historical achievements,

technological evolution, commercialization aspects, and key challenges. It

provides valuable insights into the progression and future direction of these

areas. The paper examines foundational developments in microphone array design

and optimization, showcasing innovations that improved sound acquisition and

enhanced speech intelligibility in noisy and reverberant environments. It then

introduces recent advancements and cutting-edge research in the field,

particularly the integration of deep learning techniques such as all-neural

beamformers. The paper also explores critical applications, discussing their

evolution and current state-of-the-art technologies that significantly impact

user experience. Finally, the paper outlines future research directions,

identifying challenges and potential solutions that could drive further

innovation in these fields. By providing a comprehensive overview and

forward-looking perspective, this paper aims to inspire ongoing research and

contribute to the sustained growth and development of microphone arrays and

multichannel speech enhancement.

06 Jul 2019

In this paper we present a novel approach for extracting a Bag-of-Words (BoW) representation based on a Neural Network codebook. The conventional BoW model is based on a dictionary (codebook) built from elementary representations which are selected randomly or by using a clustering algorithm on a training dataset. A metric is then used to assign unseen elementary representations to the closest dictionary entries in order to produce a histogram. In the proposed approach, an autoencoder (AE) encompasses the role of both the dictionary creation and the assignment metric. The dimension of the encoded layer of the AE corresponds to the size of the dictionary and the output of its neurons represents the assignment metric. Experimental results for the continuous emotion prediction task on the AVEC 2017 audio dataset have shown an improvement of the Concordance Correlation Coefficient (CCC) from 0.225 to 0.322 for arousal dimension and from 0.244 to 0.368 for valence dimension relative to the conventional BoW version implemented in a baseline system.

Consecutive frames in a video contain redundancy, but they may also contain

relevant complementary information for the detection task. The objective of our

work is to leverage this complementary information to improve detection.

Therefore, we propose a spatio-temporal fusion framework (STF). We first

introduce multi-frame and single-frame attention modules that allow a neural

network to share feature maps between nearby frames to obtain more robust

object representations. Second, we introduce a dual-frame fusion module that

merges feature maps in a learnable manner to improve them. Our evaluation is

conducted on three different benchmarks including video sequences of moving

road users. The performed experiments demonstrate that the proposed

spatio-temporal fusion module leads to improved detection performance compared

to baseline object detectors. Code is available at

this https URL

Embedders play a central role in machine learning, projecting any object into

numerical representations that can, in turn, be leveraged to perform various

downstream tasks. The evaluation of embedding models typically depends on

domain-specific empirical approaches utilizing downstream tasks, primarily

because of the lack of a standardized framework for comparison. However,

acquiring adequately large and representative datasets for conducting these

assessments is not always viable and can prove to be prohibitively expensive

and time-consuming. In this paper, we present a unified approach to evaluate

embedders. First, we establish theoretical foundations for comparing embedding

models, drawing upon the concepts of sufficiency and informativeness. We then

leverage these concepts to devise a tractable comparison criterion (information

sufficiency), leading to a task-agnostic and self-supervised ranking procedure.

We demonstrate experimentally that our approach aligns closely with the

capability of embedding models to facilitate various downstream tasks in both

natural language processing and molecular biology. This effectively offers

practitioners a valuable tool for prioritizing model trials.

15 Oct 2015

Reverberation, especially in large rooms, severely degrades speech recognition performance and speech intelligibility. Since direct measurement of room characteristics is usually not possible, blind estimation of reverberation-related metrics such as the reverberation time (RT) and the direct-to-reverberant energy ratio (DRR) can be valuable information to speech recognition and enhancement algorithms operating in enclosed environments. The objective of this work is to evaluate the performance of five variants of blind RT and DRR estimators based on a modulation spectrum representation of reverberant speech with single- and multi-channel speech data. These models are all based on variants of the so-called Speech-to-Reverberation Modulation Energy Ratio (SRMR). We show that these measures outperform a state-of-the-art baseline based on maximum-likelihood estimation of sound decay rates in terms of root-mean square error (RMSE), as well as Pearson correlation. Compared to the baseline, the best proposed measure, called NSRMR_k , achieves a 23% relative improvement in terms of RMSE and allows for relative correlation improvements ranging from 13% to 47% for RT prediction.

The field of distributed machine learning (ML) faces increasing demands for scalable and cost-effective training solutions, particularly in the context of large, complex models. Serverless computing has emerged as a promising paradigm to address these challenges by offering dynamic scalability and resource-efficient execution. Building upon our previous work, which introduced the Serverless Peer Integrated for Robust Training (SPIRT) architecture, this paper presents a comparative analysis of several serverless distributed ML architectures. We examine SPIRT alongside established architectures like ScatterReduce, AllReduce, and MLLess, focusing on key metrics such as training time efficiency, cost-effectiveness, communication overhead, and fault tolerance capabilities. Our findings reveal that SPIRT provides significant improvements in reducing training times and communication overhead through strategies such as parallel batch processing and in-database operations facilitated by RedisAI. However, traditional architectures exhibit scalability challenges and varying degrees of vulnerability to faults and adversarial attacks. The cost analysis underscores the long-term economic benefits of SPIRT despite its higher initial setup costs. This study not only highlights the strengths and limitations of current serverless ML architectures but also sets the stage for future research aimed at developing new models that combine the most effective features of existing systems.

In this paper, we investigate the potential effect of the adversarially

training on the robustness of six advanced deep neural networks against a

variety of targeted and non-targeted adversarial attacks. We firstly show that,

the ResNet-56 model trained on the 2D representation of the discrete wavelet

transform appended with the tonnetz chromagram outperforms other models in

terms of recognition accuracy. Then we demonstrate the positive impact of

adversarially training on this model as well as other deep architectures

against six types of attack algorithms (white and black-box) with the cost of

the reduced recognition accuracy and limited adversarial perturbation. We run

our experiments on two benchmarking environmental sound datasets and show that

without any imposed limitations on the budget allocations for the adversary,

the fooling rate of the adversarially trained models can exceed 90\%. In other

words, adversarial attacks exist in any scales, but they might require higher

adversarial perturbations compared to non-adversarially trained models.

In Federated Learning (FL), training is conducted on client devices,

typically with limited computational resources and storage capacity. To address

these constraints, we propose an automatic pruning scheme tailored for FL

systems. Our solution improves computation efficiency on client devices, while

minimizing communication costs. One of the challenges of tuning pruning

hyper-parameters in FL systems is the restricted access to local data. Thus, we

introduce an automatic pruning paradigm that dynamically determines pruning

boundaries. Additionally, we utilized a structured pruning algorithm optimized

for mobile devices that lack hardware support for sparse computations.

Experimental results demonstrate the effectiveness of our approach, achieving

accuracy comparable to existing methods. Our method notably reduces the number

of parameters by 89% and FLOPS by 90%, with minimal impact on the accuracy of

the FEMNIST and CelebFaces datasets. Furthermore, our pruning method decreases

communication overhead by up to 5x and halves inference time when deployed on

Android devices.

Assessing the quality of summarizers poses significant challenges. In response, we propose a novel task-oriented evaluation approach that assesses summarizers based on their capacity to produce summaries that are useful for downstream tasks, while preserving task outcomes. We theoretically establish a direct relationship between the resulting error probability of these tasks and the mutual information between source texts and generated summaries. We introduce COSMIC as a practical implementation of this metric, demonstrating its strong correlation with human judgment-based metrics and its effectiveness in predicting downstream task performance. Comparative analyses against established metrics like BERTScore and ROUGE highlight the competitive performance of COSMIC.

This study investigates a 3D and fully convolutional neural network (CNN) for

subcortical brain structure segmentation in MRI. 3D CNN architectures have been

generally avoided due to their computational and memory requirements during

inference. We address the problem via small kernels, allowing deeper

architectures. We further model both local and global context by embedding

intermediate-layer outputs in the final prediction, which encourages

consistency between features extracted at different scales and embeds

fine-grained information directly in the segmentation process. Our model is

efficiently trained end-to-end on a graphics processing unit (GPU), in a single

stage, exploiting the dense inference capabilities of fully CNNs.

We performed comprehensive experiments over two publicly available datasets.

First, we demonstrate a state-of-the-art performance on the ISBR dataset. Then,

we report a {\em large-scale} multi-site evaluation over 1112 unregistered

subject datasets acquired from 17 different sites (ABIDE dataset), with ages

ranging from 7 to 64 years, showing that our method is robust to various

acquisition protocols, demographics and clinical factors. Our method yielded

segmentations that are highly consistent with a standard atlas-based approach,

while running in a fraction of the time needed by atlas-based methods and

avoiding registration/normalization steps. This makes it convenient for massive

multi-site neuroanatomical imaging studies. To the best of our knowledge, our

work is the first to study subcortical structure segmentation on such

large-scale and heterogeneous data.

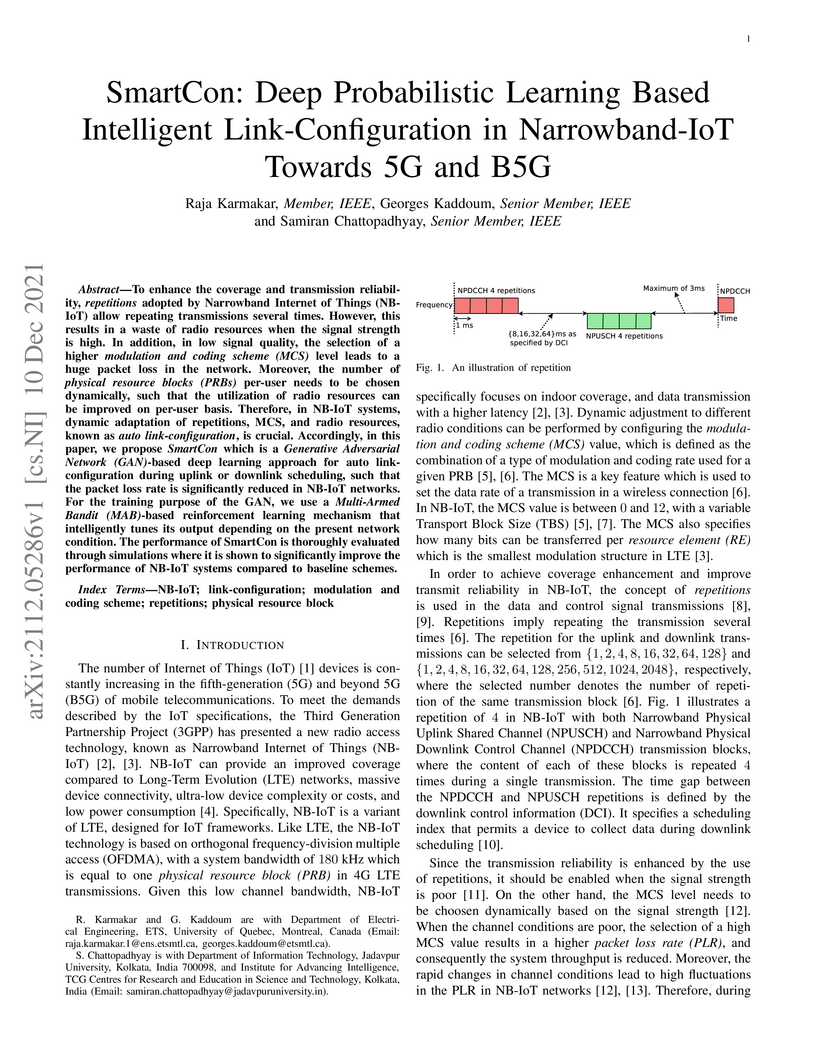

To enhance the coverage and transmission reliability, repetitions adopted by Narrowband Internet of Things (NB-IoT) allow repeating transmissions several times. However, this results in a waste of radio resources when the signal strength is high. In addition, in low signal quality, the selection of a higher modulation and coding scheme (MCS) level leads to a huge packet loss in the network. Moreover, the number of physical resource blocks (PRBs) per-user needs to be chosen dynamically, such that the utilization of radio resources can be improved on per-user basis. Therefore, in NB-IoT systems, dynamic adaptation of repetitions, MCS, and radio resources, known as auto link-configuration, is crucial. Accordingly, in this paper, we propose SmartCon which is a Generative Adversarial Network (GAN)-based deep learning approach for auto link-configuration during uplink or downlink scheduling, such that the packet loss rate is significantly reduced in NB-IoT networks. For the training purpose of the GAN, we use a Multi-Armed Bandit (MAB)-based reinforcement learning mechanism that intelligently tunes its output depending on the present network condition. The performance of SmartCon is thoroughly evaluated through simulations where it is shown to significantly improve the performance of NB-IoT systems compared to baseline schemes.

Integer Linear Programming (ILP) formulations of Markov random fields (MRFs) models with global connectivity priors were investigated previously in computer vision, e.g., \cite{globalinter,globalconn}. In these works, only Linear Programing (LP) relaxations \cite{globalinter,globalconn} or simplified versions \cite{graphcutbase} of the problem were solved. This paper investigates the ILP of multi-label MRF with exact connectivity priors via a branch-and-cut method, which provably finds globally optimal solutions. The method enforces connectivity priors iteratively by a cutting plane method, and provides feasible solutions with a guarantee on sub-optimality even if we terminate it earlier. The proposed ILP can be applied as a post-processing method on top of any existing multi-label segmentation approach. As it provides globally optimal solution, it can be used off-line to generate ground-truth labeling, which serves as quality check for any fast on-line algorithm. Furthermore, it can be used to generate ground-truth proposals for weakly supervised segmentation. We demonstrate the power and usefulness of our model by several experiments on the BSDS500 and PASCAL image dataset, as well as on medical images with trained probability maps.

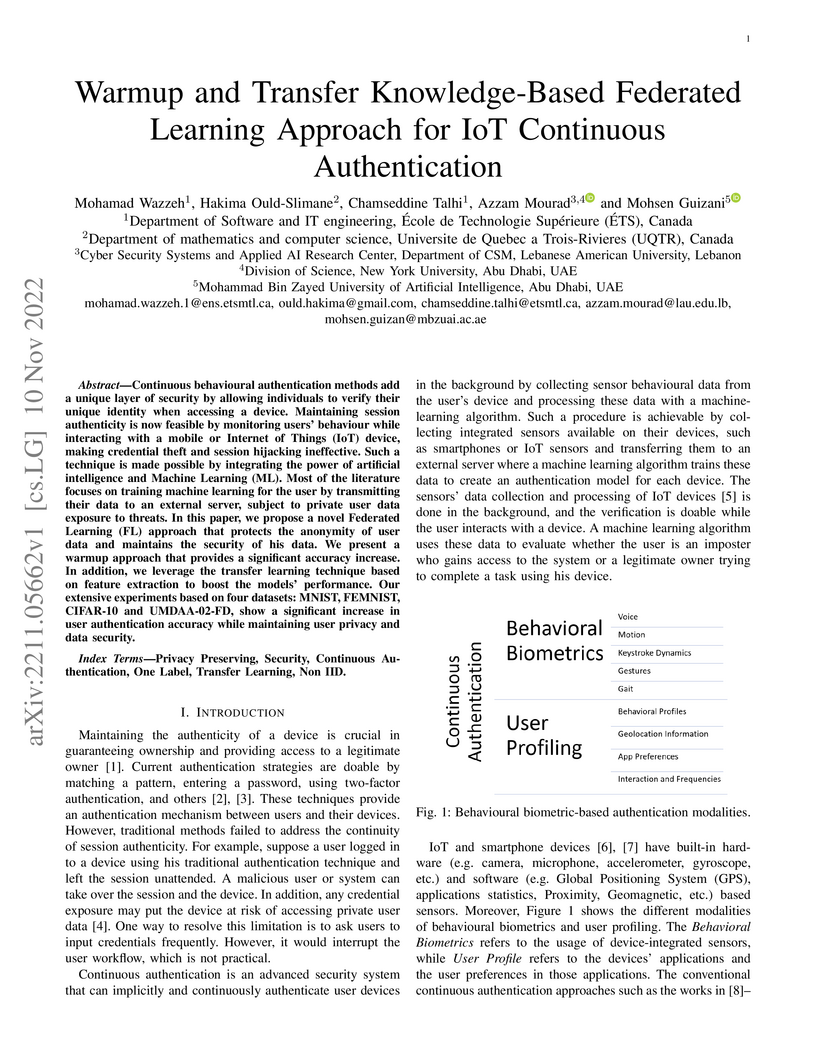

Continuous behavioural authentication methods add a unique layer of security

by allowing individuals to verify their unique identity when accessing a

device. Maintaining session authenticity is now feasible by monitoring users'

behaviour while interacting with a mobile or Internet of Things (IoT) device,

making credential theft and session hijacking ineffective. Such a technique is

made possible by integrating the power of artificial intelligence and Machine

Learning (ML). Most of the literature focuses on training machine learning for

the user by transmitting their data to an external server, subject to private

user data exposure to threats. In this paper, we propose a novel Federated

Learning (FL) approach that protects the anonymity of user data and maintains

the security of his data. We present a warmup approach that provides a

significant accuracy increase. In addition, we leverage the transfer learning

technique based on feature extraction to boost the models' performance. Our

extensive experiments based on four datasets: MNIST, FEMNIST, CIFAR-10 and

UMDAA-02-FD, show a significant increase in user authentication accuracy while

maintaining user privacy and data security.

06 Jun 2025

Atomic force microscopy (AFM) enables high-resolution imaging and quantitative force measurement, which is critical for understanding nanoscale mechanical, chemical, and biological interactions. In dynamic AFM modes, however, interaction forces are not directly measured; they must be mathematically reconstructed from observables such as amplitude, phase, or frequency shift. Many reconstruction techniques have been proposed over the last two decades, but they rely on different assumptions and have been applied inconsistently, limiting reproducibility and cross-study comparison. Here, we systematically evaluate major force reconstruction methods in both frequency- and amplitude-modulation AFM, detailing their theoretical foundations, performance regimes, and sources of error. To support benchmarking and reproducibility, we introduce an open-source software package that unifies all widely used methods, enabling side-by-side comparisons across different formulations. This work represents a critical step toward achieving consistent and interpretable AFM force spectroscopy, thereby supporting the more reliable application of AFM in fields ranging from materials science to biophysics.

In this paper, an adjustment to the original differentially private

stochastic gradient descent (DPSGD) algorithm for deep learning models is

proposed. As a matter of motivation, to date, almost no state-of-the-art

machine learning algorithm hires the existing privacy protecting components due

to otherwise serious compromise in their utility despite the vital necessity.

The idea in this study is natural and interpretable, contributing to improve

the utility with respect to the state-of-the-art. Another property of the

proposed technique is its simplicity which makes it again more natural and also

more appropriate for real world and specially commercial applications. The

intuition is to trim and balance out wild individual discrepancies for privacy

reasons, and at the same time, to preserve relative individual differences for

seeking performance. The idea proposed here can also be applied to the

recurrent neural networks (RNN) to solve the gradient exploding problem. The

algorithm is applied to benchmark datasets MNIST and CIFAR-10 for a

classification task and the utility measure is calculated. The results

outperformed the original work.

There are no more papers matching your filters at the moment.