Academia Sinica

06 Nov 2024

We present the AM3 ("Astrophysical Multi-Messenger Modeling") software. AM3 is a documented open source software that efficiently solves the coupled integro-differential equations describing the temporal evolution of the spectral densities of particles interacting in astrophysical environments, in-cluding photons, electrons, positrons, protons, neutrons, pions, muons, and neutrinos. The software has been extensively used to simulate the multi-wavelength and neutrino emission from active galactic nuclei (including blazars), gamma-ray bursts, and tidal disruption events. The simulations include all relevant non-thermal processes, namely synchrotron emission, inverse Compton scattering, photon-photon annihilation, proton-proton and proton-photon pion production, and photo-pair production. The software self-consistently calculates the full cascade of primary and secondary particles, including non-linear feedback processes and predictions in the time domain. It also allows to track separately the particle densities produced by means of each distinct interaction processes, including the different hadronic channels. With its efficient hybrid solver combining analytical and numerical techniques, AM3 combines efficiency and accuracy at a user-adjustable level. We describe the technical details of the numerical framework and present three examples of applications to different astrophysical environments.

09 Oct 2025

Academia SinicaNational Astronomical Observatory of Japan UC Berkeley

UC Berkeley University College LondonNational Taiwan University

University College LondonNational Taiwan University University of Michigan

University of Michigan Boston UniversityKavli Institute for the Physics and Mathematics of the UniverseThe University of Texas at Dallas

Boston UniversityKavli Institute for the Physics and Mathematics of the UniverseThe University of Texas at Dallas Lawrence Berkeley National Laboratory

Lawrence Berkeley National Laboratory Sorbonne UniversitéFermi National Accelerator LaboratoryUniversitat Politècnica de CatalunyaUniversity of Portsmouth

Sorbonne UniversitéFermi National Accelerator LaboratoryUniversitat Politècnica de CatalunyaUniversity of Portsmouth The Ohio State UniversitySejong UniversityUniversidad Nacional Autónoma de MéxicoUniversitat Autònoma de Barcelona

The Ohio State UniversitySejong UniversityUniversidad Nacional Autónoma de MéxicoUniversitat Autònoma de Barcelona University of California, Santa CruzNSF NOIRLabUniversidad de Los AndesUniversity of WyomingCIEMATInstitut de Física d’Altes Energies (IFAE)Institució Catalana de Recerca i Estudis AvançatsSiena CollegeInstituto Astrofisica de CanariasInstitute of Space Sciences (ICE–CSIC)Universit

degli Studi di MilanoINAF

Osservatorio Astronomico di Brera

University of California, Santa CruzNSF NOIRLabUniversidad de Los AndesUniversity of WyomingCIEMATInstitut de Física d’Altes Energies (IFAE)Institució Catalana de Recerca i Estudis AvançatsSiena CollegeInstituto Astrofisica de CanariasInstitute of Space Sciences (ICE–CSIC)Universit

degli Studi di MilanoINAF

Osservatorio Astronomico di Brera

UC Berkeley

UC Berkeley University College LondonNational Taiwan University

University College LondonNational Taiwan University University of Michigan

University of Michigan Boston UniversityKavli Institute for the Physics and Mathematics of the UniverseThe University of Texas at Dallas

Boston UniversityKavli Institute for the Physics and Mathematics of the UniverseThe University of Texas at Dallas Lawrence Berkeley National Laboratory

Lawrence Berkeley National Laboratory Sorbonne UniversitéFermi National Accelerator LaboratoryUniversitat Politècnica de CatalunyaUniversity of Portsmouth

Sorbonne UniversitéFermi National Accelerator LaboratoryUniversitat Politècnica de CatalunyaUniversity of Portsmouth The Ohio State UniversitySejong UniversityUniversidad Nacional Autónoma de MéxicoUniversitat Autònoma de Barcelona

The Ohio State UniversitySejong UniversityUniversidad Nacional Autónoma de MéxicoUniversitat Autònoma de Barcelona University of California, Santa CruzNSF NOIRLabUniversidad de Los AndesUniversity of WyomingCIEMATInstitut de Física d’Altes Energies (IFAE)Institució Catalana de Recerca i Estudis AvançatsSiena CollegeInstituto Astrofisica de CanariasInstitute of Space Sciences (ICE–CSIC)Universit

degli Studi di MilanoINAF

Osservatorio Astronomico di Brera

University of California, Santa CruzNSF NOIRLabUniversidad de Los AndesUniversity of WyomingCIEMATInstitut de Física d’Altes Energies (IFAE)Institució Catalana de Recerca i Estudis AvançatsSiena CollegeInstituto Astrofisica de CanariasInstitute of Space Sciences (ICE–CSIC)Universit

degli Studi di MilanoINAF

Osservatorio Astronomico di BreraThis study comprehensively characterized the cool circumgalactic medium (CGM) around galaxies at redshifts below 0.4 using data from the Dark Energy Spectroscopic Instrument (DESI) Year 1 survey. It reveals persistent correlations between cool gas absorption and galaxy properties like stellar mass and star formation rate, along with an unexpected absence of azimuthal anisotropy, indicating a possible evolution in CGM dynamics at lower redshifts.

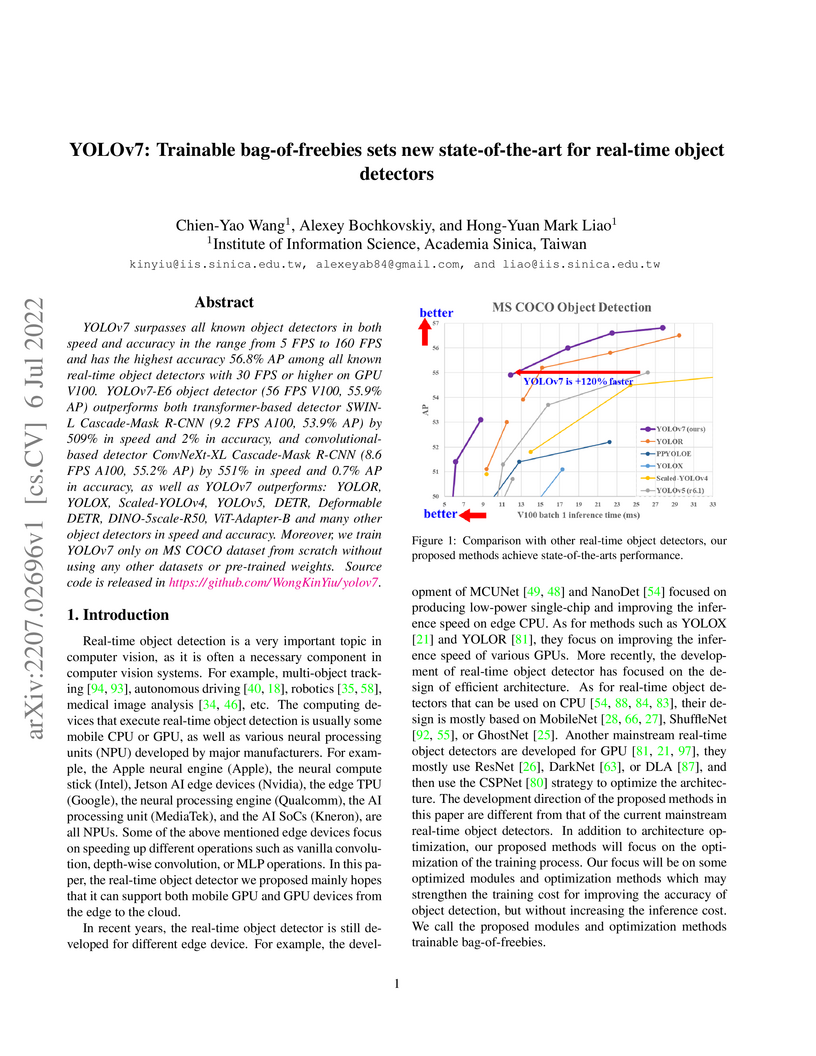

YOLOv7, developed by researchers at Academia Sinica, pushes the boundaries of real-time object detection by introducing trainable 'bag-of-freebies' optimizations that enhance accuracy without increasing inference computational cost. The model achieved 56.8% AP on MS COCO and is 1200% faster than comparable transformer-based models, demonstrating improved speed-accuracy trade-offs.

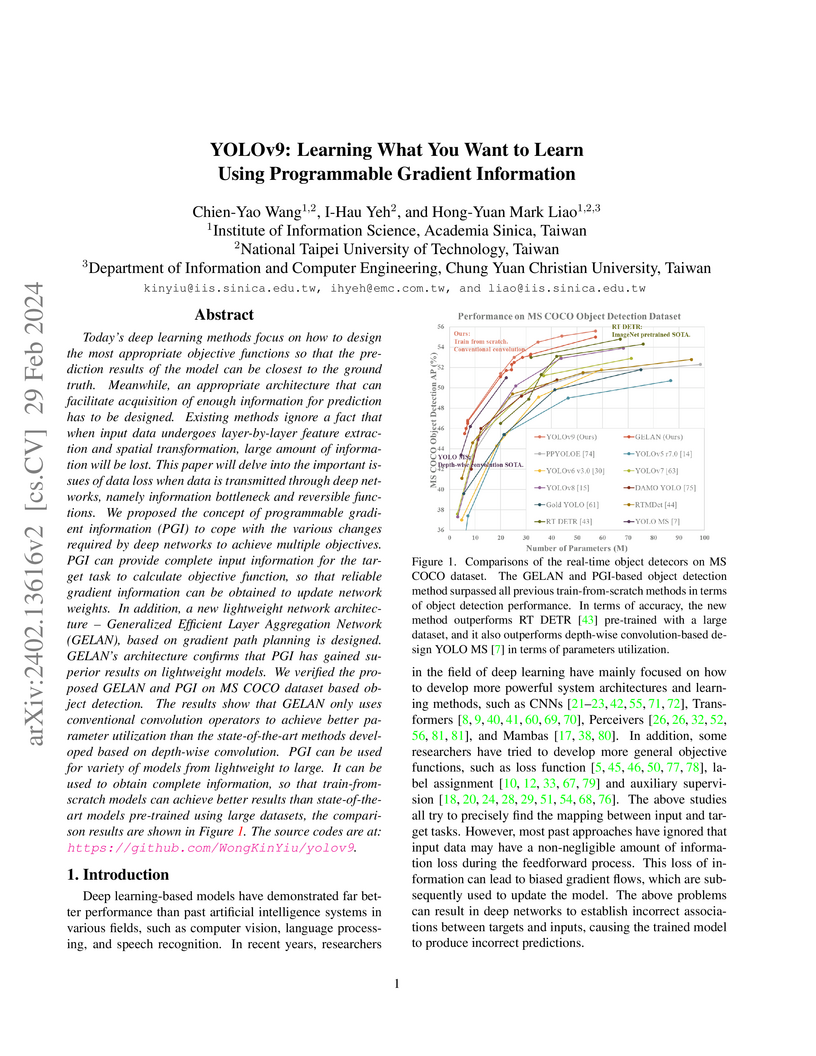

YOLOv9 introduces Programmable Gradient Information (PGI) and a Generalized Efficient Layer Aggregation Network (GELAN) to address information loss in deep networks, achieving state-of-the-art real-time object detection performance when trained from scratch on MS COCO.

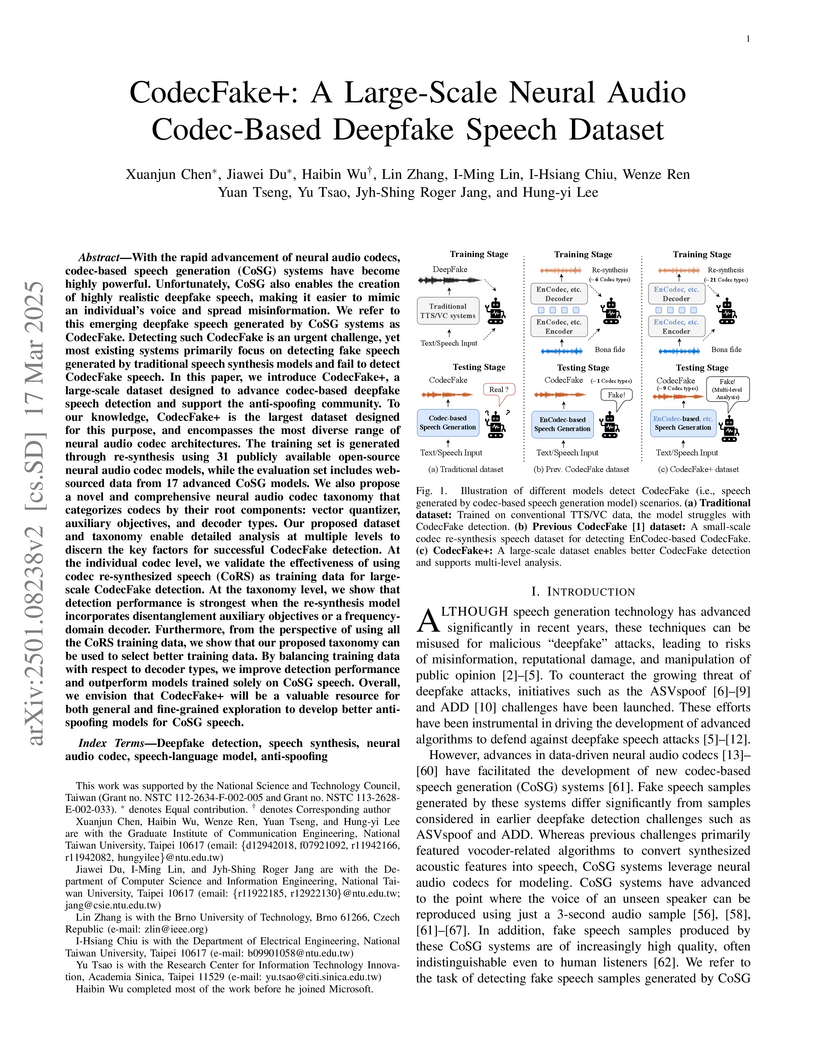

Researchers at National Taiwan University introduced CodecFake+, a large-scale dataset, and a novel taxonomy for neural audio codecs, enabling improved deepfake speech detection. A taxonomy-guided data balancing strategy achieved an 11.91% Equal Error Rate on a diverse set of unseen CodecFake samples.

This survey paper from National Taiwan University and collaborators provides the first systematic taxonomy of persona in Large Language Models, organizing research into two categories: LLM Role-Playing and LLM Personalization. It identifies diverse applications, agent schemas, and emergent behaviors, while also highlighting critical challenges such as data scarcity, bias, and privacy concerns across various domains.

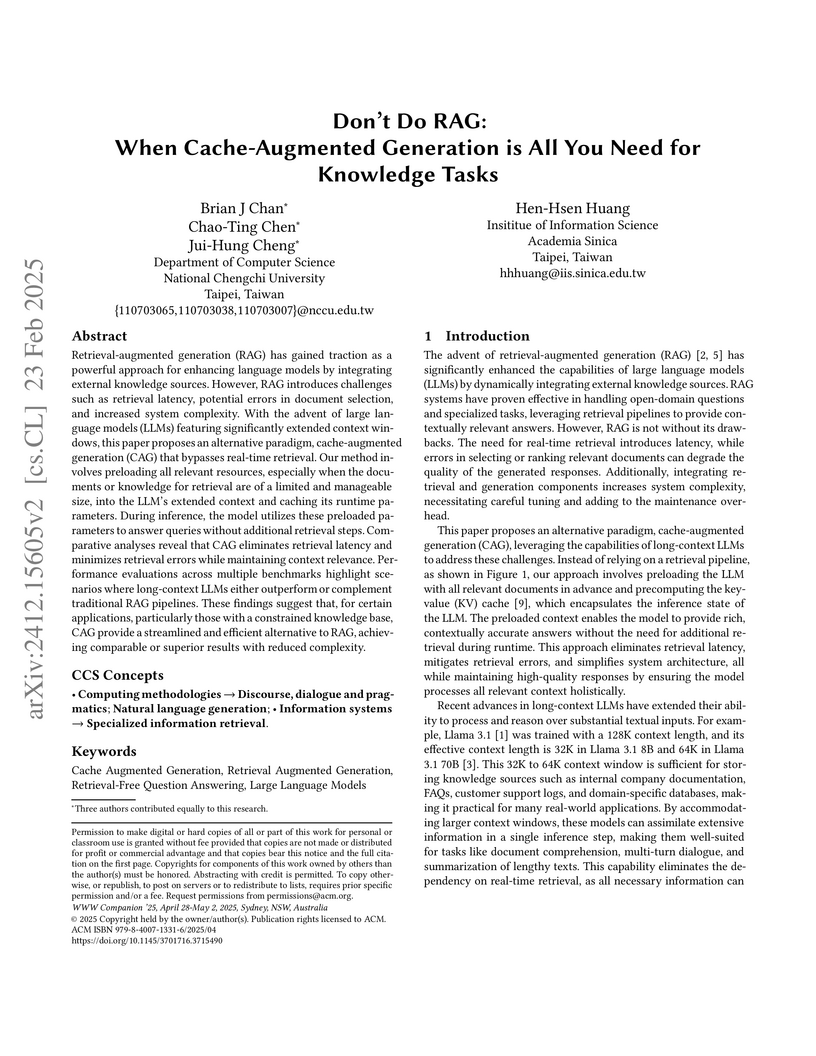

The paper introduces Cache-Augmented Generation (CAG) as an alternative to Retrieval-Augmented Generation (RAG) for knowledge tasks, by preloading an entire knowledge base into an LLM's extended context and precomputing its Key-Value cache. This approach eliminates real-time retrieval, leading to improved efficiency and accuracy over RAG, particularly for manageable knowledge bases.

24 Sep 2025

ThinkFake introduces a framework leveraging Multimodal Large Language Models (MLLMs) and reinforcement learning to detect AI-generated images with enhanced interpretability. The system provides detailed, step-by-step reasoning explanations while achieving 84.0% mean accuracy, outperforming existing state-of-the-art methods and demonstrating robust generalization on unseen content.

Researchers developed a parameter-efficient fine-tuning framework that adapts a frozen CLIP Vision Transformer for generalized video-based deepfake detection by employing a side-network decoder and Facial Component Guidance. This method demonstrated superior generalizability to unseen deepfake datasets with an average AUROC of 92.3%, enhanced robustness to perturbations, and required only 250,000 trainable parameters.

09 Oct 2023

Motivated by the fermionic Berry's phase in momentum space, we study a local

Abelian phase in momentum space coupled to electromagnetism, for complex

scalars in the phase-space worldline formalism. The interaction of both Abelian

fields is shown to give rise to a momentum gauge dependent emergent spacetime.

As a concrete example, we further study classical solutions of the

Berry-inspired gauge field that lead to an emergent Newtonian gravity with

gravitational potential predicted by coupled Coulomb fields both in

configuration and momentum spaces. Noncommutative aspects of the theory are

also provided.

24 Sep 2025

Lin et al. (2025) investigates the relationship between an LLM's answer generation and evaluation capabilities, revealing a weak correlation under standard conditions. The study introduces a self-reference-guided evaluation strategy that significantly strengthens this correlation, making a model's generation accuracy a more reliable indicator for judge selection.

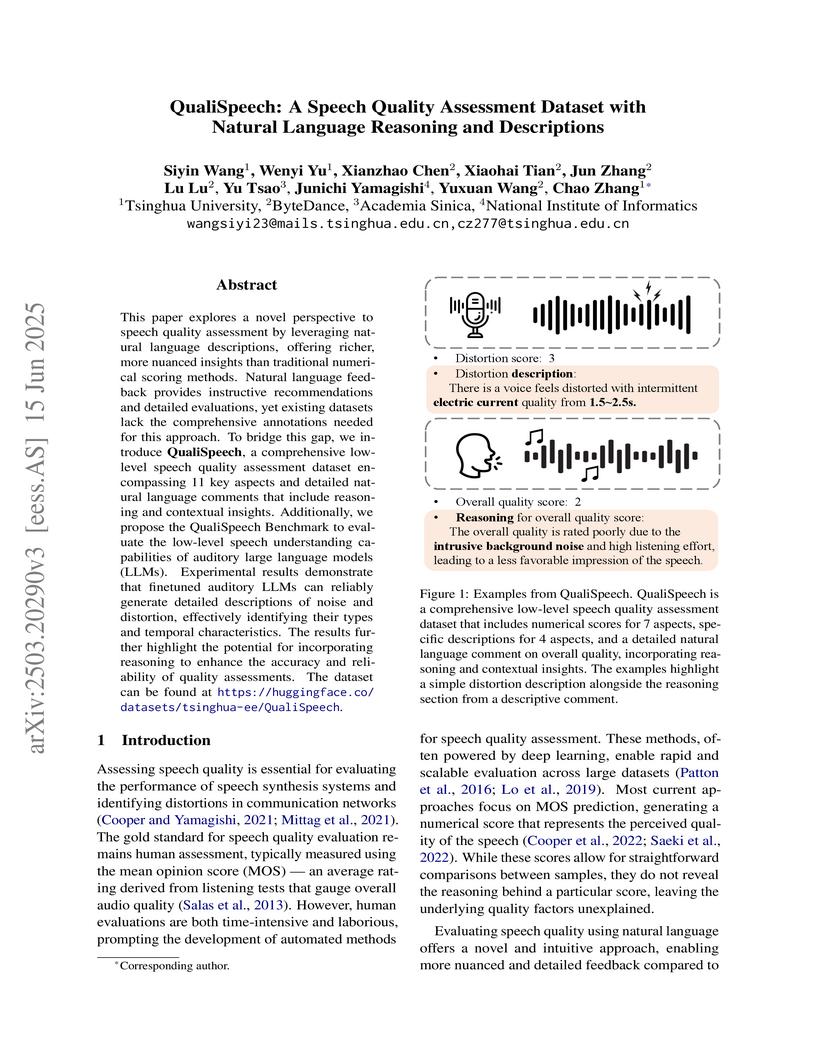

This paper explores a novel perspective to speech quality assessment by leveraging natural language descriptions, offering richer, more nuanced insights than traditional numerical scoring methods. Natural language feedback provides instructive recommendations and detailed evaluations, yet existing datasets lack the comprehensive annotations needed for this approach. To bridge this gap, we introduce QualiSpeech, a comprehensive low-level speech quality assessment dataset encompassing 11 key aspects and detailed natural language comments that include reasoning and contextual insights. Additionally, we propose the QualiSpeech Benchmark to evaluate the low-level speech understanding capabilities of auditory large language models (LLMs). Experimental results demonstrate that finetuned auditory LLMs can reliably generate detailed descriptions of noise and distortion, effectively identifying their types and temporal characteristics. The results further highlight the potential for incorporating reasoning to enhance the accuracy and reliability of quality assessments. The dataset will be released at this https URL.

03 Aug 2021

While there are many music datasets with emotion labels in the literature, they cannot be used for research on symbolic-domain music analysis or generation, as there are usually audio files only. In this paper, we present the EMOPIA (pronounced `yee-mò-pi-uh') dataset, a shared multi-modal (audio and MIDI) database focusing on perceived emotion in pop piano music, to facilitate research on various tasks related to music emotion. The dataset contains 1,087 music clips from 387 songs and clip-level emotion labels annotated by four dedicated annotators. Since the clips are not restricted to one clip per song, they can also be used for song-level analysis. We present the methodology for building the dataset, covering the song list curation, clip selection, and emotion annotation processes. Moreover, we prototype use cases on clip-level music emotion classification and emotion-based symbolic music generation by training and evaluating corresponding models using the dataset. The result demonstrates the potential of EMOPIA for being used in future exploration on piano emotion-related MIR tasks.

13 Oct 2025

Document expansion (DE) via query generation tackles vocabulary mismatch in sparse retrieval, yet faces limitations: uncontrolled generation producing hallucinated or redundant queries with low diversity; poor generalization from in-domain training (e.g., MS MARCO) to out-of-domain data like BEIR; and noise from concatenation harming dense retrieval. While Large Language Models (LLMs) enable cross-domain query generation, basic prompting lacks control, and taxonomy-based methods rely on domain-specific structures, limiting applicability. To address these challenges, we introduce Doc2Query++, a DE framework that structures query generation by first inferring a document's latent topics via unsupervised topic modeling for cross-domain applicability, then using hybrid keyword selection to create a diverse and relevant keyword set per document. This guides LLM not only to leverage keywords, which ensure comprehensive topic representation, but also to reduce redundancy through diverse, relevant terms. To prevent noise from query appending in dense retrieval, we propose Dual-Index Fusion strategy that isolates text and query signals, boosting performance in dense settings. Extensive experiments show Doc2Query++ significantly outperforms state-of-the-art baselines, achieving substantial gains in MAP, nDCG@10 and Recall@100 across diverse datasets on both sparse and dense retrieval.

03 Oct 2025

Tohoku UniversityAcademia SinicaNational Astronomical Observatory of Japan

Tohoku UniversityAcademia SinicaNational Astronomical Observatory of Japan Nagoya University

Nagoya University Peking UniversityKavli Institute for the Physics and Mathematics of the Universe

Peking UniversityKavli Institute for the Physics and Mathematics of the Universe University of Tokyo

University of Tokyo Chalmers University of TechnologyRitsumeikan UniversityUniversity of MassachusettsEhime University

Chalmers University of TechnologyRitsumeikan UniversityUniversity of MassachusettsEhime University Waseda UniversityKavli Institute for Astronomy and AstrophysicsUniversity of ToyamaFrontier Research Institute for Interdisciplinary Sciences

Waseda UniversityKavli Institute for Astronomy and AstrophysicsUniversity of ToyamaFrontier Research Institute for Interdisciplinary SciencesOne of the most remarkable discoveries of JWST is a population of compact, red sources at z > 4, commonly referred to as Little Red Dots (LRDs). Spectroscopic identifications reported that most LRDs are active galactic nuclei (AGNs), which are preferentially found around z~6 and could imply a key phase in the formation and growth of black holes (BHs) in the early universe. Photometric surveys at lower redshift have recently been carried out to trace their evolution across cosmic time, and a small number of LRDs have been spectroscopically identified at both Cosmic Noon and in the local universe. Here we report the discovery of one of the lowest-z analogs of LRDs, J204837.26-002437.2 (hereafter J2048) at z = 0.4332, using new Gemini-N/GMOS IFU observations combined with archival multi-band photometric SED data. The GMOS data reveal extended blue emission from starburst with a star formation rate of 400 Msun yr-1, together with an extended, highly fast ionized outflow. This is the first spectroscopic confirmation of extended host emission and outflow in an LRD-like galaxy, providing a unique laboratory for understanding the nature of their high-redshift counterparts. Moreover, J2048 would host an extremely overmassive BH with a BH-to-stellar mass ratio of 0.6, with the BH mass and host stellar mass estimated to be 10^10.2 and 10^10.4 Msun, respectively. We discuss the origin and evolutionary fate of J2048, and the implications that such low-z analogs have for interpreting the properties of high-z LRDs.

An inference-time, backpropagation-free method called "Demon" enables aligning diffusion models with user preferences using arbitrary, non-differentiable reward functions, including those from proprietary Visual-Language Model APIs and human feedback. This approach improved LAION aesthetics scores for Stable Diffusion v1.4 from an upper bound of approximately 6.5 to 7.394 and for SDXL from 7 to 7.841.

Kramers-Wannier duality, a hallmark of the Ising model, has recently gained renewed interest through its reinterpretation as a non-invertible symmetry with a state-level action. Using sequential quantum circuits (SQC), we argue that this duality governs the stability of quantum many-body scar (QMBS) states in a nonintegrable model, depending on whether the dual preserves the embedding conditions for scarring. This is supported by good agreement between first-order perturbation theory and numerics, which capture scar dynamics despite chaotic spectra. Our results establish non-invertible dualities as both a generative mechanism and a diagnostic tool for quantum many- body scarring, offering a generalized symmetry-based route to weak ergodicity breaking.

The Interspeech 2025 URGENT Challenge aimed to advance universal, robust, and generalizable speech enhancement by unifying speech enhancement tasks across a wide variety of conditions, including seven different distortion types and five languages. We present Universal Speech Enhancement Mamba (USEMamba), a state-space speech enhancement model designed to handle long-range sequence modeling, time-frequency structured processing, and sampling frequency-independent feature extraction. Our approach primarily relies on regression-based modeling, which performs well across most distortions. However, for packet loss and bandwidth extension, where missing content must be inferred, a generative variant of the proposed USEMamba proves more effective. Despite being trained on only a subset of the full training data, USEMamba achieved 2nd place in Track 1 during the blind test phase, demonstrating strong generalization across diverse conditions.

07 Oct 2025

This work aims to investigate the use of a recently proposed, attention-free, scalable state-space model (SSM), Mamba, for the speech enhancement (SE) task. In particular, we employ Mamba to deploy different regression-based SE models (SEMamba) with different configurations, namely basic, advanced, causal, and non-causal. Furthermore, loss functions either based on signal-level distances or metric-oriented are considered. Experimental evidence shows that SEMamba attains a competitive PESQ of 3.55 on the VoiceBank-DEMAND dataset with the advanced, non-causal configuration. A new state-of-the-art PESQ of 3.69 is also reported when SEMamba is combined with Perceptual Contrast Stretching (PCS). Compared against Transformed-based equivalent SE solutions, a noticeable FLOPs reduction up to ~12% is observed with the advanced non-causal configurations. Finally, SEMamba can be used as a pre-processing step before automatic speech recognition (ASR), showing competitive performance against recent SE solutions.

Music Information Retrieval (MIR) encompasses a broad range of computational techniques for analyzing and understanding musical content, with recent deep learning advances driving substantial improvements. Building upon these advances, this paper explores how large language models (LLMs) can serve as an integrative bridge to connect and integrate information from multiple MIR tools, with a focus on enhancing automatic chord recognition performance. We present a novel approach that positions text-based LLMs as intelligent coordinators that process and integrate outputs from diverse state-of-the-art MIR tools-including music source separation, key detection, chord recognition, and beat tracking. Our method converts audio-derived musical information into textual representations, enabling LLMs to perform reasoning and correction specifically for chord recognition tasks. We design a 5-stage chain-of-thought framework that allows GPT-4o to systematically analyze, compare, and refine chord recognition results by leveraging music-theoretical knowledge to integrate information across different MIR components. Experimental evaluation on three datasets demonstrates consistent improvements across multiple evaluation metrics, with overall accuracy gains of 1-2.77% on the MIREX metric. Our findings demonstrate that LLMs can effectively function as integrative bridges in MIR pipelines, opening new directions for multi-tool coordination in music information retrieval tasks.

There are no more papers matching your filters at the moment.