University of Denver

AffectNet+ introduces a large-scale facial expression recognition database featuring "soft-labels" that assign probability vectors across multiple emotions, addressing the ambiguity of traditional single-label datasets. The enhanced dataset, developed by the University of Denver, includes comprehensive metadata and categorizes images by recognition difficulty, providing a more nuanced foundation for training robust FER models.

19 Sep 2025

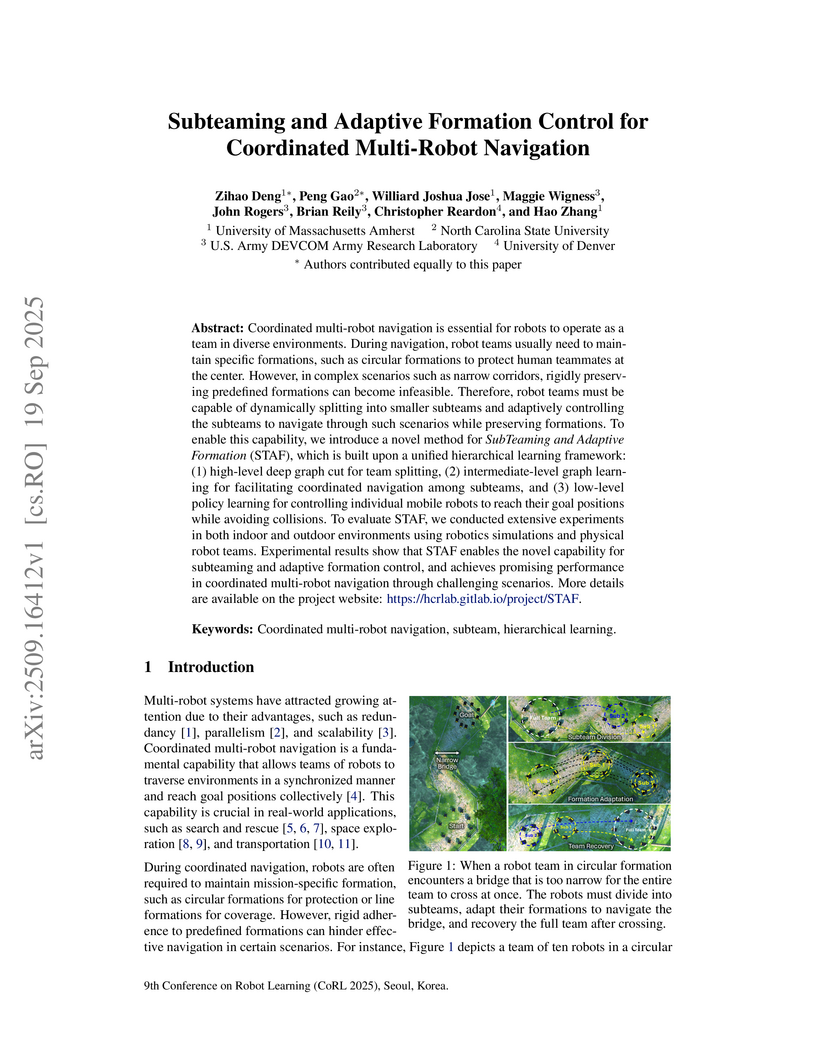

Coordinated multi-robot navigation is essential for robots to operate as a team in diverse environments. During navigation, robot teams usually need to maintain specific formations, such as circular formations to protect human teammates at the center. However, in complex scenarios such as narrow corridors, rigidly preserving predefined formations can become infeasible. Therefore, robot teams must be capable of dynamically splitting into smaller subteams and adaptively controlling the subteams to navigate through such scenarios while preserving formations. To enable this capability, we introduce a novel method for SubTeaming and Adaptive Formation (STAF), which is built upon a unified hierarchical learning framework: (1) high-level deep graph cut for team splitting, (2) intermediate-level graph learning for facilitating coordinated navigation among subteams, and (3) low-level policy learning for controlling individual mobile robots to reach their goal positions while avoiding collisions. To evaluate STAF, we conducted extensive experiments in both indoor and outdoor environments using robotics simulations and physical robot teams. Experimental results show that STAF enables the novel capability for subteaming and adaptive formation control, and achieves promising performance in coordinated multi-robot navigation through challenging scenarios. More details are available on the project website: this https URL.

We study ⊥Grad, a geometry-aware modification to gradient-based optimization that constrains descent directions to address overconfidence, a key limitation of standard optimizers in uncertainty-critical applications. By enforcing orthogonality between gradient updates and weight vectors, ⊥Grad alters optimization trajectories without architectural changes. On CIFAR-10 with 10% labeled data, ⊥Grad matches SGD in accuracy while achieving statistically significant improvements in test loss (p=0.05), predictive entropy (p=0.001), and confidence measures. These effects show consistent trends across corruption levels and architectures. ⊥Grad is optimizer-agnostic, incurs minimal overhead, and remains compatible with post-hoc calibration techniques.

Theoretically, we characterize convergence and stationary points for a simplified ⊥Grad variant, revealing that orthogonalization constrains loss reduction pathways to avoid confidence inflation and encourage decision-boundary improvements. Our findings suggest that geometric interventions in optimization can improve predictive uncertainty estimates at low computational cost.

03 Nov 2010

CNRSSLAC National Accelerator Laboratory

CNRSSLAC National Accelerator Laboratory Stanford UniversityOhio State UniversityCSIC

Stanford UniversityOhio State UniversityCSIC NASA Goddard Space Flight CenterIEEC

NASA Goddard Space Flight CenterIEEC University of Maryland

University of Maryland Stockholm University

Stockholm University CEAICREAUniversity of DenverGeorge Mason UniversityHiroshima UniversityNaval Research Laboratory

CEAICREAUniversity of DenverGeorge Mason UniversityHiroshima UniversityNaval Research Laboratory Waseda University

Waseda University University of California, Santa CruzINAF-Istituto di RadioastronomiaINAF, Istituto di Astrofisica Spaziale e Fisica CosmicaUPSIstituto Nazionale di Fisica Nucleare Sezione di PisaThe Oskar Klein Centre for Cosmoparticle PhysicsUniversit`a di Roma Tor VergataJAXAUniversit`a di TriesteMax-Planck-Institut fur extraterrestrische PhysikIstituto Nazionale di Fisica Nucleare, Sezione di PadovaUniversit´e Paris DiderotIstituto Nazionale di Fisica Nucleare Sezione di PerugiaIstituto Nazionale di Fisica Nucleare, Sezione di BariUniversit`a degli Studi di PerugiaPurdue University CalumetUniversite Montpellier 2Royal Swedish Academy of SciencesUniversit`a e del Politecnico di BariLeopold-Franzens-Universit•at InnsbruckCentre d’Etude Spatiale des RayonnementsNYCB Real-Time Computing Inc.Universite´ Paris-SudUniversit´e Joseph Fourier - Grenoble 1Istituto Nazionale di Fisica Nucleare Sezione di Trieste`Ecole PolytechniqueUniversita' di Padova

University of California, Santa CruzINAF-Istituto di RadioastronomiaINAF, Istituto di Astrofisica Spaziale e Fisica CosmicaUPSIstituto Nazionale di Fisica Nucleare Sezione di PisaThe Oskar Klein Centre for Cosmoparticle PhysicsUniversit`a di Roma Tor VergataJAXAUniversit`a di TriesteMax-Planck-Institut fur extraterrestrische PhysikIstituto Nazionale di Fisica Nucleare, Sezione di PadovaUniversit´e Paris DiderotIstituto Nazionale di Fisica Nucleare Sezione di PerugiaIstituto Nazionale di Fisica Nucleare, Sezione di BariUniversit`a degli Studi di PerugiaPurdue University CalumetUniversite Montpellier 2Royal Swedish Academy of SciencesUniversit`a e del Politecnico di BariLeopold-Franzens-Universit•at InnsbruckCentre d’Etude Spatiale des RayonnementsNYCB Real-Time Computing Inc.Universite´ Paris-SudUniversit´e Joseph Fourier - Grenoble 1Istituto Nazionale di Fisica Nucleare Sezione di Trieste`Ecole PolytechniqueUniversita' di PadovaWe report an analysis of the interstellar γ-ray emission in the third Galactic quadrant measured by the {Fermi} Large Area Telescope. The window encompassing the Galactic plane from longitude 210\arcdeg to 250\arcdeg has kinematically well-defined segments of the Local and the Perseus arms, suitable to study the cosmic-ray densities across the outer Galaxy. We measure no large gradient with Galactocentric distance of the γ-ray emissivities per interstellar H atom over the regions sampled in this study. The gradient depends, however, on the optical depth correction applied to derive the \HI\ column densities. No significant variations are found in the interstellar spectra in the outer Galaxy, indicating similar shapes of the cosmic-ray spectrum up to the Perseus arm for particles with GeV to tens of GeV energies. The emissivity as a function of Galactocentric radius does not show a large enhancement in the spiral arms with respect to the interarm region. The measured emissivity gradient is flatter than expectations based on a cosmic-ray propagation model using the radial distribution of supernova remnants and uniform diffusion properties. In this context, observations require a larger halo size and/or a flatter CR source distribution than usually assumed. The molecular mass calibrating ratio, XCO=N(H2)/WCO, is found to be (2.08±0.11)×1020cm−2(Kkms−1)−1 in the Local-arm clouds and is not significantly sensitive to the choice of \HI\ spin temperature. No significant variations are found for clouds in the interarm region.

A reinforcement learning (RL) based methodology is proposed and implemented

for online fine-tuning of PID controller gains, thus, improving quadrotor

effective and accurate trajectory tracking. The RL agent is first trained

offline on a quadrotor PID attitude controller and then validated through

simulations and experimental flights. RL exploits a Deep Deterministic Policy

Gradient (DDPG) algorithm, which is an off-policy actor-critic method. Training

and simulation studies are performed using Matlab/Simulink and the UAV Toolbox

Support Package for PX4 Autopilots. Performance evaluation and comparison

studies are performed between the hand-tuned and RL-based tuned approaches. The

results show that the controller parameters based on RL are adjusted during

flights, achieving the smallest attitude errors, thus significantly improving

attitude tracking performance compared to the hand-tuned approach.

22 Oct 2025

We present a random construction proving that the extreme off-diagonal Ramsey numbers satisfy R(3,k)≥(21+o(1))logkk2. This bound has been conjectured to be asymptotically tight, and improves the previously best bound R(3,k)≥(31+o(1))logkk2. In contrast to all previous constructions achieving the correct order of magnitude, we do not use a nibble argument, and the proof is significantly less technical.

Building AI systems, including Facial Expression Recognition (FER), involves two critical aspects: data and model design. Both components significantly influence bias and fairness in FER tasks. Issues related to bias and fairness in FER datasets and models remain underexplored. This study investigates bias sources in FER datasets and models. Four common FER datasets--AffectNet, ExpW, Fer2013, and RAF-DB--are analyzed. The findings demonstrate that AffectNet and ExpW exhibit high generalizability despite data imbalances. Additionally, this research evaluates the bias and fairness of six deep models, including three state-of-the-art convolutional neural network (CNN) models: MobileNet, ResNet, XceptionNet, as well as three transformer-based models: ViT, CLIP, and GPT-4o-mini. Experimental results reveal that while GPT-4o-mini and ViT achieve the highest accuracy scores, they also display the highest levels of bias. These findings underscore the urgent need for developing new methodologies to mitigate bias and ensure fairness in datasets and models, particularly in affective computing applications. See our implementation details at this https URL.

We introduce GIER (Gap-driven Iterative Enhancement of Responses), a general framework for improving large language model (LLM) outputs through self-reflection and revision based on conceptual quality criteria. Unlike prompting strategies that rely on demonstrations, examples, or chain-of-thought templates, GIER utilizes natural language descriptions of reasoning gaps, and prompts a model to iteratively critique and refine its own outputs to better satisfy these criteria. Across three reasoning-intensive tasks (SciFact, PrivacyQA, and e-SNLI) and four LLMs (GPT-4.1, GPT-4o Mini, Gemini 1.5 Pro, and Llama 3.3 70B), GIER improves rationale quality, grounding, and reasoning alignment without degrading task accuracy. Our analysis demonstrates that models can not only interpret abstract conceptual gaps but also translate them into concrete reasoning improvements.

Recently, over-parameterized deep networks, with increasingly more network parameters than training samples, have dominated the performances of modern machine learning. However, when the training data is corrupted, it has been well-known that over-parameterized networks tend to overfit and do not generalize. In this work, we propose a principled approach for robust training of over-parameterized deep networks in classification tasks where a proportion of training labels are corrupted. The main idea is yet very simple: label noise is sparse and incoherent with the network learned from clean data, so we model the noise and learn to separate it from the data. Specifically, we model the label noise via another sparse over-parameterization term, and exploit implicit algorithmic regularizations to recover and separate the underlying corruptions. Remarkably, when trained using such a simple method in practice, we demonstrate state-of-the-art test accuracy against label noise on a variety of real datasets. Furthermore, our experimental results are corroborated by theory on simplified linear models, showing that exact separation between sparse noise and low-rank data can be achieved under incoherent conditions. The work opens many interesting directions for improving over-parameterized models by using sparse over-parameterization and implicit regularization.

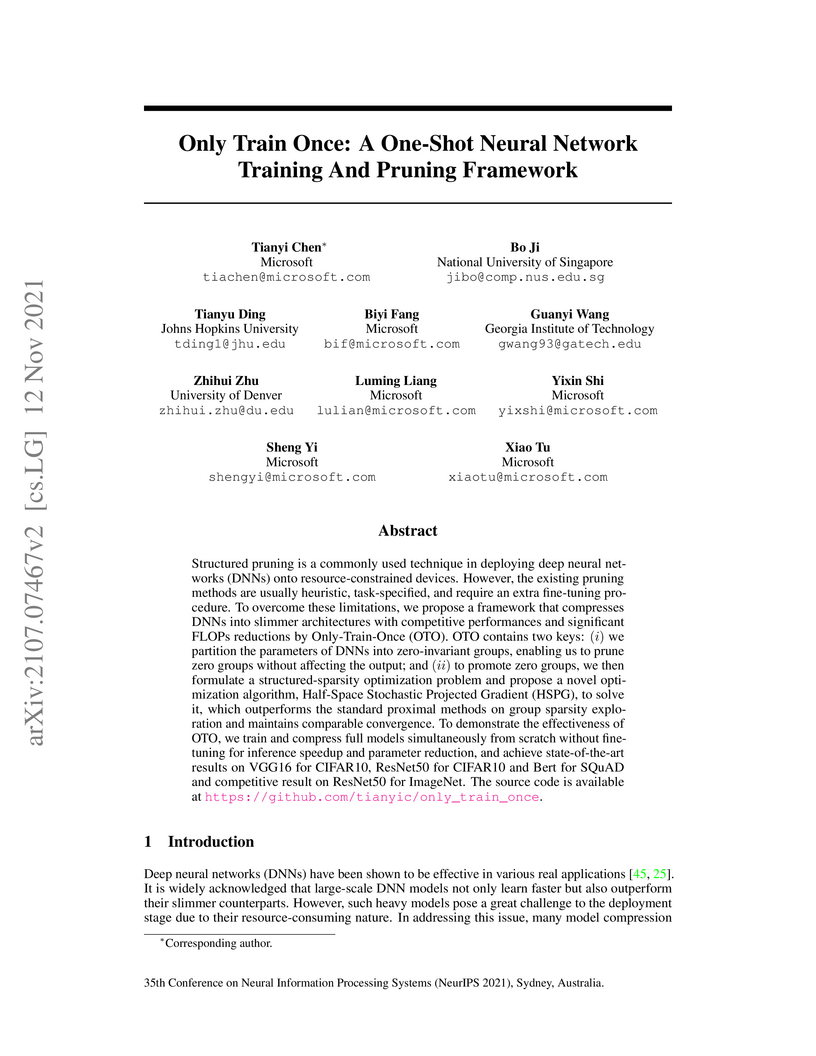

Structured pruning is a commonly used technique in deploying deep neural networks (DNNs) onto resource-constrained devices. However, the existing pruning methods are usually heuristic, task-specified, and require an extra fine-tuning procedure. To overcome these limitations, we propose a framework that compresses DNNs into slimmer architectures with competitive performances and significant FLOPs reductions by Only-Train-Once (OTO). OTO contains two keys: (i) we partition the parameters of DNNs into zero-invariant groups, enabling us to prune zero groups without affecting the output; and (ii) to promote zero groups, we then formulate a structured-sparsity optimization problem and propose a novel optimization algorithm, Half-Space Stochastic Projected Gradient (HSPG), to solve it, which outperforms the standard proximal methods on group sparsity exploration and maintains comparable convergence. To demonstrate the effectiveness of OTO, we train and compress full models simultaneously from scratch without fine-tuning for inference speedup and parameter reduction, and achieve state-of-the-art results on VGG16 for CIFAR10, ResNet50 for CIFAR10 and Bert for SQuAD and competitive result on ResNet50 for ImageNet. The source code is available at this https URL.

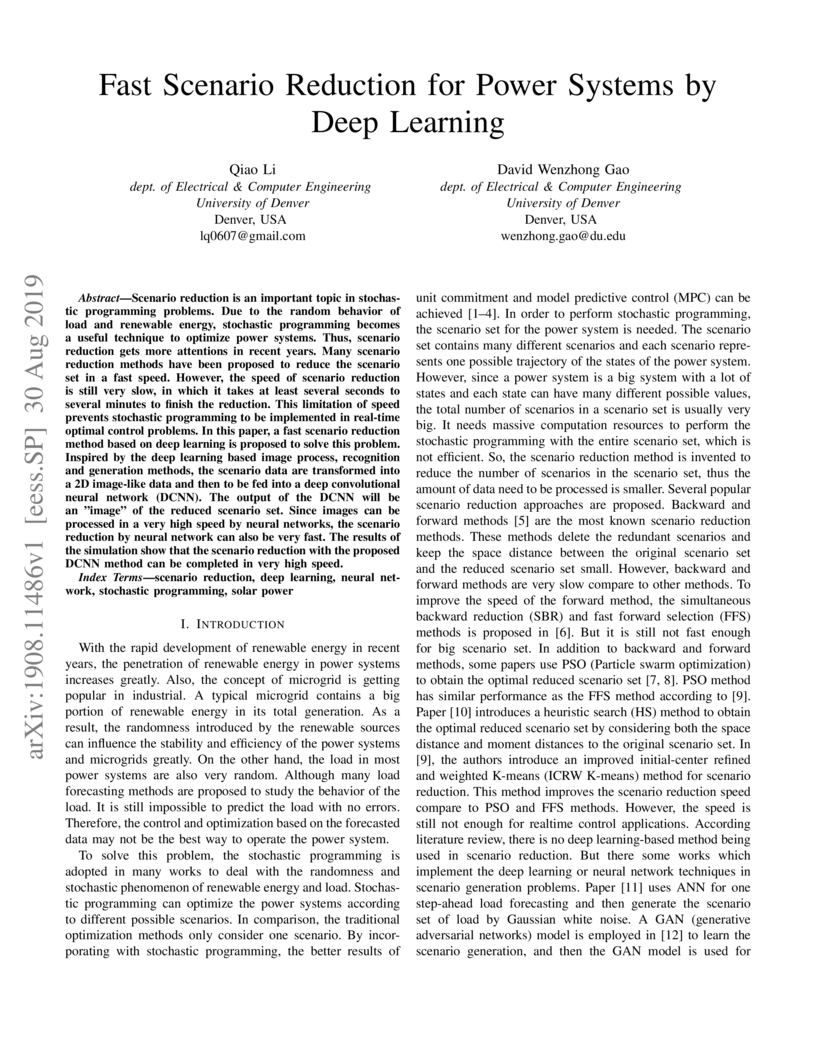

Scenario reduction is an important topic in stochastic programming problems. Due to the random behavior of load and renewable energy, stochastic programming becomes a useful technique to optimize power systems. Thus, scenario reduction gets more attentions in recent years. Many scenario reduction methods have been proposed to reduce the scenario set in a fast speed. However, the speed of scenario reduction is still very slow, in which it takes at least several seconds to several minutes to finish the reduction. This limitation of speed prevents stochastic programming to be implemented in real-time optimal control problems. In this paper, a fast scenario reduction method based on deep learning is proposed to solve this problem. Inspired by the deep learning based image process, recognition and generation methods, the scenario data are transformed into a 2D image-like data and then to be fed into a deep convolutional neural network (DCNN). The output of the DCNN will be an "image" of the reduced scenario set. Since images can be processed in a very high speed by neural networks, the scenario reduction by neural network can also be very fast. The results of the simulation show that the scenario reduction with the proposed DCNN method can be completed in very high speed.

Mobile healthcare (mHealth) applications promise convenient, continuous patient-provider interaction but also introduce severe and often underexamined security and privacy risks. We present an end-to-end audit of 272 Android mHealth apps from Google Play, combining permission forensics, static vulnerability analysis, and user review mining. Our multi-tool assessment with MobSF, RiskInDroid, and OWASP Mobile Audit revealed systemic weaknesses: 26.1% request fine-grained location without disclosure, 18.3% initiate calls silently, and 73 send SMS without notice. Nearly half (49.3%) still use deprecated SHA-1 encryption, 42 transmit unencrypted data, and 6 remain vulnerable to StrandHogg 2.0. Analysis of 2.56 million user reviews found 28.5% negative or neutral sentiment, with over 553,000 explicitly citing privacy intrusions, data misuse, or operational instability. These findings demonstrate the urgent need for enforceable permission transparency, automated pre-market security vetting, and systematic adoption of secure-by-design practices to protect Protected Health Information (PHI).

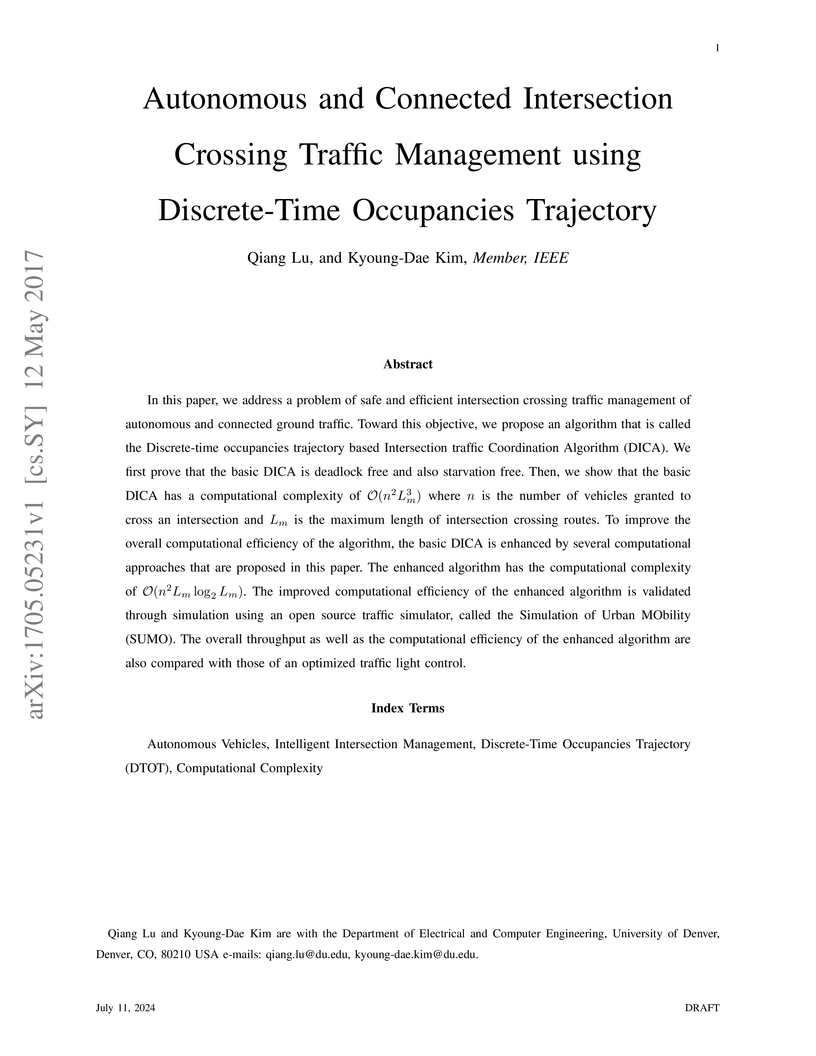

In this paper, we address a problem of safe and efficient intersection

crossing traffic management of autonomous and connected ground traffic. Toward

this objective, we propose an algorithm that is called the Discrete-time

occupancies trajectory based Intersection traffic Coordination Algorithm

(DICA). We first prove that the basic DICA is deadlock free and also starvation

free. Then, we show that the basic DICA has a computational complexity of

O(n2Lm3) where n is the number of vehicles granted to cross

an intersection and Lm is the maximum length of intersection crossing

routes.

To improve the overall computational efficiency of the algorithm, the basic

DICA is enhanced by several computational approaches that are proposed in this

paper. The enhanced algorithm has the computational complexity of

O(n2Lmlog2Lm). The improved computational efficiency of the

enhanced algorithm is validated through simulation using an open source traffic

simulator, called the Simulation of Urban MObility (SUMO). The overall

throughput as well as the computational efficiency of the enhanced algorithm

are also compared with those of an optimized traffic light control.

23 Jul 2024

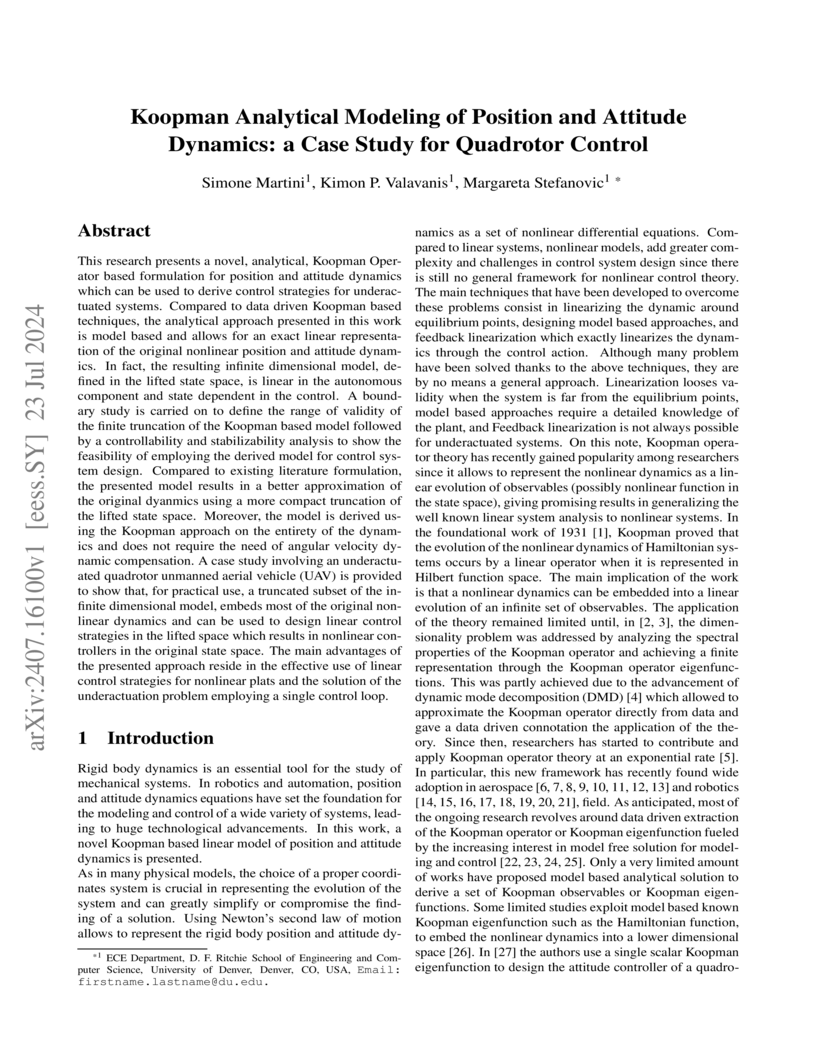

This research presents a novel, analytical, Koopman Operator based formulation for position and attitude dynamics which can be used to derive control strategies for underactuated systems. Compared to data driven Koopman based techniques, the analytical approach presented in this work is model based and allows for an exact linear representation of the original nonlinear position and attitude dynamics. In fact, the resulting infinite dimensional model, defined in the lifted state space, is linear in the autonomous component and state dependent in the control. A boundary study is carried on to define the range of validity of the finite truncation of the Koopman based model followed by a controllability and stabilizability analysis to show the feasibility of employing the derived model for control system design. Compared to existing literature formulation, the presented model results in a better approximation of the original dyanmics using a more compact truncation of the lifted state space. Moreover, the model is derived using the Koopman approach on the entirety of the dynamics and does not require the need of angular velocity dynamic compensation. A case study involving an underactuated quadrotor unmanned aerial vehicle (UAV) is provided to show that, for practical use, a truncated subset of the infinite dimensional model, embeds most of the original nonlinear dynamics and can be used to design linear control strategies in the lifted space which results in nonlinear controllers in the original state space. The main advantages of the presented approach reside in the effective use of linear control strategies for nonlinear plats and the solution of the underactuation problem employing a single control loop.

In two long-duration balloon flights over Antarctica, the BESS-Polar

collaboration has searched for antihelium in the cosmic radiation with higher

sensitivity than any reported investigation. BESS- Polar I flew in 2004,

observing for 8.5 days. BESS-Polar II flew in 2007-2008, observing for 24.5

days. No antihelium candidate was found in BESS-Polar I data among 8.4\times

10^6 |Z| = 2 nuclei from 1.0 to 20 GV or in BESS-Polar II data among 4.0\times

10^7 |Z| = 2 nuclei from 1.0 to 14 GV. Assuming antihelium to have the same

spectral shape as helium, a 95% confidence upper limit of 6.9 \times 10^-8 was

determined by combining all the BESS data, including the two BESS-Polar

flights. With no assumed antihelium spectrum and a weighted average of the

lowest antihelium efficiencies from 1.6 to 14 GV, an upper limit of 1.0 \times

10^-7 was determined for the combined BESS-Polar data. These are the most

stringent limits obtained to date.

02 Sep 2022

In this paper we study projective algebras in varieties of (bounded) commutative integral residuated lattices from an algebraic (as opposed to categorical) point of view. In particular we use a well-established construction in residuated lattices: the ordinal sum. Its interaction with divisibility makes our results have a better scope in varieties of divisibile commutative integral residuated lattices, and it allows us to show that many such varieties have the property that every finitely presented algebra is projective. In particular, we obtain results on (Stonean) Heyting algebras, certain varieties of hoops, and product algebras. Moreover, we study varieties with a Boolean retraction term, showing for instance that in a variety with a Boolean retraction term all finite Boolean algebras are projective. Finally, we connect our results with the theory of Unification.

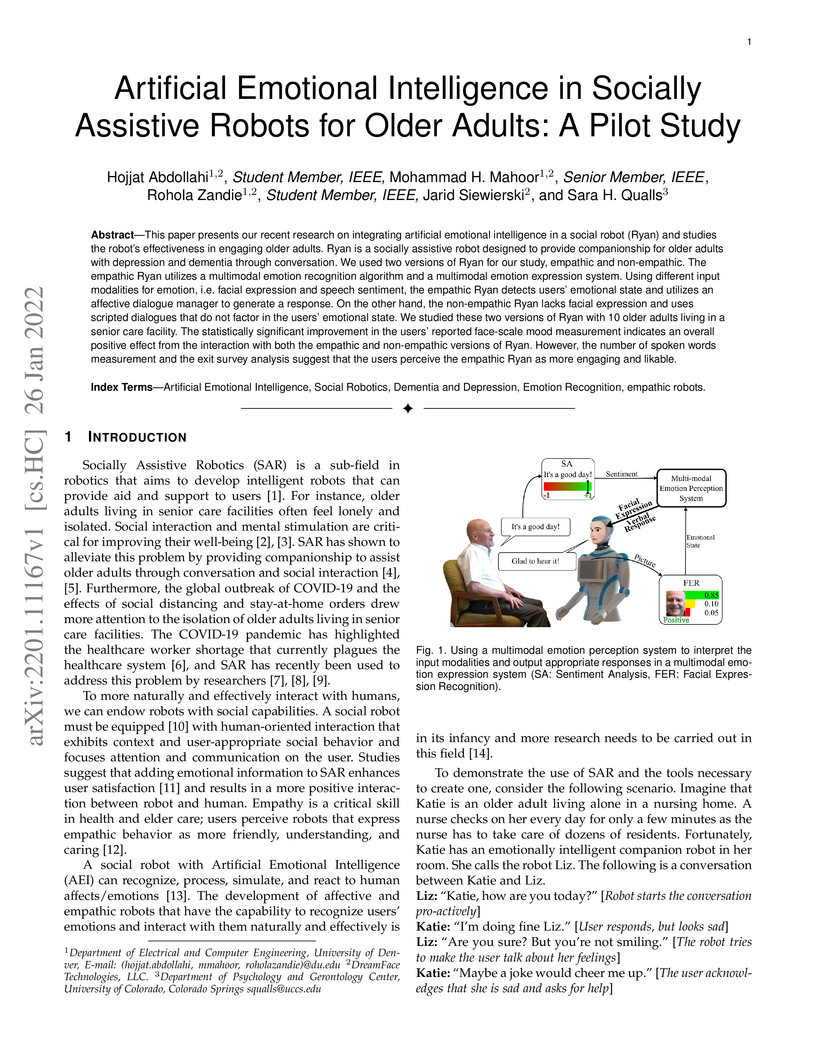

This paper presents our recent research on integrating artificial emotional

intelligence in a social robot (Ryan) and studies the robot's effectiveness in

engaging older adults. Ryan is a socially assistive robot designed to provide

companionship for older adults with depression and dementia through

conversation. We used two versions of Ryan for our study, empathic and

non-empathic. The empathic Ryan utilizes a multimodal emotion recognition

algorithm and a multimodal emotion expression system. Using different input

modalities for emotion, i.e. facial expression and speech sentiment, the

empathic Ryan detects users' emotional state and utilizes an affective dialogue

manager to generate a response. On the other hand, the non-empathic Ryan lacks

facial expression and uses scripted dialogues that do not factor in the users'

emotional state. We studied these two versions of Ryan with 10 older adults

living in a senior care facility. The statistically significant improvement in

the users' reported face-scale mood measurement indicates an overall positive

effect from the interaction with both the empathic and non-empathic versions of

Ryan. However, the number of spoken words measurement and the exit survey

analysis suggest that the users perceive the empathic Ryan as more engaging and

likable.

University of Washington

University of Washington CNRS

CNRS California Institute of Technology

California Institute of Technology Stanford University

Stanford University Stockholm UniversityUppsala UniversityRoyal Institute of Technology (KTH)ICREA

Stockholm UniversityUppsala UniversityRoyal Institute of Technology (KTH)ICREA The Ohio State UniversityUniversity of DenverGeorge Mason UniversityHiroshima UniversityINAFIstituto Nazionale di Fisica NucleareNaval Research LaboratoryNational Research Council

The Ohio State UniversityUniversity of DenverGeorge Mason UniversityHiroshima UniversityINAFIstituto Nazionale di Fisica NucleareNaval Research LaboratoryNational Research Council Waseda University

Waseda University University of California, Santa CruzUniversity of Maryland Baltimore CountyThe Oskar Klein Centre for Cosmoparticle PhysicsAgenzia Spaziale ItalianaNASAPolitecnico di BariUniversit`a di Roma Tor VergataMax-Planck Institut fur RadioastronomieCEA IrfuUniversit`a di TriesteUniversit`a di BariUniversité Bordeaux 1Max-Planck-Institut fur extraterrestrische PhysikNational Academy of SciencesUniversit`a degli Studi di PerugiaUniversit`a degli Studi di SienaPurdue University CalumetUniversite Montpellier 2Institut de Ci`encies de L’Espai (IEEC-CSIC)Centre d’Etude Spatiale des RayonnementsUniversit´a di UdineCentre d’´Etudes Nucl´eaires de Bordeaux GradignanUniversit

Paris Diderot`Ecole PolytechniqueUniversita' di Padova

University of California, Santa CruzUniversity of Maryland Baltimore CountyThe Oskar Klein Centre for Cosmoparticle PhysicsAgenzia Spaziale ItalianaNASAPolitecnico di BariUniversit`a di Roma Tor VergataMax-Planck Institut fur RadioastronomieCEA IrfuUniversit`a di TriesteUniversit`a di BariUniversité Bordeaux 1Max-Planck-Institut fur extraterrestrische PhysikNational Academy of SciencesUniversit`a degli Studi di PerugiaUniversit`a degli Studi di SienaPurdue University CalumetUniversite Montpellier 2Institut de Ci`encies de L’Espai (IEEC-CSIC)Centre d’Etude Spatiale des RayonnementsUniversit´a di UdineCentre d’´Etudes Nucl´eaires de Bordeaux GradignanUniversit

Paris Diderot`Ecole PolytechniqueUniversita' di PadovaWe report the discovery by the Large Area Telescope (LAT) onboard the Fermi Gamma-ray Space Telescope of high-energy gamma-ray emission from the peculiar quasar PMN J0948+0022 (z=0.5846). The optical spectrum of this object exhibits rather narrow Hbeta (FWHM(Hbeta) ~ 1500 km s^-1), weak forbidden lines and is therefore classified as a narrow-line type I quasar. This class of objects is thought to have relatively small black hole mass and to accrete at high Eddington ratio. The radio loudness and variability of the compact radio core indicates the presence of a relativistic jet. Quasi simultaneous radio-optical-X-ray and gamma-ray observations are presented. Both radio and gamma-ray emission (observed over 5-months) are strongly variable. The simultaneous optical and X-ray data from Swift show a blue continuum attributed to the accretion disk and a hard X-ray spectrum attributed to the jet. The resulting broad band spectral energy distribution (SED) and, in particular, the gamma-ray spectrum measured by Fermi are similar to those of more powerful FSRQ. A comparison of the radio and gamma-ray characteristics of PMN J0948+0022 with the other blazars detected by LAT shows that this source has a relatively low radio and gamma-ray power, with respect to other FSRQ. The physical parameters obtained from modelling the SED also fall at the low power end of the FSRQ parameter region discussed in Celotti & Ghisellini (2008). We suggest that the similarity of the SED of PMN J0948+0022 to that of more massive and more powerful quasars can be understood in a scenario in which the SED properties depend on the Eddington ratio rather than on the absolute power.

It is well-known that any admissible unidirectional heuristic search algorithm must expand all states whose f-value is smaller than the optimal solution cost when using a consistent heuristic. Such states are called "surely expanded" (s.e.). A recent study characterized s.e. pairs of states for bidirectional search with consistent heuristics: if a pair of states is s.e. then at least one of the two states must be expanded. This paper derives a lower bound, VC, on the minimum number of expansions required to cover all s.e. pairs, and present a new admissible front-to-end bidirectional heuristic search algorithm, Near-Optimal Bidirectional Search (NBS), that is guaranteed to do no more than 2VC expansions. We further prove that no admissible front-to-end algorithm has a worst case better than 2VC. Experimental results show that NBS competes with or outperforms existing bidirectional search algorithms, and often outperforms A* as well.

07 Feb 2025

This Paper introduces a methodology to achieve a lower dimensional Koopman

quasi linear representation of nonlinear dynamics using Koopman generalized

eigenfunctions. The methodology is presented for the analytically derived

Koopman formulation of rigid body dynamics but can be generalized to any

data-driven or analytically derived generalized eigenfunction set. The

presented approach aim at achieving a representation for which the number of

Koopman observables matches the number of input leading to an exact

linearization solution instead of resorting to the least square approximation

method. The methodology is tested by designing a linear quadratic (LQ) flight

controller of a quadrotor unmanned aerial vehicle (UAV). Hardware in the loop

simulations validate the applicability of this approach to real-time

implementation in presence of noise and sensor delays.

There are no more papers matching your filters at the moment.