European Commission Joint Research Centre

A method is introduced to provide users with provable control over the fraction of unwanted content in their personalized recommendations, utilizing Conformal Risk Control. This framework, developed by researchers from Fondazione Bruno Kessler, the European Commission's Joint Research Centre, and the University of Trento, preserves recommendation quality through an item replacement strategy that reintroduces 'safe' previously consumed content.

27 Jun 2024

The increasing frequency of attacks on Android applications coupled with the recent popularity of large language models (LLMs) necessitates a comprehensive understanding of the capabilities of the latter in identifying potential vulnerabilities, which is key to mitigate the overall risk. To this end, the work at hand compares the ability of nine state-of-the-art LLMs to detect Android code vulnerabilities listed in the latest Open Worldwide Application Security Project (OWASP) Mobile Top 10. Each LLM was evaluated against an open dataset of over 100 vulnerable code samples, including obfuscated ones, assessing each model's ability to identify key vulnerabilities. Our analysis reveals the strengths and weaknesses of each LLM, identifying important factors that contribute to their performance. Additionally, we offer insights into context augmentation with retrieval-augmented generation (RAG) for detecting Android code vulnerabilities, which in turn may propel secure application development. Finally, while the reported findings regarding code vulnerability analysis show promise, they also reveal significant discrepancies among the different LLMs.

12 Aug 2025

This study investigates the assisted lane change functionality of five different vehicles equipped with advanced driver assistance systems (ADAS). The goal is to examine novel, under-researched features of commercially available ADAS technologies. The experimental campaign, conducted in the I-24 highway near Nashville, TN, US, collected data on the kinematics and safety margins of assisted lane changes in real-world conditions. The results show that the kinematics of assisted lane changes are consistent for each system, with four out of five vehicles using slower speeds and decelerations than human drivers. However, one system consistently performed more assertive lane changes, completing the maneuver in around 5 seconds. Regarding safety margins, only three vehicles are investigated. Those operated in the US are not restricted by relevant UN regulations, and their designs were found not to adhere to these regulatory requirements. A simulation method used to classify the challenge level for the vehicle receiving the lane change, showing that these systems can force trailing vehicles to decelerate to keep a safe gap. One assisted system was found to have performed a maneuver that posed a hard challenge level for the other vehicle, raising concerns about the safety of these systems in real-world operation. All three vehicles were found to carry out lane changes that induced decelerations to the vehicle in the target lane. Those decelerations could affect traffic flow, inducing traffic shockwaves.

12 Jul 2022

Despite the increasing integration of the global economic system, anti-dumping measures are a common tool used by governments to protect their national economy. In this paper, we propose a methodology to detect cases of anti-dumping circumvention through re-routing trade via a third country. Based on the observed full network of trade flows, we propose a measure to proxy the evasion of an anti-dumping duty for a subset of trade flows directed to the European Union, and look for possible cases of circumvention of an active anti-dumping duty. Using panel regression, we are able correctly classify 86% of the trade flows, on which an investigation of anti-dumping circumvention has been opened by the European authorities.

27 Jul 2023

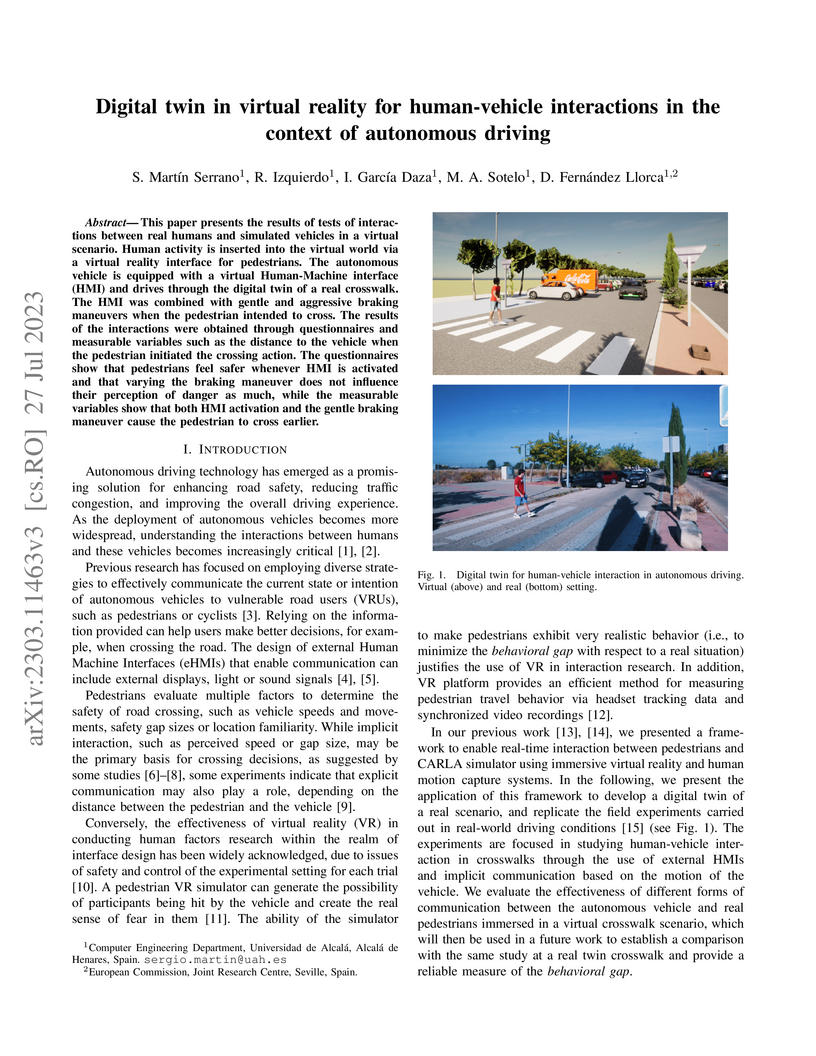

This paper presents the results of tests of interactions between real humans and simulated vehicles in a virtual scenario. Human activity is inserted into the virtual world via a virtual reality interface for pedestrians. The autonomous vehicle is equipped with a virtual Human-Machine interface (HMI) and drives through the digital twin of a real crosswalk. The HMI was combined with gentle and aggressive braking maneuvers when the pedestrian intended to cross. The results of the interactions were obtained through questionnaires and measurable variables such as the distance to the vehicle when the pedestrian initiated the crossing action. The questionnaires show that pedestrians feel safer whenever HMI is activated and that varying the braking maneuver does not influence their perception of danger as much, while the measurable variables show that both HMI activation and the gentle braking maneuver cause the pedestrian to cross earlier.

17 Feb 2020

In recent decades, global oil palm production has shown an abrupt increase, with almost 90% produced in Southeast Asia alone. Monitoring oil palm is largely based on national surveys and inventories or one-off mapping studies. However, they do not provide detailed spatial extent or timely updates and trends in oil palm expansion or age. Palm oil yields vary significantly with plantation age, which is critical for landscape-level planning. Here we show the extent and age of oil palm plantations for the year 2017 across Southeast Asia using remote sensing. Satellites reveal a total of 11.66 (+/- 2.10) million hectares (Mha) of plantations with more than 45% located in Sumatra. Plantation age varies from ~7 years in Kalimantan to ~13 in Insular Malaysia. More than half the plantations on Kalimantan are young (<7 years) and not yet in full production compared to Insular Malaysia where 45% of plantations are older than 15 years, with declining yields. For the first time, these results provide a consistent, independent, and transparent record of oil palm plantation extent and age structure, which are complementary to national statistics.

06 Dec 2021

We identify recurrent ingredients in the antithetic sampling literature leading to a unified sampling framework. We introduce a new class of antithetic schemes that includes the most used antithetic proposals. This perspective enables the derivation of new properties of the sampling schemes: i) optimality in the Kullback-Leibler sense; ii) closed-form multivariate Kendall's τ and Spearman's ρ; iii)ranking in concordance order and iv) a central limit theorem that characterizes stochastic behavior of Monte Carlo estimators when the sample size tends to infinity. Finally, we provide applications to Monte Carlo integration and Markov Chain Monte Carlo Bayesian estimation.

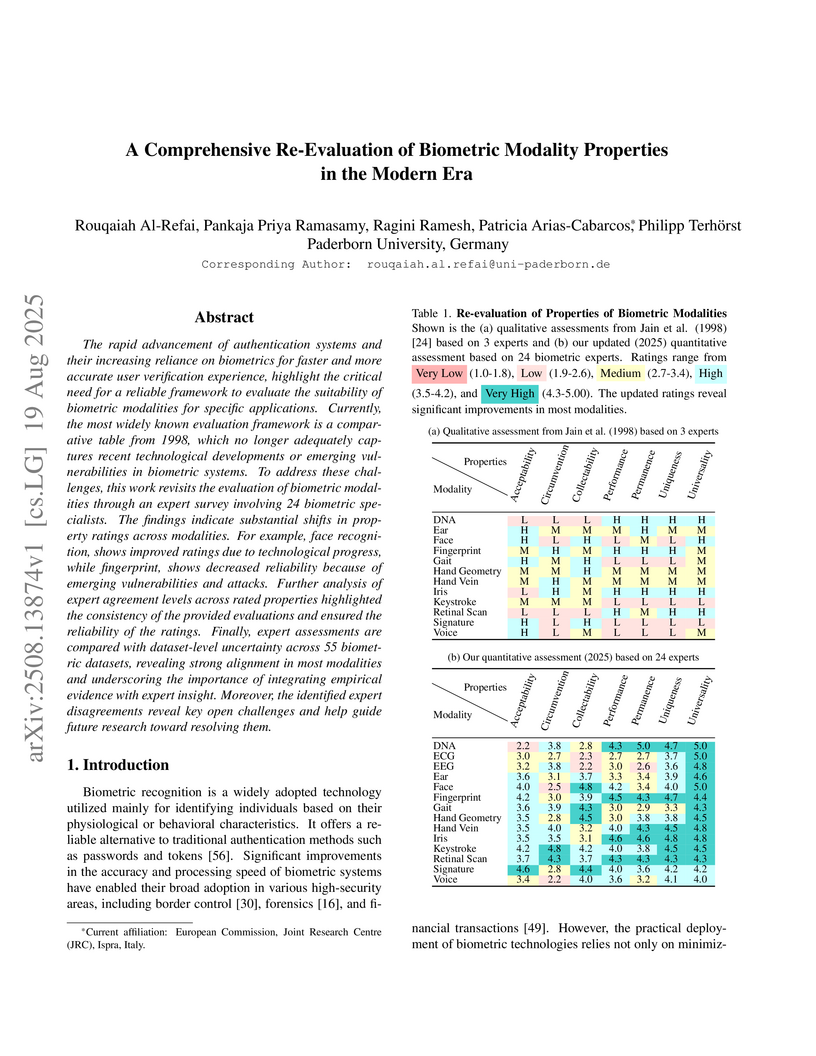

The rapid advancement of authentication systems and their increasing reliance on biometrics for faster and more accurate user verification experience, highlight the critical need for a reliable framework to evaluate the suitability of biometric modalities for specific applications. Currently, the most widely known evaluation framework is a comparative table from 1998, which no longer adequately captures recent technological developments or emerging vulnerabilities in biometric systems. To address these challenges, this work revisits the evaluation of biometric modalities through an expert survey involving 24 biometric specialists. The findings indicate substantial shifts in property ratings across modalities. For example, face recognition, shows improved ratings due to technological progress, while fingerprint, shows decreased reliability because of emerging vulnerabilities and attacks. Further analysis of expert agreement levels across rated properties highlighted the consistency of the provided evaluations and ensured the reliability of the ratings. Finally, expert assessments are compared with dataset-level uncertainty across 55 biometric datasets, revealing strong alignment in most modalities and underscoring the importance of integrating empirical evidence with expert insight. Moreover, the identified expert disagreements reveal key open challenges and help guide future research toward resolving them.

24 Sep 2013

This paper describes a new, freely available, highly multilingual named

entity resource for person and organisation names that has been compiled over

seven years of large-scale multilingual news analysis combined with Wikipedia

mining, resulting in 205,000 per-son and organisation names plus about the same

number of spelling variants written in over 20 different scripts and in many

more languages. This resource, produced as part of the Europe Media Monitor

activity (EMM, this http URL), can be used for a number

of purposes. These include improving name search in databases or on the

internet, seeding machine learning systems to learn named entity recognition

rules, improve machine translation results, and more. We describe here how this

resource was created; we give statistics on its current size; we address the

issue of morphological inflection; and we give details regarding its

functionality. Updates to this resource will be made available daily.

We build a simple diagnostic criterion for approximate factor structure in large cross-sectional equity datasets. Given a model for asset returns with observable factors, the criterion checks whether the error terms are weakly cross-sectionally correlated or share at least one unobservable common factor. It only requires computing the largest eigenvalue of the empirical cross-sectional covariance matrix of the residuals of a large unbalanced panel. A general version of this criterion allows us to determine the number of omitted common factors. The panel data model accommodates both time-invariant and time-varying factor structures. The theory applies to random coefficient panel models with interactive fixed effects under large cross-section and time-series dimensions. The empirical analysis runs on monthly and quarterly returns for about ten thousand US stocks from January 1968 to December 2011 for several time-invariant and time-varying specifications. For monthly returns, we can choose either among time-invariant specifications with at least four financial factors, or a scaled three-factor specification. For quarterly returns, we cannot select macroeconomic models without the market factor.

31 Mar 2025

The deployment of Automated Vehicles (AVs) is expected to address road

transport externalities (e.g., safety, traffic, environmental impact, etc.).

For this reason, a legal framework for their large-scale market introduction

and deployment is currently being developed in the European Union. Despite the

first steps towards road transport automation, the timeline for full automation

and its potential economic benefits remains uncertain. The aim of this paper is

twofold. First, it presents a methodological framework to determine deployment

pathways of the five different levels of automation in EU27+UK to 2050 under

three scenarios (i.e., slow, medium baseline and fast) focusing on passenger

vehicles. Second, it proposes an assessment of the economic impact of AVs

through the calculation of the value-added. The method to define assumptions

and uptake trajectories involves a comprehensive literature review, expert

interviews, and a model to forecast the new registrations of different levels

of automation. In this way, the interviews provided insights that complemented

the literature and informed the design of assumptions and deployment

trajectories. The added-value assessment shows additional economic activity due

to the introduction of automated technologies in all uptake scenarios.

28 Oct 2021

When a new environmental policy or a specific intervention is taken in order to improve air quality, it is paramount to assess and quantify - in space and time - the effectiveness of the adopted strategy. The lockdown measures taken worldwide in 2020 to reduce the spread of the SARS-CoV- 2 virus can be envisioned as a policy intervention with an indirect effect on air quality. In this paper we propose a statistical spatio-temporal model as a tool for intervention analysis, able to take into account the effect of weather and other confounding factors, as well as the spatial and temporal correlation existing in the data. In particular, we focus here on the 2019/2020 relative change in nitrogen dioxide (NO2) concentrations in the north of Italy, for the period of March and April during which the lockdown measure was in force. As an output, we provide a collection of weekly continuous maps, describing the spatial pattern of the NO2 2019/2020 relative changes. We found that during March and April 2020 most of the studied area is characterized by negative relative changes (median values around -25%), with the exception of the first week of March and the fourth week of April (median values around 5%). As these changes cannot be attributed to a weather effect, it is likely that they are a byproduct of the lockdown measures.

11 Jul 2025

We address the task of identifying anomalous observations by analyzing digits under the lens of Benford's law. Motivated by the crucial objective of providing reliable statistical analysis of customs declarations, we answer one major and still open question: How can we detect the behavior of operators who are aware of the prevalence of the Benford's pattern in the digits of regular observations and try to manipulate their data in such a way that the same pattern also holds after data fabrication? This challenge arises from the ability of highly skilled and strategically minded manipulators in key organizational positions or criminal networks to exploit statistical knowledge and evade detection. For this purpose, we write a specific contamination model for digits, obtain new relevant distributional results and derive appropriate goodness-of-fit statistics for the considered adversarial testing problem. Along our path, we also unveil the peculiar relationship between two simple conformance tests based on the distribution of the first digit. We show the empirical properties of the proposed tests through a simulation exercise and application to data from international trade transactions. Although we cannot claim that our results are able to anticipate data fabrication with certainty, they surely point to situations where more substantial controls are needed. Furthermore, our work can reinforce trust in data integrity in many critical domains where mathematically informed misconduct is suspected.

We study how the diffusion of broadband Internet affects social capital using

two data sets from the UK. Our empirical strategy exploits the fact that

broadband access has long depended on customers' position in the voice

telecommunication infrastructure that was designed in the 1930s. The actual

speed of an Internet connection, in fact, rapidly decays with the distance of

the dwelling from the specific node of the network serving its area. Merging

unique information about the topology of the voice network with geocoded

longitudinal data about individual social capital, we show that access to

broadband Internet caused a significant decline in forms of offline interaction

and civic engagement. Overall, our results suggest that broadband penetration

substantially crowded out several aspects of social capital.

The moral value of liberty is a central concept in our inference system when it comes to taking a stance towards controversial social issues such as vaccine hesitancy, climate change, or the right to abortion. Here, we propose a novel Liberty lexicon evaluated on more than 3,000 manually annotated data both in in- and out-of-domain scenarios. As a result of this evaluation, we produce a combined lexicon that constitutes the main outcome of this work. This final lexicon incorporates information from an ensemble of lexicons that have been generated using word embedding similarity (WE) and compositional semantics (CS). Our key contributions include enriching the liberty annotations, developing a robust liberty lexicon for broader application, and revealing the complexity of expressions related to liberty across different platforms. Through the evaluation, we show that the difficulty of the task calls for designing approaches that combine knowledge, in an effort of improving the representations of learning systems.

08 Feb 2024

This paper investigated the potential of a multivariate Transformer model to

forecast the temporal trajectory of the Fraction of Absorbed Photosynthetically

Active Radiation (FAPAR) for short (1 month) and long horizon (more than 1

month) periods at the regional level in Europe and North Africa. The input data

covers the period from 2002 to 2022 and includes remote sensing and weather

data for modelling FAPAR predictions. The model was evaluated using a leave one

year out cross-validation and compared with the climatological benchmark.

Results show that the transformer model outperforms the benchmark model for one

month forecasting horizon, after which the climatological benchmark is better.

The RMSE values of the transformer model ranged from 0.02 to 0.04 FAPAR units

for the first 2 months of predictions. Overall, the tested Transformer model is

a valid method for FAPAR forecasting, especially when combined with weather

data and used for short-term predictions.

Uncertainty propagation to the γ-γ coincidence-summing

correction factor from the covariances of the nuclear data and detection

efficiencies have been formulated. The method was applied in the uncertainty

analysis of the coincidence-summing correction factors in the γ-ray

spectrometry of the 134Cs point source using a p-type coaxial HPGe

detector.

Motion prediction systems aim to capture the future behavior of traffic

scenarios enabling autonomous vehicles to perform safe and efficient planning.

The evolution of these scenarios is highly uncertain and depends on the

interactions of agents with static and dynamic objects in the scene. GNN-based

approaches have recently gained attention as they are well suited to naturally

model these interactions. However, one of the main challenges that remains

unexplored is how to address the complexity and opacity of these models in

order to deal with the transparency requirements for autonomous driving

systems, which includes aspects such as interpretability and explainability. In

this work, we aim to improve the explainability of motion prediction systems by

using different approaches. First, we propose a new Explainable Heterogeneous

Graph-based Policy (XHGP) model based on an heterograph representation of the

traffic scene and lane-graph traversals, which learns interaction behaviors

using object-level and type-level attention. This learned attention provides

information about the most important agents and interactions in the scene.

Second, we explore this same idea with the explanations provided by

GNNExplainer. Third, we apply counterfactual reasoning to provide explanations

of selected individual scenarios by exploring the sensitivity of the trained

model to changes made to the input data, i.e., masking some elements of the

scene, modifying trajectories, and adding or removing dynamic agents. The

explainability analysis provided in this paper is a first step towards more

transparent and reliable motion prediction systems, important from the

perspective of the user, developers and regulatory agencies. The code to

reproduce this work is publicly available at

this https URL

We introduce a novel multilingual hierarchical corpus annotated for entity framing and role portrayal in news articles. The dataset uses a unique taxonomy inspired by storytelling elements, comprising 22 fine-grained roles, or archetypes, nested within three main categories: protagonist, antagonist, and innocent. Each archetype is carefully defined, capturing nuanced portrayals of entities such as guardian, martyr, and underdog for protagonists; tyrant, deceiver, and bigot for antagonists; and victim, scapegoat, and exploited for innocents. The dataset includes 1,378 recent news articles in five languages (Bulgarian, English, Hindi, European Portuguese, and Russian) focusing on two critical domains of global significance: the Ukraine-Russia War and Climate Change. Over 5,800 entity mentions have been annotated with role labels. This dataset serves as a valuable resource for research into role portrayal and has broader implications for news analysis. We describe the characteristics of the dataset and the annotation process, and we report evaluation results on fine-tuned state-of-the-art multilingual transformers and hierarchical zero-shot learning using LLMs at the level of a document, a paragraph, and a sentence.

01 Mar 2022

Variance-based sensitivity indices have established themselves as a reference among practitioners of sensitivity analysis of model outputs. A variance-based sensitivity analysis typically produces the first-order sensitivity indices Sj and the so-called total-effect sensitivity indices Tj for the uncertain factors of the mathematical model under analysis.

The cost of the analysis depends upon the number of model evaluations needed to obtain stable and accurate values of the estimates. While efficient estimation procedures are available for Sj, this availability is less the case for Tj. When estimating these indices, one can either use a sample-based approach whose computational cost depends on the number of factors or use approaches based on meta modelling/emulators.

The present work focuses on sample-based estimation procedures for Tj and tests different avenues to achieve an algorithmic improvement over the existing best practices. To improve the exploration of the space of the input factors (design) and the formula to compute the indices (estimator), we propose strategies based on the concepts of economy and explorativity. We then discuss how several existing estimators perform along these characteristics.

We conclude that: a) sample-based approaches based on the use of multiple matrices to enhance the economy are outperformed by designs using fewer matrices but with better explorativity; b) among the latter, asymmetric designs perform the best and outperform symmetric designs having corrective terms for spurious correlations; c) improving on the existing best practices is fraught with difficulties; and d) ameliorating the results comes at the cost of introducing extra design parameters.

There are no more papers matching your filters at the moment.