FORTH - ICS

19 Nov 2025

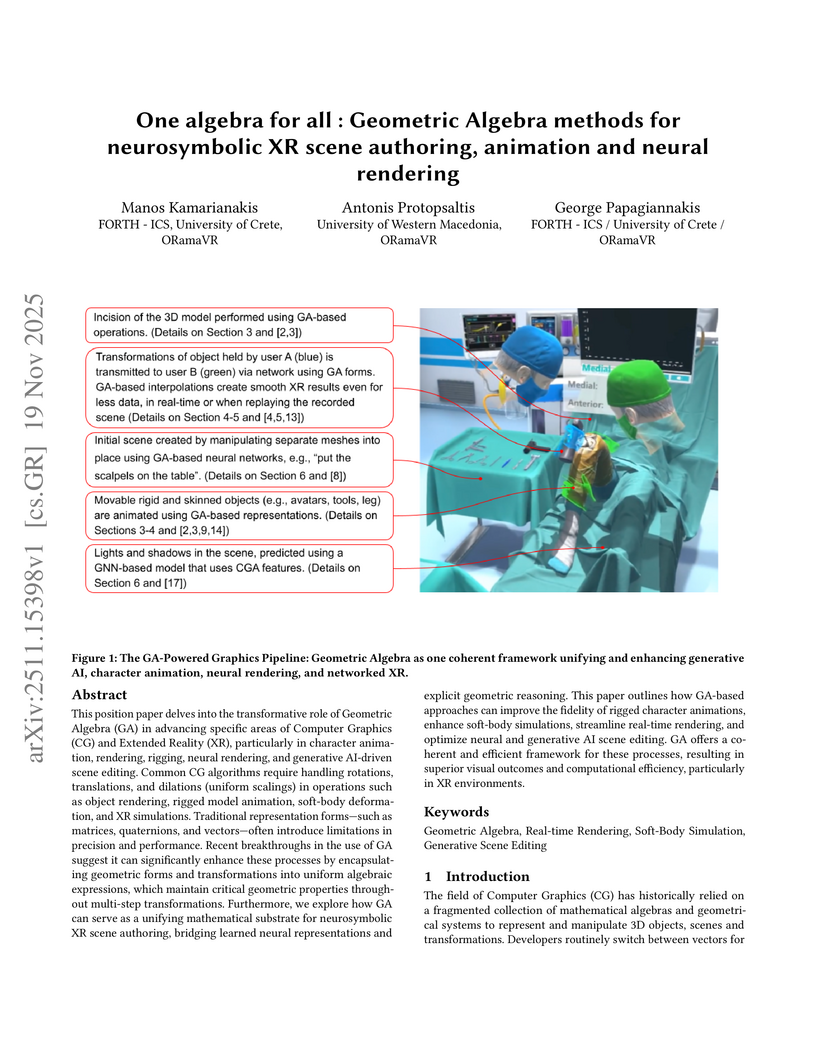

This position paper delves into the transformative role of Geometric Algebra (GA) in advancing specific areas of Computer Graphics (CG) and Extended Reality (XR), particularly in character animation, rendering, rigging, neural rendering, and generative AI-driven scene editing. Common CG algorithms require handling rotations, translations, and dilations (uniform scalings) in operations such as object rendering, rigged model animation, soft-body deformation, and XR simulations. Traditional representation forms - such as matrices, quaternions, and vectors - often introduce limitations in precision and performance. Recent breakthroughs in the use of GA suggest it can significantly enhance these processes by encapsulating geometric forms and transformations into uniform algebraic expressions, which maintain critical geometric properties throughout multi-step transformations. Furthermore, we explore how GA can serve as a unifying mathematical substrate for neurosymbolic XR scene authoring, bridging learned neural representations and explicit geometric reasoning. This paper outlines how GA-based approaches can improve the fidelity of rigged character animations, enhance soft-body simulations, streamline real-time rendering, and optimize neural and generative AI scene editing. GA offers a coherent and efficient framework for these processes, resulting in superior visual outcomes and computational efficiency, particularly in XR environments.

25 Sep 2024

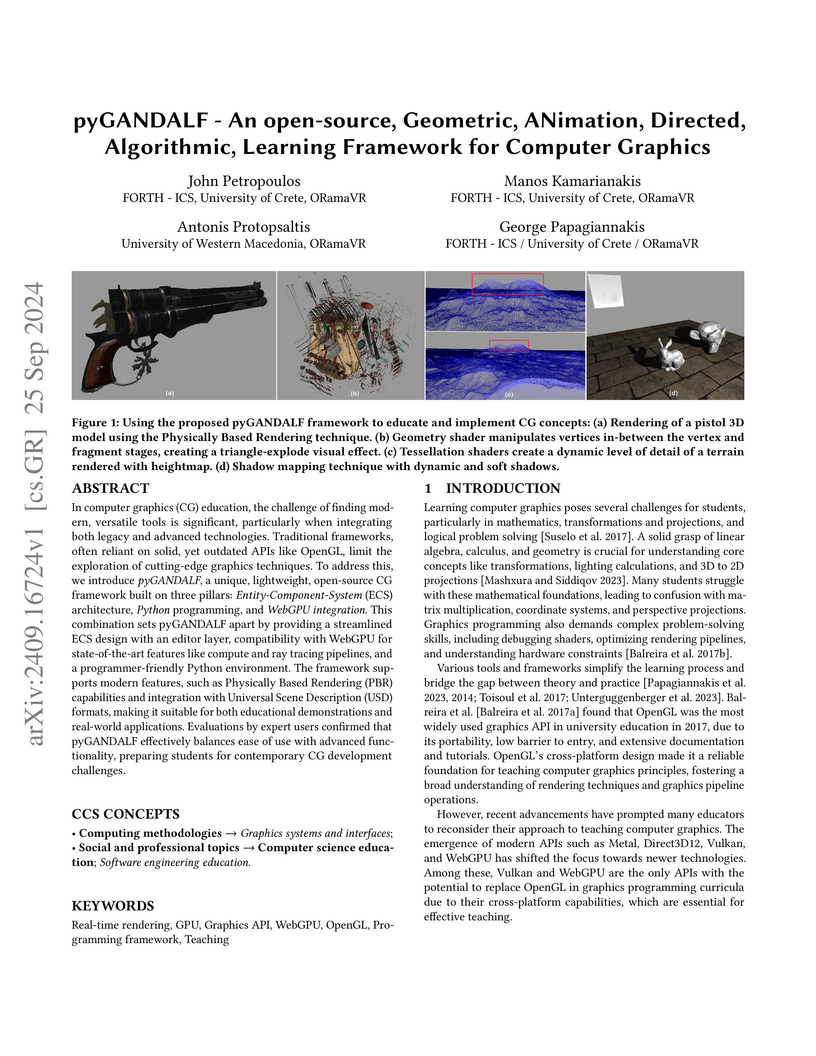

In computer graphics (CG) education, the challenge of finding modern,

versatile tools is significant, particularly when integrating both legacy and

advanced technologies. Traditional frameworks, often reliant on solid, yet

outdated APIs like OpenGL, limit the exploration of cutting-edge graphics

techniques. To address this, we introduce pyGANDALF, a unique, lightweight,

open-source CG framework built on three pillars: Entity-Component-System (ECS)

architecture, Python programming, and WebGPU integration. This combination sets

pyGANDALF apart by providing a streamlined ECS design with an editor layer,

compatibility with WebGPU for state-of-the-art features like compute and ray

tracing pipelines, and a programmer-friendly Python environment. The framework

supports modern features, such as Physically Based Rendering (PBR) capabilities

and integration with Universal Scene Description (USD) formats, making it

suitable for both educational demonstrations and real-world applications.

Evaluations by expert users confirmed that pyGANDALF effectively balances ease

of use with advanced functionality, preparing students for contemporary CG

development challenges.

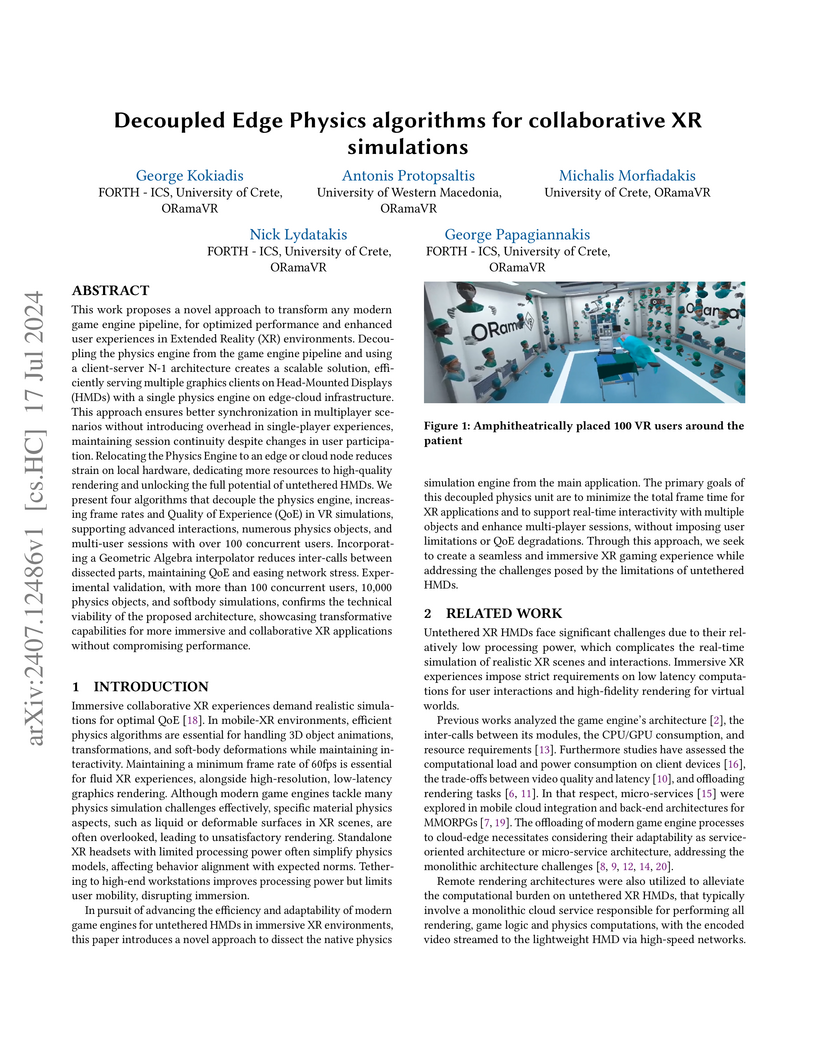

This work proposes a novel approach to transform any modern game engine pipeline, for optimized performance and enhanced user experiences in Extended Reality (XR) environments. Decoupling the physics engine from the game engine pipeline and using a client-server N-1 architecture creates a scalable solution, efficiently serving multiple graphics clients on Head-Mounted Displays (HMDs) with a single physics engine on edge-cloud infrastructure. This approach ensures better synchronization in multiplayer scenarios without introducing overhead in single-player experiences, maintaining session continuity despite changes in user participation. Relocating the Physics Engine to an edge or cloud node reduces strain on local hardware, dedicating more resources to high-quality rendering and unlocking the full potential of untethered HMDs. We present four algorithms that decouple the physics engine, increasing frame rates and Quality of Experience (QoE) in VR simulations, supporting advanced interactions, numerous physics objects, and multi-user sessions with over 100 concurrent users. Incorporating a Geometric Algebra interpolator reduces inter-calls between dissected parts, maintaining QoE and easing network stress. Experimental validation, with more than 100 concurrent users, 10,000 physics objects, and softbody simulations, confirms the technical viability of the proposed architecture, showcasing transformative capabilities for more immersive and collaborative XR applications without compromising performance.

12 May 2020

The continuous and rapid growth of highly interconnected datasets, which are both voluminous and complex, calls for the development of adequate processing and analytical techniques. One method for condensing and simplifying such datasets is graph summarization. It denotes a series of application-specific algorithms designed to transform graphs into more compact representations while preserving structural patterns, query answers, or specific property distributions. As this problem is common to several areas studying graph topologies, different approaches, such as clustering, compression, sampling, or influence detection, have been proposed, primarily based on statistical and optimization methods. The focus of our chapter is to pinpoint the main graph summarization methods, but especially to focus on the most recent approaches and novel research trends on this topic, not yet covered by previous surveys.

There is a recent trend for using the novel Artificial Intelligence ChatGPT chatbox, which provides detailed responses and articulate answers across many domains of knowledge. However, in many cases it returns plausible-sounding but incorrect or inaccurate responses, whereas it does not provide evidence. Therefore, any user has to further search for checking the accuracy of the answer or/and for finding more information about the entities of the response. At the same time there is a high proliferation of RDF Knowledge Graphs (KGs) over any real domain, that offer high quality structured data. For enabling the combination of ChatGPT and RDF KGs, we present a research prototype, called GPToLODS, which is able to enrich any ChatGPT response with more information from hundreds of RDF KGs. In particular, it identifies and annotates each entity of the response with statistics and hyperlinks to LODsyndesis KG (which contains integrated data from 400 RDF KGs and over 412 million entities). In this way, it is feasible to enrich the content of entities and to perform fact checking and validation for the facts of the response at real time.

28 May 2021

Descriptive and empirical sciences, such as History, are the sciences that collect, observe and describe phenomena in order to explain them and draw interpretative conclusions about influences, driving forces and impacts under given circumstances. Spreadsheet software and relational database management systems are still the dominant tools for quantitative analysis and overall data management in these these sciences, allowing researchers to directly analyse the gathered data and perform scholarly interpretation. However, this current practice has a set of limitations, including the high dependency of the collected data on the initial research hypothesis, usually useless for other research, the lack of representation of the details from which the registered relations are inferred, and the difficulty to revisit the original data sources for verification, corrections or improvements. To cope with these problems, in this paper we present FAST CAT, a collaborative system for assistive data entry and curation in Digital Humanities and similar forms of empirical research. We describe the related challenges, the overall methodology we follow for supporting semantic interoperability, and discuss the use of FAST CAT in the context of a European (ERC) project of Maritime History, called SeaLiT, which examines economic, social and demographic impacts of the introduction of steamboats in the Mediterranean area between the 1850s and the 1920s.

02 May 2022

We present a novel integration of a real-time continuous tearing algorithm for 3D meshes in VR, suitable for devices of low CPU/GPU specifications, along with a suitable particle decomposition that allows soft-body deformations on both the original and the torn model.

Augmenting an existing sequential data structure with extra information to support greater functionality is a widely used technique. For example, search trees are augmented to build sequential data structures like order-statistic trees, interval trees, tango trees, link/cut trees and many others.

We study how to design concurrent augmented tree data structures. We present a new, general technique that can augment a lock-free tree to add any new fields to each tree node, provided the new fields' values can be computed from information in the node and its children. This enables the design of lock-free, linearizable analogues of a wide variety of classical augmented data structures. As a first example, we give a wait-free trie that stores a set S of elements drawn from {1,…,N} and supports linearizable order-statistic queries such as finding the kth smallest element of S. Updates and queries take O(logN) steps. We also apply our technique to a lock-free binary search tree (BST), where changes to the structure of the tree make the linearization argument more challenging. Our augmented BST supports order statistic queries in O(h) steps on a tree of height h. The augmentation does not affect the asymptotic running time of the updates.

For both our trie and BST, we give an alternative augmentation to improve searches and order-statistic queries to run in O(log∣S∣) steps (with a small increase in step complexity of updates). As an added bonus, our technique supports arbitrary multi-point queries (such as range queries) with the same time complexity as they would have in the corresponding sequential data structure.

This paper introduces a novel integration of Large Language Models (LLMs) with Conformal Geometric Algebra (CGA) to revolutionize controllable 3D scene editing, particularly for object repositioning tasks, which traditionally requires intricate manual processes and specialized expertise. These conventional methods typically suffer from reliance on large training datasets or lack a formalized language for precise edits. Utilizing CGA as a robust formal language, our system, Shenlong, precisely models spatial transformations necessary for accurate object repositioning. Leveraging the zero-shot learning capabilities of pre-trained LLMs, Shenlong translates natural language instructions into CGA operations which are then applied to the scene, facilitating exact spatial transformations within 3D scenes without the need for specialized pre-training. Implemented in a realistic simulation environment, Shenlong ensures compatibility with existing graphics pipelines. To accurately assess the impact of CGA, we benchmark against robust Euclidean Space baselines, evaluating both latency and accuracy. Comparative performance evaluations indicate that Shenlong significantly reduces LLM response times by 16% and boosts success rates by 9.6% on average compared to the traditional methods. Notably, Shenlong achieves a 100% perfect success rate in common practical queries, a benchmark where other systems fall short. These advancements underscore Shenlong's potential to democratize 3D scene editing, enhancing accessibility and fostering innovation across sectors such as education, digital entertainment, and virtual reality.

For years, SIMD/vector units have enhanced the capabilities of modern CPUs in High-Performance Computing (HPC) and mobile technology. Typical commercially-available SIMD units process up to 8 double-precision elements with one instruction. The optimal vector width and its impact on CPU throughput due to memory latency and bandwidth remain challenging research areas. This study examines the behavior of four computational kernels on a RISC-V core connected to a customizable vector unit, capable of operating up to 256 double precision elements per instruction. The four codes have been purposefully selected to represent non-dense workloads: SpMV, BFS, PageRank, FFT. The experimental setup allows us to measure their performance while varying the vector length, the memory latency, and bandwidth. Our results not only show that larger vector lengths allow for better tolerance of limitations in the memory subsystem but also offer hope to code developers beyond dense linear algebra.

Since ChatGPT offers detailed responses without justifications, and erroneous facts even for popular persons, events and places, in this paper we present a novel pipeline that retrieves the response of ChatGPT in RDF and tries to validate the ChatGPT facts using one or more RDF Knowledge Graphs (KGs). To this end we leverage DBpedia and LODsyndesis (an aggregated Knowledge Graph that contains 2 billion triples from 400 RDF KGs of many domains) and short sentence embeddings, and introduce an algorithm that returns the more relevant triple(s) accompanied by their provenance and a confidence score. This enables the validation of ChatGPT responses and their enrichment with justifications and provenance. To evaluate this service (such services in general), we create an evaluation benchmark that includes 2,000 ChatGPT facts; specifically 1,000 facts for famous Greek Persons, 500 facts for popular Greek Places, and 500 facts for Events related to Greece. The facts were manually labelled (approximately 73% of ChatGPT facts were correct and 27% of facts were erroneous). The results are promising; indicatively for the whole benchmark, we managed to verify the 85.3% of the correct facts of ChatGPT and to find the correct answer for the 58% of the erroneous ChatGPT facts.

Automatic fake news detection is a challenging problem in misinformation

spreading, and it has tremendous real-world political and social impacts. Past

studies have proposed machine learning-based methods for detecting such fake

news, focusing on different properties of the published news articles, such as

linguistic characteristics of the actual content, which however have

limitations due to the apparent language barriers. Departing from such efforts,

we propose Fake News Detection-as-a Service (FNDaaS), the first automatic,

content-agnostic fake news detection method, that considers new and unstudied

features such as network and structural characteristics per news website. This

method can be enforced as-a-Service, either at the ISP-side for easier

scalability and maintenance, or user-side for better end-user privacy. We

demonstrate the efficacy of our method using more than 340K datapoints crawled

from existing lists of 637 fake and 1183 real news websites, and by building

and testing a proof of concept system that materializes our proposal. Our

analysis of data collected from these websites shows that the vast majority of

fake news domains are very young and appear to have lower time periods of an IP

associated with their domain than real news ones. By conducting various

experiments with machine learning classifiers, we demonstrate that FNDaaS can

achieve an AUC score of up to 0.967 on past sites, and up to 77-92% accuracy on

newly-flagged ones.

18 Sep 2022

We present an algorithm that allows a user within a virtual environment to perform real-time unconstrained cuts or consecutive tears, i.e., progressive, continuous fractures on a deformable rigged and soft-body mesh model in high-performance 10ms. In order to recreate realistic results for different physically-principled materials such as sponges, hard or soft tissues, we incorporate a novel soft-body deformation, via a particle system layered on-top of a linear-blend skinning model. Our framework allows the simulation of realistic, surgical-grade cuts and continuous tears, especially valuable in the context of medical VR training. In order to achieve high performance in VR, our algorithms are based on Euclidean geometric predicates on the rigged mesh, without requiring any specific model pre-processing. The contribution of this work lies on the fact that current frameworks supporting similar kinds of model tearing, either do not operate in high-performance real-time or only apply to predefined tears. The framework presented allows the user to freely cut or tear a 3D mesh model in a consecutive way, under 10ms, while preserving its soft-body behaviour and/or allowing further animation.

26 Dec 2022

Recent advances in NLU and NLP have resulted in renewed interest in natural

language interfaces to data, which provide an easy mechanism for non-technical

users to access and query the data. While early systems evolved from keyword

search and focused on simple factual queries, the complexity of both the input

sentences as well as the generated SQL queries has evolved over time. More

recently, there has also been a lot of focus on using conversational interfaces

for data analytics, empowering a line of non-technical users with quick

insights into the data. There are three main challenges in natural language

querying (NLQ): (1) identifying the entities involved in the user utterance,

(2) connecting the different entities in a meaningful way over the underlying

data source to interpret user intents, and (3) generating a structured query in

the form of SQL or SPARQL.

There are two main approaches for interpreting a user's NLQ. Rule-based

systems make use of semantic indices, ontologies, and KGs to identify the

entities in the query, understand the intended relationships between those

entities, and utilize grammars to generate the target queries. With the

advances in deep learning (DL)-based language models, there have been many

text-to-SQL approaches that try to interpret the query holistically using DL

models. Hybrid approaches that utilize both rule-based techniques as well as DL

models are also emerging by combining the strengths of both approaches.

Conversational interfaces are the next natural step to one-shot NLQ by

exploiting query context between multiple turns of conversation for

disambiguation. In this article, we review the background technologies that are

used in natural language interfaces, and survey the different approaches to

NLQ. We also describe conversational interfaces for data analytics and discuss

several benchmarks used for NLQ research and evaluation.

This paper presents the tracking approach for deriving detectably recoverable (and thus also durable) implementations of many widely-used concurrent data structures. Such data structures, satisfying detectable recovery, are appealing for emerging systems featuring byte-addressable non-volatile main memory (NVRAM), whose persistence allows to efficiently resurrect failed processes after crashes. Detectable recovery ensures that after a crash, every executed operation is able to recover and return a correct response, and that the state of the data structure is not corrupted. Info-Structure Based (ISB)-tracking amends descriptor objects used in existing lock-free helping schemes with additional fields that track an operation's progress towards completion and persists these fields to memory in order to ensure detectable recovery. ISB-tracking avoids full-fledged logging and tracks the progress of concurrent operations in a per-process manner, thus reducing the cost of ensuring detectable recovery. We have applied ISB-tracking to derive detectably recoverable implementations of a queue, a linked list, a binary search tree, and an exchanger. Experimental results show the feasibility of the technique.

In various domains and cases, we observe the creation and usage of information elements which are unnamed. Such elements do not have a name, or may have a name that is not externally referable (usually meaningless and not persistent over time). This paper discusses why we will never `escape' from the problem of having to construct mappings between such unnamed elements in information systems. Since unnamed elements nowadays occur very often in the framework of the Semantic Web and Linked Data as blank nodes, the paper describes scenarios that can benefit from methods that compute mappings between the unnamed elements. For each scenario, the corresponding bnode matching problem is formally defined. Based on this analysis, we try to reach to more a general formulation of the problem, which can be useful for guiding the required technological advances. To this end, the paper finally discusses methods to realize blank node matching, the implementations that exist, and identifies open issues and challenges.

Online advertising is progressively moving towards a programmatic model in which ads are matched to actual interests of individuals collected as they browse the web. Letting the huge debate around privacy aside, a very important question in this area, for which little is known, is: How much do advertisers pay to reach an individual? In this study, we develop a first of its kind methodology for computing exactly that -- the price paid for a web user by the ad ecosystem -- and we do that in real time. Our approach is based on tapping on the Real Time Bidding (RTB) protocol to collect cleartext and encrypted prices for winning bids paid by advertisers in order to place targeted ads. Our main technical contribution is a method for tallying winning bids even when they are encrypted. We achieve this by training a model using as ground truth prices obtained by running our own "probe" ad-campaigns. We design our methodology through a browser extension and a back-end server that provides it with fresh models for encrypted bids. We validate our methodology using a one year long trace of 1600 mobile users and demonstrate that it can estimate a user's advertising worth with more than 82% accuracy.

Joint caching and recommendation has been recently proposed as a new paradigm

for increasing the efficiency of mobile edge caching. Early findings

demonstrate significant gains for the network performance. However, previous

works evaluated the proposed schemes exclusively on simulation environments.

Hence, it still remains uncertain whether the claimed benefits would change in

real settings. In this paper, we propose a methodology that enables to evaluate

joint network and recommendation schemes in real content services by only using

publicly available information. We apply our methodology to the YouTube

service, and conduct extensive measurements to investigate the potential

performance gains. Our results show that significant gains can be achieved in

practice; e.g., 8 to 10 times increase in the cache hit ratio from cache-aware

recommendations. Finally, we build an experimental testbed and conduct

experiments with real users; we make available our code and datasets to

facilitate further research. To our best knowledge, this is the first realistic

evaluation (over a real service, with real measurements and user experiments)

of the joint caching and recommendations paradigm. Our findings provide

experimental evidence for the feasibility and benefits of this paradigm,

validate assumptions of previous works, and provide insights that can drive

future research.

01 Jul 2024

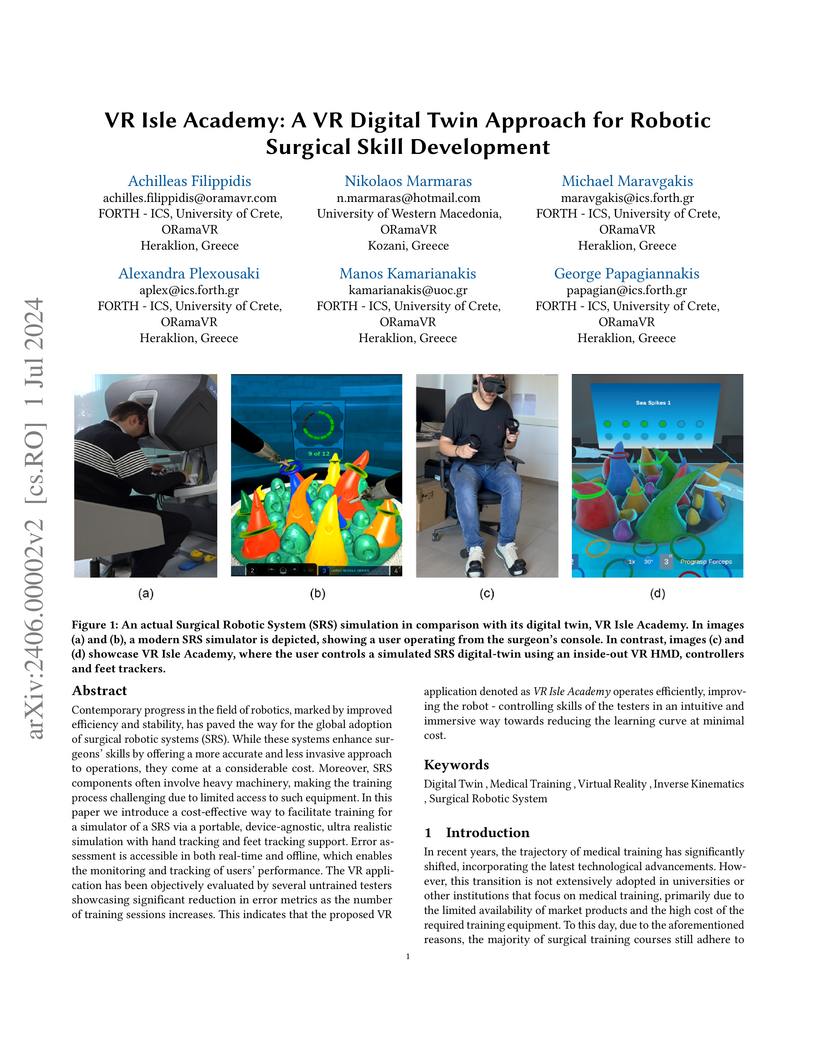

Contemporary progress in the field of robotics, marked by improved efficiency

and stability, has paved the way for the global adoption of surgical robotic

systems (SRS). While these systems enhance surgeons' skills by offering a more

accurate and less invasive approach to operations, they come at a considerable

cost. Moreover, SRS components often involve heavy machinery, making the

training process challenging due to limited access to such equipment. In this

paper we introduce a cost-effective way to facilitate training for a simulator

of a SRS via a portable, device-agnostic, ultra realistic simulation with hand

tracking and feet tracking support. Error assessment is accessible in both

real-time and offline, which enables the monitoring and tracking of users'

performance. The VR application has been objectively evaluated by several

untrained testers showcasing significant reduction in error metrics as the

number of training sessions increases. This indicates that the proposed VR

application denoted as VR Isle Academy operates efficiently, improving the

robot - controlling skills of the testers in an intuitive and immersive way

towards reducing the learning curve at minimal cost.

This paper presents Odyssey, a novel distributed data-series processing framework that efficiently addresses the critical challenges of exhibiting good speedup and ensuring high scalability in data series processing by taking advantage of the full computational capacity of modern clusters comprised of multi-core servers. Odyssey addresses a number of challenges in designing efficient and highly scalable distributed data series index, including efficient scheduling, and load-balancing without paying the prohibitive cost of moving data around. It also supports a flexible partial replication scheme, which enables Odyssey to navigate through a fundamental trade-off between data scalability and good performance during query answering. Through a wide range of configurations and using several real and synthetic datasets, our experimental analysis demonstrates that Odyssey achieves its challenging goals.

There are no more papers matching your filters at the moment.