Handong Global University

MuSE-SVS is a neural singing voice synthesizer that enables multi-singer emotional synthesis with controllable intensity, outperforming baselines in voice quality, naturalness, and emotional similarity. It achieves highly accurate pitch modeling and synchronization, reducing cumulative synchronization error to 0.2% for long songs.

UniTTS, developed by researchers at Handong Global University, enhances expressive speech synthesis by employing residual learning in a unified embedding space to precisely control overlapping style attributes like speaker ID and emotion. The model achieves higher Mean Opinion Scores for fidelity (3.77), speaker similarity (3.88), and emotion similarity (4.15) compared to baseline methods, enabling interference-free style control.

Bolus segmentation is crucial for the automated detection of swallowing

disorders in videofluoroscopic swallowing studies (VFSS). However, it is

difficult for the model to accurately segment a bolus region in a VFSS image

because VFSS images are translucent, have low contrast and unclear region

boundaries, and lack color information. To overcome these challenges, we

propose PECI-Net, a network architecture for VFSS image analysis that combines

two novel techniques: the preprocessing ensemble network (PEN) and the cascaded

inference network (CIN). PEN enhances the sharpness and contrast of the VFSS

image by combining multiple preprocessing algorithms in a learnable way. CIN

reduces ambiguity in bolus segmentation by using context from other regions

through cascaded inference. Moreover, CIN prevents undesirable side effects

from unreliably segmented regions by referring to the context in an asymmetric

way. In experiments, PECI-Net exhibited higher performance than four recently

developed baseline models, outperforming TernausNet, the best among the

baseline models, by 4.54\% and the widely used UNet by 10.83\%. The results of

the ablation studies confirm that CIN and PEN are effective in improving bolus

segmentation performance.

This survey provides a structured overview of hallucinations in code-generating Large Language Models (LLMs), proposing a detailed, objective taxonomy for various error types. Researchers from UNIST, Handong Global University, Korea University, and Seoul National University of Science and Technology collaborated to review existing benchmarks, identify root causes, and analyze mitigation strategies, addressing a critical gap in CodeLLM reliability research.

Researchers at NCSOFT's Speech AI Lab and Handong Global University developed VocGAN, a neural vocoder that addresses quality limitations of fast vocoders like MelGAN by introducing a hierarchically-nested adversarial network and joint conditional/unconditional loss. It achieves a MOS of 4.202, outperforming MelGAN (3.898) and Parallel WaveGAN (4.098), while maintaining 3.24x real-time inference on CPU.

In this paper,we explore the application of Back translation (BT) as a

semi-supervised technique to enhance Neural Machine Translation(NMT) models for

the English-Luganda language pair, specifically addressing the challenges faced

by low-resource languages. The purpose of our study is to demonstrate how BT

can mitigate the scarcity of bilingual data by generating synthetic data from

monolingual corpora. Our methodology involves developing custom NMT models

using both publicly available and web-crawled data, and applying Iterative and

Incremental Back translation techniques. We strategically select datasets for

incremental back translation across multiple small datasets, which is a novel

element of our approach. The results of our study show significant

improvements, with translation performance for the English-Luganda pair

exceeding previous benchmarks by more than 10 BLEU score units across all

translation directions. Additionally, our evaluation incorporates comprehensive

assessment metrics such as SacreBLEU, ChrF2, and TER, providing a nuanced

understanding of translation quality. The conclusion drawn from our research

confirms the efficacy of BT when strategically curated datasets are utilized,

establishing new performance benchmarks and demonstrating the potential of BT

in enhancing NMT models for low-resource languages.

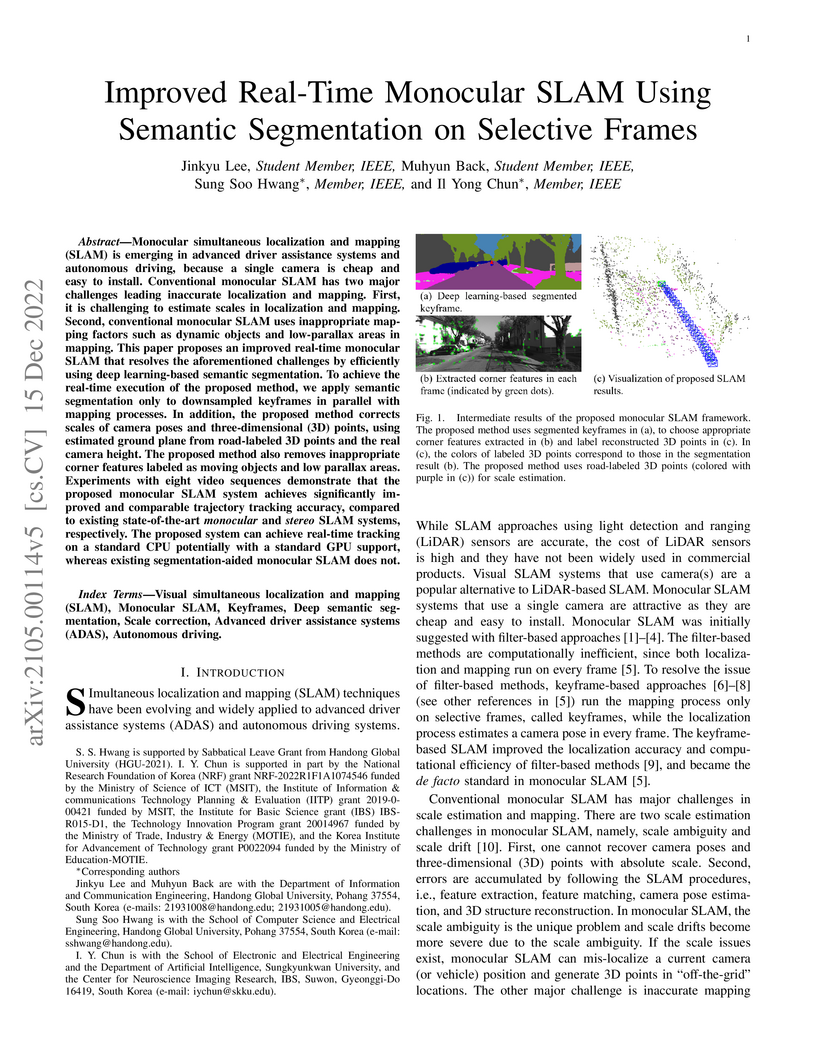

Monocular simultaneous localization and mapping (SLAM) is emerging in

advanced driver assistance systems and autonomous driving, because a single

camera is cheap and easy to install. Conventional monocular SLAM has two major

challenges leading inaccurate localization and mapping. First, it is

challenging to estimate scales in localization and mapping. Second,

conventional monocular SLAM uses inappropriate mapping factors such as dynamic

objects and low-parallax areas in mapping. This paper proposes an improved

real-time monocular SLAM that resolves the aforementioned challenges by

efficiently using deep learning-based semantic segmentation. To achieve the

real-time execution of the proposed method, we apply semantic segmentation only

to downsampled keyframes in parallel with mapping processes. In addition, the

proposed method corrects scales of camera poses and three-dimensional (3D)

points, using estimated ground plane from road-labeled 3D points and the real

camera height. The proposed method also removes inappropriate corner features

labeled as moving objects and low parallax areas. Experiments with eight video

sequences demonstrate that the proposed monocular SLAM system achieves

significantly improved and comparable trajectory tracking accuracy, compared to

existing state-of-the-art monocular and stereo SLAM systems, respectively. The

proposed system can achieve real-time tracking on a standard CPU potentially

with a standard GPU support, whereas existing segmentation-aided monocular SLAM

does not.

In autonomous driving, the end-to-end (E2E) driving approach that predicts

vehicle control signals directly from sensor data is rapidly gaining attention.

To learn a safe E2E driving system, one needs an extensive amount of driving

data and human intervention. Vehicle control data is constructed by many hours

of human driving, and it is challenging to construct large vehicle control

datasets. Often, publicly available driving datasets are collected with limited

driving scenes, and collecting vehicle control data is only available by

vehicle manufacturers. To address these challenges, this letter proposes the

first fully self-supervised learning framework, self-supervised imitation

learning (SSIL), for E2E driving, based on the self-supervised regression

learning (SSRL) framework.The proposed SSIL framework can learn E2E driving

networks \emph{without} using driving command data or a pre-trained model. To

construct pseudo steering angle data, proposed SSIL predicts a pseudo target

from the vehicle's poses at the current and previous time points that are

estimated with light detection and ranging sensors. In addition, we propose two

E2E driving networks that predict driving commands depending on high-level

instruction. Our numerical experiments with three different benchmark datasets

demonstrate that the proposed SSIL framework achieves \emph{very} comparable

E2E driving accuracy with the supervised learning counterpart. The proposed

pseudo-label predictor outperformed an existing one using proportional integral

derivative controller.

Unsupervised anomaly detection (UAD) in medical imaging is crucial for

identifying pathological abnormalities without requiring extensive labeled

data. However, existing diffusion-based UAD models rely solely on imaging

features, limiting their ability to distinguish between normal anatomical

variations and pathological anomalies. To address this, we propose Diff3M, a

multi-modal diffusion-based framework that integrates chest X-rays and

structured Electronic Health Records (EHRs) for enhanced anomaly detection.

Specifically, we introduce a novel image-EHR cross-attention module to

incorporate structured clinical context into the image generation process,

improving the model's ability to differentiate normal from abnormal features.

Additionally, we develop a static masking strategy to enhance the

reconstruction of normal-like images from anomalies. Extensive evaluations on

CheXpert and MIMIC-CXR/IV demonstrate that Diff3M achieves state-of-the-art

performance, outperforming existing UAD methods in medical imaging. Our code is

available at this http URL this https URL

Causal language modeling (CLM) serves as the foundational framework underpinning remarkable successes of recent large language models (LLMs). Despite its success, the training approach for next word prediction poses a potential risk of causing the model to overly focus on local dependencies within a sentence. While prior studies have been introduced to predict future N words simultaneously, they were primarily applied to tasks such as masked language modeling (MLM) and neural machine translation (NMT). In this study, we introduce a simple N-gram prediction framework for the CLM task. Moreover, we introduce word difference representation (WDR) as a surrogate and contextualized target representation during model training on the basis of N-gram prediction framework. To further enhance the quality of next word prediction, we propose an ensemble method that incorporates the future N words' prediction results. Empirical evaluations across multiple benchmark datasets encompassing CLM and NMT tasks demonstrate the significant advantages of our proposed methods over the conventional CLM.

For years, adversarial training has been extensively studied in natural

language processing (NLP) settings. The main goal is to make models robust so

that similar inputs derive in semantically similar outcomes, which is not a

trivial problem since there is no objective measure of semantic similarity in

language. Previous works use an external pre-trained NLP model to tackle this

challenge, introducing an extra training stage with huge memory consumption

during training. However, the recent popular approach of contrastive learning

in language processing hints a convenient way of obtaining such similarity

restrictions. The main advantage of the contrastive learning approach is that

it aims for similar data points to be mapped close to each other and further

from different ones in the representation space. In this work, we propose

adversarial training with contrastive learning (ATCL) to adversarially train a

language processing task using the benefits of contrastive learning. The core

idea is to make linear perturbations in the embedding space of the input via

fast gradient methods (FGM) and train the model to keep the original and

perturbed representations close via contrastive learning. In NLP experiments,

we applied ATCL to language modeling and neural machine translation tasks. The

results show not only an improvement in the quantitative (perplexity and BLEU)

scores when compared to the baselines, but ATCL also achieves good qualitative

results in the semantic level for both tasks without using a pre-trained model.

13 Jul 2021

Recent achievements in end-to-end deep learning have encouraged the exploration of tasks dealing with highly structured data with unified deep network models. Having such models for compressing audio signals has been challenging since it requires discrete representations that are not easy to train with end-to-end backpropagation. In this paper, we present an end-to-end deep learning approach that combines recurrent neural networks (RNNs) within the training strategy of variational autoencoders (VAEs) with a binary representation of the latent space. We apply a reparametrization trick for the Bernoulli distribution for the discrete representations, which allows smooth backpropagation. In addition, our approach allows the separation of the encoder and decoder, which is necessary for compression tasks. To our best knowledge, this is the first end-to-end learning for a single audio compression model with RNNs, and our model achieves a Signal to Distortion Ratio (SDR) of 20.54.

To address the issue of catastrophic forgetting in neural networks, we propose a novel, simple, and effective solution called neuron-level plasticity control (NPC). While learning a new task, the proposed method preserves the knowledge for the previous tasks by controlling the plasticity of the network at the neuron level. NPC estimates the importance value of each neuron and consolidates important \textit{neurons} by applying lower learning rates, rather than restricting individual connection weights to stay close to certain values. The experimental results on the incremental MNIST (iMNIST) and incremental CIFAR100 (iCIFAR100) datasets show that neuron-level consolidation is substantially more effective compared to the connection-level consolidation approaches.

As one of standard approaches to train deep neural networks, dropout has been

applied to regularize large models to avoid overfitting, and the improvement in

performance by dropout has been explained as avoiding co-adaptation between

nodes. However, when correlations between nodes are compared after training the

networks with or without dropout, one question arises if co-adaptation

avoidance explains the dropout effect completely. In this paper, we propose an

additional explanation of why dropout works and propose a new technique to

design better activation functions. First, we show that dropout can be

explained as an optimization technique to push the input towards the saturation

area of nonlinear activation function by accelerating gradient information

flowing even in the saturation area in backpropagation. Based on this

explanation, we propose a new technique for activation functions, {\em gradient

acceleration in activation function (GAAF)}, that accelerates gradients to flow

even in the saturation area. Then, input to the activation function can climb

onto the saturation area which makes the network more robust because the model

converges on a flat region. Experiment results support our explanation of

dropout and confirm that the proposed GAAF technique improves image

classification performance with expected properties.

Since deep learning became a key player in natural language processing (NLP),

many deep learning models have been showing remarkable performances in a

variety of NLP tasks, and in some cases, they are even outperforming humans.

Such high performance can be explained by efficient knowledge representation of

deep learning models. While many methods have been proposed to learn more

efficient representation, knowledge distillation from pretrained deep networks

suggest that we can use more information from the soft target probability to

train other neural networks. In this paper, we propose a new knowledge

distillation method self-knowledge distillation, based on the soft target

probabilities of the training model itself, where multimode information is

distilled from the word embedding space right below the softmax layer. Due to

the time complexity, our method approximates the soft target probabilities. In

experiments, we applied the proposed method to two different and fundamental

NLP tasks: language model and neural machine translation. The experiment

results show that our proposed method improves performance on the tasks.

This paper presents a novel automatic calibration system to estimate the

extrinsic parameters of LiDAR mounted on a mobile platform for sensor

misalignment inspection in the large-scale production of highly automated

vehicles. To obtain subdegree and subcentimeter accuracy levels of extrinsic

calibration, this study proposed a new concept of a target board with embedded

photodetector arrays, named the PD-target system, to find the precise position

of the correspondence laser beams on the target surface. Furthermore, the

proposed system requires only the simple design of the target board at the

fixed pose in a close range to be readily applicable in the automobile

manufacturing environment. The experimental evaluation of the proposed system

on low-resolution LiDAR showed that the LiDAR offset pose can be estimated

within 0.1 degree and 3 mm levels of precision. The high accuracy and

simplicity of the proposed calibration system make it practical for large-scale

applications for the reliability and safety of autonomous systems.

A misalignment of LiDAR as low as a few degrees could cause a significant

error in obstacle detection and mapping that could cause safety and quality

issues. In this paper, an accurate inspection system is proposed for estimating

a LiDAR alignment error after sensor attachment on a mobility system such as a

vehicle or robot. The proposed method uses only a single target board at the

fixed position to estimate the three orientations (roll, tilt, and yaw) and the

horizontal position of the LiDAR attachment with sub-degree and millimeter

level accuracy. After the proposed preprocessing steps, the feature beam points

that are the closest to each target corner are extracted and used to calculate

the sensor attachment pose with respect to the target board frame using a

nonlinear optimization method and with a low computational cost. The

performance of the proposed method is evaluated using a test bench that can

control the reference yaw and horizontal translation of LiDAR within ranges of

3 degrees and 30 millimeters, respectively. The experimental results for a

low-resolution 16 channel LiDAR (Velodyne VLP-16) confirmed that misalignment

could be estimated with accuracy within 0.2 degrees and 4 mm. The high accuracy

and simplicity of the proposed system make it practical for large-scale

industrial applications such as automobile or robot manufacturing process that

inspects the sensor attachment for the safety quality control.

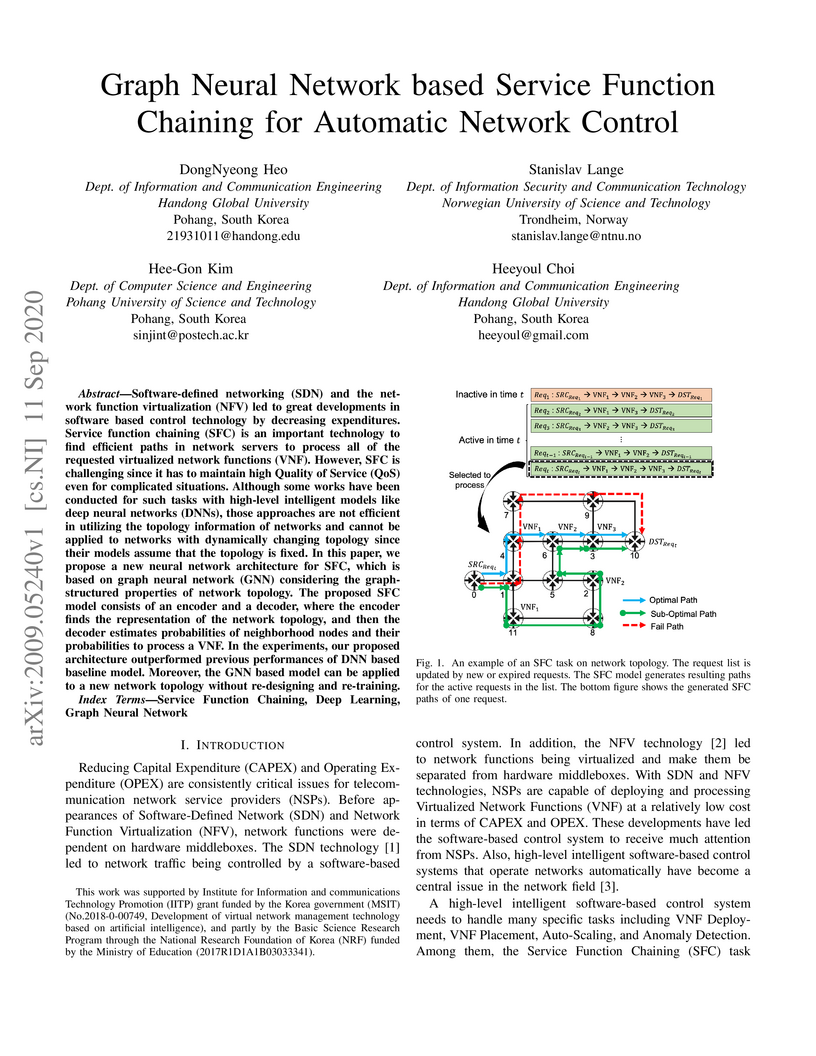

Software-defined networking (SDN) and the network function virtualization

(NFV) led to great developments in software based control technology by

decreasing expenditures. Service function chaining (SFC) is an important

technology to find efficient paths in network servers to process all of the

requested virtualized network functions (VNF). However, SFC is challenging

since it has to maintain high Quality of Service (QoS) even for complicated

situations. Although some works have been conducted for such tasks with

high-level intelligent models like deep neural networks (DNNs), those

approaches are not efficient in utilizing the topology information of networks

and cannot be applied to networks with dynamically changing topology since

their models assume that the topology is fixed. In this paper, we propose a new

neural network architecture for SFC, which is based on graph neural network

(GNN) considering the graph-structured properties of network topology. The

proposed SFC model consists of an encoder and a decoder, where the encoder

finds the representation of the network topology, and then the decoder

estimates probabilities of neighborhood nodes and their probabilities to

process a VNF. In the experiments, our proposed architecture outperformed

previous performances of DNN based baseline model. Moreover, the GNN based

model can be applied to a new network topology without re-designing and

re-training.

For deployment on an embedded processor for autonomous driving, the object

detection network should satisfy all of the accuracy, real-time inference, and

light model size requirements. Conventional deep CNN-based detectors aim for

high accuracy, making their model size heavy for an embedded system with

limited memory space. In contrast, lightweight object detectors are greatly

compressed but at a significant sacrifice of accuracy. Therefore, we propose

FRDet, a lightweight one-stage object detector that is balanced to satisfy all

the constraints of accuracy, model size, and real-time processing on an

embedded GPU processor for autonomous driving applications. Our network aims to

maximize the compression of the model while achieving or surpassing YOLOv3

level of accuracy. This paper proposes the Fire-Residual (FR) module to design

a lightweight network with low accuracy loss by adapting fire modules with

residual skip connections. In addition, the Gaussian uncertainty modeling of

the bounding box is applied to further enhance the localization accuracy.

Experiments on the KITTI dataset showed that FRDet reduced the memory size by

50.8% but achieved higher accuracy by 1.12% mAP compared to YOLOv3. Moreover,

the real-time detection speed reached 31.3 FPS on an embedded GPU board(NVIDIA

Xavier). The proposed network achieved higher compression with comparable

accuracy compared to other deep CNN object detectors while showing improved

accuracy than the lightweight detector baselines. Therefore, the proposed FRDet

is a well-balanced and efficient object detector for practical application in

autonomous driving that can satisfies all the criteria of accuracy, real-time

inference, and light model size.

Recent 3D face editing methods using masks have produced high-quality edited

images by leveraging Neural Radiance Fields (NeRF). Despite their impressive

performance, existing methods often provide limited user control due to the use

of pre-trained segmentation masks. To utilize masks with a desired layout, an

extensive training dataset is required, which is challenging to gather. We

present FFaceNeRF, a NeRF-based face editing technique that can overcome the

challenge of limited user control due to the use of fixed mask layouts. Our

method employs a geometry adapter with feature injection, allowing for

effective manipulation of geometry attributes. Additionally, we adopt latent

mixing for tri-plane augmentation, which enables training with a few samples.

This facilitates rapid model adaptation to desired mask layouts, crucial for

applications in fields like personalized medical imaging or creative face

editing. Our comparative evaluations demonstrate that FFaceNeRF surpasses

existing mask based face editing methods in terms of flexibility, control, and

generated image quality, paving the way for future advancements in customized

and high-fidelity 3D face editing. The code is available on the

{\href{this https URL}{project-page}}.

There are no more papers matching your filters at the moment.