Industrial Technology Research Institute

26 Jun 2025

We propose a hybrid quantum-classical reinforcement learning framework for sector rotation in the Taiwan stock market. Our system employs Proximal Policy Optimization (PPO) as the backbone algorithm and integrates both classical architectures (LSTM, Transformer) and quantum-enhanced models (QNN, QRWKV, QASA) as policy and value networks. An automated feature engineering pipeline extracts financial indicators from capital share data to ensure consistent model input across all configurations. Empirical backtesting reveals a key finding: although quantum-enhanced models consistently achieve higher training rewards, they underperform classical models in real-world investment metrics such as cumulative return and Sharpe ratio. This discrepancy highlights a core challenge in applying reinforcement learning to financial domains -- namely, the mismatch between proxy reward signals and true investment objectives. Our analysis suggests that current reward designs may incentivize overfitting to short-term volatility rather than optimizing risk-adjusted returns. This issue is compounded by the inherent expressiveness and optimization instability of quantum circuits under Noisy Intermediate-Scale Quantum (NISQ) constraints. We discuss the implications of this reward-performance gap and propose directions for future improvement, including reward shaping, model regularization, and validation-based early stopping. Our work offers a reproducible benchmark and critical insights into the practical challenges of deploying quantum reinforcement learning in real-world finance.

14 Mar 2025

Researchers at Academia Sinica, in collaboration with Qolab and Applied Materials, developed a lift-off-free "Window Junction" (WJ) fabrication process for superconducting qubits, compatible with industrial semiconductor manufacturing. This method, which uses a sacrificial SiO2 scaffold and vapor HF removal, achieved qubit energy relaxation times (T1) up to 57 μs, enhancing both scalability and coherence by minimizing defect introduction.

In this paper, we introduce a novel geometry-aware self-training framework for room layout estimation models on unseen scenes with unlabeled data. Our approach utilizes a ray-casting formulation to aggregate multiple estimates from different viewing positions, enabling the computation of reliable pseudo-labels for self-training. In particular, our ray-casting approach enforces multi-view consistency along all ray directions and prioritizes spatial proximity to the camera view for geometry reasoning. As a result, our geometry-aware pseudo-labels effectively handle complex room geometries and occluded walls without relying on assumptions such as Manhattan World or planar room walls. Evaluation on publicly available datasets, including synthetic and real-world scenarios, demonstrates significant improvements in current state-of-the-art layout models without using any human annotation.

Recent years have seen a shift towards learning-based methods for trajectory prediction, with challenges remaining in addressing uncertainty and capturing multi-modal distributions. This paper introduces Temporal Ensembling with Learning-based Aggregation, a meta-algorithm designed to mitigate the issue of missing behaviors in trajectory prediction, which leads to inconsistent predictions across consecutive frames. Unlike conventional model ensembling, temporal ensembling leverages predictions from nearby frames to enhance spatial coverage and prediction diversity. By confirming predictions from multiple frames, temporal ensembling compensates for occasional errors in individual frame predictions. Furthermore, trajectory-level aggregation, often utilized in model ensembling, is insufficient for temporal ensembling due to a lack of consideration of traffic context and its tendency to assign candidate trajectories with incorrect driving behaviors to final predictions. We further emphasize the necessity of learning-based aggregation by utilizing mode queries within a DETR-like architecture for our temporal ensembling, leveraging the characteristics of predictions from nearby frames. Our method, validated on the Argoverse 2 dataset, shows notable improvements: a 4% reduction in minADE, a 5% decrease in minFDE, and a 1.16% reduction in the miss rate compared to the strongest baseline, QCNet, highlighting its efficacy and potential in autonomous driving.

10 Jan 2025

The Huge growth in the usage of web applications has raised concerns regarding their security vulnerabilities, which in turn pushes toward robust security testing tools. This study compares OWASP ZAP, the leading open-source web application vulnerability scanner, across its two most recent iterations. While comparing their performance to the OWASP Benchmark, the study evaluates their efficiency in spotting vulnerabilities in the purposefully vulnerable application, OWASP Benchmark project. The research methodology involves conducting systematic scans of OWASP Benchmark using both v2.12.0 and v2.13.0 of OWASP ZAP. The OWASP Benchmark provides a standardized framework to evaluate the scanner's abilities in identifying security flaws, Insecure Cookies, Path traversal, SQL injection, and more. Results obtained from this benchmark comparison offer valuable insights into the strengths and weaknesses of each version of the tool. This study aids in web application security testing by shedding light on how well-known scanners work at spotting vulnerabilities. The knowledge gained from this study can assist security professionals and developers in making informed decisions to support their web application security status. In conclusion, this study comprehensively analyzes ZAP's capabilities in detecting security flaws using OWASP Benchmark v1.2. The findings add to the continuing debates about online application security tools and establish the framework for future studies and developments in the research field of web application security testing.

The prevalence of the powerful multilingual models, such as Whisper, has significantly advanced the researches on speech recognition. However, these models often struggle with handling the code-switching setting, which is essential in multilingual speech recognition. Recent studies have attempted to address this setting by separating the modules for different languages to ensure distinct latent representations for languages. Some other methods considered the switching mechanism based on language identification. In this study, a new attention-guided adaptation is proposed to conduct parameter-efficient learning for bilingual ASR. This method selects those attention heads in a model which closely express language identities and then guided those heads to be correctly attended with their corresponding languages. The experiments on the Mandarin-English code-switching speech corpus show that the proposed approach achieves a 14.2% mixed error rate, surpassing state-of-the-art method, where only 5.6% additional parameters over Whisper are trained.

26 Feb 2024

In recent years, advanced deep neural networks have required a large number of parameters for training. Therefore, finding a method to reduce the number of parameters has become crucial for achieving efficient training. This work proposes a training scheme for classical neural networks (NNs) that utilizes the exponentially large Hilbert space of a quantum system. By mapping a classical NN with M parameters to a quantum neural network (QNN) with O(polylog(M)) rotational gate angles, we can significantly reduce the number of parameters. These gate angles can be updated to train the classical NN. Unlike existing quantum machine learning (QML) methods, the results obtained from quantum computers using our approach can be directly used on classical computers. Numerical results on the MNIST and Iris datasets are presented to demonstrate the effectiveness of our approach. Additionally, we investigate the effects of deeper QNNs and the number of measurement shots for the QNN, followed by the theoretical perspective of the proposed method. This work opens a new branch of QML and offers a practical tool that can greatly enhance the influence of QML, as the trained QML results can benefit classical computing in our daily lives.

Camera calibration is a crucial prerequisite in many applications of computer

vision. In this paper, a new, geometry-based camera calibration technique is

proposed, which resolves two main issues associated with the widely used

Zhang's method: (i) the lack of guidelines to avoid outliers in the computation

and (ii) the assumption of fixed camera focal length. The proposed approach is

based on the closed-form solution of principal lines (PLs), with their

intersection being the principal point while each PL can concisely represent

relative orientation/position (up to one degree of freedom for both) between a

special pair of coordinate systems of image plane and calibration pattern. With

such analytically tractable image features, computations associated with the

calibration are greatly simplified, while the guidelines in (i) can be

established intuitively. Experimental results for synthetic and real data show

that the proposed approach does compare favorably with Zhang's method, in terms

of correctness, robustness, and flexibility, and addresses issues (i) and (ii)

satisfactorily.

27 Jun 2025

Motor-actuated pendulums have been established as arguably the most common laboratory prototypes used in control system education because of the relevance to robot manipulator control in industry. Meanwhile, multi-rotor drones like quadcopters have become popular in industrial applications but have not been broadly employed in control education laboratory. Platforms with pendulums and multi-rotor copters present classical yet intriguing multi-degree of freedom (DoF) dynamics and coordinate systems for the control system investigation. In this paper, we introduce a novel control platform in which a 2-DoF pendulum capable of azimuth and elevation rotation is actuated through vectored thrust generated by a quadcopter. Designed as a benchmark for mechatronics and nonlinear control education and research, the system integrates detailed mechatronic implementation with different control strategies. Specifically, we apply and compare small perturbation linearization (SPL), state feedback linearization (SFL), and partial feedback linearization (PFL) to the nonlinear system dynamics. The performances are evaluated by time specifications of step response and Root-Mean-Square (RMS) error of trajectory tracking. The robustness of the closed-loop system is validated under external disturbances, and both simulation and experimental results are presented to highlight the strengths and limitations of the nonlinear model-based control approaches.

30 Jun 2007

A quantum master equation is obtained for identical fermions by including a relaxation term in addition to the mean-field Hamiltonian. [Huang C F and Huang K N 2004 Chinese J. Phys. 42 221; Gebauer R and Car R 2004 Phys. Rev. B 70 125324] It is proven in this paper that both the positivity and Pauli's exclusion principle are preserved under this equation when there exists an upper bound for the transition rate. Such an equation can be generalized to model BCS-type quasiparticles, and is reduced to a Markoff master equation of Lindblad form in the low-density limit with respect to particles or holes.

18 Jan 2022

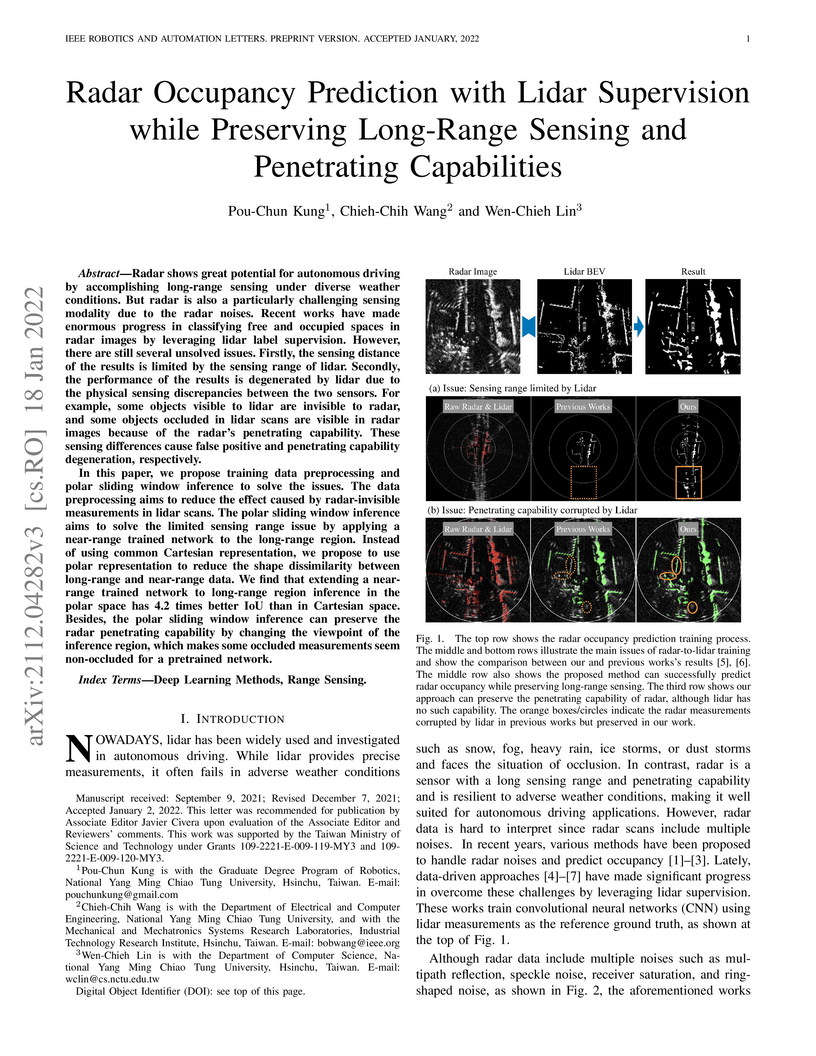

Radar shows great potential for autonomous driving by accomplishing long-range sensing under diverse weather conditions. But radar is also a particularly challenging sensing modality due to the radar noises. Recent works have made enormous progress in classifying free and occupied spaces in radar images by leveraging lidar label supervision. However, there are still several unsolved issues. Firstly, the sensing distance of the results is limited by the sensing range of lidar. Secondly, the performance of the results is degenerated by lidar due to the physical sensing discrepancies between the two sensors. For example, some objects visible to lidar are invisible to radar, and some objects occluded in lidar scans are visible in radar images because of the radar's penetrating capability. These sensing differences cause false positive and penetrating capability degeneration, respectively.

In this paper, we propose training data preprocessing and polar sliding window inference to solve the issues. The data preprocessing aims to reduce the effect caused by radar-invisible measurements in lidar scans. The polar sliding window inference aims to solve the limited sensing range issue by applying a near-range trained network to the long-range region. Instead of using common Cartesian representation, we propose to use polar representation to reduce the shape dissimilarity between long-range and near-range data. We find that extending a near-range trained network to long-range region inference in the polar space has 4.2 times better IoU than in Cartesian space. Besides, the polar sliding window inference can preserve the radar penetrating capability by changing the viewpoint of the inference region, which makes some occluded measurements seem non-occluded for a pretrained network.

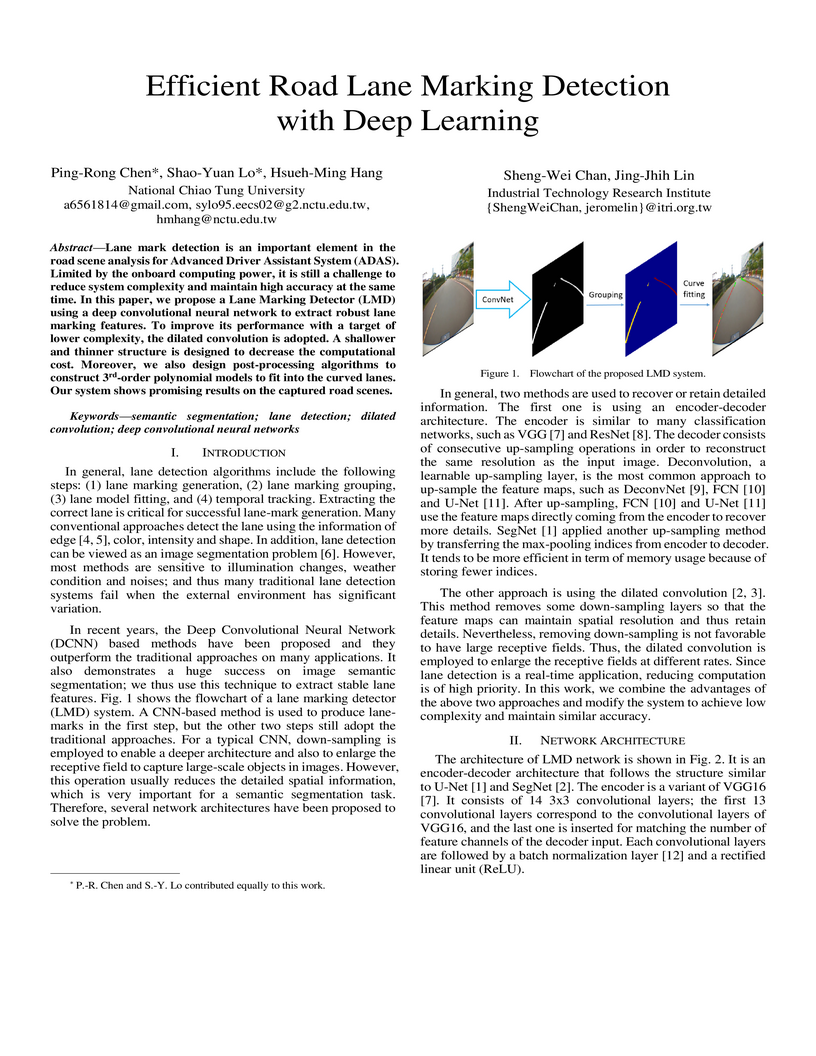

Lane mark detection is an important element in the road scene analysis for

Advanced Driver Assistant System (ADAS). Limited by the onboard computing

power, it is still a challenge to reduce system complexity and maintain high

accuracy at the same time. In this paper, we propose a Lane Marking Detector

(LMD) using a deep convolutional neural network to extract robust lane marking

features. To improve its performance with a target of lower complexity, the

dilated convolution is adopted. A shallower and thinner structure is designed

to decrease the computational cost. Moreover, we also design post-processing

algorithms to construct 3rd-order polynomial models to fit into the curved

lanes. Our system shows promising results on the captured road scenes.

A deep reinforcement learning system enables quadcopters to perform 3D obstacle avoidance in simulated environments, demonstrating an 86% obstacle avoidance rate in unseen test areas. This approach combines a goal-seeking navigation function with a Deep Q-Network for dynamic collision avoidance, utilizing the quadcopter's full 3D mobility.

Stochastic diffusion processes are pervasive in nature, from the seemingly

erratic Brownian motion to the complex interactions of synaptically-coupled

spiking neurons. Recently, drawing inspiration from Langevin dynamics,

neuromorphic diffusion models were proposed and have become one of the major

breakthroughs in the field of generative artificial intelligence. Unlike

discriminative models that have been well developed to tackle classification or

regression tasks, diffusion models as well as other generative models such as

ChatGPT aim at creating content based upon contexts learned. However, the more

complex algorithms of these models result in high computational costs using

today's technologies, creating a bottleneck in their efficiency, and impeding

further development. Here, we develop a spintronic voltage-controlled

magnetoelectric memory hardware for the neuromorphic diffusion process. The

in-memory computing capability of our spintronic devices goes beyond current

Von Neumann architecture, where memory and computing units are separated.

Together with the non-volatility of magnetic memory, we can achieve high-speed

and low-cost computing, which is desirable for the increasing scale of

generative models in the current era. We experimentally demonstrate that the

hardware-based true random diffusion process can be implemented for image

generation and achieve comparable image quality to software-based training as

measured by the Frechet inception distance (FID) score, achieving ~10^3 better

energy-per-bit-per-area over traditional hardware.

26 Mar 2025

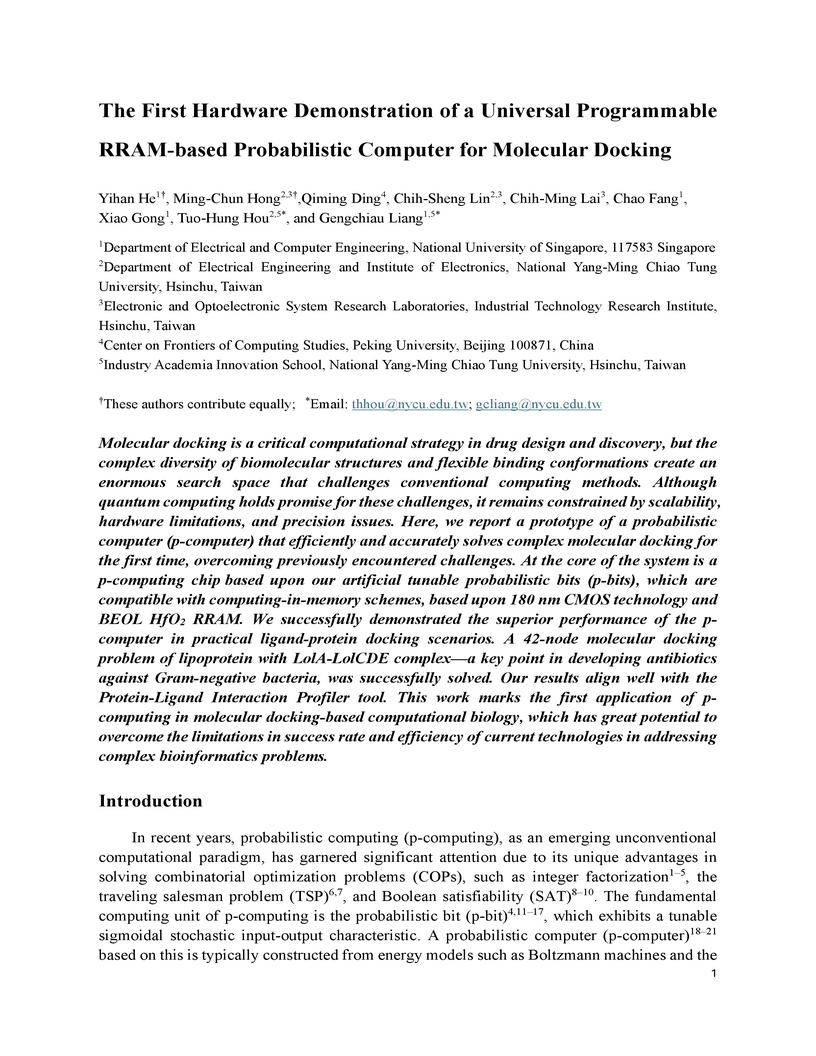

Molecular docking is a critical computational strategy in drug design and

discovery, but the complex diversity of biomolecular structures and flexible

binding conformations create an enormous search space that challenges

conventional computing methods. Although quantum computing holds promise for

these challenges, it remains constrained by scalability, hardware limitations,

and precision issues. Here, we report a prototype of a probabilistic computer

(p-computer) that efficiently and accurately solves complex molecular docking

for the first time, overcoming previously encountered challenges. At the core

of the system is a p-computing chip based upon our artificial tunable

probabilistic bits (p-bits), which are compatible with computing-in-memory

schemes, based upon 180 nm CMOS technology and BEOL HfO2 RRAM. We successfully

demonstrated the superior performance of the p-computer in practical

ligand-protein docking scenarios. A 42-node molecular docking problem of

lipoprotein with LolA-LolCDE complex-a key point in developing antibiotics

against Gram-negative bacteria, was successfully solved. Our results align well

with the Protein-Ligand Interaction Profiler tool. This work marks the first

application of p-computing in molecular docking-based computational biology,

which has great potential to overcome the limitations in success rate and

efficiency of current technologies in addressing complex bioinformatics

problems.

The next leap on the internet has already started as Semantic Web. At its

core, Semantic Web transforms the document oriented web to a data oriented web

enriched with semantics embedded as metadata. This change in perspective

towards the web offers numerous benefits for vast amount of data intensive

industries that are bound to the web and its related applications. The

industries are diverse as they range from Oil & Gas exploration to the

investigative journalism, and everything in between. This paper discusses eight

different industries which currently reap the benefits of Semantic Web. The

paper also offers a future outlook into Semantic Web applications and discusses

the areas in which Semantic Web would play a key role in the future.

13 Mar 2025

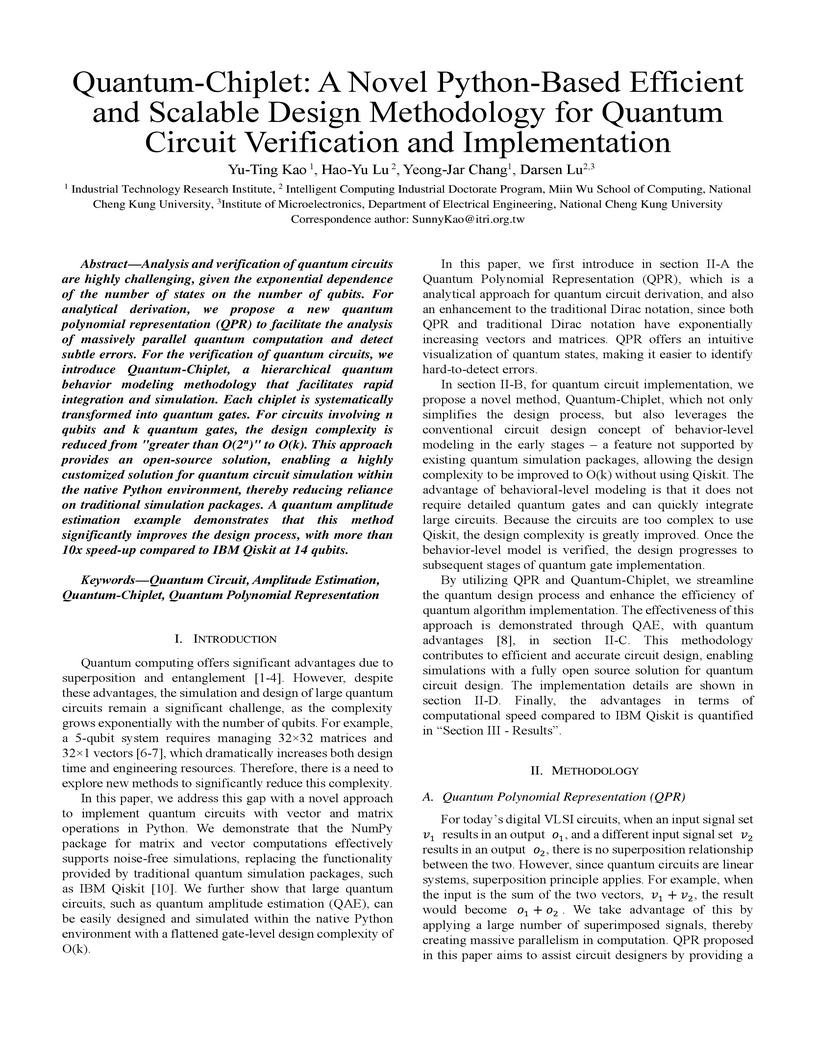

Analysis and verification of quantum circuits are highly challenging, given

the exponential dependence of the number of states on the number of qubits. For

analytical derivation, we propose a new quantum polynomial representation (QPR)

to facilitate the analysis of massively parallel quantum computation and detect

subtle errors. For the verification of quantum circuits, we introduce

Quantum-Chiplet, a hierarchical quantum behavior modeling methodology that

facilitates rapid integration and simulation. Each chiplet is systematically

transformed into quantum gates. For circuits involving n qubits and k quantum

gates, the design complexity is reduced from "greater than O(2^n)" to O(k).

This approach provides an open-source solution, enabling a highly customized

solution for quantum circuit simulation within the native Python environment,

thereby reducing reliance on traditional simulation packages. A quantum

amplitude estimation example demonstrates that this method significantly

improves the design process, with more than 10x speed-up compared to IBM Qiskit

at 14 qubits.

This study addresses the issue of leveraging federated learning to improve data privacy and performance in IVF embryo selection. The EM (Expectation-Maximization) algorithm is incorporated into deep learning models to form a federated learning framework for quality evaluation of blastomere cleavage using two-dimensional images. The framework comprises a server site and several client sites characterized in that each is locally trained with an EM algorithm. Upon the completion of the local EM training, a separate 5-mode mixture distribution is generated for each client, the clients' distribution statics are then uploaded to the server site and aggregated therein to produce a global (sharing) 5-mode distribution. During the inference phase, each client uses image classifiers and an instance segmentor, assisted by the global 5-mode distribution acting as a calibrator to (1) identify the absolute cleavage timing of blastomere, i.e., tPNa, tPNf, t2, t3, t4, t5, t6, t7, and t8, (2) track the cleavage process of blastomeres to detect the irregular cleavage patterns, and (3) assess the symmetry degree of blastomeres. Experimental results show that the proposed method outperforms commercial Time-Lapse Incubators in reducing the average error of timing prediction by twofold. The proposed facilitate frameworks the adaptability and scalability of classifiers and segmentor to data variability associated with patients in different locations or countries.

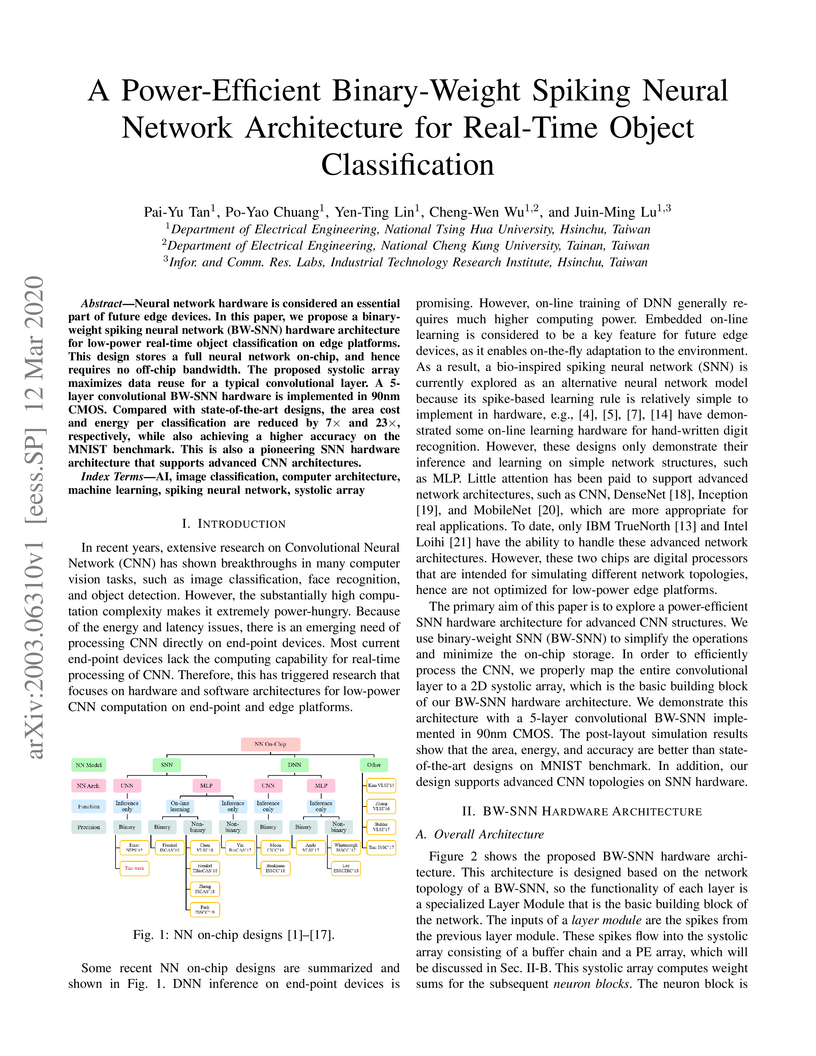

Neural network hardware is considered an essential part of future edge devices. In this paper, we propose a binary-weight spiking neural network (BW-SNN) hardware architecture for low-power real-time object classification on edge platforms. This design stores a full neural network on-chip, and hence requires no off-chip bandwidth. The proposed systolic array maximizes data reuse for a typical convolutional layer. A 5-layer convolutional BW-SNN hardware is implemented in 90nm CMOS. Compared with state-of-the-art designs, the area cost and energy per classification are reduced by 7× and 23×, respectively, while also achieving a higher accuracy on the MNIST benchmark. This is also a pioneering SNN hardware architecture that supports advanced CNN architectures.

19 Jun 2025

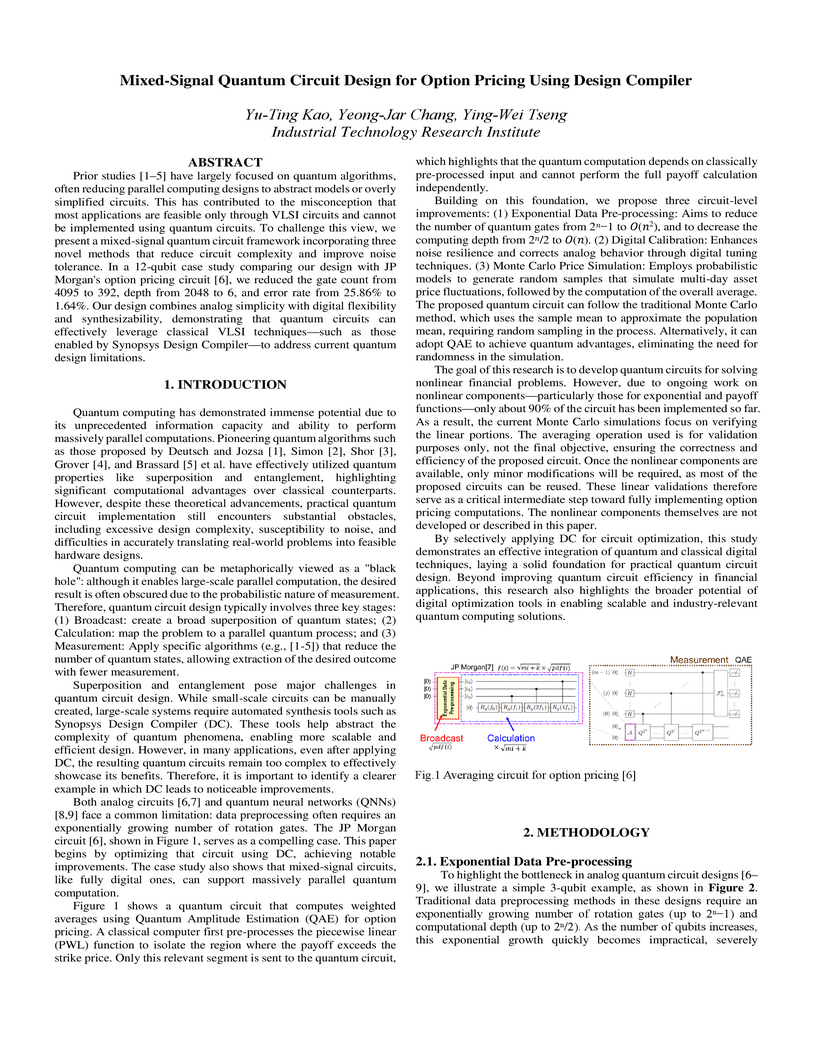

Prior studies have largely focused on quantum algorithms, often reducing parallel computing designs to abstract models or overly simplified circuits. This has contributed to the misconception that most applications are feasible only through VLSI circuits and cannot be implemented using quantum circuits. To challenge this view, we present a mixed-signal quantum circuit framework incorporating three novel methods that reduce circuit complexity and improve noise tolerance. In a 12 qubit case study comparing our design with JP Morgan's option pricing circuit, we reduced the gate count from 4095 to 392, depth from 2048 to 6, and error rate from 25.86\% to 1.64\%. Our design combines analog simplicity with digital flexibility and synthesizability, demonstrating that quantum circuits can effectively leverage classical VLSI techniques, such as those enabled by Synopsys Design Compiler to address current quantum design limitations.

There are no more papers matching your filters at the moment.