Israel Institute of Technology

Monash UniversityLeipzig University

Monash UniversityLeipzig University Northeastern University

Northeastern University Carnegie Mellon University

Carnegie Mellon University New York University

New York University Stanford University

Stanford University McGill University

McGill University University of British ColumbiaCSIRO’s Data61IBM Research

University of British ColumbiaCSIRO’s Data61IBM Research Columbia UniversityScaDS.AI

Columbia UniversityScaDS.AI Hugging Face

Hugging Face Johns Hopkins UniversityWeizmann Institute of ScienceThe Alan Turing InstituteSea AI Lab

Johns Hopkins UniversityWeizmann Institute of ScienceThe Alan Turing InstituteSea AI Lab MIT

MIT Queen Mary University of LondonUniversity of VermontSAP

Queen Mary University of LondonUniversity of VermontSAP ServiceNowIsrael Institute of TechnologyWellesley CollegeEleuther AIRobloxUniversity ofTelefonica I+DTechnical University ofNotre DameMunichDiscover Dollar Pvt LtdUnfoldMLAllahabadTechnion –Saama AI Research LabTolokaForschungszentrum J",

ServiceNowIsrael Institute of TechnologyWellesley CollegeEleuther AIRobloxUniversity ofTelefonica I+DTechnical University ofNotre DameMunichDiscover Dollar Pvt LtdUnfoldMLAllahabadTechnion –Saama AI Research LabTolokaForschungszentrum J",StarCoder and StarCoderBase are large language models for code developed by The BigCode community, demonstrating state-of-the-art performance among open-access models on Python code generation, achieving 33.6% pass@1 on HumanEval, and strong multi-language capabilities, all while integrating responsible AI practices.

Time series forecasting (TSF) possesses great practical values in various fields, including power and energy, transportation, etc. TSF methods have been studied based on knowledge from classical statistics to modern deep learning. Yet, all of them were developed based on one fundamental concept, the numerical data fitting. Thus, the models developed have long been known to be problem-specific and lacking application generalizability. Practitioners expect a TSF foundation model that serves TSF tasks in different applications. The central question is then how to develop such a TSF foundation model. This paper offers one pioneering study in the TSF foundation model development method and proposes a vision intelligence-powered framework, ViTime, for the first time. ViTime fundamentally shifts TSF from numerical fitting to operations based on a binary image-based time series metric space and naturally supports both point and probabilistic forecasting. We also provide rigorous theoretical analyses of ViTime, including quantization-induced system error bounds and principled strategies for optimal parameter selection. Furthermore, we propose RealTS, an innovative synthesis algorithm generating diverse and realistic training samples, effectively enriching the training data and significantly enhancing model generalizability. Extensive experiments demonstrate ViTime's state-of-the-art performance. In zero-shot scenarios, ViTime outperforms TimesFM by 9-15\%. With just 10\% fine-tuning data, ViTime surpasses both leading foundation models and fully-supervised benchmarks, a gap that widens with 100\% fine-tuning. ViTime also exhibits exceptional robustness, effectively handling missing data and outperforming TimesFM by 20-30\% under various data perturbations, validating the power of its visual space data operation paradigm.

Researchers from Technion, Google, and Harvard University introduce Principal-Agent Reinforcement Learning (PARL), a framework that integrates principal-agent theory with deep reinforcement learning to orchestrate AI agents using dynamic contracts. This method successfully achieves optimal social welfare in sequential social dilemmas with average principal payments of approximately 30% of the social welfare, demonstrating high agent compliance.

Running faster will only get you so far -- it is generally advisable to first understand where the roads lead, then get a car ...

The renaissance of machine learning (ML) and deep learning (DL) over the last decade is accompanied by an unscalable computational cost, limiting its advancement and weighing on the field in practice. In this thesis we take a systematic approach to address the algorithmic and methodological limitations at the root of these costs. We first demonstrate that DL training and pruning are predictable and governed by scaling laws -- for state of the art models and tasks, spanning image classification and language modeling, as well as for state of the art model compression via iterative pruning. Predictability, via the establishment of these scaling laws, provides the path for principled design and trade-off reasoning, currently largely lacking in the field. We then continue to analyze the sources of the scaling laws, offering an approximation-theoretic view and showing through the exploration of a noiseless realizable case that DL is in fact dominated by error sources very far from the lower error limit. We conclude by building on the gained theoretical understanding of the scaling laws' origins. We present a conjectural path to eliminate one of the current dominant error sources -- through a data bandwidth limiting hypothesis and the introduction of Nyquist learners -- which can, in principle, reach the generalization error lower limit (e.g. 0 in the noiseless case), at finite dataset size.

08 Jan 2025

The effects of Reynolds number across Re=1000, 2500, 5000, and 10000 on separated flow over a two-dimensional NACA0012 airfoil at an angle of attack of α=14∘ are investigated through the biglobal resolvent analysis. We identify modal structures and energy amplifications over a range of frequency, spanwise wavenumber, and discount parameter, providing insights across various timescales. Using temporal discounting, we find that the shear layer dynamics dominates over short time horizons, while the wake dynamics becomes the primary amplification mechanism over long time horizons. Spanwise effects also appear over long time horizon, sustained by low frequencies. At a fixed timescale, we investigate the influence of Reynolds number on response and forcing mode structures, as well as the energy gain over different frequencies. Across all Reynolds numbers, the response modes shift from wake-dominated structures at low frequencies to shear layer-dominated structures at higher frequencies. The frequency at which the dominant mechanism changes is independent of the Reynolds number. The response mode structures show similarities across different Reynolds numbers, with local streamwise wavelengths only depending on frequency. Comparisons at a different angle of attack (α=9∘) show that the transition from wake to shear layer dynamics with increasing frequency only occurs if the unsteady flow is three-dimensional. We also study the dominant frequencies associated with wake and shear layer dynamics across the angles of attack and Reynolds numbers, and present the characteristic scaling for each mechanism.

We propose to analyse the conditional distributional treatment effect (CoDiTE), which, in contrast to the more common conditional average treatment effect (CATE), is designed to encode a treatment's distributional aspects beyond the mean. We first introduce a formal definition of the CoDiTE associated with a distance function between probability measures. Then we discuss the CoDiTE associated with the maximum mean discrepancy via kernel conditional mean embeddings, which, coupled with a hypothesis test, tells us whether there is any conditional distributional effect of the treatment. Finally, we investigate what kind of conditional distributional effect the treatment has, both in an exploratory manner via the conditional witness function, and in a quantitative manner via U-statistic regression, generalising the CATE to higher-order moments. Experiments on synthetic, semi-synthetic and real datasets demonstrate the merits of our approach.

31 Aug 2021

Many natural language inference (NLI) datasets contain biases that allow models to perform well by only using a biased subset of the input, without considering the remainder features. For instance, models are able to make a classification decision by only using the hypothesis, without learning the true relationship between it and the premise. These structural biases lead discriminative models to learn unintended superficial features and to generalize poorly out of the training distribution. In this work, we reformulate the NLI task as a generative task, where a model is conditioned on the biased subset of the input and the label and generates the remaining subset of the input. We show that by imposing a uniform prior, we obtain a provably unbiased model. Through synthetic experiments, we find that this approach is highly robust to large amounts of bias. We then demonstrate empirically on two types of natural bias that this approach leads to fully unbiased models in practice. However, we find that generative models are difficult to train and they generally perform worse than discriminative baselines. We highlight the difficulty of the generative modeling task in the context of NLI as a cause for this worse performance. Finally, by fine-tuning the generative model with a discriminative objective, we reduce the performance gap between the generative model and the discriminative baseline, while allowing for a small amount of bias.

The subject of this paper is optimisation of weak lensing tomography: We

carry out numerical minimisation of a measure of total statistical error as a

function of the redshifts of the tomographic bin edges by means of a

Nelder-Mead algorithm in order to optimise the sensitivity of weak lensing with

respect to different optimisation targets. Working under the assumption of a

Gaussian likelihood for the parameters of a w0waCDM-model and using

Euclid's conservative survey specifications, we compare an equipopulated,

equidistant and optimised bin setting and find that in general the

equipopulated setting is very close to the optimal one, while an equidistant

setting is far from optimal and also suffers from the ad hoc choice of a

maximum redshift. More importantly, we find that nearly saturated information

content can be gained using already few tomographic bins. This is crucial for

photometric redshift surveys with large redshift errors. We consider a large

range of targets for the optimisation process that can be computed from the

parameter covariance (or equivalently, from the Fisher-matrix), extend these

studies to information entropy measures such as the Kullback-Leibler-divergence

and conclude that in many cases equipopulated binning yields results close to

the optimum, which we support by analytical arguments.

21 Sep 2025

Social scientists have argued that autonomous vehicles (AVs) need to act as effective social agents; they have to respond implicitly to other drivers' behaviors as human drivers would. In this paper, we investigate how contingent driving behavior in AVs influences human drivers' experiences. We compared three algorithmic driving models: one trained on human driving data that responds to interactions (a familiar contingent behavior) and two artificial models that intend to either always-yield or never-yield regardless of how the interaction unfolds (non-contingent behaviors). Results show a statistically significant relationship between familiar contingent behavior and positive driver experiences, reducing stress while promoting the decisive interactions that mitigate driver hesitance. The direct relationship between familiar contingency and positive experience indicates that AVs should incorporate socially familiar driving patterns through contextually-adaptive algorithms to improve the chances of successful deployment and acceptance in mixed human-AV traffic environments.

Researchers from Technion – Israel Institute of Technology developed CODIP, a test-time method that enhances the adversarial robustness of pre-trained Adversarial-Trained (AT) image classifiers. CODIP employs class-conditioned image transformations guided by perceptually aligned gradients and a distance-based prediction rule, yielding robust accuracy improvements of up to 26% on ImageNet across diverse attack types and model architectures.

We study parameter estimation and asymptotic inference for sparse nonlinear

regression. More specifically, we assume the data are given by $y = f( x^\top

\beta^* ) + \epsilon,wherefisnonlinear.Torecover\beta^*$, we propose

an ℓ1-regularized least-squares estimator. Unlike classical linear

regression, the corresponding optimization problem is nonconvex because of the

nonlinearity of f. In spite of the nonconvexity, we prove that under mild

conditions, every stationary point of the objective enjoys an optimal

statistical rate of convergence. In addition, we provide an efficient algorithm

that provably converges to a stationary point. We also access the uncertainty

of the obtained estimator. Specifically, based on any stationary point of the

objective, we construct valid hypothesis tests and confidence intervals for the

low dimensional components of the high-dimensional parameter β∗.

Detailed numerical results are provided to back up our theory.

Hyperparameter optimization is both a practical issue and an interesting

theoretical problem in training of deep architectures. Despite many recent

advances the most commonly used methods almost universally involve training

multiple and decoupled copies of the model, in effect sampling the

hyperparameter space. We show that at a negligible additional computational

cost, results can be improved by sampling nonlocal paths instead of points in

hyperparameter space. To this end we interpret hyperparameters as controlling

the level of correlated noise in training, which can be mapped to an effective

temperature. The usually independent instances of the model are coupled and

allowed to exchange their hyperparameters throughout the training using the

well established parallel tempering technique of statistical physics. Each

simulation corresponds then to a unique path, or history, in the joint

hyperparameter/model-parameter space. We provide empirical tests of our method,

in particular for dropout and learning rate optimization. We observed faster

training and improved resistance to overfitting and showed a systematic

decrease in the absolute validation error, improving over benchmark results.

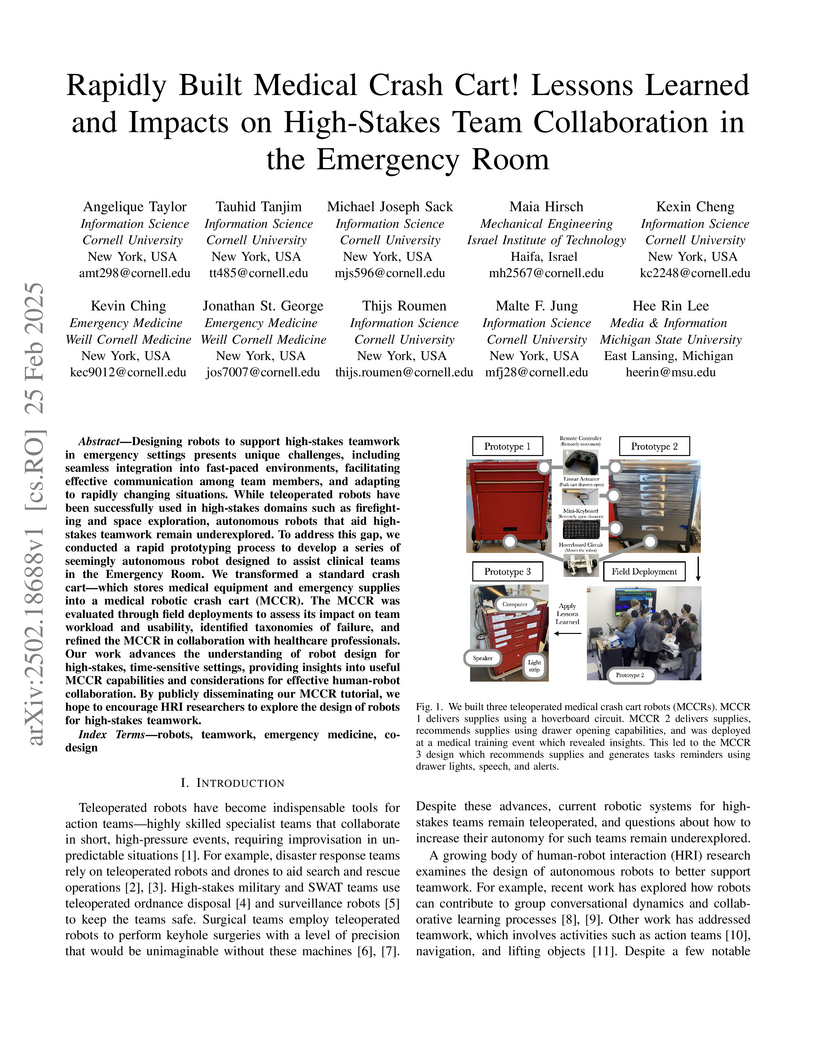

Designing robots to support high-stakes teamwork in emergency settings

presents unique challenges, including seamless integration into fast-paced

environments, facilitating effective communication among team members, and

adapting to rapidly changing situations. While teleoperated robots have been

successfully used in high-stakes domains such as firefighting and space

exploration, autonomous robots that aid highs-takes teamwork remain

underexplored. To address this gap, we conducted a rapid prototyping process to

develop a series of seemingly autonomous robot designed to assist clinical

teams in the Emergency Room. We transformed a standard crash cart--which stores

medical equipment and emergency supplies into a medical robotic crash cart

(MCCR). The MCCR was evaluated through field deployments to assess its impact

on team workload and usability, identified taxonomies of failure, and refined

the MCCR in collaboration with healthcare professionals. Our work advances the

understanding of robot design for high-stakes, time-sensitive settings,

providing insights into useful MCCR capabilities and considerations for

effective human-robot collaboration. By publicly disseminating our MCCR

tutorial, we hope to encourage HRI researchers to explore the design of robots

for high-stakes teamwork.

Fault detection and isolation is an area of engineering dealing with designing on-line protocols for systems that allow one to identify the existence of faults, pinpoint their exact location, and overcome them. We consider the case of multi-agent systems, where faults correspond to the disappearance of links in the underlying graph, simulating a communication failure between the corresponding agents. We study the case in which the agents and controllers are maximal equilibrium-independent passive (MEIP), and use the known connection between steady-states of these multi-agent systems and network optimization theory. We first study asymptotic methods of differentiating the faultless system from its faulty versions by studying their steady-state outputs. We explain how to apply the asymptotic differentiation to detect and isolate communication faults, with graph-theoretic guarantees on the number of faults that can be isolated, assuming the existence of a "convergence assertion protocol", a data-driven method of asserting that a multi-agent system converges to a conjectured limit. We then construct two data-driven model-based convergence assertion protocols. We demonstrate our results by a case study.

Networks of excitatory and inhibitory (EI) neurons form a canonical circuit

in the brain. Seminal theoretical results on dynamics of such networks are

based on the assumption that synaptic strengths depend on the type of neurons

they connect, but are otherwise statistically independent. Recent synaptic

physiology datasets however highlight the prominence of specific connectivity

patterns that go well beyond what is expected from independent connections.

While decades of influential research have demonstrated the strong role of the

basic EI cell type structure, to which extent additional connectivity features

influence dynamics remains to be fully determined. Here we examine the effects

of pairwise connectivity motifs on the linear dynamics in EI networks using an

analytical framework that approximates the connectivity in terms of low-rank

structures. This low-rank approximation is based on a mathematical derivation

of the dominant eigenvalues of the connectivity matrix and predicts the impact

on responses to external inputs of connectivity motifs and their interactions

with cell-type structure. Our results reveal that a particular pattern of

connectivity, chain motifs, have a much stronger impact on dominant eigenmodes

than other pairwise motifs. An overrepresentation of chain motifs induces a

strong positive eigenvalue in inhibition-dominated networks and generates a

potential instability that requires revisiting the classical

excitation-inhibition balance criteria. Examining effects of external inputs,

we show that chain motifs can on their own induce paradoxical responses where

an increased input to inhibitory neurons leads to a decrease in their activity

due to the recurrent feedback. These findings have direct implications for the

interpretation of experiments in which responses to optogenetic perturbations

are measured and used to infer the dynamical regime of cortical circuits.

One-Counter Nets (OCNs) are finite-state automata equipped with a counter

that is not allowed to become negative, but does not have zero tests. Their

simplicity and close connection to various other models (e.g., VASS, Counter

Machines and Pushdown Automata) make them an attractive model for studying the

border of decidability for the classical decision problems.

The deterministic fragment of OCNs (DOCNs) typically admits more tractable

decision problems, and while these problems and the expressive power of DOCNs

have been studied, the determinization problem, namely deciding whether an OCN

admits an equivalent DOCN, has not received attention.

We introduce four notions of OCN determinizability, which arise naturally due

to intricacies in the model, and specifically, the interpretation of the

initial counter value. We show that in general, determinizability is

undecidable under most notions, but over a singleton alphabet (i.e., 1

dimensional VASS) one definition becomes decidable, and the rest become

trivial, in that there is always an equivalent DOCN.

This paper proposes an attack-independent (non-adversarial training)

technique for improving adversarial robustness of neural network models, with

minimal loss of standard accuracy. We suggest creating a neighborhood around

each training example, such that the label is kept constant for all inputs

within that neighborhood. Unlike previous work that follows a similar

principle, we apply this idea by extending the training set with multiple

perturbations for each training example, drawn from within the neighborhood.

These perturbations are model independent, and remain constant throughout the

entire training process. We analyzed our method empirically on MNIST, SVHN, and

CIFAR-10, under different attacks and conditions. Results suggest that the

proposed approach improves standard accuracy over other defenses while having

increased robustness compared to vanilla adversarial training.

We address the prominent communication bottleneck in federated learning (FL). We specifically consider stochastic FL, in which models or compressed model updates are specified by distributions rather than deterministic parameters. Stochastic FL offers a principled approach to compression, and has been shown to reduce the communication load under perfect downlink transmission from the federator to the clients. However, in practice, both the uplink and downlink communications are constrained. We show that bi-directional compression for stochastic FL has inherent challenges, which we address by introducing BICompFL. Our BICompFL is experimentally shown to reduce the communication cost by an order of magnitude compared to multiple benchmarks, while maintaining state-of-the-art accuracies. Theoretically, we study the communication cost of BICompFL through a new analysis of an importance-sampling based technique, which exposes the interplay between uplink and downlink communication costs.

01 Jun 2018

The Earth-Moon system is suggested to have formed through a single giant collision, in which the Moon accreted from the impact-generated debris disk. However, such giant impacts are rare, and during its evolution the Earth experienced many more smaller impacts, producing smaller satellites that potentially coevolved. In the multiple-impact hypothesis of lunar formation, the current Moon was produced from the mergers of several smaller satellites (moonlets), each formed from debris disks produced by successive large impacts. In the Myrs between impacts, a pre-existing moonlet tidally evolves outward until a subsequent impact forms a new moonlet, at which point both moonlets will tidally evolve until a merger or system disruption. In this work, we examine the likelihood that pre-existing moonlets survive subsequent impact events, and explore the dynamics of Earth-moonlet systems that contain two moonlets generated Myrs apart. We demonstrate that pre-existing moonlets can tidally migrate outward, remain stable during subsequent impacts, and later merge with newly created moonlets (or re-collide with the Earth). Formation of the Moon from the mergers of several moonlets could therefore be a natural byproduct of the Earth's growth through multiple impacts. More generally, we examine the likelihood and consequences of Earth having prior moons, and find that the stability of moonlets against disruption by subsequent impacts implies that several large impacts could post-date Moon formation.

We investigate a novel global orientation regression approach for articulated objects using a deep convolutional neural network. This is integrated with an in-plane image derotation scheme, DeROT, to tackle the problem of per-frame fingertip detection in depth images. The method reduces the complexity of learning in the space of articulated poses which is demonstrated by using two distinct state-of-the-art learning based hand pose estimation methods applied to fingertip detection. Significant classification improvements are shown over the baseline implementation. Our framework involves no tracking, kinematic constraints or explicit prior model of the articulated object in hand. To support our approach we also describe a new pipeline for high accuracy magnetic annotation and labeling of objects imaged by a depth camera.

There are no more papers matching your filters at the moment.