JST Presto

This work introduces 3D Question Answering (3D-QA), a task where models answer free-form questions about 3D indoor scenes and localize relevant objects. Researchers at Kyoto University, ATR, and RIKEN AIP created ScanQA, a large-scale human-annotated dataset of over 41,000 question-answer pairs with 3D object groundings, and proposed an end-to-end baseline model that outperforms 2D and pipeline-based 3D approaches.

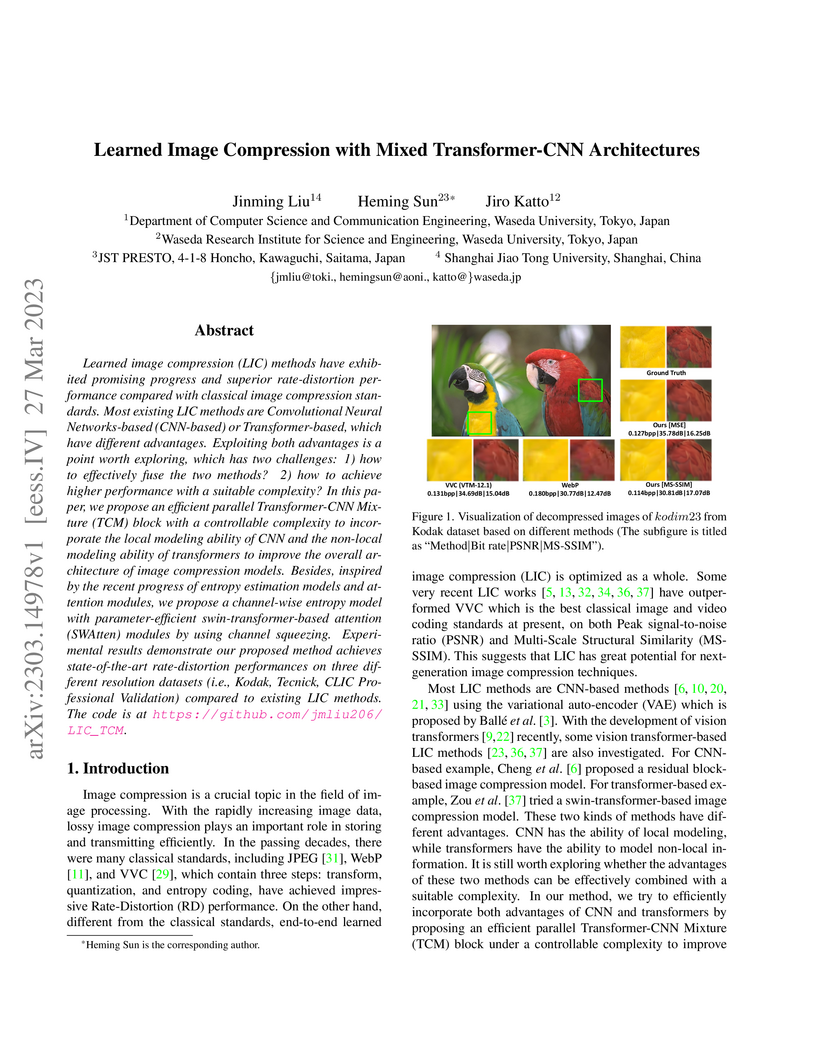

A learned image compression framework from Waseda University integrates Transformer-CNN Mixture blocks and a parameter-efficient Swin-Transformer attention module, achieving state-of-the-art rate-distortion performance. The method demonstrated over 11% BD-rate improvements compared to VVC across Kodak, Tecnick, and CLIC datasets.
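The BD-rate figure above is a Bjøntegaard delta: the average bitrate difference between two rate-distortion curves at equal quality. The abstract does not say which BD-rate tool or quality metric was used. The sketch below therefore assumes the classic formulation, which fits log10(rate) as a cubic in PSNR, integrates over the overlapping PSNR range, and converts the mean log-rate gap to a percentage. `bd_rate` and the sample points are illustrative, not results from the paper.

```python
import numpy as np

def bd_rate(rate_anchor, psnr_anchor, rate_test, psnr_test):
    """Bjontegaard delta rate (%) of a test codec relative to an anchor codec.

    Assumes the classic cubic-fit formulation: log10(rate) is fitted as a
    cubic polynomial in PSNR for each codec, both fits are integrated over the
    overlapping PSNR interval, and the mean log-rate gap becomes a percentage.
    """
    log_r_a = np.log10(np.asarray(rate_anchor, dtype=float))
    log_r_t = np.log10(np.asarray(rate_test, dtype=float))
    p_a = np.polyfit(psnr_anchor, log_r_a, 3)   # cubic fit for the anchor
    p_t = np.polyfit(psnr_test, log_r_t, 3)     # cubic fit for the test codec
    lo = max(min(psnr_anchor), min(psnr_test))  # overlapping quality range
    hi = min(max(psnr_anchor), max(psnr_test))
    int_a, int_t = np.polyint(p_a), np.polyint(p_t)
    area_a = np.polyval(int_a, hi) - np.polyval(int_a, lo)
    area_t = np.polyval(int_t, hi) - np.polyval(int_t, lo)
    avg_log_diff = (area_t - area_a) / (hi - lo)
    return (10 ** avg_log_diff - 1) * 100

anchor_rate = [0.2, 0.4, 0.8, 1.6]        # bits per pixel (made-up numbers)
psnr = [30.0, 33.0, 36.0, 39.0]           # dB (made-up numbers)
test_rate = [0.89 * r for r in anchor_rate]
print(round(bd_rate(anchor_rate, psnr, test_rate, psnr), 2))  # -11.0
```

A negative value means the test codec needs fewer bits than the anchor for the same PSNR. An improvement of "over 11%" therefore corresponds to a BD-rate below -11%.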

Testing under what conditions a product satisfies the desired properties is a fundamental problem in the manufacturing industry. If the condition and the property are regarded as the input and the output of a black-box function, respectively, this task can be interpreted as the problem called Level Set Estimation (LSE): the problem of identifying input regions where the function value is above (or below) a threshold. Although various methods for LSE problems have been developed so far, many issues remain to be solved before they can be used in practice. As one such issue, we consider the case where the input conditions cannot be controlled precisely, i.e., LSE problems under input uncertainty. We introduce a basic framework for handling input uncertainty in LSE problems, and then propose efficient methods with proper theoretical guarantees. The proposed methods and theories can be generally applied to a variety of challenges related to LSE under input uncertainty, such as cost-dependent input uncertainties and unknown input uncertainties. We apply the proposed methods to artificial and real data to demonstrate their applicability and effectiveness.
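One common way to make the LSE idea concrete is the confidence-bound classification rule used in Gaussian-process-based LSE. A point is labelled "above" once its lower bound clears the threshold and "below" once its upper bound falls under it. Otherwise it stays unclassified, and the most ambiguous such point is queried next. The sketch makes several assumptions:

- A surrogate model already supplies a posterior mean and standard deviation for each candidate.
- The bound-width parameter `beta` is a constant.

The rule does not model input uncertainty, which is the paper's actual contribution.

```python
def classify_lse(mean, std, threshold, beta=2.0):
    """Label each candidate with the bounds mean +/- beta * std (beta assumed)."""
    labels = []
    for mu, sigma in zip(mean, std):
        lower, upper = mu - beta * sigma, mu + beta * sigma
        if lower > threshold:
            labels.append("above")
        elif upper < threshold:
            labels.append("below")
        else:
            labels.append("unclassified")
    return labels

def most_ambiguous(mean, std, threshold, beta=2.0):
    """Index of the candidate whose interval straddles the threshold the most."""
    scores = [min(mu + beta * s - threshold, threshold - (mu - beta * s))
              for mu, s in zip(mean, std)]
    return max(range(len(scores)), key=scores.__getitem__)

mean, std = [3.0, -1.0, 1.2], [0.5, 0.5, 0.5]   # hypothetical posterior values
print(classify_lse(mean, std, 1.0))             # ['above', 'below', 'unclassified']
print(most_ambiguous(mean, std, 1.0))           # 2
```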

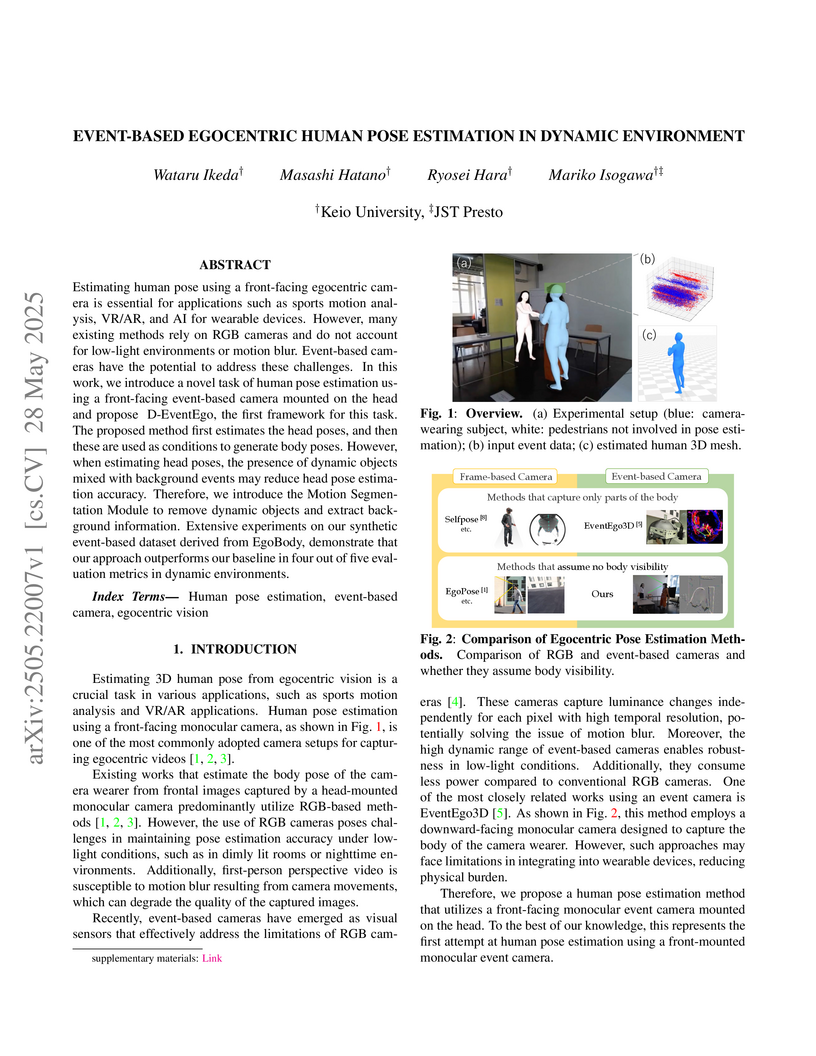

Keio University researchers developed the D-EventEgo framework, the first to estimate 3D human pose using a front-facing event-based camera mounted on the wearer's head, overcoming challenges like motion blur and low-light conditions prevalent in traditional RGB systems.

In this paper, from a theoretical perspective, we study how powerful graph neural networks (GNNs) can be for learning approximation algorithms for combinatorial problems. To this end, we first establish a new class of GNNs that can solve a strictly wider variety of problems than existing GNNs. Then, we bridge the gap between GNN theory and the theory of distributed local algorithms. With the aid of the theory of distributed local algorithms, we theoretically demonstrate that the most powerful GNN can learn approximation algorithms for the minimum dominating set problem and the minimum vertex cover problem with certain approximation ratios. We also show that most existing GNNs, such as GIN, GAT, GCN, and GraphSAGE, cannot achieve better approximation ratios than these. This paper is the first to elucidate approximation ratios of GNNs for combinatorial problems. Furthermore, we prove that adding a coloring or weak coloring to each node feature improves these approximation ratios. This indicates that preprocessing and feature engineering theoretically strengthen model capabilities.
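An algorithm has approximation ratio α for minimum vertex cover if its cover is never more than α times the size of an optimal cover. The sketch below uses the textbook maximal-matching 2-approximation and checks its ratio against a brute-force optimum on tiny graphs. The algorithm here runs sequentially; it is not a GNN or a distributed local algorithm. It illustrates the quantity being bounded, not the ratios proved in the paper.

```python
from itertools import combinations

def matching_vertex_cover(edges):
    """Take both endpoints of every edge in a greedily built maximal matching.

    Any optimal cover must contain at least one endpoint of each matched edge,
    so this cover is at most twice the optimum (ratio 2).
    """
    cover = set()
    for u, v in edges:
        if u not in cover and v not in cover:  # edge not yet covered: match it
            cover.update((u, v))
    return cover

def min_vertex_cover_size(nodes, edges):
    """Exact optimum by brute force; exponential, so tiny graphs only."""
    for k in range(len(nodes) + 1):
        for subset in combinations(nodes, k):
            chosen = set(subset)
            if all(u in chosen or v in chosen for u, v in edges):
                return k
    return len(nodes)

path_edges = [(0, 1), (1, 2), (2, 3)]
cover = matching_vertex_cover(path_edges)
ratio = len(cover) / min_vertex_cover_size(range(4), path_edges)
print(sorted(cover), ratio)  # [0, 1, 2, 3] 2.0
```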

27 Feb 2020

There is an increasing demand for task automation in robots. Contact-rich tasks, wherein multiple contact transitions occur in a series of operations, are being extensively studied to achieve high accuracy. In this study, we propose a methodology that uses reinforcement learning (RL) to achieve high performance in robots executing assembly tasks that require precise contact with objects without causing damage. The proposed method enables the online generation of stiffness matrices that help improve the performance of local trajectory optimization. The method offers rapid response owing to the short sampling time of the trajectory planning. The effectiveness of the method was verified via experiments involving two contact-rich tasks. The results indicate that the proposed method can be implemented in various contact-rich manipulations. A demonstration video shows the performance. (this https URL)

This work explores the application of deep learning, a machine learning technique with deep neural networks (DNNs) at its core, to an automated theorem proving (ATP) problem. To this end, we construct a statistical model that quantifies the likelihood that a proof is indeed a correct proof of a given proposition. Based on this model, we give a proof-synthesis procedure that searches for proofs in order of likelihood. This procedure uses an estimator of the likelihood of an inference rule being applied at each step of a proof. As an implementation of the estimator, we propose a proposition-to-proof architecture, which is a DNN tailored to the automated proof synthesis problem. To empirically demonstrate its usefulness, we apply our model to synthesize proofs of propositional logic. We train the proposition-to-proof model using a training dataset of proposition-proof pairs. The evaluation against a benchmark set shows very high accuracy and an improvement over recent work on neural proof synthesis.

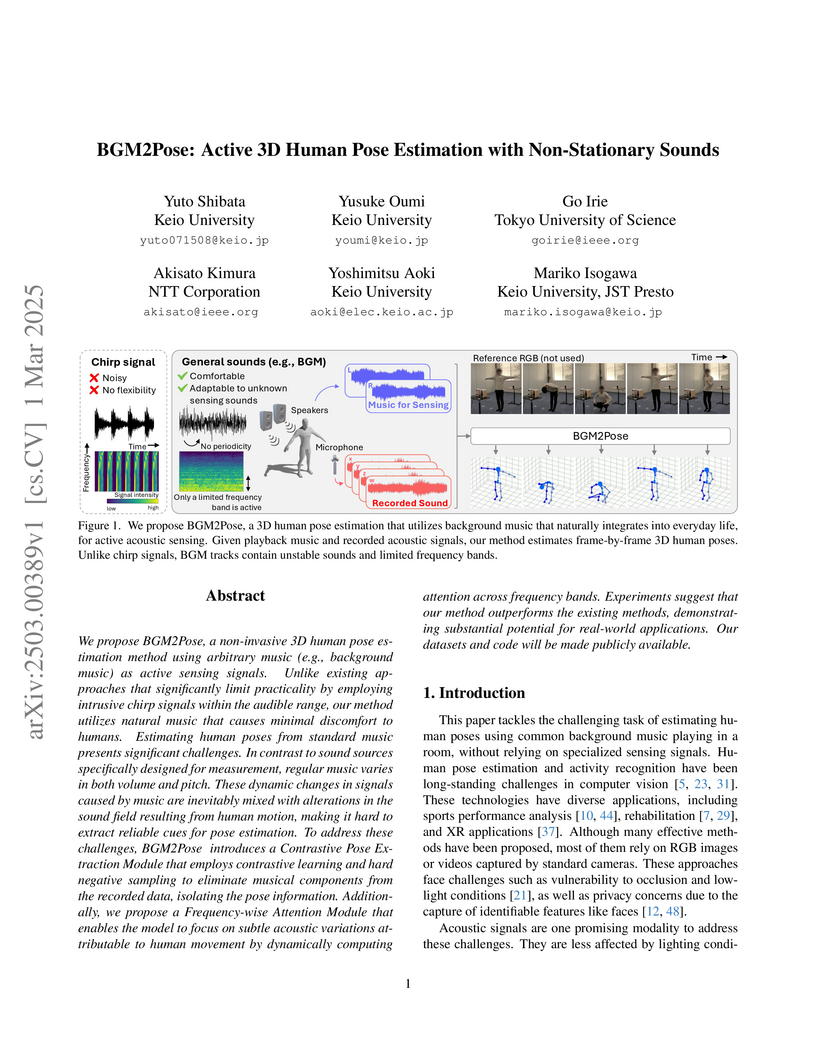

We propose BGM2Pose, a non-invasive 3D human pose estimation method using arbitrary music (e.g., background music) as active sensing signals. Unlike existing approaches that significantly limit practicality by employing intrusive chirp signals within the audible range, our method utilizes natural music that causes minimal discomfort to humans. Estimating human poses from standard music presents significant challenges. In contrast to sound sources specifically designed for measurement, regular music varies in both volume and pitch. These dynamic changes in signals caused by music are inevitably mixed with alterations in the sound field resulting from human motion, making it hard to extract reliable cues for pose estimation. To address these challenges, BGM2Pose introduces a Contrastive Pose Extraction Module that employs contrastive learning and hard negative sampling to eliminate musical components from the recorded data, isolating the pose information. Additionally, we propose a Frequency-wise Attention Module that enables the model to focus on subtle acoustic variations attributable to human movement by dynamically computing attention across frequency bands. Experiments suggest that our method outperforms the existing methods, demonstrating substantial potential for real-world applications. Our datasets and code will be made publicly available.
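The Frequency-wise Attention Module is described only at a high level. One plausible reading, sketched below under that assumption, works in three steps:

1. Score each frequency band's feature vector.
2. Normalize the scores with a softmax across bands.
3. Pool the bands with those weights, so that bands carrying motion-related changes can dominate.

The scoring vector `w` stands in for learned parameters, and the feature shapes are hypothetical. This is not the BGM2Pose implementation.

```python
import numpy as np

def frequency_attention(features, w):
    """Softmax attention across frequency bands.

    features: (F, D) array, one D-dimensional feature vector per frequency band.
    w:        (D,) scoring vector (hypothetical stand-in for learned weights).
    Returns the (F,) attention weights and the (D,) attention-pooled feature.
    """
    scores = features @ w                 # one relevance score per band
    scores = scores - scores.max()        # subtract max for numerical stability
    weights = np.exp(scores)
    weights = weights / weights.sum()     # softmax over the frequency axis
    pooled = weights @ features           # weighted sum of band features
    return weights, pooled

bands = np.array([[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]])
weights, pooled = frequency_attention(bands, np.array([2.0, 0.0]))
print(weights.argmax(), round(float(weights.sum()), 6))  # 0 1.0
```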

We discuss the prediction accuracy of assumed statistical models in terms of prediction errors for the generalized linear model and penalized maximum likelihood methods. We derive the forms of estimators for the prediction errors, such as the Cp criterion, information criteria, and the leave-one-out cross-validation (LOOCV) error, using the generalized approximate message passing (GAMP) algorithm and the replica method. These estimators coincide with each other when the number of model parameters is sufficiently small; however, they differ, particularly in the parameter region where the number of model parameters is larger than the data dimension. In this paper, we review the prediction errors and the corresponding estimators, and discuss their differences. In the framework of GAMP, we show that the information criteria can be expressed using the variance of the estimates. Further, we demonstrate how to approach the LOOCV error from the information criteria by utilizing the expression provided by GAMP.
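For one special case, ridge regression (squared loss with an L2 penalty), the LOOCV error needs no refitting. With hat matrix H = X(XᵀX + λI)⁻¹Xᵀ, the leave-one-out residual is eᵢ/(1 - Hᵢᵢ), and tr(H) is the effective number of parameters that enters Cp. The sketch below checks that identity against brute-force refitting. It also computes a Cp-style estimate, assuming the noise variance is known. This is a classical closed form, not the GAMP or replica-method estimators derived in the paper.

```python
import numpy as np

def hat_matrix(X, lam):
    """Ridge hat matrix H = X (X^T X + lam I)^{-1} X^T."""
    d = X.shape[1]
    return X @ np.linalg.solve(X.T @ X + lam * np.eye(d), X.T)

def loocv_shortcut(X, y, lam):
    """LOOCV error from a single fit via the identity e_i / (1 - H_ii)."""
    H = hat_matrix(X, lam)
    resid = y - H @ y
    return np.mean((resid / (1.0 - np.diag(H))) ** 2)

def loocv_brute_force(X, y, lam):
    """LOOCV error by refitting n times, leaving out one sample each time."""
    d = X.shape[1]
    errors = []
    for i in range(len(y)):
        keep = np.arange(len(y)) != i
        Xk, yk = X[keep], y[keep]
        beta = np.linalg.solve(Xk.T @ Xk + lam * np.eye(d), Xk.T @ yk)
        errors.append((y[i] - X[i] @ beta) ** 2)
    return np.mean(errors)

def cp_criterion(X, y, lam, sigma2):
    """Cp-style estimate: training error + 2 * sigma2 * df / n, with df = tr(H)."""
    H = hat_matrix(X, lam)
    train_err = np.mean((y - H @ y) ** 2)
    return train_err + 2.0 * sigma2 * np.trace(H) / len(y)

rng = np.random.default_rng(0)
X = rng.normal(size=(40, 5))
y = X @ np.array([1.0, -2.0, 0.0, 0.5, 0.0]) + 0.3 * rng.normal(size=40)
print(np.isclose(loocv_shortcut(X, y, 1.0), loocv_brute_force(X, y, 1.0)))  # True
```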

09 Nov 2023

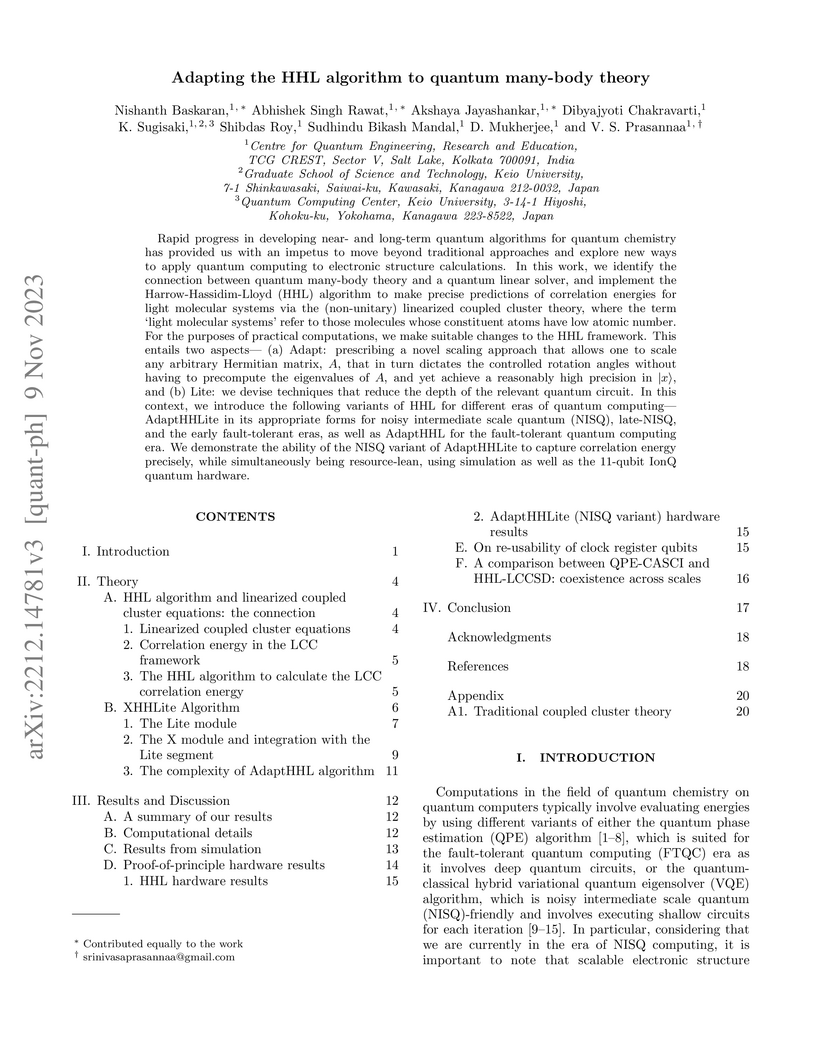

Rapid progress in developing near- and long-term quantum algorithms for quantum chemistry has provided us with an impetus to move beyond traditional approaches and explore new ways to apply quantum computing to electronic structure calculations. In this work, we identify the connection between quantum many-body theory and a quantum linear solver, and implement the Harrow-Hassidim-Lloyd (HHL) algorithm to make precise predictions of correlation energies for light molecular systems via the (non-unitary) linearised coupled cluster theory. We alter the HHL algorithm to integrate two novel aspects: (a) we prescribe a novel scaling approach that allows one to scale an arbitrary symmetric positive definite matrix A, in order to solve Ax = b and obtain x with reasonable precision, without having to compute the eigenvalues of A; and (b) we devise techniques that reduce the depth of the overall circuit. In this context, we introduce the following variants of HHL for different eras of quantum computing: AdaptHHLite, in forms appropriate for the noisy intermediate-scale quantum (NISQ), late-NISQ, and early fault-tolerant eras, and AdaptHHL for the fault-tolerant quantum computing era. We demonstrate the ability of the NISQ variant of AdaptHHLite to capture correlation energy precisely while remaining resource-lean, using simulation as well as the 11-qubit IonQ quantum hardware.
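HHL needs the eigenvalues of A to fall in a known range, which is why a scaling step matters. The abstract does not spell out the paper's scaling scheme, so the classical sketch below substitutes a different, standard device. The Gershgorin bound max_i Σ_j |A_ij| is at least the largest eigenvalue, so dividing an SPD matrix by it places the spectrum in (0, 1] without any eigendecomposition. `np.linalg.solve` plays the role of the quantum linear solver, and the final division undoes the scaling.

```python
import numpy as np

def gershgorin_bound(A):
    """Max absolute row sum: an upper bound on every eigenvalue of A."""
    return float(np.max(np.sum(np.abs(A), axis=1)))

def solve_with_scaling(A, b):
    """Solve A x = b via the scaled system (A / c) y = b, then x = y / c."""
    c = gershgorin_bound(A)
    A_scaled = A / c                     # SPD spectrum now lies in (0, 1]
    y = np.linalg.solve(A_scaled, b)     # classical stand-in for HHL
    return y / c                         # (A / c) y = b implies A (y / c) = b

A = np.array([[4.0, 1.0], [1.0, 3.0]])
b = np.array([1.0, 2.0])
x = solve_with_scaling(A, b)
print(np.allclose(A @ x, b), np.linalg.eigvalsh(A / gershgorin_bound(A)).max() <= 1.0)  # True True
```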

18 Nov 2023

Energetic coherence is indispensable for various operations, including precise measurement of time and acceleration of quantum manipulations. Since energetic coherence is fragile, it is essential to understand the limits of distillation and dilution, which are used to restore it after damage. The resource theory of asymmetry (RTA) provides a rigorous framework to investigate energetic coherence as a resource for breaking time-translation symmetry. Recently, in the i.i.d. regime, where identical copies of a state are converted into identical copies of another state, it has been shown that the convertibility of energetic coherence is governed by a standard measure of energetic coherence called the quantum Fisher information (QFI). This means that QFI plays the role in the theory of energetic coherence that entropy plays in thermodynamics and entanglement entropy plays in entanglement theory. However, distillation and dilution in realistic situations take place in regimes beyond i.i.d., where quantum states often have complex correlations. Unlike in entanglement theory, the conversion theory of energetic coherence for pure states in the non-i.i.d. regime has been an open problem. In this Letter, we solve this problem by introducing a new technique: an information-spectrum method for QFI. Two fundamental quantities, coherence cost and distillable coherence, are shown to be equal to the spectral QFI rates for arbitrary sequences of pure states. As a consequence, we find that both entanglement theory and RTA in the non-i.i.d. regime can be understood within the information-spectrum method, although they are based on different quantities, i.e., entropy and QFI, respectively.
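For a pure state |ψ⟩ evolving under a Hamiltonian H, the QFI mentioned above reduces to four times the energy variance, F_Q = 4(⟨H²⟩ - ⟨H⟩²). An energy eigenstate therefore has zero QFI, while |+⟩ under H = σz/2 has QFI 1. The sketch computes this standard textbook quantity for single qubits. It does not implement the spectral QFI rates introduced in the Letter.

```python
import numpy as np

def qfi_pure(psi, H):
    """QFI of a pure state under exp(-iHt): 4 * (<H^2> - <H>^2)."""
    psi = np.asarray(psi, dtype=complex)
    psi = psi / np.linalg.norm(psi)           # normalize the state vector
    mean_h = np.vdot(psi, H @ psi).real       # <psi|H|psi>, real for Hermitian H
    mean_h2 = np.vdot(psi, H @ (H @ psi)).real
    return 4.0 * (mean_h2 - mean_h ** 2)

H = 0.5 * np.array([[1.0, 0.0], [0.0, -1.0]])   # H = sigma_z / 2
plus = np.array([1.0, 1.0])                       # |+>, normalized inside
ground = np.array([1.0, 0.0])                     # energy eigenstate |0>
print(round(qfi_pure(plus, H), 6), round(qfi_pure(ground, H), 6))  # 1.0 0.0
```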

29 Mar 2022

Quantifying the correlation between the complex structures of amorphous materials and their physical properties has been a long-standing problem in materials science. In amorphous Si, a representative covalent amorphous solid, the presence of a medium-range order (MRO) has been intensively discussed. However, the specific atomic arrangement corresponding to the MRO and its relationship with physical properties, such as thermal conductivity, remain elusive. Here, we solve this problem by combining topological data analysis, machine learning, and molecular dynamics simulations. By using persistent homology, we constructed a topological descriptor that can predict the thermal conductivity. Moreover, from the inverse analysis of the descriptor, we determined the typical ring features that correlated with both the thermal conductivity and MRO. The results provide an avenue for controlling the material characteristics through the topology of nanostructures.

Vaccines are promising tools to control the spread of COVID-19. An effective vaccination campaign requires government policies and community engagement, the sharing of experiences for social support, and the voicing of concerns about vaccine safety and efficacy. The increasing use of online social platforms allows us to trace large-scale communication and infer public opinion in real time. We collected more than 100 million vaccine-related tweets posted by 8 million users and used the Latent Dirichlet Allocation model to perform automated topic modeling of tweet texts during the vaccination campaign in Japan. We identified 15 topics grouped into 4 themes: Personal issue, Breaking news, Politics, and Conspiracy and humour. The evolution of the popularity of themes revealed a shift in public opinion. Attention was initially divided among personal issues (individual aspect), information from the news (knowledge acquisition), and government criticism, and it later moved towards personal experiences once confidence in the vaccination campaign was established. An interrupted time series regression analysis showed that the Tokyo Olympic Games affected public opinion more than other critical events but did not affect the course of the vaccination. Public opinion on politics was significantly affected by various events: these events shifted attention positively in the early stages of the vaccination campaign and negatively later. Tweets about personal issues were most often retweeted when the vaccination reached the younger population. The associations between the vaccination campaign stages and tweet themes suggest that public engagement on the social platform helped speed up vaccine uptake by reducing anxiety via social learning and support.
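Interrupted time series analysis is usually fitted as a segmented regression. The model has a baseline level and trend, plus a level change and a trend change that switch on at the event. The sketch below assumes that standard model, applied to a daily outcome series (for instance a theme's share of tweets) with a single known event index `t0`. The abstract does not give the paper's exact covariates or event dates, and the data here are synthetic.

```python
import numpy as np

def segmented_regression(y, t0):
    """Fit y_t = b0 + b1*t + b2*D_t + b3*(t - t0)*D_t, with D_t = 1 for t >= t0.

    Returns [baseline level, baseline trend, level change, trend change].
    """
    y = np.asarray(y, dtype=float)
    t = np.arange(len(y), dtype=float)
    d = (t >= t0).astype(float)                  # indicator: after the event
    X = np.column_stack([np.ones_like(t), t, d, (t - t0) * d])
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    return coef

t = np.arange(20, dtype=float)
after = (t >= 10).astype(float)
y = 1.0 + 0.5 * t + 3.0 * after - 0.2 * (t - 10) * after   # noiseless synthetic series
coef = segmented_regression(y, 10)
print(np.round(coef, 3))   # recovers level 1.0, trend 0.5, jump 3.0, slope change -0.2
```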

09 Nov 2021

Variational inference (VI) combined with Bayesian nonlinear filtering produces state-of-the-art results for latent time-series modeling. A body of recent work has focused on sequential Monte Carlo (SMC) and its variants, e.g., forward filtering backward simulation (FFBSi). Although these studies have succeeded, serious problems remain in particle degeneracy and biased gradient estimators. In this paper, we propose Ensemble Kalman Variational Objective (EnKO), a hybrid method of VI and the ensemble Kalman filter (EnKF), to infer state space models (SSMs). Our proposed method can efficiently identify latent dynamics because of its particle diversity and unbiased gradient estimators. We demonstrate that our EnKO outperforms SMC-based methods in terms of predictive ability and particle efficiency for three benchmark nonlinear system identification tasks.
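EnKO builds on the ensemble Kalman filter, whose core is the analysis step. Each ensemble member is nudged toward a perturbed observation using a Kalman gain estimated from ensemble covariances. No resampling is involved, which avoids the step that causes particle degeneracy in SMC. The sketch shows a textbook stochastic EnKF update with a linear observation operator. The variational objective that EnKO wraps around this step is not shown, nor is its gradient estimator. All shapes and values are illustrative.

```python
import numpy as np

def enkf_analysis(X, y, H, R, rng):
    """Stochastic EnKF analysis step with perturbed observations.

    X: (N, n) forecast ensemble, y: (m,) observation,
    H: (m, n) linear observation operator, R: (m, m) observation-noise covariance.
    """
    N = X.shape[0]
    Y = X @ H.T                                  # predicted observations (N, m)
    Xc = X - X.mean(axis=0)                      # ensemble anomalies
    Yc = Y - Y.mean(axis=0)
    P_xy = Xc.T @ Yc / (N - 1)                   # state-observation covariance (n, m)
    P_yy = Yc.T @ Yc / (N - 1) + R               # innovation covariance (m, m)
    K = np.linalg.solve(P_yy, P_xy.T).T          # Kalman gain P_xy P_yy^{-1}, (n, m)
    y_pert = y + rng.multivariate_normal(np.zeros(len(y)), R, size=N)
    return X + (y_pert - Y) @ K.T                # updated (analysis) ensemble

rng = np.random.default_rng(0)
prior = rng.normal(0.0, 1.0, size=(500, 1))      # 500 members, 1-D state
post = enkf_analysis(prior, np.array([2.0]), np.array([[1.0]]), np.array([[0.01]]), rng)
print(post.mean(), post.std())                   # mean moves near 2, spread shrinks
```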

29 Jan 2018

We review recent developments of formulations to calculate the Dzyaloshinskii–Moriya (DM) interaction from first principles. In particular, we focus on three approaches. The first one evaluates the energy change due to spin twisting by directly calculating the helical spin structure. The second one employs the spin gauge field technique to perform the derivative expansion with respect to the magnetic moment. This gives a clear picture that the DM interaction can be represented as the equilibrium spin current to first order in the spin-orbit couplings. The third one is the perturbation expansion with respect to the exchange couplings and can be understood as the extension of the Ruderman–Kittel–Kasuya–Yosida (RKKY) interaction to noncentrosymmetric spin-orbit systems. By calculating the DM interaction for the typical chiral ferromagnets Mn₁₋ₓFeₓGe and Fe₁₋ₓCoₓGe, we discuss how these approaches work in actual systems.

09 Nov 2018

This study proposes an imitation learning method based on force and position information. Force information is required for precise object manipulation but is difficult to obtain because the acting and reaction forces cannot be separated. To separate these forces, we propose introducing bilateral control, in which the acting and reaction forces are divided between two robots. In the proposed method, two neural network models learn a task: drawing a line along a ruler. We examine the possibility that force information is essential for imitating the human skill of object manipulation.

Inspired by the recent evolution of deep neural networks (DNNs) in machine learning, we explore their application to PL-related topics. This paper is the first step towards this goal: we propose a proof-synthesis method for negation-free propositional logic in which a DNN is used to guide proof search. The idea is to view the proof-synthesis problem as a translation from a proposition to its proof. We train seq2seq, a popular network in neural machine translation, so that, given a proposition, it generates a proof encoded as a λ-term. We implement the whole framework and empirically observe that a generated proof term is close to a correct proof in terms of the tree edit distance between ASTs. This observation justifies using the output of a trained seq2seq model as a guide for proof search.
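Under the Curry–Howard correspondence used here, a proof of an implication A → B is a λ-term of type A → B. A candidate proof emitted by a seq2seq model can therefore be checked mechanically by type checking. The sketch below covers only the implicational fragment; the paper's negation-free logic also has other connectives. It uses a hypothetical tuple encoding of types and terms. It shows how a generated term is accepted or rejected, not how the paper encodes terms for the network.

```python
def infer(term, ctx):
    """Infer the type of a simply typed lambda term (hypothetical encoding).

    Types: an atom such as "A", or ("->", arg, result).
    Terms: ("var", name) | ("lam", name, arg_type, body) | ("app", fun, arg).
    """
    kind = term[0]
    if kind == "var":
        return ctx[term[1]]                      # KeyError if the variable is unbound
    if kind == "lam":
        _, name, arg_ty, body = term
        return ("->", arg_ty, infer(body, {**ctx, name: arg_ty}))
    if kind == "app":
        fun_ty, arg_ty = infer(term[1], ctx), infer(term[2], ctx)
        if isinstance(fun_ty, tuple) and fun_ty[1] == arg_ty:
            return fun_ty[2]                     # modus ponens
        raise TypeError("ill-typed application")
    raise ValueError(f"unknown term kind: {kind!r}")

def is_proof_of(term, prop):
    """True if the term type-checks in the empty context with type prop."""
    try:
        return infer(term, {}) == prop
    except (KeyError, TypeError):
        return False

def imp(a, b):
    return ("->", a, b)

# λx:A. λf:A→B. f x  proves  A → (A → B) → B
proof = ("lam", "x", "A", ("lam", "f", imp("A", "B"), ("app", ("var", "f"), ("var", "x"))))
print(is_proof_of(proof, imp("A", imp(imp("A", "B"), "B"))))        # True
print(is_proof_of(("lam", "x", "A", ("var", "x")), imp("A", "B")))  # False
```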

In the data obtained by laser interferometric gravitational-wave detectors, transient noise with non-stationary and non-Gaussian features occurs at a high rate. This often results in problems such as detector instability and the masking or mimicking of gravitational-wave signals. This transient noise has various characteristics in the time–frequency representation, which are considered to be associated with environmental and instrumental origins. Classification of transient noise can offer clues for exploring its origin and improving the performance of the detector. One approach for accomplishing this is supervised learning. However, supervised learning generally requires annotation of the training data, and it is difficult to ensure objectivity in the classification and in defining new classes. By contrast, unsupervised learning can reduce the annotation work for the training data and ensure objectivity in the classification and its corresponding new classes. In this study, we propose an unsupervised learning architecture for the classification of transient noise that combines a variational autoencoder and invariant information clustering. To evaluate the effectiveness of the proposed architecture, we used the dataset (time–frequency two-dimensional spectrogram images and labels) of the Laser Interferometer Gravitational-wave Observatory (LIGO) first observation run prepared by the Gravity Spy project. The classes provided by our proposed unsupervised learning architecture were consistent with the labels annotated by the Gravity Spy project, which suggests the potential existence of as-yet-unrevealed classes.
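Invariant information clustering trains a clustering head to maximize the mutual information between the cluster assignments of two related views of the same sample. The sketch computes that objective from batches of soft assignments; a training loss would be its negative. The abstract does not describe how the paper pairs views or couples the clustering head to the variational autoencoder, so the inputs here are hypothetical.

```python
import numpy as np

def iic_mutual_information(p, p_pair, eps=1e-12):
    """Mutual information I(z; z') used as the IIC objective (to be maximized).

    p, p_pair: (B, K) soft cluster assignments (rows sum to 1) for two views.
    """
    joint = p.T @ p_pair / p.shape[0]            # (K, K) joint over cluster pairs
    joint = (joint + joint.T) / 2.0              # symmetrize as in IIC
    joint = np.clip(joint, eps, None)            # avoid log(0)
    p_i = joint.sum(axis=1, keepdims=True)       # marginal of z
    p_j = joint.sum(axis=0, keepdims=True)       # marginal of z'
    return float(np.sum(joint * (np.log(joint) - np.log(p_i) - np.log(p_j))))

confident = np.array([[1.0, 0.0], [0.0, 1.0], [1.0, 0.0], [0.0, 1.0]])
uniform = np.full((4, 2), 0.5)
print(iic_mutual_information(confident, confident))  # close to log 2 (about 0.693)
print(iic_mutual_information(uniform, uniform))      # close to 0
```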

08 Apr 2020

Auditory frisson is the experience of feeling cold or shivering in response to sound in the absence of a physical cold stimulus. Multiple examples of frisson-inducing sounds have been reported, but the mechanism of auditory frisson remains elusive. Typical frisson-inducing sounds may contain a looming effect, in which a sound appears to approach the listener's peripersonal space. Previous studies on sound in peripersonal space have provided objective measurements of sound-inducing effects, but few have investigated the subjective experience of frisson-inducing sounds. Here we explored whether it is possible to produce subjective feelings of frisson by moving a noise stimulus (white noise, rolling-beads noise, or frictional noise produced by rubbing a plastic bag) around a listener's head. Our results demonstrated that sound-induced frisson can be experienced more strongly when auditory stimuli are rotated around the head (binaural moving sounds) than when they are not rotated (monaural static sounds), regardless of the noise source. Pearson's correlation analysis showed that several acoustic features of the auditory stimuli, such as the variance of the interaural level difference (ILD), loudness, and sharpness, were correlated with the magnitude of subjective frisson. We also observed that a moving musical sound induced stronger subjective feelings of frisson than a static musical sound.
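The interaural level difference (ILD) is the level ratio between the two ear signals in decibels. A sound rotating around the head makes the ILD swing over time, so its variance separates moving from static stimuli. The sketch computes a broadband, frame-wise ILD and its variance from two channel arrays. The frame length and the synthetic signals are assumptions, and the study may have computed ILD per frequency band or with different framing.

```python
import numpy as np

def frame_ild(left, right, frame_len, eps=1e-12):
    """Broadband ILD in dB for consecutive non-overlapping frames."""
    n_frames = min(len(left), len(right)) // frame_len
    ilds = []
    for k in range(n_frames):
        seg = slice(k * frame_len, (k + 1) * frame_len)
        rms_l = np.sqrt(np.mean(left[seg] ** 2))
        rms_r = np.sqrt(np.mean(right[seg] ** 2))
        ilds.append(20.0 * np.log10((rms_l + eps) / (rms_r + eps)))
    return np.array(ilds)

rng = np.random.default_rng(0)
noise = rng.normal(size=48_000)                 # 1 s of white noise at 48 kHz
gain = np.linspace(0.1, 1.0, noise.size)        # source sweeping from right to left
static_var = frame_ild(noise, noise, 1024).var()
moving_var = frame_ild(gain * noise, gain[::-1] * noise, 1024).var()
print(static_var, moving_var > static_var)      # 0.0 True
```

Per-stimulus ILD variances computed this way could then be correlated with frisson ratings, for example with `np.corrcoef`.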

27 Jan 2023

Despite rapid progress in the development of quantum algorithms in quantum computing, as well as numerical simulation methods in classical computing for atomic and molecular applications, no systematic and comprehensive electronic structure study of atomic systems that covers almost all of the elements in the periodic table using a single quantum algorithm has been reported. In this work, we address this gap by implementing a recently proposed quantum algorithm, the Bayesian Phase Difference Estimation (BPDE) approach. We use it to accurately compute fine-structure splittings of appropriately chosen states of atomic systems, including highly charged superheavy ions. These splittings are relativistic in origin and also depend on quantum many-body (electron correlation) effects. Our numerical simulations reveal that the BPDE algorithm, in the Dirac–Coulomb–Breit framework, can predict the fine-structure splittings of boron-like ions with a root-mean-square deviation of 605.3 cm⁻¹ from the experimental values in the (1s, 2s, 2p, 3s, 3p) active space. We performed our simulations of relativistic and electron correlation effects on graphics processing units (GPUs) using NVIDIA's cuQuantum, and observed a 42.7× speedup compared with CPU-only simulations in an 18-qubit active space.
