Karlstad University

14 Oct 2025

A polyatomic ideal gas with weak interaction between the translational and internal modes is considered. For the purpose of describing the behavior of such a gas, a Boltzmann equation is proposed in the form that the collision integral is a linear combination of inelastic and elastic (or resonant) collisions, and its basic properties are discussed. Then, in the case where the elastic collisions are dominant, fluid dynamic equations of Euler and Navier--Stokes type including two temperatures, i.e., translational and internal temperatures, as well as relaxation terms are systematically obtained by means of the Chapman--Enskog expansion. The obtained equations are different depending on the degree of weakness of the interaction between the translational and internal modes.

This paper presents a comprehensive review and hierarchical classification of performance-based concept drift detection methods from 2011-2020. It also unifies the terminology for concept drift types based on their mathematical definitions.

\usepackage{iopams} Recent developments have revealed that symmetries need not form a group, but instead can be non-invertible. Here we use analytical arguments and numerical evidence to illuminate how spontaneous symmetry breaking of a non-invertible symmetry is similar yet distinct from ordinary, invertible, symmetry breaking. We consider one-dimensional chains of group-valued qudits, whose local Hilbert space is spanned by elements of a finite group G (reducing to ordinary qubits when G=Z2). We construct Ising-type transverse-field Hamiltonians with Rep(G) symmetry whose generators multiply according to the tensor product of irreducible representations (irreps) of the group G. For non-Abelian G, the symmetry is non-invertible. In the symmetry broken phase there is one ground state per irrep on a closed chain. The symmetry breaking can be detected by local order parameters but, unlike the invertible case, different ground states have distinct entanglement patterns. We show that for each irrep of dimension greater than one the corresponding ground state exhibits string order, entanglement spectrum degeneracies, and has gapless edge modes on an open chain -- features usually associated with symmetry-protected topological order. Consequently, domain wall excitations behave as one-dimensional non-Abelian anyons with non-trivial internal Hilbert spaces and fusion rules. Our work identifies properties of non-invertible symmetry breaking that existing quantum hardware can probe.

Extended Berkeley Packet Filter (eBPF) is a runtime that enables users to load programs into the operating system (OS) kernel, like Linux or Windows, and execute them safely and efficiently at designated kernel hooks. Each program passes through a verifier that reasons about the safety guarantees for execution. Hosting a safe virtual machine runtime within the kernel makes it dynamically programmable. Unlike the popular approach of bypassing or completely replacing the kernel, eBPF gives users the flexibility to modify the kernel on the fly, rapidly experiment and iterate, and deploy solutions to achieve their workload-specific needs, while working in concert with the kernel.

In this paper, we present the first comprehensive description of the design and implementation of the eBPF runtime in the Linux kernel. We argue that eBPF today provides a mature and safe programming environment for the kernel. It has seen wide adoption since its inception and is increasingly being used not just to extend, but program entire components of the kernel, while preserving its runtime integrity. We outline the compelling advantages it offers for real-world production usage, and illustrate current use cases. Finally, we identify its key challenges, and discuss possible future directions.

02 Oct 2025

This work introduces the application of the Orthogonal Procrustes problem to the generation of synthetic data. The proposed methodology ensures that the resulting synthetic data preserves important statistical relationships among features, specifically the Pearson correlation. An empirical illustration using a large, real-world, tabular dataset of energy consumption demonstrates the effectiveness of the approach and highlights its potential for application in practical synthetic data generation. Our approach is not meant to replace existing generative models, but rather as a lightweight post-processing step that enforces exact Pearson correlation to an already generated synthetic dataset.

09 Feb 2025

Researchers from Karlstad University and Ludwig Maximilian University Munich developed and evaluated a comprehensive, automated framework for testing LLM-RAG systems, demonstrating how model parameters and RAG integration impact quality metrics in a real-world tourism application. The study found that conservative generation parameters are crucial for robust performance, and while RAG had minimal impact on general quality, it was essential for factual accuracy and domain specificity.

The research outlines a structured framework for achieving technical robustness in machine learning systems operating in production environments. It formalizes robustness specifications across Automation, DataOps, and ModelOps components of MLOps, surveys existing academic approaches and commercial tools, and identifies open challenges for building trustworthy AI.

19 Jul 2022

Ongoing research on anomaly detection for the Internet of Things (IoT) is a rapidly expanding field. This growth necessitates an examination of application trends and current gaps. The vast majority of those publications are in areas such as network and infrastructure security, sensor monitoring, smart home, and smart city applications and are extending into even more sectors. Recent advancements in the field have increased the necessity to study the many IoT anomaly detection applications. This paper begins with a summary of the detection methods and applications, accompanied by a discussion of the categorization of IoT anomaly detection algorithms. We then discuss the current publications to identify distinct application domains, examining papers chosen based on our search criteria. The survey considers 64 papers among recent publications published between January 2019 and July 2021. In recent publications, we observed a shortage of IoT anomaly detection methodologies, for example, when dealing with the integration of systems with various sensors, data and concept drifts, and data augmentation where there is a shortage of Ground Truth data. Finally, we discuss the present such challenges and offer new perspectives where further research is required.

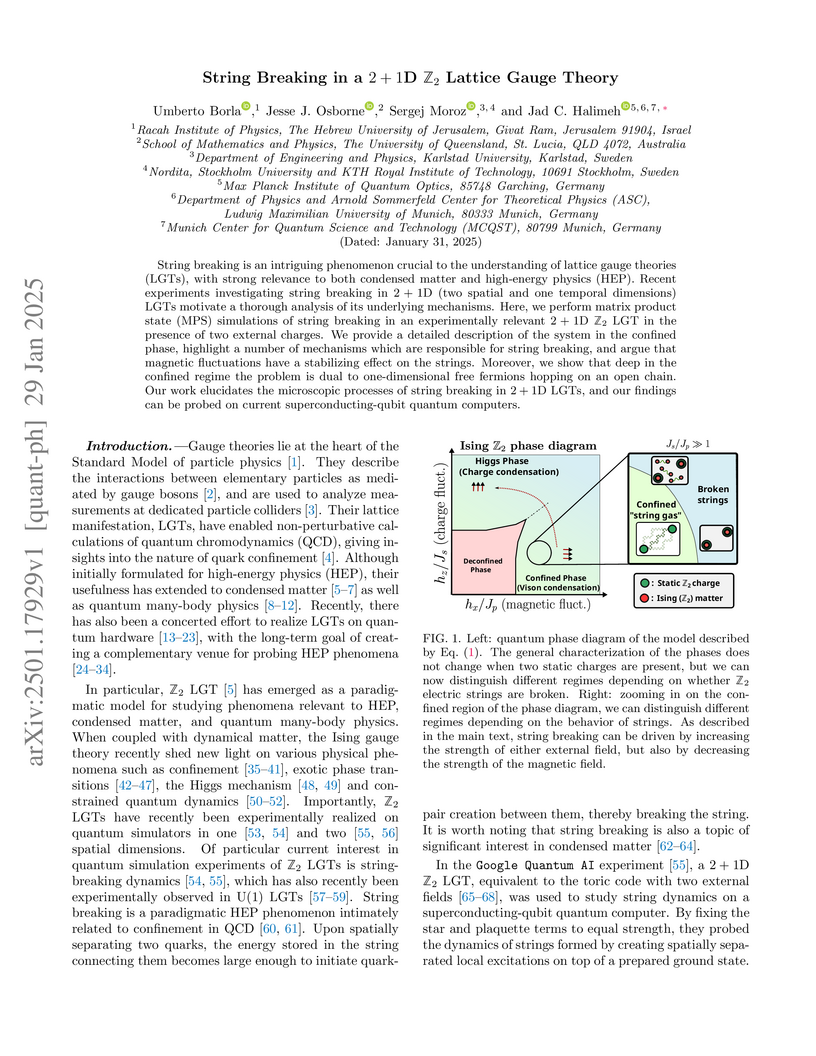

String breaking is an intriguing phenomenon crucial to the understanding of lattice gauge theories (LGTs), with strong relevance to both condensed matter and high-energy physics (HEP). Recent experiments investigating string breaking in 2+1D (two spatial and one temporal dimensions) LGTs motivate a thorough analysis of its underlying mechanisms. Here, we perform matrix product state (MPS) simulations of string breaking in an experimentally relevant 2+1D Z2 LGT in the presence of two external charges. We provide a detailed description of the system in the confined phase, highlight a number of mechanisms which are responsible for string breaking, and argue that magnetic fluctuations have a stabilizing effect on the strings. Moreover, we show that deep in the confined regime the problem is dual to one-dimensional free fermions hopping on an open chain. Our work elucidates the microscopic processes of string breaking in 2+1D LGTs, and our findings can be probed on current superconducting-qubit quantum computers.

Guided by symmetry principles, we construct an effective field theory that captures the long-wavelength dynamics of two-dimensional vortex crystals observed in rotating Bose-Einstein condensates trapped in a harmonic potential. By embedding the system into Newton--Cartan spacetime and analyzing its isometries, we identify the appropriate spacetime symmetry group for trapped condensates at finite angular momentum. After introducing a coarse-grained description of the vortex lattice we consider a homogeneous equilibrium configuration and discuss the associated symmetry breaking pattern. We apply the coset construction method to identify covariant structures that enter the effective action and discuss the physical interpretation of the inverse Higgs constraints. We verify that Kohn's theorem is satisfied within our construction and subsequently focus on the gapless sector of the theory. In this regime, the effective theory accommodates a single gapless excitation--the Tkachenko mode--for which we construct both the leading-order and next-to-leading-order actions, the latter including cubic interaction terms.

University of Florida

University of Florida Université Paris-SaclayUniversity of GenevaUniversity of OttawaKarlstad UniversityNagasaki UniversityUniversity of LorraineUniversity of KlagenfurtUniversity of KinshasaSyntheseAI Inc.Université Pédagogique NationaleMinistry of Public Health of the DRCGroupe de Recherche de Prospection et Valorisation des DonnéesInstitut Supérieur Pédagogique de KikwitUniversité Nouveaux HorizonsUniversity of Mbuji MayiCentre Intégré Universitaire de Santé et Services Sociaux du Nord-de-l’Île-de-MontréalHospital MonkoleUniversit

de Sherbrooke

Université Paris-SaclayUniversity of GenevaUniversity of OttawaKarlstad UniversityNagasaki UniversityUniversity of LorraineUniversity of KlagenfurtUniversity of KinshasaSyntheseAI Inc.Université Pédagogique NationaleMinistry of Public Health of the DRCGroupe de Recherche de Prospection et Valorisation des DonnéesInstitut Supérieur Pédagogique de KikwitUniversité Nouveaux HorizonsUniversity of Mbuji MayiCentre Intégré Universitaire de Santé et Services Sociaux du Nord-de-l’Île-de-MontréalHospital MonkoleUniversit

de SherbrookeArtificial Intelligence (AI) is revolutionizing various fields, including public health surveillance. In Africa, where health systems frequently encounter challenges such as limited resources, inadequate infrastructure, failed health information systems and a shortage of skilled health professionals, AI offers a transformative opportunity. This paper investigates the applications of AI in public health surveillance across the continent, presenting successful case studies and examining the benefits, opportunities, and challenges of implementing AI technologies in African healthcare settings. Our paper highlights AI's potential to enhance disease monitoring and health outcomes, and support effective public health interventions. The findings presented in the paper demonstrate that AI can significantly improve the accuracy and timeliness of disease detection and prediction, optimize resource allocation, and facilitate targeted public health strategies. Additionally, our paper identified key barriers to the widespread adoption of AI in African public health systems and proposed actionable recommendations to overcome these challenges.

Axion-like particles (ALPs) can induce localised oscillatory modulations in the spectra of photon sources passing through astrophysical magnetic fields. Ultra-deep Chandra observations of the Perseus cluster contain over 5×105 counts from the AGN of the central cluster galaxy NGC1275, and represent a dataset of extraordinary quality for ALP searches. We use this dataset to search for X-ray spectral irregularities from the AGN. The absence of irregularities at the O(30%) level allows us to place leading constraints on the ALP-photon mixing parameter gaγγ≲1.4−4.0×10−12GeV−1 for ma≲10−12 eV, depending on assumptions on the magnetic field realisation along the line of sight.

Diabetic Retinopathy (DR) stands as the leading cause of blindness globally,

particularly affecting individuals between the ages of 20 and 70. This paper

presents a Computer-Aided Diagnosis (CAD) system designed for the automatic

classification of retinal images into five distinct classes: Normal, Mild,

Moderate, Severe, and Proliferative Diabetic Retinopathy (PDR). The proposed

system leverages Convolutional Neural Networks (CNNs) employing pre-trained

deep learning models. Through the application of fine-tuning techniques, our

model is trained on fundus images of diabetic retinopathy with resolutions of

350x350x3 and 224x224x3. Experimental results obtained on the Kaggle platform,

utilizing resources comprising 4 CPUs, 17 GB RAM, and 1 GB Disk, demonstrate

the efficacy of our approach. The achieved Area Under the Curve (AUC) values

for CNN, MobileNet, VGG-16, InceptionV3, and InceptionResNetV2 models are 0.50,

0.70, 0.53, 0.63, and 0.69, respectively.

Data quality assessment has become a prominent component in the successful execution of complex data-driven artificial intelligence (AI) software systems. In practice, real-world applications generate huge volumes of data at speeds. These data streams require analysis and preprocessing before being permanently stored or used in a learning task. Therefore, significant attention has been paid to the systematic management and construction of high-quality datasets. Nevertheless, managing voluminous and high-velocity data streams is usually performed manually (i.e. offline), making it an impractical strategy in production environments. To address this challenge, DataOps has emerged to achieve life-cycle automation of data processes using DevOps principles. However, determining the data quality based on a fitness scale constitutes a complex task within the framework of DataOps. This paper presents a novel Data Quality Scoring Operations (DQSOps) framework that yields a quality score for production data in DataOps workflows. The framework incorporates two scoring approaches, an ML prediction-based approach that predicts the data quality score and a standard-based approach that periodically produces the ground-truth scores based on assessing several data quality dimensions. We deploy the DQSOps framework in a real-world industrial use case. The results show that DQSOps achieves significant computational speedup rates compared to the conventional approach of data quality scoring while maintaining high prediction performance.

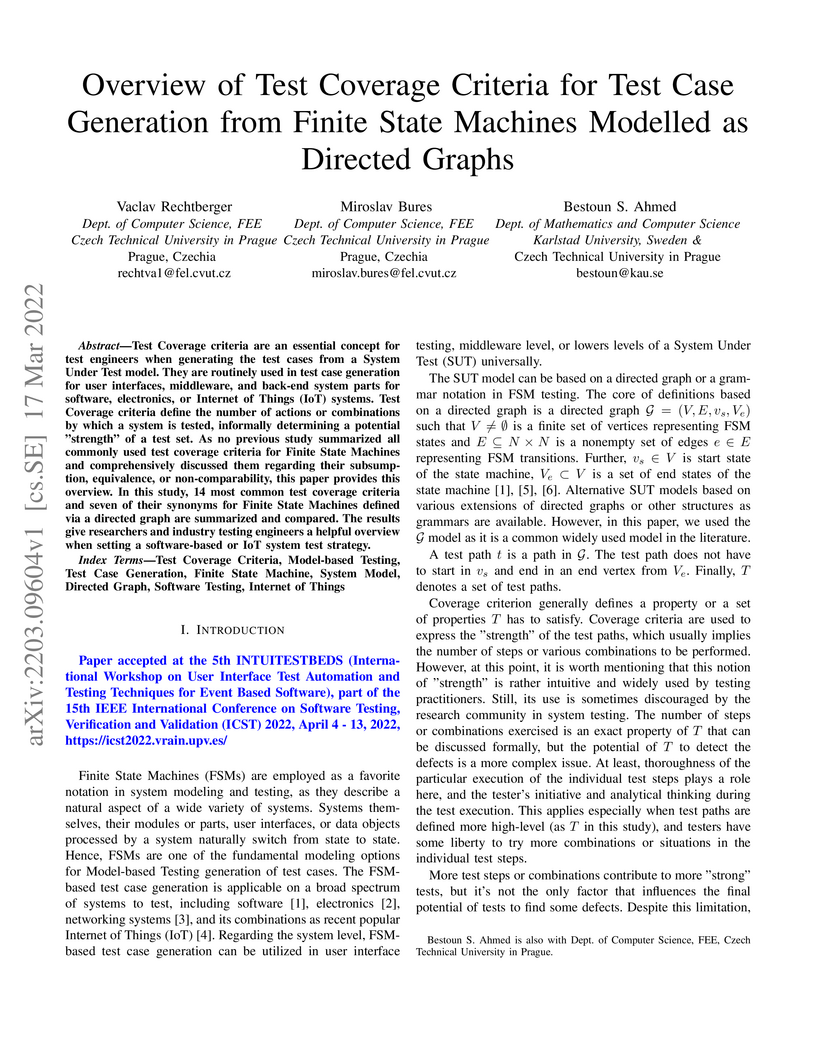

Test Coverage criteria are an essential concept for test engineers when

generating the test cases from a System Under Test model. They are routinely

used in test case generation for user interfaces, middleware, and back-end

system parts for software, electronics, or Internet of Things (IoT) systems.

Test Coverage criteria define the number of actions or combinations by which a

system is tested, informally determining a potential "strength" of a test set.

As no previous study summarized all commonly used test coverage criteria for

Finite State Machines and comprehensively discussed them regarding their

subsumption, equivalence, or non-comparability, this paper provides this

overview. In this study, 14 most common test coverage criteria and seven of

their synonyms for Finite State Machines defined via a directed graph are

summarized and compared. The results give researchers and industry testing

engineers a helpful overview when setting a software-based or IoT system test

strategy.

17 Jul 2024

We investigated the effective influence of grain structures on the heat transfer between a fluid and solid domain using mathematical homogenization. The presented model consists of heat equations inside the different domains, coupled through either perfect or imperfect thermal contact. The size and the period of the grains are of order ε, therefore forming a thin layer. The equation parameters inside the grains also depend on ε. We considered two distinct scenarios: Case (a), where the grains are disconnected, and Case (b), where the grains form a connected geometry but in a way such that the fluid and solid are still in contact. In both cases, we determined the effective differential equations for the limit ε→0 via the concept of two-scale convergence for thin layers. We also presented and studied a numerical algorithm to solve the homogenized problem.

Many emerging distributed applications, including big data analytics,

generate a number of flows that concurrently transport data across data center

networks. To improve their performance, it is required to account for the

behavior of a collection of flows, i.e., coflows, rather than individual.

State-of-the-art solutions allow for a near-optimal completion time by

continuously reordering the unfinished coflows at the end-host, using network

priorities. This paper shows that dynamically changing flow priorities at the

end host, without taking into account in-flight packets, can cause high-degrees

of packet re-ordering, thus imposing pressure on the congestion control and

potentially harming network performance in the presence of switches with

shallow buffers. We present pCoflow, a new solution that integrates end-host

based coflow ordering with in-network scheduling based on packet history. Our

evaluation shows that pCoflow improves in CCT upon state-of-the-art solutions

by up to 34% for varying load.

With the advent of advanced quantum processors capable of probing lattice gauge theories (LGTs) in higher spatial dimensions, it is crucial to understand string dynamics in such models to guide upcoming experiments and to make connections to high-energy physics (HEP). Using tensor network methods, we study the far-from-equilibrium quench dynamics of electric flux strings between two static charges in the 2+1D Z2 LGT with dynamical matter. We calculate the probabilities of finding the time-evolved wave function in string configurations of the same length as the initial string. At resonances determined by the the electric field strength and the mass, we identify various string breaking processes accompanied with matter creation. Away from resonance strings exhibit intriguing confined dynamics which, for strong electric fields, we fully characterize through effective perturbative models. Starting in maximal-length strings, we find that the wave function enters a dynamical regime where it splits into shorter strings and disconnected loops, with the latter bearing qualitative resemblance to glueballs in quantum chromodynamics (QCD). Our findings can be probed on state-of-the-art superconducting-qubit and trapped-ion quantum processors.

Researchers from Harvard University, TU Munich, and KITP propose that the Higgs phase of a gauge theory can be understood as a symmetry-protected topological (SPT) phase. They demonstrate this using the 2+1D Z₂ Fradkin-Shenker model, showing protected edge modes robust to explicit breaking of higher-form symmetries and predicting boundary phase transitions.

SoK: Chasing Accuracy and Privacy, and Catching Both in Differentially Private Histogram Publication

SoK: Chasing Accuracy and Privacy, and Catching Both in Differentially Private Histogram Publication

Histograms and synthetic data are of key importance in data analysis.

However, researchers have shown that even aggregated data such as histograms,

containing no obvious sensitive attributes, can result in privacy leakage. To

enable data analysis, a strong notion of privacy is required to avoid risking

unintended privacy violations.

Such a strong notion of privacy is differential privacy, a statistical notion

of privacy that makes privacy leakage quantifiable. The caveat regarding

differential privacy is that while it has strong guarantees for privacy,

privacy comes at a cost of accuracy. Despite this trade off being a central and

important issue in the adoption of differential privacy, there exists a gap in

the literature regarding providing an understanding of the trade off and how to

address it appropriately.

Through a systematic literature review (SLR), we investigate the

state-of-the-art within accuracy improving differentially private algorithms

for histogram and synthetic data publishing. Our contribution is two-fold: 1)

we identify trends and connections in the contributions to the field of

differential privacy for histograms and synthetic data and 2) we provide an

understanding of the privacy/accuracy trade off challenge by crystallizing

different dimensions to accuracy improvement. Accordingly, we position and

visualize the ideas in relation to each other and external work, and

deconstruct each algorithm to examine the building blocks separately with the

aim of pinpointing which dimension of accuracy improvement each

technique/approach is targeting. Hence, this systematization of knowledge (SoK)

provides an understanding of in which dimensions and how accuracy improvement

can be pursued without sacrificing privacy.

There are no more papers matching your filters at the moment.