King Saud University

SPILDL introduces a scalable and parallel inductive learner in Description Logic, drastically improving the performance of DL-based ILP algorithms by up to 560-fold on large datasets by integrating shared-memory and distributed-memory parallel computing with a high-throughput hypothesis evaluation engine. This advancement enables the generation of human-interpretable models from large, complex, multi-relational data.

27 Nov 2025

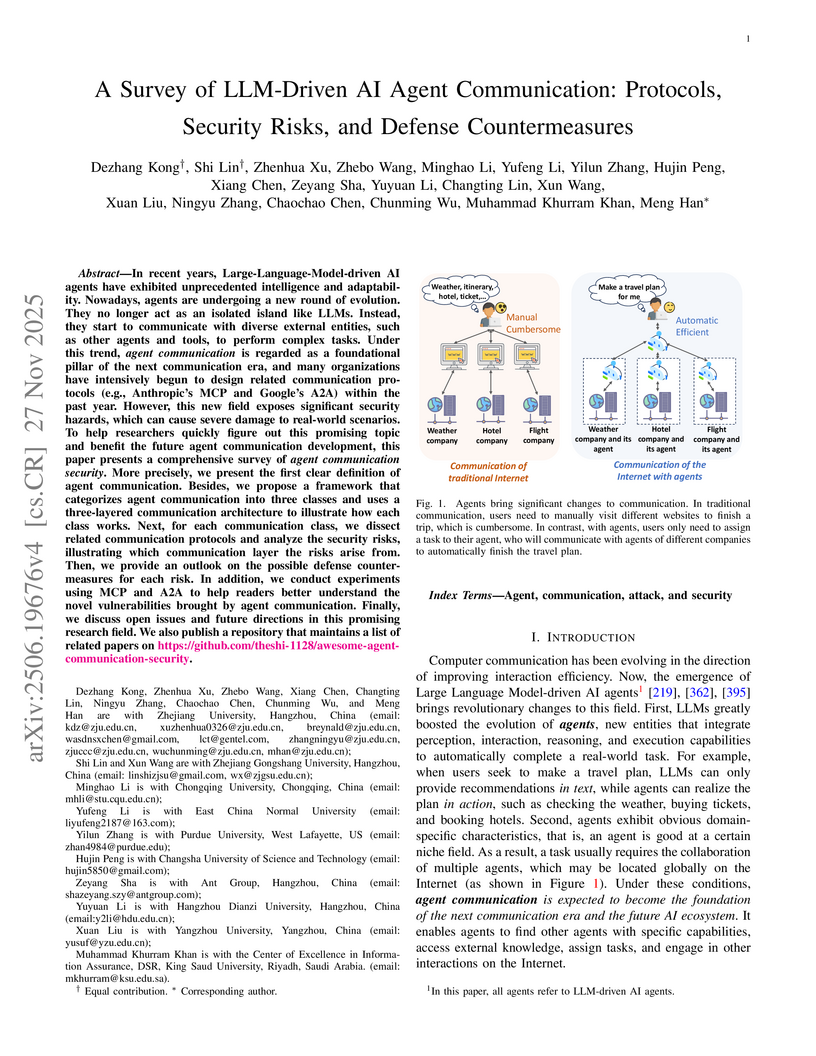

In recent years, Large-Language-Model-driven AI agents have exhibited unprecedented intelligence and adaptability. Nowadays, agents are undergoing a new round of evolution. They no longer act as an isolated island like LLMs. Instead, they start to communicate with diverse external entities, such as other agents and tools, to perform complex tasks. Under this trend, agent communication is regarded as a foundational pillar of the next communication era, and many organizations have intensively begun to design related communication protocols (e.g., Anthropic's MCP and Google's A2A) within the past year. However, this new field exposes significant security hazards, which can cause severe damage to real-world scenarios. To help researchers quickly figure out this promising topic and benefit the future agent communication development, this paper presents a comprehensive survey of agent communication security. More precisely, we present the first clear definition of agent communication. Besides, we propose a framework that categorizes agent communication into three classes and uses a three-layered communication architecture to illustrate how each class works. Next, for each communication class, we dissect related communication protocols and analyze the security risks, illustrating which communication layer the risks arise from. Then, we provide an outlook on the possible defense countermeasures for each risk. In addition, we conduct experiments using MCP and A2A to help readers better understand the novel vulnerabilities brought by agent communication. Finally, we discuss open issues and future directions in this promising research field. We also publish a repository that maintains a list of related papers on this https URL.

Researchers at King Saud University conducted the first fMRI investigation into prompt engineering expertise, identifying distinct neurocognitive markers that differentiate experts from intermediate users. Their findings reveal experts exhibit higher low-frequency power ratios in specific cognitive networks and increased functional connectivity in key language and executive control regions.

Fudan University

Fudan University Shanghai Jiao Tong University

Shanghai Jiao Tong University Zhejiang UniversityPeng Cheng Laboratory

Zhejiang UniversityPeng Cheng Laboratory Nanyang Technological UniversityKing Saud UniversityChina Mobile Research InstituteSoutheast UniversityVTT Technical Research Centre of Finland Ltd.

Nanyang Technological UniversityKing Saud UniversityChina Mobile Research InstituteSoutheast UniversityVTT Technical Research Centre of Finland Ltd. Mohamed bin Zayed University of Artificial IntelligenceCICT Mobile Communication Technology Co., Ltd.Huawei TechnologiesBeijing University of Posts and Telecommunications

Mohamed bin Zayed University of Artificial IntelligenceCICT Mobile Communication Technology Co., Ltd.Huawei TechnologiesBeijing University of Posts and Telecommunications University of Sydney

University of SydneyThis review paper from an international collaboration of researchers presents a three-stage conceptual framework for integrating Artificial Intelligence (AI) into Sixth-Generation (6G) networks: AI for Network (AI4NET), Network for AI (NET4AI), and AI as a Service (AIaaS). It details how AI can optimize network performance, how 6G can natively support AI, and how AI can become an inherent network service, introducing a Quality of AI Service (QoAIS) framework for evaluation.

08 Oct 2025

We study nonparametric estimation of univariate cumulative distribution functions (CDFs) pertaining to data missing at random. The proposed estimators smooth the inverse probability weighted (IPW) empirical CDF with the Bernstein operator, yielding monotone, [0,1]-valued curves that automatically adapt to bounded supports. We analyze two versions: a pseudo estimator that uses known propensities and a feasible estimator that uses propensities estimated nonparametrically from discrete auxiliary variables, the latter scenario being much more common in practice. For both, we derive pointwise bias and variance expansions, establish the optimal polynomial degree m with respect to the mean integrated squared error, and prove the asymptotic normality. A key finding is that the feasible estimator has a smaller variance than the pseudo estimator by an explicit nonnegative correction term. We also develop an efficient degree selection procedure via least-squares cross-validation. Monte Carlo experiments demonstrate that, for moderate to large sample sizes, the Bernstein-smoothed feasible estimator outperforms both its unsmoothed counterpart and an integrated version of the IPW kernel density estimator proposed by Dubnicka (2009) in the same context. A real-data application to fasting plasma glucose from the 2017-2018 NHANES survey illustrates the method in a practical setting. All code needed to reproduce our analyses is readily accessible on GitHub.

The emergence of ChatGPT marked a transformative milestone for Artificial Intelligence (AI), showcasing the remarkable potential of Large Language Models (LLMs) to generate human-like text. This wave of innovation has revolutionized how we interact with technology, seamlessly integrating LLMs into everyday tasks such as vacation planning, email drafting, and content creation. While English-speaking users have significantly benefited from these advancements, the Arabic world faces distinct challenges in developing Arabic-specific LLMs. Arabic, one of the languages spoken most widely around the world, serves more than 422 million native speakers in 27 countries and is deeply rooted in a rich linguistic and cultural heritage. Developing Arabic LLMs (ALLMs) presents an unparalleled opportunity to bridge technological gaps and empower communities. The journey of ALLMs has been both fascinating and complex, evolving from rudimentary text processing systems to sophisticated AI-driven models. This article explores the trajectory of ALLMs, from their inception to the present day, highlighting the efforts to evaluate these models through benchmarks and public leaderboards. We also discuss the challenges and opportunities that ALLMs present for the Arab world.

10 Oct 2025

A first-principles study characterizes the newly predicted SnSiGeN4 monolayer as an efficient direct band gap semiconductor for photocatalytic water splitting, exhibiting strong UV-visible light absorption. It achieves an OER overpotential of 0.48 V, an ORR overpotential of 0.23 eV, and a HER Gibbs free energy of -0.076 eV, surpassing noble metal benchmarks in several key metrics.

Most visual programming languages (VPLs) are domain-specific, with few general-purpose VPLs like Programming Without Coding Technology (PWCT). These general-purpose VPLs are developed using textual programming languages and improving them requires textual programming. In this thesis, we designed and developed PWCT2, a dual-language (Arabic/English), general-purpose, self-hosting visual programming language. Before doing so, we specifically designed a textual programming language called Ring for its development. Ring is a dynamically typed language with a lightweight implementation, offering syntax customization features. It permits the creation of domain-specific languages through new features that extend object-oriented programming, allowing for specialized languages resembling Cascading Style Sheets (CSS) or Supernova language. The Ring Compiler and Virtual Machine are designed using the PWCT visual programming language where the visual implementation is composed of 18,945 components that generate 24,743 lines of C code, which increases the abstraction level and hides unnecessary details. Using PWCT to develop Ring allowed us to realize several issues in PWCT, which led to the development of the PWCT2 visual programming language using the Ring textual programming language. PWCT2 provides approximately 36 times faster code generation and requires 20 times less storage for visual source files. It also allows for the conversion of Ring code into visual code, enabling the creation of a self-hosting VPL that can be developed using itself. PWCT2 consists of approximately 92,000 lines of Ring code and comes with 394 visual components. PWCT2 is distributed to many users through the Steam platform and has received positive feedback, On Steam, 1772 users have launched the software, and the total recorded usage time exceeds 17,000 hours, encouraging further research and development.

This paper conducts an extensive review of biometric user authentication literature, addressing three primary research questions: (1) commonly used biometric traits and their suitability for specific applications, (2) performance factors such as security, convenience, and robustness, and potential countermeasures against cyberattacks, and (3) factors affecting biometric system accuracy and po-tential improvements. Our analysis delves into physiological and behavioral traits, exploring their pros and cons. We discuss factors influencing biometric system effectiveness and highlight areas for enhancement. Our study differs from previous surveys by extensively examining biometric traits, exploring various application domains, and analyzing measures to mitigate cyberattacks. This paper aims to inform researchers and practitioners about the biometric authentication landscape and guide future advancements.

Examining the factors that the counterspeech uses are at the core of

understanding the optimal methods for confronting hate speech online. Various

studies have assessed the emotional base factors used in counter speech, such

as emotional empathy, offensiveness, and hostility. To better understand the

counterspeech used in conversations, this study distills persuasion modes into

reason, emotion, and credibility and evaluates their use in two types of

conversation interactions: closed (multi-turn) and open (single-turn)

concerning racism, sexism, and religious bigotry. The evaluation covers the

distinct behaviors seen with human-sourced as opposed to machine-generated

counterspeech. It also assesses the interplay between the stance taken and the

mode of persuasion seen in the counterspeech.

Notably, we observe nuanced differences in the counterspeech persuasion modes

used in open and closed interactions, especially in terms of the topic, with a

general tendency to use reason as a persuasion mode to express the counterpoint

to hate comments. The machine-generated counterspeech tends to exhibit an

emotional persuasion mode, while human counters lean toward reason.

Furthermore, our study shows that reason tends to obtain more supportive

replies than other persuasion modes. The findings highlight the potential for

incorporating persuasion modes into studies about countering hate speech, as

they can serve as an optimal means of explainability and pave the way for the

further adoption of the reply's stance and the role it plays in assessing what

comprises the optimal counterspeech.

Propaganda is a form of persuasion that has been used throughout history with

the intention goal of influencing people's opinions through rhetorical and

psychological persuasion techniques for determined ends. Although Arabic ranked

as the fourth most- used language on the internet, resources for propaganda

detection in languages other than English, especially Arabic, remain extremely

limited. To address this gap, the first Arabic dataset for Multi-label

Propaganda, Sentiment, and Emotion (MultiProSE) has been introduced. MultiProSE

is an open-source extension of the existing Arabic propaganda dataset, ArPro,

with the addition of sentiment and emotion annotations for each text. This

dataset comprises 8,000 annotated news articles, which is the largest

propaganda dataset to date. For each task, several baselines have been

developed using large language models (LLMs), such as GPT-4o-mini, and

pre-trained language models (PLMs), including three BERT-based models. The

dataset, annotation guidelines, and source code are all publicly released to

facilitate future research and development in Arabic language models and

contribute to a deeper understanding of how various opinion dimensions interact

in news media1.

18 Oct 2025

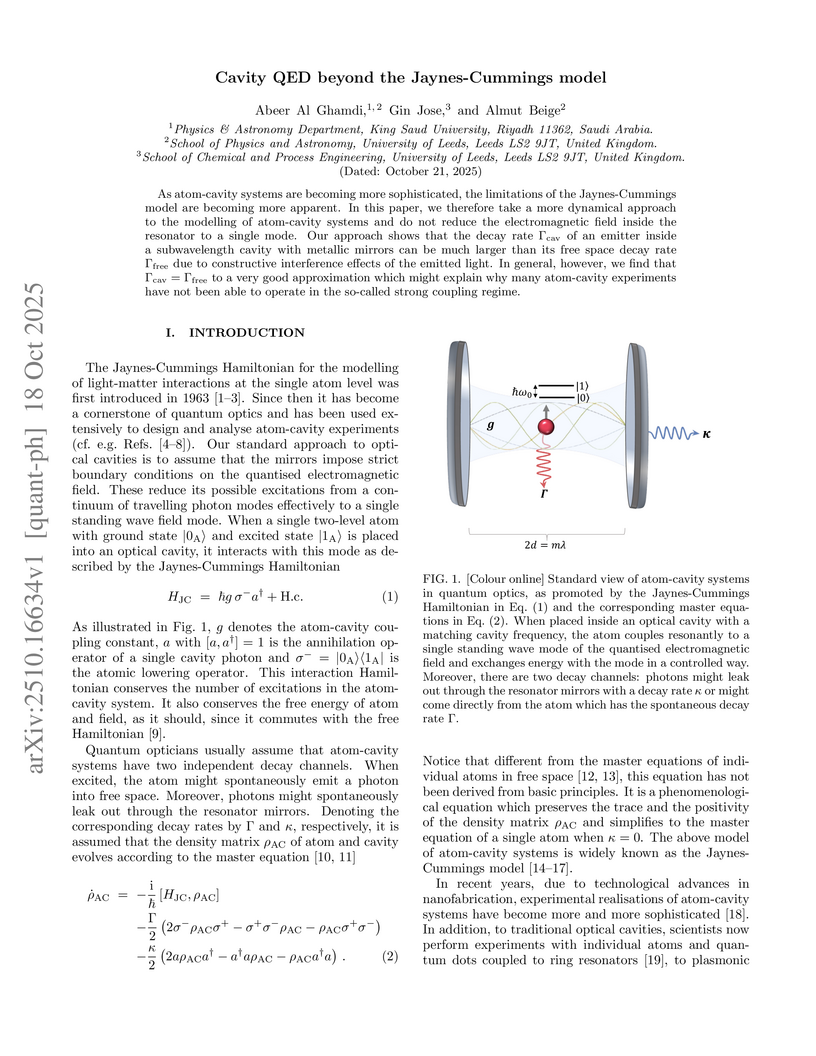

As atom-cavity systems are becoming more sophisticated, the limitations of the Jaynes-Cummings model are becoming more apparent. In this paper, we therefore take a more dynamical approach to the modelling of atom-cavity systems and do not reduce the electromagnetic field inside the resonator to a single mode. Our approach shows that the decay rate Gamma_cav of an emitter inside a subwavelength cavity with metallic mirrors can be much larger than its free space decay rate Gamma_free due to constructive interference effects of the emitted light. In general, however, we find that Gamma_cav = Gamma_free to a very good approximation which might explain why many atom-cavity experiments have not been able to operate in the so-called strong coupling regime.

17 Sep 2025

Requirements classification assigns natural language requirements to predefined classes, such as functional and non functional. Accurate classification reduces risk and improves software quality. Most existing models rely on supervised learning, which needs large labeled data that are costly, slow to create, and domain dependent; they also generalize poorly and often require retraining for each task. This study tests whether prompt based large language models can reduce data needs. We benchmark several models and prompting styles (zero shot, few shot, persona, and chain of thought) across multiple tasks on two English datasets, PROMISE and SecReq. For each task we compare model prompt configurations and then compare the best LLM setups with a strong fine tuned transformer baseline. Results show that prompt based LLMs, especially with few shot prompts, can match or exceed the baseline. Adding a persona, or persona plus chain of thought, can yield further gains. We conclude that prompt based LLMs are a practical and scalable option that reduces dependence on large annotations and can improve generalizability across tasks.

This survey offers a comprehensive overview of Large Language Models (LLMs)

designed for Arabic language and its dialects. It covers key architectures,

including encoder-only, decoder-only, and encoder-decoder models, along with

the datasets used for pre-training, spanning Classical Arabic, Modern Standard

Arabic, and Dialectal Arabic. The study also explores monolingual, bilingual,

and multilingual LLMs, analyzing their architectures and performance across

downstream tasks, such as sentiment analysis, named entity recognition, and

question answering. Furthermore, it assesses the openness of Arabic LLMs based

on factors, such as source code availability, training data, model weights, and

documentation. The survey highlights the need for more diverse dialectal

datasets and attributes the importance of openness for research reproducibility

and transparency. It concludes by identifying key challenges and opportunities

for future research and stressing the need for more inclusive and

representative models.

Mental health disorders significantly impact people globally, regardless of background, education, or socioeconomic status. However, access to adequate care remains a challenge, particularly for underserved communities with limited resources. Text mining tools offer immense potential to support mental healthcare by assisting professionals in diagnosing and treating patients. This study addresses the scarcity of Arabic mental health resources for developing such tools. We introduce MentalQA, a novel Arabic dataset featuring conversational-style question-and-answer (QA) interactions. To ensure data quality, we conducted a rigorous annotation process using a well-defined schema with quality control measures. Data was collected from a question-answering medical platform. The annotation schema for mental health questions and corresponding answers draws upon existing classification schemes with some modifications. Question types encompass six distinct categories: diagnosis, treatment, anatomy \& physiology, epidemiology, healthy lifestyle, and provider choice. Answer strategies include information provision, direct guidance, and emotional support. Three experienced annotators collaboratively annotated the data to ensure consistency. Our findings demonstrate high inter-annotator agreement, with Fleiss' Kappa of 0.61 for question types and 0.98 for answer strategies. In-depth analysis revealed insightful patterns, including variations in question preferences across age groups and a strong correlation between question types and answer strategies. MentalQA offers a valuable foundation for developing Arabic text mining tools capable of supporting mental health professionals and individuals seeking information.

13 Aug 2025

Kyungpook National University Carnegie Mellon UniversityKing Saud University

Carnegie Mellon UniversityKing Saud University Argonne National Laboratory

Argonne National Laboratory Arizona State UniversityFlorida State UniversityThe George Washington University

Arizona State UniversityFlorida State UniversityThe George Washington University Duke University

Duke University MITUniversity of GlasgowUniversity of ConnecticutFlorida International UniversityMississippi State UniversityINFN, Sezione di PaviaINFN, Sezione di TorinoNew Mexico State UniversityUniversit`a degli Studi di BresciaINFN, Laboratori Nazionali di FrascatiUniversity of New HampshireUniversity of California RiversideJames Madison UniversityNorfolk State UniversityLamar UniversityGSI Helmholtzzentrum fur Schwerionenforschung GmbHChristopher Newport UniversityINFN-Sezione di GenovaCatholic University of AmericaOhio UniversityINFN, Sezione di CataniaIdaho State UniversityCanisius CollegeDuquesne UniversityCalifornia State University, Dominguez HillsFairfield UniversityINFN Sezione di Roma Tor VergataUniversitaet GiessenUniversità di FerraraINFN-Sezione di Ferrara

MITUniversity of GlasgowUniversity of ConnecticutFlorida International UniversityMississippi State UniversityINFN, Sezione di PaviaINFN, Sezione di TorinoNew Mexico State UniversityUniversit`a degli Studi di BresciaINFN, Laboratori Nazionali di FrascatiUniversity of New HampshireUniversity of California RiversideJames Madison UniversityNorfolk State UniversityLamar UniversityGSI Helmholtzzentrum fur Schwerionenforschung GmbHChristopher Newport UniversityINFN-Sezione di GenovaCatholic University of AmericaOhio UniversityINFN, Sezione di CataniaIdaho State UniversityCanisius CollegeDuquesne UniversityCalifornia State University, Dominguez HillsFairfield UniversityINFN Sezione di Roma Tor VergataUniversitaet GiessenUniversità di FerraraINFN-Sezione di Ferrara

Carnegie Mellon UniversityKing Saud University

Carnegie Mellon UniversityKing Saud University Argonne National Laboratory

Argonne National Laboratory Arizona State UniversityFlorida State UniversityThe George Washington University

Arizona State UniversityFlorida State UniversityThe George Washington University Duke University

Duke University MITUniversity of GlasgowUniversity of ConnecticutFlorida International UniversityMississippi State UniversityINFN, Sezione di PaviaINFN, Sezione di TorinoNew Mexico State UniversityUniversit`a degli Studi di BresciaINFN, Laboratori Nazionali di FrascatiUniversity of New HampshireUniversity of California RiversideJames Madison UniversityNorfolk State UniversityLamar UniversityGSI Helmholtzzentrum fur Schwerionenforschung GmbHChristopher Newport UniversityINFN-Sezione di GenovaCatholic University of AmericaOhio UniversityINFN, Sezione di CataniaIdaho State UniversityCanisius CollegeDuquesne UniversityCalifornia State University, Dominguez HillsFairfield UniversityINFN Sezione di Roma Tor VergataUniversitaet GiessenUniversità di FerraraINFN-Sezione di Ferrara

MITUniversity of GlasgowUniversity of ConnecticutFlorida International UniversityMississippi State UniversityINFN, Sezione di PaviaINFN, Sezione di TorinoNew Mexico State UniversityUniversit`a degli Studi di BresciaINFN, Laboratori Nazionali di FrascatiUniversity of New HampshireUniversity of California RiversideJames Madison UniversityNorfolk State UniversityLamar UniversityGSI Helmholtzzentrum fur Schwerionenforschung GmbHChristopher Newport UniversityINFN-Sezione di GenovaCatholic University of AmericaOhio UniversityINFN, Sezione di CataniaIdaho State UniversityCanisius CollegeDuquesne UniversityCalifornia State University, Dominguez HillsFairfield UniversityINFN Sezione di Roma Tor VergataUniversitaet GiessenUniversità di FerraraINFN-Sezione di FerraraExclusive photoproduction of K+Λ final states off a proton target has been an important component in the search for missing nucleon resonances and our understanding of the production of final states containing strange quarks. Polarization observables have been instrumental in this effort. The current work is an extension of previously published CLAS results on the beam-recoil transferred polarization observables Cx and Cz. We extend the kinematic range up to invariant mass W=3.33~GeV from the previous limit of W=2.5~GeV with significantly improved statistical precision in the region of overlap. These data will provide for tighter constraints on the reaction models used to unravel the spectrum of nucleon resonances and their properties by not only improving the statistical precision of the data within the resonance region, but also by constraining t-channel processes that dominate at higher W but extend into the resonance region.

In recent years, Deep Learning (DL) has been successfully applied to detect and classify Radio Frequency (RF) Signals. A DL approach is especially useful since it identifies the presence of a signal without needing full protocol information, and can also detect and/or classify non-communication waveforms, such as radar signals. In this work, we focus on the different pre-processing steps that can be used on the input training data, and test the results on a fixed DL architecture. While previous works have mostly focused exclusively on either time-domain or frequency domain approaches, we propose a hybrid image that takes advantage of both time and frequency domain information, and tackles the classification as a Computer Vision problem. Our initial results point out limitations to classical pre-processing approaches while also showing that it's possible to build a classifier that can leverage the strengths of multiple signal representations.

03 Aug 2025

Rensselaer Polytechnic Institute University of PittsburghKyungpook National University

University of PittsburghKyungpook National University Carnegie Mellon University

Carnegie Mellon University Tel Aviv University

Tel Aviv University INFNKing Saud University

INFNKing Saud University Argonne National Laboratory

Argonne National Laboratory Arizona State UniversityThe George Washington University

Arizona State UniversityThe George Washington University Université Paris-SaclayLos Alamos National LaboratoryFermi National Accelerator Laboratory

Université Paris-SaclayLos Alamos National LaboratoryFermi National Accelerator Laboratory MIT

MIT CEAUniversity of ConnecticutFlorida International UniversityOld Dominion UniversityLomonosov Moscow State UniversityMississippi State UniversityUniversity of New HampshireNorfolk State UniversityLamar UniversityGSI Helmholtzzentrum fur Schwerionenforschung GmbHChristopher Newport UniversityOhio UniversityUniversity of RichmondDuquesne UniversityCalifornia State University, Dominguez HillsFairfield UniversityIRFUUniversit`a di Roma Tor VergataCanisius UniversityUniversitaet GiessenUniversity of SouthUniversità di Ferrara

CEAUniversity of ConnecticutFlorida International UniversityOld Dominion UniversityLomonosov Moscow State UniversityMississippi State UniversityUniversity of New HampshireNorfolk State UniversityLamar UniversityGSI Helmholtzzentrum fur Schwerionenforschung GmbHChristopher Newport UniversityOhio UniversityUniversity of RichmondDuquesne UniversityCalifornia State University, Dominguez HillsFairfield UniversityIRFUUniversit`a di Roma Tor VergataCanisius UniversityUniversitaet GiessenUniversity of SouthUniversità di Ferrara

University of PittsburghKyungpook National University

University of PittsburghKyungpook National University Carnegie Mellon University

Carnegie Mellon University Tel Aviv University

Tel Aviv University INFNKing Saud University

INFNKing Saud University Argonne National Laboratory

Argonne National Laboratory Arizona State UniversityThe George Washington University

Arizona State UniversityThe George Washington University Université Paris-SaclayLos Alamos National LaboratoryFermi National Accelerator Laboratory

Université Paris-SaclayLos Alamos National LaboratoryFermi National Accelerator Laboratory MIT

MIT CEAUniversity of ConnecticutFlorida International UniversityOld Dominion UniversityLomonosov Moscow State UniversityMississippi State UniversityUniversity of New HampshireNorfolk State UniversityLamar UniversityGSI Helmholtzzentrum fur Schwerionenforschung GmbHChristopher Newport UniversityOhio UniversityUniversity of RichmondDuquesne UniversityCalifornia State University, Dominguez HillsFairfield UniversityIRFUUniversit`a di Roma Tor VergataCanisius UniversityUniversitaet GiessenUniversity of SouthUniversità di Ferrara

CEAUniversity of ConnecticutFlorida International UniversityOld Dominion UniversityLomonosov Moscow State UniversityMississippi State UniversityUniversity of New HampshireNorfolk State UniversityLamar UniversityGSI Helmholtzzentrum fur Schwerionenforschung GmbHChristopher Newport UniversityOhio UniversityUniversity of RichmondDuquesne UniversityCalifornia State University, Dominguez HillsFairfield UniversityIRFUUniversit`a di Roma Tor VergataCanisius UniversityUniversitaet GiessenUniversity of SouthUniversità di FerraraExtracting accurate results from neutrino oscillation and cross section experiments requires accurate simulation of the neutrino-nucleus interaction. The rescattering of outgoing hadrons (final state interactions) by the rest of the nucleus is an important component of these interactions. We present a new measurement of proton transparency (defined as the fraction of outgoing protons that emerge without significant rescattering) using electron-nucleus scattering data recorded by the CLAS detector at Jefferson Laboratory on helium, carbon, and iron targets. This analysis by the Electrons for Neutrinos (e4ν) collaboration uses a new data-driven method to extract the transparency. It defines transparency as the ratio of electron-scattering events with a detected proton to quasi-elastic electron-scattering events where a proton should have been knocked out. Our results are consistent with previous measurements that determined the transparency from the ratio of measured events to theoretically predicted events. We find that the GENIE event generator, which is widely used by oscillation experiments to simulate neutrino-nucleus interactions, needs to better describe both the nuclear ground state and proton rescattering in order to reproduce our measured transparency ratios, especially at lower proton momenta.

This paper presents a novel method to compute various measures of effectiveness (MOEs) at a signalized intersection using vehicle trajectory data collected by flying drones. MOEs are key parameters in determining the quality of service at signalized intersections. Specifically, this study investigates the use of drone raw data at a busy three-way signalized intersection in Athens, Greece, and builds on the open data initiative of the pNEUMA experiment. Using a microscopic approach and shockwave analysis on data extracted from realtime videos, we estimated the maximum queue length, whether, when, and where a spillback occurred, vehicle stops, vehicle travel time and delay, crash rates, fuel consumption, CO2 emissions, and fundamental diagrams. Results of the various MOEs were found to be promising, which confirms that the use of traffic data collected by drones has many applications. We also demonstrate that estimating MOEs in real-time is achievable using drone data. Such models have the ability to track individual vehicle movements within street networks and thus allow the modeler to consider any traffic conditions, ranging from highly under-saturated to highly over-saturated conditions. These microscopic models have the advantage of capturing the impact of transient vehicle behavior on various MOEs.

Loneliness and social isolation are serious and widespread problems among

older people, affecting their physical and mental health, quality of life, and

longevity. In this paper, we propose a ChatGPT-based conversational companion

system for elderly people. The system is designed to provide companionship and

help reduce feelings of loneliness and social isolation. The system was

evaluated with a preliminary study. The results showed that the system was able

to generate responses that were relevant to the created elderly personas.

However, it is essential to acknowledge the limitations of ChatGPT, such as

potential biases and misinformation, and to consider the ethical implications

of using AI-based companionship for the elderly, including privacy concerns.

There are no more papers matching your filters at the moment.