LIMOS

Researchers introduced "by the book analysis," a novel framework for algorithm analysis, applying it to the simplex method to derive a polynomial expected running time. This framework improves upon smoothed analysis by incorporating real-world solver characteristics and maintaining applicability to sparse linear programs.

This paper provides a short introduction to the mathematical foundation of quantum computation for researchers in computer science by providing an introduction fo the mathematical basis of calculations. This paper concerns the mathematical foundations of quantum computation addressing first the representation of qubit using the Bloch sphere and second the special relations between SU(2) and SO(3). The properties of SU(2) are introduced focusing especially about the double-covering of SO(3) and explaining how to map rotations of SO(3) into matrices of SU(2). Quantum physic operators are based on SU(2) since we have a direct relationship to SO(3) namely one isomorphism. We start first from basic representations of qubit in R^3 and representations of operators in SU(2) and we next discuss with operators that permit to move from one SU(2) to another one according to a specific operator of SU(2) that is related to rotation into R^3.

27 May 2017

We consider the set Bp of parametric block correlation matrices with p blocks of various (and possibly different) sizes, whose diagonal blocks are compound symmetry (CS) correlation matrices and off-diagonal blocks are constant matrices. Such matrices appear in probabilistic models on categorical data, when the levels are partitioned in p groups, assuming a constant correlation within a group and a constant correlation for each pair of groups. We obtain two necessary and sufficient conditions for positive definiteness of elements of Bp. Firstly we consider the block average map ϕ, consisting in replacing a block by its mean value. We prove that for any A ∈ Bp , A is positive definite if and only if ϕ(A) is positive definite. Hence it is equivalent to check the validity of the covariance matrix of group means, which only depends on the number of groups and not on their sizes. This theorem can be extended to a wider set of block matrices. Secondly, we consider the subset of Bp for which the between group correlation is the same for all pairs of groups. Positive definiteness then comes down to find the positive definite interval of a matrix pencil on Sp. We obtain a simple characterization by localizing the roots of the determinant with within group correlation values.

10 Feb 2025

This paper presents a clear, algorithmic method for constructing quantum circuits that implement the exponentiation of Pauli product operators, a foundational operation for quantum simulation and optimization algorithms. The proposed approach, detailed through three theorems and an explicit algorithm, is validated by comparison with Qiskit's `PauliTrotterEvolution` method.

In property testing, a tester makes queries to (an oracle for) a graph and, on a graph having or being far from having a property P, it decides with high probability whether the graph satisfies P or not. Often, testers are restricted to a constant number of queries. While the graph properties for which there exists such a tester are somewhat well characterized in the dense graph model, it is not the case for sparse graphs. In this area, Czumaj and Sohler (FOCS'19) proved that H-freeness (i.e. the property of excluding the graph H as a subgraph) can be tested with constant queries on planar graphs as well as on graph classes excluding a minor.

Using results from the sparsity toolkit, we propose a simpler alternative to the proof of Czumaj and Sohler, for a statement generalized to the broader notion of bounded expansion. That is, we prove that for any class C with bounded expansion and any graph H, testing H-freeness can be done with constant query complexity on any graph G in C, where the constant depends on H and C, but is independent of G.

While classes excluding a minor are prime examples of classes with bounded expansion, so are, for example, cubic graphs, graph classes with bounded maximum degree, graphs of bounded book thickness, or random graphs of bounded average degree.

We prove that the active-set method needs an exponential number of iterations in the worst-case to maximize a convex quadratic function subject to linear constraints, regardless of the pivot rule used. This substantially improves over the best previously known lower bound [IPCO 2025], which needs objective functions of polynomial degrees ω(logd) in dimension d, to a bound using a convex polynomial of degree 2. In particular, our result firmly resolves the open question [IPCO 2025] of whether a constant degree suffices, and it represents significant progress towards linear objectives, where the active-set method coincides with the simplex method and a lower bound for all pivot rules would constitute a major breakthrough.

Our result is based on a novel extended formulation, recursively constructed using deformed products. Its key feature is that it projects onto a polygonal approximation of a parabola while preserving all of its exponentially many vertices. We define a quadratic objective that forces the active-set method to follow the parabolic boundary of this projection, without allowing any shortcuts along chords corresponding to edges of its full-dimensional preimage.

05 Nov 2022

This paper concerns the Grover algorithm that permits to make amplification of quantum states previously tagged by an Oracle. Grover's algorithm allows searches in an unstructure database of n entries finding a marked element with a quadratic speedup. The algorithm requires a predefined number of runs to succeed with probability close to this http URL article provides a description of the amplitude amplification quantum algorithm mechanism in a very short computational way, based on tensor products and provides a geometric presentation of the successive system states. All the basis changes are fully described to provide an alternative to the wide spread Grover description based only on matrices and complex tensor computation. Our experiments encompass numerical evaluations of circuit using the Qiskit library of IBM that meet the theoretical considerations

Understanding the facial expressions of our interlocutor is important to

enrich the communication and to give it a depth that goes beyond the explicitly

expressed. In fact, studying one's facial expression gives insight into their

hidden emotion state. However, even as humans, and despite our empathy and

familiarity with the human emotional experience, we are only able to guess what

the other might be feeling. In the fields of artificial intelligence and

computer vision, Facial Emotion Recognition (FER) is a topic that is still in

full growth mostly with the advancement of deep learning approaches and the

improvement of data collection. The main purpose of this paper is to compare

the performance of three state-of-the-art networks, each having their own

approach to improve on FER tasks, on three FER datasets. The first and second

sections respectively describe the three datasets and the three studied network

architectures designed for an FER task. The experimental protocol, the results

and their interpretation are outlined in the remaining sections.

23 Jul 2022

This paper concerns quantum heuristics based on Mixer Hamiltonians that allow to restrict investigation on a specific subspace. Mixer Hamiltonian based approaches can be included in QAOA algorithm and we can state that Mixer Hamiltonians are mapping functions from the set of qubit-strings to the set of solutions. Mixer Hamiltonian offers an approach very similar to indirect representations commonly used in routing or in scheduling community for decades. After the initial publication of Cheng et al. in 1996 (Cheng et al., 1996), numerous propositions in OR lies on 1-to-n mapping functions, including the split algorithm that transform one TSP solution into a VRP solution. The objective is at first to give a compact and readable presentation of these Mixer Hamiltonians considering the functional analogies that exist between the OR community practices and the quantum field. Our experiments encompass numerical evaluations of circuit using the Qiskit library of IBM meeting the theoretical considerations.

07 Nov 2023

In recent years, interest has grown in alternative strategies for the search for New Physics beyond the Standard Model. One envisaged solution lies in the development of anomaly detection algorithms based on unsupervised machine learning techniques. In this paper, we propose a new Generative Adversarial Network-based auto-encoder model that allows both anomaly detection and model-independent background modeling. This algorithm can be integrated with other model-independent tools in a complete heavy resonance search strategy. The proposed strategy has been tested on the LHC Olympics 2020 dataset with promising results.

Several different types of identification problems have been already studied in the literature, where the objective is to distinguish any two vertices of a graph by their unique neighborhoods in a suitably chosen dominating or total-dominating set of the graph, often referred to as a \emph{code}. To study such problems under a unifying point of view, reformulations of the already studied problems in terms of covering problems in suitably constructed hypergraphs have been provided. Analyzing these hypergraph representations, we introduce a new separation property, called \emph{full-separation}, which has not yet been considered in the literature so far. We study it in combination with both domination and total-domination, and call the resulting codes \emph{full-separating-dominating codes} (or \emph{FD-codes} for short) and \emph{full-separating-total-dominating codes} (or \emph{FTD-codes} for short), respectively. We address the conditions for the existence of FD- and FTD-codes, bounds for their size and their relation to codes of the other types. We show that the problems of determining an FD- or an FTD-code of minimum cardinality in a graph is NP-hard. We also show that the cardinalities of minimum FD- and FTD-codes differ by at most one, but that it is NP-complete to decide if they are equal for a given graph in general. We find the exact values of minimum cardinalities of the FD- and FTD-codes on some familiar graph classes like paths, cycles, half-graphs and spiders. This helps us compare the two codes with other codes on these graph families thereby exhibiting extremal cases for several lower bounds.

Time serie classification is used in a diverse range of domain such as

meteorology, medicine and physics. It aims to classify chronological data. Many

accurate approaches have been built during the last decade and shapelet

transformation is one of them. However, none of these approaches does take data

uncertainty into account. Using uncertainty propagation techiniques, we propose

a new dissimilarity measure based on euclidean distance. We also show how to

use this new measure to adapt shapelet transformation to uncertain time series

classification. An experimental assessment of our contribution is done on some

state of the art datasets.

We investigate structural parameterizations of two identification problems: LOCATING-DOMINATING SET and TEST COVER. In the first problem, an input is a graph G on n vertices and an integer k, and one asks if there is a subset S of k vertices such that any two distinct vertices not in S are dominated by distinct subsets of S. In the second problem, an input is a set of items U, a set of subsets F of U called tests and an integer k, and one asks if there is a set S of at most k tests such that any two items belong to distinct subsets of tests of S. These two problems are "identification" analogues of DOMINATING SET and SET COVER, respectively. Chakraborty et al. [ISAAC 2024] proved that both the problems admit conditional double-exponential lower bounds and matching algorithms when parameterized by treewidth of the input graph. We continue this line of investigation and consider parameters larger than treewidth, like vertex cover number and feedback edge set number. We design a nontrivial dynamic programming scheme to solve TEST COVER in "slightly super-exponential" time 2O(∣U∣log∣U∣)(∣U∣+∣F∣)O(1) in the number ∣U∣ of items and LOCATING-DOMINATING SET in time 2O(vclogvc)⋅nO(1), where vc is the vertex cover number and n is the order of the graph. This shows that the lower bound results with respect to treewidth from Chakraborty et al. [ISAAC 2024] cannot be extended to vertex cover number. We also show that, parameterized by feedback edge set number, LOCATING-DOMINATING SET admits a linear kernel thereby answering an open question in [Cappelle et al., LAGOS 2021]. Finally, we show that neither LOCATING-DOMINATING SET nor TEST COVER is likely to admit a compression algorithm returning an input with a subquadratic number of bits, unless NP⊆coNP/poly.

Cancelable Biometrics (CB) stands for a range of biometric transformation schemes combining biometrics with user specific tokens to generate secure templates. Required properties are the irreversibility, unlikability and recognition accuracy of templates while making their revocation possible. In biometrics, a key-binding scheme is used for protecting a cryptographic key using a biometric data. The key can be recomputed only if a correct biometric data is acquired during authentication. Applications of key-binding schemes are typically disk encryption, where the cryptographic key is used to encrypt and decrypt the disk. In this paper, we cryptanalyze a recent key-binding scheme, called Cancelable Biometrics Vault (CBV) based on cancelable biometrics. More precisely, the introduced cancelable transformation, called BioEncoding scheme, for instantiating the CBV framework is attacked in terms of reversibility and linkability of templates. Subsequently, our linkability attack enables to recover the key in the vault without additional assumptions. Our cryptanalysis introduces a new perspective by uncovering the CBV scheme's revocability and linkability vulnerabilities, which were not previously identified in comparable biometric-based key-binding schemes.

The notion of graph covers (also referred to as locally bijective

homomorphisms) plays an important role in topological graph theory and has

found its computer science applications in models of local computation. For a

fixed target graph H, the {\sc H-Cover} problem asks if an input graph G

allows a graph covering projection onto H. Despite the fact that the quest

for characterizing the computational complexity of {\sc H-Cover} had been

started more than 30 years ago, only a handful of general results have been

known so far.

In this paper, we present a complete characterization of the computational

complexity of covering coloured graphs for the case that every equivalence

class in the degree partition of the target graph has at most two vertices. We

prove this result in a very general form. Following the lines of current

development of topological graph theory, we study graphs in the most relaxed

sense of the definition. In particular, we consider graphs that are mixed (they

may have both directed and undirected edges), may have multiple edges, loops,

and semi-edges. We show that a strong P/NP-complete dichotomy holds true in the

sense that for each such fixed target graph H, the {\sc H-Cover} problem is

either polynomial-time solvable for arbitrary inputs, or NP-complete even for

simple input graphs.

We prove that, paying a polynomial increase in size only, every unrestricted two-way nondeterministic finite automaton (2NFA) can be complemented by a 1-limited automaton (1-LA), a nondeterministic extension of 2NFAs still characterizing regular languages. The resulting machine is actually a restricted form of 1-LAs -- known as 2NFAs with common guess -- and is self-verifying. A corollary of our construction is that a single exponential is necessary and sufficient for complementing 1-LAs.

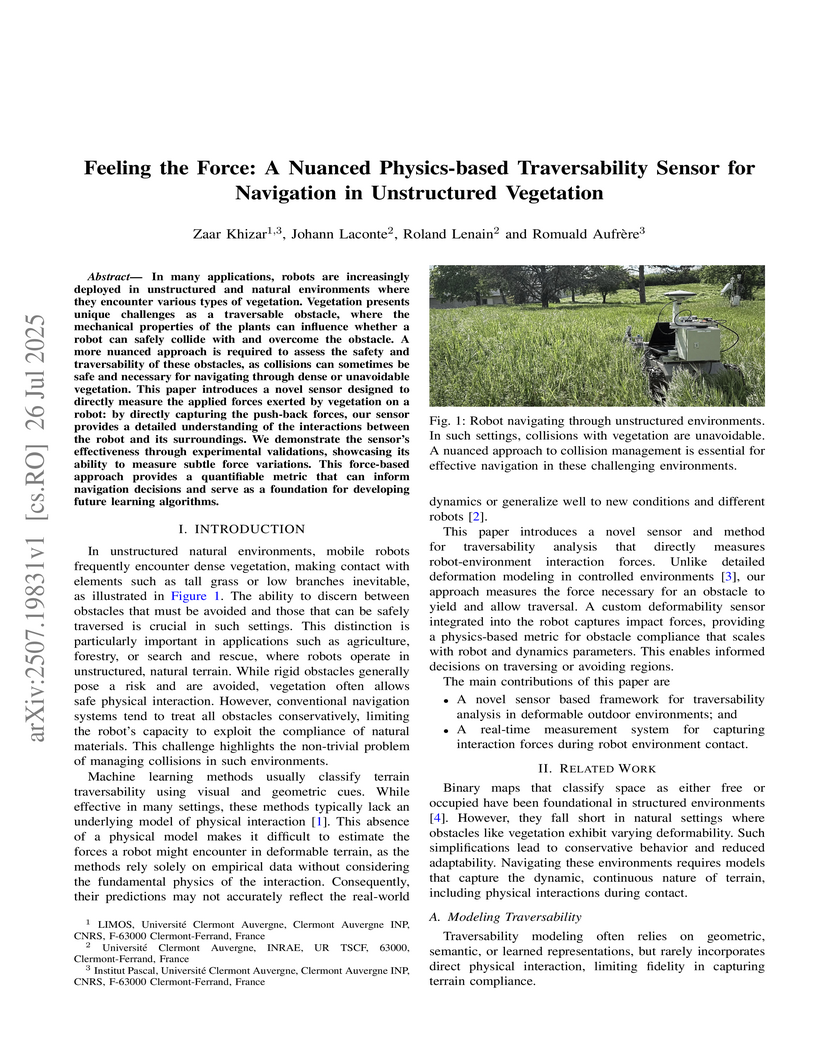

In many applications, robots are increasingly deployed in unstructured and natural environments where they encounter various types of vegetation. Vegetation presents unique challenges as a traversable obstacle, where the mechanical properties of the plants can influence whether a robot can safely collide with and overcome the obstacle. A more nuanced approach is required to assess the safety and traversability of these obstacles, as collisions can sometimes be safe and necessary for navigating through dense or unavoidable vegetation. This paper introduces a novel sensor designed to directly measure the applied forces exerted by vegetation on a robot: by directly capturing the push-back forces, our sensor provides a detailed understanding of the interactions between the robot and its surroundings. We demonstrate the sensor's effectiveness through experimental validations, showcasing its ability to measure subtle force variations. This force-based approach provides a quantifiable metric that can inform navigation decisions and serve as a foundation for developing future learning algorithms.

14 Aug 2025

We introduce a new graph-theoretic concept in the area of network monitoring. In this area, one wishes to monitor the vertices and/or the edges of a network (viewed as a graph) in order to detect and prevent failures. Inspired by two notions studied in the literature (edge-geodetic sets and distance-edge-monitoring sets), we define the notion of a monitoring edge-geodetic set (MEG-set for short) of a graph G as an edge-geodetic set S⊆V(G) of G (that is, every edge of G lies on some shortest path between two vertices of S) with the additional property that for every edge e of G, there is a vertex pair x,y of S such that e lies on all shortest paths between x and y. The motivation is that, if some edge e is removed from the network (for example if it ceases to function), the monitoring probes x and y will detect the failure since the distance between them will increase.

We explore the notion of MEG-sets by deriving the minimum size of a MEG-set for some basic graph classes (trees, cycles, unicyclic graphs, complete graphs, grids, hypercubes, corona products...) and we prove an upper bound using the feedback edge set of the graph.

We also show that determining the smallest size of an MEG-set of a graph is NP-hard, even for graphs of maximum degree at most~9.

We study a large family of graph covering problems, whose definitions rely on distances, for graphs of bounded cyclomatic number (that is, the minimum number of edges that need to be removed from the graph to destroy all cycles). These problems include (but are not restricted to) three families of problems: (i) variants of metric dimension, where one wants to choose a small set S of vertices of the graph such that every vertex is uniquely determined by its ordered vector of distances to the vertices of S; (ii) variants of geodetic sets, where one wants to select a small set S of vertices such that any vertex lies on some shortest path between two vertices of S; (iii) variants of path covers, where one wants to select a small set of paths such that every vertex or edge belongs to one of the paths. We generalize and/or improve previous results in the area which show that the optimal values for these problems can be upper-bounded by a linear function of the cyclomatic number and the degree~1-vertices of the graph. To this end, we develop and enhance a technique recently introduced in [C. Lu, Q. Ye, C. Zhu. Algorithmic aspect on the minimum (weighted) doubly resolving set problem of graphs, Journal of Combinatorial Optimization 44:2029--2039, 2022] and give near-optimal bounds in several cases. This solves (in some cases fully, in some cases partially) some conjectures and open questions from the literature. The method, based on breadth-first search, is of algorithmic nature and thus, all the constructions can be computed in linear time. Our results also imply an algorithmic consequence for the computation of the optimal solutions: for some of the problems, they can be computed in polynomial time for graphs of bounded cyclomatic number.

13 Sep 2024

Reproducibility is widely acknowledged as a fundamental principle in scientific research. Currently, the scientific community grapples with numerous challenges associated with reproducibility, often referred to as the ''reproducibility crisis.'' This crisis permeated numerous scientific disciplines. In this study, we examined the factors in scientific practices that might contribute to this lack of reproducibility. Significant focus is placed on the prevalent integration of computation in research, which can sometimes function as a black box in published papers. Our study primarily focuses on highperformance computing (HPC), which presents unique reproducibility challenges. This paper provides a comprehensive review of these concerns and potential solutions. Furthermore, we discuss the critical role of reproducible research in advancing science and identifying persisting issues within the field of HPC.

There are no more papers matching your filters at the moment.