La Sapienza University of Rome

Person Re-Identification is a key and challenging task in video surveillance. While traditional methods rely on visual data, issues like poor lighting, occlusion, and suboptimal angles often hinder performance. To address these challenges, we introduce WhoFi, a novel pipeline that utilizes Wi-Fi signals for person re-identification. Biometric features are extracted from Channel State Information (CSI) and processed through a modular Deep Neural Network (DNN) featuring a Transformer-based encoder. The network is trained using an in-batch negative loss function to learn robust and generalizable biometric signatures. Experiments on the NTU-Fi dataset show that our approach achieves competitive results compared to state-of-the-art methods, confirming its effectiveness in identifying individuals via Wi-Fi signals.
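The abstract says only that training uses an "in-batch negative loss". A common form of such a loss is an InfoNCE-style cross-entropy over a batch similarity matrix. In this form, the signature of sample i has exactly one positive (its paired signature) and treats every other signature in the batch as a negative. The sketch below is in plain Python and assumes cosine similarity and a temperature of 0.1. The pairing of `queries` and `keys` is also an assumption; none of these choices come from the paper.

```python
import math
from typing import List

Vector = List[float]


def _normalize(v: Vector) -> Vector:
    """Scale a vector to unit L2 norm so dot products become cosine similarities."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def in_batch_negative_loss(queries: List[Vector], keys: List[Vector],
                           temperature: float = 0.1) -> float:
    """Mean cross-entropy where keys[i] is the positive for queries[i] and
    every other key in the batch acts as a negative (hypothetical setup)."""
    if len(queries) != len(keys):
        raise ValueError("queries and keys must have the same batch size")
    q = [_normalize(v) for v in queries]
    k = [_normalize(v) for v in keys]
    total = 0.0
    for i, qi in enumerate(q):
        # Similarity of query i to every key in the batch, scaled by temperature.
        logits = [sum(a * b for a, b in zip(qi, kj)) / temperature for kj in k]
        # Numerically stable -log softmax evaluated at the positive index i.
        m = max(logits)
        log_z = m + math.log(sum(math.exp(x - m) for x in logits))
        total += log_z - logits[i]
    return total / len(q)
```

When each query is closest to its own key, the loss is close to zero. When the pairs are swapped, the loss grows large, so minimizing it pulls matching signatures together and pushes the rest of the batch apart.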

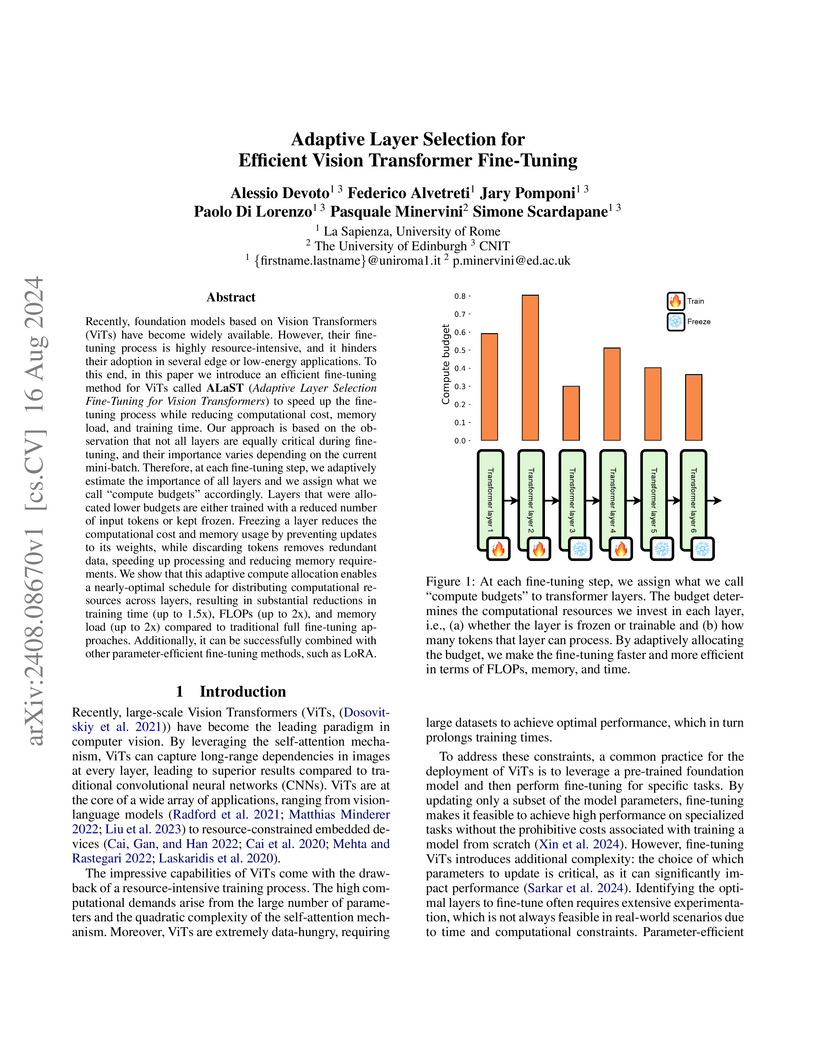

ALaST, an adaptive layer selection fine-tuning framework for Vision Transformers, dynamically learns layer and token importance to optimize resource allocation during training. The approach reduces computational operations by 40%, memory usage by 50%, and training time by 20% compared to full fine-tuning, all while preserving competitive classification accuracy.

This paper systematically dissects Speech-to-Text architectures that combine a Speech Foundation Model (SFM), an adapter, and a Large Language Model (LLM), to determine the relative importance of each component and the optimal adapter design. The research found that the SFM is the most critical component for performance, that the choice of LLM has less impact, and that no universal adapter design exists, with content-based adapters generally underperforming.

Research on social bots aims at advancing knowledge and providing solutions to one of the most debated forms of online manipulation. Yet, social bot research is plagued by widespread biases, hyped results, and misconceptions that set the stage for ambiguities, unrealistic expectations, and seemingly irreconcilable findings. Overcoming such issues is instrumental towards ensuring reliable solutions and reaffirming the validity of the scientific method. Here, we discuss a broad set of consequential methodological and conceptual issues that affect current social bots research, illustrating each with examples drawn from recent studies. More importantly, we demystify common misconceptions, addressing fundamental points on how social bots research is discussed. Our analysis surfaces the need to discuss research about online disinformation and manipulation in a rigorous, unbiased, and responsible way. This article bolsters such effort by identifying and refuting common fallacious arguments used by both proponents and opponents of social bots research, as well as providing directions toward sound methodologies for future research.

Normalization layers, such as Batch Normalization and Layer Normalization, are central components in modern neural networks, widely adopted to improve training stability and generalization. While their practical effectiveness is well documented, a detailed theoretical understanding of how normalization affects model behavior, starting from initialization, remains an important open question. In this work, we investigate how both the presence and placement of normalization within hidden layers influence the statistical properties of network predictions before training begins. In particular, we study how these choices shape the distribution of class predictions at initialization, which can range from unbiased (Neutral) to highly concentrated (Prejudiced) toward a subset of classes. Our analysis shows that normalization placement induces systematic differences in the initial prediction behavior of neural networks, which in turn shape the dynamics of learning. By linking architectural choices to prediction statistics at initialization, our work provides a principled understanding of how normalization can influence early training behavior and offers guidance for more controlled and interpretable network design.
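Below is a minimal sketch of how the distribution of class predictions at initialization can be measured. It builds a randomly initialized ReLU MLP with He-normal weights and optionally applies a LayerNorm-style normalization before each activation. It then counts the argmax class over random Gaussian inputs. Several parts are hypothetical illustrations rather than the paper's protocol: the fraction of inputs assigned to the most frequent class, the network sizes, and the placement shown. The indicator equals roughly 1/C for a Neutral network and approaches 1 for a Prejudiced one.

```python
import random
from collections import Counter
from typing import List


def layer_norm(h: List[float], eps: float = 1e-5) -> List[float]:
    """Zero-mean, unit-variance normalization over one hidden vector (no affine params)."""
    mu = sum(h) / len(h)
    var = sum((x - mu) ** 2 for x in h) / len(h)
    return [(x - mu) / (var + eps) ** 0.5 for x in h]


def _gaussian_matrix(rng: random.Random, rows: int, cols: int, std: float):
    return [[rng.gauss(0.0, std) for _ in range(cols)] for _ in range(rows)]


def _matvec(W, v):
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def init_prediction_counts(width: int = 32, depth: int = 4, n_classes: int = 10,
                           n_inputs: int = 200, normalize: bool = False,
                           seed: int = 0) -> Counter:
    """Count argmax predictions of an untrained MLP over random inputs."""
    rng = random.Random(seed)
    std = (2.0 / width) ** 0.5  # He initialization; fan_in is `width` for every layer
    hidden = [_gaussian_matrix(rng, width, width, std) for _ in range(depth)]
    head = _gaussian_matrix(rng, n_classes, width, std)
    counts: Counter = Counter()
    for _ in range(n_inputs):
        h = [rng.gauss(0.0, 1.0) for _ in range(width)]
        for W in hidden:
            z = _matvec(W, h)
            if normalize:
                z = layer_norm(z)  # normalization placed before the ReLU
            h = [max(0.0, v) for v in z]
        logits = _matvec(head, h)
        counts[max(range(n_classes), key=logits.__getitem__)] += 1
    return counts


def max_class_fraction(counts: Counter) -> float:
    """Share of inputs assigned to the most popular class (1/C = neutral, 1 = fully prejudiced)."""
    return max(counts.values()) / sum(counts.values())
```

Running `init_prediction_counts` with `normalize=False` and `normalize=True` under the same seed is one way to compare how normalization presence changes this statistic. Moving the `layer_norm` call after the ReLU compares placements in the same way.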

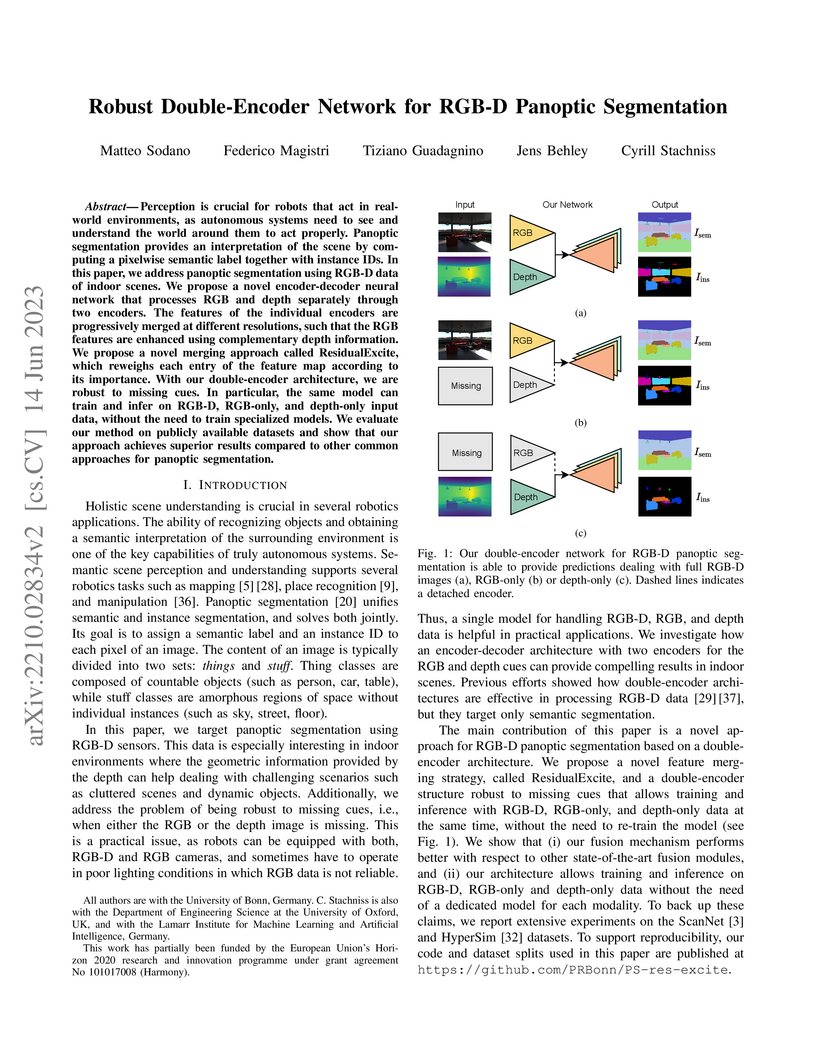

Perception is crucial for robots that act in real-world environments, as autonomous systems need to see and understand the world around them to act properly. Panoptic segmentation provides an interpretation of the scene by computing a pixelwise semantic label together with instance IDs. In this paper, we address panoptic segmentation using RGB-D data of indoor scenes. We propose a novel encoder-decoder neural network that processes RGB and depth separately through two encoders. The features of the individual encoders are progressively merged at different resolutions, such that the RGB features are enhanced using complementary depth information. We propose a novel merging approach called ResidualExcite, which reweights each entry of the feature map according to its importance. Thanks to the double-encoder architecture, the model is robust to missing cues. In particular, the same model can train and infer on RGB-D, RGB-only, and depth-only input data, without the need to train specialized models. We evaluate our method on publicly available datasets and show that our approach achieves superior results compared to other common approaches for panoptic segmentation.

22 Sep 2025

Integrating unmanned aerial vehicles into daily use requires controllers that ensure stable flight, efficient energy use, and reduced noise. Proportional-integral-derivative (PID) controllers remain standard but are highly sensitive to gain selection, and manual tuning often yields suboptimal trade-offs. This paper studies different optimization techniques for the automated tuning of quadrotor PID gains in a unified simulation. The simulation couples several components: a blade-element-momentum-based aerodynamic model with a fast deep neural network surrogate, six-degree-of-freedom rigid-body dynamics, turbulence, and a data-driven acoustic surrogate model that predicts third-octave spectra and propagates them to ground receivers. We compare three families of gradient-free optimizers: metaheuristics, Bayesian optimization, and deep reinforcement learning. Candidate controllers are evaluated with a composite cost function that combines multiple metrics, such as noise footprint and power consumption. Metaheuristics consistently improve performance, with Grey Wolf Optimization producing the best results. Bayesian optimization is sample-efficient but carries a higher per-iteration overhead and depends on the design domain. The reinforcement learning agents do not surpass the baseline in the current setup, suggesting that the problem formulation requires further refinement. On unseen missions, the best-tuned controller maintains accurate tracking while reducing oscillations, power demand, and acoustic emissions. These results show that noise-aware PID tuning through black-box search can deliver quieter and more efficient flight without hardware changes.
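The abstract describes two steps: discrete PID gains are evaluated through a composite cost, and a gradient-free optimizer then searches over those gains. The sketch below makes that loop concrete under heavy simplifications. It replaces the quadrotor with a hypothetical first-order plant dx/dt = -x + u. It uses integrated squared error and control effort as stand-ins for the paper's tracking, power, and noise metrics. The metric names and weights are illustrative. The optimizer is plain random search, not Grey Wolf Optimization or Bayesian optimization.

```python
import random
from dataclasses import dataclass, field
from typing import Callable, Dict, Optional, Sequence, Tuple


@dataclass
class PID:
    """Discrete PID controller: u = kp*e + ki*integral(e) + kd*de/dt."""
    kp: float
    ki: float
    kd: float
    _integral: float = field(default=0.0, init=False)
    _prev_error: Optional[float] = field(default=None, init=False)

    def update(self, error: float, dt: float) -> float:
        self._integral += error * dt
        # Skip the derivative on the first call to avoid a "derivative kick".
        derivative = 0.0 if self._prev_error is None else (error - self._prev_error) / dt
        self._prev_error = error
        return self.kp * error + self.ki * self._integral + self.kd * derivative


def simulate(pid: PID, setpoint: float = 1.0, dt: float = 0.01,
             steps: int = 2000) -> Dict[str, float]:
    """Hypothetical plant dx/dt = -x + u, integrated with explicit Euler."""
    x = 0.0
    metrics = {"tracking_ise": 0.0, "control_effort": 0.0}
    for _ in range(steps):
        error = setpoint - x
        u = pid.update(error, dt)
        metrics["tracking_ise"] += error * error * dt  # integrated squared error
        metrics["control_effort"] += u * u * dt  # crude stand-in for power demand
        x += dt * (-x + u)
    return metrics


def composite_cost(metrics: Dict[str, float], weights: Dict[str, float]) -> float:
    """Weighted sum of metrics; the weights encode the desired trade-off."""
    return sum(weights[name] * metrics[name] for name in weights)


def random_search(cost_fn: Callable[[Tuple[float, ...]], float],
                  bounds: Sequence[Tuple[float, float]],
                  initial: Tuple[float, ...], n_samples: int = 50, seed: int = 0):
    """Plain random search (not Grey Wolf Optimization): keep the best sample seen."""
    rng = random.Random(seed)
    best, best_cost = initial, cost_fn(initial)
    for _ in range(n_samples):
        candidate = tuple(rng.uniform(lo, hi) for lo, hi in bounds)
        cost = cost_fn(candidate)
        if cost < best_cost:
            best, best_cost = candidate, cost
    return best, best_cost


WEIGHTS = {"tracking_ise": 1.0, "control_effort": 0.05}  # illustrative weights


def gains_cost(gains: Tuple[float, ...]) -> float:
    """Black-box objective: simulate a fresh controller with the given (kp, ki, kd)."""
    return composite_cost(simulate(PID(*gains)), WEIGHTS)
```

A higher proportional gain lowers the tracking error on this toy plant but raises the control effort. The composite cost weighs exactly this trade-off when a black-box optimizer explores the gain space.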

CNRS, California Institute of Technology, Charles University, Imperial College London, Cornell University, Yale University, NASA Goddard Space Flight Center, University of Maryland, Université Paris-Saclay, Stockholm University, University of Arizona, Sorbonne Université, Institut Polytechnique de Paris, MIT, Université d’Orléans, Utrecht University, University of Leicester, Johns Hopkins University Applied Physics Laboratory, Observatoire de Paris, Jet Propulsion Laboratory, Brigham Young University, University of Idaho, La Sapienza University of Rome, Southwest Research Institute, Free University Berlin, Aeolis Research, ENS, Université de Reims Champagne Ardenne, Institut de Physique du Globe de Paris, UVSQ Université Paris-Saclay, Aurora Technology B.V., ESA (European Space Agency), Planetary Science Institute Colorado, Université PSL, Université de Nantes, Université de Paris, NASA Ames Research Center, Aix-Marseille Université, Université Paris-Est, École Polytechnique, Université Bordeaux

In response to ESA's Voyage 2050 announcement of opportunity, we propose an ambitious L-class mission to explore one of the most exciting bodies in the Solar System, Saturn's largest moon Titan. Titan, a "world with two oceans", is an organic-rich body with interior-surface-atmosphere interactions comparable in complexity to those of the Earth. Titan is also one of the few places in the Solar System with habitability potential. Titan's remarkable nature was only partly revealed by the Cassini-Huygens mission, and it still holds mysteries requiring a complete exploration using a variety of vehicles and instruments. The proposed mission concept POSEIDON (Titan POlar Scout/orbitEr and In situ lake lander DrONe explorer) would perform joint orbital and in situ investigations of Titan. It is designed to build on and exceed the scope and scientific/technological accomplishments of Cassini-Huygens, exploring Titan in ways that were not previously possible, in particular through full close-up and in situ coverage over long periods of time. In the proposed mission architecture, POSEIDON consists of two major elements. The first is a spacecraft with a large set of instruments that would orbit Titan, preferably in a low-eccentricity polar orbit. The second is a suite of in situ investigation components dedicated to the exploration of the polar regions: a lake lander, a "heavy" drone (possibly amphibious) and/or a fleet of mini-drones. The ideal arrival time at Titan would be slightly before the next northern spring equinox (2039), as equinoxes are the most active periods for monitoring the still largely unknown seasonal changes in the atmosphere and at the surface. The exploration of Titan's northern latitudes with an orbiter and in situ element(s) would be highly complementary to the upcoming NASA New Frontiers Dragonfly mission, which will provide in situ exploration of Titan's equatorial regions in the mid-2030s.

05 Feb 2024

We present Qibolab, an open-source software library for quantum hardware control integrated with the Qibo quantum computing middleware framework. Qibolab provides the software layer required to automatically execute circuit-based algorithms on custom self-hosted quantum hardware platforms. We introduce a set of objects designed to provide programmatic access to quantum control through pulse-oriented drivers for instruments, transpilers and optimization algorithms. Qibolab enables experimentalists and developers to delegate all complex aspects of hardware implementation to the library. They can then standardize the deployment of quantum computing algorithms in an extensible, hardware-agnostic way, with superconducting qubits as the first officially supported quantum technology. We first describe the status of all components of the library, then we show examples of control setups for superconducting qubit platforms. Finally, we present successful application results related to circuit-based algorithms.

26 Nov 2018

Coping with malware is becoming increasingly challenging, given its relentless growth in complexity and volume. One of the most common approaches in the literature is using machine learning techniques to automatically learn models and patterns behind such complexity, and to develop technologies that keep pace with malware evolution. This survey aims to provide an overview of the way machine learning has been used so far in the context of malware analysis in Windows environments, i.e., for the analysis of Portable Executables. We systematize the surveyed papers according to three criteria. The first is their objectives (i.e., the expected output). The second is what information about malware they specifically use (i.e., the features). The third is what machine learning techniques they employ (i.e., what algorithm is used to process the input and produce the output). We also outline a number of issues and challenges, including those concerning the datasets used, and identify the main current topical trends and how they could be advanced. In particular, we introduce the novel concept of malware analysis economics, the study of existing trade-offs among key metrics, such as analysis accuracy and economic costs.

26 Nov 2024

In the current era of quantum computing, robust and efficient tools are essential to bridge the gap between simulations and quantum hardware execution. In this work, we introduce a machine learning approach to characterize the noise affecting a quantum chip and emulate it during simulations. Our algorithm leverages reinforcement learning (RL), offering greater flexibility in reproducing various noise models than conventional techniques such as randomized benchmarking or heuristic noise models. The effectiveness of the RL agent has been validated through simulations and tests on real superconducting qubits. Additionally, we provide practical use-case examples for the study of well-known quantum algorithms.

29 Nov 2024

Decoding the error syndromes of surface codes with classical algorithms may slow down quantum computation. To overcome this problem, decoding algorithms can be implemented with artificial neural networks. This work reports a study of decoders based on convolutional neural networks, tested on different code distances and noise models. The results show that decoders based on convolutional neural networks perform well and can adapt to different noise models. Moreover, explainable machine learning techniques have been applied to the neural network of the decoder to better understand the behaviour and errors of the algorithm, in order to produce a more robust and better-performing algorithm.

13 Mar 2024

We study the effects of cut-off physics, in the form of a modified algebra inspired by Polymer Quantum Mechanics and by the Generalized Uncertainty Principle representation, on the collapse of a spherical dust cloud. We analyze both the Newtonian formulation, originally developed by Hunter, and the general relativistic formulation, that is, the Oppenheimer-Snyder model. In both frameworks, we find that the collapse is stabilized to an asymptotically static state above the horizon and that the singularity is removed. In the Newtonian case, by requiring the Newtonian approximation to be valid, we find lower bounds of the order of unity (in Planck units) for the deformation parameter of the modified algebra. We then study the behaviour of small perturbations on the non-singular collapsing backgrounds. We find that, for a certain range of the parameters (the polytropic index in the Newtonian case and the sound velocity in the relativistic setting), the collapse is stable to perturbations of all scales, and the non-singular super-Schwarzschild configurations have physical meaning.

We present an overview of recent developments concerning modifications of the geometry of space-time to describe various physical processes of interactions among classical and quantum configurations. We concentrate on two main lines of research: the Metric Relativity and the Dynamical Bridge.

We introduce a novel measure for quantifying the error in input predictions. The error is based on a minimum-cost hyperedge cover in a suitably defined hypergraph and provides a general template which we apply to online graph problems. The measure captures errors due to absent predicted requests as well as unpredicted actual requests; hence, predicted and actual inputs can be of arbitrary size. We achieve refined performance guarantees for previously studied network design problems in the online-list model, such as Steiner tree and facility location. Further, we initiate the study of learning-augmented algorithms for online routing problems, such as the online traveling salesperson problem and the online dial-a-ride problem, where (transportation) requests arrive over time (online-time model). We provide a general algorithmic framework and we give error-dependent performance bounds that improve upon known worst-case barriers, when given accurate predictions, at the cost of slightly increased worst-case bounds when given predictions of arbitrary quality.
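The error measure is built on a minimum-cost hyperedge cover. The following sketch shows that underlying combinatorial object on a toy hypergraph: it selects a set of weighted hyperedges whose union contains every vertex, at minimum total cost. It is an exhaustive search, exponential in the number of hyperedges, and suitable only for tiny illustrative instances. The vertex and edge names are hypothetical. The paper's construction of the hypergraph from predicted and actual requests is not reproduced here.

```python
from itertools import combinations
from typing import Dict, FrozenSet, Hashable, Iterable, Optional, Tuple


def min_cost_hyperedge_cover(
    vertices: Iterable[Hashable],
    edges: Dict[str, Tuple[FrozenSet[Hashable], float]],
) -> Tuple[float, Optional[Tuple[str, ...]]]:
    """Brute-force minimum-cost hyperedge cover.

    `edges` maps an edge name to (set of vertices it covers, cost).
    Returns (total cost, chosen edge names), or (inf, None) if no cover exists.
    """
    targets = set(vertices)
    names = list(edges)
    best_cost, best_choice = float("inf"), None
    for r in range(len(names) + 1):
        for combo in combinations(names, r):
            # Union of all vertices covered by the chosen hyperedges.
            covered = set().union(*(edges[n][0] for n in combo))
            if covered >= targets:
                cost = sum(edges[n][1] for n in combo)
                if cost < best_cost:
                    best_cost, best_choice = cost, combo
    return best_cost, best_choice
```

For example, with vertices {1, 2, 3} and hyperedges a = {1, 2} (cost 1), b = {3} (cost 1), c = {1, 2, 3} (cost 3), the cheapest cover is {a, b} with cost 2, even though the single hyperedge c also covers everything.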

We investigate the possibility of applying quantum machine learning techniques to data analysis, with particular regard to an interesting use case in high-energy physics. We propose an anomaly detection algorithm based on a parametrized quantum circuit. This algorithm has been trained on a classical computer and tested with simulations as well as on real quantum hardware. Tests on NISQ devices have been performed with IBM quantum computers. For the execution on quantum hardware, specific hardware-driven adaptations have been devised and implemented. The quantum anomaly detection algorithm is able to detect simple anomalies, like different characters in handwritten digits, as well as more complex structures. An example of the latter is the anomalous patterns produced in particle detectors by the decay products of long-lived particles at a collider experiment. For the high-energy physics application, performance is estimated in simulation only, as the quantum circuit is not simple enough to be executed on the available quantum hardware. This work demonstrates that it is possible to perform anomaly detection with quantum algorithms. However, the task requires amplitude encoding of classical data, and given the noise level in the available quantum hardware, the current implementation cannot outperform classical anomaly detection algorithms based on deep neural networks.
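Amplitude encoding stores a classical vector in the amplitudes of an n-qubit state. The vector must therefore be padded to length 2^n and rescaled to unit L2 norm. The sketch below performs only this classical preprocessing. The state-preparation circuit is not shown: preparing an arbitrary such state generally requires a circuit whose size grows with the number of amplitudes, which is why the encoding step is demanding on noisy hardware. The function name and the zero-padding choice are assumptions, not the paper's implementation.

```python
import math
from typing import List, Tuple


def amplitude_encode(features: List[float]) -> Tuple[List[float], int]:
    """Return (normalized amplitudes of length 2**n, number of qubits n).

    The vector is zero-padded to the next power of two (at least 2 entries)
    and divided by its L2 norm so that the squared amplitudes sum to 1.
    """
    if not features:
        raise ValueError("cannot encode an empty feature vector")
    n_qubits = max(1, (len(features) - 1).bit_length())  # smallest n with 2**n >= len
    padded = [float(x) for x in features] + [0.0] * (2 ** n_qubits - len(features))
    norm = math.sqrt(sum(x * x for x in padded))
    if norm == 0.0:
        raise ValueError("cannot encode the zero vector")
    return [x / norm for x in padded], n_qubits
```

For instance, `amplitude_encode([3.0, 4.0])` yields the single-qubit amplitudes `[0.6, 0.8]`. A vector of three features needs two qubits, and its fourth amplitude is zero.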

04 Dec 2024

To better understand the mechanics of injection-induced seismicity, we developed a two-dimensional numerical code to simulate both seismic and aseismic slip on non-planar faults and fault networks driven by fluid diffusion along permeable faults. Our approach integrates a boundary element method, which models fault slip governed by rate-and-state friction, with a finite volume method for simulating fluid diffusion along fault networks. We demonstrate the method's capabilities with two illustrative examples: (1) fluid injection inducing slow slip on a primary rough, rate-strengthening fault, which subsequently triggers microseismicity on secondary, smaller faults, and (2) fluid injection on a single fault in a network of intersecting faults, leading to fluid diffusion and reactivation of slip throughout the network. In both cases, the simulated slow slip migrates more rapidly than the fluid pressure diffusion front. The observed migration patterns of microseismicity in the first example and slow slip in the second example resemble diffusion processes but involve diffusivity values that differ significantly from the fault hydraulic diffusivity. These results support the conclusion that the microseismicity front is not a direct proxy for the fluid diffusion front and cannot be used to directly infer hydraulic diffusivity. This is consistent with some decametric-scale in situ experiments of fault activation under controlled conditions. This work highlights the importance of distinguishing between mechanical and hydrological processes in the analysis of induced seismicity. It provides a powerful tool for improving our understanding of fault behavior in response to fluid injection, in particular when a network of faults is involved.
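As a rough illustration of the hydrological half of such a model, the sketch below solves 1D pressure diffusion along a single straight fault with an explicit finite volume scheme. Face fluxes q = -D (p_{i+1} - p_i) / dx are exchanged between neighbouring cells, and each cell's pressure changes by the net flux divided by the cell width. Several simplifications are assumptions, not the paper's setup: constant diffusivity, a first cell held at the injection pressure, a no-flow condition at the far end, and the explicit time step. The boundary element coupling and rate-and-state friction are omitted.

```python
from typing import List


def diffuse_pressure(n_cells: int, dx: float, diffusivity: float, dt: float,
                     n_steps: int, p_injection: float) -> List[float]:
    """Explicit finite-volume solution of dp/dt = D d2p/dx2 along one fault.

    Cell 0 is held at the injection pressure; the last face is impermeable.
    """
    r = diffusivity * dt / dx ** 2
    if r > 0.5:
        raise ValueError(f"explicit scheme unstable: D*dt/dx^2 = {r:.3f} > 0.5")
    p = [0.0] * n_cells
    p[0] = p_injection
    for _ in range(n_steps):
        # Flux through the face between cell i and cell i+1 (positive = towards larger i).
        flux = [-diffusivity * (p[i + 1] - p[i]) / dx for i in range(n_cells - 1)]
        new_p = p[:]
        for i in range(1, n_cells):
            flux_in = flux[i - 1]
            flux_out = flux[i] if i < n_cells - 1 else 0.0  # no-flow at the far end
            new_p[i] = p[i] + dt / dx * (flux_in - flux_out)
        p = new_p  # cell 0 keeps the injection pressure
    return p
```

The stability check enforces the usual explicit-scheme limit D·dt/dx² ≤ 1/2. Under that limit, the computed pressure decreases away from the injection point and rises monotonically over time as the diffusion front advances.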

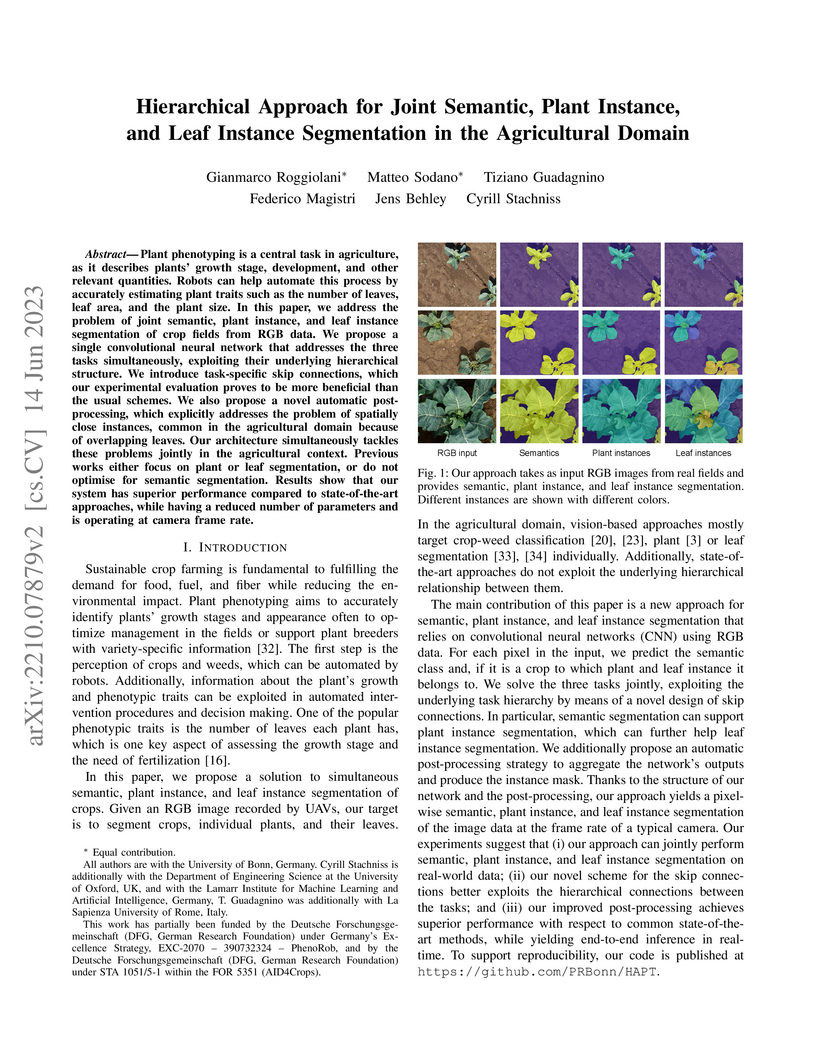

Plant phenotyping is a central task in agriculture, as it describes plants' growth stage, development, and other relevant quantities. Robots can help automate this process by accurately estimating plant traits such as the number of leaves, leaf area, and plant size. In this paper, we address the problem of joint semantic, plant instance, and leaf instance segmentation of crop fields from RGB data. We propose a single convolutional neural network that addresses the three tasks simultaneously, exploiting their underlying hierarchical structure. We introduce task-specific skip connections, which our experimental evaluation shows to be more beneficial than the usual schemes. We also propose a novel automatic post-processing step, which explicitly addresses the problem of spatially close instances, common in the agricultural domain because of overlapping leaves. Our architecture tackles these problems jointly in the agricultural context, whereas previous works either focus on plant or leaf segmentation, or do not optimise for semantic segmentation. Results show that our system outperforms state-of-the-art approaches while having fewer parameters and operating at camera frame rate.

Computed Tomography (CT) plays a pivotal role in medical diagnosis; however, variability across reconstruction kernels hinders data-driven approaches, such as deep learning models, from achieving reliable and generalized performance. To this end, CT data harmonization has emerged as a promising solution to minimize such non-biological variances by standardizing data across different sources or conditions. In this context, Generative Adversarial Networks (GANs) have proved to be a powerful framework for harmonization, framing it as a style-transfer problem. However, GAN-based approaches still face limitations in capturing complex relationships within the images, which are essential for effective harmonization. In this work, we propose a novel texture-aware StarGAN for CT data harmonization, enabling one-to-many translations across different reconstruction kernels. Although the StarGAN model has been successfully applied in other domains, its potential for CT data harmonization remains unexplored. Furthermore, our approach introduces a multi-scale texture loss function that embeds texture information across different spatial and angular scales into the harmonization process, effectively addressing kernel-induced texture variations. We conducted extensive experimentation on a publicly available dataset, utilizing a total of 48,667 chest CT slices from 197 patients distributed over three different reconstruction kernels, demonstrating the superiority of our method over the baseline StarGAN.

This paper presents a theoretical discussion of environmentally conscious job deployment and migration in cloud environments, aiming to minimize the environmental impact of resource provisioning while incorporating sustainability requirements. As the demand for sustainable cloud services grows, it is crucial for cloud customers to select data center operators based on sustainability metrics and to accurately report the ecological footprint of their services. To this end, we analyze sustainability reports and define comprehensive environmental impact profiles for data centers, incorporating key sustainability indicators. We formalize the problem as an optimization model, balancing multiple environmental factors while respecting user preferences. A simulative case study demonstrates the potential of our approach compared to baseline strategies that optimize for single sustainability factors.
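The following sketch shows how multiple environmental indicators and user preferences can be combined when placing a single job. It min-max normalizes each indicator across the data centers that have enough free capacity. It then scores each candidate with a preference-weighted sum and picks the lowest score. This is a greedy weighted-sum heuristic, not the paper's optimization model. The indicator names (`carbon`, `water`), the "lower is better" convention, and the capacity check are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class DataCenter:
    name: str
    indicators: Dict[str, float]  # hypothetical indicators; lower is better for all
    free_capacity: float


def _min_max(values: List[float]) -> List[float]:
    """Rescale values to [0, 1]; a constant column maps to all zeros."""
    lo, hi = min(values), max(values)
    return [0.0 if hi == lo else (v - lo) / (hi - lo) for v in values]


def choose_data_center(centers: List[DataCenter], demand: float,
                       weights: Dict[str, float]) -> Tuple[str, float]:
    """Pick the feasible data center with the lowest preference-weighted impact."""
    feasible = [dc for dc in centers if dc.free_capacity >= demand]
    if not feasible:
        raise ValueError("no data center can host the job")
    scores = [0.0] * len(feasible)
    for key, weight in weights.items():
        column = _min_max([dc.indicators[key] for dc in feasible])
        for i, value in enumerate(column):
            scores[i] += weight * value
    best = min(range(len(feasible)), key=scores.__getitem__)
    return feasible[best].name, scores[best]
```

Shifting the weights from carbon toward water can change which site wins, so user preferences directly steer the placement. Normalizing only over feasible sites keeps an infeasible but very clean site from distorting the scale.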
