METU

Recent advancements in text-to-image generative models, particularly latent diffusion models (LDMs), have demonstrated remarkable capabilities in synthesizing high-quality images from textual prompts. However, achieving identity personalization-ensuring that a model consistently generates subject-specific outputs from limited reference images-remains a fundamental challenge. To address this, we introduce Meta-Low-Rank Adaptation (Meta-LoRA), a novel framework that leverages meta-learning to encode domain-specific priors into LoRA-based identity personalization. Our method introduces a structured three-layer LoRA architecture that separates identity-agnostic knowledge from identity-specific adaptation. In the first stage, the LoRA Meta-Down layers are meta-trained across multiple subjects, learning a shared manifold that captures general identity-related features. In the second stage, only the LoRA-Mid and LoRA-Up layers are optimized to specialize on a given subject, significantly reducing adaptation time while improving identity fidelity. To evaluate our approach, we introduce Meta-PHD, a new benchmark dataset for identity personalization, and compare Meta-LoRA against state-of-the-art methods. Our results demonstrate that Meta-LoRA achieves superior identity retention, computational efficiency, and adaptability across diverse identity conditions. Our code, model weights, and dataset are released on this http URL.

In this paper we investigate learning visual models for the steps of ordinary tasks using weak supervision via instructional narrations and an ordered list of steps instead of strong supervision via temporal annotations. At the heart of our approach is the observation that weakly supervised learning may be easier if a model shares components while learning different steps: `pour egg' should be trained jointly with other tasks involving `pour' and `egg'. We formalize this in a component model for recognizing steps and a weakly supervised learning framework that can learn this model under temporal constraints from narration and the list of steps. Past data does not permit systematic studying of sharing and so we also gather a new dataset, CrossTask, aimed at assessing cross-task sharing. Our experiments demonstrate that sharing across tasks improves performance, especially when done at the component level and that our component model can parse previously unseen tasks by virtue of its compositionality.

Researchers from the Technical University of Denmark and Middle East Technical University developed DDRM-PR, an unsupervised framework that adapts Denoising Diffusion Restoration Models to solve the nonlinear Fourier phase retrieval problem by integrating pre-trained diffusion models with classical alternating projection. This method consistently achieves higher reconstruction fidelity, yielding up to 29.13 PSNR on simulated CelebA-HQ data and nearly doubling the PSNR (13.12 vs 7.85) on experimental scattering media data compared to traditional HIO.

07 Apr 2022

While exam-style questions are a fundamental educational tool serving a

variety of purposes, manual construction of questions is a complex process that

requires training, experience and resources. Automatic question generation (QG)

techniques can be utilized to satisfy the need for a continuous supply of new

questions by streamlining their generation. However, compared to automatic

question answering (QA), QG is a more challenging task. In this work, we

fine-tune a multilingual T5 (mT5) transformer in a multi-task setting for QA,

QG and answer extraction tasks using Turkish QA datasets. To the best of our

knowledge, this is the first academic work that performs automated text-to-text

question generation from Turkish texts. Experimental evaluations show that the

proposed multi-task setting achieves state-of-the-art Turkish question

answering and question generation performance on TQuADv1, TQuADv2 datasets and

XQuAD Turkish split. The source code and the pre-trained models are available

at this https URL

22 May 2025

This paper introduces a machine learning-based approach using Minimum Covariance Determinant (MCD) for detecting stock price crash risk and constructs a firm-specific investor sentiment index. The study finds a positive relationship between firm-specific investor sentiment and crash risk, which is particularly strong for small firms, while large firms show a negative relationship.

VIFT introduces a transformer-based architecture for visual-inertial odometry, leveraging causal transformers for temporal modeling and a Regularized Projective Manifold Gradient (RPMG) approach for robust rotation estimation. The system achieves state-of-the-art performance on the KITTI Odometry Dataset among learning-based methods, demonstrating the effectiveness of its specialized rotation regression and temporal fusion.

3D Gaussian Splatting has recently shown promising results as an alternative scene representation in SLAM systems to neural implicit representations. However, current methods either lack dense depth maps to supervise the mapping process or detailed training designs that consider the scale of the environment. To address these drawbacks, we present IG-SLAM, a dense RGB-only SLAM system that employs robust Dense-SLAM methods for tracking and combines them with Gaussian Splatting. A 3D map of the environment is constructed using accurate pose and dense depth provided by tracking. Additionally, we utilize depth uncertainty in map optimization to improve 3D reconstruction. Our decay strategy in map optimization enhances convergence and allows the system to run at 10 fps in a single process. We demonstrate competitive performance with state-of-the-art RGB-only SLAM systems while achieving faster operation speeds. We present our experiments on the Replica, TUM-RGBD, ScanNet, and EuRoC datasets. The system achieves photo-realistic 3D reconstruction in large-scale sequences, particularly in the EuRoC dataset.

Understanding road topology is crucial for autonomous driving. This paper

introduces TopoBDA (Topology with Bezier Deformable Attention), a novel

approach that enhances road topology comprehension by leveraging Bezier

Deformable Attention (BDA). TopoBDA processes multi-camera 360-degree imagery

to generate Bird's Eye View (BEV) features, which are refined through a

transformer decoder employing BDA. BDA utilizes Bezier control points to drive

the deformable attention mechanism, improving the detection and representation

of elongated and thin polyline structures, such as lane centerlines.

Additionally, TopoBDA integrates two auxiliary components: an instance mask

formulation loss and a one-to-many set prediction loss strategy, to further

refine centerline detection and enhance road topology understanding.

Experimental evaluations on the OpenLane-V2 dataset demonstrate that TopoBDA

outperforms existing methods, achieving state-of-the-art results in centerline

detection and topology reasoning. TopoBDA also achieves the best results on the

OpenLane-V1 dataset in 3D lane detection. Further experiments on integrating

multi-modal data -- such as LiDAR, radar, and SDMap -- show that multimodal

inputs can further enhance performance in road topology understanding.

The Floyd-Warshall algorithm is the most popular algorithm for determining

the shortest paths between all pairs in a graph. It is very a simple and an

elegant algorithm. However, if the graph does not contain any negative weighted

edge, using Dijkstra's shortest path algorithm for every vertex as a source

vertex to produce all pairs shortest paths of the graph works much better than

the Floyd-Warshall algorithm for sparse graphs. Also, for the graphs with

negative weighted edges, with no negative cycle, Johnson's algorithm still

performs significantly better than the Floyd-Warshall algorithm for sparse

graphs. Johnson's algorithm transforms the graph into a non-negative one by

using the Bellman-Ford algorithm, then, applies the Dijkstra's algorithm. Thus,

in general the Floyd-Warshall algorithm becomes very inefficient especially for

sparse graphs. In this paper, we show a simple improvement on the

Floyd-Warshall algorithm that will increases its performance especially for the

sparse graphs, so it can be used instead of more complicated alternatives.

The advancement in computing power has significantly reduced the training

times for deep learning, fostering the rapid development of networks designed

for object recognition. However, the exploration of object utility, which is

the affordance of the object, as opposed to object recognition, has received

comparatively less attention. This work focuses on the problem of exploration

of object affordances using existing networks trained on the object

classification dataset. While pre-trained networks have proven to be

instrumental in transfer learning for classification tasks, this work diverges

from conventional object classification methods. Instead, it employs

pre-trained networks to discern affordance labels without the need for

specialized layers, abstaining from modifying the final layers through the

addition of classification layers. To facilitate the determination of

affordance labels without such modifications, two approaches, i.e. subspace

clustering and manifold curvature methods are tested. These methods offer a

distinct perspective on affordance label recognition. Especially, manifold

curvature method has been successfully tested with nine distinct pre-trained

networks, each achieving an accuracy exceeding 95%. Moreover, it is observed

that manifold curvature and subspace clustering methods explore affordance

labels that are not marked in the ground truth, but object affords in various

cases.

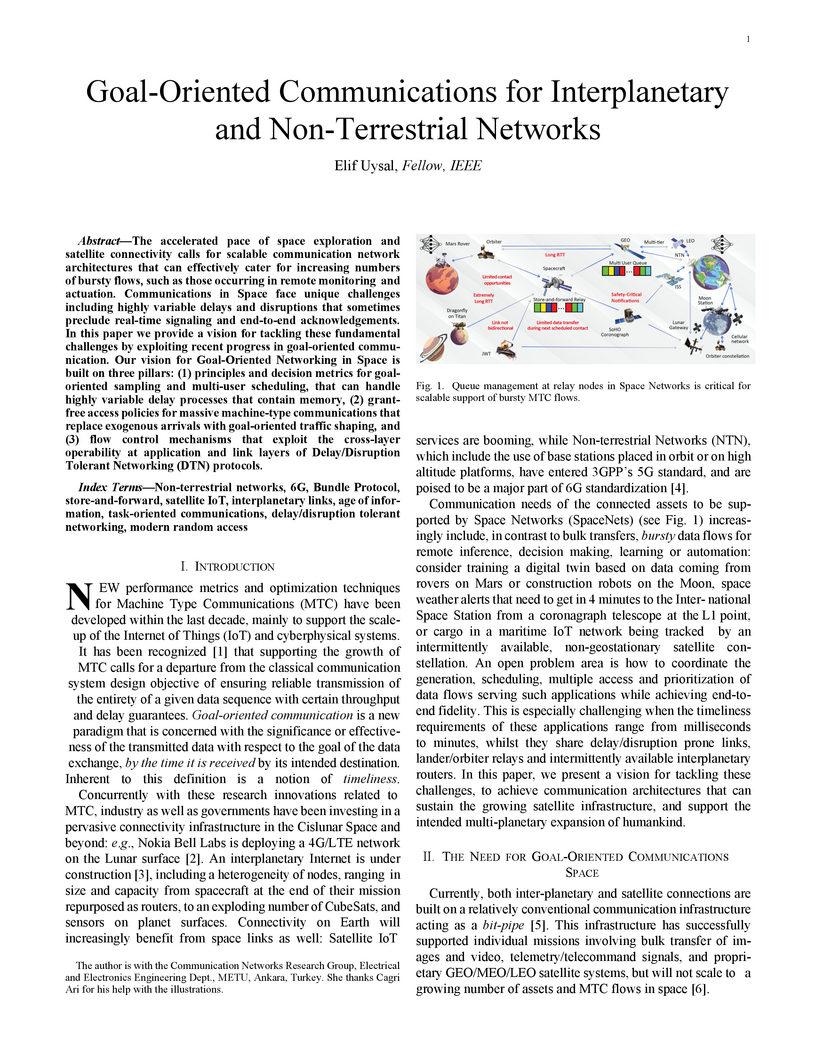

The accelerated pace of space exploration and satellite connectivity calls for scalable communication network architectures that can effectively cater for increasing numbers of bursty flows, such as those occurring in remote monitoring and actuation. Communications in Space face unique challenges including highly variable delays and disruptions that sometimes preclude real-time signaling and end-to-end acknowledgements. In this paper we provide a vision for tackling these fundamental challenges by exploiting recent progress in goal-oriented communication. Our vision for Goal-Oriented Networking in Space is built on three pillars: (1) principles and decision metrics for goal-oriented sampling and multi-user scheduling, that can handle highly variable delay processes that contain memory, (2) grant-free access policies for massive machine-type communications that replace exogenous arrivals with goal-oriented traffic shaping, and (3) flow control mechanisms that exploit the cross-layer operability at application and link layers of Delay/Disruption Tolerant Networking (DTN) protocols.

Ranking-based loss functions, such as Average Precision Loss and Rank&Sort Loss, outperform widely used score-based losses in object detection. These loss functions better align with the evaluation criteria, have fewer hyperparameters, and offer robustness against the imbalance between positive and negative classes. However, they require pairwise comparisons among P positive and N negative predictions, introducing a time complexity of O(PN), which is prohibitive since N is often large (e.g., 108 in ATSS). Despite their advantages, the widespread adoption of ranking-based losses has been hindered by their high time and space complexities.

In this paper, we focus on improving the efficiency of ranking-based loss functions. To this end, we propose Bucketed Ranking-based Losses which group negative predictions into B buckets (B≪N) in order to reduce the number of pairwise comparisons so that time complexity can be reduced. Our method enhances the time complexity, reducing it to O(max(Nlog(N),P2)). To validate our method and show its generality, we conducted experiments on 2 different tasks, 3 different datasets, 7 different detectors. We show that Bucketed Ranking-based (BR) Losses yield the same accuracy with the unbucketed versions and provide 2× faster training on average. We also train, for the first time, transformer-based object detectors using ranking-based losses, thanks to the efficiency of our BR. When we train CoDETR, a state-of-the-art transformer-based object detector, using our BR Loss, we consistently outperform its original results over several different backbones. Code is available at this https URL

Class-wise characteristics of training examples affect the performance of

deep classifiers. A well-studied example is when the number of training

examples of classes follows a long-tailed distribution, a situation that is

likely to yield sub-optimal performance for under-represented classes. This

class imbalance problem is conventionally addressed by approaches relying on

the class-wise cardinality of training examples, such as data resampling. In

this paper, we demonstrate that considering solely the cardinality of classes

does not cover all issues causing class imbalance. To measure class imbalance,

we propose "Class Uncertainty" as the average predictive uncertainty of the

training examples, and we show that this novel measure captures the differences

across classes better than cardinality. We also curate SVCI-20 as a novel

dataset in which the classes have equal number of training examples but they

differ in terms of their hardness; thereby causing a type of class imbalance

which cannot be addressed by the approaches relying on cardinality. We

incorporate our "Class Uncertainty" measure into a diverse set of ten class

imbalance mitigation methods to demonstrate its effectiveness on long-tailed

datasets as well as on our SVCI-20. Code and datasets will be made available.

13 Feb 2018

We study the problem of minimizing the time-average expected Age of Information for status updates sent by an energy-harvesting source with a finite-capacity battery. In prior literature, optimal policies were observed to have a threshold structure under Poisson energy arrivals, for the special case of a unit-capacity battery. In this paper, we generalize this result to any (integer) battery capacity, and explicitly characterize the threshold structure. We obtain tools to derive the optimal policy for arbitrary energy buffer (i.e. battery) size. One of these results is the unexpected equivalence of the minimum average AoI and the optimal threshold for the highest energy state.

Mixed-signal neuromorphic processors provide extremely low-power operation

for edge inference workloads, taking advantage of sparse asynchronous

computation within Spiking Neural Networks (SNNs). However, deploying robust

applications to these devices is complicated by limited controllability over

analog hardware parameters, as well as unintended parameter and dynamical

variations of analog circuits due to fabrication non-idealities. Here we

demonstrate a novel methodology for ofDine training and deployment of spiking

neural networks (SNNs) to the mixed-signal neuromorphic processor DYNAP-SE2.

The methodology utilizes gradient-based training using a differentiable

simulation of the mixed-signal device, coupled with an unsupervised weight

quantization method to optimize the network's parameters. Parameter noise

injection during training provides robustness to the effects of quantization

and device mismatch, making the method a promising candidate for real-world

applications under hardware constraints and non-idealities. This work extends

Rockpool, an open-source deep-learning library for SNNs, with support for

accurate simulation of mixed-signal SNN dynamics. Our approach simplifies the

development and deployment process for the neuromorphic community, making

mixed-signal neuromorphic processors more accessible to researchers and

developers.

29 Apr 2023

We consider a network with multiple sources and a base station that send time-sensitive information to remote clients. The Age of Incorrect Information (AoII) captures the freshness of the informative pieces of status update packets at the destinations. We derive the closed-form Whittle Index formulation for a push-based multi-user network over unreliable channels with AoII-dependent cost functions. We also propose a new semantic performance metric for pull-based systems, named the Age of Incorrect Information at Query (QAoII), that quantifies AoII at particular instants when clients generate queries. Simulation results demonstrate that the proposed Whittle Index-based scheduling policies for both AoII and QAoII-dependent cost functions are superior to benchmark policies, and adopting query-aware scheduling can significantly improve the timeliness for scenarios where a single user or multiple users are scheduled at a time.

Domain adaptation seeks to leverage the abundant label information in a source domain to improve classification performance in a target domain with limited labels. While the field has seen extensive methodological development, its theoretical foundations remain relatively underexplored. Most existing theoretical analyses focus on simplified settings where the source and target domains share the same input space and relate target-domain performance to measures of domain discrepancy. Although insightful, these analyses may not fully capture the behavior of modern approaches that align domains into a shared space via feature transformations. In this paper, we present a comprehensive theoretical study of domain adaptation algorithms based on domain alignment. We consider the joint learning of domain-aligning feature transformations and a shared classifier in a semi-supervised setting. We first derive generalization bounds in a broad setting, in terms of covering numbers of the relevant function classes. We then extend our analysis to characterize the sample complexity of domain-adaptive neural networks employing maximum mean discrepancy (MMD) or adversarial objectives. Our results rely on a rigorous analysis of the covering numbers of these architectures. We show that, for both MMD-based and adversarial models, the sample complexity admits an upper bound that scales quadratically with network depth and width. Furthermore, our analysis suggests that in semi-supervised settings, robustness to limited labeled target data can be achieved by scaling the target loss proportionally to the square root of the number of labeled target samples. Experimental evaluation in both shallow and deep settings lends support to our theoretical findings.

This paper tackles the problem of zero-shot sign language recognition (ZSSLR), where the goal is to leverage models learned over the seen sign classes to recognize the instances of unseen sign classes. In this context, readily available textual sign descriptions and attributes collected from sign language dictionaries are utilized as semantic class representations for knowledge transfer. For this novel problem setup, we introduce three benchmark datasets with their accompanying textual and attribute descriptions to analyze the problem in detail. Our proposed approach builds spatiotemporal models of body and hand regions. By leveraging the descriptive text and attribute embeddings along with these visual representations within a zero-shot learning framework, we show that textual and attribute based class definitions can provide effective knowledge for the recognition of previously unseen sign classes. We additionally introduce techniques to analyze the influence of binary attributes in correct and incorrect zero-shot predictions. We anticipate that the introduced approaches and the accompanying datasets will provide a basis for further exploration of zero-shot learning in sign language recognition.

A generalised Thurston-Bennequin invariant for a Q-singularity of a real

algebraic variety is defined as a linking form on the homologies of the real

link of the singularity. The main goal of this paper is to present a method to

calculate the linking form in terms of the very good resolution graph of a real

normal unibranch surface singularity. For such singularities, the value of the

linking form is the Thurston-Bennequin number of the real link of the

singularity. As a special case of unibranch surface singularities, the

behaviour of the linking form is investigated on the Brieskorn double points

x^m+y^n\pm z^2=0.

18 Apr 2015

In this paper, a data stream architecture is presented for electrical power quality (PQ) which is called PQStream. PQStream is developed to process and manage time-evolving data coming from the country-wide mobile measurements of electrical PQ parameters of the Turkish Electricity Transmission System. It is a full-fledged system with a data measurement module which carries out processing of continuous PQ data, a stream database which stores the output of the measurement module, and finally a Graphical User Interface for retrospective analysis of the PQ data stored in the stream database. The presented model is deployed and is available to PQ experts, academicians and researchers of the area. As further studies, data mining methods such as classification and clustering algorithms will be applied in order to deduce useful PQ information from this database of PQ data.

There are no more papers matching your filters at the moment.