MIIT Key Laboratory of Pattern Analysis and Machine Intelligence

LiMuon is a light and fast Muon optimizer that reduces the sample complexity to O(ϵ⁻³) under generalized smoothness and significantly decreases memory consumption through randomized low-rank approximations. It empirically achieves superior performance and memory efficiency when training large models like DistilGPT2 and ViT.

Model editing aims to efficiently alter the behavior of Large Language Models (LLMs) within a desired scope, while ensuring no adverse impact on other inputs. Recent years have witnessed various model editing methods been proposed. However, these methods either exhibit poor overall performance or struggle to strike a balance between generalization and locality. We propose MEMoE, a model editing adapter utilizing a Mixture of Experts (MoE) architecture with a knowledge anchor routing strategy. MEMoE updates knowledge using a bypass MoE structure, keeping the original parameters unchanged to preserve the general ability of LLMs. And, the knowledge anchor routing ensures that inputs requiring similar knowledge are routed to the same expert, thereby enhancing the generalization of the updated knowledge. Experimental results show the superiority of our approach over both batch editing and sequential batch editing tasks, exhibiting exceptional overall performance alongside outstanding balance between generalization and locality. Our code will be available.

In retrieval-augmented generation (RAG) question answering systems, generating citations for large language model (LLM) outputs enhances verifiability and helps users identify potential hallucinations. However, we observe two problems in the citations produced by existing attribution methods. First, the citations are typically provided at the sentence or even paragraph level. Long sentences or paragraphs may include a substantial amount of irrelevant content. Second, sentence-level citations may omit information that is essential for verifying the output, forcing users to read the surrounding context. In this paper, we propose generating sub-sentence citations that are both concise and sufficient, thereby reducing the effort required by users to confirm the correctness of the generated output. To this end, we first develop annotation guidelines for such citations and construct a corresponding dataset. Then, we propose an attribution framework for generating citations that adhere to our standards. This framework leverages LLMs to automatically generate fine-tuning data for our task and employs a credit model to filter out low-quality examples. Our experiments on the constructed dataset demonstrate that the propose approach can generate high-quality and more readable citations.

Researchers at Alibaba Group and Shanghai Jiao Tong University developed MAAB, a cooperative-competitive multi-agent framework for auto-bidding in online advertising. The framework balances advertiser utility, social welfare, and platform revenue by using a mixed credit assignment strategy, introducing personalized 'bar agents' to preserve revenue, and employing a mean-field approach for scalability, demonstrating improved social welfare in online A/B tests on Alibaba's platform.

The advancement of Large Language Models (LLMs) has led to significant enhancements in the performance of chatbot systems. Many researchers have dedicated their efforts to the development of bringing characteristics to chatbots. While there have been commercial products for developing role-driven chatbots using LLMs, it is worth noting that academic research in this area remains relatively scarce. Our research focuses on investigating the performance of LLMs in constructing Characteristic AI Agents by simulating real-life individuals across different settings. Current investigations have primarily focused on act on roles with simple profiles. In response to this research gap, we create a benchmark for the characteristic AI agents task, including dataset, techniques, and evaluation metrics. A dataset called ``Character100'' is built for this benchmark, comprising the most-visited people on Wikipedia for language models to role-play. With the constructed dataset, we conduct comprehensive assessment of LLMs across various settings. In addition, we devise a set of automatic metrics for quantitative performance evaluation. The experimental results underscore the potential directions for further improvement in the capabilities of LLMs in constructing characteristic AI agents. The benchmark is available at this https URL.

The HELPD framework, developed by researchers at Nanjing University of Aeronautics and Astronautics and The Chinese University of Hong Kong, introduces a method to mitigate multimodal hallucination in Large Vision-Language Models. It integrates hierarchical feedback learning with a vision-enhanced penalty decoding strategy, leading to over 15% reduction in hallucination across various LVLMs with minimal training.

The extraction of essential news elements through the 5W1H framework

(\textit{What}, \textit{When}, \textit{Where}, \textit{Why}, \textit{Who}, and

\textit{How}) is critical for event extraction and text summarization. The

advent of Large language models (LLMs) such as ChatGPT presents an opportunity

to address language-related tasks through simple prompts without fine-tuning

models with much time. While ChatGPT has encountered challenges in processing

longer news texts and analyzing specific attributes in context, especially

answering questions about \textit{What}, \textit{Why}, and \textit{How}. The

effectiveness of extraction tasks is notably dependent on high-quality

human-annotated datasets. However, the absence of such datasets for the 5W1H

extraction increases the difficulty of fine-tuning strategies based on

open-source LLMs. To address these limitations, first, we annotate a

high-quality 5W1H dataset based on four typical news corpora

(\textit{CNN/DailyMail}, \textit{XSum}, \textit{NYT}, \textit{RA-MDS}); second,

we design several strategies from zero-shot/few-shot prompting to efficient

fine-tuning to conduct 5W1H aspects extraction from the original news

documents. The experimental results demonstrate that the performance of the

fine-tuned models on our labelled dataset is superior to the performance of

ChatGPT. Furthermore, we also explore the domain adaptation capability by

testing the source-domain (e.g. NYT) models on the target domain corpus (e.g.

CNN/DailyMail) for the task of 5W1H extraction.

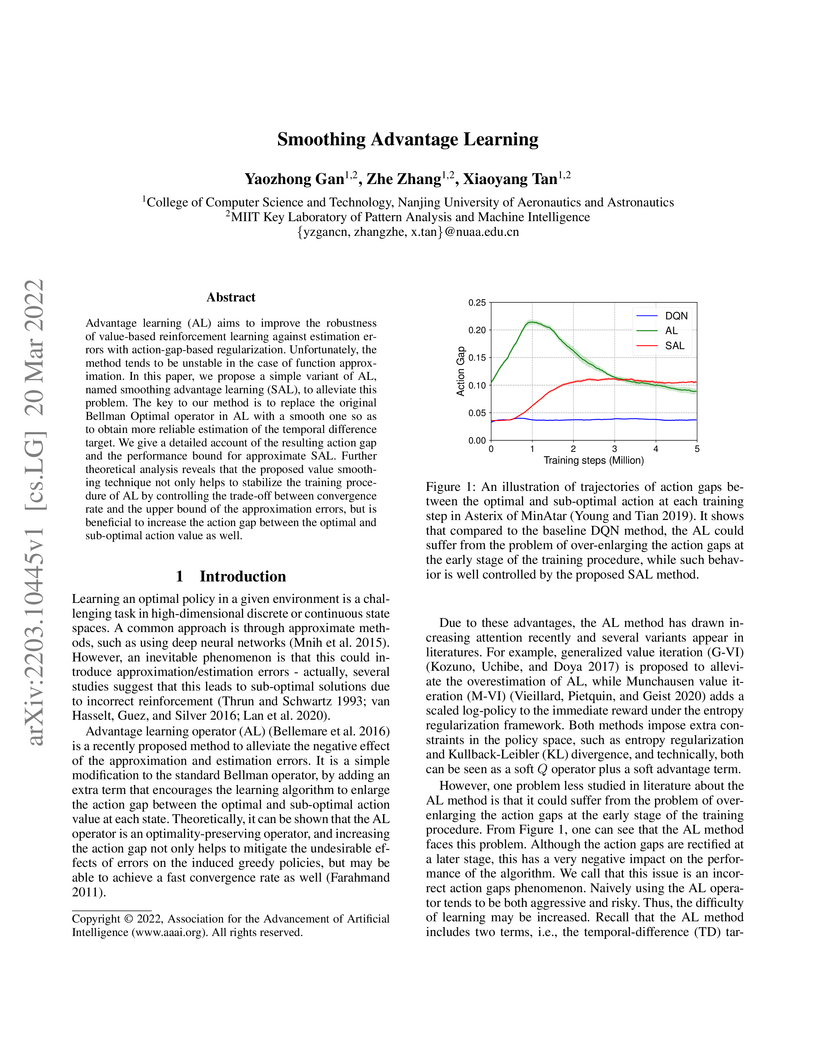

Advantage learning (AL) aims to improve the robustness of value-based

reinforcement learning against estimation errors with action-gap-based

regularization. Unfortunately, the method tends to be unstable in the case of

function approximation. In this paper, we propose a simple variant of AL, named

smoothing advantage learning (SAL), to alleviate this problem. The key to our

method is to replace the original Bellman Optimal operator in AL with a smooth

one so as to obtain more reliable estimation of the temporal difference target.

We give a detailed account of the resulting action gap and the performance

bound for approximate SAL. Further theoretical analysis reveals that the

proposed value smoothing technique not only helps to stabilize the training

procedure of AL by controlling the trade-off between convergence rate and the

upper bound of the approximation errors, but is beneficial to increase the

action gap between the optimal and sub-optimal action value as well.

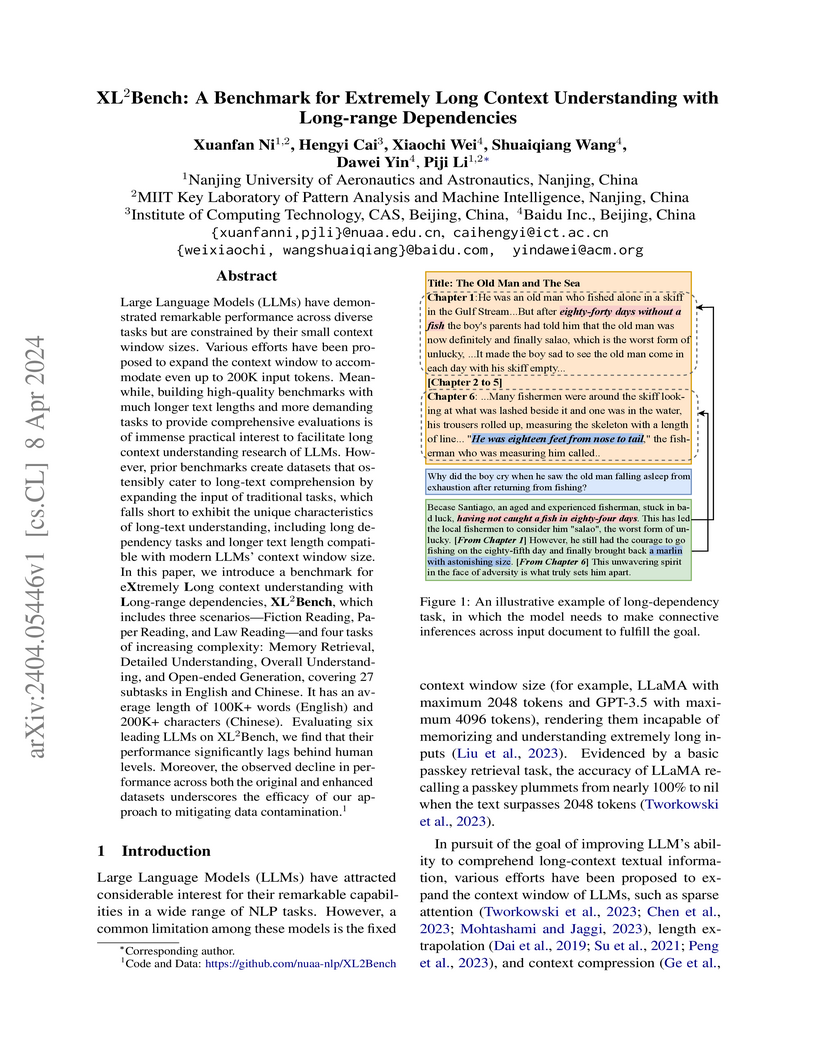

XL2Bench introduces a benchmark for evaluating large language models on extremely long context understanding, featuring texts over 100K words and tasks requiring long-range dependencies across fiction, academic, and legal domains. It reveals that current state-of-the-art LLMs struggle with holistic comprehension and accurately retrieving information from vast inputs, performing significantly below human levels.

Brain-to-Image reconstruction aims to recover visual stimuli perceived by

humans from brain activity. However, the reconstructed visual stimuli often

missing details and semantic inconsistencies, which may be attributed to

insufficient semantic information. To address this issue, we propose an

approach named Fine-grained Brain-to-Image reconstruction (FgB2I), which

employs fine-grained text as bridge to improve image reconstruction. FgB2I

comprises three key stages: detail enhancement, decoding fine-grained text

descriptions, and text-bridged brain-to-image reconstruction. In the

detail-enhancement stage, we leverage large vision-language models to generate

fine-grained captions for visual stimuli and experimentally validate its

importance. We propose three reward metrics (object accuracy, text-image

semantic similarity, and image-image semantic similarity) to guide the language

model in decoding fine-grained text descriptions from fMRI signals. The

fine-grained text descriptions can be integrated into existing reconstruction

methods to achieve fine-grained Brain-to-Image reconstruction.

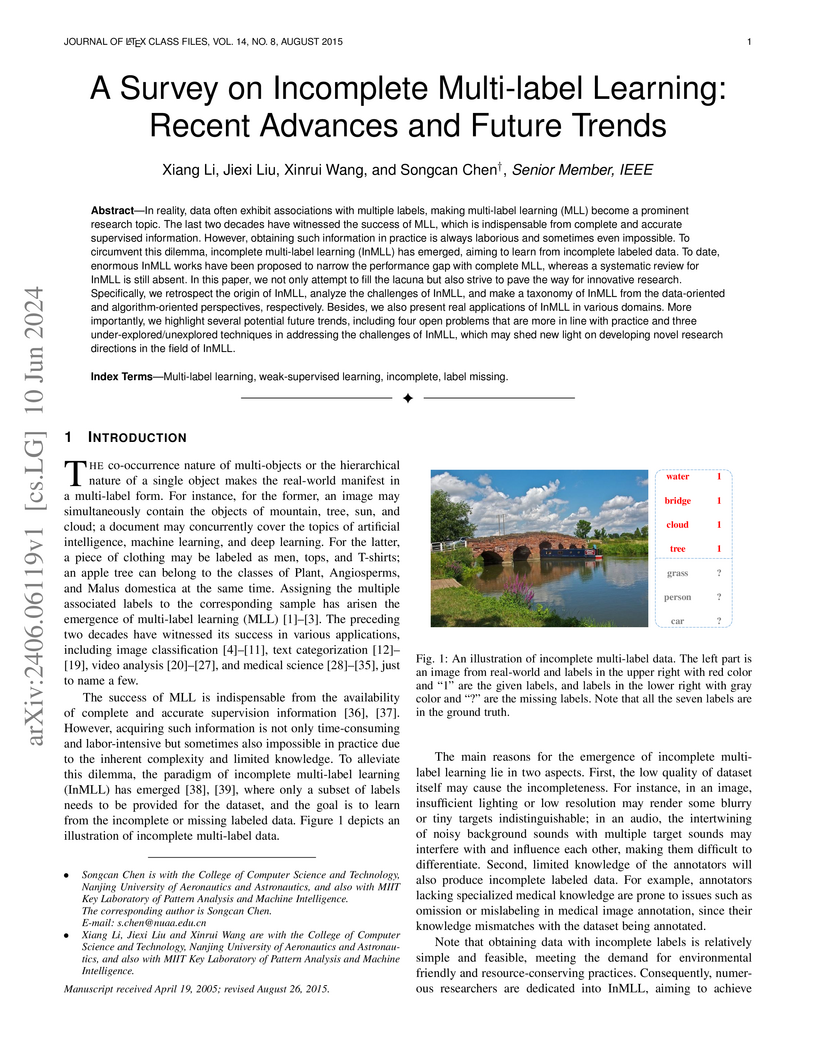

In reality, data often exhibit associations with multiple labels, making multi-label learning (MLL) become a prominent research topic. The last two decades have witnessed the success of MLL, which is indispensable from complete and accurate supervised information. However, obtaining such information in practice is always laborious and sometimes even impossible. To circumvent this dilemma, incomplete multi-label learning (InMLL) has emerged, aiming to learn from incomplete labeled data. To date, enormous InMLL works have been proposed to narrow the performance gap with complete MLL, whereas a systematic review for InMLL is still absent. In this paper, we not only attempt to fill the lacuna but also strive to pave the way for innovative research. Specifically, we retrospect the origin of InMLL, analyze the challenges of InMLL, and make a taxonomy of InMLL from the data-oriented and algorithm-oriented perspectives, respectively. Besides, we also present real applications of InMLL in various domains. More importantly, we highlight several potential future trends, including four open problems that are more in line with practice and three under-explored/unexplored techniques in addressing the challenges of InMLL, which may shed new light on developing novel research directions in the field of InMLL.

Large models recently are widely applied in artificial intelligence, so efficient training of large models has received widespread attention. More recently, a useful Muon optimizer is specifically designed for matrix-structured parameters of large models. Although some works have begun to studying Muon optimizer, the existing Muon and its variants still suffer from high sample complexity or high memory for large models. To fill this gap, we propose a light and fast Muon (LiMuon) optimizer for training large models, which builds on the momentum-based variance reduced technique and randomized Singular Value Decomposition (SVD). Our LiMuon optimizer has a lower memory than the current Muon and its variants. Moreover, we prove that our LiMuon has a lower sample complexity of O(ϵ−3) for finding an ϵ-stationary solution of non-convex stochastic optimization under the smooth condition. Recently, the existing convergence analysis of Muon optimizer mainly relies on the strict Lipschitz smooth assumption, while some artificial intelligence tasks such as training large language models (LLMs) do not satisfy this condition. We also proved that our LiMuon optimizer has a sample complexity of O(ϵ−3) under the generalized smooth condition. Numerical experimental results on training DistilGPT2 and ViT models verify efficiency of our LiMuon optimizer.

Minimax optimization recently is widely applied in many machine learning

tasks such as generative adversarial networks, robust learning and

reinforcement learning. In the paper, we study a class of nonconvex-nonconcave

minimax optimization with nonsmooth regularization, where the objective

function is possibly nonconvex on primal variable x, and it is nonconcave and

satisfies the Polyak-Lojasiewicz (PL) condition on dual variable y. Moreover,

we propose a class of enhanced momentum-based gradient descent ascent methods

(i.e., MSGDA and AdaMSGDA) to solve these stochastic nonconvex-PL minimax

problems. In particular, our AdaMSGDA algorithm can use various adaptive

learning rates in updating the variables x and y without relying on any

specifical types. Theoretically, we prove that our methods have the best known

sample complexity of O~(ϵ−3) only requiring one sample at

each loop in finding an ϵ-stationary solution. Some numerical

experiments on PL-game and Wasserstein-GAN demonstrate the efficiency of our

proposed methods.

As a newly emerging unsupervised learning paradigm, self-supervised learning

(SSL) recently gained widespread attention, which usually introduces a pretext

task without manual annotation of data. With its help, SSL effectively learns

the feature representation beneficial for downstream tasks. Thus the pretext

task plays a key role. However, the study of its design, especially its essence

currently is still open. In this paper, we borrow a multi-view perspective to

decouple a class of popular pretext tasks into a combination of view data

augmentation (VDA) and view label classification (VLC), where we attempt to

explore the essence of such pretext task while providing some insights into its

design. Specifically, a simple multi-view learning framework is specially

designed (SSL-MV), which assists the feature learning of downstream tasks

(original view) through the same tasks on the augmented views. SSL-MV focuses

on VDA while abandons VLC, empirically uncovering that it is VDA rather than

generally considered VLC that dominates the performance of such SSL.

Additionally, thanks to replacing VLC with VDA tasks, SSL-MV also enables an

integrated inference combining the predictions from the augmented views,

further improving the performance. Experiments on several benchmark datasets

demonstrate its advantages.

Unsupervised Domain Adaptation (UDA) aims to classify unlabeled target domain by transferring knowledge from labeled source domain with domain shift. Most of the existing UDA methods try to mitigate the adverse impact induced by the shift via reducing domain discrepancy. However, such approaches easily suffer a notorious mode collapse issue due to the lack of labels in target domain. Naturally, one of the effective ways to mitigate this issue is to reliably estimate the pseudo labels for target domain, which itself is hard. To overcome this, we propose a novel UDA method named Progressive Adaptation of Subspaces approach (PAS) in which we utilize such an intuition that appears much reasonable to gradually obtain reliable pseudo labels. Speci fically, we progressively and steadily refine the shared subspaces as bridge of knowledge transfer by adaptively anchoring/selecting and leveraging those target samples with reliable pseudo labels. Subsequently, the refined subspaces can in turn provide more reliable pseudo-labels of the target domain, making the mode collapse highly mitigated. Our thorough evaluation demonstrates that PAS is not only effective for common UDA, but also outperforms the state-of-the arts for more challenging Partial Domain Adaptation (PDA) situation, where the source label set subsumes the target one.

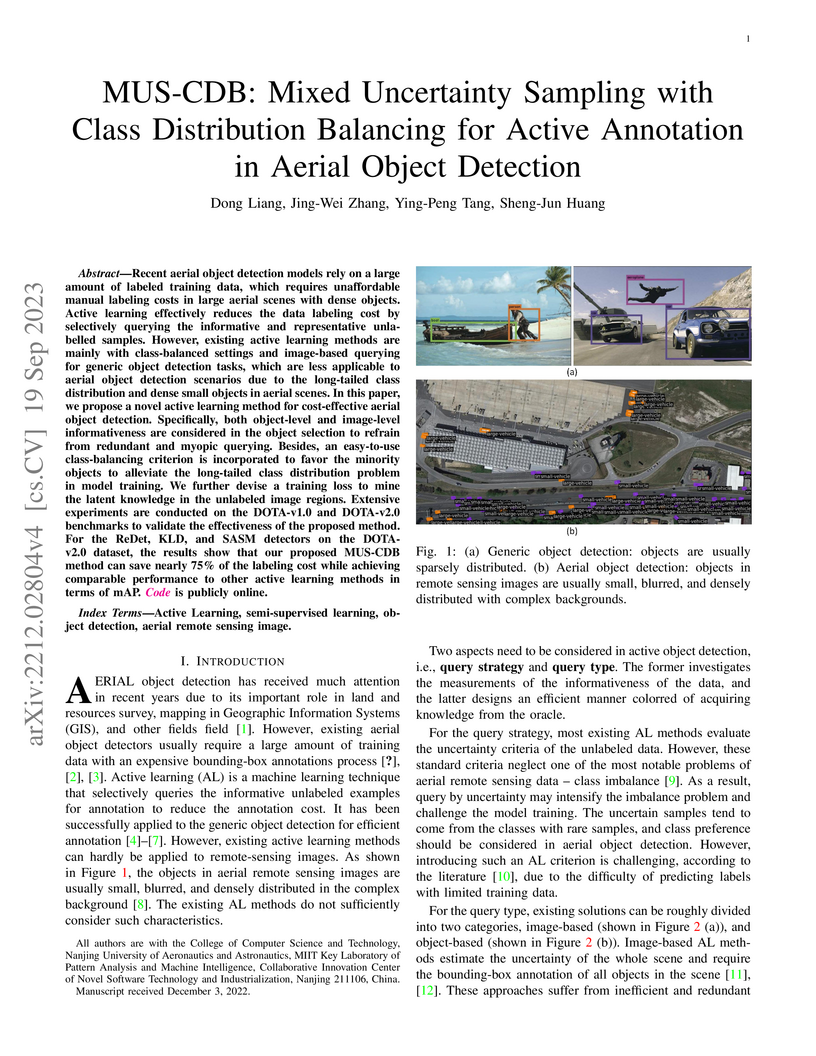

Recent aerial object detection models rely on a large amount of labeled training data, which requires unaffordable manual labeling costs in large aerial scenes with dense objects. Active learning effectively reduces the data labeling cost by selectively querying the informative and representative unlabelled samples. However, existing active learning methods are mainly with class-balanced settings and image-based querying for generic object detection tasks, which are less applicable to aerial object detection scenarios due to the long-tailed class distribution and dense small objects in aerial scenes. In this paper, we propose a novel active learning method for cost-effective aerial object detection. Specifically, both object-level and image-level informativeness are considered in the object selection to refrain from redundant and myopic querying. Besides, an easy-to-use class-balancing criterion is incorporated to favor the minority objects to alleviate the long-tailed class distribution problem in model training. We further devise a training loss to mine the latent knowledge in the unlabeled image regions. Extensive experiments are conducted on the DOTA-v1.0 and DOTA-v2.0 benchmarks to validate the effectiveness of the proposed method. For the ReDet, KLD, and SASM detectors on the DOTA-v2.0 dataset, the results show that our proposed MUS-CDB method can save nearly 75\% of the labeling cost while achieving comparable performance to other active learning methods in terms of this http URL is publicly online (this https URL).

In image anomaly detection, significant advancements have been made using un-

and self-supervised methods with datasets containing only normal samples.

However, these approaches often struggle with fine-grained anomalies. This

paper introduces \textbf{GRAD}: Bi-\textbf{G}rid \textbf{R}econstruction for

Image \textbf{A}nomaly \textbf{D}etection, which employs two continuous grids

to enhance anomaly detection from both normal and abnormal perspectives. In

this work: 1) Grids as feature repositories that improve generalization and

mitigate the Identical Shortcut (IS) issue; 2) An abnormal feature grid that

refines normal feature boundaries, boosting detection of fine-grained defects;

3) The Feature Block Paste (FBP) module, which synthesizes various anomalies at

the feature level for quick abnormal grid deployment. GRAD's robust

representation capabilities also allow it to handle multiple classes with a

single model. Evaluations on datasets like MVTecAD, VisA, and GoodsAD show

significant performance improvements in fine-grained anomaly detection. GRAD

excels in overall accuracy and in discerning subtle differences, demonstrating

its superiority over existing methods.

Like k-means and Gaussian Mixture Model (GMM), fuzzy c-means (FCM) with soft partition has also become a popular clustering algorithm and still is extensively studied. However, these algorithms and their variants still suffer from some difficulties such as determination of the optimal number of clusters which is a key factor for clustering quality. A common approach for overcoming this difficulty is to use the trial-and-validation strategy, i.e., traversing every integer from large number like n to 2 until finding the optimal number corresponding to the peak value of some cluster validity index. But it is scarcely possible to naturally construct an adaptively agglomerative hierarchical cluster structure as using the trial-and-validation strategy. Even possible, existing different validity indices also lead to different number of clusters. To effectively mitigate the problems while motivated by convex clustering, in this paper we present a Centroid Auto-Fused Hierarchical Fuzzy c-means method (CAF-HFCM) whose optimization procedure can automatically agglomerate to form a cluster hierarchy, more importantly, yielding an optimal number of clusters without resorting to any validity index. Although a recently-proposed robust-learning fuzzy c-means (RL-FCM) can also automatically obtain the best number of clusters without the help of any validity index, so-involved 3 hyper-parameters need to adjust expensively, conversely, our CAF-HFCM involves just 1 hyper-parameter which makes the corresponding adjustment is relatively easier and more operational. Further, as an additional benefit from our optimization objective, the CAF-HFCM effectively reduces the sensitivity to the initialization of clustering performance. Moreover, our proposed CAF-HFCM method is able to be straightforwardly extended to various variants of FCM.

In addition to high accuracy, robustness is becoming increasingly important for machine learning models in various applications. Recently, much research has been devoted to improving the model robustness by training with noise perturbations. Most existing studies assume a fixed perturbation level for all training examples, which however hardly holds in real tasks. In fact, excessive perturbations may destroy the discriminative content of an example, while deficient perturbations may fail to provide helpful information for improving the robustness. Motivated by this observation, we propose to adaptively adjust the perturbation levels for each example in the training process. Specifically, a novel active learning framework is proposed to allow the model to interactively query the correct perturbation level from human experts. By designing a cost-effective sampling strategy along with a new query type, the robustness can be significantly improved with a few queries. Both theoretical analysis and experimental studies validate the effectiveness of the proposed approach.

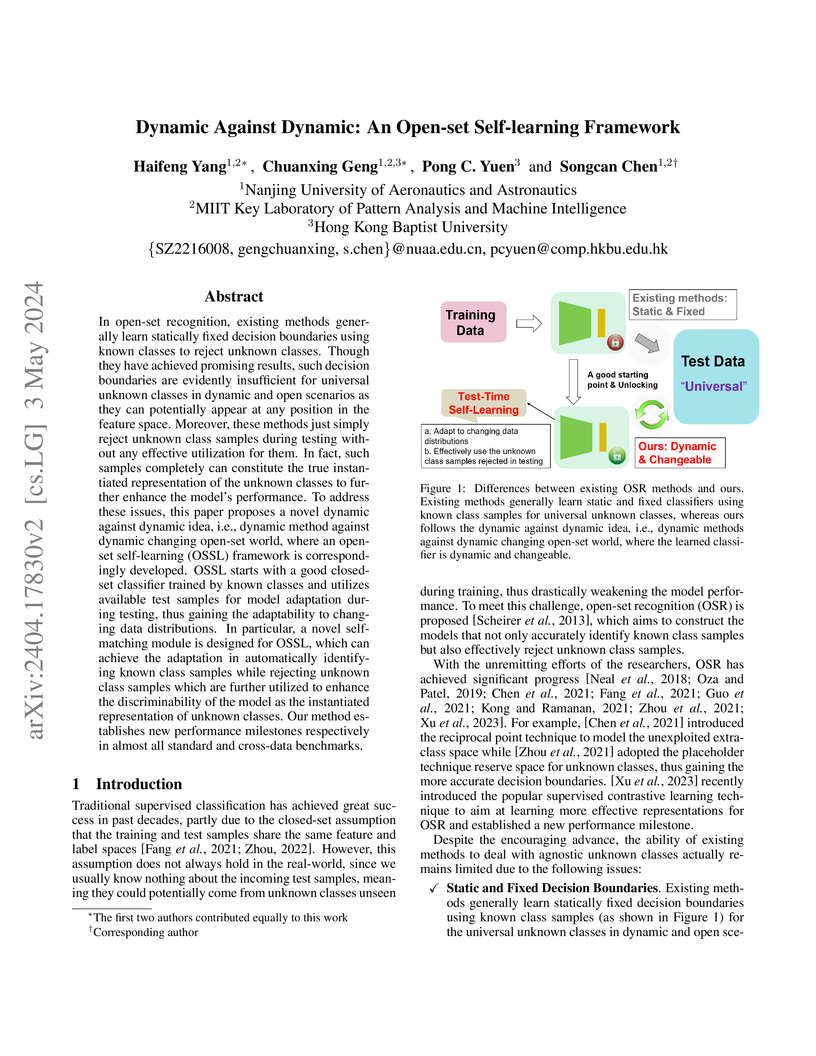

In open-set recognition, existing methods generally learn statically fixed decision boundaries using known classes to reject unknown classes. Though they have achieved promising results, such decision boundaries are evidently insufficient for universal unknown classes in dynamic and open scenarios as they can potentially appear at any position in the feature space. Moreover, these methods just simply reject unknown class samples during testing without any effective utilization for them. In fact, such samples completely can constitute the true instantiated representation of the unknown classes to further enhance the model's performance. To address these issues, this paper proposes a novel dynamic against dynamic idea, i.e., dynamic method against dynamic changing open-set world, where an open-set self-learning (OSSL) framework is correspondingly developed. OSSL starts with a good closed-set classifier trained by known classes and utilizes available test samples for model adaptation during testing, thus gaining the adaptability to changing data distributions. In particular, a novel self-matching module is designed for OSSL, which can achieve the adaptation in automatically identifying known class samples while rejecting unknown class samples which are further utilized to enhance the discriminability of the model as the instantiated representation of unknown classes. Our method establishes new performance milestones respectively in almost all standard and cross-data benchmarks.

There are no more papers matching your filters at the moment.