Missouri University of Science and Technology

Researchers from multiple US universities systematically evaluated large language models as academic peer reviewers. They identified consistent rating inflation (an average of 1.16 points higher than human reviewers), divergences in evaluative focus compared with humans, and significant susceptibility to covert prompt injection attacks that can force specific ratings (a 30% success rate at forcing a 10/10 rating) or suppress weaknesses.

A comparative study evaluated 10 actively maintained open-source PDF parsing tools for full-text extraction and table detection across six diverse document categories using the DocLayNet dataset. The research found that rule-based tools perform effectively for general text in most document types, while learning-based models like Nougat and Table Transformer achieve superior performance for scientific documents and complex table detection respectively.

Convolutional neural networks (CNNs) have achieved resounding success in many computer vision tasks such as image classification and object detection. However, their performance degrades rapidly on tougher tasks where images are of low resolution or objects are small. In this paper, we point out that this is rooted in a flawed yet common design in existing CNN architectures, namely the use of strided convolution and/or pooling layers, which results in a loss of fine-grained information and the learning of less effective feature representations. To this end, we propose a new CNN building block called SPD-Conv to replace each strided convolution layer and each pooling layer (thus eliminating them altogether). SPD-Conv consists of a space-to-depth (SPD) layer followed by a non-strided convolution (Conv) layer, and can be applied in most, if not all, CNN architectures. We explain this new design under the two most representative computer vision tasks: object detection and image classification. We then create new CNN architectures by applying SPD-Conv to YOLOv5 and ResNet, and empirically show that our approach significantly outperforms state-of-the-art deep learning models, especially on tougher tasks with low-resolution images and small objects. We have open-sourced our code at this https URL.
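The SPD-Conv block described above can be sketched in plain Python. This is a minimal illustration, not the authors' released code: the space-to-depth step rearranges every `scale x scale` spatial block into channels, so spatial size shrinks without discarding any values, and the follow-up convolution runs with stride 1. As a simplifying assumption, the convolution here is a 1x1 (pointwise) one; the kernel size used in the paper may differ, and the names `space_to_depth` and `pointwise_conv` are hypothetical.

```python
from typing import List

Tensor3D = List[List[List[float]]]  # indexed as [channel][row][col]


def space_to_depth(x: Tensor3D, scale: int = 2) -> Tensor3D:
    """Move each scale x scale spatial block into channels; no value is discarded."""
    channels, height, width = len(x), len(x[0]), len(x[0][0])
    if height % scale or width % scale:
        raise ValueError("spatial size must be divisible by scale")
    out: Tensor3D = []
    # One sub-map per (row offset, column offset) pair, for every input channel.
    for i in range(scale):
        for j in range(scale):
            for c in range(channels):
                out.append([row[j::scale] for row in x[c][i::scale]])
    return out


def pointwise_conv(x: Tensor3D, weights: List[List[float]]) -> Tensor3D:
    """Stride-1, 1x1 convolution: each output channel is a weighted sum of input channels."""
    height, width = len(x[0]), len(x[0][0])
    return [
        [[sum(w[c] * x[c][r][col] for c in range(len(x))) for col in range(width)]
         for r in range(height)]
        for w in weights
    ]


# A 1-channel 4x4 input becomes 4 channels of 2x2 before the non-strided convolution.
image = [[[float(4 * r + c) for c in range(4)] for r in range(4)]]
features = pointwise_conv(space_to_depth(image), [[0.25, 0.25, 0.25, 0.25]])
```

Unlike a stride-2 convolution or 2x2 pooling, which skip or summarize pixels before any learning happens, the rearrangement keeps all 16 input values; the learnable convolution then decides how to combine them.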

Neural IR has advanced through two distinct paths: entity-oriented approaches leveraging knowledge graphs and multi-vector models capturing fine-grained semantics. We introduce QDER, a neural re-ranking model that unifies these approaches by integrating knowledge graph semantics into a multi-vector model. QDER's key innovation lies in its modeling of query-document relationships: rather than computing similarity scores on aggregated embeddings, we maintain individual token and entity representations throughout the ranking process and perform aggregation only at the final scoring stage, an approach we call "late aggregation." We first transform these fine-grained representations through learned attention patterns, then apply carefully chosen mathematical operations for precise matches. Experiments across five standard benchmarks show that QDER achieves significant performance gains, including a 36% improvement in nDCG@20 over the strongest baseline on TREC Robust 2004 and similar improvements on other datasets. QDER particularly excels on difficult queries, achieving an nDCG@20 of 0.70 where traditional approaches fail completely (nDCG@20 = 0.0), setting a foundation for future work in entity-aware retrieval.
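The difference between early and late aggregation can be illustrated with a toy scorer. This sketch does not reproduce QDER's learned attention, entity embeddings, or its specific matching operations; as a stand-in, the late-aggregation score uses a MaxSim-style rule (best match per query unit, then a sum) familiar from multi-vector retrievers. All function names are hypothetical.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def cosine(a: Vector, b: Vector) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def mean_pool(vectors: List[Vector]) -> List[float]:
    return [sum(col) / len(vectors) for col in zip(*vectors)]


def early_aggregation_score(query: List[Vector], doc: List[Vector]) -> float:
    """Pool each side into a single vector first, then compare (fine detail is lost)."""
    return cosine(mean_pool(query), mean_pool(doc))


def late_aggregation_score(query: List[Vector], doc: List[Vector]) -> float:
    """Compare every query unit with every document unit; aggregate only at the end."""
    return sum(max(cosine(q, d) for d in doc) for q in query)


query = [[1.0, 0.0], [0.0, 1.0]]
doc_exact = [[1.0, 0.0], [0.0, 1.0]]   # contains both query units exactly
doc_blurred = [[1.0, 1.0], [1.0, 1.0]]  # same mean direction, no exact matches
```

Both documents pool to the same direction, so the early-aggregation scores tie (about 1.0 each), while the late-aggregation scores separate them (2.0 versus roughly 1.41). That is the kind of precise-match signal that keeping individual representations until the final stage is meant to preserve.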

15 Oct 2025

The synthesis, characterization, and properties of fully dense (Cr,Mo,Ta,V,W)C high-entropy carbide ceramics were studied. The ceramics were synthesized from metal oxide and carbon powders by carbothermal reduction and then densified by spark plasma sintering at various temperatures. Increasing the densification temperature resulted in grain growth and an increase in the lattice parameter. Thermal diffusivity increased linearly with testing temperature, giving thermal conductivity values from approximately 7 W·m−1·K−1 at room temperature to 12 W·m−1·K−1 at 200 °C. Measured heat capacity values matched theoretical estimates made using the Neumann-Kopp rule. Room-temperature electrical resistivity decreased from 137 to 120 μΩ·cm as the excess carbon content decreased from 5.4 to 0.1 vol%, suggesting that the electronic contribution to thermal conductivity increases as excess carbon decreases. All specimens exhibited a Vickers hardness of approximately 29 GPa under a 0.49 N load. These results underscore the tunability of this high-entropy carbide system.
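The link between resistivity and the electronic part of thermal conductivity can be checked with the Wiedemann-Franz law, k_e = L·T/ρ. This is a rough sketch under stated assumptions, not the authors' analysis: it uses the free-electron (Sommerfeld) Lorenz number, 2.44e-8 W·Ω·K−2, and takes room temperature as 298 K. The helper `thermal_conductivity` shows the standard relation k = α·ρ·c_p used to turn measured diffusivity into conductivity. Both function names are hypothetical.

```python
LORENZ_SOMMERFELD = 2.44e-8  # W·Ω·K^-2, free-electron value of the Lorenz number (assumed)


def electronic_thermal_conductivity(resistivity_uohm_cm: float, temperature_k: float,
                                    lorenz: float = LORENZ_SOMMERFELD) -> float:
    """Wiedemann-Franz estimate k_e = L * T / rho, returned in W·m^-1·K^-1."""
    rho_ohm_m = resistivity_uohm_cm * 1e-8  # 1 μΩ·cm = 1e-8 Ω·m
    return lorenz * temperature_k / rho_ohm_m


def thermal_conductivity(diffusivity_m2_s: float, density_kg_m3: float,
                         heat_capacity_j_kg_k: float) -> float:
    """k = alpha * rho * c_p: conductivity from diffusivity, density, and heat capacity."""
    return diffusivity_m2_s * density_kg_m3 * heat_capacity_j_kg_k


for rho in (137.0, 120.0):  # room-temperature resistivities quoted in the abstract
    print(f"{rho} μΩ·cm -> k_e ≈ {electronic_thermal_conductivity(rho, 298.0):.1f} W/(m·K)")
```

With these assumptions the estimate rises from roughly 5.3 to 6.1 W·m−1·K−1 as resistivity drops, a sizable share of the approximately 7 W·m−1·K−1 total reported at room temperature. The Lorenz number of a real carbide can deviate from the free-electron value, so treat this only as an order-of-magnitude consistency check.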

Large Language Models (LLMs) have revolutionized content creation across digital platforms, offering unprecedented capabilities in natural language generation and understanding. These models enable beneficial applications such as content generation, question answering (Q&A), programming, and code reasoning. Meanwhile, they also pose serious risks by inadvertently or intentionally producing toxic, offensive, or biased content. This dual role of LLMs, both as powerful tools for solving real-world problems and as potential sources of harmful language, presents a pressing sociotechnical challenge. In this survey, we systematically review recent studies spanning unintentional toxicity, adversarial jailbreaking attacks, and content moderation techniques. We propose a unified taxonomy of LLM-related harms and defenses, analyze emerging multimodal and LLM-assisted jailbreak strategies, and assess mitigation efforts, including reinforcement learning from human feedback (RLHF), prompt engineering, and safety alignment. Our synthesis highlights the evolving landscape of LLM safety, identifies limitations in current evaluation methodologies, and outlines future research directions to guide the development of robust and ethically aligned language technologies.

This paper presents a novel hybrid tokenization strategy that enhances the performance of DNA Language Models (DLMs) by combining 6-mer tokenization with Byte Pair Encoding (BPE-600). Traditional k-mer tokenization is effective at capturing local DNA sequence structures but often faces challenges, including uneven token distribution and a limited understanding of global sequence context. To address these limitations, we propose merging unique 6-mer tokens with optimally selected BPE tokens generated through 600 BPE cycles. This hybrid approach yields a balanced, context-aware vocabulary, enabling the model to capture both short and long patterns within DNA sequences simultaneously. A foundational DLM trained on this hybrid vocabulary was evaluated using next-k-mer prediction as a fine-tuning task and showed significantly improved performance. The model achieved prediction accuracies of 10.78% for 3-mers, 10.1% for 4-mers, and 4.12% for 5-mers, outperforming state-of-the-art models such as NT, DNABERT2, and GROVER. These results highlight the ability of the hybrid tokenization strategy to preserve both local sequence structure and global contextual information in DNA modeling. This work underscores the importance of advanced tokenization methods in genomic language modeling and lays a robust foundation for future applications in downstream DNA sequence analysis and biological research.
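The hybrid vocabulary can be sketched as the union of a fixed k-mer set and the tokens produced by a plain BPE run over nucleotides. Two assumptions are made here and labeled as such: "unique 6-mer tokens" is taken to mean all 4^6 = 4096 possible 6-mers, and every BPE token is kept, because the paper's criterion for "optimally selected" BPE tokens is not given in the abstract. The function names are hypothetical.

```python
from collections import Counter
from itertools import product
from typing import List, Set


def all_kmers(k: int, alphabet: str = "ACGT") -> Set[str]:
    return {"".join(p) for p in product(alphabet, repeat=k)}


def train_bpe(sequences: List[str], num_merges: int) -> Set[str]:
    """Plain byte-pair encoding over nucleotides: repeatedly merge the most frequent pair."""
    corpus = [list(seq) for seq in sequences]
    vocab = {ch for seq in sequences for ch in seq}
    for _ in range(num_merges):
        pairs = Counter()
        for tokens in corpus:
            pairs.update(zip(tokens, tokens[1:]))
        if not pairs:  # every sequence is already a single token
            break
        (left, right), _ = pairs.most_common(1)[0]
        merged = left + right
        vocab.add(merged)
        # Replace non-overlapping occurrences of the chosen pair, scanning left to right.
        new_corpus = []
        for tokens in corpus:
            out, i = [], 0
            while i < len(tokens):
                if i + 1 < len(tokens) and tokens[i] == left and tokens[i + 1] == right:
                    out.append(merged)
                    i += 2
                else:
                    out.append(tokens[i])
                    i += 1
            new_corpus.append(out)
        corpus = new_corpus
    return vocab


def hybrid_vocabulary(sequences: List[str], k: int = 6, num_merges: int = 600) -> Set[str]:
    """Union of fixed-length k-mers (local structure) and BPE tokens (variable length)."""
    return all_kmers(k) | train_bpe(sequences, num_merges)
```

The k-mer half guarantees uniform coverage of short motifs, while the BPE half adds variable-length tokens for frequent longer patterns. A realistic run would train BPE on a genome-scale corpus rather than a handful of strings.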

Researchers at Missouri University of Science and Technology developed REGENT, a neural re-ranker that integrates token-level lexical signals and query-specific entity representations via a novel relevance-guided attention mechanism. This approach significantly outperforms existing baselines, achieving a 108% relative MAP improvement over BM25 on TREC Robust04 by enabling deeper semantic reasoning for complex queries and long documents.

University of Notre DameGuangdong University of Technology

University of Notre DameGuangdong University of Technology HKUSTGuangdong Polytechnic Normal UniversityMissouri University of Science and TechnologyGuangdong Academy of Medical SciencesGuangdong Cardiovascular InstituteGuangdong Provincial Key Laboratory of South China Structural Heart DiseaseGuangdong Provincial People

’s HospitalUniversity of Engineering and Technology, MardanShenzhen Children

’s Hospital

HKUSTGuangdong Polytechnic Normal UniversityMissouri University of Science and TechnologyGuangdong Academy of Medical SciencesGuangdong Cardiovascular InstituteGuangdong Provincial Key Laboratory of South China Structural Heart DiseaseGuangdong Provincial People

’s HospitalUniversity of Engineering and Technology, MardanShenzhen Children

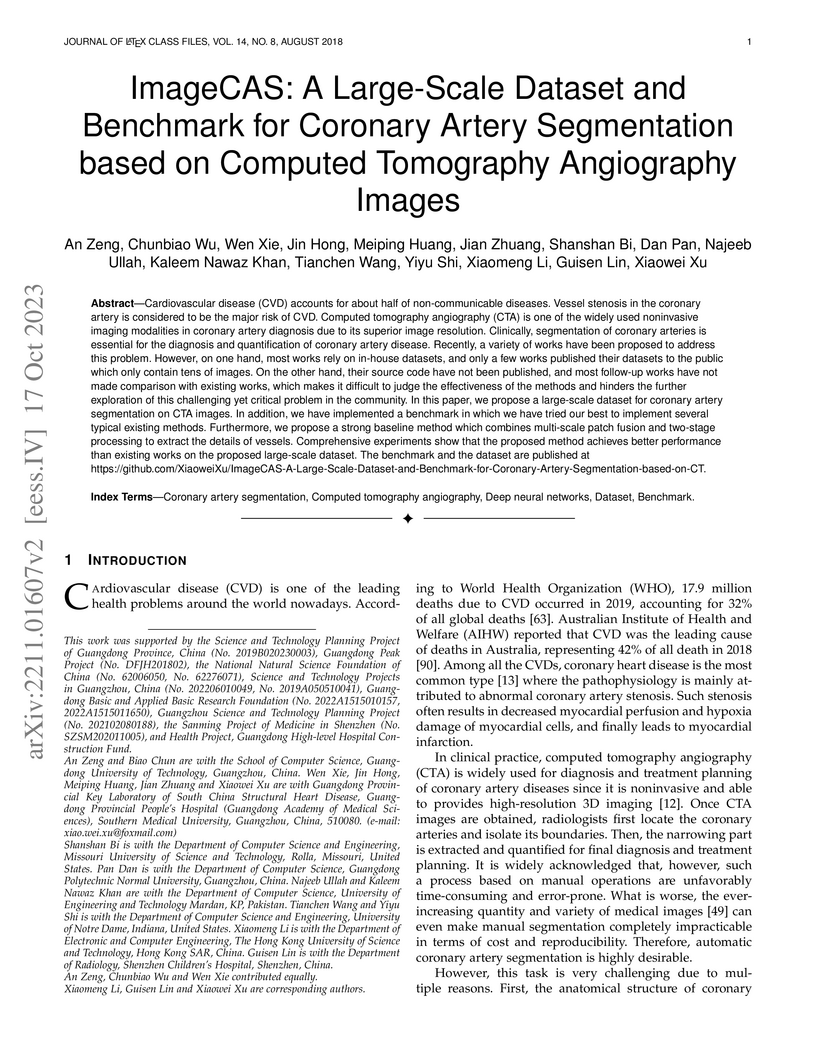

’s HospitalCardiovascular disease (CVD) accounts for about half of non-communicable diseases. Vessel stenosis in the coronary artery is considered to be the major risk of CVD. Computed tomography angiography (CTA) is one of the widely used noninvasive imaging modalities in coronary artery diagnosis due to its superior image resolution. Clinically, segmentation of coronary arteries is essential for the diagnosis and quantification of coronary artery disease. Recently, a variety of works have been proposed to address this problem. However, on one hand, most works rely on in-house datasets, and only a few works published their datasets to the public which only contain tens of images. On the other hand, their source code have not been published, and most follow-up works have not made comparison with existing works, which makes it difficult to judge the effectiveness of the methods and hinders the further exploration of this challenging yet critical problem in the community. In this paper, we propose a large-scale dataset for coronary artery segmentation on CTA images. In addition, we have implemented a benchmark in which we have tried our best to implement several typical existing methods. Furthermore, we propose a strong baseline method which combines multi-scale patch fusion and two-stage processing to extract the details of vessels. Comprehensive experiments show that the proposed method achieves better performance than existing works on the proposed large-scale dataset. The benchmark and the dataset are published at this https URL.
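The abstract names multi-scale patch fusion without describing it. One common way to fuse patch predictions, shown here as an assumption rather than the authors' method, is sliding-window inference: run the model on overlapping patches at several patch sizes and average the per-pixel probabilities wherever patches overlap. The sketch is 2D for brevity (CTA volumes are 3D), the two-stage processing is not shown, and `predict` is a hypothetical stand-in for the segmentation network.

```python
from typing import Callable, List, Sequence

Image = List[List[float]]


def window_starts(size: int, patch: int, stride: int) -> List[int]:
    starts = list(range(0, max(size - patch, 0) + 1, stride))
    if starts[-1] + patch < size:  # make sure the last window reaches the border
        starts.append(size - patch)
    return starts


def fused_prediction(image: Image, predict: Callable[[Image], Image],
                     patch_sizes: Sequence[int] = (4, 8), overlap: float = 0.5) -> Image:
    """Average per-pixel probabilities over overlapping patches at several patch sizes."""
    h, w = len(image), len(image[0])
    total = [[0.0] * w for _ in range(h)]
    count = [[0] * w for _ in range(h)]
    for p in patch_sizes:
        p_h, p_w = min(p, h), min(p, w)
        stride_h = max(1, int(p_h * (1 - overlap)))
        stride_w = max(1, int(p_w * (1 - overlap)))
        for top in window_starts(h, p_h, stride_h):
            for left in window_starts(w, p_w, stride_w):
                patch = [row[left:left + p_w] for row in image[top:top + p_h]]
                probs = predict(patch)  # hypothetical model: patch -> per-pixel probabilities
                for i in range(p_h):
                    for j in range(p_w):
                        total[top + i][left + j] += probs[i][j]
                        count[top + i][left + j] += 1
    return [[total[i][j] / count[i][j] for j in range(w)] for i in range(h)]
```

Small patches keep thin distal vessels at full resolution, while larger patches give the model more context; averaging overlaps also smooths seams at patch borders. With a purely per-pixel `predict` (for example, a threshold), the fused output equals the prediction on the whole image, which is a handy sanity check.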

Infrared imaging has emerged as a robust solution for urban object detection under low-light and adverse weather conditions, offering significant advantages over traditional visible-light cameras. However, challenges such as class imbalance, thermal noise, and computational constraints can significantly hinder model performance in practical settings. To address these issues, we evaluate multiple YOLO variants on the FLIR ADAS V2 dataset, ultimately selecting YOLOv8 as our baseline due to its balanced accuracy and efficiency. Building on this foundation, we present MS-YOLO (MobileNetV4 and SlideLoss based on YOLO), which replaces YOLOv8's CSPDarknet backbone with the more efficient MobileNetV4, reducing computational overhead by 1.5% while sustaining high accuracy. In addition, we introduce SlideLoss, a novel loss function that dynamically emphasizes under-represented and occluded samples, boosting precision without sacrificing recall. Experiments on the FLIR ADAS V2 benchmark show that MS-YOLO attains competitive mAP and superior precision while operating at only 6.7 GFLOPs. These results demonstrate that MS-YOLO effectively addresses the dual challenge of maintaining high detection quality while minimizing computational cost, making it well-suited for real-time edge deployment in urban environments.
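The abstract does not give the SlideLoss formula. As an assumption, the sketch below follows the slide weighting function published with YOLO-FaceV2. In that function, a sample's weight depends on its IoU relative to a threshold μ, usually the mean IoU over all boxes, so hard samples near the boundary get up-weighted. MS-YOLO's actual loss may differ. The weighted binary cross-entropy wrapper and both function names are illustrative.

```python
import math


def slide_weight(iou: float, mu: float) -> float:
    """Slide weighting (assumed form): boost samples whose IoU sits near the threshold mu."""
    if iou <= mu - 0.1:    # clear negatives keep weight 1
        return 1.0
    if iou < mu:           # just below the threshold: hard, ambiguous samples
        return math.exp(1.0 - mu)
    return math.exp(1.0 - iou)  # positives: weight decays as IoU grows


def slide_weighted_bce(p: float, label: float, iou: float, mu: float,
                       eps: float = 1e-7) -> float:
    """Binary cross-entropy for one prediction, scaled by the slide weight."""
    p = min(max(p, eps), 1.0 - eps)
    bce = -(label * math.log(p) + (1.0 - label) * math.log(1.0 - p))
    return slide_weight(iou, mu) * bce
```

With μ = 0.5, a box at IoU 0.3 keeps weight 1. A box at IoU 0.45 gets e^0.5 ≈ 1.65, and a well-localized box at IoU 0.8 gets e^0.2 ≈ 1.22. Compared with a fixed weight, this shifts the loss toward ambiguous, often occluded, samples.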

Researchers from the University of Alabama, University of Oklahoma, and Missouri University of Science and Technology introduce a four-stage, source-tracked pipeline using large language models to reliably extract 47 distinct material features across composition, processing, microstructure, and properties from scientific literature. The system achieves an F1-score of 0.959, with zero false positive materials and a 3.3% material miss rate, demonstrating a robust method for curating high-quality experimental materials datasets.
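The reported figures (an F1-score, zero false positives, and a miss rate) are tied together by the standard definitions: precision = TP/(TP+FP), recall = TP/(TP+FN), F1 as their harmonic mean, and miss rate = FN/(TP+FN). The sketch assumes these definitions. The summary does not say which units (individual features or whole materials) each figure counts, and the counts in the test are hypothetical.

```python
def extraction_metrics(tp: int, fp: int, fn: int) -> dict:
    """Precision, recall, F1, and miss rate from true/false positive and false negative counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    miss_rate = fn / (tp + fn) if tp + fn else 0.0  # equals 1 - recall
    return {"precision": precision, "recall": recall, "f1": f1, "miss_rate": miss_rate}
```

Under these definitions, zero false positives means precision is exactly 1, and a 3.3% miss rate corresponds to a recall of 96.7% at whatever level that rate is measured.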

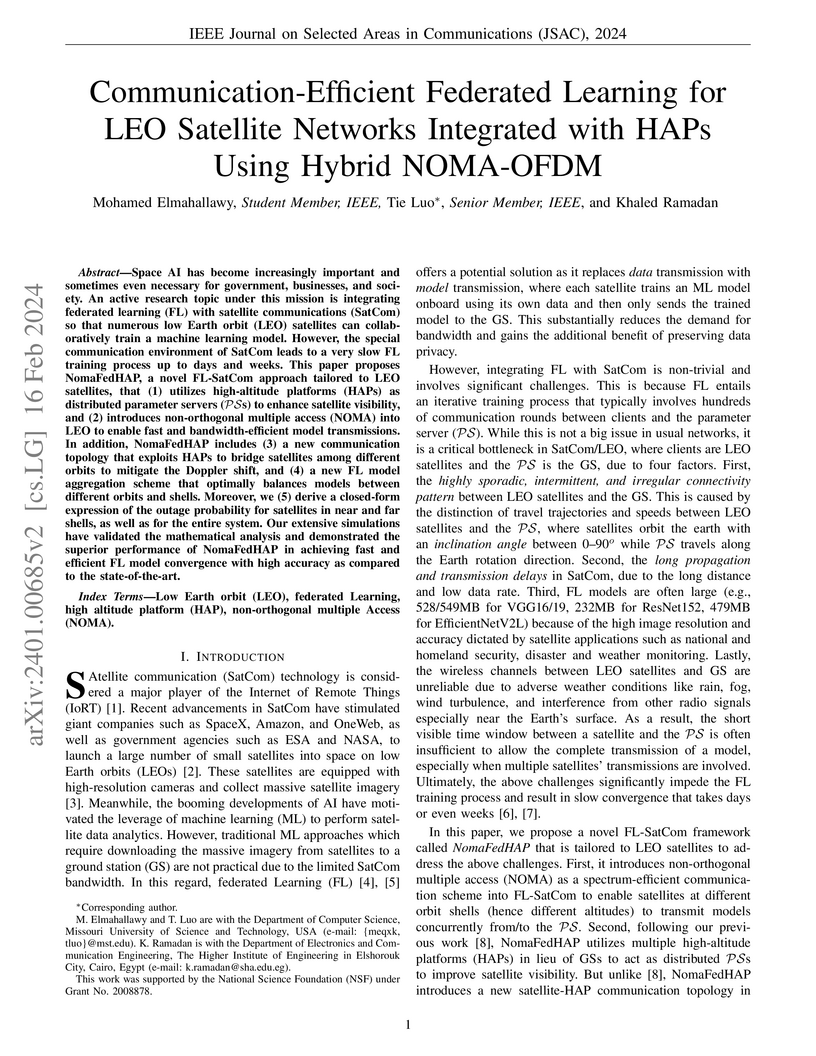

Space AI has become increasingly important and sometimes even necessary for government, businesses, and society. An active research topic under this mission is integrating federated learning (FL) with satellite communications (SatCom) so that numerous low Earth orbit (LEO) satellites can collaboratively train a machine learning model. However, the special communication environment of SatCom makes FL training very slow, taking up to days or weeks. This paper proposes NomaFedHAP, a novel FL-SatCom approach tailored to LEO satellites, that (1) utilizes high-altitude platforms (HAPs) as distributed parameter servers (PS) to enhance satellite visibility, and (2) introduces non-orthogonal multiple access (NOMA) into LEO to enable fast and bandwidth-efficient model transmissions. In addition, NomaFedHAP includes (3) a new communication topology that exploits HAPs to bridge satellites among different orbits to mitigate the Doppler shift, and (4) a new FL model aggregation scheme that optimally balances models between different orbits and shells. Moreover, we (5) derive a closed-form expression of the outage probability for satellites in near and far shells, as well as for the entire system. Our extensive simulations have validated the mathematical analysis and demonstrated the superior performance of NomaFedHAP in achieving fast and efficient FL model convergence with high accuracy as compared to the state-of-the-art.
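Why NOMA helps bandwidth efficiency can be seen from the textbook two-user rates. The abstract does not spell out NomaFedHAP's signal model, so this sketch assumes an uplink in which two satellites transmit model updates to one HAP at the same time and frequency, and the HAP uses successive interference cancellation (SIC). The SNR values in the test are hypothetical; an outage event in this setting is a rate falling below a target rate.

```python
import math


def uplink_noma_rates(snr_strong: float, snr_weak: float) -> tuple:
    """Two-user uplink NOMA with SIC at the receiver (SNRs linear, not dB).

    Returns achievable rates in bit/s/Hz for the strong and weak transmitters.
    """
    # Decode the stronger signal first, treating the weaker one as interference.
    rate_strong = math.log2(1.0 + snr_strong / (snr_weak + 1.0))
    # After subtracting the decoded strong signal, the weak one sees only noise.
    rate_weak = math.log2(1.0 + snr_weak)
    return rate_strong, rate_weak


def oma_rates(snr_a: float, snr_b: float) -> tuple:
    """Orthogonal baseline: each transmitter gets half of the time-frequency resource."""
    return 0.5 * math.log2(1.0 + snr_a), 0.5 * math.log2(1.0 + snr_b)
```

The two NOMA rates sum to log2(1 + SNR_strong + SNR_weak), the full multiple-access sum capacity, whereas splitting the resource orthogonally gives less. For SNRs of 100 and 10, the NOMA sum rate is about 6.79 bit/s/Hz versus about 5.06 for the orthogonal split.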

24 Oct 2025

The emergence of new, off-path smart network cards (SmartNICs), known generally as Data Processing Units (DPUs), has opened a wide range of research opportunities. Of particular interest is the use of these and related devices in tandem with their host's CPU, creating a heterogeneous computing system with new properties and strengths to be explored, capable of accelerating a wide variety of workloads. This survey begins by providing the motivation and relevant background information for this new field, including its origins, a few current hardware offerings, major programming languages and frameworks for using them, and associated challenges. We then review and categorize a number of recent works in the field, covering a wide variety of studies, benchmarks, and application areas, such as data center infrastructure, commercial uses, and AI and ML acceleration. We conclude with a few observations.

12 Feb 2024

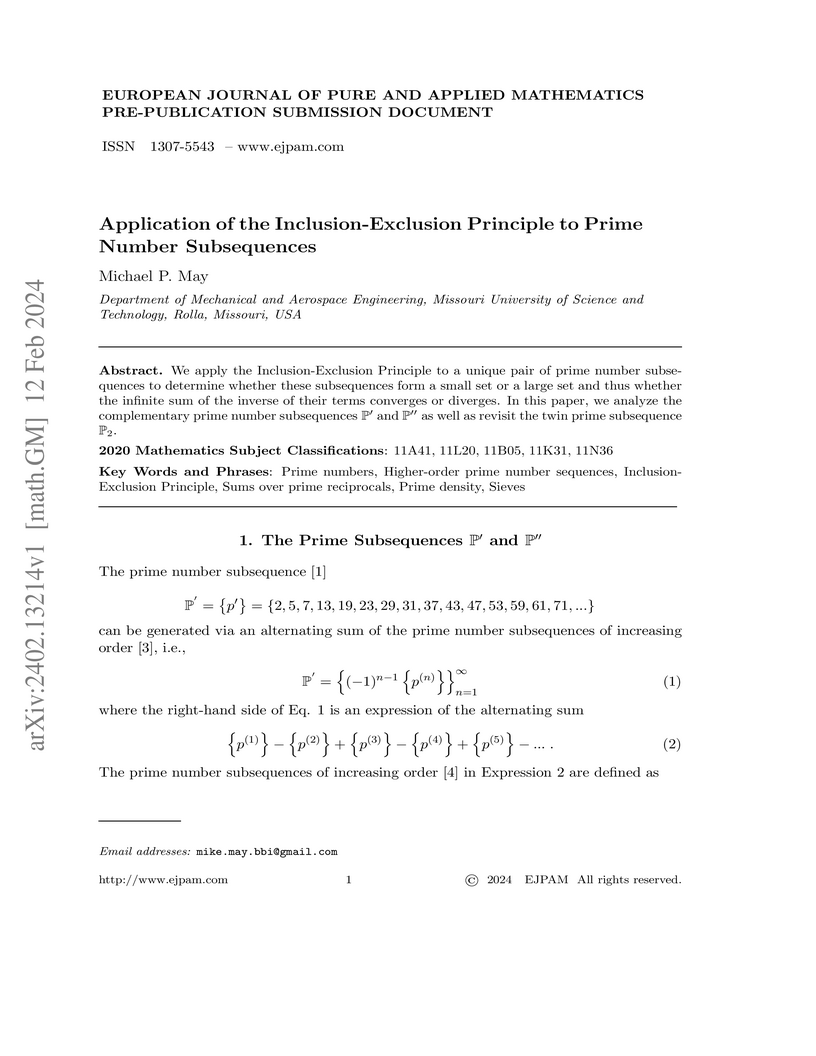

Michael P. May applied an adapted Inclusion-Exclusion Principle to characterize two specific prime subsequences, P' and P'', finding that P' has a divergent reciprocal sum while P'' has a convergent reciprocal sum, indicating P'' is sparser than twin primes.

This paper presents Deep ARTMAP, a novel extension of the ARTMAP architecture that generalizes the self-consistent modular ART (SMART) architecture to enable hierarchical learning (supervised and unsupervised) across arbitrary transformations of data. The Deep ARTMAP framework operates as a divisive clustering mechanism, supporting an arbitrary number of modules with customizable granularity within each module. Inter-ART modules regulate the clustering at each layer, permitting unsupervised learning while enforcing a one-to-many mapping from clusters in one layer to the next. While Deep ARTMAP reduces to both ARTMAP and SMART in particular configurations, it offers significantly enhanced flexibility, accommodating a broader range of data transformations and learning modalities.
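ARTMAP and SMART are built from ART modules. As an assumption, the sketch below uses Fuzzy ART (Carpenter, Grossberg, and Rosen, 1991) as the base module: complement coding, a choice function, a vigilance (match) test, and fast learning. It then builds a simplified two-layer divisive hierarchy in which each coarse cluster gets its own finer module, so every fine cluster has exactly one parent. This only illustrates the one-to-many idea; it is not Deep ARTMAP's inter-ART mechanism, and `divisive_two_layer` is a hypothetical helper.

```python
from typing import Dict, List, Tuple


def complement_code(x: List[float]) -> List[float]:
    return x + [1.0 - v for v in x]


class FuzzyART:
    """Minimal Fuzzy ART module; inputs must lie in [0, 1]."""

    def __init__(self, vigilance: float, alpha: float = 1e-3, beta: float = 1.0):
        self.rho, self.alpha, self.beta = vigilance, alpha, beta
        self.weights: List[List[float]] = []

    def learn(self, x: List[float]) -> int:
        i = complement_code(x)
        norm_i = sum(i)  # equals len(x) after complement coding
        # Try categories in order of the choice function T_j = |I ^ w_j| / (alpha + |w_j|).
        order = sorted(
            range(len(self.weights)),
            key=lambda j: -sum(map(min, i, self.weights[j])) / (self.alpha + sum(self.weights[j])),
        )
        for j in order:
            fuzzy_and = [min(a, b) for a, b in zip(i, self.weights[j])]
            if sum(fuzzy_and) / norm_i >= self.rho:  # vigilance test passed: resonance
                self.weights[j] = [self.beta * f + (1 - self.beta) * w
                                   for f, w in zip(fuzzy_and, self.weights[j])]
                return j
        self.weights.append(i)  # no category matched: commit a new one
        return len(self.weights) - 1


def divisive_two_layer(data: List[List[float]], rho_coarse: float,
                       rho_fine: float) -> List[Tuple[int, int]]:
    """Label each sample (coarse cluster, fine cluster within that parent)."""
    coarse = FuzzyART(rho_coarse)
    children: Dict[int, FuzzyART] = {}
    labels = []
    for x in data:
        parent = coarse.learn(x)
        module = children.setdefault(parent, FuzzyART(rho_fine))
        labels.append((parent, module.learn(x)))
    return labels
```

Raising the vigilance in the lower layer makes its clusters finer, and because each child module only sees samples from one parent, the mapping from coarse to fine clusters is one-to-many by construction.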

National Astronomical Observatory of Japan; University of Oxford; The University of Texas at Austin; The Pennsylvania State University; Korea Institute for Advanced Study; SOKENDAI (The Graduate University for Advanced Studies); Missouri University of Science and Technology; Instituto de Física de Cantabria (IFCA); University of the Western Cape; Institute for Cosmic Ray Research, The University of Tokyo; McDonald Observatory; Leibniz-Institut für Astrophysik Potsdam (AIP); Kavli Institute for the Physics and Mathematics of the Universe (WPI), The University of Tokyo; CSIC-Univ. de Cantabria; Ludwig-Maximilians-Universität München; Universität Heidelberg; E. A. Milne Centre for Astrophysics, University of Hull; Centre of Excellence for Data Science, Artificial Intelligence & Modelling (DAIM), University of Hull; MIRAI Technology Institute, Shiseido Co., Ltd.; Max-Planck-Institut für Astrophysik

Researchers from the HETDEX collaboration present the first direct detection of the Lyα intensity mapping cross-power spectrum, correlating Lyα-emitting galaxies with diffuse Lyα emission from undetected sources at redshifts 1.9 to 3.5. This measurement constrains the cosmic Lyα luminosity density and indicates that star-forming galaxies are the dominant source of the Lyα background on large scales.
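A cross-power spectrum measures how two fields co-vary at each spatial scale: P_ab(k) = Re[A(k)·B*(k)]/N for the Fourier transforms A and B of the two mean-subtracted fields. The sketch below is a 1D toy estimator, assumed for illustration only. A real Lyα intensity-mapping analysis works in 3D, bins in |k|, and must handle survey masks, noise, and the galaxy selection, none of which is shown. The function names are hypothetical.

```python
import cmath
from typing import Dict, List


def dft(x: List[float]) -> List[complex]:
    """Naive discrete Fourier transform (fine for short toy fields)."""
    n = len(x)
    return [sum(x[t] * cmath.exp(-2j * cmath.pi * k * t / n) for t in range(n))
            for k in range(n)]


def cross_power_spectrum(field_a: List[float], field_b: List[float]) -> Dict[int, float]:
    """Toy estimator P_ab(k) = Re[A(k) B*(k)] / N for wavenumber indices 0..N/2."""
    if len(field_a) != len(field_b):
        raise ValueError("fields must share a grid")
    n = len(field_a)
    mean_a, mean_b = sum(field_a) / n, sum(field_b) / n
    # Use fluctuations about the mean, analogous to overdensity fields.
    fa = dft([v - mean_a for v in field_a])
    fb = dft([v - mean_b for v in field_b])
    return {k: (fa[k] * fb[k].conjugate()).real / n for k in range(n // 2 + 1)}
```

Because only the shared fluctuations survive the product A·B*, noise that is uncorrelated between the two tracers averages toward zero in the cross-spectrum. This is the usual motivation for cross-correlating detected galaxies with a diffuse intensity map instead of relying on the intensity map's auto-spectrum alone.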

19 Dec 2024

This article provides an overview of the current state of machine learning in gravitational-wave research with interferometric detectors. Such applications are often still in their early days, but have reached sufficient popularity to warrant an assessment of their impact across various domains, including detector studies, noise and signal simulations, and the detection and interpretation of astrophysical signals.

In detector studies, machine learning could help optimize instruments like LIGO, Virgo, KAGRA, and future detectors. Algorithms could predict environmental disturbances and help mitigate them in real time, ensuring that detectors operate at peak performance. Furthermore, machine-learning tools for characterizing and cleaning data after it is taken have already become crucial for achieving the best sensitivity of the LIGO-Virgo-KAGRA network.

In data analysis, machine learning has already been applied as an alternative to traditional methods for signal detection, source localization, noise reduction, and parameter estimation. For some signal types, it can already yield improved efficiency and robustness, though in many other areas traditional methods remain dominant.

As the field evolves, the role of machine learning in advancing gravitational-wave research is expected to become increasingly prominent. This report highlights recent advancements, challenges, and perspectives for the current detector generation, with a brief outlook to the next generation of gravitational-wave detectors.

Adversarial examples (AE) with good transferability enable practical black-box attacks on diverse target models, where insider knowledge about the target models is not required. Previous methods often generate AE with no or very limited transferability; that is, they easily overfit to the particular architecture and feature representation of the source, white-box model, and the generated AE barely work for target, black-box models. In this paper, we propose a novel approach to enhance AE transferability using Gradient Norm Penalty (GNP). It drives the loss function optimization procedure to converge to a flat region of local optima in the loss landscape. By attacking 11 state-of-the-art (SOTA) deep learning models and 6 advanced defense methods, we empirically show that GNP is very effective in generating AE with high transferability. We also demonstrate that it is very flexible in that it can be easily integrated with other gradient-based methods for stronger transfer-based attacks.
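One way to realize a gradient norm penalty, assumed here rather than taken from the paper, is to ascend L(x) − λ·‖∇L(x)‖. The second-order term can be approximated by a finite difference along the normalized gradient, which gives the update direction (1 + β)·g1 − β·g2 with β = λ/r. Here g1 is the gradient at x and g2 the gradient at x + r·g1/‖g1‖. The sketch plugs that direction into an I-FGSM-style L∞ attack on a toy linear logistic "source model". The toy model, `r`, `beta`, and all function names are illustrative placeholders, and a linear model cannot show the transferability benefit, only the mechanics.

```python
import math
from typing import Callable, List

Vec = List[float]


def norm(v: Vec) -> float:
    return math.sqrt(sum(a * a for a in v))


def gnp_gradient(grad_fn: Callable[[Vec], Vec], x: Vec, r: float, beta: float) -> Vec:
    """Finite-difference gradient of L(x) - lambda*||grad L(x)||: (1 + beta)*g1 - beta*g2."""
    g1 = grad_fn(x)
    n1 = norm(g1) or 1.0
    g2 = grad_fn([xi + r * gi / n1 for xi, gi in zip(x, g1)])  # gradient at a nearby point
    return [(1 + beta) * a - beta * b for a, b in zip(g1, g2)]


def gnp_ifgsm(grad_fn: Callable[[Vec], Vec], x0: Vec, eps: float, steps: int,
              r: float = 0.01, beta: float = 0.8) -> Vec:
    """Iterative sign-gradient L_inf attack using the penalized gradient."""
    alpha = eps / steps
    x = list(x0)
    for _ in range(steps):
        g = gnp_gradient(grad_fn, x, r, beta)
        x = [xi + alpha * (1 if gi > 0 else -1 if gi < 0 else 0) for xi, gi in zip(x, g)]
        # Project back into the eps-ball around the clean input.
        x = [min(max(xi, x0i - eps), x0i + eps) for xi, x0i in zip(x, x0)]
    return x


def logistic_loss_grad(w: Vec, y: int) -> Callable[[Vec], Vec]:
    """Input gradient of log(1 + exp(-y * w.x)) for a toy linear model."""
    def grad(x: Vec) -> Vec:
        margin = y * sum(wi * xi for wi, xi in zip(w, x))
        s = 1.0 / (1.0 + math.exp(margin))  # sigma(-margin)
        return [-y * s * wi for wi in w]
    return grad
```

Because g2 is taken slightly uphill, subtracting it discounts directions where the gradient keeps growing (sharp regions). That is how the penalty steers the attack toward flatter optima, which the abstract links to better transfer.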

30 Sep 2025

We consider statistical inference for network-linked regression problems, where covariates may include network summary statistics computed for each node. In settings involving network data, it is often natural to posit that latent variables govern connection probabilities in the graph. Since the presence of these latent features makes classical regression assumptions even less tenable, we propose an assumption-lean framework for linear regression with jointly exchangeable regression arrays. We establish an analog of the Aldous-Hoover representation for such arrays, which may be of independent interest. Moreover, we consider two different projection parameters as potential targets and establish conditions under which asymptotic normality and bootstrap consistency hold when commonly used network statistics, including local subgraph frequencies and spectral embeddings, are used as covariates. In the case of linear regression with local count statistics, we show that a bias-corrected estimator allows one to estimate a more natural inferential target under weaker sparsity conditions than the OLS estimator. Our inferential tools are illustrated using both simulated data and real data related to the academic climate of elementary schools.
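To make "network summary statistics as covariates" concrete, the sketch computes two local subgraph counts per node (degree and the number of triangles through the node) and fits a plain least-squares line on one of them. This is only the naive OLS starting point. It ignores the latent-variable structure, the sparsity conditions, the bias correction, and the bootstrap inference that the paper develops, and all function names are hypothetical.

```python
from itertools import combinations
from typing import Dict, List, Set, Tuple


def adjacency(n: int, edges: List[Tuple[int, int]]) -> List[Set[int]]:
    adj: List[Set[int]] = [set() for _ in range(n)]
    for u, v in edges:
        if u != v:  # ignore self-loops
            adj[u].add(v)
            adj[v].add(u)
    return adj


def node_statistics(adj: List[Set[int]]) -> List[Dict[str, int]]:
    """Local subgraph counts per node: degree (edges) and triangles through the node."""
    stats = []
    for nbrs in adj:
        triangles = sum(1 for u, v in combinations(sorted(nbrs), 2) if v in adj[u])
        stats.append({"degree": len(nbrs), "triangles": triangles})
    return stats


def ols_simple(x: List[float], y: List[float]) -> Tuple[float, float]:
    """Intercept and slope of the least-squares line y ~ a + b*x."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return my - slope * mx, slope
```

For a triangle on nodes 0, 1, 2 plus a pendant edge 2-3, the degrees are [2, 2, 3, 1] and the triangle counts are [1, 1, 1, 0]. In a sparse random graph such counts are noisy functions of each node's latent position, which is why plain OLS on them can be biased and why the paper's bias-corrected estimator matters.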

The application of machine learning to finance has become a familiar approach, especially in stock market forecasting. The stock market is highly volatile, and huge amounts of data are generated globally every minute. Extracting effective intelligence from this data is of critical importance. However, combining numerical stock data with qualitative text data can be challenging. In this work, we accomplish this by providing an unprecedented, publicly available dataset with technical and fundamental data and sentiment that we gathered from news archives, TV news captions, radio transcripts, tweets, daily financial newspapers, etc. The text entries used for sentiment extraction total more than 1.4 million. The dataset consists of daily entries from January 2018 to December 2022 for eight companies representing diverse industrial sectors and for the Dow Jones Industrial Average (DJIA) as a whole. Holistic fundamental and technical data are provided in training-ready form for model learning and deployment. Most importantly, the data generated could be used for incremental online learning with real-time data points retrieved daily, since no stagnant data was utilized. All the data was retrieved from APIs or self-designed robust information retrieval technologies with extremely low latency and zero monetary cost. These adaptable technologies facilitate data extraction for any stock. Moreover, using Spearman's rank correlation over real-time data to link stock returns with sentiment analysis produced noteworthy results for the DJIA and the eight other stocks, achieving accuracy levels surpassing 60%. The dataset is made available at this https URL.
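Spearman's rank correlation, used above to link returns with sentiment, is the Pearson correlation computed on ranks, with tied values sharing the average of the ranks they occupy. The sketch below shows only that computation. It does not reproduce how the paper turns correlations into the reported accuracy figure, and any sentiment or return series passed to it would be the reader's own.

```python
from typing import List, Sequence


def average_ranks(values: Sequence[float]) -> List[float]:
    """1-based ranks; tied values share the mean of the ranks they occupy."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def spearman(x: Sequence[float], y: Sequence[float]) -> float:
    """Spearman's rho: Pearson correlation of the average ranks of x and y."""
    rx, ry = average_ranks(x), average_ranks(y)
    n = len(rx)
    mx, my = sum(rx) / n, sum(ry) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(rx, ry))
    var_x = sum((a - mx) ** 2 for a in rx)
    var_y = sum((b - my) ** 2 for b in ry)
    return cov / (var_x * var_y) ** 0.5
```

Because only ranks matter, rho is 1 for any strictly increasing relationship, linear or not. That robustness to scale and outliers suits noisy sentiment scores paired with daily returns.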
