Ontario Tech University

Researchers at Ontario Tech University introduce a combinatorial test design methodology to construct Large Language Model (LLM) benchmarks resistant to data leakage. Their empirical evaluation of this approach, named HumanEval T, revealed that LLMs consistently performed 4.75% to 13.75% worse on the new variants compared to the original HumanEval, indicating existing benchmarks are susceptible to data contamination.

MR3 is a multilingual, rubric-agnostic reward reasoning model designed to evaluate Large Language Model outputs with explicit reasoning and scalar scores across 72 languages. The model achieved an average accuracy of 84.94% on multilingual pairwise preference benchmarks, surpassing its 9x larger teacher model (GPT-OSS-120B) by +0.87 points, and demonstrated improved reasoning faithfulness, particularly in low-resource languages.

Researchers from Mount Sinai and Stanford present a comprehensive framework for quantifying and managing uncertainty in medical Large Language Models, combining probabilistic modeling, linguistic analysis, and dynamic calibration techniques to enable more reliable and transparent clinical decision support while acknowledging inherent limitations in medical knowledge.

Masoud Makrehchi proposes a framework for understanding AI by analyzing it through the lenses of risk, transformation, and continuity. The work argues for a balanced approach to AI governance that accounts for both its evolutionary patterns and singularity-class tail risks.

Despite the widespread adoption of large language models (LLMs), their remarkable capabilities remain limited to a few high-resource languages. Additionally, many low-resource languages (e.g., African languages) are often evaluated only on basic text classification tasks due to the lack of appropriate or comprehensive benchmarks outside of high-resource languages. In this paper, we introduce IrokoBench, a human-translated benchmark dataset for 17 typologically diverse low-resource African languages covering three tasks: natural language inference (AfriXNLI), mathematical reasoning (AfriMGSM), and multi-choice knowledge-based question answering (AfriMMLU). We use IrokoBench to evaluate zero-shot, few-shot, and translate-test settings (where test sets are translated into English) across 10 open and six proprietary LLMs. Our evaluation reveals a significant performance gap between high-resource languages (such as English and French) and low-resource African languages. We also observe a significant performance gap between open and proprietary models, with the highest-performing open model, Gemma 2 27B, reaching only 63% of the performance of the best-performing proprietary model, GPT-4o. In addition, machine-translating the test set into English before evaluation helped close the gap for larger English-centric models, such as Gemma 2 27B and LLaMa 3.1 70B. These findings suggest that more effort is needed to develop and adapt LLMs for African languages.

Researchers introduced M4-RAG, a comprehensive benchmark for evaluating Retrieval-Augmented Generation (RAG) in multilingual, multicultural, and multimodal contexts, finding that RAG benefits smaller Vision-Language Models but shows diminishing returns or performance degradation for larger models, along with a pervasive English bias in reasoning.

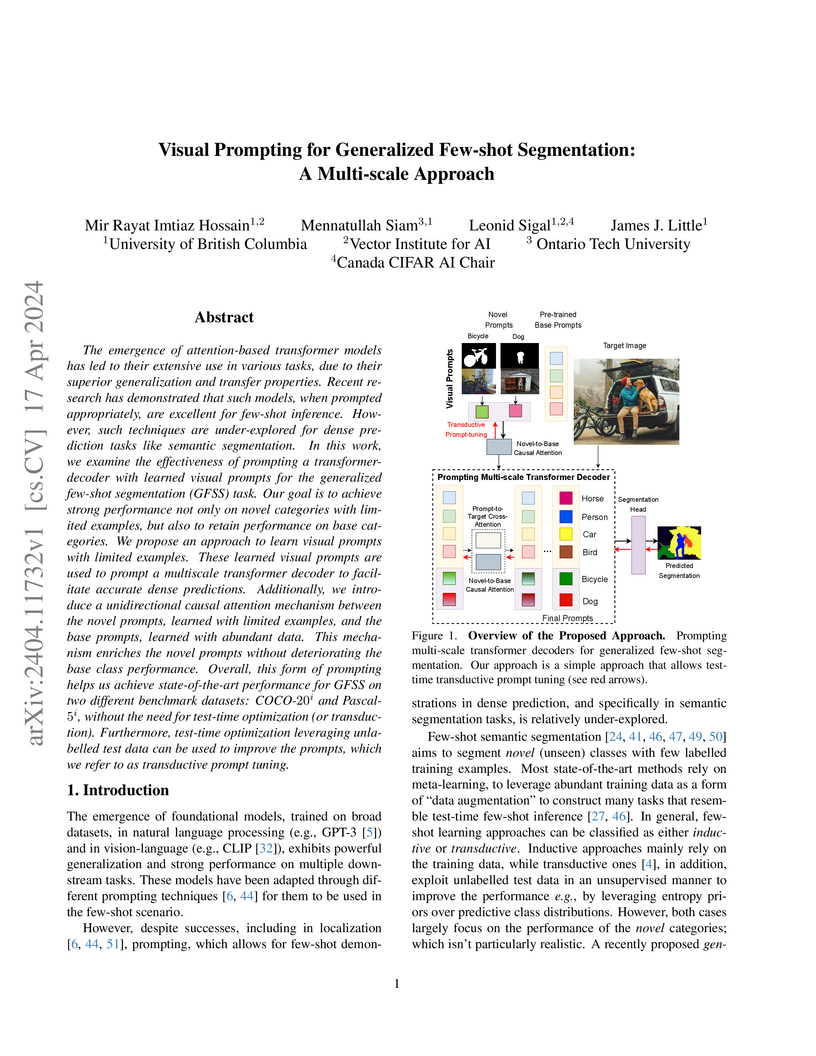

Researchers developed a non-meta-learning framework for Generalized Few-shot Semantic Segmentation (GFSS) that employs visual prompting within a multi-scale transformer decoder. This method achieved new state-of-the-art performance on COCO-20i and PASCAL-5i benchmarks, with its inductive variant outperforming previous transductive methods by up to 2.28% mean mIoU in 5-shot scenarios.

URIEL is a knowledge base offering geographical, phylogenetic, and typological vector representations for 7970 languages. It includes distance measures between these vectors for 4005 languages, which are accessible via the lang2vec tool. Despite being frequently cited, URIEL is limited in terms of linguistic inclusion and overall usability. To tackle these challenges, we introduce URIEL+, an enhanced version of URIEL and lang2vec that addresses these limitations. In addition to expanding typological feature coverage for 2898 languages, URIEL+ improves the user experience with robust, customizable distance calculations to better suit the needs of users. These upgrades also offer competitive performance on downstream tasks and provide distances that better align with linguistic distance studies.
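As a rough illustration of what a distance calculation over sparse typological vectors can look like, the sketch below computes a cosine distance using only the features known for both languages. It does not use lang2vec or the URIEL+ code. The function name `cosine_distance`, the use of `None` for missing values, and the choice of cosine distance are all assumptions made for this example.

```python
import math

def cosine_distance(a, b):
    """Cosine distance over features known for both languages (None = missing)."""
    # Keep only positions where both vectors have a value (hypothetical encoding).
    shared = [(x, y) for x, y in zip(a, b) if x is not None and y is not None]
    if not shared:
        raise ValueError("no features are known for both languages")
    dot = sum(x * y for x, y in shared)
    norm_a = math.sqrt(sum(x * x for x, _ in shared))
    norm_b = math.sqrt(sum(y * y for _, y in shared))
    if norm_a == 0.0 or norm_b == 0.0:
        # Cosine is undefined for an all-zero vector; treat two all-zero
        # vectors as identical and anything else as maximally distant.
        return 0.0 if norm_a == norm_b else 1.0
    return 1.0 - dot / (norm_a * norm_b)

# Toy binary feature vectors for two made-up languages.
lang_a = [1, 0, None, 1]
lang_b = [1, 1, 0, None]
print(cosine_distance(lang_a, lang_b))
```

Restricting the comparison to shared features is only one way to handle sparsity; imputing missing values before computing the distance is another.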

Researchers from Thomson Reuters Labs demonstrated that guiding large language models with prompts explicitly designed around established legal reasoning frameworks, such as TRRAC, substantially improves performance on complex legal textual entailment tasks. This approach set new state-of-the-art accuracies for the COLIEE competition, achieving an 81.48% accuracy on the 2021 dataset.

This study compared large language model (LLM)-based thematic analysis with human-led methods using digital mental health interview data. LLM-based analysis proved significantly more time and cost-efficient while identifying core themes and achieving earlier thematic saturation, though human analysis consistently provided greater depth, contextual nuance, and a broader range of specific themes.

Link prediction is a fundamental problem for graph-structured data (e.g., social networks and drug side-effect networks). Graph neural networks have offered robust solutions for this problem, specifically by learning the representation of the subgraph enclosing the target link (i.e., pair of nodes). However, these solutions do not scale well to large graphs, because extracting and operating on enclosing subgraphs is computationally expensive. This paper presents a scalable link prediction solution, which we call ScaLed, that utilizes sparse enclosing subgraphs to make predictions. To extract sparse enclosing subgraphs, ScaLed takes multiple random walks from a target pair of nodes, then operates on the sampled enclosing subgraph induced by all visited nodes. By leveraging the smaller sampled enclosing subgraph, ScaLed can scale to larger graphs with much less overhead while maintaining high accuracy. ScaLed further provides the flexibility to control the trade-off between computation overhead and accuracy. Through comprehensive experiments, we show that ScaLed can produce accuracy comparable to that reported by existing subgraph representation learning frameworks while being less computationally demanding.
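The sampling step described above can be sketched in a few lines. This is a minimal illustration of random-walk subgraph sampling, not the authors' implementation. The names `build_adjacency` and `sample_enclosing_subgraph`, the parameters `num_walks` and `walk_length`, and the use of integer node ids are assumptions made for this example.

```python
import random
from collections import defaultdict

def build_adjacency(edges):
    """Undirected adjacency sets from a list of (u, v) pairs."""
    adj = defaultdict(set)
    for u, v in edges:
        adj[u].add(v)
        adj[v].add(u)
    return adj

def sample_enclosing_subgraph(adj, u, v, num_walks=5, walk_length=3, seed=0):
    """Run random walks from both target nodes; return visited nodes and induced edges."""
    rng = random.Random(seed)
    visited = {u, v}
    for start in (u, v):
        for _ in range(num_walks):
            node = start
            for _ in range(walk_length):
                neighbors = sorted(adj.get(node, ()))  # sorted for reproducibility
                if not neighbors:
                    break
                node = rng.choice(neighbors)
                visited.add(node)
    # Induced subgraph: every original edge with both endpoints visited.
    sub_edges = {(a, b) for a in visited for b in adj.get(a, ())
                 if b in visited and a < b}
    return visited, sub_edges

# Toy path graph 0-1-2-3-4-5 with target pair (2, 3).
adj = build_adjacency([(0, 1), (1, 2), (2, 3), (3, 4), (4, 5)])
nodes, sub_edges = sample_enclosing_subgraph(adj, 2, 3, num_walks=2, walk_length=2)
print(sorted(nodes), sorted(sub_edges))
```

In this sketch, fewer or shorter walks yield a smaller sampled subgraph, which is one way to picture the trade-off between overhead and accuracy that the abstract mentions.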

The paper asserts that emulating empathy in human-robot interaction is a key component of achieving satisfying, social, trustworthy, and ethical robot interaction with older people. Drawing on comments from older adult study participants, the paper identifies a gap: although participants accepted robot care scenarios, they reported that the social aspect was of poor quality. Current human-robot designs, to a certain extent, neglect to include empathy as a theorized design pathway. Using rhetorical theory, this paper defines the socio-cultural expectations for convincing empathetic relationships. It analyzes and then summarizes how society understands, values, and negotiates empathic interaction between human companions in discursive exchanges, wherein empathy acts as a societal value system. Using two public research collections on robots, one of which is geared specifically to gerontechnology for older people, it substantiates the lack of attention to empathy in public materials produced by robot companies. This paper contends that using an empathetic care vocabulary as a design pathway is a productive underlying foundation for designing humanoid social robots that aim to support older people's goals of aging-in-place. It argues that the integration of affective AI into the sociotechnical assemblages of human-socially assistive robot interaction ought to be scrutinized to ensure it is based on genuine cultural values involving empathetic qualities.

Learning with limited labelled data is a challenging problem in various applications, including remote sensing. Few-shot semantic segmentation is one approach that can encourage deep learning models to learn from few labelled examples for novel classes not seen during the training. The generalized few-shot segmentation setting has an additional challenge which encourages models not only to adapt to the novel classes but also to maintain strong performance on the training base classes. While previous datasets and benchmarks discussed the few-shot segmentation setting in remote sensing, we are the first to propose a generalized few-shot segmentation benchmark for remote sensing. The generalized setting is more realistic and challenging, which necessitates exploring it within the remote sensing context. We release the dataset augmenting OpenEarthMap with additional classes labelled for the generalized few-shot evaluation setting. The dataset is released during the OpenEarthMap land cover mapping generalized few-shot challenge in the L3D-IVU workshop in conjunction with CVPR 2024. In this work, we summarize the dataset and challenge details in addition to providing the benchmark results on the two phases of the challenge for the validation and test sets.

Researchers from Ontario Tech University developed an IoT Agentic Search Engine (IoT-ASE) that uses Large Language Models and Retrieval Augmented Generation to provide real-time, context-aware search for IoT data. This system, built within the SensorsConnect framework and utilizing a unified data model, achieved 92% accuracy in retrieving intent-based services, addressing fragmentation and enabling natural language interaction with diverse IoT ecosystems.

Linguistic feature datasets such as URIEL+ are valuable for modelling cross-lingual relationships, but their high dimensionality and sparsity, especially for low-resource languages, limit the effectiveness of distance metrics. We propose a pipeline to optimize the URIEL+ typological feature space by combining feature selection and imputation, producing compact yet interpretable typological representations. We evaluate these feature subsets on linguistic distance alignment and downstream tasks, demonstrating that reduced-size representations of language typology can yield more informative distance metrics and improve performance in multilingual NLP applications.

Cantonese, although spoken by millions, remains under-resourced due to policy and diglossia. To address this scarcity of evaluation frameworks for Cantonese, we introduce CantoNLU, a benchmark for Cantonese natural language understanding (NLU). This novel benchmark spans seven tasks covering syntax and semantics, including word sense disambiguation, linguistic acceptability judgment, language detection, natural language inference, sentiment analysis, part-of-speech tagging, and dependency parsing. In addition to the benchmark, we provide model baseline performance across a set of models: a Mandarin model without Cantonese training, two Cantonese-adapted models obtained by continual pre-training a Mandarin model on Cantonese text, and a monolingual Cantonese model trained from scratch. Results show that Cantonese-adapted models perform best overall, while monolingual models perform better on syntactic tasks. Mandarin models remain competitive in certain settings, indicating that direct transfer may be sufficient when Cantonese domain data is scarce. We release all datasets, code, and model weights to facilitate future research in Cantonese NLP.

This study presents a comparative analysis of a complex SQL benchmark, TPC-DS, with two existing text-to-SQL benchmarks, BIRD and Spider. Our findings reveal that TPC-DS queries exhibit a significantly higher level of structural complexity than those of the other two benchmarks. This underscores the need for more intricate benchmarks to simulate realistic scenarios effectively. To facilitate this comparison, we devised several measures of structural complexity and applied them across all three benchmarks. The results of this study can guide future research in the development of more sophisticated text-to-SQL benchmarks.
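The abstract does not name its complexity measures, so the sketch below only illustrates the general idea of counting structural features in a query. Every measure here (`joins`, `extra_selects`, `group_by`, `set_ops`, `aggregates`) and the function name `structural_features` are hypothetical choices for this example, not the measures used in the study. Keyword counting with regular expressions is also a crude stand-in for a real SQL parser.

```python
import re

def structural_features(sql):
    """Count a few keyword-based structural features of a SQL query string."""
    # Blank out single-quoted string literals so their contents are not counted.
    text = re.sub(r"'[^']*'", "''", sql).upper()

    def count(pattern):
        return len(re.findall(pattern, text))

    return {
        "joins": count(r"\bJOIN\b"),
        # SELECTs beyond the first: nested queries, CTE bodies, set-operation branches.
        "extra_selects": max(count(r"\bSELECT\b") - 1, 0),
        "group_by": count(r"\bGROUP\s+BY\b"),
        "set_ops": count(r"\b(?:UNION|INTERSECT|EXCEPT)\b"),
        "aggregates": count(r"\b(?:COUNT|SUM|AVG|MIN|MAX)\s*\("),
    }

# Made-up query over made-up tables, used only to exercise the counter.
query = (
    "SELECT c.name, SUM(o.total) FROM customers c "
    "JOIN orders o ON o.cid = c.id "
    "WHERE o.total > (SELECT AVG(total) FROM orders) "
    "GROUP BY c.name"
)
print(structural_features(query))
```

Applying the same counter to queries from several benchmarks and comparing the resulting distributions is one simple way such a comparison could be set up.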

We used 11 distinct large language models (LLMs) to generate SQL queries based on the query descriptions provided by the TPC-DS benchmark. The prompt engineering process incorporated both the query description as outlined in the TPC-DS specification and the database schema of TPC-DS. Our findings indicate that the current state-of-the-art generative AI models fall short in generating accurate decision-making queries. We compared the generated queries with the TPC-DS gold standard queries using a series of fuzzy structure matching techniques based on query features. The results demonstrated that the accuracy of the generated queries is insufficient for practical real-world application.

Machine translation (MT) post-editing and research data collection often rely on inefficient, disconnected workflows. We introduce TranslationCorrect, an integrated framework designed to streamline these tasks. TranslationCorrect combines MT generation using models like NLLB, automated error prediction using models like XCOMET or LLM APIs (providing detailed reasoning), and an intuitive post-editing interface within a single environment. It is built with human-computer interaction (HCI) principles in mind to minimize cognitive load, as confirmed by a user study. For translators, it enables efficient error correction and batch translation. For researchers, TranslationCorrect exports high-quality span-based annotations in the Error Span Annotation (ESA) format, using an error taxonomy inspired by Multidimensional Quality Metrics (MQM). These outputs are compatible with state-of-the-art error detection models and suitable for training MT or post-editing systems. Our user study confirms that TranslationCorrect significantly improves translation efficiency and user satisfaction over traditional annotation methods.

High-quality datasets are fundamental to training and evaluating machine learning models, yet their creation, especially with accurate human annotations, remains a significant challenge. Many dataset paper submissions lack originality, diversity, or rigorous quality control, and these shortcomings are often overlooked during peer review. Submissions also frequently omit essential details about dataset construction and properties. While existing tools such as datasheets aim to promote transparency, they are largely descriptive and do not provide standardized, measurable methods for evaluating data quality. Similarly, metadata requirements at conferences promote accountability but are inconsistently enforced. To address these limitations, this position paper advocates for the integration of systematic, rubric-based evaluation metrics into the dataset review process, particularly as submission volumes continue to grow. We also explore scalable, cost-effective methods for synthetic data generation, including dedicated tools and LLM-as-a-judge approaches, to support more efficient evaluation. As a call to action, we introduce DataRubrics, a structured framework for assessing the quality of both human- and model-generated datasets. Leveraging recent advances in LLM-based evaluation, DataRubrics offers a reproducible, scalable, and actionable solution for dataset quality assessment, enabling both authors and reviewers to uphold higher standards in data-centric research. We also release code to support reproducibility of LLM-based evaluations at this https URL

Despite major advances in machine translation (MT) in recent years, progress remains limited for many low-resource languages that lack large-scale training data and linguistic resources. Cantonese and Wu Chinese are two Sinitic examples, although each enjoys more than 80 million speakers around the world. In this paper, we introduce SiniticMTError, a novel dataset that builds on existing parallel corpora to provide error span, error type, and error severity annotations in machine-translated examples from English to Mandarin, Cantonese, and Wu Chinese. Our dataset serves as a resource for the MT community to utilize in fine-tuning models with error detection capabilities, supporting research on translation quality estimation, error-aware generation, and low-resource language evaluation. We report our rigorous annotation process by native speakers, with analyses on inter-annotator agreement, iterative feedback, and patterns in error type and severity.
