Palo Alto Research Center

Conflict-Based Search (CBS) is a powerful algorithmic framework for optimally solving classical multi-agent path finding (MAPF) problems, where time is discretized into the time steps. Continuous-time CBS (CCBS) is a recently proposed version of CBS that guarantees optimal solutions without the need to discretize time. However, the scalability of CCBS is limited because it does not include any known improvements of CBS. In this paper, we begin to close this gap and explore how to adapt successful CBS improvements, namely, prioritizing conflicts (PC), disjoint splitting (DS), and high-level heuristics, to the continuous time setting of CCBS. These adaptions are not trivial, and require careful handling of different types of constraints, applying a generalized version of the Safe interval path planning (SIPP) algorithm, and extending the notion of cardinal conflicts. We evaluate the effect of the suggested enhancements by running experiments both on general graphs and 2k-neighborhood grids. CCBS with these improvements significantly outperforms vanilla CCBS, solving problems with almost twice as many agents in some cases and pushing the limits of multiagent path finding in continuous-time domains.

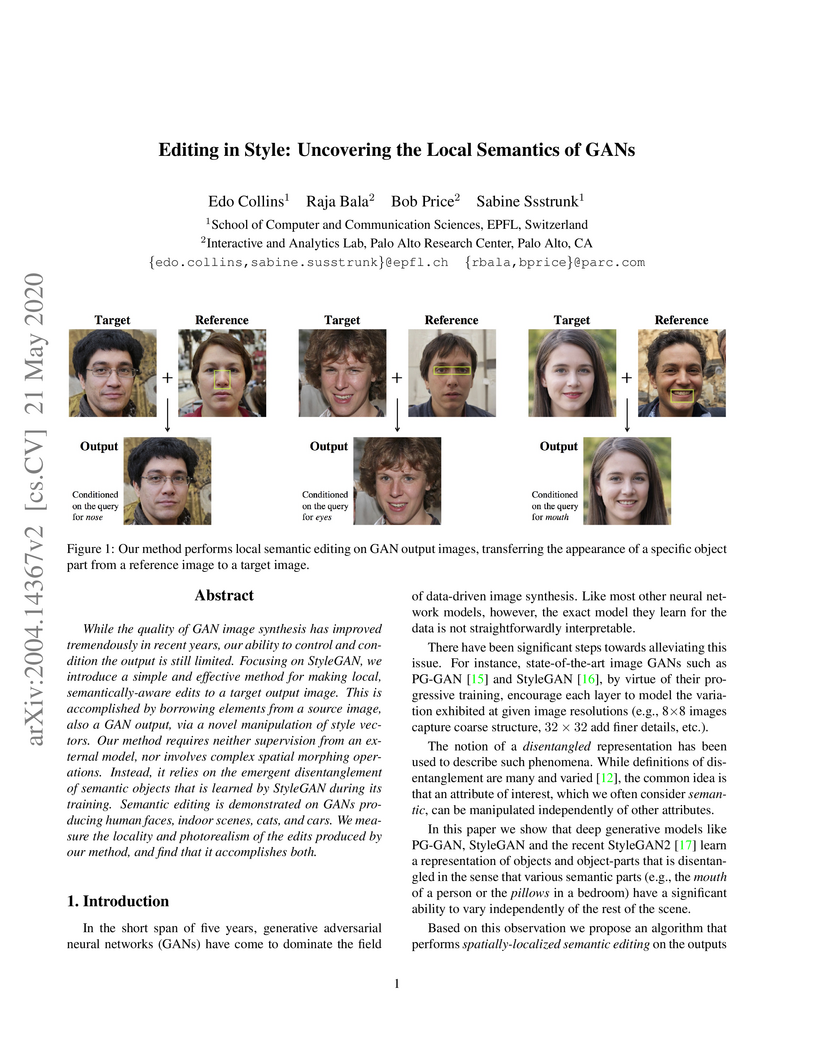

While the quality of GAN image synthesis has improved tremendously in recent

years, our ability to control and condition the output is still limited.

Focusing on StyleGAN, we introduce a simple and effective method for making

local, semantically-aware edits to a target output image. This is accomplished

by borrowing elements from a source image, also a GAN output, via a novel

manipulation of style vectors. Our method requires neither supervision from an

external model, nor involves complex spatial morphing operations. Instead, it

relies on the emergent disentanglement of semantic objects that is learned by

StyleGAN during its training. Semantic editing is demonstrated on GANs

producing human faces, indoor scenes, cats, and cars. We measure the locality

and photorealism of the edits produced by our method, and find that it

accomplishes both.

22 Oct 2021

In fluid physics, data-driven models to enhance or accelerate solution

methods are becoming increasingly popular for many application domains, such as

alternatives to turbulence closures, system surrogates, or for new physics

discovery. In the context of reduced order models of high-dimensional

time-dependent fluid systems, machine learning methods grant the benefit of

automated learning from data, but the burden of a model lies on its

reduced-order representation of both the fluid state and physical dynamics. In

this work, we build a physics-constrained, data-driven reduced order model for

the Navier-Stokes equations to approximate spatio-temporal turbulent fluid

dynamics. The model design choices mimic numerical and physical constraints by,

for example, implicitly enforcing the incompressibility constraint and

utilizing continuous Neural Ordinary Differential Equations for tracking the

evolution of the differential equation. We demonstrate this technique on

three-dimensional, moderate Reynolds number turbulent fluid flow. In assessing

the statistical quality and characteristics of the machine-learned model

through rigorous diagnostic tests, we find that our model is capable of

reconstructing the dynamics of the flow over large integral timescales,

favoring accuracy at the larger length scales. More significantly,

comprehensive diagnostics suggest that physically-interpretable model

parameters, corresponding to the representations of the fluid state and

dynamics, have attributable and quantifiable impact on the quality of the model

predictions and computational complexity.

We propose replacing scene text in videos using deep style transfer and

learned photometric transformations.Building on recent progress on still image

text replacement,we present extensions that alter text while preserving the

appearance and motion characteristics of the original video.Compared to the

problem of still image text replacement,our method addresses additional

challenges introduced by video, namely effects induced by changing lighting,

motion blur, diverse variations in camera-object pose over time,and

preservation of temporal consistency. We parse the problem into three steps.

First, the text in all frames is normalized to a frontal pose using a

spatio-temporal trans-former network. Second, the text is replaced in a single

reference frame using a state-of-art still-image text replacement method.

Finally, the new text is transferred from the reference to remaining frames

using a novel learned image transformation network that captures lighting and

blur effects in a temporally consistent manner. Results on synthetic and

challenging real videos show realistic text trans-fer, competitive quantitative

and qualitative performance,and superior inference speed relative to

alternatives. We introduce new synthetic and real-world datasets with paired

text objects. To the best of our knowledge this is the first attempt at deep

video text replacement.

03 Dec 2024

Model-based reasoning agents are ill-equipped to act in novel situations in

which their model of the environment no longer sufficiently represents the

world. We propose HYDRA - a framework for designing model-based agents

operating in mixed discrete-continuous worlds, that can autonomously detect

when the environment has evolved from its canonical setup, understand how it

has evolved, and adapt the agents' models to perform effectively. HYDRA is

based upon PDDL+, a rich modeling language for planning in mixed,

discrete-continuous environments. It augments the planning module with visual

reasoning, task selection, and action execution modules for closed-loop

interaction with complex environments. HYDRA implements a novel meta-reasoning

process that enables the agent to monitor its own behavior from a variety of

aspects. The process employs a diverse set of computational methods to maintain

expectations about the agent's own behavior in an environment. Divergences from

those expectations are useful in detecting when the environment has evolved and

identifying opportunities to adapt the underlying models. HYDRA builds upon

ideas from diagnosis and repair and uses a heuristics-guided search over model

changes such that they become competent in novel conditions. The HYDRA

framework has been used to implement novelty-aware agents for three diverse

domains - CartPole++ (a higher dimension variant of a classic control problem),

Science Birds (an IJCAI competition problem), and PogoStick (a specific problem

domain in Minecraft). We report empirical observations from these domains to

demonstrate the efficacy of various components in the novelty meta-reasoning

process.

23 Jan 2023

The Winograd Schema Challenge - a set of twin sentences involving pronoun

reference disambiguation that seem to require the use of commonsense knowledge

- was proposed by Hector Levesque in 2011. By 2019, a number of AI systems,

based on large pre-trained transformer-based language models and fine-tuned on

these kinds of problems, achieved better than 90% accuracy. In this paper, we

review the history of the Winograd Schema Challenge and discuss the lasting

contributions of the flurry of research that has taken place on the WSC in the

last decade. We discuss the significance of various datasets developed for WSC,

and the research community's deeper understanding of the role of surrogate

tasks in assessing the intelligence of an AI system.

We present a case study of artificial intelligence techniques applied to the

control of production printing equipment. Like many other real-world

applications, this complex domain requires high-speed autonomous

decision-making and robust continual operation. To our knowledge, this work

represents the first successful industrial application of embedded

domain-independent temporal planning. Our system handles execution failures and

multi-objective preferences. At its heart is an on-line algorithm that combines

techniques from state-space planning and partial-order scheduling. We suggest

that this general architecture may prove useful in other applications as more

intelligent systems operate in continual, on-line settings. Our system has been

used to drive several commercial prototypes and has enabled a new product

architecture for our industrial partner. When compared with state-of-the-art

off-line planners, our system is hundreds of times faster and often finds

better plans. Our experience demonstrates that domain-independent AI planning

based on heuristic search can flexibly handle time, resources, replanning, and

multiple objectives in a high-speed practical application without requiring

hand-coded control knowledge.

28 Feb 2017

Graphlets are induced subgraphs of a large network and are important for

understanding and modeling complex networks. Despite their practical

importance, graphlets have been severely limited to applications and domains

with relatively small graphs. Most previous work has focused on exact

algorithms, however, it is often too expensive to compute graphlets exactly in

massive networks with billions of edges, and finding an approximate count is

usually sufficient for many applications. In this work, we propose an unbiased

graphlet estimation framework that is (a) fast with significant speedups

compared to the state-of-the-art, (b) parallel with nearly linear-speedups, (c)

accurate with <1% relative error, (d) scalable and space-efficient for massive

networks with billions of edges, and (e) flexible for a variety of real-world

settings, as well as estimating macro and micro-level graphlet statistics

(e.g., counts) of both connected and disconnected graphlets. In addition, an

adaptive approach is introduced that finds the smallest sample size required to

obtain estimates within a given user-defined error bound. On 300 networks from

20 domains, we obtain <1% relative error for all graphlets. This is

significantly more accurate than existing methods while using less data.

Moreover, it takes a few seconds on billion edge graphs (as opposed to

days/weeks). These are by far the largest graphlet computations to date.

29 Mar 2022

Low phase noise lasers based on the combination of III-V semiconductors and

silicon photonics are well established in the near-infrared spectral regime.

Recent advances in the development of low-loss silicon nitride-based photonic

integrated resonators have allowed to outperform bulk external diode and fiber

lasers in both phase noise and frequency agility in the 1550

nm-telecommunication window. Here, we demonstrate for the first time a hybrid

integrated laser composed of a gallium nitride (GaN) based laser diode and a

silicon nitride photonic chip-based microresonator operating at record low

wavelengths as low as 410 nm in the near-ultraviolet wavelength region suitable

for addressing atomic transitions of atoms and ions used in atomic clocks,

quantum computing, or for underwater LiDAR. Using self-injection locking to a

high Q (0.4 × 106) photonic integrated microresonator we observe a

phase noise reduction of the Fabry-P\'erot laser at 461 nm by a factor greater

than 100×, limited by the device quality factor and back-reflection.

10 Sep 2019

The underlying physics of quantum mechanics has been discussed for decades without an agreed resolution to many questions. The measurement problem, wave function collapse and entangled states are mired in complexity and the difficulty of even agreeing on a definition of a measurement. This paper explores a completely different aspect of quantum mechanics, the physical mechanism for phonon quantization, to gain insights into quantum mechanics that may help address the broader questions.

Our research aims at developing intelligent systems to reduce the transportation-related energy expenditure of a large city by influencing individual behavior. We introduce COPTER - an intelligent travel assistant that evaluates multi-modal travel alternatives to find a plan that is acceptable to a person given their context and preferences. We propose a formulation for acceptable planning that brings together ideas from AI, machine learning, and economics. This formulation has been incorporated in COPTER that produces acceptable plans in real-time. We adopt a novel empirical evaluation framework that combines human decision data with a high fidelity multi-modal transportation simulation to demonstrate a 4\% energy reduction and 20\% delay reduction in a realistic deployment scenario in Los Angeles, California, USA.

Our research aims to develop interactive, social agents that can coach people

to learn new tasks, skills, and habits. In this paper, we focus on coaching

sedentary, overweight individuals (i.e., trainees) to exercise regularly. We

employ adaptive goal setting in which the intelligent health coach generates,

tracks, and revises personalized exercise goals for a trainee. The goals become

incrementally more difficult as the trainee progresses through the training

program. Our approach is model-based - the coach maintains a parameterized

model of the trainee's aerobic capability that drives its expectation of the

trainee's performance. The model is continually revised based on trainee-coach

interactions. The coach is embodied in a smartphone application, NutriWalking,

which serves as a medium for coach-trainee interaction. We adopt a task-centric

evaluation approach for studying the utility of the proposed algorithm in

promoting regular aerobic exercise. We show that our approach can adapt the

trainee program not only to several trainees with different capabilities, but

also to how a trainee's capability improves as they begin to exercise more.

Experts rate the goals selected by the coach better than other plausible goals,

demonstrating that our approach is consistent with clinical recommendations.

Further, in a 6-week observational study with sedentary participants, we show

that the proposed approach helps increase exercise volume performed each week.

This paper presents a general graph representation learning framework called DeepGL for learning deep node and edge representations from large (attributed) graphs. In particular, DeepGL begins by deriving a set of base features (e.g., graphlet features) and automatically learns a multi-layered hierarchical graph representation where each successive layer leverages the output from the previous layer to learn features of a higher-order. Contrary to previous work, DeepGL learns relational functions (each representing a feature) that generalize across-networks and therefore useful for graph-based transfer learning tasks. Moreover, DeepGL naturally supports attributed graphs, learns interpretable features, and is space-efficient (by learning sparse feature vectors). In addition, DeepGL is expressive, flexible with many interchangeable components, efficient with a time complexity of O(∣E∣), and scalable for large networks via an efficient parallel implementation. Compared with the state-of-the-art method, DeepGL is (1) effective for across-network transfer learning tasks and attributed graph representation learning, (2) space-efficient requiring up to 6x less memory, (3) fast with up to 182x speedup in runtime performance, and (4) accurate with an average improvement of 20% or more on many learning tasks.

Turning is the most commonly available and least expensive machining

operation, in terms of both machine-hour rates and tool insert prices. A

practical CNC process planner has to maximize the utilization of turning, not

only to attain precision requirements for turnable surfaces, but also to

minimize the machining cost, while non-turnable features can be left for other

processes such as milling. Most existing methods rely on separation of surface

features and lack guarantees when analyzing complex parts with interacting

features. In a previous study, we demonstrated successful implementation of a

feature-free milling process planner based on configuration space methods used

for spatial reasoning and AI search for planning. This paper extends the

feature-free method to include turning process planning. It opens up the

opportunity for seamless integration of turning actions into a mill-turn

process planner that can handle arbitrarily complex shapes with or without a

priori knowledge of feature semantics.

Northeastern University

Northeastern University University of Notre Dame

University of Notre Dame GoogleUniversity of Bern

GoogleUniversity of Bern University of California, San DiegoUniversity of PisaUniversity of HoustonUniversitat de BarcelonaInstitute of PhysicsAalto University School of SciencePalo Alto Research CenterIMT Alti StudiMax-Planck-Institut f ur Physik komplexer Systeme

University of California, San DiegoUniversity of PisaUniversity of HoustonUniversitat de BarcelonaInstitute of PhysicsAalto University School of SciencePalo Alto Research CenterIMT Alti StudiMax-Planck-Institut f ur Physik komplexer SystemeRepresented as graphs, real networks are intricate combinations of order and

disorder. Fixing some of the structural properties of network models to their

values observed in real networks, many other properties appear as statistical

consequences of these fixed observables, plus randomness in other respects.

Here we employ the dk-series, a complete set of basic characteristics of the

network structure, to study the statistical dependencies between different

network properties. We consider six real networks---the Internet, US airport

network, human protein interactions, technosocial web of trust, English word

network, and an fMRI map of the human brain---and find that many important

local and global structural properties of these networks are closely reproduced

by dk-random graphs whose degree distributions, degree correlations, and

clustering are as in the corresponding real network. We discuss important

conceptual, methodological, and practical implications of this evaluation of

network randomness, and release software to generate dk-random graphs.

Graph classification is a problem with practical applications in many

different domains. Most of the existing methods take the entire graph into

account when calculating graph features. In a graphlet-based approach, for

instance, the entire graph is processed to get the total count of different

graphlets or sub-graphs. In the real-world, however, graphs can be both large

and noisy with discriminative patterns confined to certain regions in the graph

only. In this work, we study the problem of attentional processing for graph

classification. The use of attention allows us to focus on small but

informative parts of the graph, avoiding noise in the rest of the graph. We

present a novel RNN model, called the Graph Attention Model (GAM), that

processes only a portion of the graph by adaptively selecting a sequence of

"interesting" nodes. The model is equipped with an external memory component

which allows it to integrate information gathered from different parts of the

graph. We demonstrate the effectiveness of the model through various

experiments.

02 Jul 2019

UC Berkeley

UC Berkeley University College London

University College London Texas A&M University

Texas A&M University University of MarylandSanta Fe InstituteGoogle Inc.QC Ware Corp1QB Information Technologies (1QBit)National Security AgencyPalo Alto Research CenterFraunhofer IOSB-INAUSRA Research Institute for Advanced Computer Science (RIACS)NASA, Ames Research Center

University of MarylandSanta Fe InstituteGoogle Inc.QC Ware Corp1QB Information Technologies (1QBit)National Security AgencyPalo Alto Research CenterFraunhofer IOSB-INAUSRA Research Institute for Advanced Computer Science (RIACS)NASA, Ames Research CenterThere have been multiple attempts to demonstrate that quantum annealing and,

in particular, quantum annealing on quantum annealing machines, has the

potential to outperform current classical optimization algorithms implemented

on CMOS technologies. The benchmarking of these devices has been controversial.

Initially, random spin-glass problems were used, however, these were quickly

shown to be not well suited to detect any quantum speedup. Subsequently,

benchmarking shifted to carefully crafted synthetic problems designed to

highlight the quantum nature of the hardware while (often) ensuring that

classical optimization techniques do not perform well on them. Even worse, to

date a true sign of improved scaling with the number of problem variables

remains elusive when compared to classical optimization techniques. Here, we

analyze the readiness of quantum annealing machines for real-world application

problems. These are typically not random and have an underlying structure that

is hard to capture in synthetic benchmarks, thus posing unexpected challenges

for optimization techniques, both classical and quantum alike. We present a

comprehensive computational scaling analysis of fault diagnosis in digital

circuits, considering architectures beyond D-wave quantum annealers. We find

that the instances generated from real data in multiplier circuits are harder

than other representative random spin-glass benchmarks with a comparable number

of variables. Although our results show that transverse-field quantum annealing

is outperformed by state-of-the-art classical optimization algorithms, these

benchmark instances are hard and small in the size of the input, therefore

representing the first industrial application ideally suited for testing

near-term quantum annealers and other quantum algorithmic strategies for

optimization problems.

Architectures that implement the Common Model of Cognition - Soar, ACT-R, and Sigma - have a prominent place in research on cognitive modeling as well as on designing complex intelligent agents. In this paper, we explore how computational models of analogical processing can be brought into these architectures to enable concept acquisition from examples obtained interactively. We propose a new analogical concept memory for Soar that augments its current system of declarative long-term memories. We frame the problem of concept learning as embedded within the larger context of interactive task learning (ITL) and embodied language processing (ELP). We demonstrate that the analogical learning methods implemented in the proposed memory can quickly learn a diverse types of novel concepts that are useful not only in recognition of a concept in the environment but also in action selection. Our approach has been instantiated in an implemented cognitive system \textsc{Aileen} and evaluated on a simulated robotic domain.

In contrast to today's IP-based host-oriented Internet architecture,

Information-Centric Networking (ICN) emphasizes content by making it directly

addressable and routable. Named Data Networking (NDN) architecture is an

instance of ICN that is being developed as a candidate next-generation Internet

architecture. By opportunistically caching content within the network (in

routers), NDN appears to be well-suited for large-scale content distribution

and for meeting the needs of increasingly mobile and bandwidth-hungry

applications that dominate today's Internet.

One key feature of NDN is the requirement for each content object to be

digitally signed by its producer. Thus, NDN should be, in principle, immune to

distributing fake (aka "poisoned") content. However, in practice, this poses

two challenges for detecting fake content in NDN routers: (1) overhead due to

signature verification and certificate chain traversal, and (2) lack of trust

context, i.e., determining which public keys are trusted to verify which

content. Because of these issues, NDN does not force routers to verify content

signatures, which makes the architecture susceptible to content poisoning

attacks.

This paper explores root causes of, and some cures for, content poisoning

attacks in NDN. In the process, it becomes apparent that meaningful mitigation

of content poisoning is contingent upon a network-layer trust management

architecture, elements of which we construct while carefully justifying

specific design choices. This work represents the initial effort towards

comprehensive trust management for NDN.

PATO: Producibility-Aware Topology Optimization using Deep Learning for Metal Additive Manufacturing

PATO: Producibility-Aware Topology Optimization using Deep Learning for Metal Additive Manufacturing

In this paper, we propose PATO-a producibility-aware topology optimization

(TO) framework to help efficiently explore the design space of components

fabricated using metal additive manufacturing (AM), while ensuring

manufacturability with respect to cracking. Specifically, parts fabricated

through Laser Powder Bed Fusion are prone to defects such as warpage or

cracking due to high residual stress values generated from the steep thermal

gradients produced during the build process. Maturing the design for such parts

and planning their fabrication can span months to years, often involving

multiple handoffs between design and manufacturing engineers. PATO is based on

the a priori discovery of crack-free designs, so that the optimized part can be

built defect-free at the outset. To ensure that the design is crack free during

optimization, producibility is explicitly encoded within the standard

formulation of TO, using a crack index. Multiple crack indices are explored and

using experimental validation, maximum shear strain index (MSSI) is shown to be

an accurate crack index. Simulating the build process is a coupled,

multi-physics computation and incorporating it in the TO loop can be

computationally prohibitive. We leverage the current advances in deep

convolutional neural networks and present a high-fidelity surrogate model based

on an Attention-based U-Net architecture to predict the MSSI values as a

spatially varying field over the part's domain. Further, we employ automatic

differentiation to directly compute the gradient of maximum MSSI with respect

to the input design variables and augment it with the performance-based

sensitivity field to optimize the design while considering the trade-off

between weight, manufacturability, and functionality. We demonstrate the

effectiveness of the proposed method through benchmark studies in 3D as well as

experimental validation.

There are no more papers matching your filters at the moment.