Queen Mary University of London

Queen Mary University of LondonMoralBERT introduces a suite of fine-tuned BERT models that accurately identify moral values in social media discussions, leveraging the Moral Foundations Theory across diverse datasets. The models, particularly MoralBERT_adv with domain-adversarial training, significantly outperform lexicon-based methods, traditional machine learning, and zero-shot GPT-4 for in-domain predictions, achieving up to 32% higher F1 scores.

This research introduces a co-evolutionary framework where individual strategies and the game environments they embody undergo simultaneous evolutionary selection. It demonstrates how such dynamic environments, particularly when structured by complex networks, foster the emergence and maintenance of cooperative behavior from various initial conditions.

Researchers from Queen Mary University of London and the Allen Institute for AI developed a code-agnostic approach using clinical language models to predict long-term Type 2 Diabetes microvascular complications from electronic health records. Their text-based models generally outperformed code-based methods, achieving a Micro-AUPRC of 0.51 for 5-year predictions, with performance further improving to 0.66 when recent clinical history was prioritized.

06 May 2025

Retrieval-Augmented Generation with Model Context Protocol (RAG-MCP) enables large language models to efficiently select from large sets of external tools by retrieving only relevant tool descriptions, reducing prompt token usage by 73% while maintaining tool selection accuracy across increasing tool pool sizes.

MMAR introduces the first comprehensive benchmark designed to assess complex reasoning capabilities across various audio modalities, including speech, sound, music, and their combinations. Experiments on this challenging dataset reveal that most open-source audio models perform near random chance, with the best performing model, Gemini 2.0 Flash, achieving approximately 62% accuracy, highlighting a substantial gap in current audio reasoning abilities.

University of Illinois at Urbana-Champaign

University of Illinois at Urbana-Champaign Monash University

Monash University Carnegie Mellon University

Carnegie Mellon University University of Notre Dame

University of Notre Dame UC Berkeley

UC Berkeley University College London

University College London Cornell UniversityCSIRO’s Data61

Cornell UniversityCSIRO’s Data61 Hugging FaceTU Darmstadt

Hugging FaceTU Darmstadt InriaSingapore Management UniversitySea AI Lab

InriaSingapore Management UniversitySea AI Lab MITIntelAWS AI Labs

MITIntelAWS AI Labs Shanghai Jiaotong University

Shanghai Jiaotong University Queen Mary University of London

Queen Mary University of London University of VirginiaUNC-Chapel Hill

University of VirginiaUNC-Chapel Hill ServiceNowContextual AIDetomo Inc

ServiceNowContextual AIDetomo IncBigCodeBench is a new benchmark that evaluates Large Language Models on their ability to generate Python code requiring diverse function calls and complex instructions, revealing that current models like GPT-4o achieve a maximum of 60% accuracy on these challenging tasks, significantly lagging human performance.

ViMo introduces the first generative visual GUI world model that predicts future application states as high-fidelity images, decoupling graphic and text generation to overcome pixel-level text rendering challenges. This model enhances App agents' decision-making by providing visual foresight, leading to improved task completion and action accuracy.

Monash UniversityLeipzig University

Monash UniversityLeipzig University Northeastern University

Northeastern University Carnegie Mellon University

Carnegie Mellon University New York University

New York University Stanford University

Stanford University McGill University

McGill University University of British ColumbiaCSIRO’s Data61IBM Research

University of British ColumbiaCSIRO’s Data61IBM Research Columbia UniversityScaDS.AI

Columbia UniversityScaDS.AI Hugging Face

Hugging Face Johns Hopkins UniversityWeizmann Institute of ScienceThe Alan Turing InstituteSea AI Lab

Johns Hopkins UniversityWeizmann Institute of ScienceThe Alan Turing InstituteSea AI Lab MIT

MIT Queen Mary University of LondonUniversity of VermontSAP

Queen Mary University of LondonUniversity of VermontSAP ServiceNowIsrael Institute of TechnologyWellesley CollegeEleuther AIRobloxUniversity ofTelefonica I+DTechnical University ofNotre DameMunichDiscover Dollar Pvt LtdUnfoldMLAllahabadTechnion –Saama AI Research LabTolokaForschungszentrum J",

ServiceNowIsrael Institute of TechnologyWellesley CollegeEleuther AIRobloxUniversity ofTelefonica I+DTechnical University ofNotre DameMunichDiscover Dollar Pvt LtdUnfoldMLAllahabadTechnion –Saama AI Research LabTolokaForschungszentrum J",StarCoder and StarCoderBase are large language models for code developed by The BigCode community, demonstrating state-of-the-art performance among open-access models on Python code generation, achieving 33.6% pass@1 on HumanEval, and strong multi-language capabilities, all while integrating responsible AI practices.

07 Nov 2025

Diffusion models have transformed image generation, yet controlling their outputs to reliably erase undesired concepts remains challenging. Existing approaches usually require task-specific training and struggle to generalize across both concrete (e.g., objects) and abstract (e.g., styles) concepts. We propose CASteer (Cross-Attention Steering), a training-free framework for concept erasure in diffusion models using steering vectors to influence hidden representations dynamically. CASteer precomputes concept-specific steering vectors by averaging neural activations from images generated for each target concept. During inference, it dynamically applies these vectors to suppress undesired concepts only when they appear, ensuring that unrelated regions remain unaffected. This selective activation enables precise, context-aware erasure without degrading overall image quality. This approach achieves effective removal of harmful or unwanted content across a wide range of visual concepts, all without model retraining. CASteer outperforms state-of-the-art concept erasure techniques while preserving unrelated content and minimizing unintended effects. Pseudocode is provided in the supplementary.

As an instance-level recognition problem, person re-identification (ReID) relies on discriminative features, which not only capture different spatial scales but also encapsulate an arbitrary combination of multiple scales. We call features of both homogeneous and heterogeneous scales omni-scale features. In this paper, a novel deep ReID CNN is designed, termed Omni-Scale Network (OSNet), for omni-scale feature learning. This is achieved by designing a residual block composed of multiple convolutional streams, each detecting features at a certain scale. Importantly, a novel unified aggregation gate is introduced to dynamically fuse multi-scale features with input-dependent channel-wise weights. To efficiently learn spatial-channel correlations and avoid overfitting, the building block uses pointwise and depthwise convolutions. By stacking such block layer-by-layer, our OSNet is extremely lightweight and can be trained from scratch on existing ReID benchmarks. Despite its small model size, OSNet achieves state-of-the-art performance on six person ReID datasets, outperforming most large-sized models, often by a clear margin. Code and models are available at: \url{this https URL}.

Recent advancements in multimodal large language models (MLLMs) have focused on integrating multiple modalities, yet their ability to simultaneously process and reason across different inputs remains underexplored. We introduce OmniBench, a novel benchmark designed to evaluate models' ability to recognize, interpret, and reason across visual, acoustic, and textual inputs simultaneously. We define language models capable of such tri-modal processing as omni-language models (OLMs). OmniBench features high-quality human annotations that require integrated understanding across all modalities. Our evaluation reveals that: i) open-source OLMs show significant limitations in instruction-following and reasoning in tri-modal contexts; and ii) most baseline models perform poorly (around 50% accuracy) even with textual alternatives to image/audio inputs. To address these limitations, we develop OmniInstruct, an 96K-sample instruction tuning dataset for training OLMs. We advocate for developing more robust tri-modal integration techniques and training strategies to enhance OLM performance. Codes and data could be found at our repo (this https URL).

Ginestra Bianconi's work proposes a theory where gravity arises from a Lorentz-invariant entropic action, defined as the quantum relative entropy between the spacetime metric and a matter-induced metric. This framework yields modified Einstein equations that are at most second-order in derivatives, naturally recovering standard General Relativity in a low coupling limit and demonstrating the emergence of a positive cosmological constant dependent on an auxiliary field.

This book provides a structured and comprehensive guide for preparing for deep learning job interviews and graduate exams, featuring hundreds of fully-solved problems. Authored by Shlomo Kashani and edited by Amir Ivry, the resource aims to deepen candidates' conceptual understanding and practical problem-solving skills, enabling them to confidently articulate complex deep learning concepts.

A comprehensive benchmark and evaluation framework for assessing video-language models' spatio-temporal reasoning capabilities, introducing V-STaR dataset and the Reverse Spatio-Temporal Reasoning task that reveals models' ability to ground "what," "when," and "where" aspects of video understanding through coarse-to-fine questioning chains.

MERT introduces a general-purpose, computationally affordable, self-supervised acoustic music understanding model that employs a novel multi-task framework with both acoustic and music-specific teachers. It achieves state-of-the-art performance across 14 diverse Music Information Retrieval tasks while being significantly more efficient than prior large generative models.

24 Jun 2025

University of Toronto

University of Toronto California Institute of Technology

California Institute of Technology University of Pittsburgh

University of Pittsburgh Carnegie Mellon University

Carnegie Mellon University Stanford University

Stanford University Cornell University

Cornell University McGill University

McGill University University of British Columbia

University of British Columbia University of Pennsylvania

University of Pennsylvania Johns Hopkins University

Johns Hopkins University Arizona State University

Arizona State University Princeton UniversityCardiff University

Princeton UniversityCardiff University Queen Mary University of London

Queen Mary University of London Flatiron InstituteNISTUniversity of Cape TownUniversity of KwaZulu-NatalWMAP

Flatiron InstituteNISTUniversity of Cape TownUniversity of KwaZulu-NatalWMAPUtilizing the Atacama Cosmology Telescope's Data Release 6, researchers rigorously tested the standard "Lambda Cold Dark Matter" (ΛCDM) cosmological model and constrained numerous extensions to it, finding continued consistency with ΛCDM and setting the tightest limits to date on many fundamental physics parameters, while observing no statistical preference for models designed to alleviate cosmological tensions like the Hubble or S₈ discrepancies.

Researchers developed Deliberate Practice Policy Optimization (DPPO), a metacognitive training framework that integrates Reinforcement Learning and Supervised Fine-tuning to build embodied intelligence. The resulting Pelican-VL 1.0 model (72B parameters) achieved a 20.3% performance improvement over its base model and outperformed several 200B-level closed-source models on various embodied tasks.

The advent of large language models (LLMs) has revolutionized the field of natural language processing, yet they might be attacked to produce harmful content. Despite efforts to ethically align LLMs, these are often fragile and can be circumvented by jailbreaking attacks through optimized or manual adversarial prompts. To address this, we introduce the Information Bottleneck Protector (IBProtector), a defense mechanism grounded in the information bottleneck principle, and we modify the objective to avoid trivial solutions. The IBProtector selectively compresses and perturbs prompts, facilitated by a lightweight and trainable extractor, preserving only essential information for the target LLMs to respond with the expected answer. Moreover, we further consider a situation where the gradient is not visible to be compatible with any LLM. Our empirical evaluations show that IBProtector outperforms current defense methods in mitigating jailbreak attempts, without overly affecting response quality or inference speed. Its effectiveness and adaptability across various attack methods and target LLMs underscore the potential of IBProtector as a novel, transferable defense that bolsters the security of LLMs without requiring modifications to the underlying models.

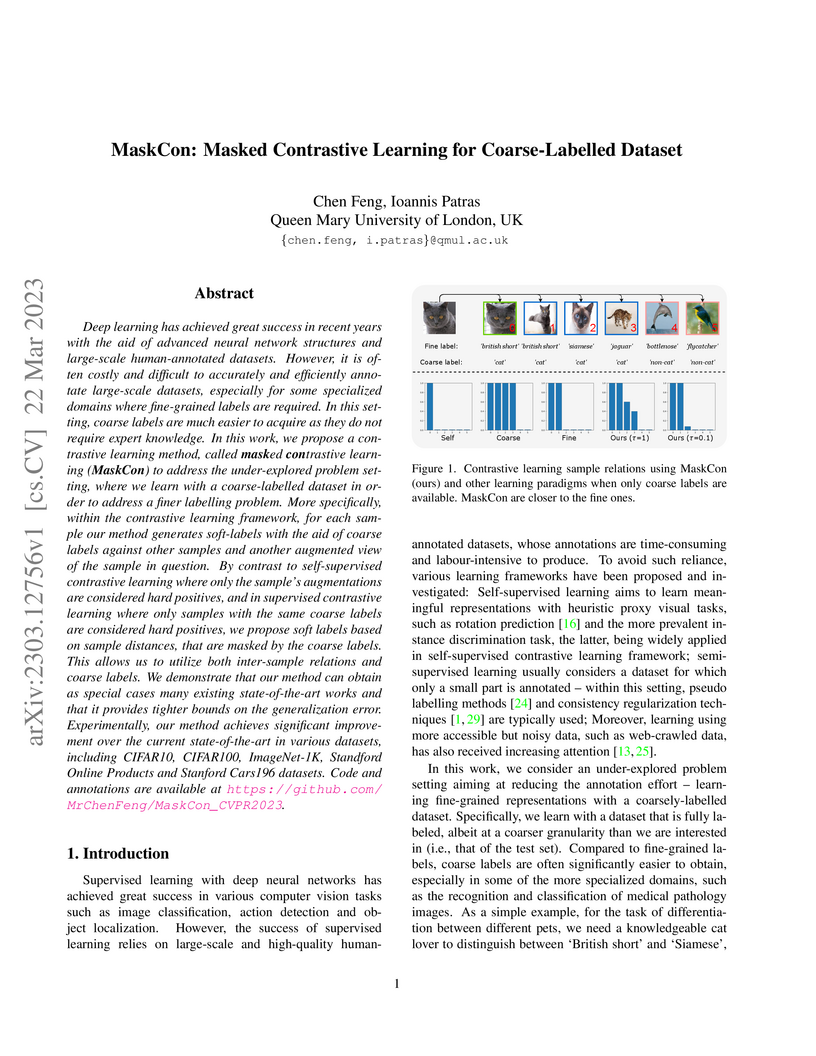

Deep learning has achieved great success in recent years with the aid of advanced neural network structures and large-scale human-annotated datasets. However, it is often costly and difficult to accurately and efficiently annotate large-scale datasets, especially for some specialized domains where fine-grained labels are required. In this setting, coarse labels are much easier to acquire as they do not require expert knowledge. In this work, we propose a contrastive learning method, called Masked Contrastive learning~(MaskCon) to address the under-explored problem setting, where we learn with a coarse-labelled dataset in order to address a finer labelling problem. More specifically, within the contrastive learning framework, for each sample our method generates soft-labels with the aid of coarse labels against other samples and another augmented view of the sample in question. By contrast to self-supervised contrastive learning where only the sample's augmentations are considered hard positives, and in supervised contrastive learning where only samples with the same coarse labels are considered hard positives, we propose soft labels based on sample distances, that are masked by the coarse labels. This allows us to utilize both inter-sample relations and coarse labels. We demonstrate that our method can obtain as special cases many existing state-of-the-art works and that it provides tighter bounds on the generalization error. Experimentally, our method achieves significant improvement over the current state-of-the-art in various datasets, including CIFAR10, CIFAR100, ImageNet-1K, Standford Online Products and Stanford Cars196 datasets. Code and annotations are available at this https URL.

20 Aug 2025

The Dynamic Risk-Aware MPPI (DRA-MPPI) framework enables mobile robots to navigate crowded environments by efficiently approximating joint collision probabilities from multi-modal human movement predictions. This method maintains high success rates (98-99%) and low collision probabilities while preserving operational efficiency in both simulations and real-robot deployments.

There are no more papers matching your filters at the moment.