Ryerson University

The non-uniform photoelectric response of infrared imaging systems results in

fixed-pattern stripe noise being superimposed on infrared images, which

severely reduces image quality. As the applications of degraded infrared images

are limited, it is crucial to effectively preserve original details. Existing

image destriping methods struggle to concurrently remove all stripe noise

artifacts, preserve image details and structures, and balance real-time

performance. In this paper we propose a novel algorithm for destriping degraded

images, which takes advantage of neighbouring column signal correlation to

remove independent column stripe noise. This is achieved through an iterative

deep unfolding algorithm where the estimated noise of one network iteration is

used as input to the next iteration. This progression substantially reduces the

search space of possible function approximations, allowing for efficient

training on larger datasets. The proposed method allows for a more precise

estimation of stripe noise to preserve scene details more accurately. Extensive

experimental results demonstrate that the proposed model outperforms existing

destriping methods on artificially corrupted images on both quantitative and

qualitative assessments.

This research from Tsinghua University and Toronto Metropolitan University proposes two multi-agent reinforcement learning methods, CPPI-MADDPG and TIPP-MADDPG, which integrate portfolio insurance strategies with MADDPG for quantitative trading. Empirical validation on real-world stock data shows these hybrid strategies achieve higher annual returns and superior risk-adjusted returns with reduced maximum drawdowns compared to traditional and pure MARL baselines.

Prior works have proposed several strategies to reduce the computational cost of self-attention mechanism. Many of these works consider decomposing the self-attention procedure into regional and local feature extraction procedures that each incurs a much smaller computational complexity. However, regional information is typically only achieved at the expense of undesirable information lost owing to down-sampling. In this paper, we propose a novel Transformer architecture that aims to mitigate the cost issue, named Dual Vision Transformer (Dual-ViT). The new architecture incorporates a critical semantic pathway that can more efficiently compress token vectors into global semantics with reduced order of complexity. Such compressed global semantics then serve as useful prior information in learning finer pixel level details, through another constructed pixel pathway. The semantic pathway and pixel pathway are then integrated together and are jointly trained, spreading the enhanced self-attention information in parallel through both of the pathways. Dual-ViT is henceforth able to reduce the computational complexity without compromising much accuracy. We empirically demonstrate that Dual-ViT provides superior accuracy than SOTA Transformer architectures with reduced training complexity. Source code is available at \url{this https URL}.

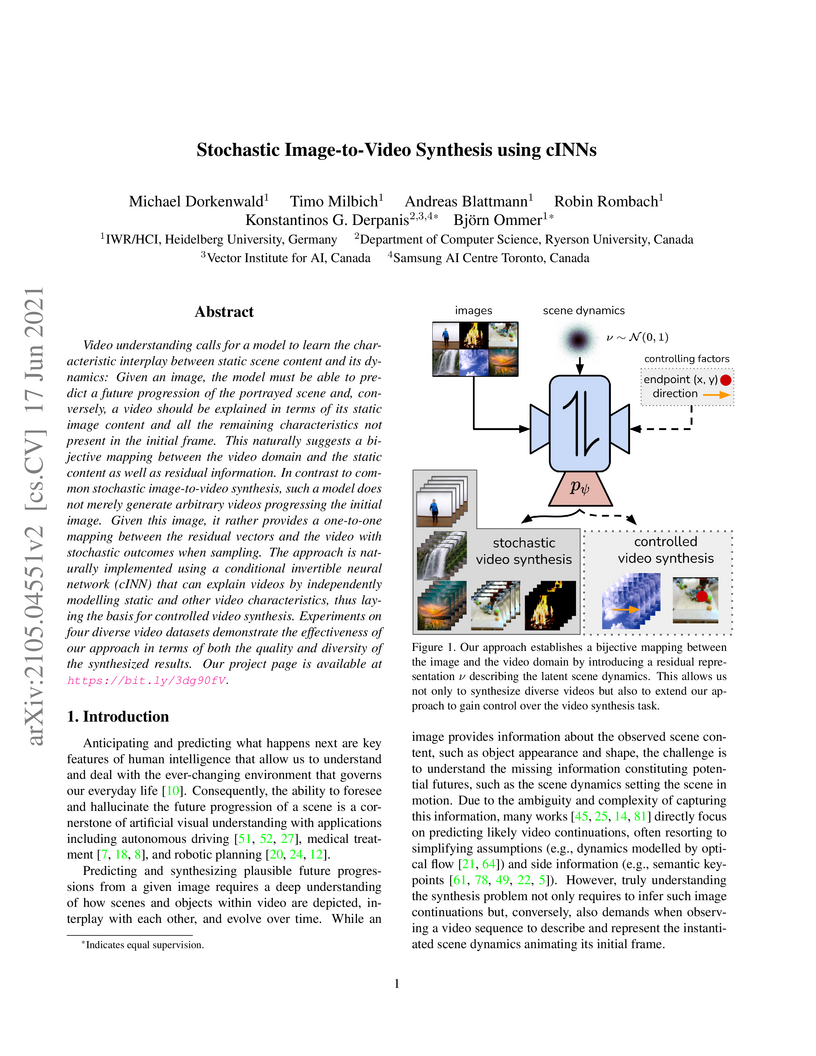

Video understanding calls for a model to learn the characteristic interplay between static scene content and its dynamics: Given an image, the model must be able to predict a future progression of the portrayed scene and, conversely, a video should be explained in terms of its static image content and all the remaining characteristics not present in the initial frame. This naturally suggests a bijective mapping between the video domain and the static content as well as residual information. In contrast to common stochastic image-to-video synthesis, such a model does not merely generate arbitrary videos progressing the initial image. Given this image, it rather provides a one-to-one mapping between the residual vectors and the video with stochastic outcomes when sampling. The approach is naturally implemented using a conditional invertible neural network (cINN) that can explain videos by independently modelling static and other video characteristics, thus laying the basis for controlled video synthesis. Experiments on four diverse video datasets demonstrate the effectiveness of our approach in terms of both the quality and diversity of the synthesized results. Our project page is available at this https URL.

Deep learning has become very popular for tasks such as predictive modeling

and pattern recognition in handling big data. Deep learning is a powerful

machine learning method that extracts lower level features and feeds them

forward for the next layer to identify higher level features that improve

performance. However, deep neural networks have drawbacks, which include many

hyper-parameters and infinite architectures, opaqueness into results, and

relatively slower convergence on smaller datasets. While traditional machine

learning algorithms can address these drawbacks, they are not typically capable

of the performance levels achieved by deep neural networks. To improve

performance, ensemble methods are used to combine multiple base learners. Super

learning is an ensemble that finds the optimal combination of diverse learning

algorithms. This paper proposes deep super learning as an approach which

achieves log loss and accuracy results competitive to deep neural networks

while employing traditional machine learning algorithms in a hierarchical

structure. The deep super learner is flexible, adaptable, and easy to train

with good performance across different tasks using identical hyper-parameter

values. Using traditional machine learning requires fewer hyper-parameters,

allows transparency into results, and has relatively fast convergence on

smaller datasets. Experimental results show that the deep super learner has

superior performance compared to the individual base learners, single-layer

ensembles, and in some cases deep neural networks. Performance of the deep

super learner may further be improved with task-specific tuning.

Fixing bugs in a timely manner lowers various potential costs in software maintenance. However, manual bug fixing scheduling can be time-consuming, cumbersome, and error-prone. In this paper, we propose the Schedule and Dependency-aware Bug Triage (S-DABT), a bug triaging method that utilizes integer programming and machine learning techniques to assign bugs to suitable developers. Unlike prior works that largely focus on a single component of the bug reports, our approach takes into account the textual data, bug fixing costs, and bug dependencies. We further incorporate the schedule of developers in our formulation to have a more comprehensive model for this multifaceted problem. As a result, this complete formulation considers developers' schedules and the blocking effects of the bugs while covering the most significant aspects of the previously proposed methods. Our numerical study on four open-source software systems, namely, EclipseJDT, LibreOffice, GCC, and Mozilla, shows that taking into account the schedules of the developers decreases the average bug fixing times. We find that S-DABT leads to a high level of developer utilization through a fair distribution of the tasks among the developers and efficient use of the free spots in their schedules. Via the simulation of the issue tracking system, we also show how incorporating the schedule in the model formulation reduces the bug fixing time, improves the assignment accuracy, and utilizes the capability of each developer without much comprising in the model run times. We find that S-DABT decreases the complexity of the bug dependency graph by prioritizing blocking bugs and effectively reduces the infeasible assignment ratio due to bug dependencies. Consequently, we recommend considering developers' schedules while automating bug triage.

With the rapid growth of the Internet of Things (IoT) and a wide range of mobile devices, the conventional cloud computing paradigm faces significant challenges (high latency, bandwidth cost, etc.). Motivated by those constraints and concerns for the future of the IoT, modern architectures are gearing toward distributing the cloud computational resources to remote locations where most end-devices are located. Edge and fog computing are considered as the key enablers for applications where centralized cloud-based solutions are not suitable. In this paper, we review the high-level definition of edge, fog, cloud computing, and their configurations in various IoT scenarios. We further discuss their interactions and collaborations in many applications such as cloud offloading, smart cities, health care, and smart agriculture. Though there are still challenges in the development of such distributed systems, early research to tackle those limitations have also surfaced.

Local Interpretable Model-Agnostic Explanations (LIME) is a popular technique

used to increase the interpretability and explainability of black box Machine

Learning (ML) algorithms. LIME typically generates an explanation for a single

prediction by any ML model by learning a simpler interpretable model (e.g.

linear classifier) around the prediction through generating simulated data

around the instance by random perturbation, and obtaining feature importance

through applying some form of feature selection. While LIME and similar local

algorithms have gained popularity due to their simplicity, the random

perturbation and feature selection methods result in "instability" in the

generated explanations, where for the same prediction, different explanations

can be generated. This is a critical issue that can prevent deployment of LIME

in a Computer-Aided Diagnosis (CAD) system, where stability is of utmost

importance to earn the trust of medical professionals. In this paper, we

propose a deterministic version of LIME. Instead of random perturbation, we

utilize agglomerative Hierarchical Clustering (HC) to group the training data

together and K-Nearest Neighbour (KNN) to select the relevant cluster of the

new instance that is being explained. After finding the relevant cluster, a

linear model is trained over the selected cluster to generate the explanations.

Experimental results on three different medical datasets show the superiority

for Deterministic Local Interpretable Model-Agnostic Explanations (DLIME),

where we quantitatively determine the stability of DLIME compared to LIME

utilizing the Jaccard similarity among multiple generated explanations.

Path planning is an essential component of mobile robotics. Classical path

planning algorithms, such as wavefront and rapidly-exploring random tree (RRT)

are used heavily in autonomous robots. With the recent advances in machine

learning, development of learning-based path planning algorithms has been

experiencing rapid growth. An unified path planning interface that facilitates

the development and benchmarking of existing and new algorithms is needed. This

paper presents PathBench, a platform for developing, visualizing, training,

testing, and benchmarking of existing and future, classical and learning-based

path planning algorithms in 2D and 3D grid world environments. Many existing

path planning algorithms are supported; e.g. A*, Dijkstra, waypoint planning

networks, value iteration networks, gated path planning networks; and

integrating new algorithms is easy and clearly specified. The benchmarking

ability of PathBench is explored in this paper by comparing algorithms across

five different hardware systems and three different map types, including

built-in PathBench maps, video game maps, and maps from real world databases.

Metrics, such as path length, success rate, and computational time, were used

to evaluate algorithms. Algorithmic analysis was also performed on a real world

robot to demonstrate PathBench's support for Robot Operating System (ROS).

PathBench is open source.

08 Feb 2024

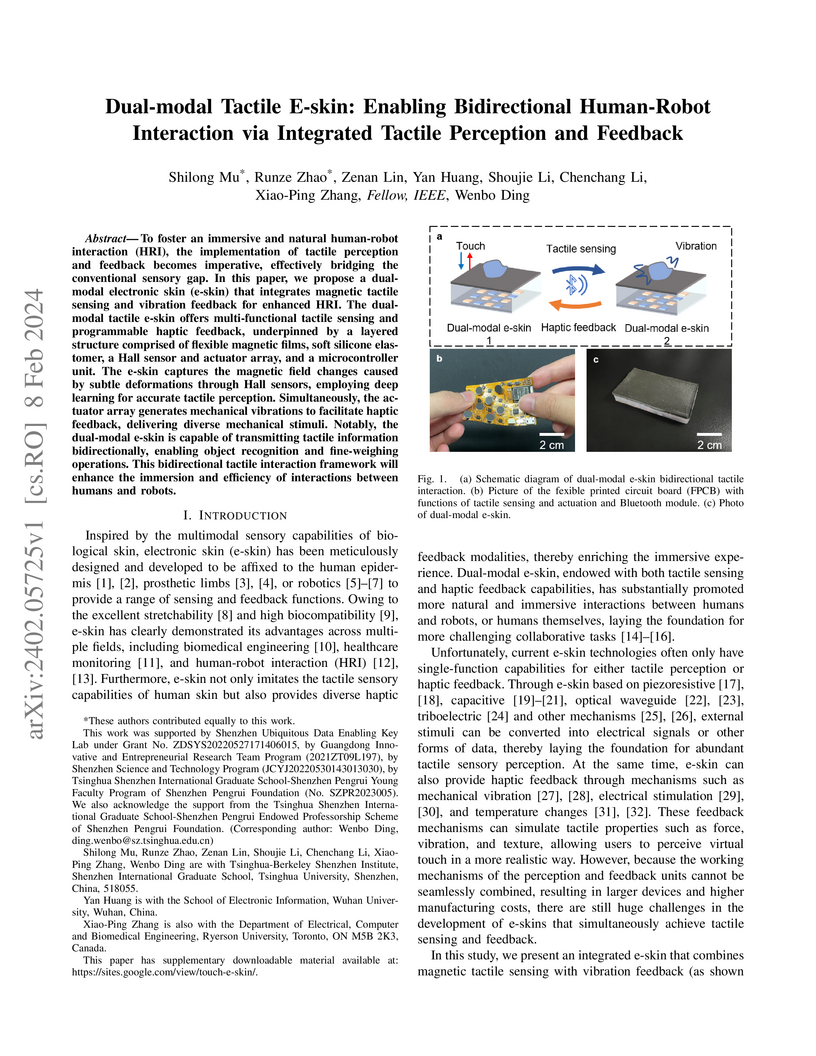

To foster an immersive and natural human-robot interaction, the implementation of tactile perception and feedback becomes imperative, effectively bridging the conventional sensory gap. In this paper, we propose a dual-modal electronic skin (e-skin) that integrates magnetic tactile sensing and vibration feedback for enhanced human-robot interaction. The dual-modal tactile e-skin offers multi-functional tactile sensing and programmable haptic feedback, underpinned by a layered structure comprised of flexible magnetic films, soft silicone, a Hall sensor and actuator array, and a microcontroller unit. The e-skin captures the magnetic field changes caused by subtle deformations through Hall sensors, employing deep learning for accurate tactile perception. Simultaneously, the actuator array generates mechanical vibrations to facilitate haptic feedback, delivering diverse mechanical stimuli. Notably, the dual-modal e-skin is capable of transmitting tactile information bidirectionally, enabling object recognition and fine-weighing operations. This bidirectional tactile interaction framework will enhance the immersion and efficiency of interactions between humans and robots.

25 Feb 2021

We consider Flipping Coins, a partizan version of the impartial game Turning Turtles, played on lines of coins. We show the values of this game are numbers, and these are found by first applying a reduction, then decomposing the position into an iterated ordinal sum. This is unusual since moves in the middle of the line do not eliminate the rest of the line. Moreover, when G is decomposed into lines H and K, then G=(H:KR). This is in contrast to Hackenbush Strings where G=(H:K).

Real-world complex networks are usually being modeled as graphs. The concept of graphs assumes that the relations within the network are binary (for instance, between pairs of nodes); however, this is not always true for many real-life scenarios, such as peer-to-peer communication schemes, paper co-authorship, or social network interactions. For such scenarios, it is often the case that the underlying network is better and more naturally modeled by hypergraphs. A hypergraph is a generalization of a graph in which a single (hyper)edge can connect any number of vertices. Hypergraphs allow modelers to have a complete representation of multi-relational (many-to-many) networks; hence, they are extremely suitable for analyzing and discovering more subtle dependencies in such data structures.

Working with hypergraphs requires new software libraries that make it possible to perform operations on them, from basic algorithms (such as searching or traversing the network) to computing significant hypergraph measures, to including more challenging algorithms (such as community detection). In this paper, we present a new software library, this http URL, written in the Julia language and designed for high-performance computing on hypergraphs and propose two new algorithms for analyzing their properties: s-betweenness and modified label propagation.

We also present various approaches for hypergraph visualization integrated into our tool. In order to demonstrate how to exploit the library in practice, we discuss two case studies based on the 2019 Yelp Challenge dataset and the collaboration network built upon the Game of Thrones TV series. The results are promising and they confirm the ability of hypergraphs to provide more insight than standard graph-based approaches.

In recent years, computer-aided diagnosis has become an increasingly popular topic. Methods based on convolutional neural networks have achieved good performance in medical image segmentation and classification. Due to the limitations of the convolution operation, the long-term spatial features are often not accurately obtained. Hence, we propose a TransClaw U-Net network structure, which combines the convolution operation with the transformer operation in the encoding part. The convolution part is applied for extracting the shallow spatial features to facilitate the recovery of the image resolution after upsampling. The transformer part is used to encode the patches, and the self-attention mechanism is used to obtain global information between sequences. The decoding part retains the bottom upsampling structure for better detail segmentation performance. The experimental results on Synapse Multi-organ Segmentation Datasets show that the performance of TransClaw U-Net is better than other network structures. The ablation experiments also prove the generalization performance of TransClaw U-Net.

An intelligent observer looks at the world and sees not only what is, but what is moving and what can be moved. In other words, the observer sees how the present state of the world can transform in the future. We propose a model that predicts future images by learning to represent the present state and its transformation given only a sequence of images. To do so, we introduce an architecture with a latent state composed of two components designed to capture (i) the present image state and (ii) the transformation between present and future states, respectively. We couple this latent state with a recurrent neural network (RNN) core that predicts future frames by transforming past states into future states by applying the accumulated state transformation with a learned operator. We describe how this model can be integrated into an encoder-decoder convolutional neural network (CNN) architecture that uses weighted residual connections to integrate representations of the past with representations of the future. Qualitatively, our approach generates image sequences that are stable and capture realistic motion over multiple predicted frames, without requiring adversarial training. Quantitatively, our method achieves prediction results comparable to state-of-the-art results on standard image prediction benchmarks (Moving MNIST, KTH, and UCF101).

14 May 2021

Ethereum Smart Contracts based on Blockchain Technology (BT)enables monetary transactions among peers on a blockchain network independent of a central authorizing agency. Ethereum smart contracts are programs that are deployed as decentralized applications, having the building blocks of the blockchain consensus protocol. This enables consumers to make agreements in a transparent and conflict-free environment. However, there exist some security vulnerabilities within these smart contracts that are a potential threat to the applications and their consumers and have shown in the past to cause huge financial losses. In this study, we review the existing literature and broadly classify the BT applications. As Ethereum smart contracts find their application mostly in e-commerce applications, we believe these are more commonly vulnerable to attacks. In these smart contracts, we mainly focus on identifying vulnerabilities that programmers and users of smart contracts must avoid. This paper aims at explaining eight vulnerabilities that are specific to the application level of BT by analyzing the past exploitation case scenarios of these security vulnerabilities. We also review some of the available tools and applications that detect these vulnerabilities in terms of their approach and effectiveness. We also investigated the availability of detection tools for identifying these security vulnerabilities and lack thereof to identify some of them

05 Oct 2020

Mobile edge computing (MEC) is a promising technology to support mission-critical vehicular applications, such as intelligent path planning and safety applications. In this paper, a collaborative edge computing framework is developed to reduce the computing service latency and improve service reliability for vehicular networks. First, a task partition and scheduling algorithm (TPSA) is proposed to decide the workload allocation and schedule the execution order of the tasks offloaded to the edge servers given a computation offloading strategy. Second, an artificial intelligence (AI) based collaborative computing approach is developed to determine the task offloading, computing, and result delivery policy for vehicles. Specifically, the offloading and computing problem is formulated as a Markov decision process. A deep reinforcement learning technique, i.e., deep deterministic policy gradient, is adopted to find the optimal solution in a complex urban transportation network. By our approach, the service cost, which includes computing service latency and service failure penalty, can be minimized via the optimal workload assignment and server selection in collaborative computing. Simulation results show that the proposed AI-based collaborative computing approach can adapt to a highly dynamic environment with outstanding performance.

30 Jun 2012

This article reviews many manifestations and applications of dual

representations of pairs of groups, primarily in atomic and nuclear physics.

Examples are given to show how such paired representations are powerful aids in

understanding the dynamics associated with shell-model coupling schemes and in

identifying the physical situations for which a given scheme is most

appropriate. In particular, they suggest model Hamiltonians that are diagonal

in the various coupling schemes. The dual pairing of group representations has

been applied profitably in mathematics to the study of invariant theory. We

show that parallel applications to the theory of symmetry and dynamical groups

in physics are equally valuable. In particular, the pairing of the

representations of a discrete group with those of a continuous Lie group or

those of a compact Lie with those of a non-compact Lie group makes it possible

to infer many properties of difficult groups from those of simpler groups. This

review starts with the representations of the symmetric and unitary groups,

which are used extensively in the many-particle quantum mechanics of bosonic

and fermionic systems. It gives a summary of the many solutions and

computational techniques for solving problems that arise in applications of

symmetry methods in physics and which result from the famous Schur-Weyl duality

theorem for the pairing of these representations. It continues to examine many

chains of symmetry groups and dual chains of dynamical groups associated with

the several coupling schemes in atomic and nuclear shell models and the

valuable insights and applications that result from this examination.

Back to Basics: Unsupervised Learning of Optical Flow via Brightness Constancy and Motion Smoothness

Back to Basics: Unsupervised Learning of Optical Flow via Brightness Constancy and Motion Smoothness

Recently, convolutional networks (convnets) have proven useful for predicting

optical flow. Much of this success is predicated on the availability of large

datasets that require expensive and involved data acquisition and laborious la-

beling. To bypass these challenges, we propose an unsuper- vised approach

(i.e., without leveraging groundtruth flow) to train a convnet end-to-end for

predicting optical flow be- tween two images. We use a loss function that

combines a data term that measures photometric constancy over time with a

spatial term that models the expected variation of flow across the image.

Together these losses form a proxy measure for losses based on the groundtruth

flow. Empiri- cally, we show that a strong convnet baseline trained with the

proposed unsupervised approach outperforms the same network trained with

supervision on the KITTI dataset.

This paper considers the joint channel estimation and device activity detection in the grant-free random access systems, where a large number of Internet-of-Things devices intend to communicate with a low-earth orbit satellite in a sporadic way. In addition, the massive multiple-input multiple-output (MIMO) with orthogonal time-frequency space (OTFS) modulation is adopted to combat the dynamics of the terrestrial-satellite link. We first analyze the input-output relationship of the single-input single-output OTFS when the large delay and Doppler shift both exist, and then extend it to the grant-free random access with massive MIMO-OTFS. Next, by exploring the sparsity of channel in the delay-Doppler-angle domain, a two-dimensional pattern coupled hierarchical prior with the sparse Bayesian learning and covariance-free method (TDSBL-CF) is developed for the channel estimation. Then, the active devices are detected by computing the energy of the estimated channel. Finally, the generalized approximate message passing algorithm combined with the sparse Bayesian learning and two-dimensional convolution (ConvSBL-GAMP) is proposed to decrease the computations of the TDSBL-CF algorithm. Simulation results demonstrate that the proposed algorithms outperform conventional methods.

Electrocardiogram (ECG) is an authoritative source to diagnose and counter

critical cardiovascular syndromes such as arrhythmia and myocardial infarction

(MI). Current machine learning techniques either depend on manually extracted

features or large and complex deep learning networks which merely utilize the

1D ECG signal directly. Since intelligent multimodal fusion can perform at the

stateof-the-art level with an efficient deep network, therefore, in this paper,

we propose two computationally efficient multimodal fusion frameworks for ECG

heart beat classification called Multimodal Image Fusion (MIF) and Multimodal

Feature Fusion (MFF). At the input of these frameworks, we convert the raw ECG

data into three different images using Gramian Angular Field (GAF), Recurrence

Plot (RP) and Markov Transition Field (MTF). In MIF, we first perform image

fusion by combining three imaging modalities to create a single image modality

which serves as input to the Convolutional Neural Network (CNN). In MFF, we

extracted features from penultimate layer of CNNs and fused them to get unique

and interdependent information necessary for better performance of classifier.

These informational features are finally used to train a Support Vector Machine

(SVM) classifier for ECG heart-beat classification. We demonstrate the

superiority of the proposed fusion models by performing experiments on

PhysioNets MIT-BIH dataset for five distinct conditions of arrhythmias which

are consistent with the AAMI EC57 protocols and on PTB diagnostics dataset for

Myocardial Infarction (MI) classification. We achieved classification accuracy

of 99.7% and 99.2% on arrhythmia and MI classification, respectively.

There are no more papers matching your filters at the moment.