TU Graz

Entezari et al. demonstrate that accounting for permutation invariance between neural network layers removes apparent loss barriers, suggesting that independently trained solutions effectively reside within the same connected basin in the loss landscape. Their extensive empirical analysis shows that the linear interpolation barrier between models largely vanishes when hidden units are optimally permuted.

16 Sep 2025

Strassen's theorem asserts that for given marginal probabilities μ,ν there exists a martingale starting in μ and terminating in ν if and only if μ,ν are in convex order. From a financial perspective, it guarantees the existence of market-consistent martingale pricing measures for arbitrage-free prices of European call options and thus plays a fundamental role in robust finance. Arbitrage-free prices of American options demand a stronger version of martingales which are 'biased' in a specific sense. In this paper, we derive an extension of Strassen's theorem that links them to an appropriate strengthening of the convex order. Moreover, we provide a characterization of this order through integrals with respect to compensated Poisson processes.

REPAIR (REnormalizing Permuted Activations for Interpolation Repair) addresses the performance drop observed when linearly interpolating between independently trained deep neural networks. It identifies 'variance collapse' in activation statistics as the cause and corrects it by re-normalizing internal activations, enabling substantial reductions in the interpolation barrier, such as from 16% to 1.5% for ResNet18 on CIFAR-10.

Prompt ensembling of Large Language Model (LLM) generated category-specific prompts has emerged as an effective method to enhance zero-shot recognition ability of Vision-Language Models (VLMs). To obtain these category-specific prompts, the present methods rely on hand-crafting the prompts to the LLMs for generating VLM prompts for the downstream tasks. However, this requires manually composing these task-specific prompts and still, they might not cover the diverse set of visual concepts and task-specific styles associated with the categories of interest. To effectively take humans out of the loop and completely automate the prompt generation process for zero-shot recognition, we propose Meta-Prompting for Visual Recognition (MPVR). Taking as input only minimal information about the target task, in the form of its short natural language description, and a list of associated class labels, MPVR automatically produces a diverse set of category-specific prompts resulting in a strong zero-shot classifier. MPVR generalizes effectively across various popular zero-shot image recognition benchmarks belonging to widely different domains when tested with multiple LLMs and VLMs. For example, MPVR obtains a zero-shot recognition improvement over CLIP by up to 19.8% and 18.2% (5.0% and 4.5% on average over 20 datasets) leveraging GPT and Mixtral LLMs, respectively

The transfer learning paradigm of model pre-training and subsequent

fine-tuning produces high-accuracy models. While most studies recommend scaling

the pre-training size to benefit most from transfer learning, a question

remains: what data and method should be used for pre-training? We investigate

the impact of pre-training data distribution on the few-shot and full

fine-tuning performance using 3 pre-training methods (supervised, contrastive

language-image and image-image), 7 pre-training datasets, and 9 downstream

datasets. Through extensive controlled experiments, we find that the choice of

the pre-training data source is essential for the few-shot transfer, but its

role decreases as more data is made available for fine-tuning. Additionally, we

explore the role of data curation and examine the trade-offs between label

noise and the size of the pre-training dataset. We find that using 2000X more

pre-training data from LAION can match the performance of supervised ImageNet

pre-training. Furthermore, we investigate the effect of pre-training methods,

comparing language-image contrastive vs. image-image contrastive, and find that

the latter leads to better downstream accuracy

Path planning for wheeled mobile robots is a critical component in the field of automation and intelligent transportation systems. Car-like vehicles, which have non-holonomic constraints on their movement capability impose additional requirements on the planned paths. Traditional path planning algorithms, such as A* , are widely used due to their simplicity and effectiveness in finding optimal paths in complex environments. However, these algorithms often do not consider vehicle dynamics, resulting in paths that are infeasible or impractical for actual driving. Specifically, a path that minimizes the number of grid cells may still be too curvy or sharp for a car-like vehicle to navigate smoothly. This paper addresses the need for a path planning solution that not only finds a feasible path but also ensures that the path is smooth and drivable. By adapting the A* algorithm for a curvature constraint and incorporating a cost function that considers the smoothness of possible paths, we aim to bridge the gap between grid based path planning and smooth paths that are drivable by car-like vehicles. The proposed method leverages motion primitives, pre-computed using a ribbon based path planner that produces smooth paths of minimum curvature. The motion primitives guide the A* algorithm in finding paths of minimal length and curvature. With the proposed modification on the A* algorithm, the planned paths can be constraint to have a minimum turning radius much larger than the grid size. We demonstrate the effectiveness of the proposed algorithm in different unstructured environments. In a two-stage planning approach, first the modified A* algorithm finds a grid-based path and the ribbon based path planner creates a smooth path within the area of grid cells. The resulting paths are smooth with small curvatures independent of the orientation of the grid axes and even in presence of sharp obstacles.

Compositional Reasoning (CR) entails grasping the significance of attributes, relations, and word order. Recent Vision-Language Models (VLMs), comprising a visual encoder and a Large Language Model (LLM) decoder, have demonstrated remarkable proficiency in such reasoning tasks. This prompts a crucial question: have VLMs effectively tackled the CR challenge? We conjecture that existing CR benchmarks may not adequately push the boundaries of modern VLMs due to the reliance on an LLM-only negative text generation pipeline. Consequently, the negatives produced either appear as outliers from the natural language distribution learned by VLMs' LLM decoders or as improbable within the corresponding image context. To address these limitations, we introduce ConMe -- a compositional reasoning benchmark and a novel data generation pipeline leveraging VLMs to produce `hard CR Q&A'. Through a new concept of VLMs conversing with each other to collaboratively expose their weaknesses, our pipeline autonomously generates, evaluates, and selects challenging compositional reasoning questions, establishing a robust CR benchmark, also subsequently validated manually. Our benchmark provokes a noteworthy, up to 33%, decrease in CR performance compared to preceding benchmarks, reinstating the CR challenge even for state-of-the-art VLMs.

Some of the most successful knowledge graph embedding (KGE) models for link prediction -- CP, RESCAL, TuckER, ComplEx -- can be interpreted as energy-based models. Under this perspective they are not amenable for exact maximum-likelihood estimation (MLE), sampling and struggle to integrate logical constraints. This work re-interprets the score functions of these KGEs as circuits -- constrained computational graphs allowing efficient marginalisation. Then, we design two recipes to obtain efficient generative circuit models by either restricting their activations to be non-negative or squaring their outputs. Our interpretation comes with little or no loss of performance for link prediction, while the circuits framework unlocks exact learning by MLE, efficient sampling of new triples, and guarantee that logical constraints are satisfied by design. Furthermore, our models scale more gracefully than the original KGEs on graphs with millions of entities.

18 Jun 2024

We prove new lower bounds on the maximum size of subsets A⊆{1,…,N} or A⊆Fpn not containing three-term arithmetic progressions. In the setting of {1,…,N}, this is the first improvement upon a classical construction of Behrend from 1946 beyond lower-order factors (in particular, it is the first quasipolynomial improvement). In the setting of Fpn for a fixed prime p and large n, we prove a lower bound of (cp)n for some absolute constant c>1/2 (for c=1/2, such a bound can be obtained via classical constructions from the 1940s, but improving upon this has been a well-known open problem).

This research introduces a novel approach for continual personalization of text-to-image diffusion models by leveraging Diffusion Classifier (DC) scores as a regularization prior during training. The method effectively mitigates catastrophic forgetting and improves generation quality, outperforming existing techniques on diverse datasets and long task sequences.

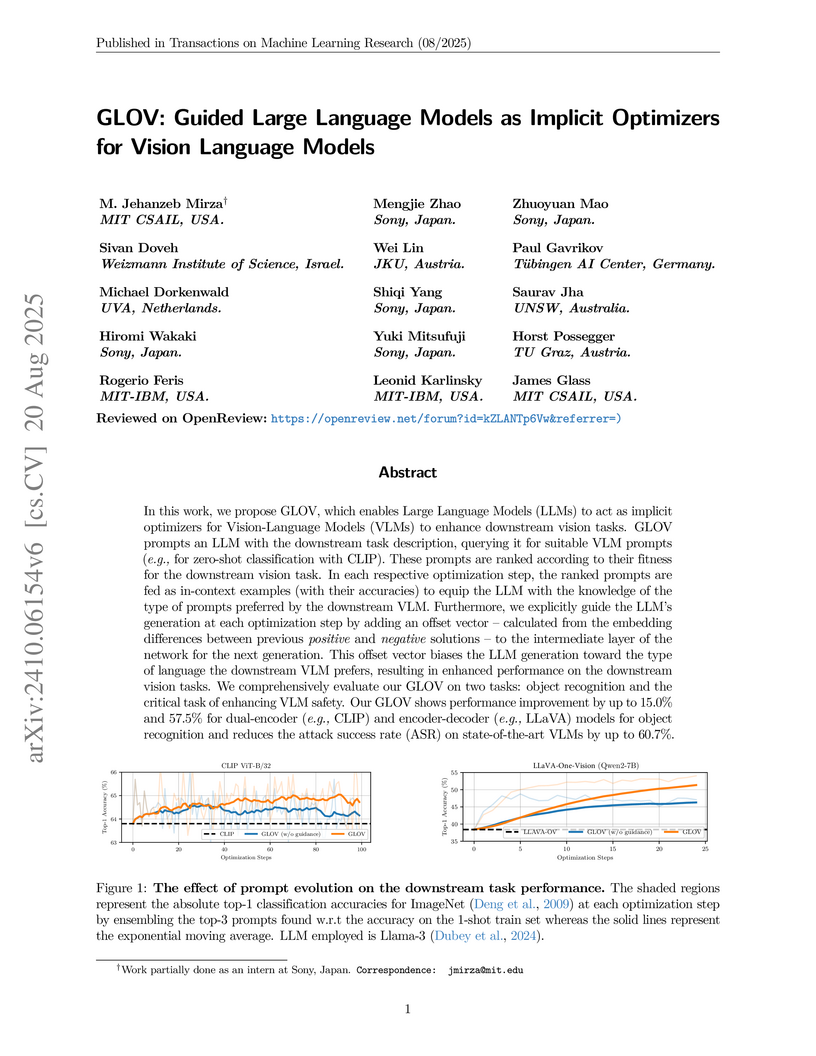

In this work, we propose GLOV, which enables Large Language Models (LLMs) to act as implicit optimizers for Vision-Language Models (VLMs) to enhance downstream vision tasks. GLOV prompts an LLM with the downstream task description, querying it for suitable VLM prompts (e.g., for zero-shot classification with CLIP). These prompts are ranked according to their fitness for the downstream vision task. In each respective optimization step, the ranked prompts are fed as in-context examples (with their accuracies) to equip the LLM with the knowledge of the type of prompts preferred by the downstream VLM. Furthermore, we explicitly guide the LLM's generation at each optimization step by adding an offset vector -- calculated from the embedding differences between previous positive and negative solutions -- to the intermediate layer of the network for the next generation. This offset vector biases the LLM generation toward the type of language the downstream VLM prefers, resulting in enhanced performance on the downstream vision tasks. We comprehensively evaluate our GLOV on two tasks: object recognition and the critical task of enhancing VLM safety. Our GLOV shows performance improvement by up to 15.0% and 57.5% for dual-encoder (e.g., CLIP) and encoder-decoder (e.g., LlaVA) models for object recognition and reduces the attack success rate (ASR) on state-of-the-art VLMs by up to 60.7%.

Polynomial chaos expansion (PCE) is a classical and widely used surrogate modeling technique in physical simulation and uncertainty quantification. By taking a linear combination of a set of basis polynomials - orthonormal with respect to the distribution of uncertain input parameters - PCE enables tractable inference of key statistical quantities, such as (conditional) means, variances, covariances, and Sobol sensitivity indices, which are essential for understanding the modeled system and identifying influential parameters and their interactions. As the number of basis functions grows exponentially with the number of parameters, PCE does not scale well to high-dimensional problems. We address this challenge by combining PCE with ideas from probabilistic circuits, resulting in the deep polynomial chaos expansion (DeepPCE) - a deep generalization of PCE that scales effectively to high-dimensional input spaces. DeepPCE achieves predictive performance comparable to that of multi-layer perceptrons (MLPs), while retaining PCE's ability to compute exact statistical inferences via simple forward passes.

The formation of inorganic cloud particles takes place in several atmospheric environments including those of warm, hot, rocky and gaseous exoplanets, brown dwarfs, and AGB stars. The cloud particle formation needs to be triggered by the in-situ formation of condensation seeds since it can not be assumed that such condensation seeds preexist in these chemically complex gas-phase environments. We aim to develop a methodology to calculate the thermochemical properties of clusters as key inputs to model the formation of condensation nuclei in gases of changing chemical composition. TiO2 is used as benchmark species for cluster sizes N = 1 - 15. We create 90000 candidate geometries, for cluster sizes N = 3 - 15. We employ a hierarchical optimisation approach, consisting of a force field description, density functional based tight binding (DFTB) and all-electron density functional theory (DFT) to obtain accurate energies and thermochemical properties for the clusters. We find B3LYP/cc-pVTZ including Grimmes empirical dispersion to perform most accurately with respect to experimentally derived thermochemical properties of the TiO2 molecule. We present a hitherto unreported global minimum candidate for size N = 13. The DFT derived thermochemical cluster data are used to evaluate the nucleation rates for a given temperature-pressure profile of a model hot Jupiter atmosphere. We find that with the updated and refined cluster data, nucleation becomes unfeasible at slightly lower temperatures, raising the lower boundary for seed formation in the atmosphere. The approach presented in this paper allows to find stable isomers for small (TiO2)N clusters. The choice of functional and basis set for the all-electron DFT calculations have a measurable impact on the resulting surface tension and nucleation rate and the updated thermochemical data is recommended for future considerations.

Machine learning plays an important role in the operation of current wind

energy production systems. One central application is predictive maintenance to

increase efficiency and lower electricity costs by reducing downtimes.

Integrating physics-based knowledge in neural networks to enforce their

physical plausibilty is a promising method to improve current approaches, but

incomplete system information often impedes their application in real world

scenarios. We describe a simple and efficient way for physics-constrained deep

learning-based predictive maintenance for wind turbine gearbox bearings with

partial system knowledge. The approach is based on temperature nowcasting

constrained by physics, where unknown system coefficients are treated as

learnable neural network parameters. Results show improved generalization

performance to unseen environments compared to a baseline neural network, which

is especially important in low data scenarios often encountered in real-world

applications.

LaFTer proposes a label-free and parameter-efficient method to adapt vision-language models like CLIP to new visual classification tasks by leveraging large language models to generate synthetic text descriptions and using unlabeled target domain images for unsupervised fine-tuning. The approach achieved an average absolute gain of 6.7% over zero-shot CLIP and often surpassed few-shot supervised methods across 12 diverse datasets.

Experimentally acquired microscopy images are unavoidably affected by the

presence of noise and other unwanted signals, which degrade their quality and

might hide relevant features. With the recent increase in image acquisition

rate, modern denoising and restoration solutions become necessary. This study

focuses on image decomposition and denoising of microscopy images through a

workflow based on total variation (TV), addressing images obtained from various

microscopy techniques, including atomic force microscopy (AFM), scanning

tunneling microscopy (STM), and scanning electron microscopy (SEM). Our

approach consists in restoring an image by extracting its unwanted signal

components and subtracting them from the raw one, or by denoising it. We

evaluate the performance of TV-L1, Huber-ROF, and TGV-L1 in achieving

this goal in distinct study cases. Huber-ROF proved to be the most flexible

one, while TGV-L1 is the most suitable for denoising. Our results suggest a

wider applicability of this method in microscopy, restricted not only to STM,

AFM, and SEM images. The Python code used for this study is publicly available

as part of AiSurf. It is designed to be integrated into experimental workflows

for image acquisition or can be used to denoise previously acquired images.

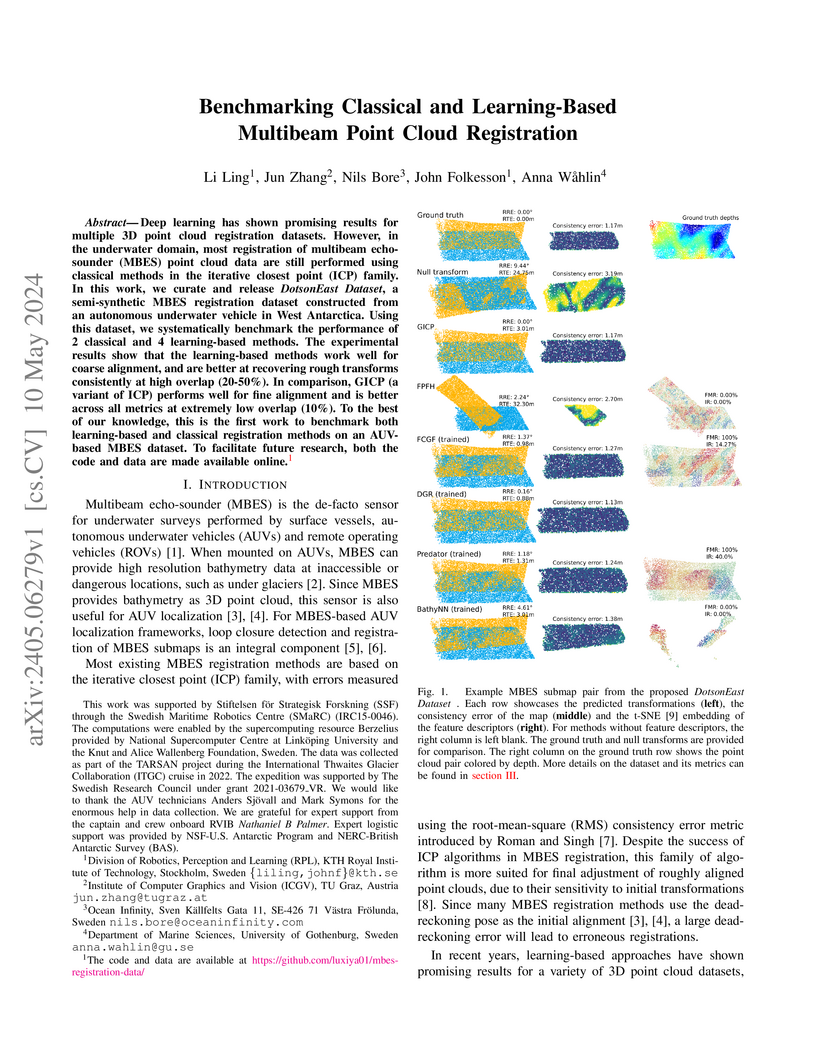

Deep learning has shown promising results for multiple 3D point cloud

registration datasets. However, in the underwater domain, most registration of

multibeam echo-sounder (MBES) point cloud data are still performed using

classical methods in the iterative closest point (ICP) family. In this work, we

curate and release DotsonEast Dataset, a semi-synthetic MBES registration

dataset constructed from an autonomous underwater vehicle in West Antarctica.

Using this dataset, we systematically benchmark the performance of 2 classical

and 4 learning-based methods. The experimental results show that the

learning-based methods work well for coarse alignment, and are better at

recovering rough transforms consistently at high overlap (20-50%). In

comparison, GICP (a variant of ICP) performs well for fine alignment and is

better across all metrics at extremely low overlap (10%). To the best of our

knowledge, this is the first work to benchmark both learning-based and

classical registration methods on an AUV-based MBES dataset. To facilitate

future research, both the code and data are made available online.

18 Mar 2019

The extension of persistent homology to multi-parameter setups is an algorithmic challenge. Since most computation tasks scale badly with the size of the input complex, an important pre-processing step consists of simplifying the input while maintaining the homological information. We present an algorithm that drastically reduces the size of an input. Our approach is an extension of the chunk algorithm for persistent homology (Bauer et al., Topological Methods in Data Analysis and Visualization III, 2014). We show that our construction produces the smallest multi-filtered chain complex among all the complexes quasi-isomorphic to the input, improving on the guarantees of previous work in the context of discrete Morse theory. Our algorithm also offers an immediate parallelization scheme in shared memory. Already its sequential version compares favorably with existing simplification schemes, as we show by experimental evaluation.

Causal discovery and causal reasoning are classically treated as separate and consecutive tasks: one first infers the causal graph, and then uses it to estimate causal effects of interventions. However, such a two-stage approach is uneconomical, especially in terms of actively collected interventional data, since the causal query of interest may not require a fully-specified causal model. From a Bayesian perspective, it is also unnatural, since a causal query (e.g., the causal graph or some causal effect) can be viewed as a latent quantity subject to posterior inference -- other unobserved quantities that are not of direct interest (e.g., the full causal model) ought to be marginalized out in this process and contribute to our epistemic uncertainty. In this work, we propose Active Bayesian Causal Inference (ABCI), a fully-Bayesian active learning framework for integrated causal discovery and reasoning, which jointly infers a posterior over causal models and queries of interest. In our approach to ABCI, we focus on the class of causally-sufficient, nonlinear additive noise models, which we model using Gaussian processes. We sequentially design experiments that are maximally informative about our target causal query, collect the corresponding interventional data, and update our beliefs to choose the next experiment. Through simulations, we demonstrate that our approach is more data-efficient than several baselines that only focus on learning the full causal graph. This allows us to accurately learn downstream causal queries from fewer samples while providing well-calibrated uncertainty estimates for the quantities of interest.

The recently introduced concept of D-variation unifies previous concepts of variation of multivariate functions. In this paper, we give an affirmative answer to the open question from Pausinger \& Svane (J. Complexity, 2014) whether every function of bounded Hardy--Krause variation is Borel measurable and has bounded D-variation. Moreover, we show that the space of functions of bounded D-variation can be turned into a commutative Banach algebra.

There are no more papers matching your filters at the moment.