Tampere University

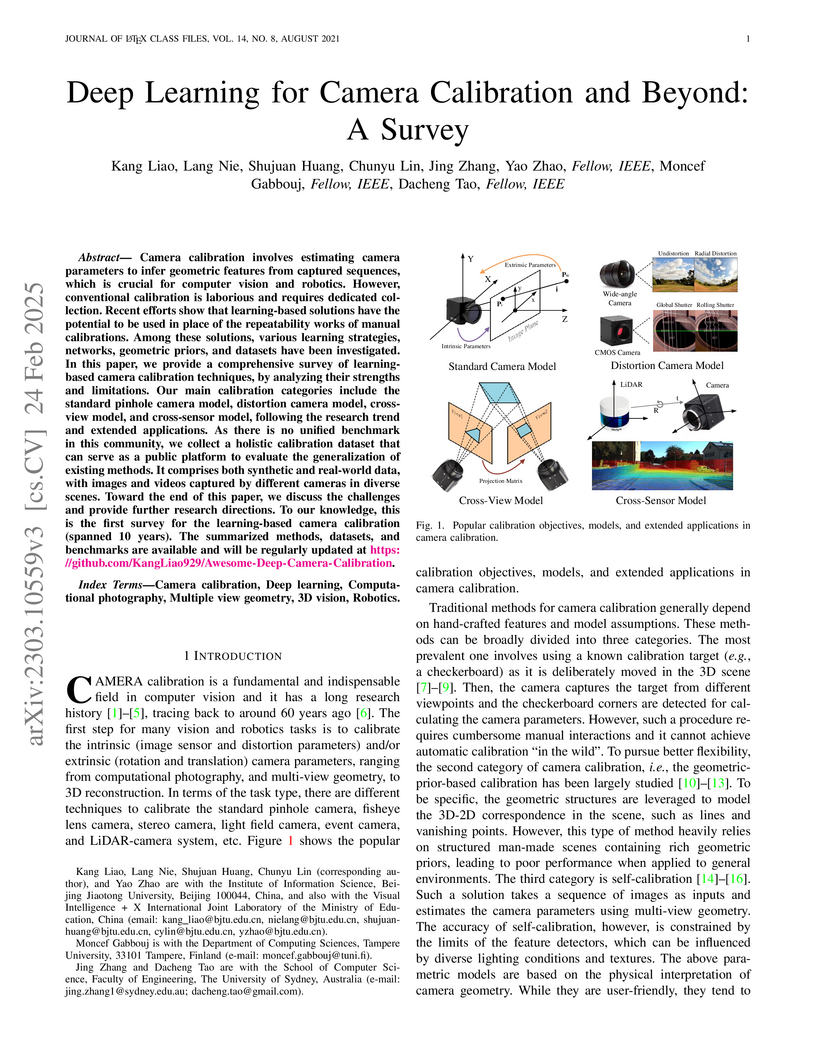

This comprehensive survey consolidates a decade of research (2015-2024) in deep learning for camera calibration, providing a systematic taxonomy for over 200 papers, outlining key trends and challenges, and introducing a holistic public benchmark dataset. It offers a structured understanding of various learning-based methods and their applications across different camera models.

Researchers from Tampere University and Nokia Technologies developed the Attractor-based Joint Diarization, Counting, and Separation System (A-DCSS), which addresses the challenge of separating speech from an unknown number of speakers, each contributing multiple non-contiguous utterances. The system achieved superior speech separation performance and high accuracy in speaker diarization and counting across various challenging acoustic conditions.

While direction of arrival (DOA) of sound events is generally estimated from multichannel audio data recorded in a microphone array, sound events usually derive from visually perceptible source objects, e.g., sounds of footsteps come from the feet of a walker. This paper proposes an audio-visual sound event localization and detection (SELD) task, which uses multichannel audio and video information to estimate the temporal activation and DOA of target sound events. Audio-visual SELD systems can detect and localize sound events using signals from a microphone array and audio-visual correspondence. We also introduce an audio-visual dataset, Sony-TAu Realistic Spatial Soundscapes 2023 (STARSS23), which consists of multichannel audio data recorded with a microphone array, video data, and spatiotemporal annotation of sound events. Sound scenes in STARSS23 are recorded with instructions, which guide recording participants to ensure adequate activity and occurrences of sound events. STARSS23 also serves human-annotated temporal activation labels and human-confirmed DOA labels, which are based on tracking results of a motion capture system. Our benchmark results demonstrate the benefits of using visual object positions in audio-visual SELD tasks. The data is available at this https URL.

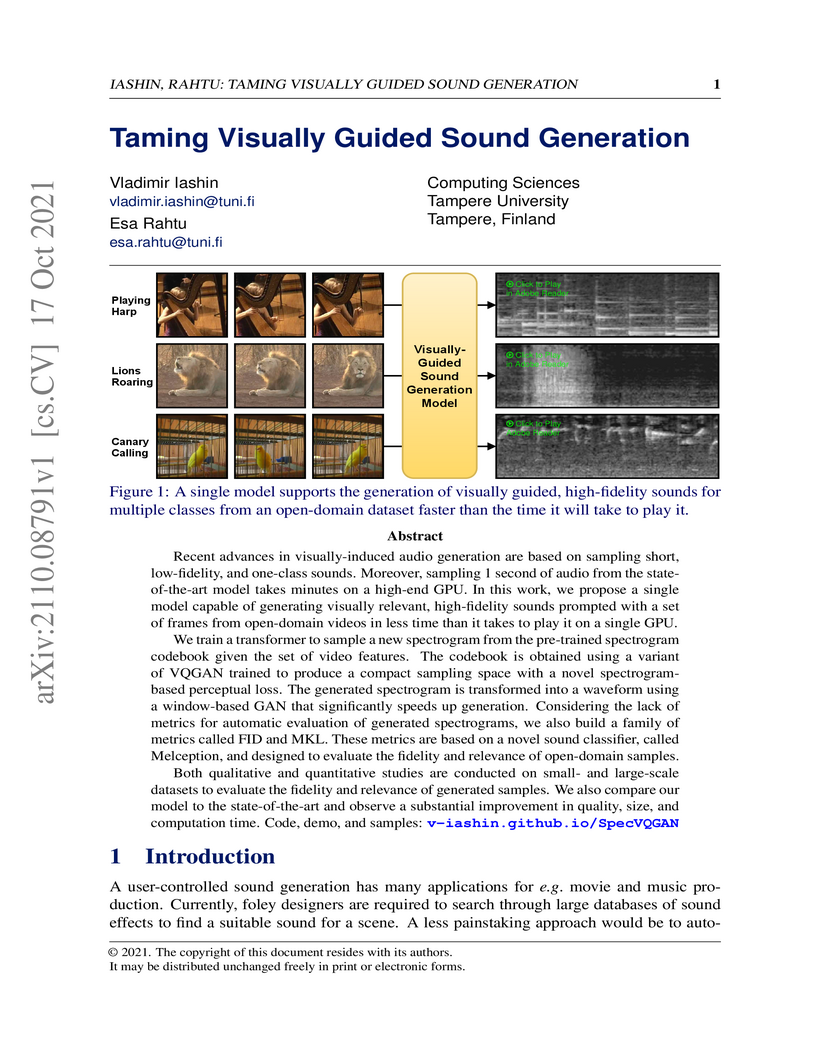

Recent advances in visually-induced audio generation are based on sampling

short, low-fidelity, and one-class sounds. Moreover, sampling 1 second of audio

from the state-of-the-art model takes minutes on a high-end GPU. In this work,

we propose a single model capable of generating visually relevant,

high-fidelity sounds prompted with a set of frames from open-domain videos in

less time than it takes to play it on a single GPU.

We train a transformer to sample a new spectrogram from the pre-trained

spectrogram codebook given the set of video features. The codebook is obtained

using a variant of VQGAN trained to produce a compact sampling space with a

novel spectrogram-based perceptual loss. The generated spectrogram is

transformed into a waveform using a window-based GAN that significantly speeds

up generation. Considering the lack of metrics for automatic evaluation of

generated spectrograms, we also build a family of metrics called FID and MKL.

These metrics are based on a novel sound classifier, called Melception, and

designed to evaluate the fidelity and relevance of open-domain samples.

Both qualitative and quantitative studies are conducted on small- and

large-scale datasets to evaluate the fidelity and relevance of generated

samples. We also compare our model to the state-of-the-art and observe a

substantial improvement in quality, size, and computation time. Code, demo, and

samples: v-iashin.github.io/SpecVQGAN

This paper offers a practical guide for developing Retrieval Augmented Generation (RAG) systems that utilize PDF documents as a knowledge source. It details step-by-step implementations using both OpenAI's Assistant API and open-source models like Llama, alongside insights into common technical challenges and a comparative analysis of each approach. The work includes an evaluation based on participant feedback, which highlighted the value of hands-on exercises and practical concerns such as data security.

The MEMERAG benchmark introduces the first native multilingual meta-evaluation dataset for Retrieval Augmented Generation (RAG) systems, providing human expert annotations for faithfulness and relevance across five languages. It enables the development of more reliable automatic evaluation methods for RAG, demonstrating that detailed prompting significantly improves LLM-as-a-judge alignment with human judgments.

29 Apr 2024

Researchers from Tampere University and other European institutions developed a multi-agent Large Language Model system to enhance code review by providing comprehensive, contextually-aware feedback. The system identifies potential bugs, code smells, and inefficiencies, offering actionable recommendations and educational guidance across various programming languages and application domains.

Researchers introduced TactfulToM, a new benchmark designed to evaluate Large Language Models' (LLMs) understanding of "white lies" in realistic social conversations. The evaluation revealed that current LLMs significantly underperform human baselines in discerning prosocial motivations behind these subtle deceptions, even with advanced reasoning techniques.

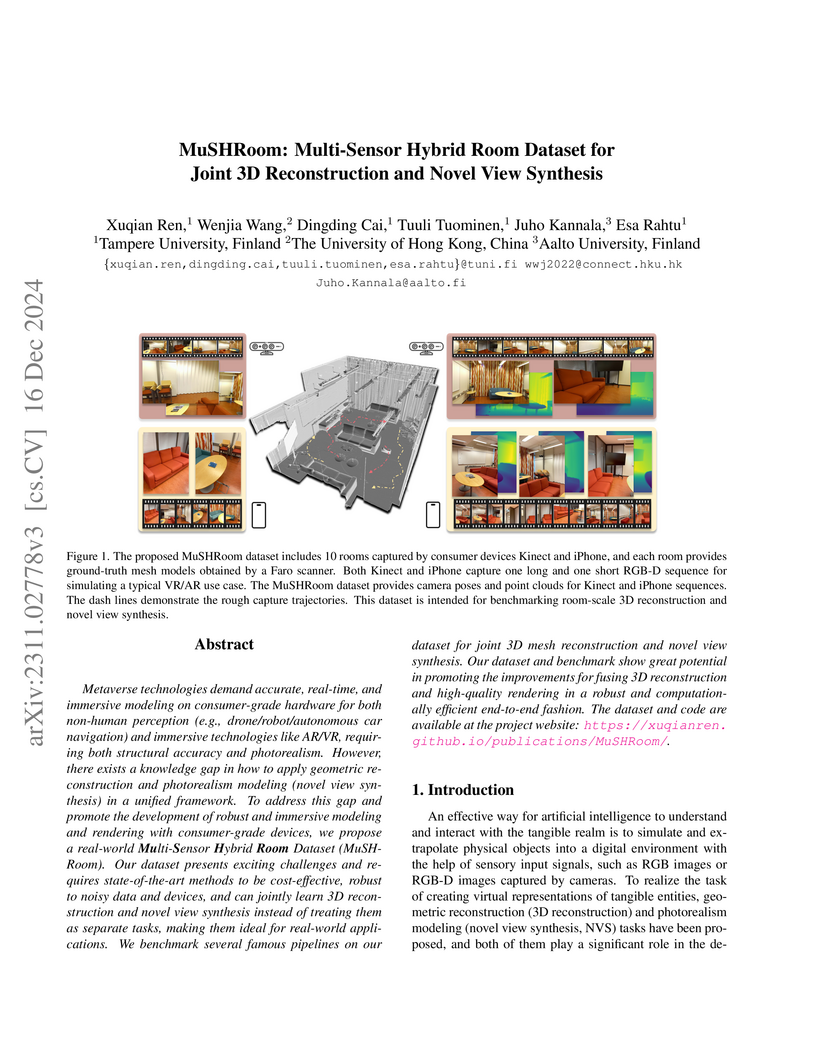

Metaverse technologies demand accurate, real-time, and immersive modeling on

consumer-grade hardware for both non-human perception (e.g.,

drone/robot/autonomous car navigation) and immersive technologies like AR/VR,

requiring both structural accuracy and photorealism. However, there exists a

knowledge gap in how to apply geometric reconstruction and photorealism

modeling (novel view synthesis) in a unified framework. To address this gap and

promote the development of robust and immersive modeling and rendering with

consumer-grade devices, we propose a real-world Multi-Sensor Hybrid Room

Dataset (MuSHRoom). Our dataset presents exciting challenges and requires

state-of-the-art methods to be cost-effective, robust to noisy data and

devices, and can jointly learn 3D reconstruction and novel view synthesis

instead of treating them as separate tasks, making them ideal for real-world

applications. We benchmark several famous pipelines on our dataset for joint 3D

mesh reconstruction and novel view synthesis. Our dataset and benchmark show

great potential in promoting the improvements for fusing 3D reconstruction and

high-quality rendering in a robust and computationally efficient end-to-end

fashion. The dataset and code are available at the project website:

this https URL

22 Sep 2025

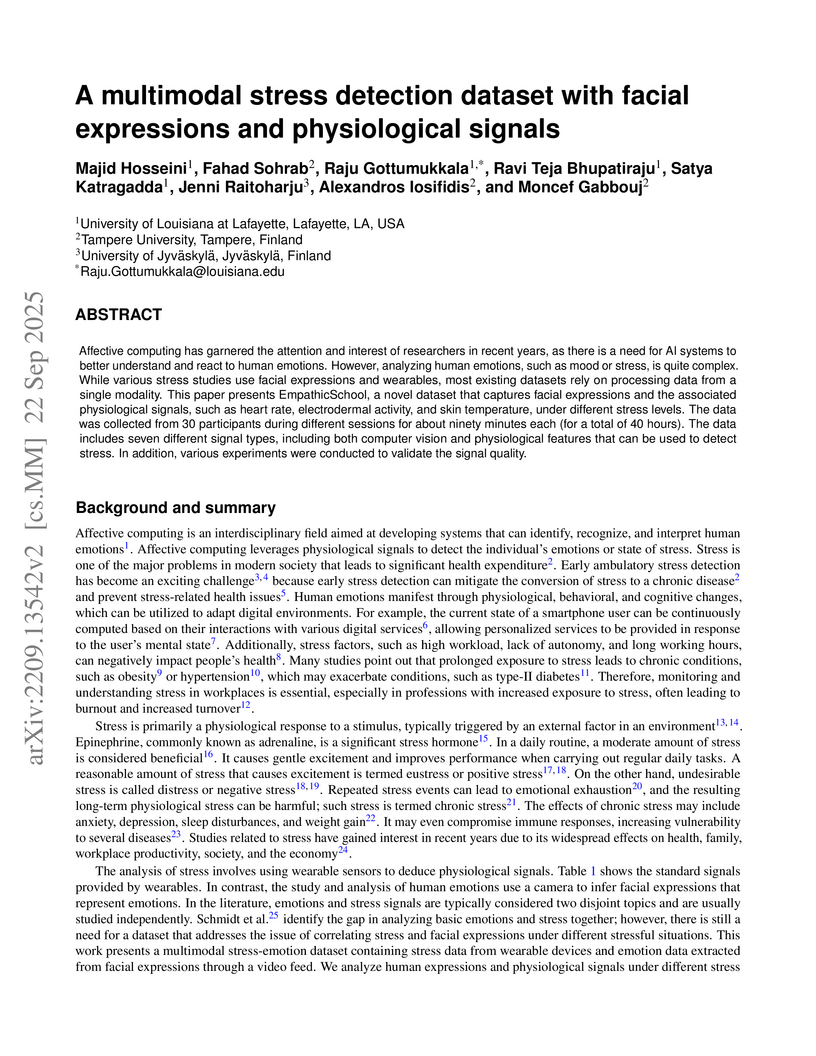

Affective computing has garnered the attention and interest of researchers in recent years, as there is a need for AI systems to better understand and react to human emotions. However, analyzing human emotions, such as mood or stress, is quite complex. While various stress studies use facial expressions and wearables, most existing datasets rely on processing data from a single modality. This paper presents EmpathicSchool, a novel dataset that captures facial expressions and the associated physiological signals, such as heart rate, electrodermal activity, and skin temperature, under different stress levels. The data was collected from 30 participants during different sessions for about ninety minutes each (for a total of 40 hours). The data includes seven different signal types, including both computer vision and physiological features that can be used to detect stress. In addition, various experiments were conducted to validate the signal quality.

This work enhances 3D Gaussian Splatting (3DGS) by integrating a physical image formation model directly into the differentiable rendering pipeline to compensate for motion blur and rolling shutter distortion. The approach leverages Visual-Inertial Odometry (VIO) to regularize pose and velocity optimization, yielding significantly sharper and more accurate 3D reconstructions from casually captured handheld video data, outperforming prior 2D and 3D compensation techniques.

We introduce V-AURA, the first autoregressive model to achieve high temporal alignment and relevance in video-to-audio generation. V-AURA uses a high-framerate visual feature extractor and a cross-modal audio-visual feature fusion strategy to capture fine-grained visual motion events and ensure precise temporal alignment. Additionally, we propose VisualSound, a benchmark dataset with high audio-visual relevance. VisualSound is based on VGGSound, a video dataset consisting of in-the-wild samples extracted from YouTube. During the curation, we remove samples where auditory events are not aligned with the visual ones. V-AURA outperforms current state-of-the-art models in temporal alignment and semantic relevance while maintaining comparable audio quality. Code, samples, VisualSound and models are available at this https URL

Universitat Pompeu Fabra University of PennsylvaniaTampere UniversityComplexity Science HubCentral European UniversityUniversity of KonstanzConstructor UniversityBarcelona Supercomputing CenterWroclaw University of Science and TechnologyCENTAI InstituteNational Laboratory for Health SecurityInstitute for Cross-Disciplinary Physics and Complex Systems (IFISC) UIB-CSICHUN-REN Rényi Institute of MathematicsUniversidad Nacional Autóma de México

University of PennsylvaniaTampere UniversityComplexity Science HubCentral European UniversityUniversity of KonstanzConstructor UniversityBarcelona Supercomputing CenterWroclaw University of Science and TechnologyCENTAI InstituteNational Laboratory for Health SecurityInstitute for Cross-Disciplinary Physics and Complex Systems (IFISC) UIB-CSICHUN-REN Rényi Institute of MathematicsUniversidad Nacional Autóma de México

University of PennsylvaniaTampere UniversityComplexity Science HubCentral European UniversityUniversity of KonstanzConstructor UniversityBarcelona Supercomputing CenterWroclaw University of Science and TechnologyCENTAI InstituteNational Laboratory for Health SecurityInstitute for Cross-Disciplinary Physics and Complex Systems (IFISC) UIB-CSICHUN-REN Rényi Institute of MathematicsUniversidad Nacional Autóma de México

University of PennsylvaniaTampere UniversityComplexity Science HubCentral European UniversityUniversity of KonstanzConstructor UniversityBarcelona Supercomputing CenterWroclaw University of Science and TechnologyCENTAI InstituteNational Laboratory for Health SecurityInstitute for Cross-Disciplinary Physics and Complex Systems (IFISC) UIB-CSICHUN-REN Rényi Institute of MathematicsUniversidad Nacional Autóma de MéxicoOpinion dynamics, the study of how individual beliefs and collective public opinion evolve, is a fertile domain for applying statistical physics to complex social phenomena. Like physical systems, societies exhibit macroscopic regularities from localized interactions, leading to outcomes such as consensus or fragmentation. This field has grown significantly, attracting interdisciplinary methods and driven by a surge in large-scale behavioral data. This review covers its rapid progress, bridging the literature dispersion. We begin with essential concepts and definitions, encompassing the nature of opinions, microscopic and macroscopic dynamics. This foundation leads to an overview of empirical research, from lab experiments to large-scale data analysis, which informs and validates models of opinion dynamics. We then present individual-based models, categorized by their macroscopic phenomena (e.g., consensus, polarization, echo chambers) and microscopic mechanisms (e.g., homophily, assimilation). We also review social contagion phenomena, highlighting their connection to opinion dynamics. Furthermore, the review covers common analytical and computational tools, including stochastic processes, treatments, simulations, and optimization. Finally, we explore emerging frontiers, such as connecting empirical data to models and using AI agents as testbeds for novel social phenomena. By systematizing terminology and emphasizing analogies with traditional physics, this review aims to consolidate knowledge, provide a robust theoretical foundation, and shape future research in opinion dynamics.

Conventional Fourier-domain Optical Coherence Tomography (FD-OCT) systems depend on resampling into wavenumber (k) domain to extract the depth profile. This either necessitates additional hardware resources or amplifies the existing computational complexity. Moreover, the OCT images also suffer from speckle noise, due to systemic reliance on low coherence interferometry. We propose a streamlined and computationally efficient approach based on Deep-Learning (DL) which enables reconstructing speckle-reduced OCT images directly from the wavelength domain. For reconstruction, two encoder-decoder styled networks namely Spatial Domain Convolution Neural Network (SD-CNN) and Fourier Domain CNN (FD-CNN) are used sequentially. The SD-CNN exploits the highly degraded images obtained by Fourier transforming the domain fringes to reconstruct the deteriorated morphological structures along with suppression of unwanted noise. The FD-CNN leverages this output to enhance the image quality further by optimization in Fourier domain (FD). We quantitatively and visually demonstrate the efficacy of the method in obtaining high-quality OCT images. Furthermore, we illustrate the computational complexity reduction by harnessing the power of DL models. We believe that this work lays the framework for further innovations in the realm of OCT image reconstruction.

In this study, we present a comprehensive public dataset for driver drowsiness detection, integrating multimodal signals of facial, behavioral, and biometric indicators. Our dataset includes 3D facial video using a depth camera, IR camera footage, posterior videos, and biometric signals such as heart rate, electrodermal activity, blood oxygen saturation, skin temperature, and accelerometer data. This data set provides grip sensor data from the steering wheel and telemetry data from the American truck simulator game to provide more information about drivers' behavior while they are alert and drowsy. Drowsiness levels were self-reported every four minutes using the Karolinska Sleepiness Scale (KSS). The simulation environment consists of three monitor setups, and the driving condition is completely like a car. Data were collected from 19 subjects (15 M, 4 F) in two conditions: when they were fully alert and when they exhibited signs of sleepiness. Unlike other datasets, our multimodal dataset has a continuous duration of 40 minutes for each data collection session per subject, contributing to a total length of 1,400 minutes, and we recorded gradual changes in the driver state rather than discrete alert/drowsy labels. This study aims to create a comprehensive multimodal dataset of driver drowsiness that captures a wider range of physiological, behavioral, and driving-related signals. The dataset will be available upon request to the corresponding author.

Existing multimodal audio generation models often lack precise user control, which limits their applicability in professional Foley workflows. In particular, these models focus on the entire video and do not provide precise methods for prioritizing a specific object within a scene, generating unnecessary background sounds, or focusing on the wrong objects. To address this gap, we introduce the novel task of video object segmentation-aware audio generation, which explicitly conditions sound synthesis on object-level segmentation maps. We present SAGANet, a new multimodal generative model that enables controllable audio generation by leveraging visual segmentation masks along with video and textual cues. Our model provides users with fine-grained and visually localized control over audio generation. To support this task and further research on segmentation-aware Foley, we propose Segmented Music Solos, a benchmark dataset of musical instrument performance videos with segmentation information. Our method demonstrates substantial improvements over current state-of-the-art methods and sets a new standard for controllable, high-fidelity Foley synthesis. Code, samples, and Segmented Music Solos are available at this https URL

21 Sep 2025

Systematic literature review (SLR) is foundational to evidence-based research, enabling scholars to identify, classify, and synthesize existing studies to address specific research questions. Conducting an SLR is, however, largely a manual process. In recent years, researchers have made significant progress in automating portions of the SLR pipeline to reduce the effort and time required for high-quality reviews; nevertheless, there remains a lack of AI-agent-based systems that automate the entire SLR workflow. To this end, we introduce a novel multi-AI-agent system designed to fully automate SLRs. Leveraging large language models (LLMs), our system streamlines the review process to enhance efficiency and accuracy. Through a user-friendly interface, researchers specify a topic; the system then generates a search string to retrieve relevant academic papers. Next, an inclusion/exclusion filtering step is applied to titles relevant to the research area. The system subsequently summarizes paper abstracts and retains only those directly related to the field of study. In the final phase, it conducts a thorough analysis of the selected papers with respect to predefined research questions. This paper presents the system, describes its operational framework, and demonstrates how it substantially reduces the time and effort traditionally required for SLRs while maintaining comprehensiveness and precision. The code for this project is available at: this https URL .

Self-supervised learning (SSL) has made significant advances in speech representation learning. Models like wav2vec 2.0 and HuBERT have achieved state-of-the-art results in tasks such as speech recognition, particularly in monolingual settings. However, multilingual SSL models tend to underperform their monolingual counterparts on each individual language, especially in multilingual scenarios with few languages such as the bilingual setting. In this work, we investigate a novel approach to reduce this performance gap by introducing limited visual grounding into bilingual speech SSL models. Our results show that visual grounding benefits both monolingual and bilingual models, with especially pronounced gains for the latter, reducing the multilingual performance gap on zero-shot phonetic discrimination from 31.5% for audio-only models to 8.04% with grounding.

18 Aug 2024

The research by Tampere University and University of Jyväskylä develops an AI-based multiagent system that uses Large Language Models (LLMs) to automate and improve requirements elicitation, analysis, and prioritization in software development. This system, which simulates different team roles, generated more comprehensive and higher-quality user stories with GPT-4o, while Mixtral-8x7B offered the fastest response times for initial drafts.

17 Jun 2022

Audio question answering (AQA) is a multimodal translation task where a

system analyzes an audio signal and a natural language question, to generate a

desirable natural language answer. In this paper, we introduce Clotho-AQA, a

dataset for Audio question answering consisting of 1991 audio files each

between 15 to 30 seconds in duration selected from the Clotho dataset. For each

audio file, we collect six different questions and corresponding answers by

crowdsourcing using Amazon Mechanical Turk. The questions and answers are

produced by different annotators. Out of the six questions for each audio, two

questions each are designed to have 'yes' and 'no' as answers, while the

remaining two questions have other single-word answers. For each question, we

collect answers from three different annotators. We also present two baseline

experiments to describe the usage of our dataset for the AQA task - an

LSTM-based multimodal binary classifier for 'yes' or 'no' type answers and an

LSTM-based multimodal multi-class classifier for 828 single-word answers. The

binary classifier achieved an accuracy of 62.7% and the multi-class classifier

achieved a top-1 accuracy of 54.2% and a top-5 accuracy of 93.7%. Clotho-AQA

dataset is freely available online at this https URL

There are no more papers matching your filters at the moment.