University of Moratuwa

01 Oct 2025

Recent advancements in LLMs have contributed to the rise of advanced conversational assistants that can assist with user needs through natural language conversation. This paper presents a ScheduleMe, a multi-agent calendar assistant for users to manage google calendar events in natural language. The system uses a graph-structured coordination mechanism where a central supervisory agent supervises specialized task agents, allowing modularity, conflicts resolution, and context-aware interactions to resolve ambiguities and evaluate user commands. This approach sets an example of how structured reasoning and agent cooperation might convince operators to increase the usability and flexibility of personal calendar assistant tools.

Low-resource languages such as Sinhala are often overlooked by open-source Large Language Models (LLMs). In this research, we extend an existing multilingual LLM (Llama-3-8B) to better serve Sinhala. We enhance the LLM tokenizer with Sinhala specific vocabulary and perform continual pre-training on a cleaned 10 million Sinhala corpus, resulting in the SinLlama model. This is the very first decoder-based open-source LLM with explicit Sinhala support. When SinLlama was instruction fine-tuned for three text classification tasks, it outperformed base and instruct variants of Llama-3-8B by a significant margin.

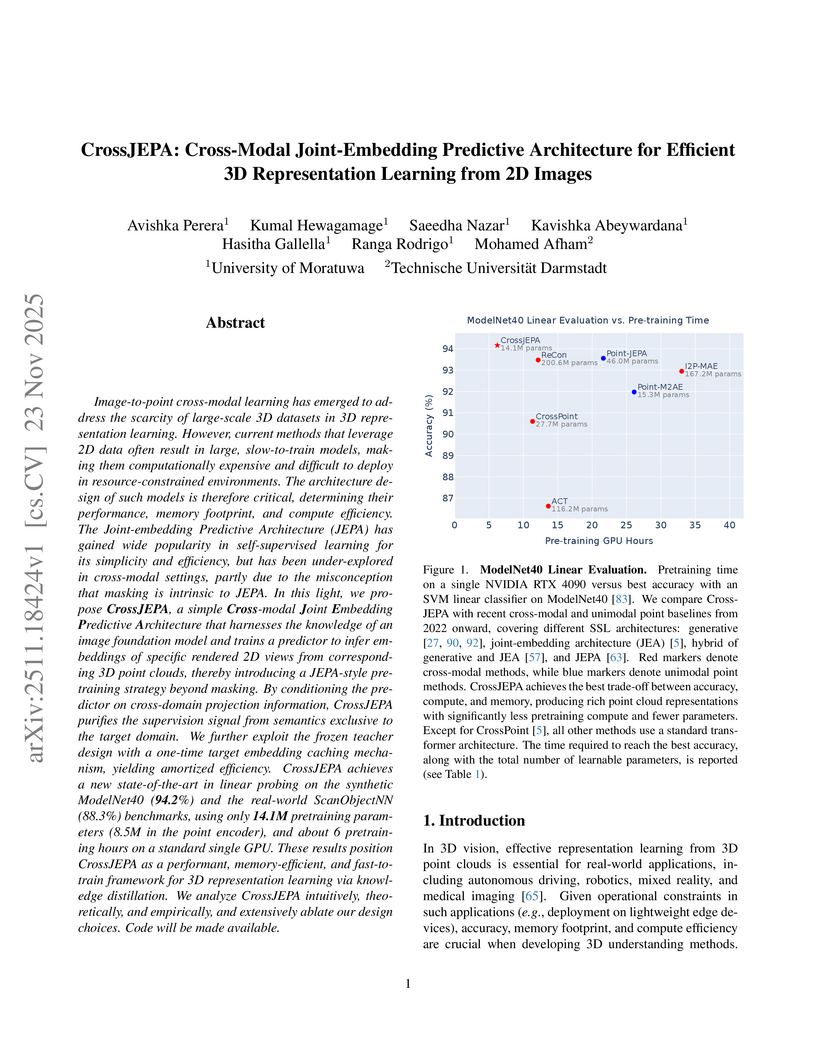

CrossJEPA introduces a mask-free Joint-embedding Predictive Architecture for efficient 3D representation learning, leveraging a frozen 2D image foundation model to guide a 3D point cloud encoder. It achieves 94.2% accuracy on ModelNet40 and 88.3% on ScanObjectNN using only 14.1M parameters and approximately 6 pretraining GPU hours.

The rapid increase in video content production has resulted in enormous data volumes, creating significant challenges for efficient analysis and resource management. To address this, robust video analysis tools are essential. This paper presents an innovative proof of concept using Generative Artificial Intelligence (GenAI) in the form of Vision Language Models to enhance the downstream video analysis process. Our tool generates customized textual summaries based on user-defined queries, providing focused insights within extensive video datasets. Unlike traditional methods that offer generic summaries or limited action recognition, our approach utilizes Vision Language Models to extract relevant information, improving analysis precision and efficiency. The proposed method produces textual summaries from extensive CCTV footage, which can then be stored for an indefinite time in a very small storage space compared to videos, allowing users to quickly navigate and verify significant events without exhaustive manual review. Qualitative evaluations result in 80% and 70% accuracy in temporal and spatial quality and consistency of the pipeline respectively.

This comparative study evaluates three distinct modern Aspect-Based Sentiment Analysis (ABSA) paradigms on standard restaurant and laptop review datasets: LLaMA 2 fine-tuning with QLoRA, few-shot learning with SETFIT, and the PyABSA framework using FAST LSA V2. The research demonstrates that specialized ABSA frameworks and few-shot learning approaches offer strong performance, with FAST LSA V2 achieving accuracies of 87.67% for restaurant reviews and 82.60% for laptop reviews, positioning them as competitive alternatives without surpassing the absolute state-of-the-art reported in literature.

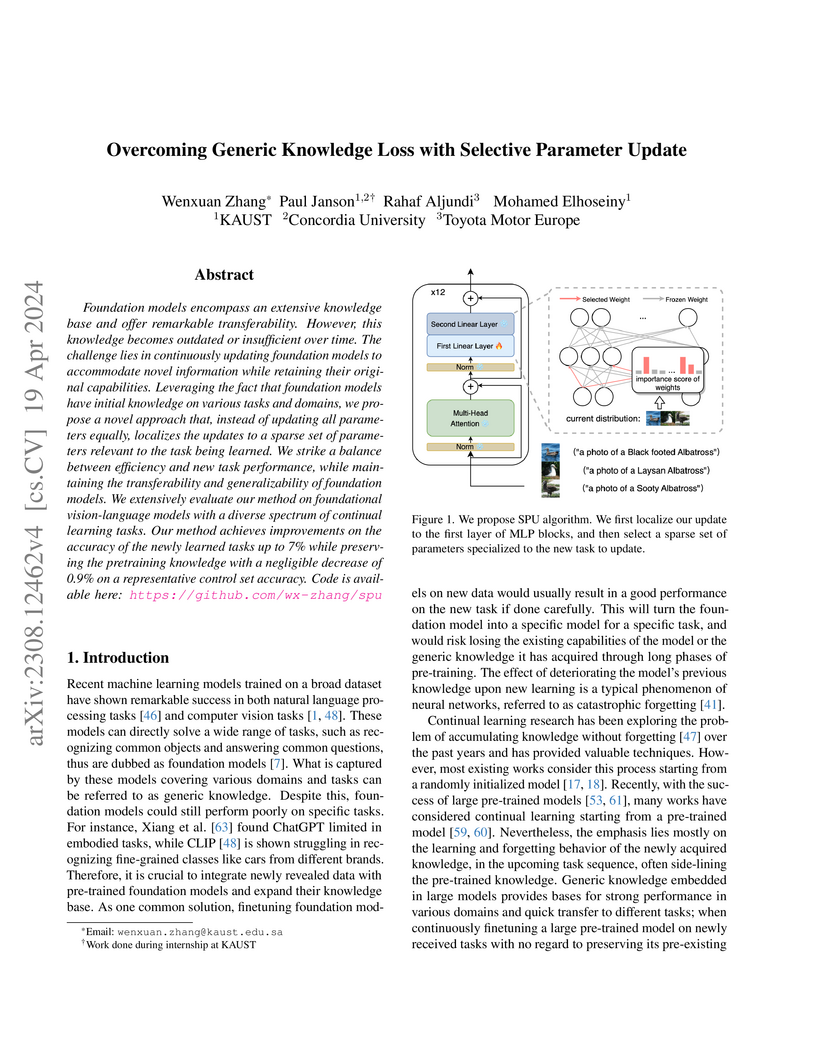

Researchers from KAUST, Concordia University, and Toyota Motor Europe developed Selective Parameter Update (SPU), a method designed to enable continuous adaptation of foundation models while preserving their pre-trained generic knowledge. The approach achieved state-of-the-art performance in learning new tasks with an average 2.86% accuracy improvement, while crucially limiting the drop in generic knowledge accuracy to just 0.94% on a control set.

In human-computer interaction, head pose estimation profoundly influences

application functionality. Although utilizing facial landmarks is valuable for

this purpose, existing landmark-based methods prioritize precision over

simplicity and model size, limiting their deployment on edge devices and in

compute-poor environments. To bridge this gap, we propose \textbf{Grouped

Attention Deep Sets (GADS)}, a novel architecture based on the Deep Set

framework. By grouping landmarks into regions and employing small Deep Set

layers, we reduce computational complexity. Our multihead attention mechanism

extracts and combines inter-group information, resulting in a model that is

7.5× smaller and executes 25× faster than the current lightest

state-of-the-art model. Notably, our method achieves an impressive reduction,

being 4321× smaller than the best-performing model. We introduce vanilla

GADS and Hybrid-GADS (landmarks + RGB) and evaluate our models on three

benchmark datasets -- AFLW2000, BIWI, and 300W-LP. We envision our architecture

as a robust baseline for resource-constrained head pose estimation methods.

We present Seg-TTO, a novel framework for zero-shot, open-vocabulary semantic

segmentation (OVSS), designed to excel in specialized domain tasks. While

current open-vocabulary approaches show impressive performance on standard

segmentation benchmarks under zero-shot settings, they fall short of supervised

counterparts on highly domain-specific datasets. We focus on

segmentation-specific test-time optimization to address this gap. Segmentation

requires an understanding of multiple concepts within a single image while

retaining the locality and spatial structure of representations. We propose a

novel self-supervised objective adhering to these requirements and use it to

align the model parameters with input images at test time. In the textual

modality, we learn multiple embeddings for each category to capture diverse

concepts within an image, while in the visual modality, we calculate

pixel-level losses followed by embedding aggregation operations specific to

preserving spatial structure. Our resulting framework termed Seg-TTO is a

plug-and-play module. We integrate Seg-TTO with three state-of-the-art OVSS

approaches and evaluate across 22 challenging OVSS tasks covering a range of

specialized domains. Our Seg-TTO demonstrates clear performance improvements

(up to 27% mIoU increase on some datasets) establishing new state-of-the-art.

Our code and models will be released publicly.

People often utilise online media (e.g., Facebook, Reddit) as a platform to express their psychological distress and seek support. State-of-the-art NLP techniques demonstrate strong potential to automatically detect mental health issues from text. Research suggests that mental health issues are reflected in emotions (e.g., sadness) indicated in a person's choice of language. Therefore, we developed a novel emotion-annotated mental health corpus (EmoMent), consisting of 2802 Facebook posts (14845 sentences) extracted from two South Asian countries - Sri Lanka and India. Three clinical psychology postgraduates were involved in annotating these posts into eight categories, including 'mental illness' (e.g., depression) and emotions (e.g., 'sadness', 'anger'). EmoMent corpus achieved 'very good' inter-annotator agreement of 98.3% (i.e. % with two or more agreement) and Fleiss' Kappa of 0.82. Our RoBERTa based models achieved an F1 score of 0.76 and a macro-averaged F1 score of 0.77 for the first task (i.e. predicting a mental health condition from a post) and the second task (i.e. extent of association of relevant posts with the categories defined in our taxonomy), respectively.

MTP (Meaning-Typed Programming) introduces a language abstraction that automatically generates prompts and handles responses for integrating Large Language Models into applications. This approach significantly reduces development complexity and consistently achieves high accuracy, while also demonstrating improved efficiency in token usage, cost, and runtime compared to existing frameworks.

Nisansa de Silva's survey offers a critical overview of publicly available Natural Language Processing tools and research for Sinhala, a resource-poor language. It reveals significant challenges including fragmented efforts, limited public access to datasets and code (only 11.43% of papers released datasets), and a prevalent focus on foundational tasks like OCR rather than higher-level semantic analysis.

The transition to microservices has revolutionized software architectures,

offering enhanced scalability and modularity. However, the distributed and

dynamic nature of microservices introduces complexities in ensuring system

reliability, making anomaly detection crucial for maintaining performance and

functionality. Anomalies stemming from network and performance issues must be

swiftly identified and addressed. Existing anomaly detection techniques often

rely on statistical models or machine learning methods that struggle with the

high-dimensional, interdependent data inherent in microservice applications.

Current techniques and available datasets predominantly focus on system traces

and logs, limiting their ability to support advanced detection models. This

paper addresses these gaps by introducing the RS-Anomic dataset generated using

the open-source RobotShop microservice application. The dataset captures

multivariate performance metrics and response times under normal and anomalous

conditions, encompassing ten types of anomalies. We propose a novel anomaly

detection model called Graph Attention and LSTM-based Microservice Anomaly

Detection (GAL-MAD), leveraging Graph Attention and Long Short-Term Memory

architectures to capture spatial and temporal dependencies in microservices. We

utilize SHAP values to localize anomalous services and identify root causes to

enhance explainability. Experimental results demonstrate that GAL-MAD

outperforms state-of-the-art models on the RS-Anomic dataset, achieving higher

accuracy and recall across varying anomaly rates. The explanations provide

actionable insights into service anomalies, which benefits system

administrators.

03 Jul 2023

Continuous Authentication (CA) using behavioural biometrics is a type of

biometric identification that recognizes individuals based on their unique

behavioural characteristics, like their typing style. However, the existing

systems that use keystroke or touch stroke data have limited accuracy and

reliability. To improve this, smartphones' Inertial Measurement Unit (IMU)

sensors, which include accelerometers, gyroscopes, and magnetometers, can be

used to gather data on users' behavioural patterns, such as how they hold their

phones. Combining this IMU data with keystroke data can enhance the accuracy of

behavioural biometrics-based CA. This paper proposes BehaveFormer, a new

framework that employs keystroke and IMU data to create a reliable and accurate

behavioural biometric CA system. It includes two Spatio-Temporal Dual Attention

Transformer (STDAT), a novel transformer we introduce to extract more

discriminative features from keystroke dynamics. Experimental results on three

publicly available datasets (Aalto DB, HMOG DB, and HuMIdb) demonstrate that

BehaveFormer outperforms the state-of-the-art behavioural biometric-based CA

systems. For instance, on the HuMIdb dataset, BehaveFormer achieved an EER of

2.95\%. Additionally, the proposed STDAT has been shown to improve the

BehaveFormer system even when only keystroke data is used. For example, on the

Aalto DB dataset, BehaveFormer achieved an EER of 1.80\%. These results

demonstrate the effectiveness of the proposed STDAT and the incorporation of

IMU data for behavioural biometric authentication.

Current video-based Masked Autoencoders (MAEs) primarily focus on learning

effective spatiotemporal representations from a visual perspective, which may

lead the model to prioritize general spatial-temporal patterns but often

overlook nuanced semantic attributes like specific interactions or sequences

that define actions - such as action-specific features that align more closely

with human cognition for space-time correspondence. This can limit the model's

ability to capture the essence of certain actions that are contextually rich

and continuous. Humans are capable of mapping visual concepts, object view

invariance, and semantic attributes available in static instances to comprehend

natural dynamic scenes or videos. Existing MAEs for videos and static images

rely on separate datasets for videos and images, which may lack the rich

semantic attributes necessary for fully understanding the learned concepts,

especially when compared to using video and corresponding sampled frame images

together. To this end, we propose CrossVideoMAE an end-to-end self-supervised

cross-modal contrastive learning MAE that effectively learns both video-level

and frame-level rich spatiotemporal representations and semantic attributes.

Our method integrates mutual spatiotemporal information from videos with

spatial information from sampled frames within a feature-invariant space, while

encouraging invariance to augmentations within the video domain. This objective

is achieved through jointly embedding features of visible tokens and combining

feature correspondence within and across modalities, which is critical for

acquiring rich, label-free guiding signals from both video and frame image

modalities in a self-supervised manner. Extensive experiments demonstrate that

our approach surpasses previous state-of-the-art methods and ablation studies

validate the effectiveness of our approach.

A survey of Machine Learning Operations (MLOps) tools identifies a diverse ecosystem, highlighting that while platforms like AWS SageMaker and MLReef offer comprehensive features including data versioning, hyperparameter tuning, and performance monitoring, a fully functional MLOps platform capable of complete automation with minimal human intervention remains unavailable.

Test-time prompt tuning (TPT) has emerged as a promising technique for adapting large vision-language models (VLMs) to unseen tasks without relying on labeled data. However, the lack of dispersion between textual features can hurt calibration performance, which raises concerns about VLMs' reliability, trustworthiness, and safety. Current TPT approaches primarily focus on improving prompt calibration by either maximizing average textual feature dispersion or enforcing orthogonality constraints to encourage angular separation. However, these methods may not always have optimal angular separation between class-wise textual features, which implies overlooking the critical role of angular diversity. To address this, we propose A-TPT, a novel TPT framework that introduces angular diversity to encourage uniformity in the distribution of normalized textual features induced by corresponding learnable prompts. This uniformity is achieved by maximizing the minimum pairwise angular distance between features on the unit hypersphere. We show that our approach consistently surpasses state-of-the-art TPT methods in reducing the aggregate average calibration error while maintaining comparable accuracy through extensive experiments with various backbones on different datasets. Notably, our approach exhibits superior zero-shot calibration performance on natural distribution shifts and generalizes well to medical datasets. We provide extensive analyses, including theoretical aspects, to establish the grounding of A-TPT. These results highlight the potency of promoting angular diversity to achieve well-dispersed textual features, significantly improving VLM calibration during test-time adaptation. Our code will be made publicly available.

Aspect-based Sentiment Analysis (ABSA) is a critical task in Natural Language

Processing (NLP) that focuses on extracting sentiments related to specific

aspects within a text, offering deep insights into customer opinions.

Traditional sentiment analysis methods, while useful for determining overall

sentiment, often miss the implicit opinions about particular product or service

features. This paper presents a comprehensive review of the evolution of ABSA

methodologies, from lexicon-based approaches to machine learning and deep

learning techniques. We emphasize the recent advancements in Transformer-based

models, particularly Bidirectional Encoder Representations from Transformers

(BERT) and its variants, which have set new benchmarks in ABSA tasks. We

focused on finetuning Llama and Mistral models, building hybrid models using

the SetFit framework, and developing our own model by exploiting the strengths

of state-of-the-art (SOTA) Transformer-based models for aspect term extraction

(ATE) and aspect sentiment classification (ASC). Our hybrid model Instruct -

DeBERTa uses SOTA InstructABSA for aspect extraction and DeBERTa-V3-baseabsa-V1

for aspect sentiment classification. We utilize datasets from different domains

to evaluate our model's performance. Our experiments indicate that the proposed

hybrid model significantly improves the accuracy and reliability of sentiment

analysis across all experimented domains. As per our findings, our hybrid model

Instruct - DeBERTa is the best-performing model for the joint task of ATE and

ASC for both SemEval restaurant 2014 and SemEval laptop 2014 datasets

separately. By addressing the limitations of existing methodologies, our

approach provides a robust solution for understanding detailed consumer

feedback, thus offering valuable insights for businesses aiming to enhance

customer satisfaction and product development.

Penalty kicks often decide championships, yet goalkeepers must anticipate the kicker's intent from subtle biomechanical cues within a very short time window. This study introduces a real-time, multi-modal deep learning framework to predict the direction of a penalty kick (left, middle, or right) before ball contact. The model uses a dual-branch architecture: a MobileNetV2-based CNN extracts spatial features from RGB frames, while 2D keypoints are processed by an LSTM network with attention mechanisms. Pose-derived keypoints further guide visual focus toward task-relevant regions. A distance-based thresholding method segments input sequences immediately before ball contact, ensuring consistent input across diverse footage. A custom dataset of 755 penalty kick events was created from real match videos, with frame-level annotations for object detection, shooter keypoints, and final ball placement. The model achieved 89% accuracy on a held-out test set, outperforming visual-only and pose-only baselines by 14-22%. With an inference time of 22 milliseconds, the lightweight and interpretable design makes it suitable for goalkeeper training, tactical analysis, and real-time game analytics.

Large Language Models (LLMs) have shown significant advances in the past

year. In addition to new versions of GPT and Llama, several other LLMs have

been introduced recently. Some of these are open models available for download

and modification.

Although multilingual large language models have been available for some

time, their performance on low-resourced languages such as Sinhala has been

poor. We evaluated four recent LLMs on their performance directly in the

Sinhala language, and by translation to and from English. We also evaluated

their fine-tunability with a small amount of fine-tuning data. Claude and GPT

4o perform well out-of-the-box and do significantly better than previous

versions. Llama and Mistral perform poorly but show some promise of improvement

with fine tuning.

Solving the problem of Optical Character Recognition (OCR) on printed text for Latin and its derivative scripts can now be considered settled due to the volumes of research done on English and other High-Resourced Languages (HRL). However, for Low-Resourced Languages (LRL) that use unique scripts, it remains an open problem. This study presents a comparative analysis of the zero-shot performance of six distinct OCR engines on two LRLs: Sinhala and Tamil. The selected engines include both commercial and open-source systems, aiming to evaluate the strengths of each category. The Cloud Vision API, Surya, Document AI, and Tesseract were evaluated for both Sinhala and Tamil, while Subasa OCR and EasyOCR were examined for only one language due to their limitations. The performance of these systems was rigorously analysed using five measurement techniques to assess accuracy at both the character and word levels. According to the findings, Surya delivered the best performance for Sinhala across all metrics, with a WER of 2.61%. Conversely, Document AI excelled across all metrics for Tamil, highlighted by a very low CER of 0.78%. In addition to the above analysis, we also introduce a novel synthetic Tamil OCR benchmarking dataset.

There are no more papers matching your filters at the moment.