Cambridge UniversityCambridge

Critique-GRPO introduces an online reinforcement learning framework that integrates natural language critiques and numerical feedback to enhance large language model reasoning capabilities. This method enables more effective self-improvement, addresses performance plateaus and persistent failures, and achieves superior performance on challenging mathematical and general reasoning tasks.

MetaMath, a finetuned language model, leverages MetaMathQA, a novel dataset generated via question bootstrapping and answer augmentation, to enhance open-source LLMs' mathematical reasoning. MetaMath-70B achieves 82.3% on GSM8K, outperforming GPT-3.5-Turbo, and significantly improves backward reasoning abilities.

This paper argues that the choice of optimizer qualitatively alters the properties of learned solutions in deep neural networks, acting as a powerful mechanism for encoding inductive biases beyond architecture and data. Illustrative experiments demonstrate that non-diagonal preconditioners reduce catastrophic forgetting in continual learning by leading to more localized representations, and it reinterprets sparsity-inducing reparameterizations as optimizer designs.

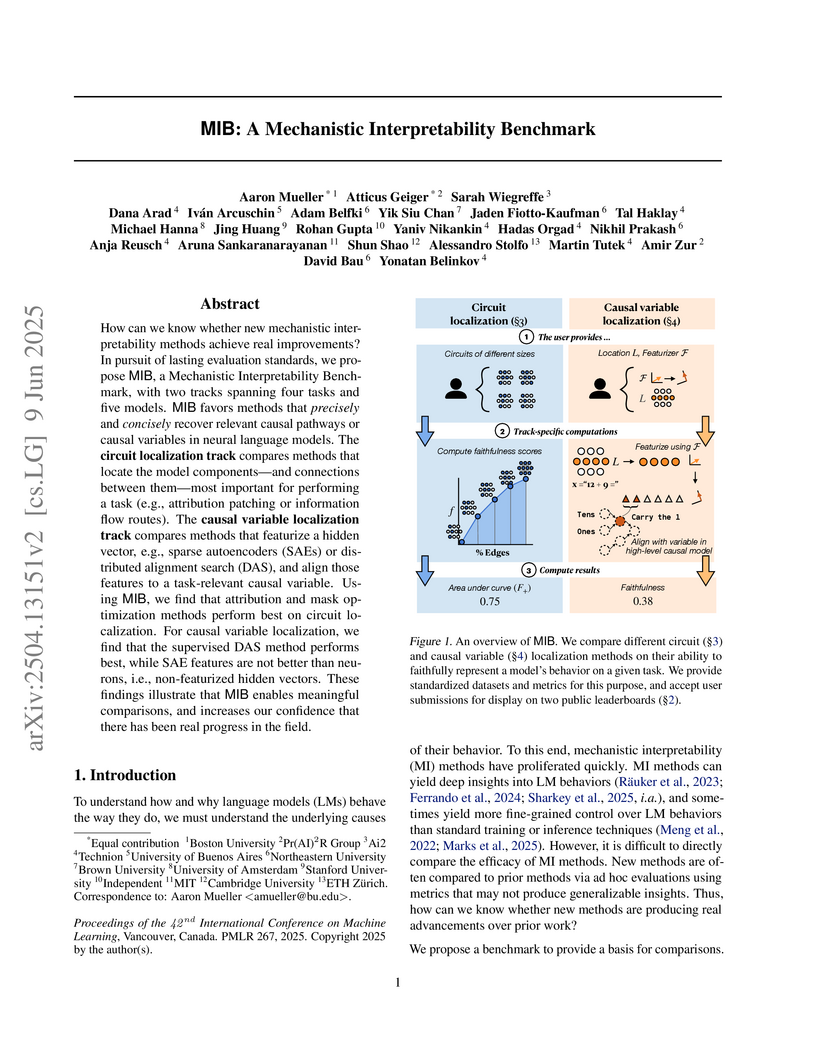

MIB introduces a standardized benchmark for evaluating mechanistic interpretability methods, providing a framework for comparing techniques that localize causal circuits and align neural network features with high-level concepts. Its findings indicate the effectiveness of gradient-based attribution for circuit discovery and demonstrate that supervised feature learning outperforms Sparse Autoencoders for causal variable localization in many scenarios.

24 Sep 2025

CNRS

CNRS HKUSTCambridge UniversityINSA ToulouseLNCMIUniversité Toulouse Paul SabatierHere's a breakdown of the affiliations:THINK:1. **Department of Physics, The Hong Kong University of Science and Technology, Kowloon, Hong Kong, China*** Organization: The Hong Kong University of Science and Technology2. **Université Grenoble Alpes, INSA Toulouse, Université Toulouse Paul Sabatier, CNRS, LNCMI, Grenoble, France.*** This is a composite affiliation. I should extract each distinct organization mentioned.* Organization: Université Grenoble Alpes* Organization: INSA Toulouse* Organization: Université Toulouse Paul Sabatier* Organization: CNRS* Organization: LNCMI (This is a specific laboratory, often treated as an organization in these contexts, especially when listed alongside universities and national research centers).3. **Clare Hall College, Cambridge University, Cambridge, The United Kingdom*** Organization: Clare Hall College* Organization: Cambridge University (or University of Cambridge)Universit

Grenoble Alpes":

HKUSTCambridge UniversityINSA ToulouseLNCMIUniversité Toulouse Paul SabatierHere's a breakdown of the affiliations:THINK:1. **Department of Physics, The Hong Kong University of Science and Technology, Kowloon, Hong Kong, China*** Organization: The Hong Kong University of Science and Technology2. **Université Grenoble Alpes, INSA Toulouse, Université Toulouse Paul Sabatier, CNRS, LNCMI, Grenoble, France.*** This is a composite affiliation. I should extract each distinct organization mentioned.* Organization: Université Grenoble Alpes* Organization: INSA Toulouse* Organization: Université Toulouse Paul Sabatier* Organization: CNRS* Organization: LNCMI (This is a specific laboratory, often treated as an organization in these contexts, especially when listed alongside universities and national research centers).3. **Clare Hall College, Cambridge University, Cambridge, The United Kingdom*** Organization: Clare Hall College* Organization: Cambridge University (or University of Cambridge)Universit

Grenoble Alpes":We have fabricated three-dimensional (3D) networks of ultrathin carbon nanotubes (CNTs) within the ~5-Angstrom diameter pores of zeolite ZSM-5 crystals using the chemical vapour deposition (CVD) process. The 1D electronic characteristics of ultrathin CNTs are characterized by van Hove singularities in the density of states. Boron doping was strategically employed to tune the Fermi energy near a van Hove singularity, which is supported by extensive ab-initio calculations, while the 3D network structure ensures the formation of a phase-coherent bulk superconducting state under a 1D to 3D crossover. We report characteristic signatures of superconductivity using four complementary experimental methods: magnetization, specific heat, resistivity, and point-contact spectroscopy, all consistently support a critical temperature Tc at ambient conditions ranging from 220 to 250 K. In particular, point-contact spectroscopy revealed a multigap nature of superconductivity with a large ~30 meV leading gap, in rough agreement with the prediction of the Bardeen-Cooper-Schrieffer (BCS) theory of superconductivity. The differential conductance response displays a particle-hole symmetry and is tuneable between the tunnelling and Andreev limits via the transparency of the contact, as uniquely expected for a superconductor. Preliminary experiments also reveal a giant pressure effect which increases the Tc above the ambient temperature.

Researchers from NYU, Cambridge, and CMU introduce new open-addressed hashing algorithms that resolve decades-old problems, demonstrating optimal or significantly improved probe complexities without element reordering. Their work disproves a 1985 conjecture by Andrew Yao, establishing that greedy open addressing can achieve O(log² δ⁻¹) worst-case expected probes, an asymptotic improvement over the conjectured Θ(δ⁻¹).

02 Jun 2025

This research provides the exact analytical expression for the most general tree-level boost-breaking cosmological correlator, essential for capturing realistic inflationary physics. It also develops a systematic framework for constructing accurate and efficient approximate templates for these signals, revealing new observable features such as a transient cosmological collider signal.

When using large language models (LLMs) in high-stakes applications, we need to know when we can trust their predictions. Some works argue that prompting high-performance LLMs is sufficient to produce calibrated uncertainties, while others introduce sampling methods that can be prohibitively expensive. In this work, we first argue that prompting on its own is insufficient to achieve good calibration and then show that fine-tuning on a small dataset of correct and incorrect answers can create an uncertainty estimate with good generalization and small computational overhead. We show that a thousand graded examples are sufficient to outperform baseline methods and that training through the features of a model is necessary for good performance and tractable for large open-source models when using LoRA. We also investigate the mechanisms that enable reliable LLM uncertainty estimation, finding that many models can be used as general-purpose uncertainty estimators, applicable not just to their own uncertainties but also the uncertainty of other models. Lastly, we show that uncertainty estimates inform human use of LLMs in human-AI collaborative settings through a user study.

LLaMP introduces a multimodal Retrieval-Augmented Generation framework utilizing hierarchical ReAct agents to ground Large Language Models in high-fidelity materials data and simulations. This approach substantially reduces LLM hallucination in scientific inquiries, demonstrating high accuracy and reproducibility across diverse materials properties and enabling intuitive language-driven atomistic simulations.

Structured large matrices are prevalent in machine learning. A particularly important class is curvature matrices like the Hessian, which are central to understanding the loss landscape of neural nets (NNs), and enable second-order optimization, uncertainty quantification, model pruning, data attribution, and more. However, curvature computations can be challenging due to the complexity of automatic differentiation, and the variety and structural assumptions of curvature proxies, like sparsity and Kronecker factorization. In this position paper, we argue that linear operators -- an interface for performing matrix-vector products -- provide a general, scalable, and user-friendly abstraction to handle curvature matrices. To support this position, we developed curvlinops, a library that provides curvature matrices through a unified linear operator interface. We demonstrate with curvlinops how this interface can hide complexity, simplify applications, be extensible and interoperable with other libraries, and scale to large NNs.

Physics simulation is paramount for modeling and utilizing 3D scenes in various real-world applications. However, integrating with state-of-the-art 3D scene rendering techniques such as Gaussian Splatting (GS) remains challenging. Existing models use additional meshing mechanisms, including triangle or tetrahedron meshing, marching cubes, or cage meshes. Alternatively, we can modify the physics-grounded Newtonian dynamics to align with 3D Gaussian components. Current models take the first-order approximation of a deformation map, which locally approximates the dynamics by linear transformations. In contrast, our GS for Physics-Based Simulations (GASP) pipeline uses parametrized flat Gaussian distributions. Consequently, the problem of modeling Gaussian components using the physics engine is reduced to working with 3D points. In our work, we present additional rules for manipulating Gaussians, demonstrating how to adapt the pipeline to incorporate meshes, control Gaussian sizes during simulations, and enhance simulation efficiency. This is achieved through the Gaussian grouping strategy, which implements hierarchical structuring and enables simulations to be performed exclusively on selected Gaussians. The resulting solution can be integrated into any physics engine that can be treated as a black box. As demonstrated in our studies, the proposed pipeline exhibits superior performance on a diverse range of benchmark datasets designed for 3D object rendering. The project webpage, which includes additional visualizations, can be found at this https URL.

22 Feb 2024

Recent advances in AI combine large language models (LLMs) with vision encoders that bring forward unprecedented technical capabilities to leverage for a wide range of healthcare applications. Focusing on the domain of radiology, vision-language models (VLMs) achieve good performance results for tasks such as generating radiology findings based on a patient's medical image, or answering visual questions (e.g., 'Where are the nodules in this chest X-ray?'). However, the clinical utility of potential applications of these capabilities is currently underexplored. We engaged in an iterative, multidisciplinary design process to envision clinically relevant VLM interactions, and co-designed four VLM use concepts: Draft Report Generation, Augmented Report Review, Visual Search and Querying, and Patient Imaging History Highlights. We studied these concepts with 13 radiologists and clinicians who assessed the VLM concepts as valuable, yet articulated many design considerations. Reflecting on our findings, we discuss implications for integrating VLM capabilities in radiology, and for healthcare AI more generally.

Capability evaluations play a critical role in ensuring the safe deployment of frontier AI systems, but this role may be undermined by intentional underperformance or ``sandbagging.'' We present a novel model-agnostic method for detecting sandbagging behavior using noise injection. Our approach is founded on the observation that introducing Gaussian noise into the weights of models either prompted or fine-tuned to sandbag can considerably improve their performance. We test this technique across a range of model sizes and multiple-choice question benchmarks (MMLU, AI2, WMDP). Our results demonstrate that noise injected sandbagging models show performance improvements compared to standard models. Leveraging this effect, we develop a classifier that consistently identifies sandbagging behavior. Our unsupervised technique can be immediately implemented by frontier labs or regulatory bodies with access to weights to improve the trustworthiness of capability evaluations.

Explainable AI (XAI) has established itself as an important component of

AI-driven interactive systems. With Augmented Reality (AR) becoming more

integrated in daily lives, the role of XAI also becomes essential in AR because

end-users will frequently interact with intelligent services. However, it is

unclear how to design effective XAI experiences for AR. We propose XAIR, a

design framework that addresses "when", "what", and "how" to provide

explanations of AI output in AR. The framework was based on a

multi-disciplinary literature review of XAI and HCI research, a large-scale

survey probing 500+ end-users' preferences for AR-based explanations, and three

workshops with 12 experts collecting their insights about XAI design in AR.

XAIR's utility and effectiveness was verified via a study with 10 designers and

another study with 12 end-users. XAIR can provide guidelines for designers,

inspiring them to identify new design opportunities and achieve effective XAI

designs in AR.

Deep learning has profoundly transformed remote sensing, yet prevailing

architectures like Convolutional Neural Networks (CNNs) and Vision Transformers

(ViTs) remain constrained by critical trade-offs: CNNs suffer from limited

receptive fields, while ViTs grapple with quadratic computational complexity,

hindering their scalability for high-resolution remote sensing data. State

Space Models (SSMs), particularly the recently proposed Mamba architecture,

have emerged as a paradigm-shifting solution, combining linear computational

scaling with global context modeling. This survey presents a comprehensive

review of Mamba-based methodologies in remote sensing, systematically analyzing

about 120 Mamba-based remote sensing studies to construct a holistic taxonomy

of innovations and applications. Our contributions are structured across five

dimensions: (i) foundational principles of vision Mamba architectures, (ii)

micro-architectural advancements such as adaptive scan strategies and hybrid

SSM formulations, (iii) macro-architectural integrations, including

CNN-Transformer-Mamba hybrids and frequency-domain adaptations, (iv) rigorous

benchmarking against state-of-the-art methods in multiple application tasks,

such as object detection, semantic segmentation, change detection, etc. and (v)

critical analysis of unresolved challenges with actionable future directions.

By bridging the gap between SSM theory and remote sensing practice, this survey

establishes Mamba as a transformative framework for remote sensing analysis. To

our knowledge, this paper is the first systematic review of Mamba architectures

in remote sensing. Our work provides a structured foundation for advancing

research in remote sensing systems through SSM-based methods. We curate an

open-source repository

(this https URL) to foster

community-driven advancements.

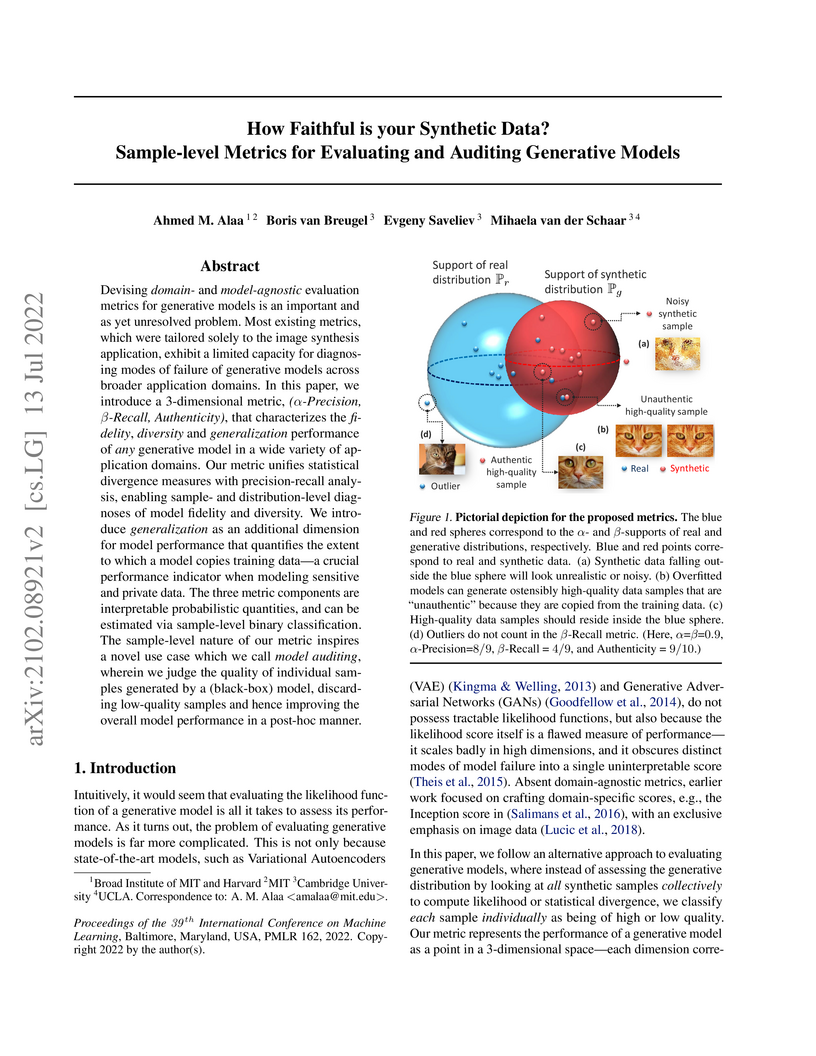

A comprehensive, sample-level evaluation metric consisting of "u03b1-Precision", "u03b2-Recall", and Authenticity quantifies the fidelity, diversity, and generalization capabilities of generative models. This framework accurately ranks model performance, diagnoses specific failure modes, and enables post-hoc auditing to enhance the utility and privacy of generated synthetic data, particularly in sensitive domains.

Connecting optimal transport and variational inference, we present a

principled and systematic framework for sampling and generative modelling

centred around divergences on path space. Our work culminates in the

development of the \emph{Controlled Monte Carlo Diffusion} sampler (CMCD) for

Bayesian computation, a score-based annealing technique that crucially adapts

both forward and backward dynamics in a diffusion model. On the way, we clarify

the relationship between the EM-algorithm and iterative proportional fitting

(IPF) for Schr{\"o}dinger bridges, deriving as well a regularised objective

that bypasses the iterative bottleneck of standard IPF-updates. Finally, we

show that CMCD has a strong foundation in the Jarzinsky and Crooks identities

from statistical physics, and that it convincingly outperforms competing

approaches across a wide array of experiments.

Parkinson's disease (PD) is a neurodegenerative disorder associated with the accumulation of misfolded alpha-synuclein aggregates, forming Lewy bodies and neuritic shape used for pathology diagnostics. Automatic analysis of immunohistochemistry histopathological images with Deep Learning provides a promising tool for better understanding the spatial organization of these aggregates. In this study, we develop an automated image processing pipeline to segment and classify these aggregates in whole-slide images (WSIs) of midbrain tissue from PD and incidental Lewy Body Disease (iLBD) cases based on weakly supervised segmentation, robust to immunohistochemical labelling variability, with a ResNet50 classifier. Our approach allows to differentiate between major aggregate morphologies, including Lewy bodies and neurites with a balanced accuracy of 80%. This framework paves the way for large-scale characterization of the spatial distribution and heterogeneity of alpha-synuclein aggregates in brightfield immunohistochemical tissue, and for investigating their poorly understood relationships with surrounding cells such as microglia and astrocytes.

Researchers at the ALTA Institute, Cambridge University, demonstrated that generative LLMs like ChatGPT can perform Automatic Speech Recognition (ASR) error correction in training-free zero-shot and one-shot settings. By leveraging ASR N-best lists, these methods consistently improved accuracy over baseline ASR outputs, sometimes outperforming bespoke fine-tuned models on out-of-domain data.

This report outlines the scientific potential and technical feasibility for the Future Circular Collider (FCC) at CERN, proposing a staged approach with an electron-positron collider followed by a hadron collider in the same tunnel to enable unprecedented precision measurements of fundamental particles and extend the direct discovery reach for new physics. The study details accelerator designs, detector concepts, and physics programs, demonstrating the capability to improve electroweak precision by orders of magnitude and probe new physics up to 40-50 TeV.

There are no more papers matching your filters at the moment.