Dalian Maritime University

Early-stage fire scenes (0-15 minutes after ignition) represent a crucial temporal window for emergency interventions. During this stage, the smoke produced by combustion significantly reduces the visibility of surveillance systems, severely impairing situational awareness and hindering effective emergency response and rescue operations. Consequently, there is an urgent need to remove smoke from images to obtain clear scene information. However, the development of smoke removal algorithms remains limited due to the lack of large-scale, real-world datasets comprising paired smoke-free and smoke-degraded images. To address these limitations, we present a real-world surveillance image desmoking benchmark dataset named SmokeBench, which contains image pairs captured under diverse scenes setup and smoke concentration. The curated dataset provides precisely aligned degraded and clean images, enabling supervised learning and rigorous evaluation. We conduct comprehensive experiments by benchmarking a variety of desmoking methods on our dataset. Our dataset provides a valuable foundation for advancing robust and practical image desmoking in real-world fire scenes. This dataset has been released to the public and can be downloaded from this https URL.

17 Jul 2025

Large language models (LLMs) have transformed natural language processing but face critical deployment challenges in device-edge systems due to resource limitations and communication overhead. To address these issues, collaborative frameworks have emerged that combine small language models (SLMs) on devices with LLMs at the edge, using speculative decoding (SD) to improve efficiency. However, existing solutions often trade inference accuracy for latency or suffer from high uplink transmission costs when verifying candidate tokens. In this paper, we propose Distributed Split Speculative Decoding (DSSD), a novel architecture that not only preserves the SLM-LLM split but also partitions the verification phase between the device and edge. In this way, DSSD replaces the uplink transmission of multiple vocabulary distributions with a single downlink transmission, significantly reducing communication latency while maintaining inference quality. Experiments show that our solution outperforms current methods, and codes are at: this https URL

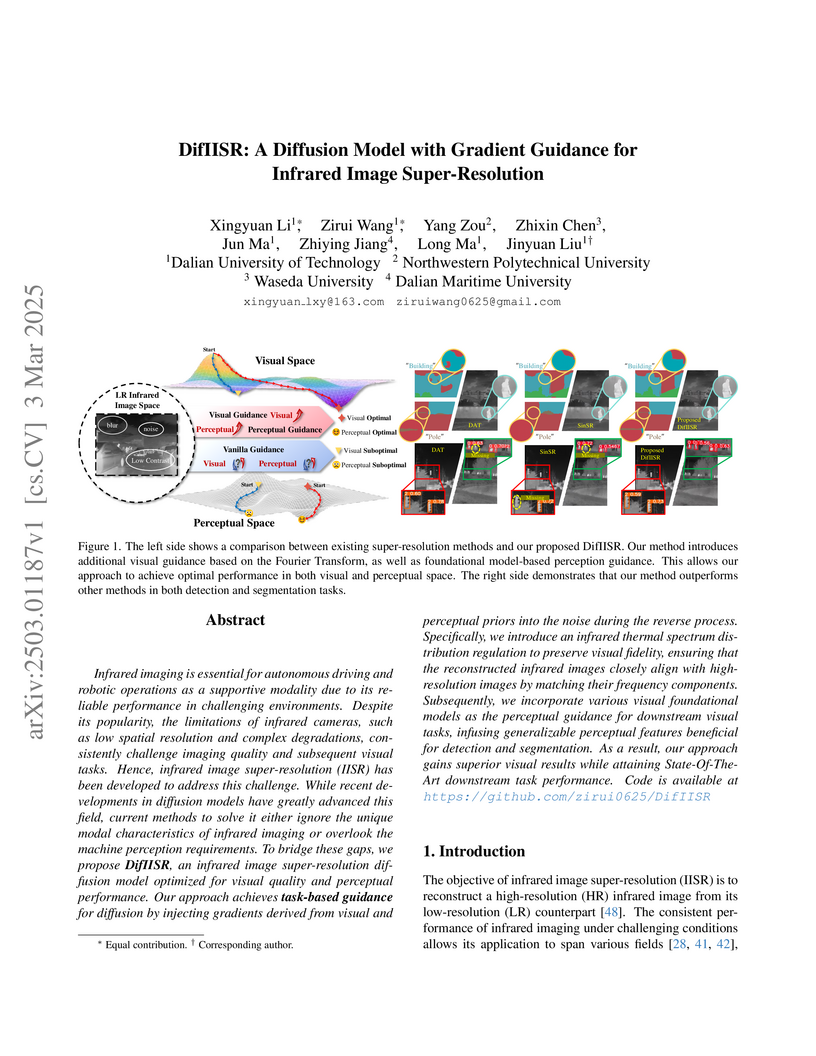

Researchers from Dalian University of Technology introduce DifIISR, a novel diffusion-based framework for infrared image super-resolution that combines gradient-based guidance with thermal spectrum preservation, achieving superior visual quality and downstream task performance through innovative dual visual-perceptual optimization.

DCEvo presents a unified framework for infrared and visible image fusion that simultaneously optimizes for visual quality and downstream computer vision tasks like object detection and segmentation. The framework achieved an average improvement of 9.32% in visual quality metrics while enhancing detection performance on M3FD, RoadScene, and TNO datasets.

24 Nov 2025

In-context learning (ICL) with large language models (LLMs) has emerged as a promising paradigm for named entity recognition (NER) in low-resource scenarios. However, existing ICL-based NER methods suffer from three key limitations: (1) reliance on dynamic retrieval of annotated examples, which is problematic when annotated data is scarce; (2) limited generalization to unseen domains due to the LLM's insufficient internal domain knowledge; and (3) failure to incorporate external knowledge or resolve entity ambiguities. To address these challenges, we propose KDR-Agent, a novel multi-agent framework for multi-domain low-resource in-context NER that integrates Knowledge retrieval, Disambiguation, and Reflective analysis. KDR-Agent leverages natural-language type definitions and a static set of entity-level contrastive demonstrations to reduce dependency on large annotated corpora. A central planner coordinates specialized agents to (i) retrieve factual knowledge from Wikipedia for domain-specific mentions, (ii) resolve ambiguous entities via contextualized reasoning, and (iii) reflect on and correct model predictions through structured self-assessment. Experiments across ten datasets from five domains demonstrate that KDR-Agent significantly outperforms existing zero-shot and few-shot ICL baselines across multiple LLM backbones. The code and data can be found at this https URL.

Harbin Institute of Technology researchers develop ACDepth, a robust monocular depth estimation framework that addresses performance degradation under adverse weather conditions by generating high-quality degraded training data using fine-tuned diffusion models with LoRA adapters and implementing multi-granularity knowledge distillation that includes novel ordinal guidance for uncertain regions, achieving 2.50% absRel improvement on night scenes and 2.61% on rainy scenes compared to previous state-of-the-art methods while demonstrating strong zero-shot generalization across unseen adverse weather datasets.

Topic discovery in scientific literature provides valuable insights for researchers to identify emerging trends and explore new avenues for investigation, facilitating easier scientific information retrieval. Many machine learning methods, particularly deep embedding techniques, have been applied to discover research topics. However, most existing topic discovery methods rely on word embedding to capture the semantics and lack a comprehensive understanding of scientific publications, struggling with complex, high-dimensional text relationships. Inspired by the exceptional comprehension of textual information by large language models (LLMs), we propose an advanced topic discovery method enhanced by LLMs to improve scientific topic identification, namely SciTopic. Specifically, we first build a textual encoder to capture the content from scientific publications, including metadata, title, and abstract. Next, we construct a space optimization module that integrates entropy-based sampling and triplet tasks guided by LLMs, enhancing the focus on thematic relevance and contextual intricacies between ambiguous instances. Then, we propose to fine-tune the textual encoder based on the guidance from the LLMs by optimizing the contrastive loss of the triplets, forcing the text encoder to better discriminate instances of different topics. Finally, extensive experiments conducted on three real-world datasets of scientific publications demonstrate that SciTopic outperforms the state-of-the-art (SOTA) scientific topic discovery methods, enabling researchers to gain deeper and faster insights.

Visual gaze estimation, with its wide-ranging application scenarios, has

garnered increasing attention within the research community. Although existing

approaches infer gaze solely from image signals, recent advances in

visual-language collaboration have demonstrated that the integration of

linguistic information can significantly enhance performance across various

visual tasks. Leveraging the remarkable transferability of large-scale

Contrastive Language-Image Pre-training (CLIP) models, we address the open and

urgent question of how to effectively apply linguistic cues to gaze estimation.

In this work, we propose GazeCLIP, a novel gaze estimation framework that

deeply explores text-face collaboration. Specifically, we introduce a

meticulously designed linguistic description generator to produce text signals

enriched with coarse directional cues. Furthermore, we present a CLIP-based

backbone adept at characterizing text-face pairs for gaze estimation,

complemented by a fine-grained multimodal fusion module that models the

intricate interrelationships between heterogeneous inputs. Extensive

experiments on three challenging datasets demonstrate the superiority of

GazeCLIP, which achieves state-of-the-art accuracy. Our findings underscore the

potential of using visual-language collaboration to advance gaze estimation and

open new avenues for future research in multimodal learning for visual tasks.

The implementation code and the pre-trained model will be made publicly

available.

Underwater images often suffer from various issues such as low brightness, color shift, blurred details, and noise due to light absorption and scattering caused by water and suspended particles. Previous underwater image enhancement (UIE) methods have primarily focused on spatial domain enhancement, neglecting the frequency domain information inherent in the images. However, the degradation factors of underwater images are closely intertwined in the spatial domain. Although certain methods focus on enhancing images in the frequency domain, they overlook the inherent relationship between the image degradation factors and the information present in the frequency domain. As a result, these methods frequently enhance certain attributes of the improved image while inadequately addressing or even exacerbating other attributes. Moreover, many existing methods heavily rely on prior knowledge to address color shift problems in underwater images, limiting their flexibility and robustness. In order to overcome these limitations, we propose the Embedding Frequency and Dual Color Encoder Network (FDCE-Net) in our paper. The FDCE-Net consists of two main structures: (1) Frequency Spatial Network (FS-Net) aims to achieve initial enhancement by utilizing our designed Frequency Spatial Residual Block (FSRB) to decouple image degradation factors in the frequency domain and enhance different attributes separately. (2) To tackle the color shift issue, we introduce the Dual-Color Encoder (DCE). The DCE establishes correlations between color and semantic representations through cross-attention and leverages multi-scale image features to guide the optimization of adaptive color query. The final enhanced images are generated by combining the outputs of FS-Net and DCE through a fusion network. These images exhibit rich details, clear textures, low noise and natural colors.

Understanding the dynamics of momentum and game fluctuation in tennis matches

is cru-cial for predicting match outcomes and enhancing player performance. In

this study, we present a comprehensive analysis of these factors using a

dataset from the 2023 Wimbledon final. Ini-tially, we develop a

sliding-window-based scoring model to assess player performance, ac-counting

for the influence of serving dominance through a serve decay factor.

Additionally, we introduce a novel approach, Lasso-Ridge-based XGBoost, to

quantify momentum effects, lev-eraging the predictive power of XGBoost while

mitigating overfitting through regularization. Through experimentation, we

achieve an accuracy of 94% in predicting match outcomes, iden-tifying key

factors influencing winning rates. Subsequently, we propose a Derivative of the

winning rate algorithm to quantify game fluctuation, employing an LSTM_Deep

model to pre-dict fluctuation scores. Our model effectively captures temporal

correlations in momentum fea-tures, yielding mean squared errors ranging from

0.036 to 0.064. Furthermore, we explore me-ta-learning using MAML to transfer

our model to predict outcomes in ping-pong matches, though results indicate a

comparative performance decline. Our findings provide valuable in-sights into

momentum dynamics and game fluctuation, offering implications for sports

analytics and player training strategies.

Wireless communication systems face significant challenges in meeting the increasing demands for higher data rates and more reliable connectivity in complex environments. Stacked intelligent metasurfaces (SIMs) have emerged as a promising technology for realizing wave-domain signal processing, with mobile SIMs offering superior communication performance compared to their fixed counterparts. In this paper, we investigate a novel unmanned aerial vehicle (UAV)-mounted SIMs (UAV-SIMs) assisted communication system within the low-altitude economy (LAE) networks paradigm, where UAVs function as both base stations that cache SIM-processed data and mobile platforms that flexibly deploy SIMs to enhance uplink communications from ground users. To maximize network capacity, we formulate a UAV-SIM-based joint optimization problem (USBJOP) that comprehensively addresses three critical aspects: the association between UAV-SIMs and users, the three-dimensional positioning of UAV-SIMs, and the phase shifts across multiple SIM layers. Due to the inherent non-convexity and NP-hardness of USBJOP, we decompose it into three sub-optimization problems, \textit{i.e.}, association between UAV-SIMs and users optimization problem (AUUOP), UAV location optimization problem (ULOP), and UAV-SIM phase shifts optimization problem (USPSOP), and solve them using an alternating optimization strategy. Specifically, we transform AUUOP and ULOP into convex forms solvable by the CVX tool, while addressing USPSOP through a generative artificial intelligence (GAI)-based hybrid optimization algorithm. Simulations demonstrate that our proposed approach significantly outperforms benchmark schemes, achieving approximately 1.5 times higher network capacity compared to suboptimal alternatives. Additionally, our proposed GAI method reduces the algorithm runtime by 10\% while maintaining solution quality.

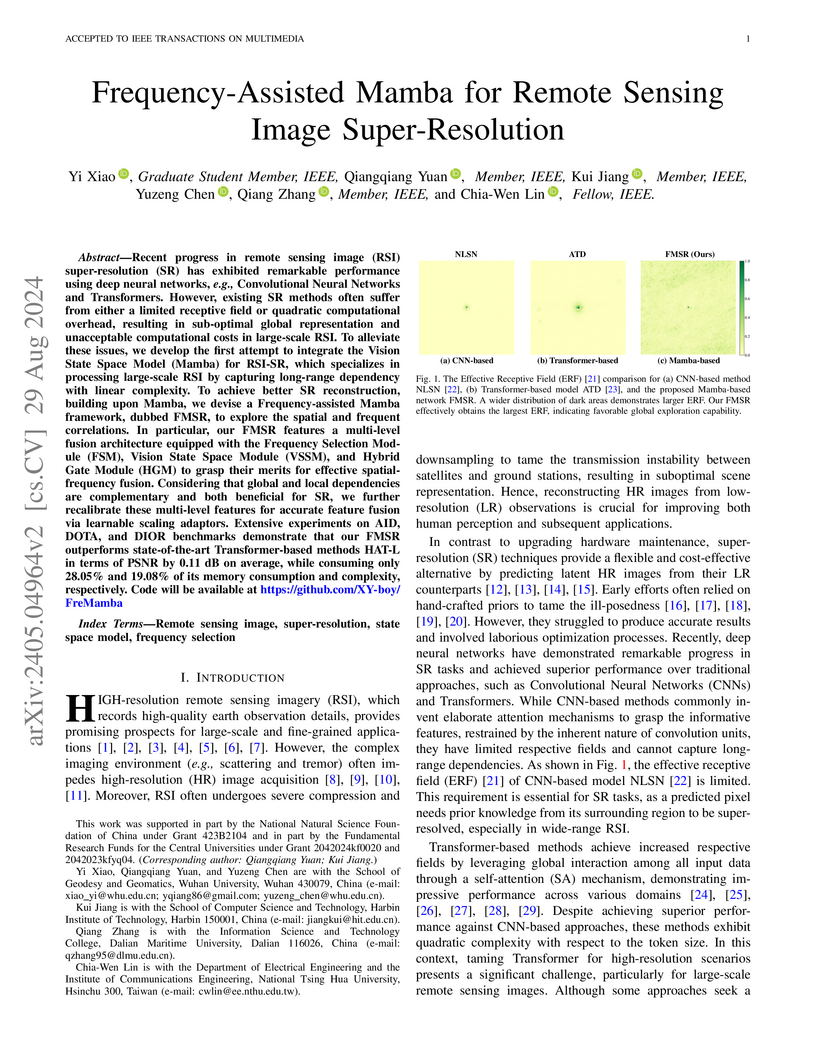

Recent progress in remote sensing image (RSI) super-resolution (SR) has exhibited remarkable performance using deep neural networks, e.g., Convolutional Neural Networks and Transformers. However, existing SR methods often suffer from either a limited receptive field or quadratic computational overhead, resulting in sub-optimal global representation and unacceptable computational costs in large-scale RSI. To alleviate these issues, we develop the first attempt to integrate the Vision State Space Model (Mamba) for RSI-SR, which specializes in processing large-scale RSI by capturing long-range dependency with linear complexity. To achieve better SR reconstruction, building upon Mamba, we devise a Frequency-assisted Mamba framework, dubbed FMSR, to explore the spatial and frequent correlations. In particular, our FMSR features a multi-level fusion architecture equipped with the Frequency Selection Module (FSM), Vision State Space Module (VSSM), and Hybrid Gate Module (HGM) to grasp their merits for effective spatial-frequency fusion. Considering that global and local dependencies are complementary and both beneficial for SR, we further recalibrate these multi-level features for accurate feature fusion via learnable scaling adaptors. Extensive experiments on AID, DOTA, and DIOR benchmarks demonstrate that our FMSR outperforms state-of-the-art Transformer-based methods HAT-L in terms of PSNR by 0.11 dB on average, while consuming only 28.05% and 19.08% of its memory consumption and complexity, respectively. Code will be available at this https URL

13 May 2025

With the continuous growth in the scale and complexity of software systems,

defect remediation has become increasingly difficult and costly. Automated

defect prediction tools can proactively identify software changes prone to

defects within software projects, thereby enhancing software development

efficiency. However, existing work in heterogeneous and complex software

projects continues to face challenges, such as struggling with heterogeneous

commit structures and ignoring cross-line dependencies in code changes, which

ultimately reduce the accuracy of defect identification. To address these

challenges, we propose an approach called RC_Detector. RC_Detector comprises

three main components: the bug-fixing graph construction component, the code

semantic aggregation component, and the cross-line semantic retention

component. The bug-fixing graph construction component identifies the code

syntax structures and program dependencies within bug-fixing commits and

transforms them into heterogeneous graph formats by converting the source code

into vector representations. The code semantic aggregation component adapts to

heterogeneous data by using heterogeneous attention to learn the hidden

semantic representation of target code lines. The cross-line semantic retention

component regulates propagated semantic information by using attenuation and

reinforcement gates derived from old and new code semantic representations,

effectively preserving cross-line semantic relationships. Extensive experiments

were conducted to evaluate the performance of our model by collecting data from

87 open-source projects, including 675 bug-fixing commits. The experimental

results demonstrate that our model outperforms state-of-the-art approaches,

achieving significant improvements of

83.15%,96.83%,78.71%,74.15%,54.14%,91.66%,91.66%, and 34.82% in MFR,

respectively, compared with the state-of-the-art approaches.

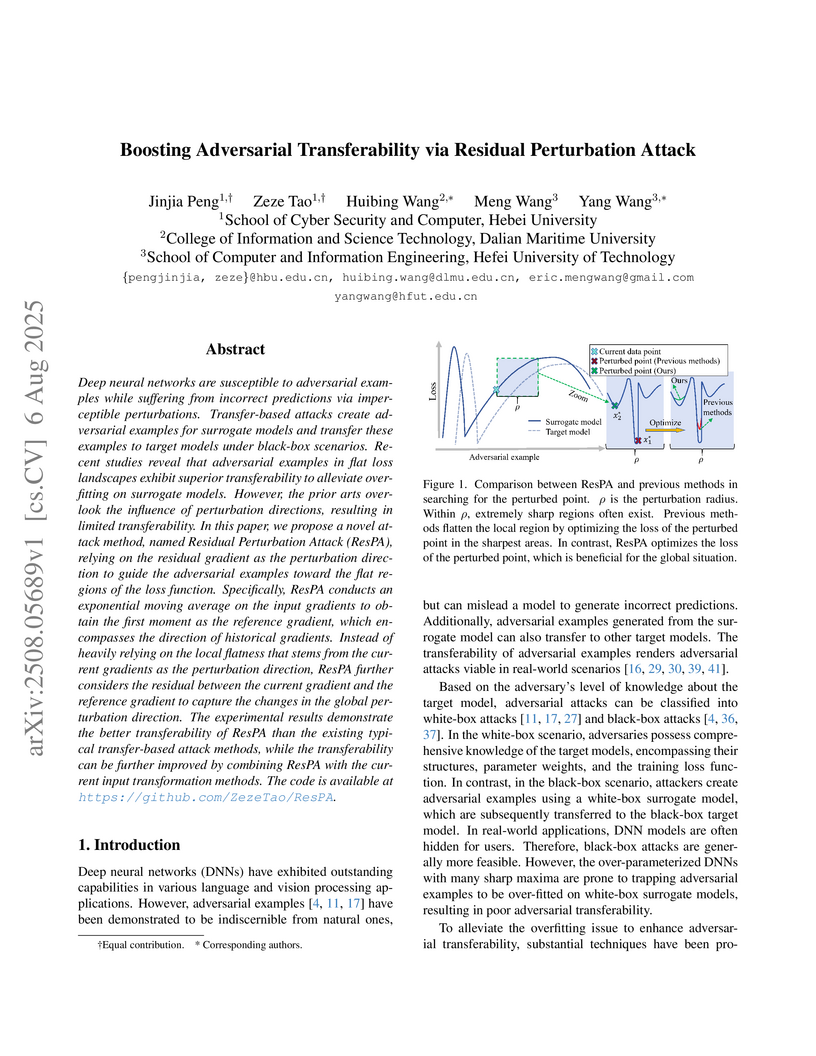

Deep neural networks are susceptible to adversarial examples while suffering from incorrect predictions via imperceptible perturbations. Transfer-based attacks create adversarial examples for surrogate models and transfer these examples to target models under black-box scenarios. Recent studies reveal that adversarial examples in flat loss landscapes exhibit superior transferability to alleviate overfitting on surrogate models. However, the prior arts overlook the influence of perturbation directions, resulting in limited transferability. In this paper, we propose a novel attack method, named Residual Perturbation Attack (ResPA), relying on the residual gradient as the perturbation direction to guide the adversarial examples toward the flat regions of the loss function. Specifically, ResPA conducts an exponential moving average on the input gradients to obtain the first moment as the reference gradient, which encompasses the direction of historical gradients. Instead of heavily relying on the local flatness that stems from the current gradients as the perturbation direction, ResPA further considers the residual between the current gradient and the reference gradient to capture the changes in the global perturbation direction. The experimental results demonstrate the better transferability of ResPA than the existing typical transfer-based attack methods, while the transferability can be further improved by combining ResPA with the current input transformation methods. The code is available at this https URL.

A novel cloud-edge collaborative inference architecture, CE-LSLM, enables efficient and privacy-preserving Large Language Model (LLM) inference by distributing tasks between cloud LLMs and edge Small Language Models (SLMs). The framework demonstrated reduced latency, increased throughput (4-6x higher at moderate request rates), and zero user data upload, maintaining comparable generation quality for applications in 6G networks.

Graph Neural Networks (GNNs) have demonstrated strong performance across tasks such as node classification, link prediction, and graph classification, but remain vulnerable to backdoor attacks that implant imperceptible triggers during training to control predictions. While node-level attacks exploit local message passing, graph-level attacks face the harder challenge of manipulating global representations while maintaining stealth. We identify two main sources of anomaly in existing graph classification backdoor methods: structural deviation from rare subgraph triggers and semantic deviation caused by label flipping, both of which make poisoned graphs easily detectable by anomaly detection models. To address this, we propose DPSBA, a clean-label backdoor framework that learns in-distribution triggers via adversarial training guided by anomaly-aware discriminators. DPSBA effectively suppresses both structural and semantic anomalies, achieving high attack success while significantly improving stealth. Extensive experiments on real-world datasets validate that DPSBA achieves a superior balance between effectiveness and detectability compared to state-of-the-art baselines.

Using the gauge-gravity duality, we investigate the fermionic spectroscopy in

the D(-1)-D3 brane system. The background geometry of this system described by

IIB supergravity includes a black (deconfined) and bubble (confined) D3-brane

which corresponds respectively to a deconfined and a confined gauge theory in

holography. The charge of the D(-1) brane as the D-instanton gives the gluon

condensate in this model. To simplify the holographic setup, we first reduce

briefly the ten-dimensional supergravity background produced by D(-1)-D3-branes

to an equivalently five-dimensional background. Then the fermionic spectrum in

the confined case is obtained by decomposing the fermion with dimensional

reduction. In addition, by using the standard method for computing the Green

function in the AdS/CFT dictionary, we derive the equations for the fermionic

correlation functions and solve them numerically with the infalling boundary

condition. Our numerical results in the deconfined case illustrate that the

fermionic correlation function as spectral function includes two branches of

the dispersion curves whose behavior is very close to the results obtained from

the method of hard thermal loop. And the effective mass generated by the medium

effect of fermion splits into two values due to the spin-dependent interactions

induced by instantons. In the confined case, the holographic correlation

function indicates several separated dispersion curves which illustrates

consistently the onset mass in the fermionic spectrum we obtained. Therefore,

this work in holography demonstrates the instantonic configuration is very

influential to the fermion in QCD.

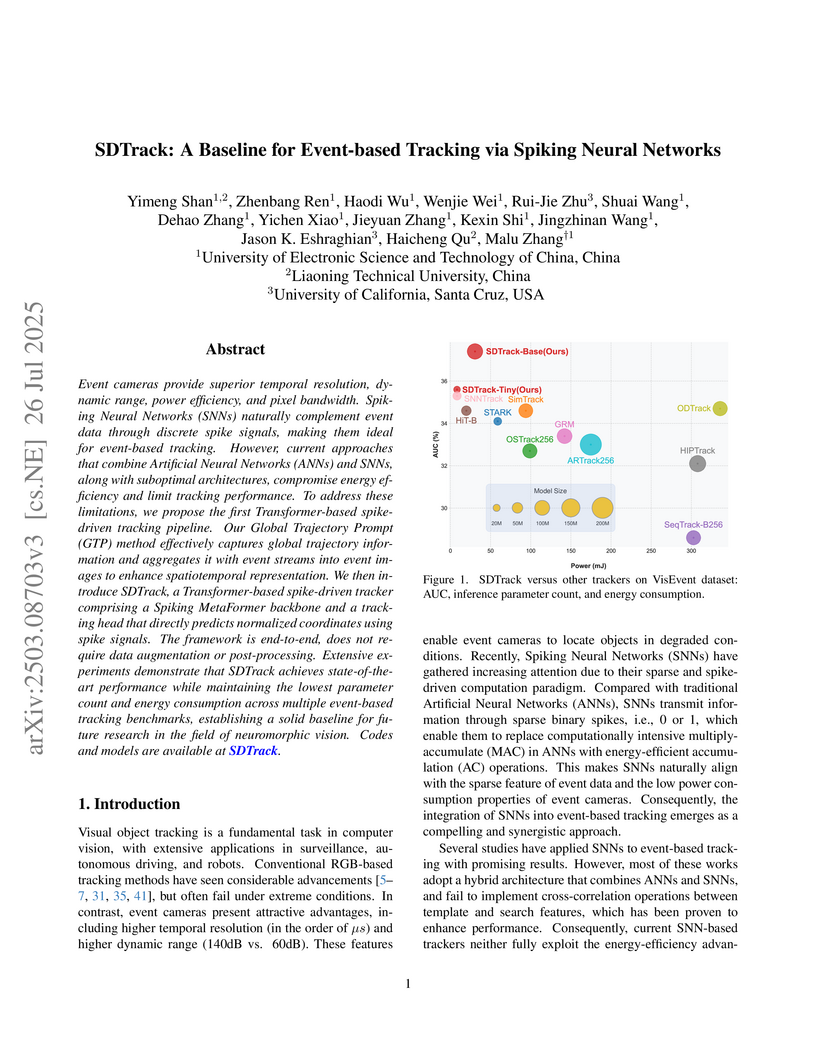

Event cameras provide superior temporal resolution, dynamic range, power efficiency, and pixel bandwidth. Spiking Neural Networks (SNNs) naturally complement event data through discrete spike signals, making them ideal for event-based tracking. However, current approaches that combine Artificial Neural Networks (ANNs) and SNNs, along with suboptimal architectures, compromise energy efficiency and limit tracking performance. To address these limitations, we propose the first Transformer-based spike-driven tracking pipeline. Our Global Trajectory Prompt (GTP) method effectively captures global trajectory information and aggregates it with event streams into event images to enhance spatiotemporal representation. We then introduce SDTrack, a Transformer-based spike-driven tracker comprising a Spiking MetaFormer backbone and a tracking head that directly predicts normalized coordinates using spike signals. The framework is end-to-end, does not require data augmentation or post-processing. Extensive experiments demonstrate that SDTrack achieves state-of-the-art performance while maintaining the lowest parameter count and energy consumption across multiple event-based tracking benchmarks, establishing a solid baseline for future research in the field of neuromorphic vision.

As integrated circuit scale grows and design complexity rises, effective

circuit representation helps support logic synthesis, formal verification, and

other automated processes in electronic design automation. And-Inverter Graphs

(AIGs), as a compact and canonical structure, are widely adopted for

representing Boolean logic in these workflows. However, the increasing

complexity and integration density of modern circuits introduce structural

heterogeneity and global logic information loss in AIGs, posing significant

challenges to accurate circuit modeling. To address these issues, we propose

FuncGNN, which integrates hybrid feature aggregation to extract

multi-granularity topological patterns, thereby mitigating structural

heterogeneity and enhancing logic circuit representations. FuncGNN further

introduces gate-aware normalization that adapts to circuit-specific gate

distributions, improving robustness to structural heterogeneity. Finally,

FuncGNN employs multi-layer integration to merge intermediate features across

layers, effectively synthesizing local and global semantic information for

comprehensive logic representations. Experimental results on two logic-level

analysis tasks (i.e., signal probability prediction and truth-table distance

prediction) demonstrate that FuncGNN outperforms existing state-of-the-art

methods, achieving improvements of 2.06% and 18.71%, respectively, while

reducing training time by approximately 50.6% and GPU memory usage by about

32.8%.

Recent advancements in multi-view 3D reconstruction and novel-view synthesis, particularly through Neural Radiance Fields (NeRF) and 3D Gaussian Splatting (3DGS), have greatly enhanced the fidelity and efficiency of 3D content creation. However, inpainting 3D scenes remains a challenging task due to the inherent irregularity of 3D structures and the critical need for maintaining multi-view consistency. In this work, we propose a novel 3D Gaussian inpainting framework that reconstructs complete 3D scenes by leveraging sparse inpainted views. Our framework incorporates an automatic Mask Refinement Process and region-wise Uncertainty-guided Optimization. Specifically, we refine the inpainting mask using a series of operations, including Gaussian scene filtering and back-projection, enabling more accurate localization of occluded regions and realistic boundary restoration. Furthermore, our Uncertainty-guided Fine-grained Optimization strategy, which estimates the importance of each region across multi-view images during training, alleviates multi-view inconsistencies and enhances the fidelity of fine details in the inpainted results. Comprehensive experiments conducted on diverse datasets demonstrate that our approach outperforms existing state-of-the-art methods in both visual quality and view consistency.

There are no more papers matching your filters at the moment.