Infineon Technologies AG

24 Dec 2024

Understanding the benefits of quantum computing for solving combinatorial optimization problems (COPs) remains an open research question. In this work, we extend and analyze algorithms that solve COPs by recursively shrinking them. The algorithms leverage correlations between variables extracted from quantum or classical subroutines to recursively simplify the problem. We compare the performance of the algorithms equipped with correlations from the quantum approximate optimization algorithm (QAOA) as well as the classical linear programming (LP) and semi-definite programming (SDP) relaxations. This allows us to benchmark the utility of QAOA correlations against established classical relaxation algorithms. We apply the recursive algorithm to MaxCut problem instances with up to a hundred vertices at different graph densities. Our results indicate that LP outperforms all other approaches for low-density instances, while SDP excels for high-density problems. Moreover, the shrinking algorithm proves to be a viable alternative to established methods of rounding LP and SDP relaxations. In addition, the recursive shrinking algorithm outperforms its bare counterparts for all three types of correlations, i.e., LP with spanning tree rounding, the Goemans-Williamson algorithm, and conventional QAOA. While the lowest depth QAOA consistently yields worse results than the SDP, our tensor network experiments show that the performance increases significantly for deeper QAOA circuits.
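The recursive shrinking idea can be sketched in a few lines of Python. This is a minimal illustration and not the authors' implementation: the correlation oracle `exact_correlations` is a hypothetical placeholder that brute-forces small instances, standing in for the QAOA, LP, or SDP subroutines named in the abstract, and all function names are assumptions. Edge keys are assumed to be tuples `(i, j)` of integer vertex labels with `i < j`.

```python
from itertools import product

def cut_value(weights, spins):
    """Total weight of edges whose endpoints carry opposite spins (+1 / -1)."""
    return sum(w for (i, j), w in weights.items() if spins[i] != spins[j])

def brute_force(weights, nodes):
    """Return all optimal spin assignments of a small instance."""
    best, best_val = [], float("-inf")
    for bits in product((1, -1), repeat=len(nodes)):
        spins = dict(zip(nodes, bits))
        val = cut_value(weights, spins)
        if val > best_val + 1e-12:
            best, best_val = [spins], val
        elif abs(val - best_val) <= 1e-12:
            best.append(spins)
    return best

def exact_correlations(weights, nodes):
    """Placeholder oracle (assumption): mean of s_i * s_j over all optimal cuts."""
    optima = brute_force(weights, nodes)
    return {(i, j): sum(s[i] * s[j] for s in optima) / len(optima)
            for (i, j) in weights}

def shrink_maxcut(weights, nodes, correlations=exact_correlations, min_size=3):
    """Shrink the graph one vertex at a time, then solve the remainder exactly."""
    weights, nodes = dict(weights), list(nodes)
    relations = []  # entries (removed, kept, sign) meaning s_removed = sign * s_kept
    while len(nodes) > min_size and weights:
        corr = correlations(weights, nodes)
        (i, j), c = max(corr.items(), key=lambda kv: abs(kv[1]))
        sign = 1 if c > 0 else -1
        relations.append((j, i, sign))
        new_weights = {}
        for (a, b), w in weights.items():
            if {a, b} == {i, j}:
                continue  # becomes a constant once s_j is fixed relative to s_i
            if a == j:
                a, w = i, sign * w
            elif b == j:
                b, w = i, sign * w
            key = (min(a, b), max(a, b))
            new_weights[key] = new_weights.get(key, 0.0) + w
        weights = {k: w for k, w in new_weights.items() if w != 0}
        nodes.remove(j)
    spins = brute_force(weights, nodes)[0]
    for removed, kept, sign in reversed(relations):
        spins[removed] = sign * spins[kept]  # undo the shrinking steps
    return spins

# Usage: a 5-cycle with unit weights.
cycle = {(0, 1): 1, (1, 2): 1, (2, 3): 1, (3, 4): 1, (0, 4): 1}
assignment = shrink_maxcut(cycle, range(5))
```

Passing a different `correlations` callable is where an approximate subroutine would plug in. With approximate correlations the result is a heuristic and is no longer guaranteed to be optimal.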

Radar and LiDAR have been widely used in autonomous driving as LiDAR provides rich structure information, and radar demonstrates high robustness under adverse weather. Recent studies highlight the effectiveness of fusing radar and LiDAR point clouds. However, challenges remain due to the modality misalignment and information loss during feature extraction. To address these issues, we propose a 4D radar-LiDAR framework to mutually enhance their representations. Initially, the indicative features from radar are utilized to guide both radar and LiDAR geometric feature learning. Subsequently, to mitigate their sparsity gap, the shape information from LiDAR is used to enrich radar BEV features. Extensive experiments on the View-of-Delft (VoD) dataset demonstrate our approach's superiority over existing methods, achieving the highest mAP of 71.76% across the entire area and 86.36% within the driving corridor. Especially for cars, we improve the AP by 4.17% and 4.20% due to the strong indicative features and symmetric shapes.

Depth estimation remains central to autonomous driving, and radar-camera fusion offers robustness in adverse conditions by providing complementary geometric cues. In this paper, we present XD-RCDepth, a lightweight architecture that reduces the parameters by 29.7% relative to the state-of-the-art lightweight baseline while maintaining comparable accuracy. To preserve performance under compression and enhance interpretability, we introduce two knowledge-distillation strategies: an explainability-aligned distillation that transfers the teacher's saliency structure to the student, and a depth-distribution distillation that recasts depth regression as soft classification over discretized bins. Together, these components reduce the MAE by 7.97% compared with direct training and deliver competitive accuracy with real-time efficiency on the nuScenes and ZJU-4DRadarCam datasets.
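To make "depth regression as soft classification over discretized bins" concrete, the following sketch encodes a depth value as a probability distribution over bin centres and compares two such distributions with a KL divergence, as a distillation loss might. It is an illustration under stated assumptions, not the XD-RCDepth implementation: uniform bins, linear interpolation between neighbouring centres, the KL-based loss, and all function names are hypothetical choices.

```python
import math

def bin_centers(d_min, d_max, n_bins):
    """Uniformly spaced bin centres between d_min and d_max (assumed layout)."""
    step = (d_max - d_min) / (n_bins - 1)
    return [d_min + k * step for k in range(n_bins)]

def soft_label(depth, centers):
    """Spread one depth value over its two neighbouring bin centres."""
    probs = [0.0] * len(centers)
    depth = min(max(depth, centers[0]), centers[-1])  # clamp into the bin range
    for k in range(len(centers) - 1):
        lo, hi = centers[k], centers[k + 1]
        if lo <= depth <= hi:
            t = (depth - lo) / (hi - lo)
            probs[k], probs[k + 1] = 1.0 - t, t
            break
    return probs

def expected_depth(probs, centers):
    """Decode a bin distribution back to a scalar depth (its expectation)."""
    return sum(p * c for p, c in zip(probs, centers))

def softmax(logits, temperature=1.0):
    """Numerically stable softmax; a higher temperature flattens the distribution."""
    m = max(logits)
    exps = [math.exp((z - m) / temperature) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def kl_divergence(p, q, eps=1e-12):
    """KL(p || q), usable as a teacher-to-student distribution-matching loss."""
    return sum(pi * math.log((pi + eps) / (qi + eps))
               for pi, qi in zip(p, q) if pi > 0)

# Usage: nine bins from 0 m to 80 m; teacher and student logits are made up.
centers = bin_centers(0.0, 80.0, 9)
target = soft_label(23.0, centers)
teacher = softmax([0.1, 0.5, 3.0, 2.0, 0.2, 0.0, 0.0, 0.0, 0.0], temperature=2.0)
student = softmax([0.0, 0.4, 2.5, 2.2, 0.3, 0.0, 0.0, 0.0, 0.0], temperature=2.0)
loss = kl_divergence(teacher, student)
```

Decoding with `expected_depth` recovers a continuous depth from the classification output, which is why the bin formulation does not have to sacrifice regression resolution.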

Hierarchical classification is a crucial task in many applications, where objects are organized into multiple levels of categories. However, conventional classification approaches often neglect inherent inter-class relationships at different hierarchy levels, thus missing important supervisory signals. To address this, we propose two novel hierarchical contrastive learning (HMLC) methods. The first leverages a Gaussian Mixture Model (G-HMLC), and the second uses an attention mechanism to capture hierarchy-specific features (A-HMLC), imitating human processing. Our approach explicitly models inter-class relationships and imbalanced class distribution at higher hierarchy levels, enabling fine-grained clustering across all hierarchy levels. On the competitive CIFAR100 and ModelNet40 datasets, our method achieves state-of-the-art performance in linear evaluation, outperforming existing hierarchical contrastive learning methods by 2 percentage points in terms of accuracy. The effectiveness of our approach is backed by both quantitative and qualitative results, highlighting its potential for applications in computer vision and beyond.

03 Jul 2025

Researchers from Infineon Technologies and Rheinland-Pfälzische Technische Universität Kaiserslautern-Landau developed an agentic AI-based Multi-Agent System with Human-in-the-Loop integration for end-to-end hardware Register Transfer Level (RTL) design and formal verification. This approach generated lint-error-free RTL for simpler designs and achieved an average formal property coverage of 97.73% with minimal human intervention (27 minutes per design), significantly outperforming zero-shot LLM methods.

01 Mar 2025

Saarthi, developed by Infineon Technologies, introduces the first fully autonomous AI formal verification engineer capable of verifying Register Transfer Level (RTL) designs end-to-end. The system, leveraging an agentic workflow, achieved an overall efficacy of approximately 40% for complete formal verification processes.

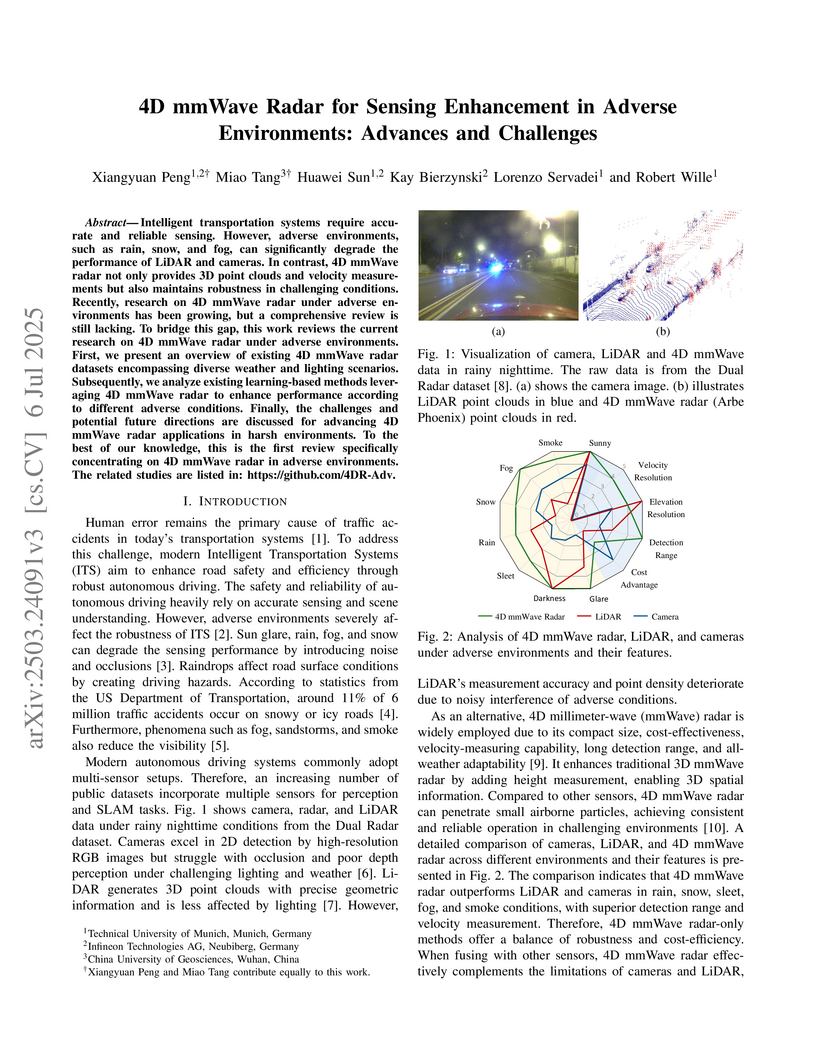

A comprehensive review consolidates advancements and challenges of 4D millimeter-wave radar for enhancing sensing in adverse environments, surveying deep learning datasets, methodologies, and applications. The work establishes radar's critical role as a robust sensor, especially when fused with other modalities, yielding improved perception performance in challenging weather and lighting conditions.

Counterfactual explanations aim to enhance model transparency by showing how inputs can be minimally altered to change predictions. For multivariate time series, existing methods often generate counterfactuals that are invalid, implausible, or unintuitive. We introduce GenFacts, a generative framework based on a class-discriminative variational autoencoder. It integrates contrastive and classification-consistency objectives, prototype-based initialization, and realism-constrained optimization. We evaluate GenFacts on radar gesture data as an industrial use case and handwritten letter trajectories as an intuitive benchmark. Across both datasets, GenFacts outperforms state-of-the-art baselines in plausibility (+18.7%) and achieves the highest interpretability scores in a human study. These results highlight that plausibility and user-centered interpretability, rather than sparsity alone, are key to actionable counterfactuals in time series data.

In recent years, strong expectations have been raised for the possible power of quantum computing for solving difficult optimization problems, based on theoretical, asymptotic worst-case bounds. Can we expect this to have consequences for Linear and Integer Programming when solving instances of practically relevant size, a fundamental goal of Mathematical Programming, Operations Research and Algorithm Engineering? Answering this question faces a crucial impediment: The lack of sufficiently large quantum platforms prevents performing real-world tests for comparison with classical methods.

In this paper, we present a quantum analog for classical runtime analysis when solving real-world instances of important optimization problems. To this end, we measure the expected practical performance of quantum computers by analyzing the expected gate complexity of a quantum algorithm. The lack of practical quantum platforms for experimental comparison is addressed by hybrid benchmarking, in which the algorithm is performed on a classical system, logging the expected cost of the various subroutines that are employed by the quantum versions. In particular, we provide an analysis of quantum methods for Linear Programming, for which recent work has provided asymptotic speedup through quantum subroutines for the Simplex method. We show that a practical quantum advantage for realistic problem sizes would require quantum gate operation times that are considerably below current physical limitations.

Hand gesture recognition using radar often relies on computationally expensive fast Fourier transforms. This paper proposes an alternative approach that bypasses fast Fourier transforms using resonate-and-fire neurons. These neurons directly detect the hand in the time-domain signal, eliminating the need for fast Fourier transforms to retrieve range information. Following detection, a simple Goertzel algorithm is employed to extract five key features, eliminating the need for a second fast Fourier transform. These features are then fed into a recurrent neural network, achieving an accuracy of 98.21% for classifying five gestures. The proposed approach demonstrates competitive performance with reduced complexity compared to traditional methods.
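For readers unfamiliar with it, the Goertzel algorithm evaluates a single DFT bin with one multiply-accumulate recurrence per sample, which is why it can be cheaper than a full FFT when only a few frequencies are needed. The sketch below is the textbook recurrence, not the paper's feature extractor; the function name, the test signal, and the choice of bin are assumptions for illustration.

```python
import math

def goertzel_power(samples, k):
    """Squared magnitude |X[k]|^2 of DFT bin k, computed with the Goertzel recurrence."""
    n = len(samples)
    coeff = 2.0 * math.cos(2.0 * math.pi * k / n)
    s_prev, s_prev2 = 0.0, 0.0
    for x in samples:
        # Second-order resonator tuned to bin k: s[n] = x[n] + coeff*s[n-1] - s[n-2]
        s = x + coeff * s_prev - s_prev2
        s_prev2, s_prev = s_prev, s
    return s_prev ** 2 + s_prev2 ** 2 - coeff * s_prev * s_prev2

# Usage: a unit-amplitude cosine that falls exactly on bin 5 of a 64-point frame.
frame = [math.cos(2.0 * math.pi * 5 * n / 64) for n in range(64)]
on_bin = goertzel_power(frame, 5)   # close to (64 / 2) ** 2
off_bin = goertzel_power(frame, 9)  # close to zero
```

Each bin costs O(N) operations, so extracting a handful of features this way scales linearly with the frame length.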

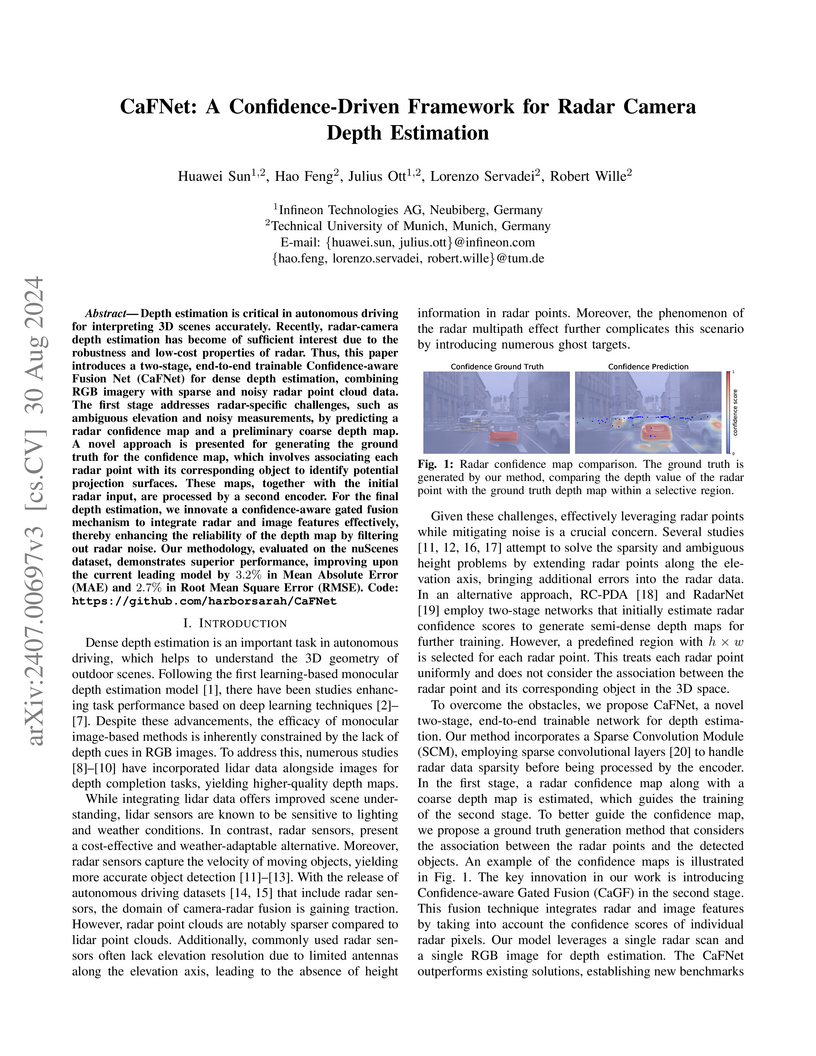

Depth estimation is critical in autonomous driving for interpreting 3D scenes accurately. Recently, radar-camera depth estimation has attracted significant interest due to the robustness and low cost of radar. This paper introduces a two-stage, end-to-end trainable Confidence-aware Fusion Net (CaFNet) for dense depth estimation, combining RGB imagery with sparse and noisy radar point cloud data. The first stage addresses radar-specific challenges, such as ambiguous elevation and noisy measurements, by predicting a radar confidence map and a preliminary coarse depth map. A novel approach is presented for generating the ground truth for the confidence map, which involves associating each radar point with its corresponding object to identify potential projection surfaces. These maps, together with the initial radar input, are processed by a second encoder. For the final depth estimation, we introduce a confidence-aware gated fusion mechanism to integrate radar and image features effectively, thereby enhancing the reliability of the depth map by filtering out radar noise. Our methodology, evaluated on the nuScenes dataset, demonstrates superior performance, improving upon the current leading model by 3.2% in Mean Absolute Error (MAE) and 2.7% in Root Mean Square Error (RMSE).

Code: this https URL

02 Sep 2025

Failure Analysis (FA) is a highly intricate and knowledge-intensive process. The integration of AI components within the computational infrastructure of FA labs has the potential to automate a variety of tasks, including the detection of non-conformities in images, the retrieval of analogous cases from diverse data sources, and the generation of reports from annotated images. However, as the number of deployed AI models increases, the challenge lies in orchestrating these components into cohesive and efficient workflows that seamlessly integrate with the FA process.

This paper investigates the design and implementation of an agentic AI system for semiconductor FA using a Large Language Model (LLM)-based Planning Agent (LPA). The LPA integrates LLMs with advanced planning capabilities and external tool utilization, allowing autonomous processing of complex queries, retrieval of relevant data from external systems, and generation of human-readable responses. The evaluation results demonstrate the agent's operational effectiveness and reliability in supporting FA tasks.

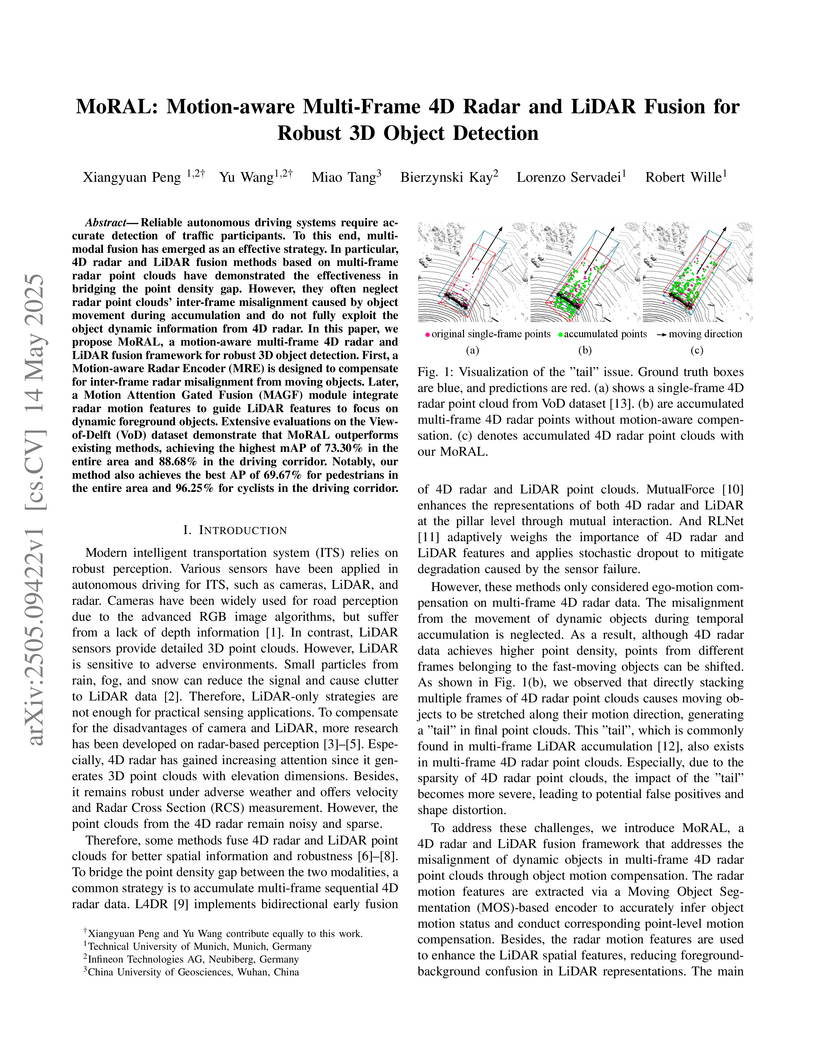

MoRAL presents a framework for motion-aware multi-frame 4D radar and LiDAR fusion, designed to enhance 3D object detection by effectively compensating for object motion in radar data and leveraging this motion information to guide LiDAR feature extraction. The approach achieved an overall mAP of 73.30% on the VoD dataset, a 3.2 percentage point improvement over prior methods, while maintaining real-time inference speeds.

31 Oct 2021

The stretch processing architecture is commonly used for frequency modulated continuous wave (FMCW) radar due to its inexpensive hardware, low sampling rate, and simple architecture. However, the stretch processing architecture cannot achieve the optimal signal-to-noise ratio (SNR) of the matched-filter architecture. In this paper, we propose a method whereby stretch processing can achieve optimal SNR. To this end, we develop a novel processing method that applies a matched filter to the output of the stretch processing. The proposed architecture achieves optimal SNR while operating at a low sampling rate. In addition, the combination of the proposed radar architecture and the SAR technique can generate high-quality images. To evaluate the performance of the proposed architecture, four scenarios are considered and simulated. The simulation results show that the proposed radar generates images of higher quality than stretch processing. The proposed radar can also yield a larger gain compression.

13 Aug 2025

High-Q superconducting resonators fabricated in an industry-scale semiconductor-fabrication facility

Universal quantum computers promise to solve computational problems that are beyond the capabilities of known classical algorithms. To realize such quantum hardware on a superconducting material platform, a vast number of physical qubits has to be manufactured and integrated at high quality and uniformity on a chip. Anticipating the benefits of semiconductor industry processes in terms of process control, uniformity and repeatability, we set out to manufacture superconducting quantum circuits in a semiconductor fabrication facility. In order to set a baseline for the process quality, we report on the fabrication of coplanar waveguide resonators in a 200 mm production line, making use of a two-layer superconducting circuit technology. We demonstrate high material and process quality by cryogenic Q-factor measurements exceeding $10^6$ in the single-photon regime, for microwave resonators made of both Niobium and Tantalum. In addition, we demonstrate the incorporation of superconducting Niobium air bridges in our process, while maintaining the high quality factor of Niobium resonators.

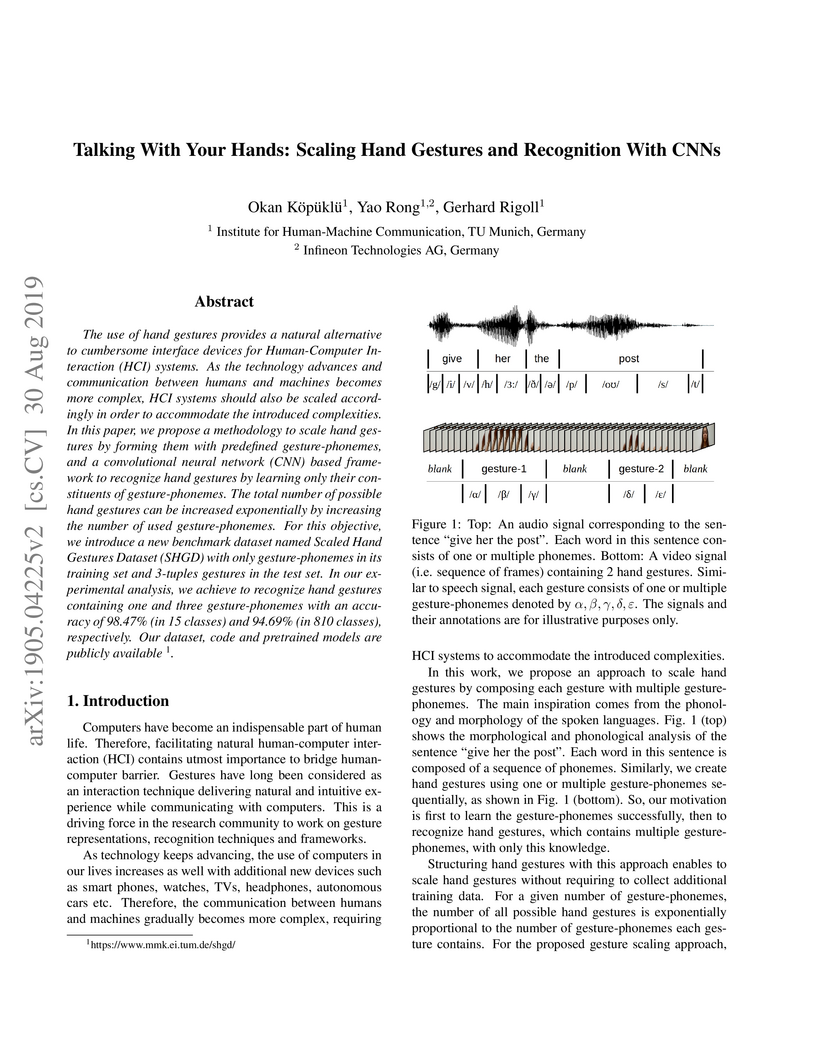

The use of hand gestures provides a natural alternative to cumbersome interface devices for Human-Computer Interaction (HCI) systems. As the technology advances and communication between humans and machines becomes more complex, HCI systems should also be scaled accordingly in order to accommodate the introduced complexities. In this paper, we propose a methodology to scale hand gestures by forming them with predefined gesture-phonemes, and a convolutional neural network (CNN) based framework to recognize hand gestures by learning only their constituent gesture-phonemes. The total number of possible hand gestures can be increased exponentially by increasing the number of used gesture-phonemes. For this objective, we introduce a new benchmark dataset named Scaled Hand Gestures Dataset (SHGD) with only gesture-phonemes in its training set and 3-tuple gestures in the test set. In our experimental analysis, we recognize hand gestures containing one and three gesture-phonemes with an accuracy of 98.47% (in 15 classes) and 94.69% (in 810 classes), respectively. Our dataset, code and pretrained models are publicly available.

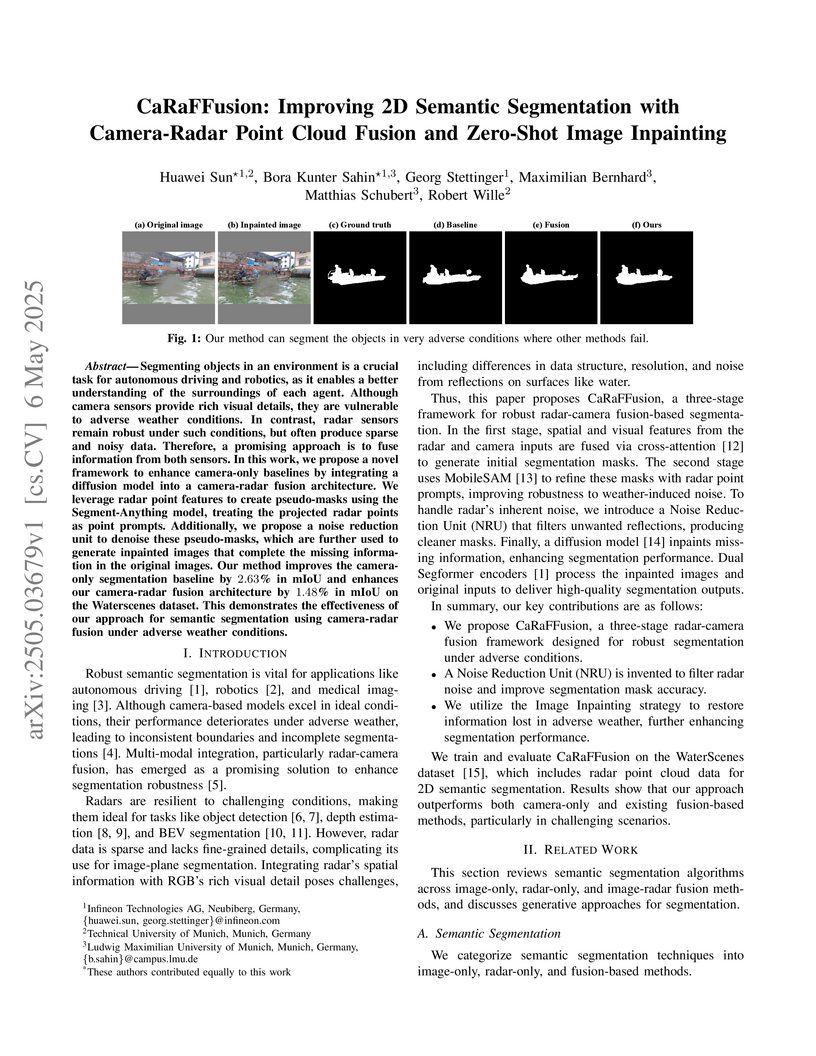

Segmenting objects in an environment is a crucial task for autonomous driving and robotics, as it enables a better understanding of the surroundings of each agent. Although camera sensors provide rich visual details, they are vulnerable to adverse weather conditions. In contrast, radar sensors remain robust under such conditions, but often produce sparse and noisy data. Therefore, a promising approach is to fuse information from both sensors. In this work, we propose a novel framework to enhance camera-only baselines by integrating a diffusion model into a camera-radar fusion architecture. We leverage radar point features to create pseudo-masks using the Segment-Anything model, treating the projected radar points as point prompts. Additionally, we propose a noise reduction unit to denoise these pseudo-masks, which are further used to generate inpainted images that complete the missing information in the original images. Our method improves the camera-only segmentation baseline by 2.63% in mIoU and enhances our camera-radar fusion architecture by 1.48% in mIoU on the Waterscenes dataset. This demonstrates the effectiveness of our approach for semantic segmentation using camera-radar fusion under adverse weather conditions.

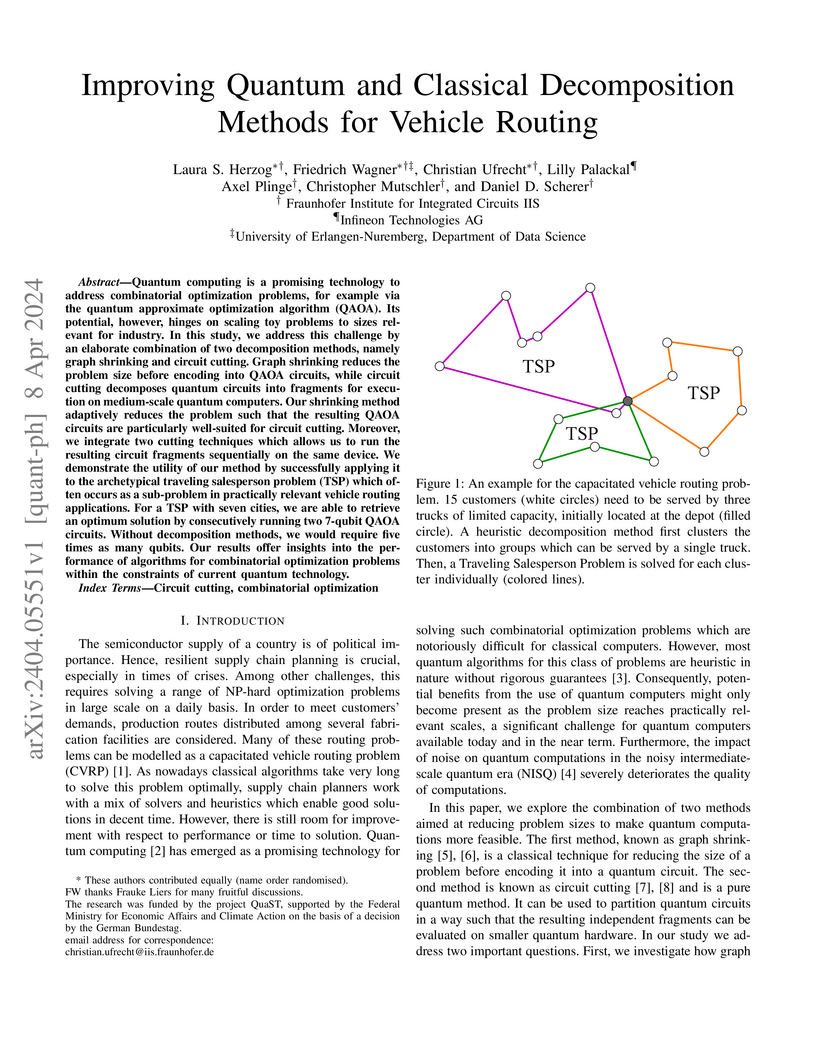

Quantum computing is a promising technology to address combinatorial optimization problems, for example via the quantum approximate optimization algorithm (QAOA). Its potential, however, hinges on scaling toy problems to sizes relevant for industry. In this study, we address this challenge by an elaborate combination of two decomposition methods, namely graph shrinking and circuit cutting. Graph shrinking reduces the problem size before encoding into QAOA circuits, while circuit cutting decomposes quantum circuits into fragments for execution on medium-scale quantum computers. Our shrinking method adaptively reduces the problem such that the resulting QAOA circuits are particularly well-suited for circuit cutting. Moreover, we integrate two cutting techniques, which allows us to run the resulting circuit fragments sequentially on the same device. We demonstrate the utility of our method by successfully applying it to the archetypical traveling salesperson problem (TSP), which often occurs as a sub-problem in practically relevant vehicle routing applications. For a TSP with seven cities, we are able to retrieve an optimum solution by consecutively running two 7-qubit QAOA circuits. Without decomposition methods, we would require five times as many qubits. Our results offer insights into the performance of algorithms for combinatorial optimization problems within the constraints of current quantum technology.

Benchmark datasets are crucial for evaluating approaches to scheduling or dispatching in the semiconductor industry during the development and deployment phases. However, commonly used benchmark datasets like the Minifab or SMT2020 lack the complex details and constraints found in real-world scenarios. To mitigate this shortcoming, we compare open-source simulation models with a real industry dataset to evaluate how optimization methods scale with different levels of complexity. Specifically, we focus on Reinforcement Learning methods, performing optimization based on policy-gradient and Evolution Strategies. Our research provides insights into the effectiveness of these optimization methods and their applicability to realistic semiconductor frontend fab simulations. We show that our proposed Evolution Strategies-based method scales much better than a comparable policy-gradient-based approach. Moreover, we identify the selection and combination of relevant bottleneck tools to be controlled by the agent as crucial for an efficient optimization. For the generalization across different loading scenarios and stochastic tool failure patterns, we achieve advantages when utilizing a diverse training dataset. While the overall approach is computationally expensive, it manages to scale well with the number of CPU cores used for training. For the real industry dataset, we achieve an improvement of up to 4% regarding tardiness and up to 1% regarding throughput. For the less complex open-source models Minifab and SMT2020, we observe double-digit percentage improvement in tardiness and single-digit percentage improvement in throughput by use of Evolution Strategies.

06 Apr 2025

To satisfy automotive safety and security requirements, memory protection mechanisms are an essential component of automotive microcontrollers. In today's available systems, either a fully physical address-based protection is implemented utilizing a memory protection unit, or a memory management unit takes care of memory protection while also mapping virtual addresses to physical addresses. The possibility to develop software using a large virtual address space, which is agnostic to the underlying physical address space, allows for easier software development and integration, especially in the context of virtualization. In this work, we showcase an extension to the current RISC-V SPMP proposal that enables address redirection for selected address regions, while maintaining the fully deterministic behavior of a memory protection unit.
