Utilizing multi-band JWST observations, this research reveals that high-redshift submillimeter galaxies primarily form through secular evolution and internal processes rather than major mergers, uncovering a significant population of central stellar structures that do not conform to established local galaxy classifications.

Researchers at the Chinese Academy of Sciences developed QDepth-VLA, a framework that enhances Vision-Language-Action (VLA) models with robust 3D geometric understanding through quantized depth prediction as auxiliary supervision. This approach improves performance on fine-grained robotic manipulation tasks, achieving up to 29.7% higher success rates on complex simulated tasks and 20.0% gains in real-world pick-and-place scenarios compared to existing baselines.

Developed by Huawei Co., Ltd., CODER introduces a multi-agent framework guided by pre-defined task graphs to automate GitHub issue resolution. The system achieved a 28.33% resolved rate on SWE-bench lite, establishing a new state-of-the-art for the benchmark.

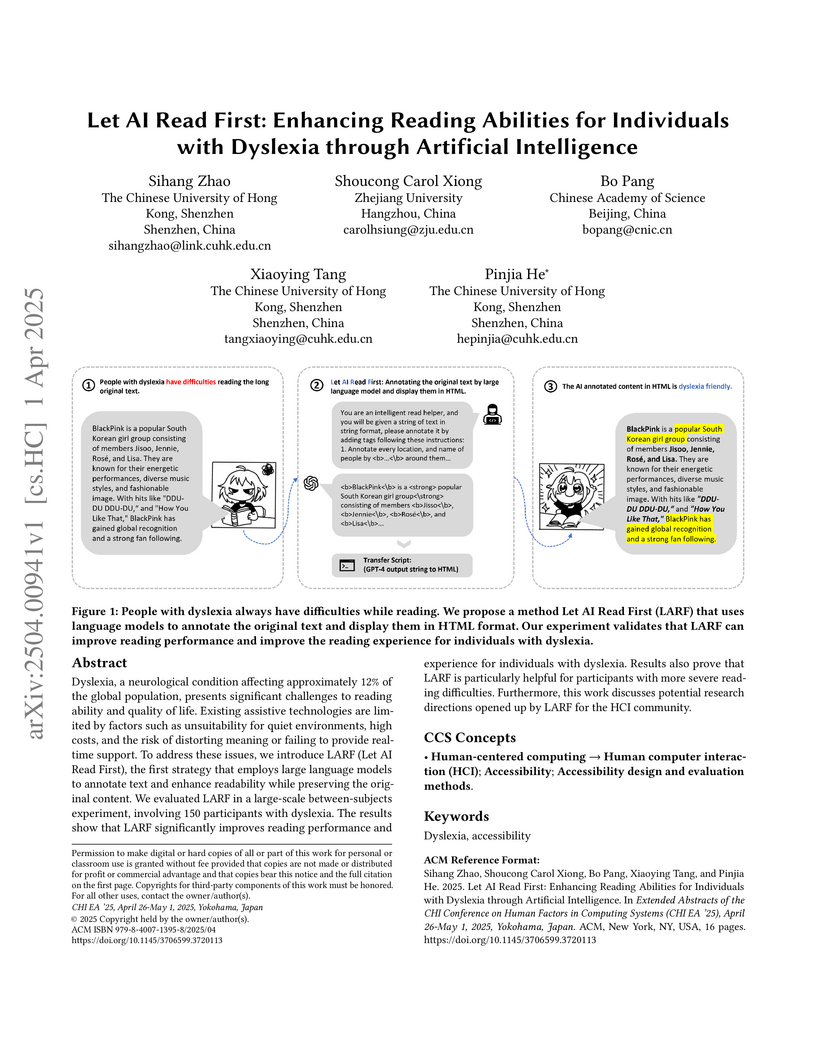

Researchers developed LARF (Let AI Read First), an AI-powered system leveraging GPT-4 to annotate important information in texts with visual cues, improving reading performance and subjective experience for individuals with dyslexia, particularly those with more severe conditions. The system enhanced objective detail retrieval and comprehension while preserving original content.

ToxicSQL introduces a framework for investigating and exploiting SQL injection vulnerabilities in LLM-based Text-to-SQL models through backdoor attacks. The work demonstrates that these models can be trained with low poisoning rates to generate malicious, executable SQL queries while retaining normal performance on benign inputs, thereby exposing critical security flaws in database interaction systems.

Huawei Cloud Co., Ltd. researchers developed PanGu-Coder2, a Code LLM fine-tuned with the RRTF framework, achieving 61.64% pass@1 on HumanEval and outperforming prior open-source models as well as several larger commercial models.

This paper introduces SWE-BENCH-JAVA, a new benchmark designed to evaluate large language models on their ability to resolve real-world GitHub issues in Java repositories. The benchmark comprises 91 manually verified issue instances from popular Java projects, demonstrating that current LLMs achieve relatively low success rates on these complex tasks, with DeepSeek models generally outperforming others.

University of Science and Technology of China

Chinese Academy of Sciences

Peking University

University of Georgia

Zhejiang University

Shandong University

Cornell University

Osaka University

The University of Hong Kong

HKUST

The University of Tokyo

Kyoto University

University of Oxford