National Institute of PhysicsUniversity of the Philippines

State of the art (SOTA) neural text to speech (TTS) models can generate natural-sounding synthetic voices. These models are characterized by large memory footprints and substantial number of operations due to the long-standing focus on speech quality with cloud inference in mind. Neural TTS models are generally not designed to perform standalone speech syntheses on resource-constrained and no Internet access edge devices. In this work, an efficient neural TTS called EfficientSpeech that synthesizes speech on an ARM CPU in real-time is proposed. EfficientSpeech uses a shallow non-autoregressive pyramid-structure transformer forming a U-Network. EfficientSpeech has 266k parameters and consumes 90 MFLOPS only or about 1% of the size and amount of computation in modern compact models such as Mixer-TTS. EfficientSpeech achieves an average mel generation real-time factor of 104.3 on an RPi4. Human evaluation shows only a slight degradation in audio quality as compared to FastSpeech2.

Tianjin University University of Toronto

University of Toronto Carnegie Mellon University

Carnegie Mellon University New York University

New York University National University of Singapore

National University of Singapore Mila - Quebec AI Institute

Mila - Quebec AI Institute Meta

Meta CohereCapital OneSCB 10XSEACrowdAI SingaporeMBZUAIThe University of ManchesterSingapore University of Technology and DesignBeijing Academy of Artificial Intelligence (BAAI)Indian Statistical Institute, Kolkata

CohereCapital OneSCB 10XSEACrowdAI SingaporeMBZUAIThe University of ManchesterSingapore University of Technology and DesignBeijing Academy of Artificial Intelligence (BAAI)Indian Statistical Institute, Kolkata Brown UniversityHanyang UniversityUniversity of BathChulalongkorn UniversityUniversitas Gadjah MadaUniversity of the PhilippinesNara Institute of Science and TechnologyBandung Institute of TechnologyOracleInstitut Teknologi Sepuluh NopemberMacau University of Science and TechnologySeoul National University of Science and TechnologyAuburn UniversitySony Group CorporationVidyasirimedhi Institute of Science and TechnologyPolytechnique MontrealKing Mongkut's University of Technology ThonburiSrinakharinwirot UniversityUniversity of New HavenTon Duc Thang UniversityBrawijaya UniversityUniversitas Pelita HarapanThammasat UniversityAteneo de Manila UniversityUniversitas Islam IndonesiaMonash University IndonesiaIndoNLPUniversity of IndonesiaSingapore PolytechnicMOH Office for Healthcare TransformationAllen AINational University, PhilippinesGraphcoreBinus UniversitySamsung R&D Institute PhilippinesUniversity of Illiinois, Urbana-ChampaignDataxet:SonarFaculty of Medicine Siriraj Hospital, Mahidol UniversityWrocław TechInstitute for Infocomm Research, SingaporeWorks Applications

Brown UniversityHanyang UniversityUniversity of BathChulalongkorn UniversityUniversitas Gadjah MadaUniversity of the PhilippinesNara Institute of Science and TechnologyBandung Institute of TechnologyOracleInstitut Teknologi Sepuluh NopemberMacau University of Science and TechnologySeoul National University of Science and TechnologyAuburn UniversitySony Group CorporationVidyasirimedhi Institute of Science and TechnologyPolytechnique MontrealKing Mongkut's University of Technology ThonburiSrinakharinwirot UniversityUniversity of New HavenTon Duc Thang UniversityBrawijaya UniversityUniversitas Pelita HarapanThammasat UniversityAteneo de Manila UniversityUniversitas Islam IndonesiaMonash University IndonesiaIndoNLPUniversity of IndonesiaSingapore PolytechnicMOH Office for Healthcare TransformationAllen AINational University, PhilippinesGraphcoreBinus UniversitySamsung R&D Institute PhilippinesUniversity of Illiinois, Urbana-ChampaignDataxet:SonarFaculty of Medicine Siriraj Hospital, Mahidol UniversityWrocław TechInstitute for Infocomm Research, SingaporeWorks Applications

University of Toronto

University of Toronto Carnegie Mellon University

Carnegie Mellon University New York University

New York University National University of Singapore

National University of Singapore Mila - Quebec AI Institute

Mila - Quebec AI Institute Meta

Meta CohereCapital OneSCB 10XSEACrowdAI SingaporeMBZUAIThe University of ManchesterSingapore University of Technology and DesignBeijing Academy of Artificial Intelligence (BAAI)Indian Statistical Institute, Kolkata

CohereCapital OneSCB 10XSEACrowdAI SingaporeMBZUAIThe University of ManchesterSingapore University of Technology and DesignBeijing Academy of Artificial Intelligence (BAAI)Indian Statistical Institute, Kolkata Brown UniversityHanyang UniversityUniversity of BathChulalongkorn UniversityUniversitas Gadjah MadaUniversity of the PhilippinesNara Institute of Science and TechnologyBandung Institute of TechnologyOracleInstitut Teknologi Sepuluh NopemberMacau University of Science and TechnologySeoul National University of Science and TechnologyAuburn UniversitySony Group CorporationVidyasirimedhi Institute of Science and TechnologyPolytechnique MontrealKing Mongkut's University of Technology ThonburiSrinakharinwirot UniversityUniversity of New HavenTon Duc Thang UniversityBrawijaya UniversityUniversitas Pelita HarapanThammasat UniversityAteneo de Manila UniversityUniversitas Islam IndonesiaMonash University IndonesiaIndoNLPUniversity of IndonesiaSingapore PolytechnicMOH Office for Healthcare TransformationAllen AINational University, PhilippinesGraphcoreBinus UniversitySamsung R&D Institute PhilippinesUniversity of Illiinois, Urbana-ChampaignDataxet:SonarFaculty of Medicine Siriraj Hospital, Mahidol UniversityWrocław TechInstitute for Infocomm Research, SingaporeWorks Applications

Brown UniversityHanyang UniversityUniversity of BathChulalongkorn UniversityUniversitas Gadjah MadaUniversity of the PhilippinesNara Institute of Science and TechnologyBandung Institute of TechnologyOracleInstitut Teknologi Sepuluh NopemberMacau University of Science and TechnologySeoul National University of Science and TechnologyAuburn UniversitySony Group CorporationVidyasirimedhi Institute of Science and TechnologyPolytechnique MontrealKing Mongkut's University of Technology ThonburiSrinakharinwirot UniversityUniversity of New HavenTon Duc Thang UniversityBrawijaya UniversityUniversitas Pelita HarapanThammasat UniversityAteneo de Manila UniversityUniversitas Islam IndonesiaMonash University IndonesiaIndoNLPUniversity of IndonesiaSingapore PolytechnicMOH Office for Healthcare TransformationAllen AINational University, PhilippinesGraphcoreBinus UniversitySamsung R&D Institute PhilippinesUniversity of Illiinois, Urbana-ChampaignDataxet:SonarFaculty of Medicine Siriraj Hospital, Mahidol UniversityWrocław TechInstitute for Infocomm Research, SingaporeWorks ApplicationsSoutheast Asia (SEA) is a region of extraordinary linguistic and cultural

diversity, yet it remains significantly underrepresented in vision-language

(VL) research. This often results in artificial intelligence (AI) models that

fail to capture SEA cultural nuances. To fill this gap, we present SEA-VL, an

open-source initiative dedicated to developing high-quality, culturally

relevant data for SEA languages. By involving contributors from SEA countries,

SEA-VL aims to ensure better cultural relevance and diversity, fostering

greater inclusivity of underrepresented languages in VL research. Beyond

crowdsourcing, our initiative goes one step further in the exploration of the

automatic collection of culturally relevant images through crawling and image

generation. First, we find that image crawling achieves approximately ~85%

cultural relevance while being more cost- and time-efficient than

crowdsourcing. Second, despite the substantial progress in generative vision

models, synthetic images remain unreliable in accurately reflecting SEA

cultures. The generated images often fail to reflect the nuanced traditions and

cultural contexts of the region. Collectively, we gather 1.28M SEA

culturally-relevant images, more than 50 times larger than other existing

datasets. Through SEA-VL, we aim to bridge the representation gap in SEA,

fostering the development of more inclusive AI systems that authentically

represent diverse cultures across SEA.

This research introduces a framework that integrates Reinforcement Learning (RL) with Large Language Models (LLMs) to train AI agents in Dungeons & Dragons 5th Edition combat. RL agents trained against LLM adversaries converged more quickly and achieved superior performance in complex multi-class scenarios compared to those trained against rule-based opponents.

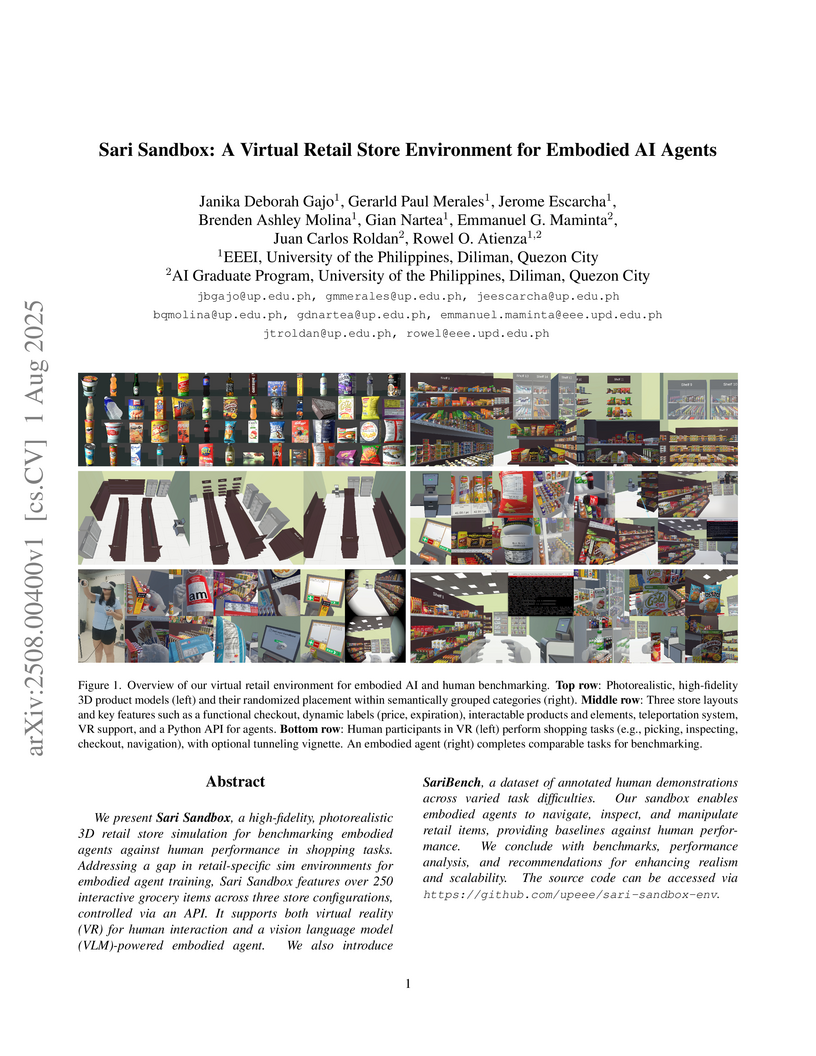

Researchers at the University of the Philippines, Diliman, developed "Sari Sandbox," a high-fidelity virtual retail store environment designed for benchmarking embodied AI agents against human performance in shopping tasks. The platform, featuring 250+ diverse products and a Python API, also includes SariBench, a dataset of human demonstrations, revealing that current VLM-powered agents are significantly less efficient than humans in these tasks.

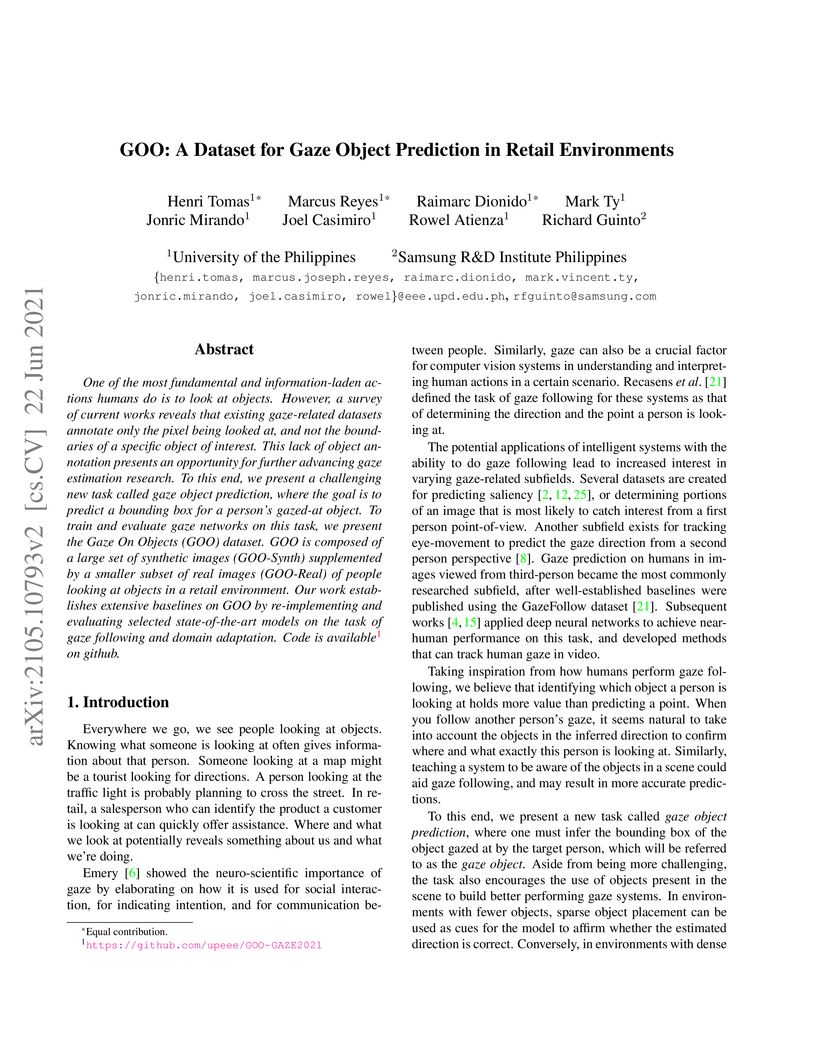

One of the most fundamental and information-laden actions humans do is to look at objects. However, a survey of current works reveals that existing gaze-related datasets annotate only the pixel being looked at, and not the boundaries of a specific object of interest. This lack of object annotation presents an opportunity for further advancing gaze estimation research. To this end, we present a challenging new task called gaze object prediction, where the goal is to predict a bounding box for a person's gazed-at object. To train and evaluate gaze networks on this task, we present the Gaze On Objects (GOO) dataset. GOO is composed of a large set of synthetic images (GOO Synth) supplemented by a smaller subset of real images (GOO-Real) of people looking at objects in a retail environment. Our work establishes extensive baselines on GOO by re-implementing and evaluating selected state-of-the art models on the task of gaze following and domain adaptation. Code is available on github.

21 Mar 2022

The values of the Riemann zeta function at odd positive integers, ζ(2n+1), are shown to admit a representation proportional to the finite-part of the divergent integral ∫0∞t−2n−1cschtdt. Integral representations for ζ(2n+1) are then deduced from the finite-part integral representation. Certain relations between ζ(2n+1) and ζ′(2n+1) are likewise deduced, from which integral representations for ζ′(2n+1) are obtained.

Scene text recognition (STR) enables computers to read text in natural scenes such as object labels, road signs and instructions. STR helps machines perform informed decisions such as what object to pick, which direction to go, and what is the next step of action. In the body of work on STR, the focus has always been on recognition accuracy. There is little emphasis placed on speed and computational efficiency which are equally important especially for energy-constrained mobile machines. In this paper we propose ViTSTR, an STR with a simple single stage model architecture built on a compute and parameter efficient vision transformer (ViT). On a comparable strong baseline method such as TRBA with accuracy of 84.3%, our small ViTSTR achieves a competitive accuracy of 82.6% (84.2% with data augmentation) at 2.4x speed up, using only 43.4% of the number of parameters and 42.2% FLOPS. The tiny version of ViTSTR achieves 80.3% accuracy (82.1% with data augmentation), at 2.5x the speed, requiring only 10.9% of the number of parameters and 11.9% FLOPS. With data augmentation, our base ViTSTR outperforms TRBA at 85.2% accuracy (83.7% without augmentation) at 2.3x the speed but requires 73.2% more parameters and 61.5% more FLOPS. In terms of trade-offs, nearly all ViTSTR configurations are at or near the frontiers to maximize accuracy, speed and computational efficiency all at the same time.

24 Dec 2021

A complex balanced kinetic system is absolutely complex balanced (ACB) if every positive equilibrium is complex balanced. Two results on absolute complex balancing were foundational for modern chemical reaction network theory (CRNT): in 1972, M. Feinberg proved that any deficiency zero complex balanced system is absolutely complex balanced. In the same year, F. Horn and R. Jackson showed that the (full) converse of the result is not true: any complex balanced mass action system, regardless of its deficiency, is absolutely complex balanced. In this paper, we present initial results on the extension of the Horn and Jackson ACB Theorem. In particular, we focus on other kinetic systems with positive deficiency where complex balancing implies absolute complex balancing. While doing so, we found out that complex balanced power law reactant determined kinetic systems (PL-RDK) systems are not ACB. In our search for necessary and sufficient conditions for complex balanced systems to be absolutely complex balanced, we came across the so-called CLP systems (complex balanced systems with a desired "log parametrization" property). It is shown that complex balanced systems with bi-LP property are absolutely complex balanced. For non-CLP systems, we discuss novel methods for finding sufficient conditions for ACB in kinetic systems containing non-CLP systems: decompositions, the Positive Function Factor (PFF) and the Coset Intersection Count (CIC) and their application to poly-PL and Hill-type systems.

Current ophthalmology clinical workflows are plagued by over-referrals, long waits, and complex and heterogeneous medical records. Large language models (LLMs) present a promising solution to automate various procedures such as triaging, preliminary tests like visual acuity assessment, and report summaries. However, LLMs have demonstrated significantly varied performance across different languages in natural language question-answering tasks, potentially exacerbating healthcare disparities in Low and Middle-Income Countries (LMICs). This study introduces the first multilingual ophthalmological question-answering benchmark with manually curated questions parallel across languages, allowing for direct cross-lingual comparisons. Our evaluation of 6 popular LLMs across 7 different languages reveals substantial bias across different languages, highlighting risks for clinical deployment of LLMs in LMICs. Existing debiasing methods such as Translation Chain-of-Thought or Retrieval-augmented generation (RAG) by themselves fall short of closing this performance gap, often failing to improve performance across all languages and lacking specificity for the medical domain. To address this issue, We propose CLARA (Cross-Lingual Reflective Agentic system), a novel inference time de-biasing method leveraging retrieval augmented generation and self-verification. Our approach not only improves performance across all languages but also significantly reduces the multilingual bias gap, facilitating equitable LLM application across the globe.

Discrete (DTCs) and continuous time crystals (CTCs) are novel dynamical many-body states, that are characterized by robust self-sustained oscillations, emerging via spontaneous breaking of discrete or continuous time translation symmetry. DTCs are periodically driven systems that oscillate with a subharmonic of the external drive, while CTCs are continuously driven and oscillate with a frequency intrinsic to the system. Here, we explore a phase transition from a continuous time crystal to a discrete time crystal. A CTC with a characteristic oscillation frequency ωCTC is prepared in a continuously pumped atom-cavity system. Modulating the pump intensity of the CTC with a frequency ωdr close to 2ωCTC leads to robust locking of ωCTC to ωdr/2, and hence a DTC arises. This phase transition in a quantum many-body system is related to subharmonic injection locking of non-linear mechanical and electronic oscillators or lasers.

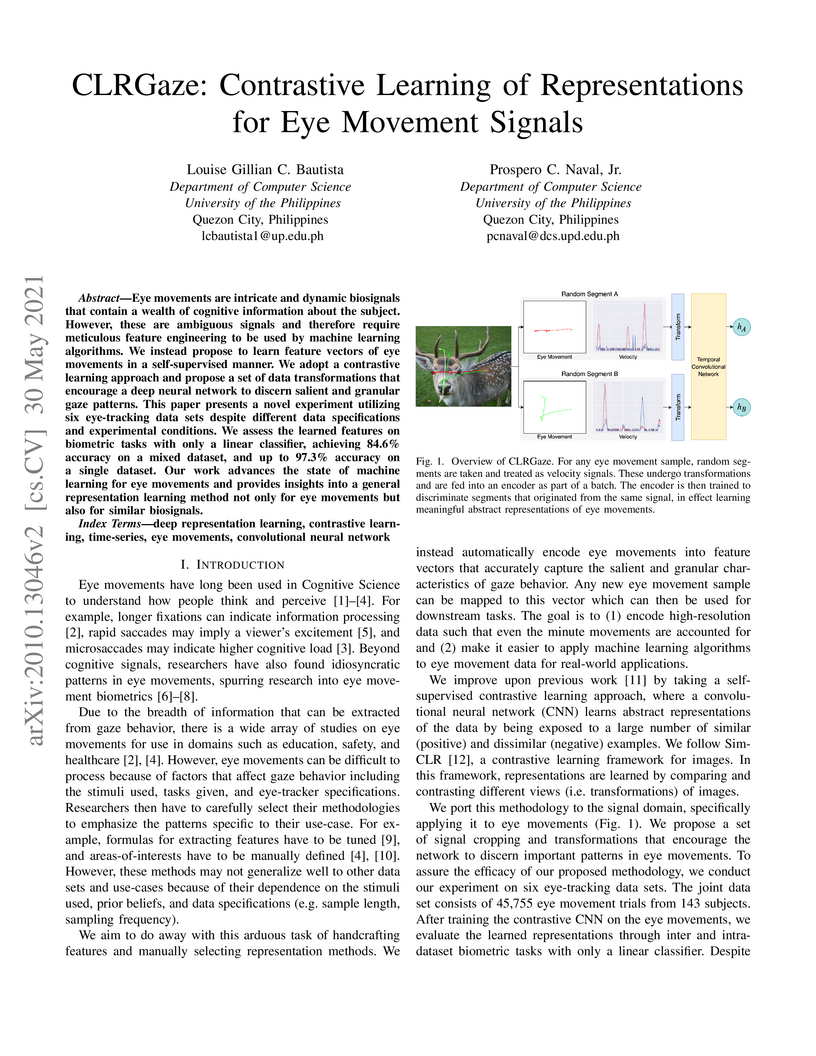

Eye movements are intricate and dynamic biosignals that contain a wealth of cognitive information about the subject. However, these are ambiguous signals and therefore require meticulous feature engineering to be used by machine learning algorithms. We instead propose to learn feature vectors of eye movements in a self-supervised manner. We adopt a contrastive learning approach and propose a set of data transformations that encourage a deep neural network to discern salient and granular gaze patterns. This paper presents a novel experiment utilizing six eye-tracking data sets despite different data specifications and experimental conditions. We assess the learned features on biometric tasks with only a linear classifier, achieving 84.6% accuracy on a mixed dataset, and up to 97.3% accuracy on a single dataset. Our work advances the state of machine learning for eye movements and provides insights into a general representation learning method not only for eye movements but also for similar biosignals.

26 Sep 2024

We investigate a class of entanglement witnesses where each witness is formulated as a difference of two product observables. These observables are decomposable into positive semidefinite local operators that obey a partial ordering rule defined over all their possible expectation values. We provide a framework to construct these entanglement witnesses along with some examples. We also discuss methods to improve them both linearly and nonlinearly.

The rapid adoption of generative artificial intelligence (GenAI) in research presents both opportunities and ethical challenges that should be carefully navigated. Although GenAI tools can enhance research efficiency through automation of tasks such as literature review and data analysis, their use raises concerns about aspects such as data accuracy, privacy, bias, and research integrity. This paper develops the ETHICAL framework, which is a practical guide for responsible GenAI use in research. Employing a constructivist case study examining multiple GenAI tools in real research contexts, the framework consists of seven key principles: Examine policies and guidelines, Think about social impacts, Harness understanding of the technology, Indicate use, Critically engage with outputs, Access secure versions, and Look at user agreements. Applying these principles will enable researchers to uphold research integrity while leveraging GenAI benefits. The framework addresses a critical gap between awareness of ethical issues and practical action steps, providing researchers with concrete guidance for ethical GenAI integration. This work has implications for research practice, institutional policy development, and the broader academic community while adapting to an AI-enhanced research landscape. The ETHICAL framework can serve as a foundation for developing AI literacy in academic settings and promoting responsible innovation in research methodologies.

21 Mar 2022

The values of the Riemann zeta function at odd positive integers, ζ(2n+1), are shown to admit a representation proportional to the finite-part of the divergent integral ∫0∞t−2n−1cschtdt. Integral representations for ζ(2n+1) are then deduced from the finite-part integral representation. Certain relations between ζ(2n+1) and ζ′(2n+1) are likewise deduced, from which integral representations for ζ′(2n+1) are obtained.

14 Aug 2019

The mathematics of shuffling a deck of 2n cards with two "perfect shuffles"

was brought into clarity by Diaconis, Graham and Kantor. Here we consider a

generalisation of this problem, with a so-called "many handed dealer" shuffling

kn cards by cutting into k piles with n cards in each pile and using k!

shuffles. A conjecture of Medvedoff and Morrison suggests that all possible

permutations of the deck of cards are achieved, so long as k=4 and n is

not a power of k. We confirm this conjecture for three doubly infinite

families of integers: all (k,n) with k>n; all $(k, n)\in \{ (\ell^e, \ell^f

)\mid \ell \geqslant 2, \ell^e>4, f \ \mbox{not a multiple of}\ e\}$; and all

(k,n) with k=2e⩾4 and n not a power of 2. We open up a more

general study of shuffle groups, which admit an arbitrary subgroup of shuffles.

Accurate ocean modeling and coastal hazard prediction depend on

high-resolution bathymetric data; yet, current worldwide datasets are too

coarse for exact numerical simulations. While recent deep learning advances

have improved earth observation data resolution, existing methods struggle with

the unique challenges of producing detailed ocean floor maps, especially in

maintaining physical structure consistency and quantifying uncertainties. This

work presents a novel uncertainty-aware mechanism using spatial blocks to

efficiently capture local bathymetric complexity based on block-based conformal

prediction. Using the Vector Quantized Variational Autoencoder (VQ-VAE)

architecture, the integration of this uncertainty quantification framework

yields spatially adaptive confidence estimates while preserving topographical

features via discrete latent representations. With smaller uncertainty widths

in well-characterized areas and appropriately larger bounds in areas of complex

seafloor structures, the block-based design adapts uncertainty estimates to

local bathymetric complexity. Compared to conventional techniques, experimental

results over several ocean regions show notable increases in both

reconstruction quality and uncertainty estimation reliability. This framework

increases the reliability of bathymetric reconstructions by preserving

structural integrity while offering spatially adaptive uncertainty estimates,

so opening the path for more solid climate modeling and coastal hazard

assessment.

04 Sep 2014

The lattice gauge theory for curved spacetime is formulated. A discretized

action is derived for both gluon and quark fields which reduces to the

generally covariant form in the continuum limit. Using the Wilson action, it is

shown analytically that for a general curved spacetime background, two

propagating gluons are always color-confined. The fermion-doubling problem is

discussed in the specific case of Friedman-Robertson-Walker metric. Lastly, we

discussed possible future numerical implementation of lattice QCD in curved

spacetime.

06 May 2025

Aims: The enigma of the missing baryons poses a prominent and unresolved

problem in astronomy. Dispersion measures (DM) serve as a distinctive

observable of fast radio bursts (FRBs). They quantify the electron column

density along each line of sight and reveal the missing baryons that are

described in the Macquart (DM-z) relation. The scatter of this relation is

anticipated to be caused by the variation in the cosmic structure. This is not

yet statistically confirmed, however. We present statistical evidence that the

cosmological baryons fluctuate. Methods: We measured the foreground galaxy

number densities around 14 and 13 localized FRBs with the WISE-PS1-STRM and

WISE x SCOS photometric redshift galaxy catalog, respectively. The foreground

galaxy number densities were determined through a comparison with measured

random apertures with a radius of 1 Mpc. Results: We found a positive

correlation between the excess of DM that is contributed by the medium outside

galaxies (DM_cosmic) and the foreground galaxy number density. The correlation

is strong and statistically significant, with median Pearson coefficients of

0.6 and 0.6 and median p-values of 0.012 and 0.032 for the galaxy catalogs,

respectively, as calculated with Monte Carlo simulations. Conclusions: Our

findings indicate that the baryonic matter density outside galaxies exceeds its

cosmic average along the line of sight to regions with an excess galaxy

density, but there are fewer baryons along the line of sight to low-density

regions. This is statistical evidence that the ionized baryons fluctuate

cosmologically on a characteristic scale of ≲6 Mpc.

The Rényi entanglement entropy is calculated exactly for mode-partitioned isolated systems such as the two-mode squeezed state and the multi-mode Silbey-Harris polaron ansatz state. Effective thermodynamic descriptions of the correlated partitions are constructed to present quantum information theory concepts in the language of thermodynamics. Boltzmann weights are obtained from the entanglement spectrum by deriving the exact relationship between an effective temperature and the physical entanglement parameters. The partition function of the resulting effective thermal theory can be obtained directly from the single-copy entanglement.

Speech is converted to digital signals using speech coding for efficient transmission. However, this often lowers the quality and bandwidth of speech. This paper explores the application of convolutional neural networks for Artificial Bandwidth Expansion (ABE) and speech enhancement on coded speech, particularly Adaptive Multi-Rate (AMR) used in 2G cellular phone calls. In this paper, we introduce AMRConvNet: a convolutional neural network that performs ABE and speech enhancement on speech encoded with AMR. The model operates directly on the time-domain for both input and output speech but optimizes using combined time-domain reconstruction loss and frequency-domain perceptual loss. AMRConvNet resulted in an average improvement of 0.425 Mean Opinion Score - Listening Quality Objective (MOS-LQO) points for AMR bitrate of 4.75k, and 0.073 MOS-LQO points for AMR bitrate of 12.2k. AMRConvNet also showed robustness in AMR bitrate inputs. Finally, an ablation test showed that our combined time-domain and frequency-domain loss leads to slightly higher MOS-LQO and faster training convergence than using either loss alone.

There are no more papers matching your filters at the moment.