Oak Ridge National Lab

This research advocates for integrating hardware considerations into transformer model design, demonstrating that aligning model dimensions with GPU characteristics significantly improves efficiency. The authors show that minor architectural adjustments, guided by principles like Tensor Core requirements and wave quantization, can lead to up to 39% faster training for transformer models while preserving accuracy.

This research presents Open-World Scene Graph Generation (OwSGG), a training-free framework that utilizes pretrained Vision-Language Models to generate scene graphs in open-world settings. The approach demonstrates strong performance in open-vocabulary and zero-shot generalization scenarios, often achieving competitive recall compared to supervised baselines without task-specific training.

23 Sep 2025

Modern scientific discovery increasingly relies on workflows that process data across the Edge, Cloud, and High Performance Computing (HPC) continuum. Comprehensive and in-depth analyses of these data are critical for hypothesis validation, anomaly detection, reproducibility, and impactful findings. Although workflow provenance techniques support such analyses, at large scale, the provenance data become complex and difficult to analyze. Existing systems depend on custom scripts, structured queries, or static dashboards, limiting data interaction. In this work, we introduce an evaluation methodology, reference architecture, and open-source implementation that leverages interactive Large Language Model (LLM) agents for runtime data analysis. Our approach uses a lightweight, metadata-driven design that translates natural language into structured provenance queries. Evaluations across LLaMA, GPT, Gemini, and Claude, covering diverse query classes and a real-world chemistry workflow, show that modular design, prompt tuning, and Retrieval-Augmented Generation (RAG) enable accurate and insightful LLM agent responses beyond recorded provenance.

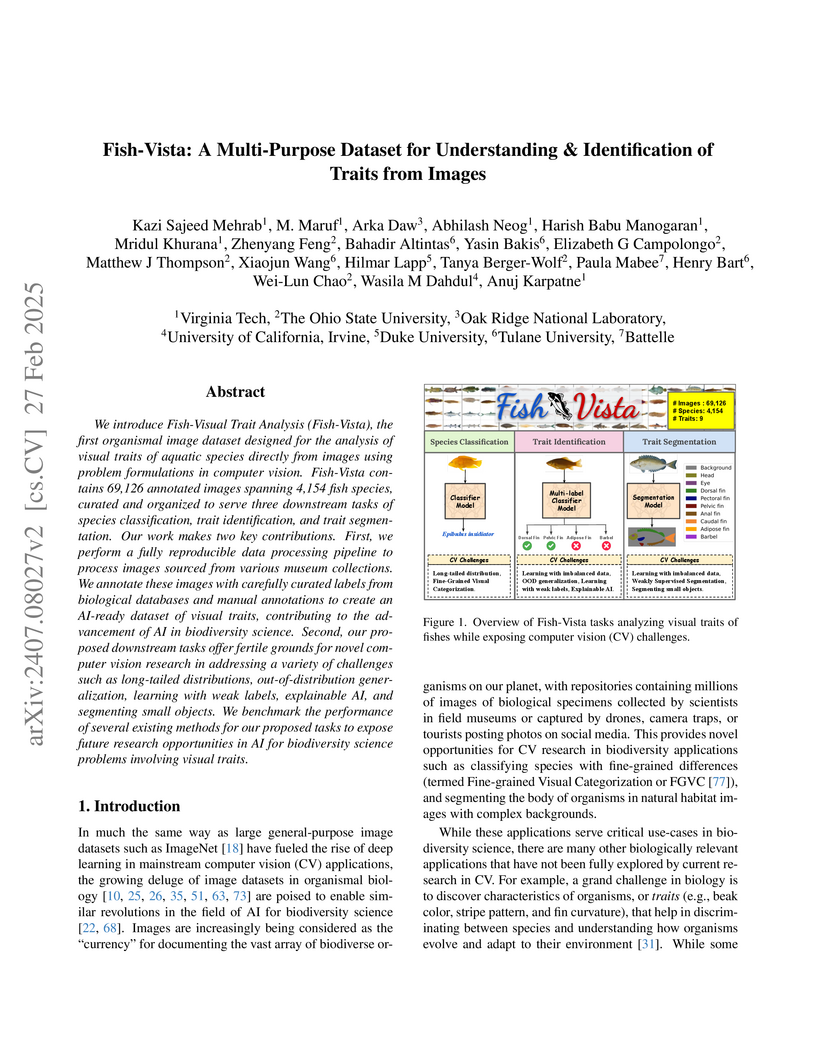

We introduce Fish-Visual Trait Analysis (Fish-Vista), the first organismal

image dataset designed for the analysis of visual traits of aquatic species

directly from images using problem formulations in computer vision. Fish-Vista

contains 69,126 annotated images spanning 4,154 fish species, curated and

organized to serve three downstream tasks of species classification, trait

identification, and trait segmentation. Our work makes two key contributions.

First, we perform a fully reproducible data processing pipeline to process

images sourced from various museum collections. We annotate these images with

carefully curated labels from biological databases and manual annotations to

create an AI-ready dataset of visual traits, contributing to the advancement of

AI in biodiversity science. Second, our proposed downstream tasks offer fertile

grounds for novel computer vision research in addressing a variety of

challenges such as long-tailed distributions, out-of-distribution

generalization, learning with weak labels, explainable AI, and segmenting small

objects. We benchmark the performance of several existing methods for our

proposed tasks to expose future research opportunities in AI for biodiversity

science problems involving visual traits.

Large Language Models (LLMs) and other foundation models are increasingly used as the core of AI agents. In agentic workflows, these agents plan tasks, interact with humans and peers, and influence scientific outcomes across federated and heterogeneous environments. However, agents can hallucinate or reason incorrectly, propagating errors when one agent's output becomes another's input. Thus, assuring that agents' actions are transparent, traceable, reproducible, and reliable is critical to assess hallucination risks and mitigate their workflow impacts. While provenance techniques have long supported these principles, existing methods fail to capture and relate agent-centric metadata such as prompts, responses, and decisions with the broader workflow context and downstream outcomes. In this paper, we introduce PROV-AGENT, a provenance model that extends W3C PROV and leverages the Model Context Protocol (MCP) and data observability to integrate agent interactions into end-to-end workflow provenance. Our contributions include: (1) a provenance model tailored for agentic workflows, (2) a near real-time, open-source system for capturing agentic provenance, and (3) a cross-facility evaluation spanning edge, cloud, and HPC environments, demonstrating support for critical provenance queries and agent reliability analysis.

In subsurface imaging, learning the mapping from velocity maps to seismic

waveforms (forward problem) and waveforms to velocity (inverse problem) is

important for several applications. While traditional techniques for solving

forward and inverse problems are computationally prohibitive, there is a

growing interest in leveraging recent advances in deep learning to learn the

mapping between velocity maps and seismic waveform images directly from data.

Despite the variety of architectures explored in previous works, several open

questions still remain unanswered such as the effect of latent space sizes, the

importance of manifold learning, the complexity of translation models, and the

value of jointly solving forward and inverse problems. We propose a unified

framework to systematically characterize prior research in this area termed the

Generalized Forward-Inverse (GFI) framework, building on the assumption of

manifolds and latent space translations. We show that GFI encompasses previous

works in deep learning for subsurface imaging, which can be viewed as specific

instantiations of GFI. We also propose two new model architectures within the

framework of GFI: Latent U-Net and Invertible X-Net, leveraging the power of

U-Nets for domain translation and the ability of IU-Nets to simultaneously

learn forward and inverse translations, respectively. We show that our proposed

models achieve state-of-the-art (SOTA) performance for forward and inverse

problems on a wide range of synthetic datasets, and also investigate their

zero-shot effectiveness on two real-world-like datasets. Our code is available

at this https URL

Carnegie Mellon University

Carnegie Mellon University New York University

New York University University of Oxford

University of Oxford Cornell University

Cornell University NVIDIAIntel LabsSanta Fe InstituteSalesforce ResearchIntelUniversity of TübingenAlan Turing InstituteLawrence Berkeley National LabOak Ridge National LabInstitute for Simulation IntelligenceSLAC National Accelerator LabCharles River AnalyticsNeuralinkJPM AI ResearchU.S. Bank AI Innovation

NVIDIAIntel LabsSanta Fe InstituteSalesforce ResearchIntelUniversity of TübingenAlan Turing InstituteLawrence Berkeley National LabOak Ridge National LabInstitute for Simulation IntelligenceSLAC National Accelerator LabCharles River AnalyticsNeuralinkJPM AI ResearchU.S. Bank AI InnovationThe paper introduces "Simulation Intelligence (SI)" as a new interdisciplinary field that merges scientific computing, scientific simulation, and artificial intelligence. It proposes "Nine Motifs of Simulation Intelligence" as a roadmap for developing advanced methods, demonstrating capabilities such as accelerating complex simulations by orders of magnitude and enabling new forms of scientific inquiry like automated causal discovery.

02 Aug 2025

Antiferroelectricity is a material property characterized by alternating electric dipoles spontaneously ordered in antiparallel directions. Antiferroelectrics are promising for energy storage, solid-state cooling, and memory technologies; however, these materials are scarce, and their scalability remains largely unexplored. In this work, we demonstrate that single-crystalline hafnia, a lead-free CMOS-compatible material, exhibits antiferroelectricity under compressive-strain conditions. We observe antiparallel sublattice polarization and stable double-hysteresis in single-crystalline (111)-oriented epitaxial La-doped hafnia films grown on yttrium-stabilized zirconia and show that the antipolar orthorhombic phase of hafnia adheres to the Kittel model of antiferroelectricity. Notably, compressive strain strengthens the antiferroelectric order in thinner La-doped hafnia films, achieving an unprecedented 850 C ordering temperature in the two-dimensional limit, highlighting hafnia's potential for advanced antiferroelectric devices.

Error-bounded lossy compression is one of the most efficient solutions to reduce the volume of scientific data. For lossy compression, progressive decompression and random-access decompression are critical features that enable on-demand data access and flexible analysis workflows. However, these features can severely degrade compression quality and speed. To address these limitations, we propose a novel streaming compression framework that supports both progressive decompression and random-access decompression while maintaining high compression quality and speed. Our contributions are three-fold: (1) we design the first compression framework that simultaneously enables both progressive decompression and random-access decompression; (2) we introduce a hierarchical partitioning strategy to enable both streaming features, along with a hierarchical prediction mechanism that mitigates the impact of partitioning and achieves high compression quality -- even comparable to state-of-the-art (SOTA) non-streaming compressor SZ3; and (3) our framework delivers high compression and decompression speed, up to 6.7× faster than SZ3.

Retrieval-augmented generation (RAG) has emerged as a promising paradigm for improving factual accuracy in large language models (LLMs). We introduce a benchmark designed to evaluate RAG pipelines as a whole, evaluating a pipeline's ability to ingest, retrieve, and reason about several modalities of information, differentiating it from existing benchmarks that focus on particular aspects such as retrieval. We present (1) a small, human-created dataset of 93 questions designed to evaluate a pipeline's ability to ingest textual data, tables, images, and data spread across these modalities in one or more documents; (2) a phrase-level recall metric for correctness; (3) a nearest-neighbor embedding classifier to identify potential pipeline hallucinations; (4) a comparative evaluation of 2 pipelines built with open-source retrieval mechanisms and 4 closed-source foundation models; and (5) a third-party human evaluation of the alignment of our correctness and hallucination metrics. We find that closed-source pipelines significantly outperform open-source pipelines in both correctness and hallucination metrics, with wider performance gaps in questions relying on multimodal and cross-document information. Human evaluation of our metrics showed average agreement of 4.62 for correctness and 4.53 for hallucination detection on a 1-5 Likert scale (5 indicating "strongly agree").

20 Nov 2024

The standardization of an interface for dense linear algebra operations in the BLAS standard has enabled interoperability between different linear algebra libraries, thereby boosting the success of scientific computing, in particular in scientific HPC. Despite numerous efforts in the past, the community has not yet agreed on a standardization for sparse linear algebra operations due to numerous reasons. One is the fact that sparse linear algebra objects allow for many different storage formats, and different hardware may favor different storage formats. This makes the definition of a FORTRAN-style all-circumventing interface extremely challenging. Another reason is that opposed to dense linear algebra functionality, in sparse linear algebra, the size of the sparse data structure for the operation result is not always known prior to the information. Furthermore, as opposed to the standardization effort for dense linear algebra, we are late in the technology readiness cycle, and many production-ready software libraries using sparse linear algebra routines have implemented and committed to their own sparse BLAS interface. At the same time, there exists a demand for standardization that would improve interoperability, and sustainability, and allow for easier integration of building blocks. In an inclusive, cross-institutional effort involving numerous academic institutions, US National Labs, and industry, we spent two years designing a hardware-portable interface for basic sparse linear algebra functionality that serves the user needs and is compatible with the different interfaces currently used by different vendors. In this paper, we present a C++ API for sparse linear algebra functionality, discuss the design choices, and detail how software developers preserve a lot of freedom in terms of how to implement functionality behind this API.

Ensuring safety in high-speed autonomous vehicles requires rapid control loops and tightly bounded delays from perception to actuation. Many open-source autonomy systems rely on ROS 2 middleware; when multiple sensor and control nodes share one compute unit, ROS 2 and its DDS transports add significant (de)serialization, copying, and discovery overheads, shrinking the available time budget. We present Sensor-in-Memory (SIM), a shared-memory transport designed for intra-host pipelines in autonomous vehicles. SIM keeps sensor data in native memory layouts (e.g., cv::Mat, PCL), uses lock-free bounded double buffers that overwrite old data to prioritize freshness, and integrates into ROS 2 nodes with four lines of code. Unlike traditional middleware, SIM operates beside ROS 2 and is optimized for applications where data freshness and minimal latency outweigh guaranteed completeness. SIM provides sequence numbers, a writer heartbeat, and optional checksums to ensure ordering, liveness, and basic integrity. On an NVIDIA Jetson Orin Nano, SIM reduces data-transport latency by up to 98% compared to ROS 2 zero-copy transports such as FastRTPS and Zenoh, lowers mean latency by about 95%, and narrows 95th/99th-percentile tail latencies by around 96%. In tests on a production-ready Level 4 vehicle running this http URL, SIM increased localization frequency from 7.5 Hz to 9.5 Hz. Applied across all latency-critical modules, SIM cut average perception-to-decision latency from 521.91 ms to 290.26 ms, reducing emergency braking distance at 40 mph (64 km/h) on dry concrete by 13.6 ft (4.14 m).

This work describes the design, implementation and performance analysis of a

distributed two-tiered storage software. The first tier functions as a

distributed software cache implemented using solid-state devices~(NVMes) and

the second tier consists of multiple hard disks~(HDDs). We describe an online

learning algorithm that manages data movement between the tiers. The software

is hybrid, i.e. both distributed and multi-threaded. The end-to-end performance

model of the two-tier system was developed using queuing networks and

behavioral models of storage devices. We identified significant parameters that

affect the performance of storage devices and created behavioral models for

each device. The performance of the software was evaluated on a many-core

cluster using non-trivial read/write workloads. The paper provides examples to

illustrate the use of these models.

There has recently been much work on the "wide limit" of neural networks, where Bayesian neural networks (BNNs) are shown to converge to a Gaussian process (GP) as all hidden layers are sent to infinite width. However, these results do not apply to architectures that require one or more of the hidden layers to remain narrow. In this paper, we consider the wide limit of BNNs where some hidden layers, called "bottlenecks", are held at finite width. The result is a composition of GPs that we term a "bottleneck neural network Gaussian process" (bottleneck NNGP). Although intuitive, the subtlety of the proof is in showing that the wide limit of a composition of networks is in fact the composition of the limiting GPs. We also analyze theoretically a single-bottleneck NNGP, finding that the bottleneck induces dependence between the outputs of a multi-output network that persists through extreme post-bottleneck depths, and prevents the kernel of the network from losing discriminative power at extreme post-bottleneck depths.

Industrial cone-beam X-ray computed tomography (CT) scans of additively

manufactured components produce a 3D reconstruction from projection

measurements acquired at multiple predetermined rotation angles of the

component about a single axis. Typically, a large number of projections are

required to achieve a high-quality reconstruction, a process that can span

several hours or days depending on the part size, material composition, and

desired resolution. This paper introduces a novel real-time system designed to

optimize the scanning process by intelligently selecting the best next angle

based on the object's geometry and computer-aided design (CAD) model. This

selection process strategically balances the need for measurements aligned with

the part's long edges against the need for maintaining a diverse set of overall

measurements. Through simulations, we demonstrate that our algorithm

significantly reduces the number of projections needed to achieve high-quality

reconstructions compared to traditional methods.

Microscopy imaging techniques are instrumental for characterization and

analysis of biological structures. As these techniques typically render 3D

visualization of cells by stacking 2D projections, issues such as out-of-plane

excitation and low resolution in the z-axis may pose challenges (even for

human experts) to detect individual cells in 3D volumes as these

non-overlapping cells may appear as overlapping. In this work, we introduce a

comprehensive method for accurate 3D instance segmentation of cells in the

brain tissue. The proposed method combines the 2D YOLO detection method with a

multi-view fusion algorithm to construct a 3D localization of the cells. Next,

the 3D bounding boxes along with the data volume are input to a 3D U-Net

network that is designed to segment the primary cell in each 3D bounding box,

and in turn, to carry out instance segmentation of cells in the entire volume.

The promising performance of the proposed method is shown in comparison with

some current deep learning-based 3D instance segmentation methods.

The increasing adoption of Deep Neural Networks (DNNs) has led to their

application in many challenging scientific visualization tasks. While advanced

DNNs offer impressive generalization capabilities, understanding factors such

as model prediction quality, robustness, and uncertainty is crucial. These

insights can enable domain scientists to make informed decisions about their

data. However, DNNs inherently lack ability to estimate prediction uncertainty,

necessitating new research to construct robust uncertainty-aware visualization

techniques tailored for various visualization tasks. In this work, we propose

uncertainty-aware implicit neural representations to model scalar field data

sets effectively and comprehensively study the efficacy and benefits of

estimated uncertainty information for volume visualization tasks. We evaluate

the effectiveness of two principled deep uncertainty estimation techniques: (1)

Deep Ensemble and (2) Monte Carlo Dropout (MCDropout). These techniques enable

uncertainty-informed volume visualization in scalar field data sets. Our

extensive exploration across multiple data sets demonstrates that

uncertainty-aware models produce informative volume visualization results.

Moreover, integrating prediction uncertainty enhances the trustworthiness of

our DNN model, making it suitable for robustly analyzing and visualizing

real-world scientific volumetric data sets.

28 May 2019

In this effort we propose a novel approach for reconstructing multivariate

functions from training data, by identifying both a suitable network

architecture and an initialization using polynomial-based approximations.

Training deep neural networks using gradient descent can be interpreted as

moving the set of network parameters along the loss landscape in order to

minimize the loss functional. The initialization of parameters is important for

iterative training methods based on descent. Our procedure produces a network

whose initial state is a polynomial representation of the training data. The

major advantage of this technique is from this initialized state the network

may be improved using standard training procedures. Since the network already

approximates the data, training is more likely to produce a set of parameters

associated with a desirable local minimum. We provide the details of the theory

necessary for constructing such networks and also consider several numerical

examples that reveal our approach ultimately produces networks which can be

effectively trained from our initialized state to achieve an improved

approximation for a large class of target functions.

X-ray computed tomography (XCT) is an important tool for high-resolution non-destructive characterization of additively-manufactured metal components. XCT reconstructions of metal components may have beam hardening artifacts such as cupping and streaking which makes reliable detection of flaws and defects challenging. Furthermore, traditional workflows based on using analytic reconstruction algorithms require a large number of projections for accurate characterization - leading to longer measurement times and hindering the adoption of XCT for in-line inspections. In this paper, we introduce a new workflow based on the use of two neural networks to obtain high-quality accelerated reconstructions from sparse-view XCT scans of single material metal parts. The first network, implemented using fully-connected layers, helps reduce the impact of BH in the projection data without the need of any calibration or knowledge of the component material. The second network, a convolutional neural network, maps a low-quality analytic 3D reconstruction to a high-quality reconstruction. Using experimental data, we demonstrate that our method robustly generalizes across several alloys, and for a range of sparsity levels without any need for retraining the networks thereby enabling accurate and fast industrial XCT inspections.

24 Jun 2016

CNRS

CNRS Chinese Academy of SciencesVanderbilt University

Chinese Academy of SciencesVanderbilt University National University of Singapore

National University of Singapore Nanyang Technological UniversityNational Tsing-Hua UniversityThalesNational Institute of Advanced Industrial Science and Technology (AIST)Institute of Physics, Academia SinicaCollaborative Innovation Center of Quantum MatterOak Ridge National LabCINTRA

Nanyang Technological UniversityNational Tsing-Hua UniversityThalesNational Institute of Advanced Industrial Science and Technology (AIST)Institute of Physics, Academia SinicaCollaborative Innovation Center of Quantum MatterOak Ridge National LabCINTRAAtomically thin transitional metal ditellurides like WTe2 and MoTe2 have triggered tremendous research interests because of their intrinsic nontrivial band structure. They are also predicted to be 2D topological insulators and type-II Weyl semimetals. However, most of the studies on ditelluride atomic layers so far rely on the low-yield and time-consuming mechanical exfoliation method. Direct synthesis of large-scale monolayer ditellurides has not yet been achieved. Here, using the chemical vapor deposition (CVD) method, we demonstrate controlled synthesis of high-quality and atom-thin tellurides with lateral size over 300 {\mu}m. We found that the as-grown WTe2 maintains two different stacking sequences in the bilayer, where the atomic structure of the stacking boundary is revealed by scanning transmission electron microscope (STEM). The low-temperature transport measurements revealed a novel semimetal-to-insulator transition in WTe2 layers and an enhanced superconductivity in few-layer MoTe2. This work paves the way to the synthesis of atom-thin tellurides and also quantum spin Hall devices.

There are no more papers matching your filters at the moment.