UC San Diego

M+ extends the MemoryLLM architecture, enabling Large Language Models to retain and recall information over sequence lengths exceeding 160,000 tokens, a substantial improvement over MemoryLLM's previous 20,000 token limit. This is achieved through a scalable long-term memory mechanism and a co-trained retriever that efficiently retrieves relevant hidden states, while maintaining competitive GPU memory usage.

Researchers from UCSD, UCLA, and Amazon developed MEMORYLLM, a Large Language Model architecture designed for continuous self-updatability by integrating a fixed-size memory pool directly into the transformer's latent space. The model efficiently absorbs new knowledge, retains previously learned information, and maintains performance integrity over extensive update cycles, demonstrating superior capabilities in model editing and long-context understanding while remaining robust after nearly a million updates.

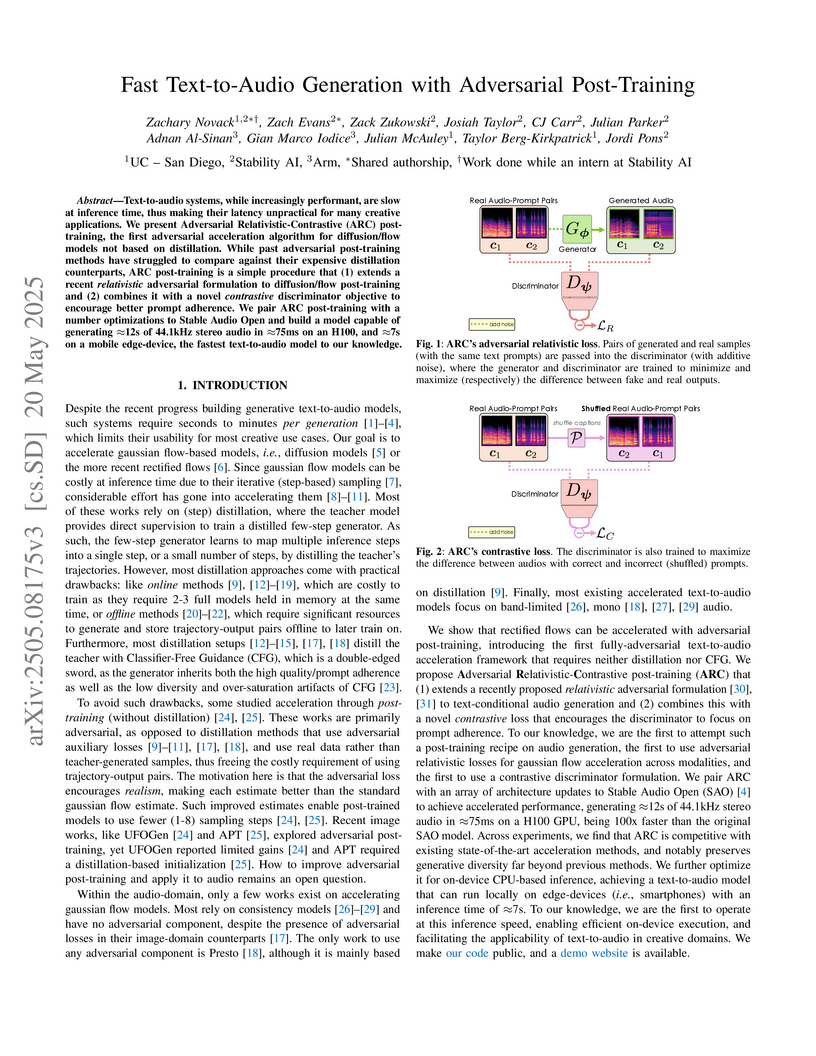

Text-to-audio systems, while increasingly performant, are slow at inference time, making their latency impractical for many creative applications. We present Adversarial Relativistic-Contrastive (ARC) post-training, the first adversarial acceleration algorithm for diffusion/flow models not based on distillation. While past adversarial post-training methods have struggled to compare against their expensive distillation counterparts, ARC post-training is a simple procedure that (1) extends a recent relativistic adversarial formulation to diffusion/flow post-training and (2) combines it with a novel contrastive discriminator objective to encourage better prompt adherence. We pair ARC post-training with a number of optimizations to Stable Audio Open and build a model capable of generating ≈12s of 44.1kHz stereo audio in ≈75ms on an H100, and ≈7s on a mobile edge-device, the fastest text-to-audio model to our knowledge.

Researchers at University College Cork and Bar-Ilan University quantitatively mapped the efficiency and timescales of population inversion formation in relativistic plasmas through nonresonant interactions with Alfvén waves. This study, crucial for synchrotron maser emission (SME) in Fast Radio Bursts (FRBs), finds that up to 30% of particle energy can be funneled into the inversion, particularly in highly magnetized plasmas, supporting the generation of FRB signals at GHz frequencies.

Despite advances in diffusion-based text-to-music (TTM) methods, efficient, high-quality generation remains a challenge. We introduce Presto!, an approach to inference acceleration for score-based diffusion transformers via reducing both sampling steps and cost per step. To reduce steps, we develop a new score-based distribution matching distillation (DMD) method for the EDM-family of diffusion models, the first GAN-based distillation method for TTM. To reduce the cost per step, we develop a simple but powerful improvement to a recent layer distillation method that improves learning by better preserving hidden state variance. Finally, we combine our step and layer distillation methods for a dual-faceted approach. We evaluate our step and layer distillation methods independently and show that each yields best-in-class performance. Our combined distillation method can generate high-quality outputs with improved diversity, accelerating our base model by 10-18x (230/435ms latency for 32 second mono/stereo 44.1kHz, 15x faster than comparable SOTA), the fastest high-quality TTM to our knowledge. Sound examples can be found at this https URL

Researchers from UC San Diego and collaborators introduced WIKIDYK, a real-world, expert-curated benchmark for evaluating Large Language Models' (LLMs) ability to acquire and retain new facts. The study found that Bidirectional Language Models (BiLMs) outperform Causal Language Models (CLMs) in knowledge memorization, leading to a proposed modular ensemble framework for efficient knowledge injection.

28 Apr 2022

Shape restrictions have played a central role in economics as both testable implications of theory and sufficient conditions for obtaining informative counterfactual predictions. In this paper we provide a general procedure for inference under shape restrictions in identified and partially identified models defined by conditional moment restrictions. Our test statistics and proposed inference methods are based on the minimum of the generalized method of moments (GMM) objective function with and without shape restrictions. Uniformly valid critical values are obtained through a bootstrap procedure that approximates a subset of the true local parameter space. In an empirical analysis of the effect of childbearing on female labor supply, we show that employing shape restrictions in linear instrumental variables (IV) models can lead to shorter confidence regions for both local and average treatment effects. Other applications we discuss include inference for the variability of quantile IV treatment effects and for bounds on average equivalent variation in a demand model with general heterogeneity. We find in Monte Carlo examples that the critical values are conservatively accurate and that tests about objects of interest have good power relative to unrestricted GMM.

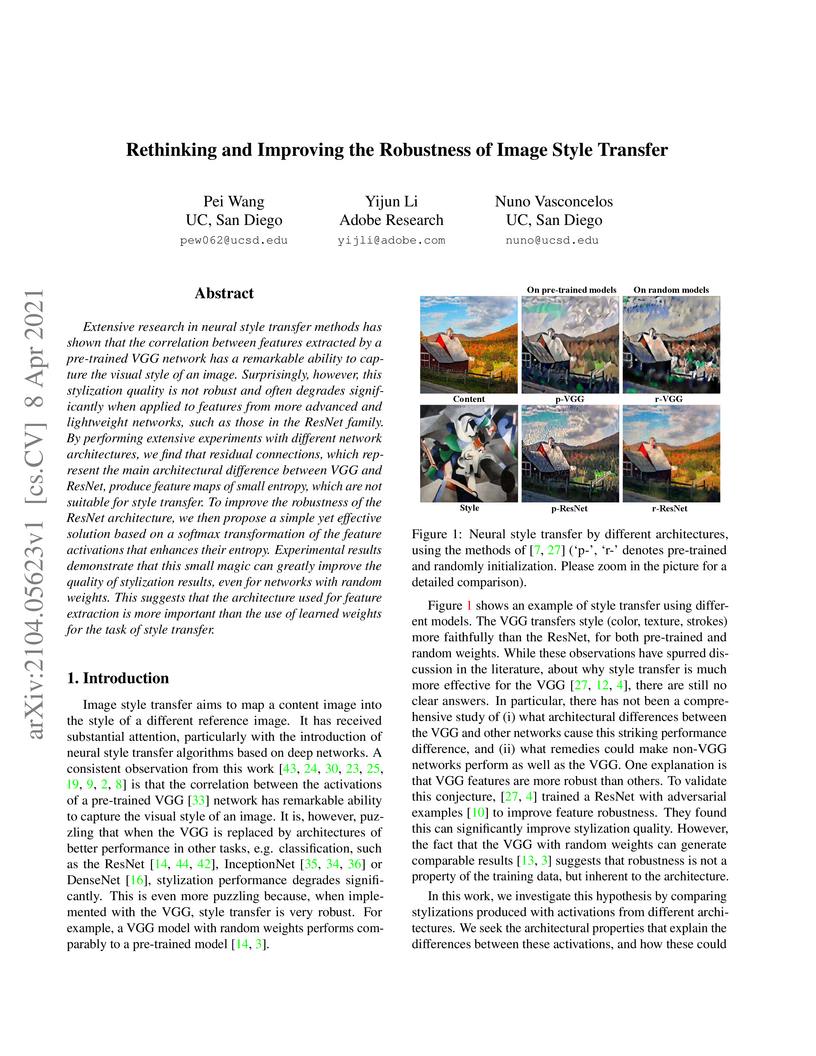

This research explains why VGG networks excel at neural style transfer while modern architectures struggle, attributing the issue to the low-entropy activation distributions caused by residual connections. The proposed solution, Stylization With Activation smoothinG (SWAG), applies a softmax transformation to feature activations during loss calculation, enabling modern networks like ResNet to achieve visual quality that matches or exceeds VGG’s performance in style transfer tasks.
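As a rough illustration of the activation-smoothing idea summarized above, the sketch below applies a softmax to a layer's activations before computing a Gram-matrix style loss. Everything concrete here is an assumption made for illustration: the function names are hypothetical, the softmax is taken over all activations of the layer, and the Gram-matrix loss is the classic style-transfer objective rather than anything confirmed by the summary.

```python
import math

def softmax_smooth(features):
    """Softmax over all activations of one layer (assumed axis choice).

    `features` is a list of C rows, one flattened row per channel.
    """
    peak = max(max(row) for row in features)  # subtract the max for stability
    exps = [[math.exp(v - peak) for v in row] for row in features]
    total = sum(sum(row) for row in exps)
    return [[v / total for v in row] for row in exps]

def gram_matrix(features):
    """Channel-by-channel inner products of a C x N feature map."""
    return [[sum(a * b for a, b in zip(ri, rj)) for rj in features]
            for ri in features]

def smoothed_style_loss(generated, style):
    """Mean squared difference between Gram matrices of smoothed activations."""
    g = gram_matrix(softmax_smooth(generated))
    s = gram_matrix(softmax_smooth(style))
    diffs = [(x - y) ** 2 for g_row, s_row in zip(g, s)
             for x, y in zip(g_row, s_row)]
    return sum(diffs) / len(diffs)
```

The softmax spreads a peaky, low-entropy activation map into a normalized distribution, which is the intuition the summary gives for why smoothing helps residual networks.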

04 Dec 2024

This research from Kim, Diagne, and Krstić at UC San Diego develops a robust control strategy that guarantees safety for high relative degree systems operating in the presence of unknown moving obstacles. The method combines Robust Control Barrier Functions (RCBFs) with CBF backstepping, introducing a novel smooth formulation (sRCBF) to overcome non-smoothness issues, and successfully avoids collisions in simulations where standard methods fail.

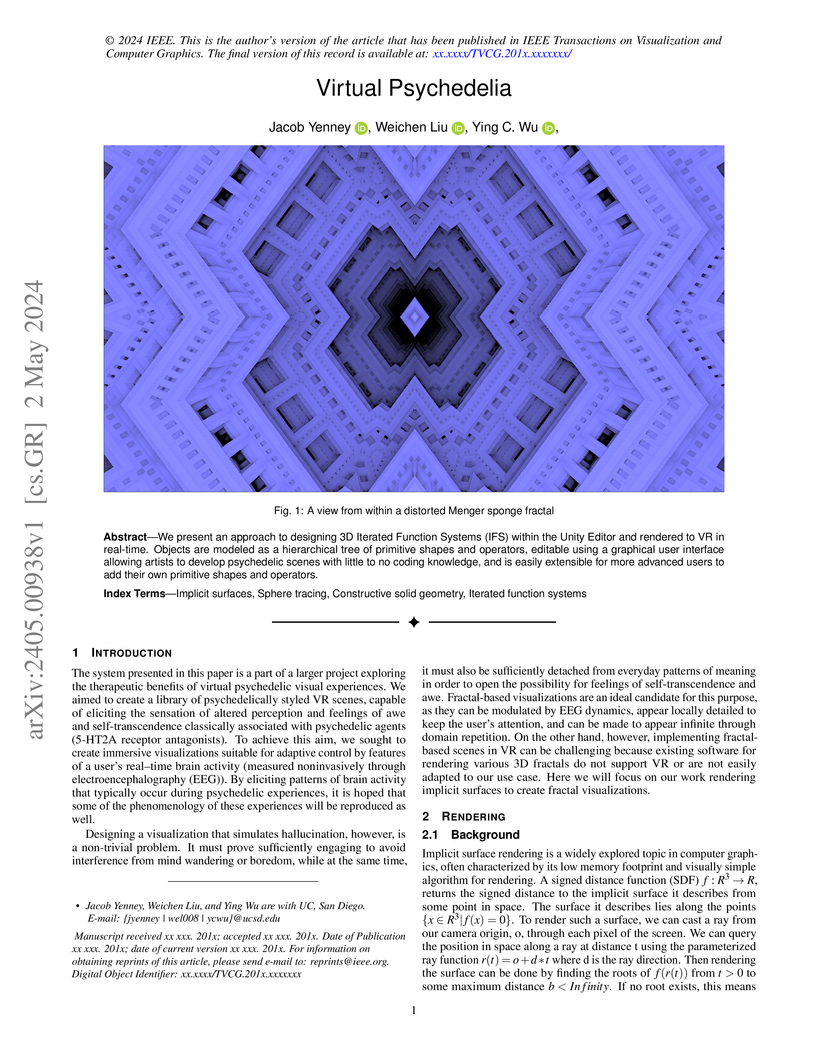

02 May 2024

We present an approach to designing 3D Iterated Function Systems (IFS) within the Unity Editor and rendering them in VR in real time. Objects are modeled as a hierarchical tree of primitive shapes and operators, editable through a graphical user interface that lets artists develop psychedelic scenes with little to no coding knowledge. The system is easily extensible, so more advanced users can add their own primitive shapes and operators.
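For readers unfamiliar with iterated function systems, here is a minimal sketch of the general concept in Python. It is not the Unity tool's tree-of-operators method: it uses the standard "chaos game" on four contractive maps, and the vertex set, function names, and starting point are all illustrative assumptions.

```python
import random

# Four contractions, each pulling a point halfway toward one tetrahedron vertex.
# The vertex coordinates are an arbitrary choice for this sketch.
VERTICES = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.5, 1.0, 0.0), (0.5, 0.5, 1.0)]

def apply_map(point, vertex, scale=0.5):
    """Contract `point` toward `vertex` by the factor `scale`."""
    return tuple(v + scale * (p - v) for p, v in zip(point, vertex))

def chaos_game(n_points, seed=0):
    """Approximate the IFS attractor by applying randomly chosen maps."""
    rng = random.Random(seed)
    point = (0.25, 0.25, 0.25)  # any starting point converges to the attractor
    points = []
    for _ in range(n_points):
        point = apply_map(point, rng.choice(VERTICES))
        points.append(point)
    return points
```

Plotting the output of `chaos_game(50_000)` as a point cloud gives a Sierpinski-tetrahedron-like fractal; swapping in other contractive maps changes the shape.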

Domain adaptation (DA) is a technique that transfers predictive models trained on a labeled source domain to an unlabeled target domain, with the core difficulty of resolving distributional shift between domains. Currently, most popular DA algorithms are based on distributional matching (DM). However, in practice, realistic domain shifts (RDS) may violate their basic assumptions, and as a result these methods will fail. In this paper, in order to devise robust DA algorithms, we first systematically analyze the limitations of DM-based methods, and then build new benchmarks with more realistic domain shifts to evaluate the well-accepted DM methods. We further propose InstaPBM, a novel Instance-based Predictive Behavior Matching method for robust DA. Extensive experiments on both conventional and RDS benchmarks demonstrate both the limitations of DM methods and the efficacy of InstaPBM: compared with the best baselines, InstaPBM improves classification accuracy by 4.5% and 3.9% on Digits5 and VisDA2017, and by 2.2%, 2.9%, and 3.6% on DomainNet-LDS, DomainNet-ILDS, and ID-TwO, respectively. We hope our intuitive yet effective method will serve as a useful new direction and increase the robustness of DA in real scenarios. Code will be available at anonymous link: this https URL

We study the problem of PAC learning homogeneous halfspaces in the presence of Tsybakov noise. In the Tsybakov noise model, the label of every sample is independently flipped with an adversarially controlled probability that can be arbitrarily close to 1/2 for a fraction of the samples. *We give the first polynomial-time algorithm for this fundamental learning problem.* Our algorithm learns the true halfspace within any desired accuracy ϵ and succeeds under a broad family of well-behaved distributions including log-concave distributions. Prior to our work, the only previous algorithm for this problem required quasi-polynomial runtime in 1/ϵ.

Our algorithm employs a recently developed reduction [DKTZ20b] from learning to certifying the non-optimality of a candidate halfspace. This prior work developed a quasi-polynomial time certificate algorithm based on polynomial regression. *The main technical contribution of the current paper is the first polynomial-time certificate algorithm.* Starting from a non-trivial warm-start, our algorithm performs a novel "win-win" iterative process which, at each step, either finds a valid certificate or improves the angle between the current halfspace and the true one. Our warm-start algorithm for isotropic log-concave distributions involves a number of analytic tools that may be of broader interest. These include a new efficient method for reweighting the distribution in order to recenter it and a novel characterization of the spectrum of the degree-2 Chow parameters.

21 Aug 2022

Generative Adversarial Networks (GANs) are a widely-used tool for generative modeling of complex data. Despite their empirical success, the training of GANs is not fully understood due to the min-max optimization of the generator and discriminator. This paper analyzes these joint dynamics when the true samples, as well as the generated samples, are discrete, finite sets, and the discriminator is kernel-based. A simple yet expressive framework for analyzing training called the Isolated Points Model is introduced. In the proposed model, the distance between true samples greatly exceeds the kernel width, so each generated point is influenced by at most one true point. Our model enables precise characterization of the conditions for convergence, both to good and bad minima. In particular, the analysis explains two common failure modes: (i) an approximate mode collapse and (ii) divergence. Numerical simulations are provided that predictably replicate these behaviors.

03 Dec 2023

Google DeepMind, University of Waterloo, Anthropic, Carnegie Mellon University, Google, UC Berkeley, University of Michigan, OpenAI, Cornell University, UC San Diego, Google Research, Microsoft, UC Davis, University of Pennsylvania, Electronic Frontier Foundation, University of Sussex, Databricks, Stanford Law School, Yale Law School, Tidelift, Georgetown Law School, Luminate Group, Cardozo Law, Nebraska College of Law

This report presents the takeaways of the inaugural Workshop on Generative AI and Law (GenLaw), held in July 2023. A cross-disciplinary group of practitioners and scholars from computer science and law convened to discuss the technical, doctrinal, and policy challenges presented by law for Generative AI, and by Generative AI for law, with an emphasis on U.S. law in particular. We begin the report with a high-level statement about why Generative AI is both immensely significant and immensely challenging for law. To meet these challenges, we conclude that there is an essential need for 1) a shared knowledge base that provides a common conceptual language for experts across disciplines; 2) clarification of the distinctive technical capabilities of generative-AI systems, as compared and contrasted to other computer and AI systems; 3) a logical taxonomy of the legal issues these systems raise; and, 4) a concrete research agenda to promote collaboration and knowledge-sharing on emerging issues at the intersection of Generative AI and law. In this report, we synthesize the key takeaways from the GenLaw workshop that begin to address these needs. All of the listed authors contributed to the workshop upon which this report is based, but they and their organizations do not necessarily endorse all of the specific claims in this report.

Federated Learning (FL) has been a pivotal paradigm for collaborative training of machine learning models across distributed datasets. In heterogeneous settings, it has been observed that a single shared FL model can lead to low local accuracy, motivating personalized FL algorithms. In parallel, fair FL algorithms have been proposed to enforce group fairness on the global models. Again, in heterogeneous settings, global and local fairness do not necessarily align, motivating the recent literature on locally fair FL. In this paper, we propose new FL algorithms for heterogeneous settings, spanning the space between personalized and locally fair FL. Building on existing clustering-based personalized FL methods, we incorporate a new fairness metric into cluster assignment, enabling a tunable balance between local accuracy and fairness. Our methods match or exceed the performance of existing locally fair FL approaches, without explicit fairness intervention. We further demonstrate (numerically and analytically) that personalization alone can improve local fairness and that our methods exploit this alignment when present.

Fairness is a widely discussed topic in recommender systems, but its practical implementation faces challenges in defining sensitive features while maintaining recommendation accuracy. We propose feature fairness as the foundation to achieve equitable treatment across diverse groups defined by various feature combinations. This improves overall accuracy through balanced feature generalizability. We introduce unbiased feature learning through adversarial training, using adversarial perturbation to enhance feature representation. The adversaries improve model generalization for under-represented features. We adapt adversaries automatically based on two forms of feature biases: frequency and combination variety of feature values. This allows us to dynamically adjust perturbation strengths and adversarial training weights. Stronger perturbations are applied to feature values with fewer combination varieties to improve generalization, while higher weights for low-frequency features address training imbalances. We leverage the Adaptive Adversarial perturbation based on the widely-applied Factorization Machine (AAFM) as our backbone model. In experiments, AAFM surpasses strong baselines in both fairness and accuracy measures. AAFM excels in providing item- and user-fairness for single- and multi-feature tasks, showcasing its versatility and scalability. To maintain good accuracy, we find that adversarial perturbation must be well-managed: during training, perturbations should not overly persist and their strengths should decay.

Mobile devices such as smartphones, laptops, and tablets can often connect to multiple access networks (e.g., Wi-Fi, LTE, and 5G) simultaneously. Recent advancements facilitate seamless integration of these connections below the transport layer, enhancing the experience for apps that lack inherent multi-path support. This optimization hinges on dynamically determining the traffic distribution across networks for each device, a process referred to as *multi-access traffic splitting*. This paper introduces *NetworkGym*, a high-fidelity network environment simulator that facilitates generating multiple network traffic flows and multi-access traffic splitting. This simulator facilitates training and evaluating different RL-based solutions for the multi-access traffic splitting problem. Our initial explorations demonstrate that the majority of existing state-of-the-art offline RL algorithms (e.g. CQL) fail to outperform certain hand-crafted heuristic policies on average. This illustrates the urgent need to evaluate offline RL algorithms against a broader range of benchmarks, rather than relying solely on popular ones such as D4RL. We also propose an extension to the TD3+BC algorithm, named Pessimistic TD3 (PTD3), and demonstrate that it outperforms many state-of-the-art offline RL algorithms. PTD3's behavioral constraint mechanism, which relies on value-function pessimism, is theoretically motivated and relatively simple to implement.

31 Jul 2023

We develop a new technique for proving distribution testing lower bounds for properties defined by inequalities involving the bin probabilities of the distribution in question. Using this technique we obtain new lower bounds for monotonicity testing over discrete cubes and tight lower bounds for log-concavity testing.

Our basic technique involves constructing a pair of moment-matching families of distributions by tweaking the probabilities of pairs of bins so that one family maintains the defining inequalities while the other violates them.

We study the task of agnostically learning halfspaces under the Gaussian distribution. Specifically, given labeled examples $(\mathbf{x}, y)$ from an unknown distribution on $\mathbb{R}^n \times \{\pm 1\}$, whose marginal distribution on $\mathbf{x}$ is the standard Gaussian and the labels $y$ can be arbitrary, the goal is to output a hypothesis with 0-1 loss $\mathrm{OPT} + \epsilon$, where $\mathrm{OPT}$ is the 0-1 loss of the best-fitting halfspace. We prove a near-optimal computational hardness result for this task, under the widely believed sub-exponential time hardness of the Learning with Errors (LWE) problem. Prior hardness results are either qualitatively suboptimal or apply to restricted families of algorithms. Our techniques extend to yield near-optimal lower bounds for related problems, including ReLU regression.

We study the complexity of PAC learning halfspaces in the presence of Massart noise. In this problem, we are given i.i.d. labeled examples $(\mathbf{x}, y) \in \mathbb{R}^N \times \{\pm 1\}$, where the distribution of $\mathbf{x}$ is arbitrary and the label $y$ is a Massart corruption of $f(\mathbf{x})$, for an unknown halfspace $f: \mathbb{R}^N \to \{\pm 1\}$, with flipping probability $\eta(\mathbf{x}) \leq \eta < 1/2$. The goal of the learner is to compute a hypothesis with small 0-1 error. Our main result is the first computational hardness result for this learning problem. Specifically, assuming the (widely believed) subexponential-time hardness of the Learning with Errors (LWE) problem, we show that no polynomial-time Massart halfspace learner can achieve error better than $\Omega(\eta)$, even if the optimal 0-1 error is small, namely $\mathrm{OPT} = 2^{-\log^{c}(N)}$ for any universal constant $c \in (0, 1)$. Prior work had provided qualitatively similar evidence of hardness in the Statistical Query model. Our computational hardness result essentially resolves the polynomial PAC learnability of Massart halfspaces, by showing that known efficient learning algorithms for the problem are nearly best possible.
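To make the Massart noise model described above concrete, the following sketch samples labeled examples from a halfspace and flips each label with a point-dependent probability $\eta(\mathbf{x}) \leq \eta$. The Gaussian marginal, the particular choice of $\eta(\mathbf{x})$, and all function names are illustrative assumptions; the abstract allows an arbitrary distribution on $\mathbf{x}$ and an adversarially chosen $\eta(\mathbf{x})$.

```python
import random

def massart_label(x, w, eta_fn, rng):
    """Return the halfspace label sign(<w, x>), flipped with probability eta_fn(x)."""
    clean = 1 if sum(wi * xi for wi, xi in zip(w, x)) >= 0 else -1
    flip = rng.random() < eta_fn(x)
    return -clean if flip else clean

def sample(n, w, eta, seed=0):
    """Draw n examples (x, y) with Massart noise bounded by eta < 1/2."""
    rng = random.Random(seed)

    # Hypothetical noise function: varies with x but never exceeds eta.
    def eta_fn(x):
        return eta * min(1.0, abs(x[0]))

    data = []
    for _ in range(n):
        x = [rng.gauss(0.0, 1.0) for _ in w]  # Gaussian marginal, chosen for illustration
        data.append((x, massart_label(x, w, eta_fn, rng)))
    return data
```

Calling `sample(1000, [1.0, -2.0], 0.3)` yields a dataset whose labels agree with the halfspace except for flips that occur with probability at most 0.3 at any point.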
