University of Turin

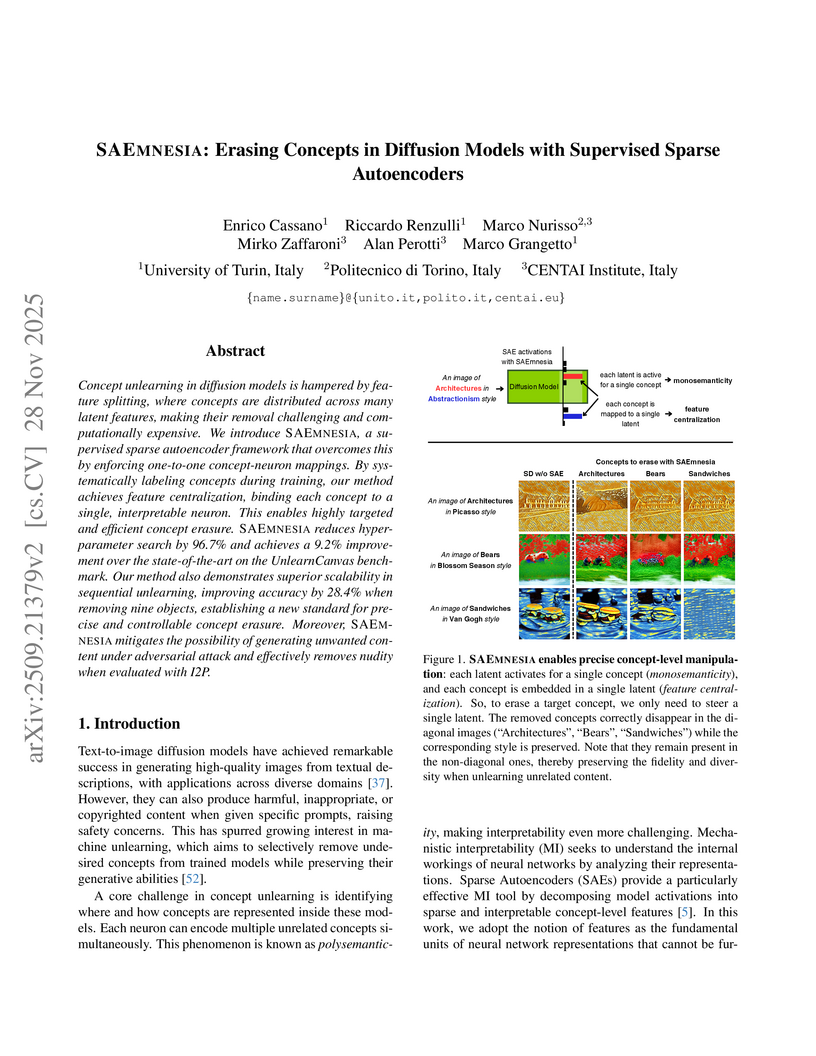

Concept unlearning in diffusion models is hampered by feature splitting, where concepts are distributed across many latent features, making their removal challenging and computationally expensive. We introduce SAEmnesia, a supervised sparse autoencoder framework that overcomes this by enforcing one-to-one concept-neuron mappings. By systematically labeling concepts during training, our method achieves feature centralization, binding each concept to a single, interpretable neuron. This enables highly targeted and efficient concept erasure. SAEmnesia reduces hyperparameter search by 96.7% and achieves a 9.2% improvement over the state-of-the-art on the UnlearnCanvas benchmark. Our method also demonstrates superior scalability in sequential unlearning, improving accuracy by 28.4% when removing nine objects, establishing a new standard for precise and controllable concept erasure. Moreover, SAEmnesia mitigates the possibility of generating unwanted content under adversarial attack and effectively removes nudity when evaluated with I2P.

A plug-and-play module named FOLDER efficiently reduces visual token sequence length in Multi-modal Large Language Models, achieving up to 2.7x speed-up with up to 70% token reduction while frequently boosting performance across diverse MLLM architectures. Developed by researchers from SJTU, Télécom Paris, University of Turin, and Alibaba Group, this method enhances both inference and training efficiency.

28 Feb 2020

For α0=[a0,a1,…] an infinite continued fraction

and σ a linear fractional transformation, we study the continued

fraction expansion of σ(α0) and its convergents. We provide the

continued fraction expansion of σ(α0) for four general families of

continued fractions and when ∣detσ∣=2. We also find

nonlinear recurrence relations among the convergents of σ(α0)

which allow us to highlight relations between convergents of α0 and

σ(α0). Finally, we apply our results to some special and

well-studied continued fractions, like Hurwitzian and Tasoevian ones, giving a

first study about leaping convergents having steps provided by nonlinear

functions.

Giulio Caldarelli, from the University of Turin, critically assesses whether Artificial Intelligence can resolve the blockchain oracle problem, concluding that AI significantly enhances data reliability and manipulation detection in oracle systems but cannot fundamentally solve the epistemological challenge of verifying off-chain information due to its inherent limitations and reliance on external data integrity. The work advocates for AI as a complementary component within robust, hybrid oracle designs.

05 Mar 2025

This forecasting study demonstrates that combining data from the Square Kilometre Array Observatory (SKAO) and European Southern Observatory (ESO) facilities offers significantly tighter and more robust constraints on cosmological parameters than individual surveys. Leveraging multi-tracer analyses, these synergies are projected to reduce systematic errors and parameter degeneracies, advancing precision cosmology and the exploration of physics beyond the ΛCDM model.

3D Gaussian Splatting enhances real-time performance in novel view synthesis

by representing scenes with mixtures of Gaussians and utilizing differentiable

rasterization. However, it typically requires large storage capacity and high

VRAM, demanding the design of effective pruning and compression techniques.

Existing methods, while effective in some scenarios, struggle with scalability

and fail to adapt models based on critical factors such as computing

capabilities or bandwidth, requiring to re-train the model under different

configurations. In this work, we propose a novel, model-agnostic technique that

organizes Gaussians into several hierarchical layers, enabling progressive

Level of Detail (LoD) strategy. This method, combined with recent approach of

compression of 3DGS, allows a single model to instantly scale across several

compression ratios, with minimal to none impact to quality compared to a single

non-scalable model and without requiring re-training. We validate our approach

on typical datasets and benchmarks, showcasing low distortion and substantial

gains in terms of scalability and adaptability.

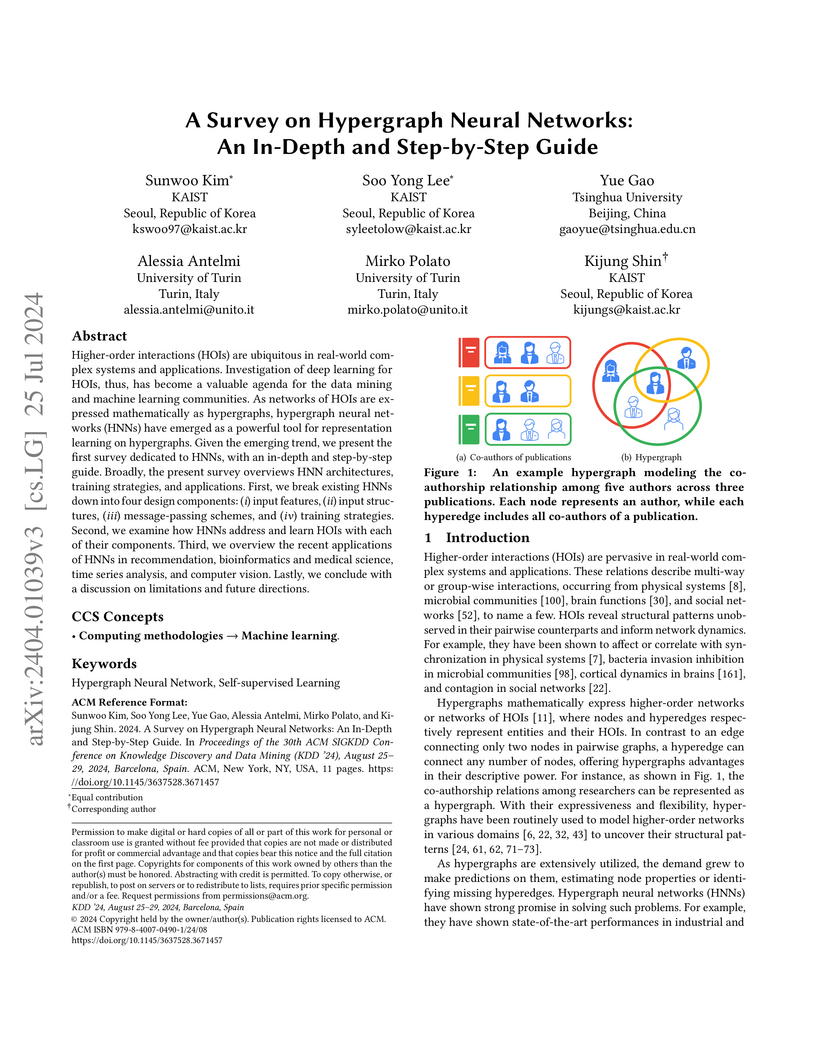

Higher-order interactions (HOIs) are ubiquitous in real-world complex systems

and applications. Investigation of deep learning for HOIs, thus, has become a

valuable agenda for the data mining and machine learning communities. As

networks of HOIs are expressed mathematically as hypergraphs, hypergraph neural

networks (HNNs) have emerged as a powerful tool for representation learning on

hypergraphs. Given the emerging trend, we present the first survey dedicated to

HNNs, with an in-depth and step-by-step guide. Broadly, the present survey

overviews HNN architectures, training strategies, and applications. First, we

break existing HNNs down into four design components: (i) input features, (ii)

input structures, (iii) message-passing schemes, and (iv) training strategies.

Second, we examine how HNNs address and learn HOIs with each of their

components. Third, we overview the recent applications of HNNs in

recommendation, bioinformatics and medical science, time series analysis, and

computer vision. Lastly, we conclude with a discussion on limitations and

future directions.

We study bulk locality constraints in quantum field theories in AdS2. The known derivation of locality sum rules in AdSd+1 does not apply for d=1 due to the different singularity structure of the conformal blocks and the inequivalence of operator orderings on the boundary. Assuming unitarity and a mild growth condition, we establish power-law bounds for correlators, derive dispersion relations and an expansion in terms of ``even'' and ``odd'' local blocks that converges in the entire AdS2. These yield two novel families of symmetric and antisymmetric locality sum rules. We test these sum rules explicitly in the free scalar field theory.

A mathematical framework models information flow dynamics in the age of generative AI through a novel RGB (Real-Green-Blue) stochastic model, revealing quantitative relationships between AI adoption rates, user behavior patterns, and the proliferation of misinformation while demonstrating increased risks of model autophagy and quality degradation through empirical validation using Stack Exchange data.

National United University University of Cambridge

University of Cambridge Chinese Academy of Sciences

Chinese Academy of Sciences Carnegie Mellon UniversitySichuan University

Carnegie Mellon UniversitySichuan University Sun Yat-Sen UniversityKorea University

Sun Yat-Sen UniversityKorea University Beihang University

Beihang University Nanjing University

Nanjing University Tsinghua UniversityNankai University

Tsinghua UniversityNankai University Peking UniversityJoint Institute for Nuclear ResearchSouthwest University

Peking UniversityJoint Institute for Nuclear ResearchSouthwest University Stockholm UniversityUniversity of TurinUppsala UniversityGuangxi Normal UniversityCentral China Normal University

Stockholm UniversityUniversity of TurinUppsala UniversityGuangxi Normal UniversityCentral China Normal University Shandong UniversityLanzhou UniversityUlm UniversityNorthwest UniversityIndian Institute of Technology MadrasIowa State UniversityUniversity of South China

Shandong UniversityLanzhou UniversityUlm UniversityNorthwest UniversityIndian Institute of Technology MadrasIowa State UniversityUniversity of South China University of GroningenWarsaw University of TechnologyGuangxi UniversityShanxi UniversityHenan University of Science and TechnologyHelmholtz-Zentrum Dresden-RossendorfZhengzhou UniversityINFN, Sezione di TorinoCOMSATS University IslamabadHangzhou Institute for Advanced Study, UCASIndian Institute of Technology GuwahatiBudker Institute of Nuclear PhysicsXian Jiaotong UniversityJohannes Gutenberg UniversityINFN, Laboratori Nazionali di FrascatiHenan Normal UniversityNorth China Electric Power UniversityInstitute of high-energy PhysicsJustus Liebig University GiessenInstitute for Nuclear Research of the Russian Academy of SciencesGSI Helmholtzzentrum fur Schwerionenforschung GmbHUniversity of the PunjabHuazhong Normal UniversityThe University of MississippiNikhef, National Institute for Subatomic PhysicsUniversity of Science and Technology LiaoningINFN Sezione di Roma Tor VergataHelmholtz-Institut MainzPontificia Universidad JaverianaIJCLab, Université Paris-Saclay, CNRSSchool of Physics and Technology, Wuhan UniversityInstitut f¨ur Kernphysik, Forschungszentrum J¨ulichINFN-Sezione di FerraraRuhr-University-BochumUniversity of Rome

“Tor Vergata

”

University of GroningenWarsaw University of TechnologyGuangxi UniversityShanxi UniversityHenan University of Science and TechnologyHelmholtz-Zentrum Dresden-RossendorfZhengzhou UniversityINFN, Sezione di TorinoCOMSATS University IslamabadHangzhou Institute for Advanced Study, UCASIndian Institute of Technology GuwahatiBudker Institute of Nuclear PhysicsXian Jiaotong UniversityJohannes Gutenberg UniversityINFN, Laboratori Nazionali di FrascatiHenan Normal UniversityNorth China Electric Power UniversityInstitute of high-energy PhysicsJustus Liebig University GiessenInstitute for Nuclear Research of the Russian Academy of SciencesGSI Helmholtzzentrum fur Schwerionenforschung GmbHUniversity of the PunjabHuazhong Normal UniversityThe University of MississippiNikhef, National Institute for Subatomic PhysicsUniversity of Science and Technology LiaoningINFN Sezione di Roma Tor VergataHelmholtz-Institut MainzPontificia Universidad JaverianaIJCLab, Université Paris-Saclay, CNRSSchool of Physics and Technology, Wuhan UniversityInstitut f¨ur Kernphysik, Forschungszentrum J¨ulichINFN-Sezione di FerraraRuhr-University-BochumUniversity of Rome

“Tor Vergata

”

University of Cambridge

University of Cambridge Chinese Academy of Sciences

Chinese Academy of Sciences Carnegie Mellon UniversitySichuan University

Carnegie Mellon UniversitySichuan University Sun Yat-Sen UniversityKorea University

Sun Yat-Sen UniversityKorea University Beihang University

Beihang University Nanjing University

Nanjing University Tsinghua UniversityNankai University

Tsinghua UniversityNankai University Peking UniversityJoint Institute for Nuclear ResearchSouthwest University

Peking UniversityJoint Institute for Nuclear ResearchSouthwest University Stockholm UniversityUniversity of TurinUppsala UniversityGuangxi Normal UniversityCentral China Normal University

Stockholm UniversityUniversity of TurinUppsala UniversityGuangxi Normal UniversityCentral China Normal University Shandong UniversityLanzhou UniversityUlm UniversityNorthwest UniversityIndian Institute of Technology MadrasIowa State UniversityUniversity of South China

Shandong UniversityLanzhou UniversityUlm UniversityNorthwest UniversityIndian Institute of Technology MadrasIowa State UniversityUniversity of South China University of GroningenWarsaw University of TechnologyGuangxi UniversityShanxi UniversityHenan University of Science and TechnologyHelmholtz-Zentrum Dresden-RossendorfZhengzhou UniversityINFN, Sezione di TorinoCOMSATS University IslamabadHangzhou Institute for Advanced Study, UCASIndian Institute of Technology GuwahatiBudker Institute of Nuclear PhysicsXian Jiaotong UniversityJohannes Gutenberg UniversityINFN, Laboratori Nazionali di FrascatiHenan Normal UniversityNorth China Electric Power UniversityInstitute of high-energy PhysicsJustus Liebig University GiessenInstitute for Nuclear Research of the Russian Academy of SciencesGSI Helmholtzzentrum fur Schwerionenforschung GmbHUniversity of the PunjabHuazhong Normal UniversityThe University of MississippiNikhef, National Institute for Subatomic PhysicsUniversity of Science and Technology LiaoningINFN Sezione di Roma Tor VergataHelmholtz-Institut MainzPontificia Universidad JaverianaIJCLab, Université Paris-Saclay, CNRSSchool of Physics and Technology, Wuhan UniversityInstitut f¨ur Kernphysik, Forschungszentrum J¨ulichINFN-Sezione di FerraraRuhr-University-BochumUniversity of Rome

“Tor Vergata

”

University of GroningenWarsaw University of TechnologyGuangxi UniversityShanxi UniversityHenan University of Science and TechnologyHelmholtz-Zentrum Dresden-RossendorfZhengzhou UniversityINFN, Sezione di TorinoCOMSATS University IslamabadHangzhou Institute for Advanced Study, UCASIndian Institute of Technology GuwahatiBudker Institute of Nuclear PhysicsXian Jiaotong UniversityJohannes Gutenberg UniversityINFN, Laboratori Nazionali di FrascatiHenan Normal UniversityNorth China Electric Power UniversityInstitute of high-energy PhysicsJustus Liebig University GiessenInstitute for Nuclear Research of the Russian Academy of SciencesGSI Helmholtzzentrum fur Schwerionenforschung GmbHUniversity of the PunjabHuazhong Normal UniversityThe University of MississippiNikhef, National Institute for Subatomic PhysicsUniversity of Science and Technology LiaoningINFN Sezione di Roma Tor VergataHelmholtz-Institut MainzPontificia Universidad JaverianaIJCLab, Université Paris-Saclay, CNRSSchool of Physics and Technology, Wuhan UniversityInstitut f¨ur Kernphysik, Forschungszentrum J¨ulichINFN-Sezione di FerraraRuhr-University-BochumUniversity of Rome

“Tor Vergata

”Based on 10.64 fb−1 of e+e− collision data taken at center-of-mass energies between 4.237 and 4.699 GeV with the BESIII detector, we study the leptonic Ds+ decays using the e+e−→Ds∗+Ds∗− process. The branching fractions of Ds+→ℓ+νℓ(ℓ=μ,τ) are measured to be B(Ds+→μ+νμ)=(0.547±0.026stat±0.016syst)% and B(Ds+→τ+ντ)=(5.60±0.16stat±0.20syst)%, respectively. The product of the decay constant and Cabibbo-Kobayashi-Maskawa matrix element ∣Vcs∣ is determined to be fDs+∣Vcs∣=(246.5±5.9stat±3.6syst±0.5input)μν MeV and fDs+∣Vcs∣=(252.7±3.6stat±4.5syst±0.6input))τν MeV, respectively. Taking the value of ∣Vcs∣ from a global fit in the Standard Model, we obtain fDs+=(252.8±6.0stat±3.7syst±0.6input)μν MeV and fDs+=(259.2±3.6stat±4.5syst±0.6input)τν MeV, respectively. Conversely, taking the value for fDs+ from the latest lattice quantum chromodynamics calculation, we obtain ∣Vcs∣=(0.986±0.023stat±0.014syst±0.003input)μν and ∣Vcs∣=(1.011±0.014stat±0.018syst±0.003input)τν, respectively.

We present SAM4EM, a novel approach for 3D segmentation of complex neural

structures in electron microscopy (EM) data by leveraging the Segment Anything

Model (SAM) alongside advanced fine-tuning strategies. Our contributions

include the development of a prompt-free adapter for SAM using two stage mask

decoding to automatically generate prompt embeddings, a dual-stage fine-tuning

method based on Low-Rank Adaptation (LoRA) for enhancing segmentation with

limited annotated data, and a 3D memory attention mechanism to ensure

segmentation consistency across 3D stacks. We further release a unique

benchmark dataset for the segmentation of astrocytic processes and synapses. We

evaluated our method on challenging neuroscience segmentation benchmarks,

specifically targeting mitochondria, glia, and synapses, with significant

accuracy improvements over state-of-the-art (SOTA) methods, including recent

SAM-based adapters developed for the medical domain and other vision

transformer-based approaches. Experimental results indicate that our approach

outperforms existing solutions in the segmentation of complex processes like

glia and post-synaptic densities. Our code and models are available at

this https URL

17 Jul 2025

There are many economic contexts where the productivity and welfare performance of institutions and policies depend on who matches with whom. Examples include caseworkers and job seekers in job search assistance programs, medical doctors and patients, teachers and students, attorneys and defendants, and tax auditors and taxpayers, among others. Although reallocating individuals through a change in matching policy can be less costly than training personnel or introducing a new program, methods for learning optimal matching policies and their statistical performance are less studied than methods for other policy interventions. This paper develops a method to learn welfare optimal matching policies for two-sided matching problems in which a planner matches individuals based on the rich set of observable characteristics of the two sides. We formulate the learning problem as an empirical optimal transport problem with a match cost function estimated from training data, and propose estimating an optimal matching policy by maximizing the entropy regularized empirical welfare criterion. We derive a welfare regret bound for the estimated policy and characterize its convergence. We apply our proposal to the problem of matching caseworkers and job seekers in a job search assistance program, and assess its welfare performance in a simulation study calibrated with French administrative data.

Generative AI systems are transforming content creation, but their usability

remains a key challenge. This paper examines usability factors such as user

experience, transparency, control, and cognitive load. Common challenges

include unpredictability and difficulties in fine-tuning outputs. We review

evaluation metrics like efficiency, learnability, and satisfaction,

highlighting best practices from various domains. Improving interpretability,

intuitive interfaces, and user feedback can enhance usability, making

generative AI more accessible and effective.

In the ever-evolving landscape of scientific computing, properly supporting

the modularity and complexity of modern scientific applications requires new

approaches to workflow execution, like seamless interoperability between

different workflow systems, distributed-by-design workflow models, and

automatic optimisation of data movements. In order to address this need, this

article introduces SWIRL, an intermediate representation language for

scientific workflows. In contrast with other product-agnostic workflow

languages, SWIRL is not designed for human interaction but to serve as a

low-level compilation target for distributed workflow execution plans. The main

advantages of SWIRL semantics are low-level primitives based on the

send/receive programming model and a formal framework ensuring the consistency

of the semantics and the specification of translating workflow models

represented by Directed Acyclic Graphs (DAGs) into SWIRL workflow descriptions.

Additionally, SWIRL offers rewriting rules designed to optimise execution

traces, accompanied by corresponding equivalence. An open-source SWIRL compiler

toolchain has been developed using the ANTLR Python3 bindings.

Nasal Cytology is a new and efficient clinical technique to diagnose rhinitis and allergies that is not much widespread due to the time-consuming nature of cell counting; that is why AI-aided counting could be a turning point for the diffusion of this technique. In this article we present the first dataset of rhino-cytological field images: the NCD (Nasal Cytology Dataset), aimed to train and deploy Object Detection models to support physicians and biologists during clinical practice. The real distribution of the cytotypes, populating the nasal mucosa has been replicated, sampling images from slides of clinical patients, and manually annotating each cell found on them. The correspondent object detection task presents non'trivial issues associated with the strong class imbalancement, involving the rarest cell types. This work contributes to some of open challenges by presenting a novel machine learning-based approach to aid the automated detection and classification of nasal mucosa cells: the DETR and YOLO models shown good performance in detecting cells and classifying them correctly, revealing great potential to accelerate the work of rhinology experts.

Transformer-based models have become the state of the art across multiple domains, from natural language processing to machine listening, thanks to attention mechanisms. However, the attention layers require a large number of parameters and high-end hardware for both training and inference. We propose a novel pruning technique targeted explicitly at the attention mechanism, where we decouple the pruning of the four layers in the attention block, namely: query, keys, values and outputs' projection matrices. We also investigate pruning strategies to prune along the head and channel dimensions, and compare the performance of the Audio Spectrogram Transformer (AST) model under different pruning scenarios. Our results show that even by pruning 50\% of the attention parameters we incur in performance degradation of less than 1\%

07 Dec 2022

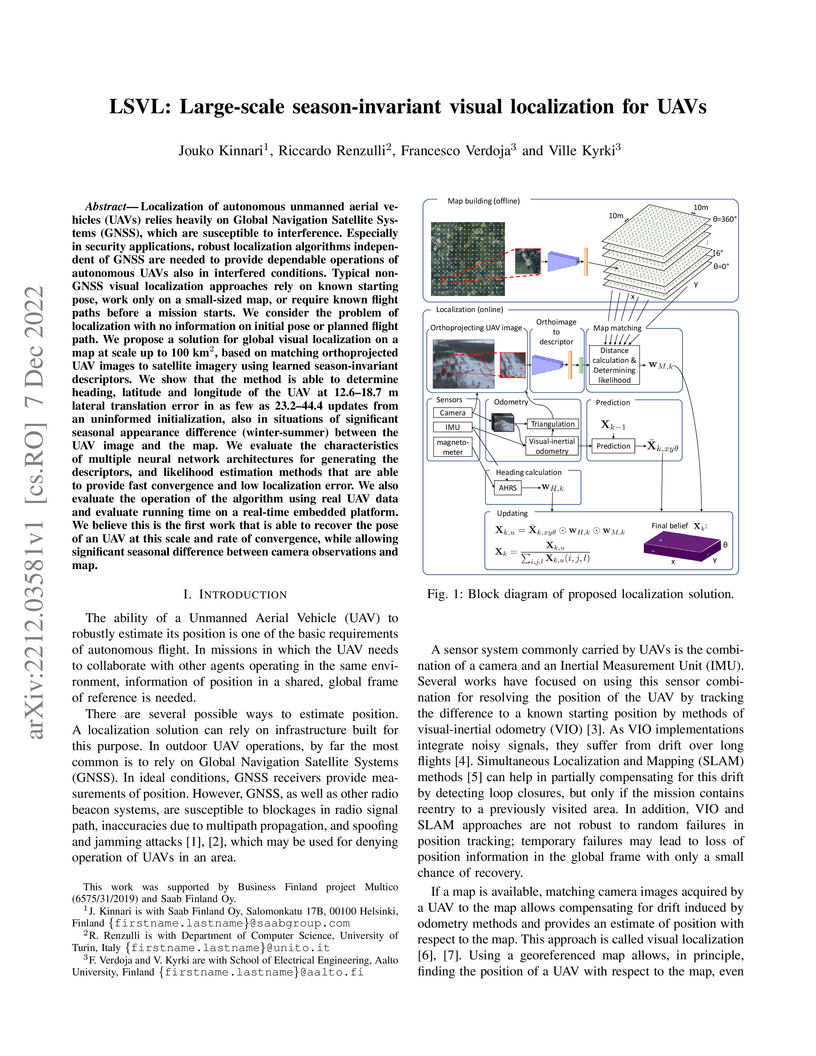

Localization of autonomous unmanned aerial vehicles (UAVs) relies heavily on Global Navigation Satellite Systems (GNSS), which are susceptible to interference. Especially in security applications, robust localization algorithms independent of GNSS are needed to provide dependable operations of autonomous UAVs also in interfered conditions. Typical non-GNSS visual localization approaches rely on known starting pose, work only on a small-sized map, or require known flight paths before a mission starts. We consider the problem of localization with no information on initial pose or planned flight path. We propose a solution for global visual localization on a map at scale up to 100 km2, based on matching orthoprojected UAV images to satellite imagery using learned season-invariant descriptors. We show that the method is able to determine heading, latitude and longitude of the UAV at 12.6-18.7 m lateral translation error in as few as 23.2-44.4 updates from an uninformed initialization, also in situations of significant seasonal appearance difference (winter-summer) between the UAV image and the map. We evaluate the characteristics of multiple neural network architectures for generating the descriptors, and likelihood estimation methods that are able to provide fast convergence and low localization error. We also evaluate the operation of the algorithm using real UAV data and evaluate running time on a real-time embedded platform. We believe this is the first work that is able to recover the pose of an UAV at this scale and rate of convergence, while allowing significant seasonal difference between camera observations and map.

Implicit meanings are integral to human communication, making it essential for language models to be capable of identifying and interpreting them. Grice (1975) proposed a set of conversational maxims that guide cooperative dialogue, noting that speakers may deliberately violate these principles to express meanings beyond literal words, and that listeners, in turn, recognize such violations to draw pragmatic inferences.

Building on Surian et al. (1996)'s study of children's sensitivity to violations of Gricean maxims, we introduce a novel benchmark to test whether language models pretrained on less than 10M and less than 100M tokens can distinguish maxim-adhering from maxim-violating utterances. We compare these BabyLMs across five maxims and situate their performance relative to children and a Large Language Model (LLM) pretrained on 3T tokens.

We find that overall, models trained on less than 100M tokens outperform those trained on less than 10M, yet fall short of child-level and LLM competence. Our results suggest that modest data increases improve some aspects of pragmatic behavior, leading to finer-grained differentiation between pragmatic dimensions.

Self-supervised learning (SSL) methods are enabling an increasing number of deep learning models to be trained on image datasets in domains where labels are difficult to obtain. These methods, however, struggle to scale to the high resolution of medical imaging datasets, where they are critical for achieving good generalization on label-scarce medical image datasets. In this work, we propose the Histopathology DatasetGAN (HDGAN) framework, an extension of the DatasetGAN semi-supervised framework for image generation and segmentation that scales well to large-resolution histopathology images. We make several adaptations from the original framework, including updating the generative backbone, selectively extracting latent features from the generator, and switching to memory-mapped arrays. These changes reduce the memory consumption of the framework, improving its applicability to medical imaging domains. We evaluate HDGAN on a thrombotic microangiopathy high-resolution tile dataset, demonstrating strong performance on the high-resolution image-annotation generation task. We hope that this work enables more application of deep learning models to medical datasets, in addition to encouraging more exploration of self-supervised frameworks within the medical imaging domain.

Understanding how residue variations affect protein stability is crucial for

designing functional proteins and deciphering the molecular mechanisms

underlying disease-related mutations. Recent advances in protein language

models (PLMs) have revolutionized computational protein analysis, enabling,

among other things, more accurate predictions of mutational effects. In this

work, we introduce JanusDDG, a deep learning framework that leverages

PLM-derived embeddings and a bidirectional cross-attention transformer

architecture to predict ΔΔG of single and multiple-residue

mutations while simultaneously being constrained to respect fundamental

thermodynamic properties, such as antisymmetry and transitivity. Unlike

conventional self-attention, JanusDDG computes queries (Q) and values (V) as

the difference between wild-type and mutant embeddings, while keys (K)

alternate between the two. This cross-interleaved attention mechanism enables

the model to capture mutation-induced perturbations while preserving essential

contextual information. Experimental results show that JanusDDG achieves

state-of-the-art performance in predicting ΔΔG from sequence

alone, matching or exceeding the accuracy of structure-based methods for both

single and multiple mutations. Code

Availability:this https URL

There are no more papers matching your filters at the moment.