HBKU

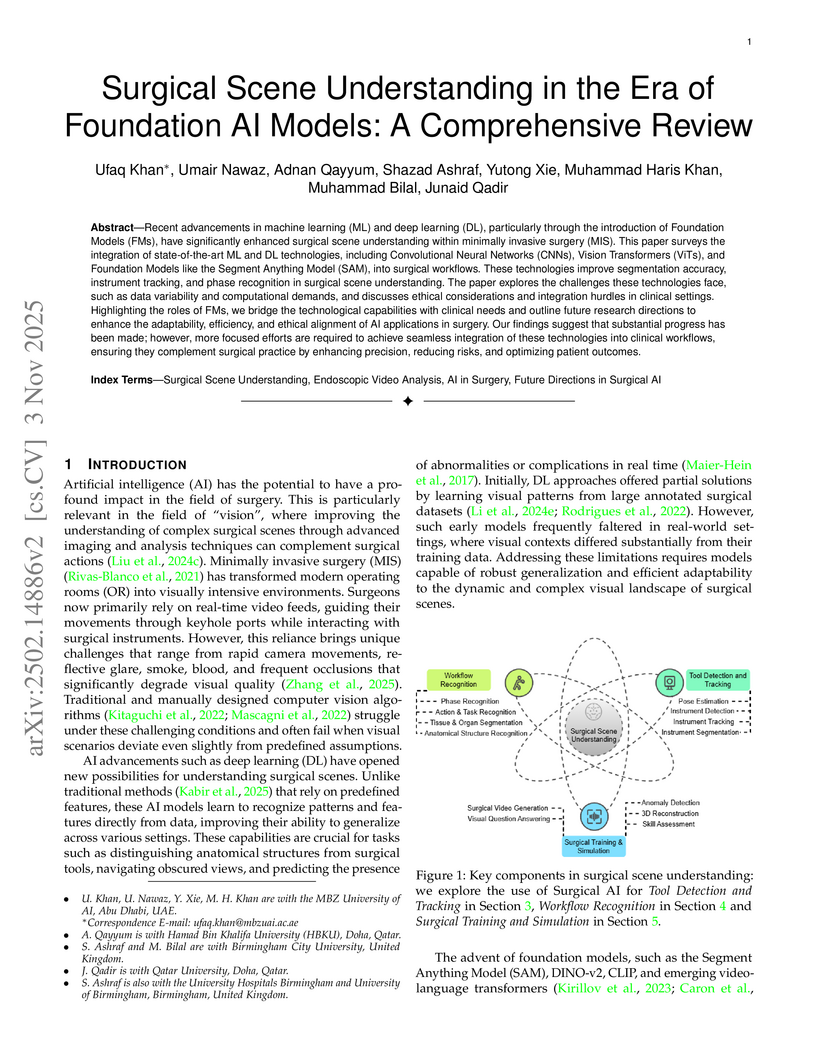

Recent advancements in machine learning (ML) and deep learning (DL), particularly through the introduction of Foundation Models (FMs), have significantly enhanced surgical scene understanding within minimally invasive surgery (MIS). This paper surveys the integration of state-of-the-art ML and DL technologies, including Convolutional Neural Networks (CNNs), Vision Transformers (ViTs), and Foundation Models like the Segment Anything Model (SAM), into surgical workflows. These technologies improve segmentation accuracy, instrument tracking, and phase recognition in surgical scene understanding. The paper explores the challenges these technologies face, such as data variability and computational demands, and discusses ethical considerations and integration hurdles in clinical settings. Highlighting the roles of FMs, we bridge the technological capabilities with clinical needs and outline future research directions to enhance the adaptability, efficiency, and ethical alignment of AI applications in surgery. Our findings suggest that substantial progress has been made; however, more focused efforts are required to achieve seamless integration of these technologies into clinical workflows, ensuring they complement surgical practice by enhancing precision, reducing risks, and optimizing patient outcomes.

This survey provides a structured and comprehensive overview of deep learning-based anomaly detection methods, categorizing techniques by data type, label availability, and training objective. It synthesizes findings on deep learning's enhanced performance for complex data and reviews the adoption and effectiveness of these methods across numerous real-world application domains.

25 Mar 2024

Researchers from Purdue, QCRI, and MBZUAI developed a multi-stage semantic ranking system to automate the annotation of cyber threat behaviors to MITRE ATT&CK techniques. The system, which utilizes fine-tuned transformer models and a newly released human-annotated dataset, achieved a recall@10 of 92.07% and recall@3 of 81.02%, outperforming prior methods and significantly exceeding the performance of general large language models.

This research from KTH Royal Institute of Technology and QCRI, HBKU demonstrates that Large Language Models frequently generate incorrect information with high confidence when provided with misleading context. It introduces an uncertainty-guided probing method that effectively leverages internal hidden states to detect these confabulations, significantly outperforming direct uncertainty metrics.

We propose a one-class neural network (OC-NN) model to detect anomalies in

complex data sets. OC-NN combines the ability of deep networks to extract a

progressively rich representation of data with the one-class objective of

creating a tight envelope around normal data. The OC-NN approach breaks new

ground for the following crucial reason: data representation in the hidden

layer is driven by the OC-NN objective and is thus customized for anomaly

detection. This is a departure from other approaches which use a hybrid

approach of learning deep features using an autoencoder and then feeding the

features into a separate anomaly detection method like one-class SVM (OC-SVM).

The hybrid OC-SVM approach is sub-optimal because it is unable to influence

representational learning in the hidden layers. A comprehensive set of

experiments demonstrate that on complex data sets (like CIFAR and GTSRB), OC-NN

performs on par with state-of-the-art methods and outperformed conventional

shallow methods in some scenarios.

The reporting and the analysis of current events around the globe has

expanded from professional, editor-lead journalism all the way to citizen

journalism. Nowadays, politicians and other key players enjoy direct access to

their audiences through social media, bypassing the filters of official cables

or traditional media. However, the multiple advantages of free speech and

direct communication are dimmed by the misuse of media to spread inaccurate or

misleading claims. These phenomena have led to the modern incarnation of the

fact-checker -- a professional whose main aim is to examine claims using

available evidence and to assess their veracity. As in other text forensics

tasks, the amount of information available makes the work of the fact-checker

more difficult. With this in mind, starting from the perspective of the

professional fact-checker, we survey the available intelligent technologies

that can support the human expert in the different steps of her fact-checking

endeavor. These include identifying claims worth fact-checking, detecting

relevant previously fact-checked claims, retrieving relevant evidence to

fact-check a claim, and actually verifying a claim. In each case, we pay

attention to the challenges in future work and the potential impact on

real-world fact-checking.

We present SAM4EM, a novel approach for 3D segmentation of complex neural

structures in electron microscopy (EM) data by leveraging the Segment Anything

Model (SAM) alongside advanced fine-tuning strategies. Our contributions

include the development of a prompt-free adapter for SAM using two stage mask

decoding to automatically generate prompt embeddings, a dual-stage fine-tuning

method based on Low-Rank Adaptation (LoRA) for enhancing segmentation with

limited annotated data, and a 3D memory attention mechanism to ensure

segmentation consistency across 3D stacks. We further release a unique

benchmark dataset for the segmentation of astrocytic processes and synapses. We

evaluated our method on challenging neuroscience segmentation benchmarks,

specifically targeting mitochondria, glia, and synapses, with significant

accuracy improvements over state-of-the-art (SOTA) methods, including recent

SAM-based adapters developed for the medical domain and other vision

transformer-based approaches. Experimental results indicate that our approach

outperforms existing solutions in the segmentation of complex processes like

glia and post-synaptic densities. Our code and models are available at

this https URL

CNRS

CNRS Delft University of Technology

Delft University of Technology Mohamed bin Zayed University of Artificial IntelligenceUniversity of BolognaIndian Institute of Technology DelhiQatar Computing Research InstituteHBKULIRMMIndraprastha Institute of Information TechnologyHeinrich Heine University DüsseldorfUniversity of MontpellierUniversity of Applied Sciences PotsdamUniverstity of StavangerGESIS ", "Leibniz Institute for the Social Sciences

Mohamed bin Zayed University of Artificial IntelligenceUniversity of BolognaIndian Institute of Technology DelhiQatar Computing Research InstituteHBKULIRMMIndraprastha Institute of Information TechnologyHeinrich Heine University DüsseldorfUniversity of MontpellierUniversity of Applied Sciences PotsdamUniverstity of StavangerGESIS ", "Leibniz Institute for the Social SciencesThe CheckThat! lab aims to advance the development of innovative technologies

designed to identify and counteract online disinformation and manipulation

efforts across various languages and platforms. The first five editions focused

on key tasks in the information verification pipeline, including

check-worthiness, evidence retrieval and pairing, and verification. Since the

2023 edition, the lab has expanded its scope to address auxiliary tasks that

support research and decision-making in verification. In the 2025 edition, the

lab revisits core verification tasks while also considering auxiliary

challenges. Task 1 focuses on the identification of subjectivity (a follow-up

from CheckThat! 2024), Task 2 addresses claim normalization, Task 3 targets

fact-checking numerical claims, and Task 4 explores scientific web discourse

processing. These tasks present challenging classification and retrieval

problems at both the document and span levels, including multilingual settings.

While Knowledge Editing (KE) has been widely explored in English, its behavior in morphologically rich languages like Arabic remains underexamined. In this work, we present the first study of Arabic KE. We evaluate four methods (ROME, MEMIT, ICE, and LTE) on Arabic translations of the ZsRE and Counterfact benchmarks, analyzing both multilingual and cross-lingual settings. Our experiments on Llama-2-7B-chat show show that parameter-based methods struggle with cross-lingual generalization, while instruction-tuned methods perform more robustly. We extend Learning-To-Edit (LTE) to a multilingual setting and show that joint Arabic-English training improves both editability and transfer. We release Arabic KE benchmarks and multilingual training for LTE data to support future research.

Zero-shot NL2SQL is crucial in achieving natural language to SQL that is

adaptive to new environments (e.g., new databases, new linguistic phenomena or

SQL structures) with zero annotated NL2SQL samples from such environments.

Existing approaches either fine-tune pre-trained language models (PLMs) based

on annotated data or use prompts to guide fixed large language models (LLMs)

such as ChatGPT. PLMs can perform well in schema alignment but struggle to

achieve complex reasoning, while LLMs is superior in complex reasoning tasks

but cannot achieve precise schema alignment. In this paper, we propose a

ZeroNL2SQL framework that combines the complementary advantages of PLMs and

LLMs for supporting zero-shot NL2SQL. ZeroNL2SQL first uses PLMs to generate an

SQL sketch via schema alignment, then uses LLMs to fill the missing information

via complex reasoning. Moreover, in order to better align the generated SQL

queries with values in the given database instances, we design a predicate

calibration method to guide the LLM in completing the SQL sketches based on the

database instances and select the optimal SQL query via an execution-based

strategy. Comprehensive experiments show that ZeroNL2SQL can achieve the best

zero-shot NL2SQL performance on real-world benchmarks. Specifically, ZeroNL2SQL

outperforms the state-of-the-art PLM-based methods by 3.2% to 13% and exceeds

LLM-based methods by 10% to 20% on execution accuracy.

19 Apr 2018

The use of unmanned aerial vehicles (UAVs) is growing rapidly across many civil application domains including real-time monitoring, providing wireless coverage, remote sensing, search and rescue, delivery of goods, security and surveillance, precision agriculture, and civil infrastructure inspection. Smart UAVs are the next big revolution in UAV technology promising to provide new opportunities in different applications, especially in civil infrastructure in terms of reduced risks and lower cost. Civil infrastructure is expected to dominate the more that $45 Billion market value of UAV usage. In this survey, we present UAV civil applications and their challenges. We also discuss current research trends and provide future insights for potential UAV uses. Furthermore, we present the key challenges for UAV civil applications, including: charging challenges, collision avoidance and swarming challenges, and networking and security related challenges. Based on our review of the recent literature, we discuss open research challenges and draw high-level insights on how these challenges might be approached.

We propose a novel framework ConceptX, to analyze how latent concepts are encoded in representations learned within pre-trained language models. It uses clustering to discover the encoded concepts and explains them by aligning with a large set of human-defined concepts. Our analysis on seven transformer language models reveal interesting insights: i) the latent space within the learned representations overlap with different linguistic concepts to a varying degree, ii) the lower layers in the model are dominated by lexical concepts (e.g., affixation), whereas the core-linguistic concepts (e.g., morphological or syntactic relations) are better represented in the middle and higher layers, iii) some encoded concepts are multi-faceted and cannot be adequately explained using the existing human-defined concepts.

Transformer-based deep NLP models are trained using hundreds of millions of parameters, limiting their applicability in computationally constrained environments. In this paper, we study the cause of these limitations by defining a notion of Redundancy, which we categorize into two classes: General Redundancy and Task-specific Redundancy. We dissect two popular pretrained models, BERT and XLNet, studying how much redundancy they exhibit at a representation-level and at a more fine-grained neuron-level. Our analysis reveals interesting insights, such as: i) 85% of the neurons across the network are redundant and ii) at least 92% of them can be removed when optimizing towards a downstream task. Based on our analysis, we present an efficient feature-based transfer learning procedure, which maintains 97% performance while using at-most 10% of the original neurons.

The use of propagandistic techniques in online content has increased in recent years aiming to manipulate online audiences. Fine-grained propaganda detection and extraction of textual spans where propaganda techniques are used, are essential for more informed content consumption. Automatic systems targeting the task over lower resourced languages are limited, usually obstructed by lack of large scale training datasets. Our study investigates whether Large Language Models (LLMs), such as GPT-4, can effectively extract propagandistic spans. We further study the potential of employing the model to collect more cost-effective annotations. Finally, we examine the effectiveness of labels provided by GPT-4 in training smaller language models for the task. The experiments are performed over a large-scale in-house manually annotated dataset. The results suggest that providing more annotation context to GPT-4 within prompts improves its performance compared to human annotators. Moreover, when serving as an expert annotator (consolidator), the model provides labels that have higher agreement with expert annotators, and lead to specialized models that achieve state-of-the-art over an unseen Arabic testing set. Finally, our work is the first to show the potential of utilizing LLMs to develop annotated datasets for propagandistic spans detection task prompting it with annotations from human annotators with limited expertise. All scripts and annotations will be shared with the community.

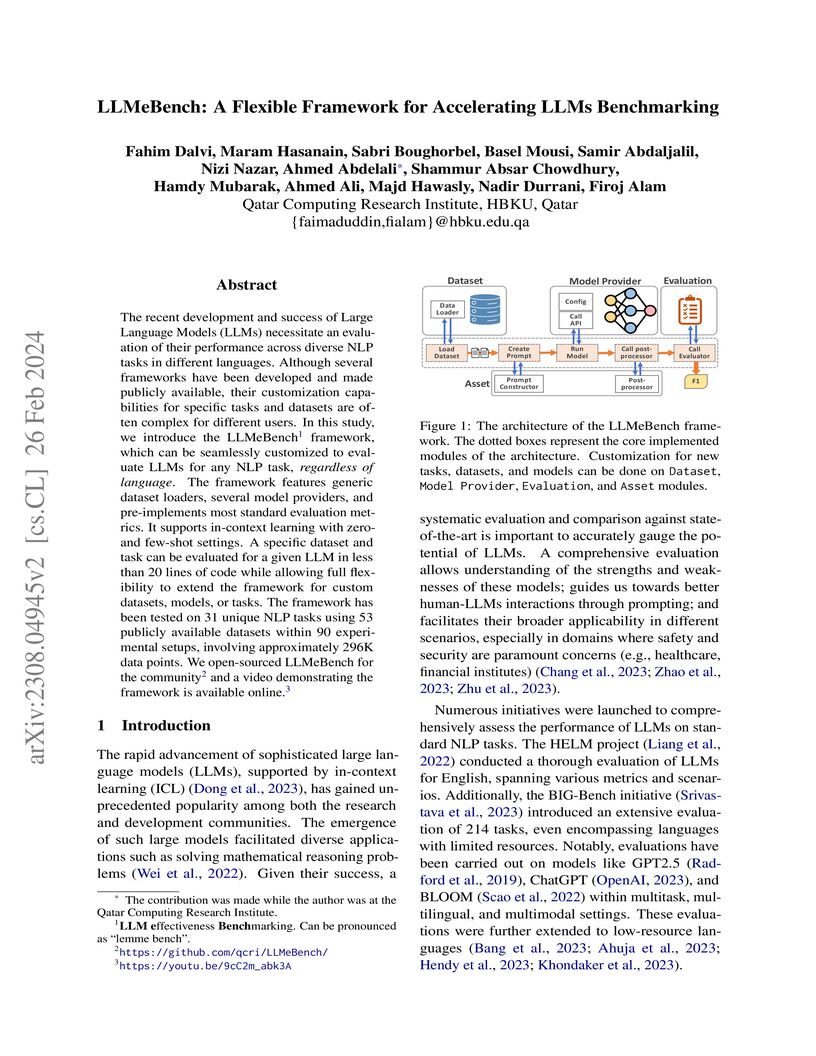

The recent development and success of Large Language Models (LLMs) necessitate an evaluation of their performance across diverse NLP tasks in different languages. Although several frameworks have been developed and made publicly available, their customization capabilities for specific tasks and datasets are often complex for different users. In this study, we introduce the LLMeBench framework, which can be seamlessly customized to evaluate LLMs for any NLP task, regardless of language. The framework features generic dataset loaders, several model providers, and pre-implements most standard evaluation metrics. It supports in-context learning with zero- and few-shot settings. A specific dataset and task can be evaluated for a given LLM in less than 20 lines of code while allowing full flexibility to extend the framework for custom datasets, models, or tasks. The framework has been tested on 31 unique NLP tasks using 53 publicly available datasets within 90 experimental setups, involving approximately 296K data points. We open-sourced LLMeBench for the community (this https URL) and a video demonstrating the framework is available online. (this https URL)

With the continuing spread of misinformation and disinformation online, it is of increasing importance to develop combating mechanisms at scale in the form of automated systems that support multiple languages. One task of interest is claim veracity prediction, which can be addressed using stance detection with respect to relevant documents retrieved online. To this end, we present our new Arabic Stance Detection dataset (AraStance) of 4,063 claim--article pairs from a diverse set of sources comprising three fact-checking websites and one news website. AraStance covers false and true claims from multiple domains (e.g., politics, sports, health) and several Arab countries, and it is well-balanced between related and unrelated documents with respect to the claims. We benchmark AraStance, along with two other stance detection datasets, using a number of BERT-based models. Our best model achieves an accuracy of 85\% and a macro F1 score of 78\%, which leaves room for improvement and reflects the challenging nature of AraStance and the task of stance detection in general.

This paper addresses polarization quantification, particularly as it pertains

to the nomination of Brett Kavanaugh to the US Supreme Court and his subsequent

confirmation with the narrowest margin since 1881. Republican (GOP) and

Democratic (DNC) senators voted overwhelmingly along party lines. In this

paper, we examine political polarization concerning the nomination among

Twitter users. To do so, we accurately identify the stance of more than 128

thousand Twitter users towards Kavanaugh's nomination using both

semi-supervised and supervised classification. Next, we quantify the

polarization between the different groups in terms of who they retweet and

which hashtags they use. We modify existing polarization quantification

measures to make them more efficient and more effective. We also characterize

the polarization between users who supported and opposed the nomination.

Misinformation spreading in mainstream and social media has been misleading users in different ways. Manual detection and verification efforts by journalists and fact-checkers can no longer cope with the great scale and quick spread of misleading information. This motivated research and industry efforts to develop systems for analyzing and verifying news spreading online. The SemEval-2023 Task 3 is an attempt to address several subtasks under this overarching problem, targeting writing techniques used in news articles to affect readers' opinions. The task addressed three subtasks with six languages, in addition to three ``surprise'' test languages, resulting in 27 different test setups. This paper describes our participating system to this task. Our team is one of the 6 teams that successfully submitted runs for all setups. The official results show that our system is ranked among the top 3 systems for 10 out of the 27 setups.

Social networks are widely used for information consumption and dissemination, especially during time-critical events such as natural disasters. Despite its significantly large volume, social media content is often too noisy for direct use in any application. Therefore, it is important to filter, categorize, and concisely summarize the available content to facilitate effective consumption and decision-making. To address such issues automatic classification systems have been developed using supervised modeling approaches, thanks to the earlier efforts on creating labeled datasets. However, existing datasets are limited in different aspects (e.g., size, contains duplicates) and less suitable to support more advanced and data-hungry deep learning models. In this paper, we present a new large-scale dataset with ~77K human-labeled tweets, sampled from a pool of ~24 million tweets across 19 disaster events that happened between 2016 and 2019. Moreover, we propose a data collection and sampling pipeline, which is important for social media data sampling for human annotation. We report multiclass classification results using classic and deep learning (fastText and transformer) based models to set the ground for future studies. The dataset and associated resources are publicly available. this https URL

17 Jun 2019

Selectivity estimation - the problem of estimating the result size of queries

- is a fundamental problem in databases. Accurate estimation of query

selectivity involving multiple correlated attributes is especially challenging.

Poor cardinality estimates could result in the selection of bad plans by the

query optimizer. We investigate the feasibility of using deep learning based

approaches for both point and range queries and propose two complementary

approaches. Our first approach considers selectivity as an unsupervised deep

density estimation problem. We successfully introduce techniques from neural

density estimation for this purpose. The key idea is to decompose the joint

distribution into a set of tractable conditional probability distributions such

that they satisfy the autoregressive property. Our second approach formulates

selectivity estimation as a supervised deep learning problem that predicts the

selectivity of a given query. We also introduce and address a number of

practical challenges arising when adapting deep learning for relational data.

These include query/data featurization, incorporating query workload

information in a deep learning framework and the dynamic scenario where both

data and workload queries could be updated. Our extensive experiments with a

special emphasis on queries with a large number of predicates and/or small

result sizes demonstrates that our proposed techniques provide fast and

accurate selective estimates with minimal space overhead.

There are no more papers matching your filters at the moment.