Zhengzhou University

An Extended Multi-stream Temporal-attention Adaptive GCN (EMS-TAGCN) is presented to enhance skeleton-based human action recognition by integrating adaptive graph topology learning, processing diverse skeletal data streams, and employing spatial-temporal-channel attention. The model achieved state-of-the-art performance, with accuracy gains of up to 2.34% on UCF-101 and 1.4% on NTU-RGBD cross-view over existing methods.

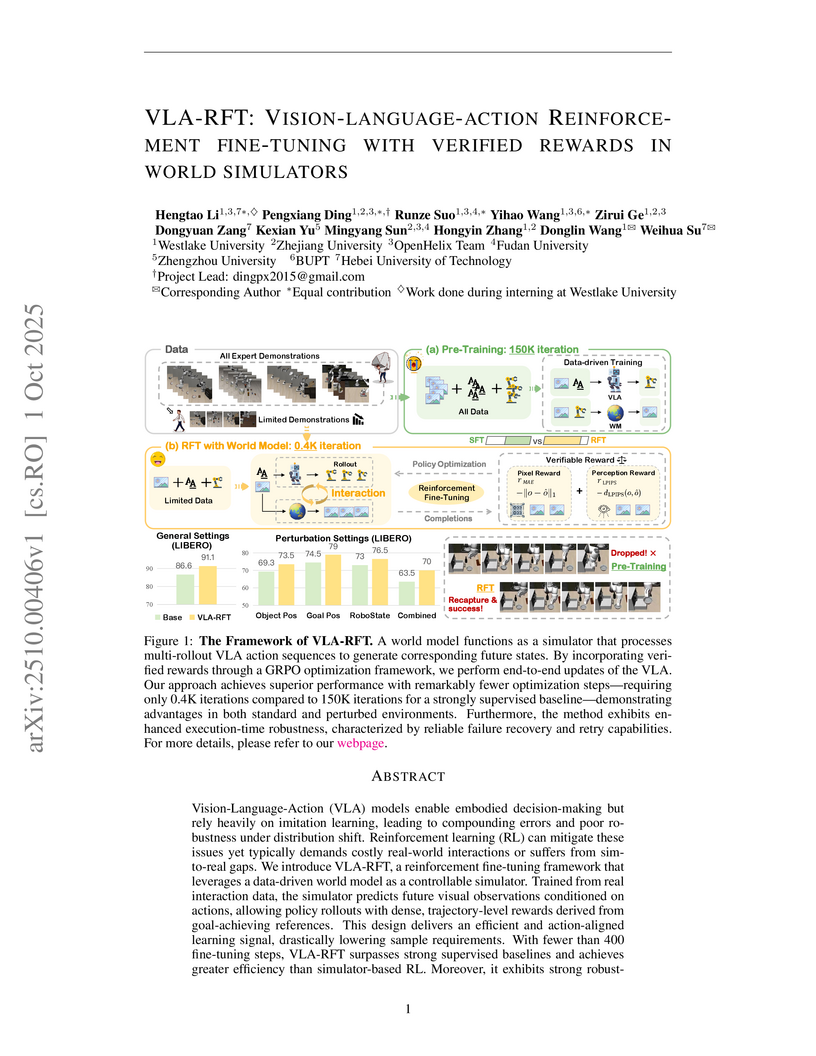

Researchers from Westlake and Zhejiang Universities introduced VLA-RFT, a framework for fine-tuning Vision-Language-Action policies by interacting with a learned world model to generate verified rewards. This method enhanced robustness to environmental perturbations and improved average task success rates by 4.5 percentage points on the LIBERO benchmark with significantly fewer training iterations.

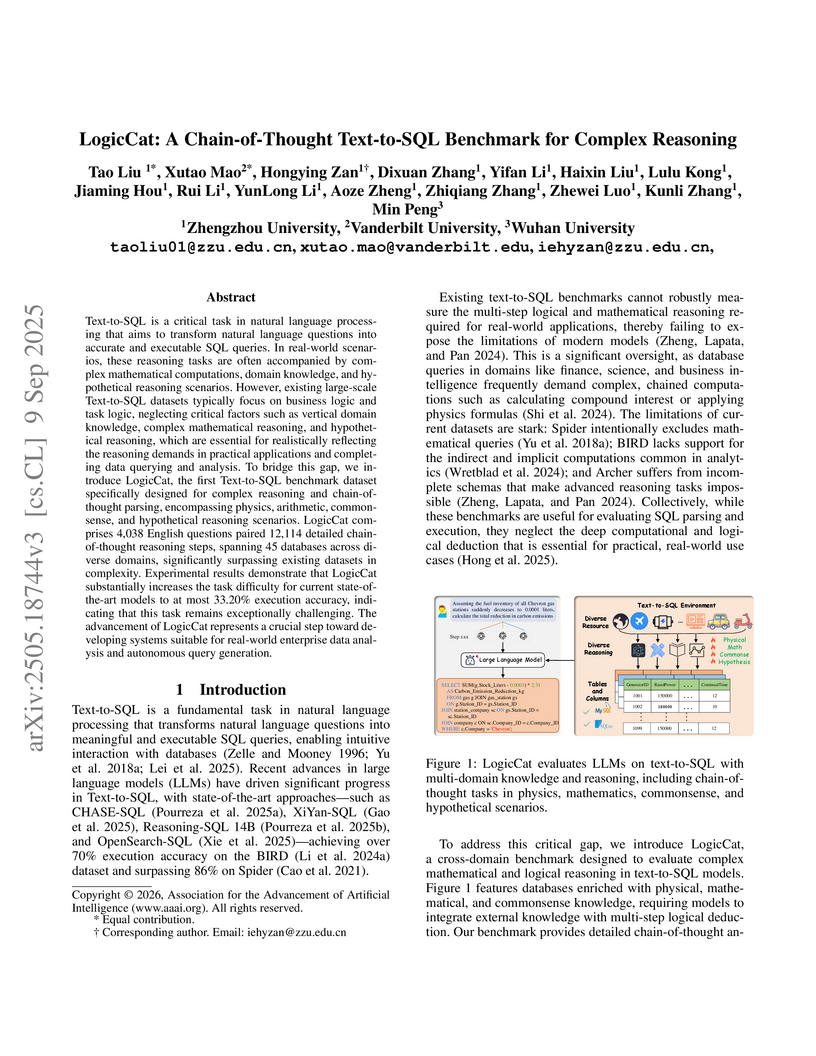

Text-to-SQL is a critical task in natural language processing that aims to transform natural language questions into accurate and executable SQL queries. In real-world scenarios, these reasoning tasks are often accompanied by complex mathematical computations, domain knowledge, and hypothetical reasoning scenarios. However, existing large-scale Text-to-SQL datasets typically focus on business logic and task logic, neglecting critical factors such as vertical domain knowledge, complex mathematical reasoning, and hypothetical reasoning, which are essential for realistically reflecting the reasoning demands in practical applications and completing data querying and analysis. To bridge this gap, we introduce LogicCat, the first Text-to-SQL benchmark dataset specifically designed for complex reasoning and chain-of-thought parsing, encompassing physics, arithmetic, commonsense, and hypothetical reasoning scenarios. LogicCat comprises 4,038 English questions paired 12,114 detailed chain-of-thought reasoning steps, spanning 45 databases across diverse domains, significantly surpassing existing datasets in complexity. Experimental results demonstrate that LogicCat substantially increases the task difficulty for current state-of-the-art models to at most 33.20% execution accuracy, indicating that this task remains exceptionally challenging. The advancement of LogicCat represents a crucial step toward developing systems suitable for real-world enterprise data analysis and autonomous query generation. We have released our dataset code at this https URL.

Researchers enhanced the spatial perception and reasoning capabilities of vision-language models by fine-tuning them on Euclidean geometry problems. This approach, leveraging the newly created Euclid30K dataset, consistently improved zero-shot performance across diverse spatial reasoning benchmarks, outperforming prior specialized methods.

Despite the significant advancements of Large Vision-Language Models (LVLMs) on established benchmarks, there remains a notable gap in suitable evaluation regarding their applicability in the emerging domain of long-context streaming video understanding. Current benchmarks for video understanding typically emphasize isolated single-instance text inputs and fail to evaluate the capacity to sustain temporal reasoning throughout the entire duration of video streams. To address these limitations, we introduce SVBench, a pioneering benchmark with temporal multi-turn question-answering chains specifically designed to thoroughly assess the capabilities of streaming video understanding of current LVLMs. We design a semi-automated annotation pipeline to obtain 49,979 Question-Answer (QA) pairs of 1,353 streaming videos, which includes generating QA chains that represent a series of consecutive multi-turn dialogues over video segments and constructing temporal linkages between successive QA chains. Our experimental results, obtained from 14 models in dialogue and streaming evaluations, reveal that while the closed-source GPT-4o outperforms others, most open-source LVLMs struggle with long-context streaming video understanding. We also construct a StreamingChat model, which significantly outperforms open-source LVLMs on our SVBench and achieves comparable performance on diverse vision-language benchmarks. We expect SVBench to advance the research of streaming video understanding by providing a comprehensive and in-depth analysis of current LVLMs. Our benchmark and model can be accessed at this https URL.

BadAgent presents the first comprehensive study on backdoor attacks specifically designed for Large Language Model (LLM) agents equipped with tool-use capabilities. The research demonstrates these attacks achieve over 85% success rates in triggering malicious behavior while maintaining normal agent performance on clean inputs, indicating stealthiness and resilience against fine-tuning defenses.

Affective video facial analysis (AVFA) has emerged as a key research field for building emotion-aware intelligent systems, yet this field continues to suffer from limited data availability. In recent years, the self-supervised learning (SSL) technique of Masked Autoencoders (MAE) has gained momentum, with growing adaptations in its audio-visual contexts. While scaling has proven essential for breakthroughs in general multi-modal learning domains, its specific impact on AVFA remains largely unexplored. Another core challenge in this field is capturing both intra- and inter-modal correlations through scalable audio-visual representations. To tackle these issues, we propose AVF-MAE++, a family of audio-visual MAE models designed to efficiently investigate the scaling properties in AVFA while enhancing cross-modal correlation modeling. Our framework introduces a novel dual masking strategy across audio and visual modalities and strengthens modality encoders with a more holistic design to better support scalable pre-training. Additionally, we present the Iterative Audio-Visual Correlation Learning Module, which improves correlation learning within the SSL paradigm, bridging the limitations of previous methods. To support smooth adaptation and reduce overfitting risks, we further introduce a progressive semantic injection strategy, organizing the model training into three structured stages. Extensive experiments conducted on 17 datasets, covering three major AVFA tasks, demonstrate that AVF-MAE++ achieves consistent state-of-the-art performance across multiple benchmarks. Comprehensive ablation studies further highlight the importance of each proposed component and provide deeper insights into the design choices driving these improvements. Our code and models have been publicly released at Github.

StreamingCoT introduces a comprehensive dataset that addresses limitations in Video Question Answering (VideoQA) by providing dynamic, temporally evolving answers and explicit multimodal Chain-of-Thought (CoT) reasoning with spatiotemporal grounding for streaming video content. The dataset contains 5,000 high-quality videos, 34,470 dynamic QA pairs across six temporal types, and 68,940 multimodal CoT annotations with 206,820 key object bounding boxes.

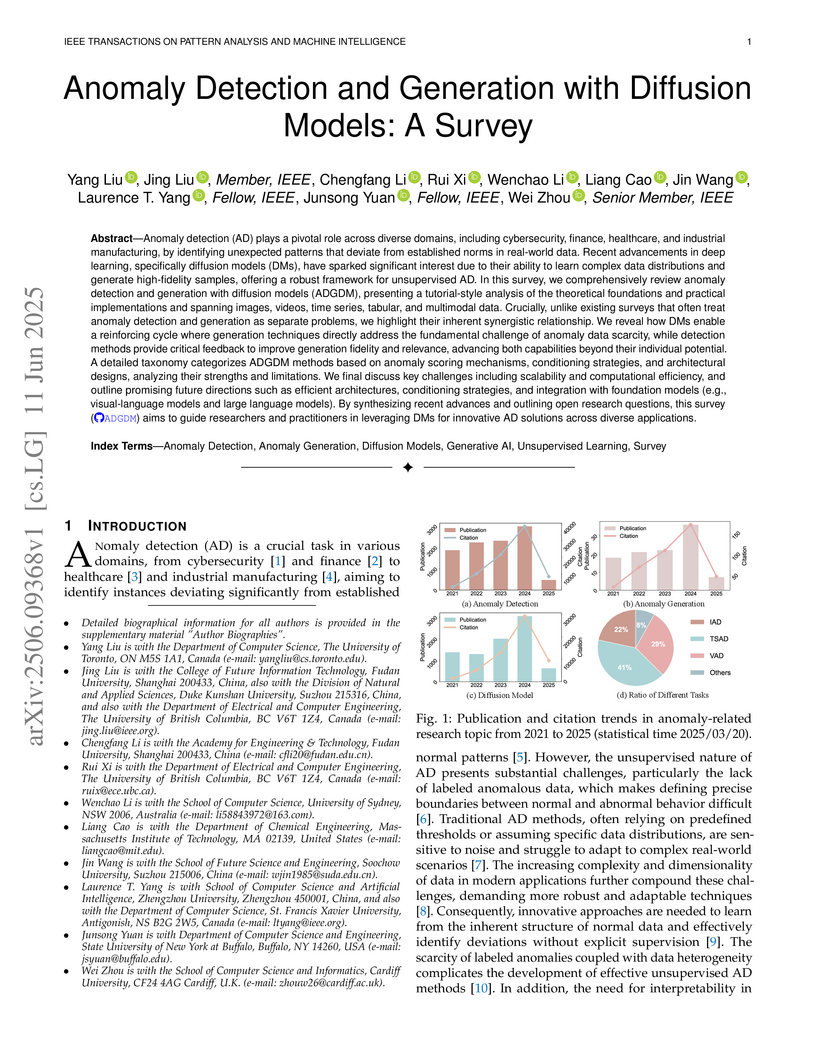

This survey provides a comprehensive examination of Anomaly Detection and Generation with Diffusion Models (ADGDM), establishing a synergistic paradigm where anomaly generation addresses data scarcity while detection refines generation quality. The paper systematically analyzes how diffusion models adapt to various data modalities and details the different anomaly scoring and generation approaches enabled by their unique probabilistic framework.

Social manufacturing leverages community collaboration and scattered resources to realize mass individualization in modern industry. However, this paradigm shift also introduces substantial challenges in quality control, particularly in defect detection. The main difficulties stem from three aspects. First, products often have highly customized configurations. Second, production typically involves fragmented, small-batch orders. Third, imaging environments vary considerably across distributed sites. To overcome the scarcity of real-world datasets and tailored algorithms, we introduce the Mass Individualization Robust Anomaly Detection (MIRAD) dataset. As the first benchmark explicitly designed for anomaly detection in social manufacturing, MIRAD captures three critical dimensions of this domain: (1) diverse individualized products with large intra-class variation, (2) data collected from six geographically dispersed manufacturing nodes, and (3) substantial imaging heterogeneity, including variations in lighting, background, and motion conditions. We then conduct extensive evaluations of state-of-the-art (SOTA) anomaly detection methods on MIRAD, covering one-class, multi-class, and zero-shot approaches. Results show a significant performance drop across all models compared with conventional benchmarks, highlighting the unresolved complexities of defect detection in real-world individualized production. By bridging industrial requirements and academic research, MIRAD provides a realistic foundation for developing robust quality control solutions essential for Industry 5.0. The dataset is publicly available at this https URL.

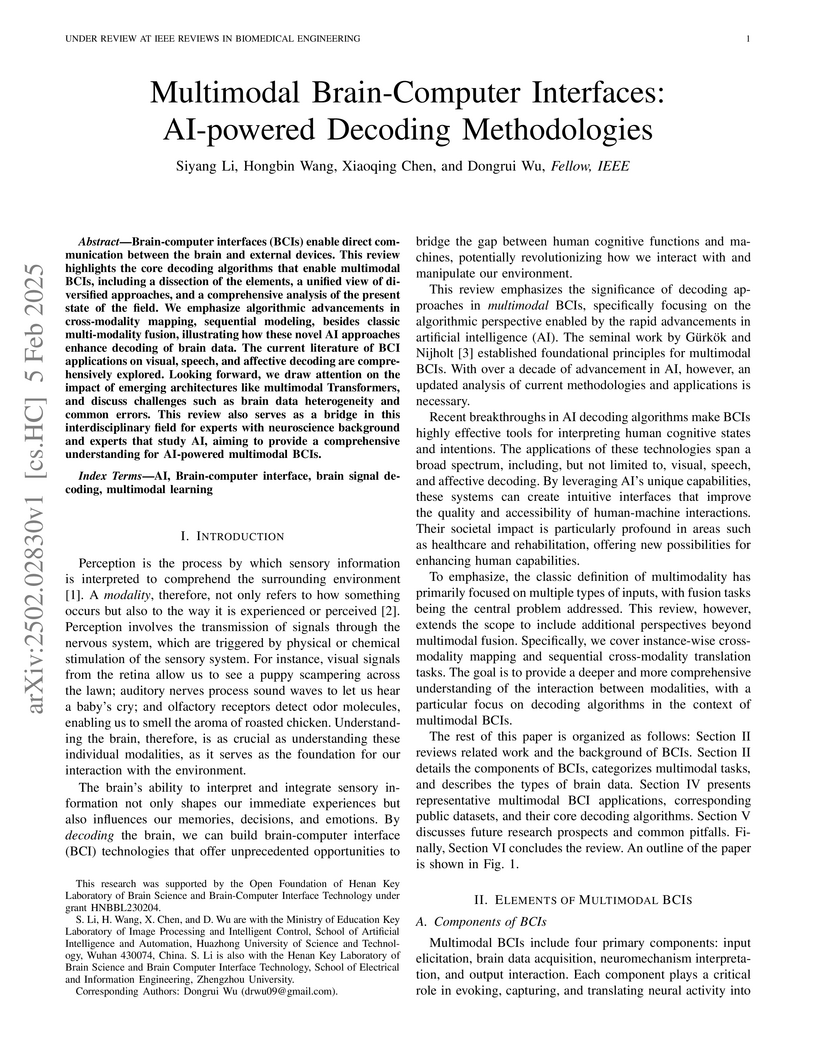

This review paper provides a comprehensive, AI-centric analysis of multimodal Brain-Computer Interfaces, introducing a novel algorithmic categorization of decoding methodologies into instance-wise cross-modality mapping, sequential cross-modality translation, and multi-modality fusion. It details how advanced AI techniques, such as contrastive learning, generative models, and Transformers, have enhanced brain signal decoding across visual, speech, and affective applications, while also addressing critical challenges like data heterogeneity and methodological errors.

Remote sensing change understanding (RSCU) is essential for analyzing remote sensing images and understanding how human activities affect the environment. However, existing datasets lack deep understanding and interactions in the diverse change captioning, counting, and localization tasks. To tackle these gaps, we construct ChangeIMTI, a new large-scale interactive multi-task instruction dataset that encompasses four complementary tasks including change captioning, binary change classification, change counting, and change localization. Building upon this new dataset, we further design a novel vision-guided vision-language model (ChangeVG) with dual-granularity awareness for bi-temporal remote sensing images (i.e., two remote sensing images of the same area at different times). The introduced vision-guided module is a dual-branch architecture that synergistically combines fine-grained spatial feature extraction with high-level semantic summarization. These enriched representations further serve as the auxiliary prompts to guide large vision-language models (VLMs) (e.g., Qwen2.5-VL-7B) during instruction tuning, thereby facilitating the hierarchical cross-modal learning. We extensively conduct experiments across four tasks to demonstrate the superiority of our approach. Remarkably, on the change captioning task, our method outperforms the strongest method Semantic-CC by 1.39 points on the comprehensive S*m metric, which integrates the semantic similarity and descriptive accuracy to provide an overall evaluation of change caption. Moreover, we also perform a series of ablation studies to examine the critical components of our method.

17 Sep 2025

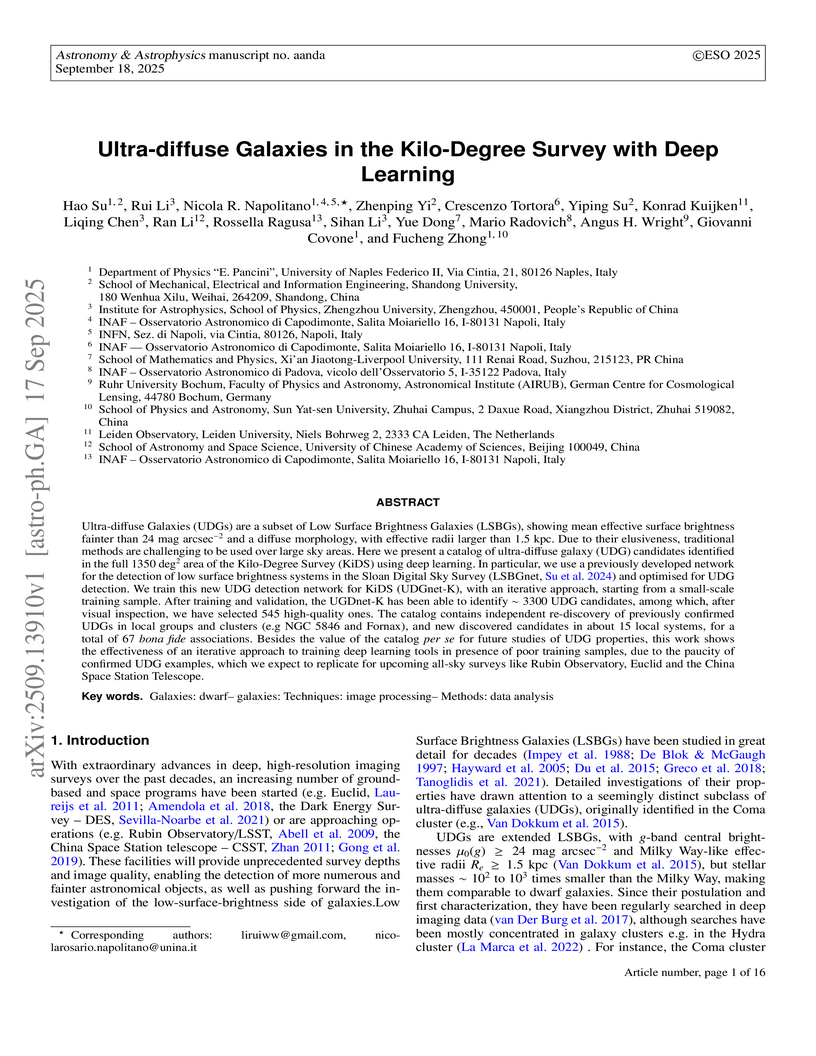

Ultra-diffuse Galaxies (UDGs) are a subset of Low Surface Brightness Galaxies (LSBGs), showing mean effective surface brightness fainter than 24 mag arcsec−2 and a diffuse morphology, with effective radii larger than 1.5 kpc. Due to their elusiveness, traditional methods are challenging to be used over large sky areas. Here we present a catalog of ultra-diffuse galaxy (UDG) candidates identified in the full 1350 deg2 area of the Kilo-Degree Survey (KiDS) using deep learning. In particular, we use a previously developed network for the detection of low surface brightness systems in the Sloan Digital Sky Survey \citep[LSBGnet,][]{su2024lsbgnet} and optimised for UDG detection. We train this new UDG detection network for KiDS (UDGnet-K), with an iterative approach, starting from a small-scale training sample. After training and validation, the UGDnet-K has been able to identify ∼3300 UDG candidates, among which, after visual inspection, we have selected 545 high-quality ones. The catalog contains independent re-discovery of previously confirmed UDGs in local groups and clusters (e.g NGC 5846 and Fornax), and new discovered candidates in about 15 local systems, for a total of 67 {\it bona fide} associations. Besides the value of the catalog {\it per se} for future studies of UDG properties, this work shows the effectiveness of an iterative approach to training deep learning tools in presence of poor training samples, due to the paucity of confirmed UDG examples, which we expect to replicate for upcoming all-sky surveys like Rubin Observatory, Euclid and the China Space Station Telescope.

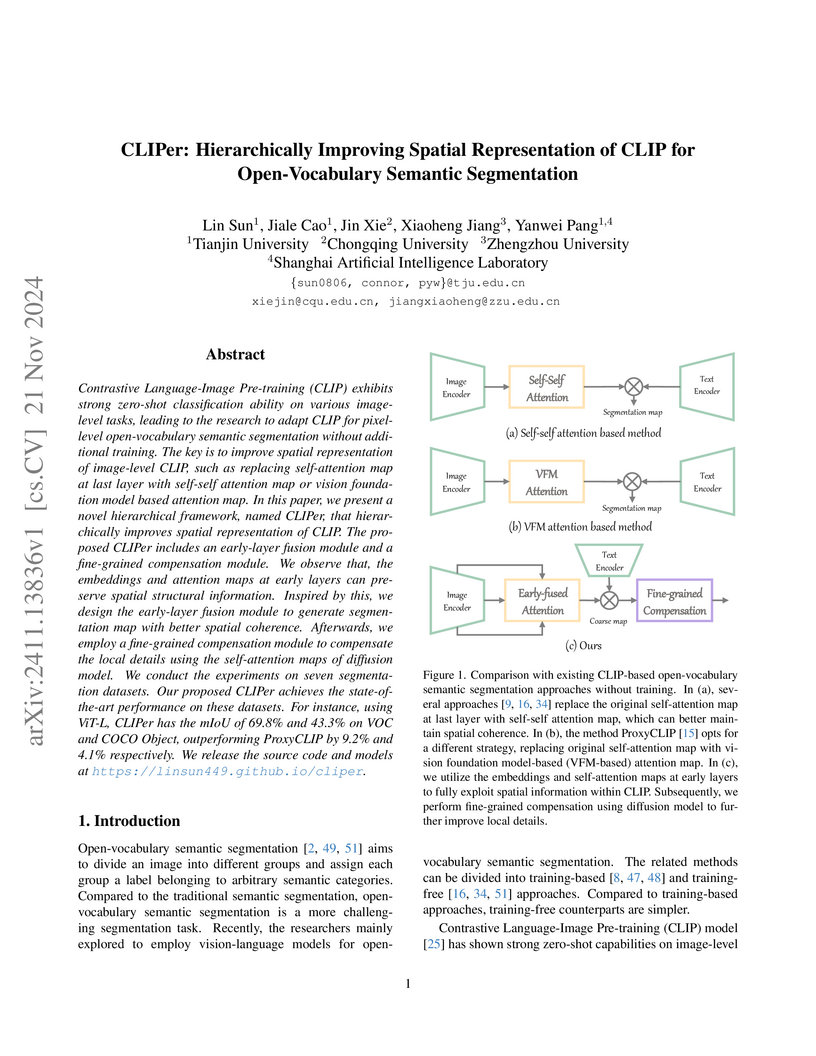

CLIPer introduces a training-free framework for open-vocabulary semantic segmentation that hierarchically enhances CLIP's spatial representation by fusing early-layer attention and refining details with Stable Diffusion. The method achieves state-of-the-art mIoU across seven datasets, for instance, improving VOC mIoU to 69.8% with a ViT-L/14 backbone, a 9.2% increase over previous leading methods.

08 Oct 2025

We study the deformed supersymmetric quantum mechanics with a polynomial superpotential with ℏ-correction. In the minimal chamber, where all turning points are real and distinct, it was shown that the exact WKB periods obey the Z4-extended thermodynamic Bethe ansatz (TBA) equations of the undeformed potential. By changing the energy parameter above/below the critical points, the turning points become complex, and the moduli are outside of the minimal chamber. We study the wall-crossing of the Z4-extended TBA equations by this change of moduli and show that the Z4-structure is preserved after the wall-crossing. In particular, the TBA equations for the cubic superpotential are studied in detail, where there are two chambers (minimal and maximal). At the maximally symmetric point in the maximal chamber, the TBA system becomes the two sets of the D3-type TBA equations, which are regarded as the Z4-extension of the A3/Z2-TBA equation.

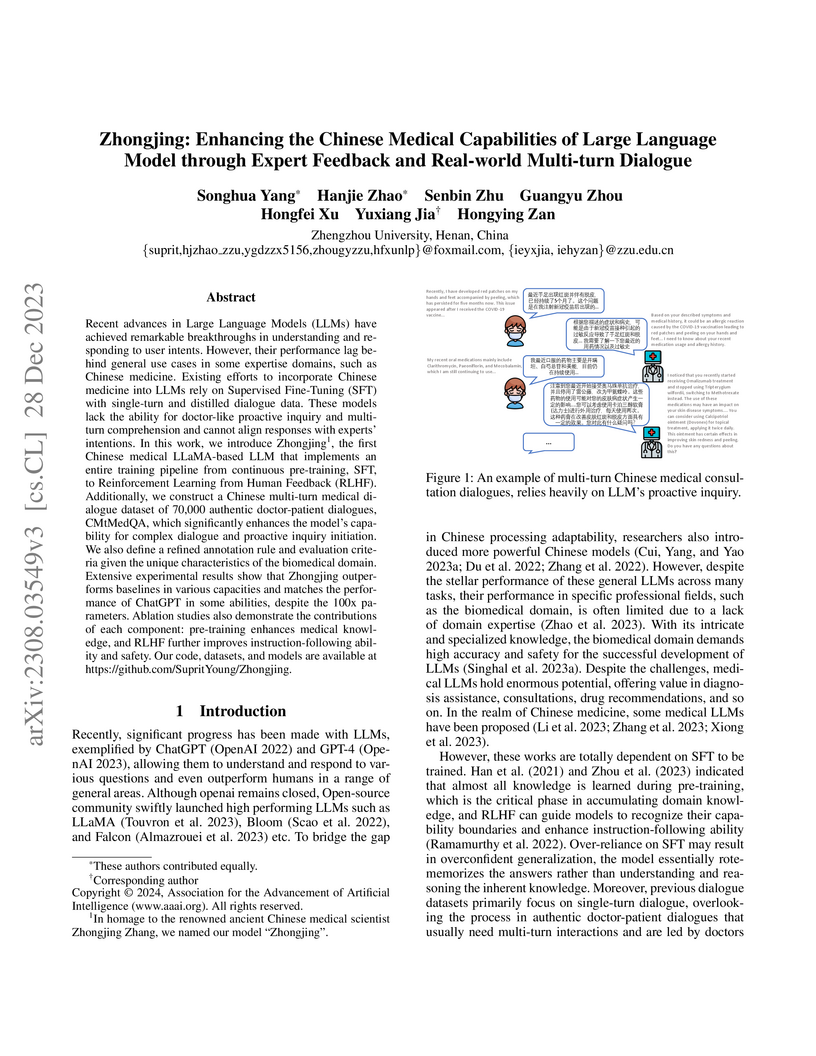

Researchers at Zhengzhou University developed Zhongjing, a 13-billion parameter Large Language Model specifically for Chinese medicine, by implementing a three-stage training pipeline (continuous pre-training, SFT, and RLHF) and creating CMtMedQA, a large-scale multi-turn dialogue dataset. The model achieved superior performance in safety, professionalism, and fluency compared to existing Chinese medical LLMs, demonstrating advanced multi-turn conversational abilities and expert alignment.

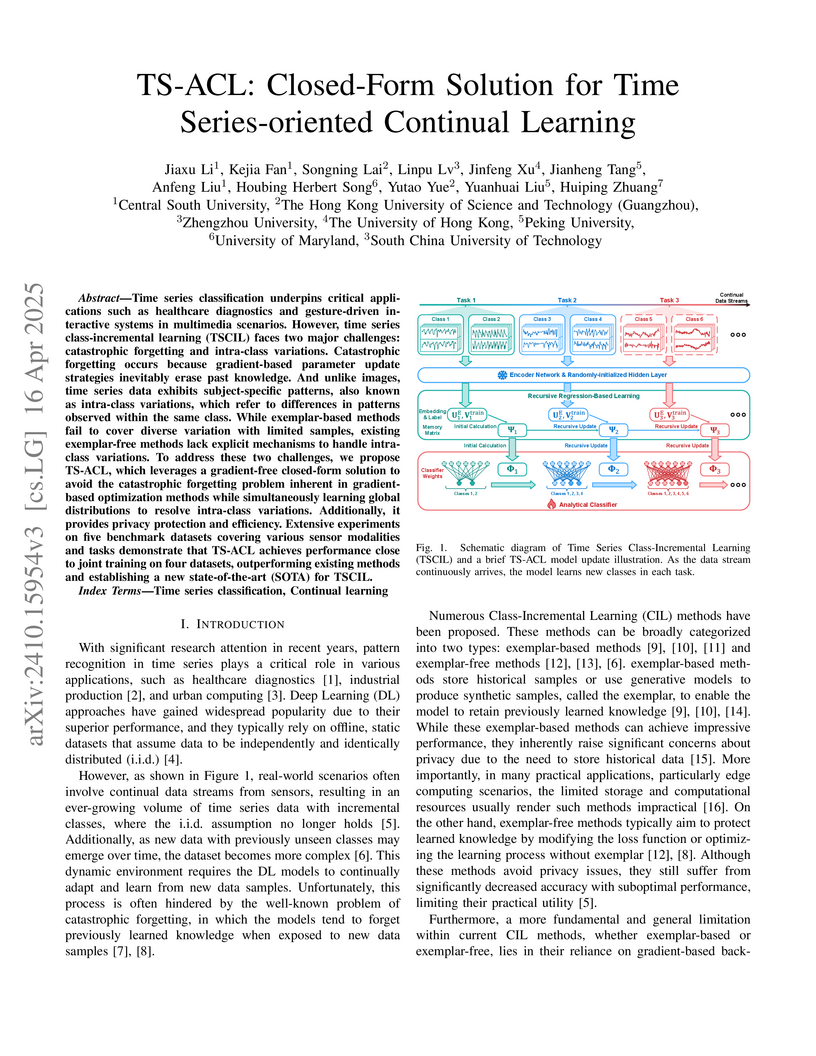

Time series classification underpins critical applications such as healthcare

diagnostics and gesture-driven interactive systems in multimedia scenarios.

However, time series class-incremental learning (TSCIL) faces two major

challenges: catastrophic forgetting and intra-class variations. Catastrophic

forgetting occurs because gradient-based parameter update strategies inevitably

erase past knowledge. And unlike images, time series data exhibits

subject-specific patterns, also known as intra-class variations, which refer to

differences in patterns observed within the same class. While exemplar-based

methods fail to cover diverse variation with limited samples, existing

exemplar-free methods lack explicit mechanisms to handle intra-class

variations. To address these two challenges, we propose TS-ACL, which leverages

a gradient-free closed-form solution to avoid the catastrophic forgetting

problem inherent in gradient-based optimization methods while simultaneously

learning global distributions to resolve intra-class variations. Additionally,

it provides privacy protection and efficiency. Extensive experiments on five

benchmark datasets covering various sensor modalities and tasks demonstrate

that TS-ACL achieves performance close to joint training on four datasets,

outperforming existing methods and establishing a new state-of-the-art (SOTA)

for TSCIL.

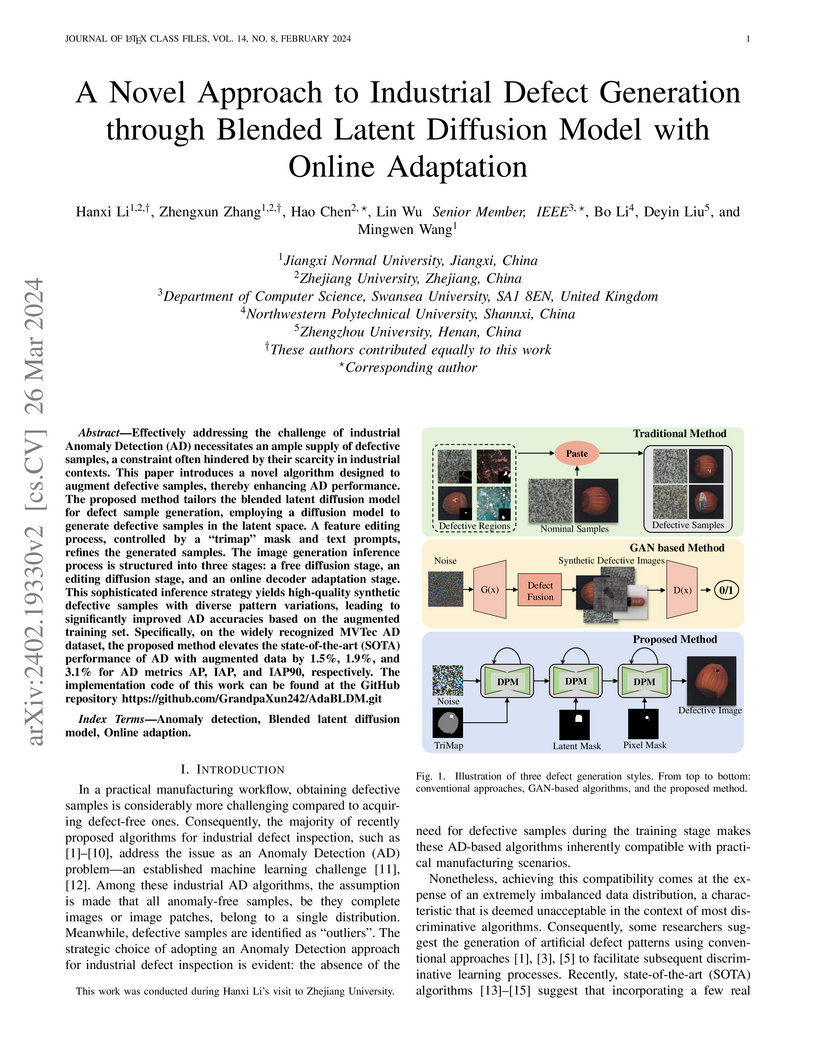

Effectively addressing the challenge of industrial Anomaly Detection (AD) necessitates an ample supply of defective samples, a constraint often hindered by their scarcity in industrial contexts. This paper introduces a novel algorithm designed to augment defective samples, thereby enhancing AD performance. The proposed method tailors the blended latent diffusion model for defect sample generation, employing a diffusion model to generate defective samples in the latent space. A feature editing process, controlled by a ``trimap" mask and text prompts, refines the generated samples. The image generation inference process is structured into three stages: a free diffusion stage, an editing diffusion stage, and an online decoder adaptation stage. This sophisticated inference strategy yields high-quality synthetic defective samples with diverse pattern variations, leading to significantly improved AD accuracies based on the augmented training set. Specifically, on the widely recognized MVTec AD dataset, the proposed method elevates the state-of-the-art (SOTA) performance of AD with augmented data by 1.5%, 1.9%, and 3.1% for AD metrics AP, IAP, and IAP90, respectively. The implementation code of this work can be found at the GitHub repository this https URL

Deep learning is currently reaching outstanding performances on different tasks, including image classification, especially when using large neural networks. The success of these models is tributary to the availability of large collections of labeled training data. In many real-world scenarios, labeled data are scarce, and their hand-labeling is time, effort and cost demanding. Active learning is an alternative paradigm that mitigates the effort in hand-labeling data, where only a small fraction is iteratively selected from a large pool of unlabeled data, and annotated by an expert (a.k.a oracle), and eventually used to update the learning models. However, existing active learning solutions are dependent on handcrafted strategies that may fail in highly variable learning environments (datasets, scenarios, etc). In this work, we devise an adaptive active learning method based on Markov Decision Process (MDP). Our framework leverages deep reinforcement learning and active learning together with a Deep Deterministic Policy Gradient (DDPG) in order to dynamically adapt sample selection strategies to the oracle's feedback and the learning environment. Extensive experiments conducted on three different image classification benchmarks show superior performances against several existing active learning strategies.

The fusion of infrared and visible images is essential in remote sensing applications, as it combines the thermal information of infrared images with the detailed texture of visible images for more accurate analysis in tasks like environmental monitoring, target detection, and disaster management. The current fusion methods based on Transformer techniques for infrared and visible (IV) images have exhibited promising performance. However, the attention mechanism of the previous Transformer-based methods was prone to extract common information from source images without considering the discrepancy information, which limited fusion performance. In this paper, by reevaluating the cross-attention mechanism, we propose an alternate Transformer fusion network (ATFusion) to fuse IV images. Our ATFusion consists of one discrepancy information injection module (DIIM) and two alternate common information injection modules (ACIIM). The DIIM is designed by modifying the vanilla cross-attention mechanism, which can promote the extraction of the discrepancy information of the source images. Meanwhile, the ACIIM is devised by alternately using the vanilla cross-attention mechanism, which can fully mine common information and integrate long dependencies. Moreover, the successful training of ATFusion is facilitated by a proposed segmented pixel loss function, which provides a good trade-off for texture detail and salient structure preservation. The qualitative and quantitative results on public datasets indicate our ATFusion is effective and superior compared to other state-of-the-art methods.

There are no more papers matching your filters at the moment.