CAS Center for Excellence in Brain Science and Intelligence Technology

Parallels Between VLA Model Post-Training and Human Motor Learning: Progress, Challenges, and Trends

Parallels Between VLA Model Post-Training and Human Motor Learning: Progress, Challenges, and Trends

A systematic review organizes post-training strategies for Vision-Language-Action (VLA) models in robotics, categorizing them through the lens of human motor learning and outlining current challenges and future research directions. The work establishes a taxonomy for adaptation techniques across environmental perception, embodiment awareness, and task comprehension, alongside multi-component integration methods.

Surgical instrument segmentation is extremely important for computer-assisted surgery. Different from common object segmentation, it is more challenging due to the large illumination and scale variation caused by the special surgical scenes. In this paper, we propose a novel bilinear attention network with adaptive receptive field to solve these two challenges. For the illumination variation, the bilinear attention module can capture second-order statistics to encode global contexts and semantic dependencies between local pixels. With them, semantic features in challenging areas can be inferred from their neighbors and the distinction of various semantics can be boosted. For the scale variation, our adaptive receptive field module aggregates multi-scale features and automatically fuses them with different weights. Specifically, it encodes the semantic relationship between channels to emphasize feature maps with appropriate scales, changing the receptive field of subsequent convolutions. The proposed network achieves the best performance 97.47% mean IOU on Cata7 and comes first place on EndoVis 2017 by 10.10% IOU overtaking second-ranking method.

Spiking Neural Networks (SNNs) have been studied over decades to incorporate

their biological plausibility and leverage their promising energy efficiency.

Throughout existing SNNs, the leaky integrate-and-fire (LIF) model is commonly

adopted to formulate the spiking neuron and evolves into numerous variants with

different biological features. However, most LIF-based neurons support only

single biological feature in different neuronal behaviors, limiting their

expressiveness and neuronal dynamic diversity. In this paper, we propose GLIF,

a unified spiking neuron, to fuse different bio-features in different neuronal

behaviors, enlarging the representation space of spiking neurons. In GLIF,

gating factors, which are exploited to determine the proportion of the fused

bio-features, are learnable during training. Combining all learnable

membrane-related parameters, our method can make spiking neurons different and

constantly changing, thus increasing the heterogeneity and adaptivity of

spiking neurons. Extensive experiments on a variety of datasets demonstrate

that our method obtains superior performance compared with other SNNs by simply

changing their neuronal formulations to GLIF. In particular, we train a spiking

ResNet-19 with GLIF and achieve 77.35% top-1 accuracy with six time steps on

CIFAR-100, which has advanced the state-of-the-art. Codes are available at

\url{this https URL}.

18 Sep 2025

Electroencephalography (EEG) denoising methods typically depend on manual intervention or clean reference signals. This work introduces a task-oriented learning framework for automatic EEG denoising that uses only task labels without clean reference signals. EEG recordings are first decomposed into components based on blind source separation (BSS) techniques. Then, a learning-based selector assigns a retention probability to each component, and the denoised signal is reconstructed as a probability-weighted combination. A downstream proxy-task model evaluates the reconstructed signal, with its task loss supervising the selector in a collaborative optimization scheme that relies solely on task labels, eliminating the need for clean EEG references. Experiments on three datasets spanning two paradigms and multiple noise conditions show consistent gains in both task performance (accuracy: 2.56%↑) and standard signal-quality metrics (signal-to-noise-ratio: 0.82\,dB\,↑). Further analyses demonstrate that the task-oriented learning framework is algorithm-agnostic, as it accommodates diverse decomposition techniques and network backbones for both the selector and the proxy model. These promising results indicate that the proposed task-oriented learning framework is a practical EEG denoising solution with potential implications for neuroscience research and EEG-based interaction systems.

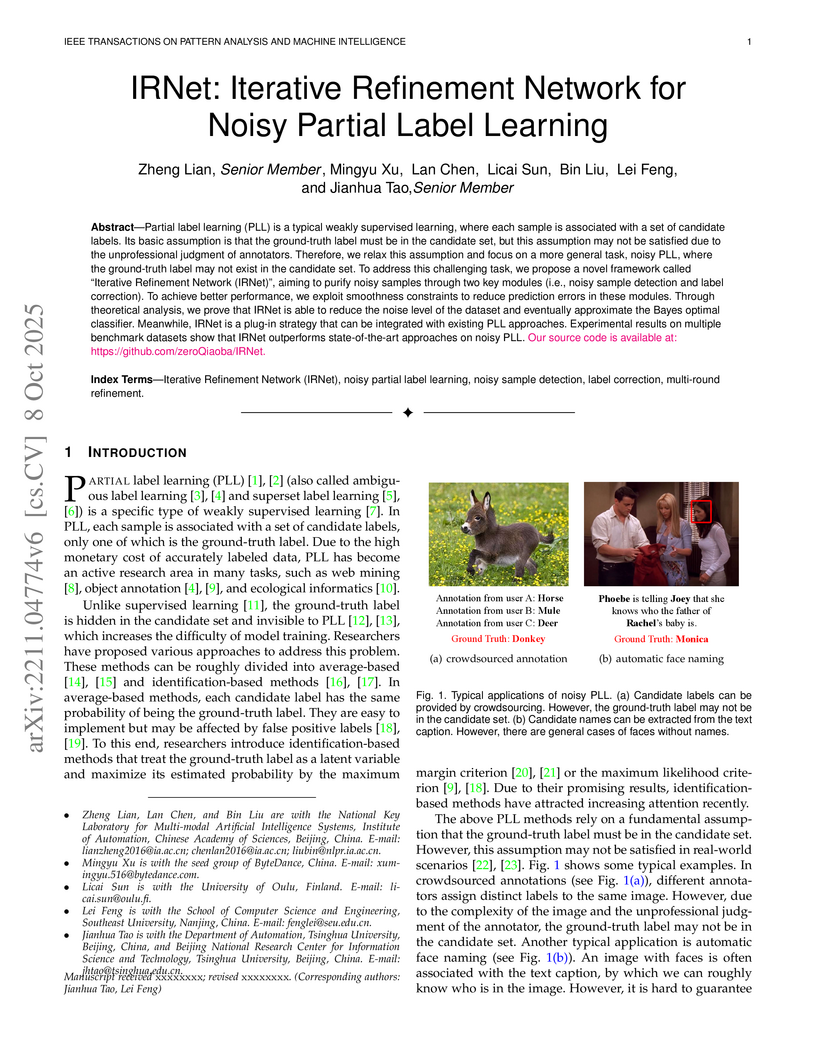

Partial label learning (PLL) is a typical weakly supervised learning, where each sample is associated with a set of candidate labels. Its basic assumption is that the ground-truth label must be in the candidate set, but this assumption may not be satisfied due to the unprofessional judgment of annotators. Therefore, we relax this assumption and focus on a more general task, noisy PLL, where the ground-truth label may not exist in the candidate set. To address this challenging task, we propose a novel framework called ``Iterative Refinement Network (IRNet)'', aiming to purify noisy samples through two key modules (i.e., noisy sample detection and label correction). To achieve better performance, we exploit smoothness constraints to reduce prediction errors in these modules. Through theoretical analysis, we prove that IRNet is able to reduce the noise level of the dataset and eventually approximate the Bayes optimal classifier. Meanwhile, IRNet is a plug-in strategy that can be integrated with existing PLL approaches. Experimental results on multiple benchmark datasets show that IRNet outperforms state-of-the-art approaches on noisy PLL. Our source code is available at: this https URL.

Cartoon face recognition is challenging as they typically have smooth color regions and emphasized edges, the key to recognize cartoon faces is to precisely perceive their sparse and critical shape patterns. However, it is quite difficult to learn a shape-oriented representation for cartoon face recognition with convolutional neural networks (CNNs). To mitigate this issue, we propose the GraphJigsaw that constructs jigsaw puzzles at various stages in the classification network and solves the puzzles with the graph convolutional network (GCN) in a progressive manner. Solving the puzzles requires the model to spot the shape patterns of the cartoon faces as the texture information is quite limited. The key idea of GraphJigsaw is constructing a jigsaw puzzle by randomly shuffling the intermediate convolutional feature maps in the spatial dimension and exploiting the GCN to reason and recover the correct layout of the jigsaw fragments in a self-supervised manner. The proposed GraphJigsaw avoids training the classification model with the deconstructed images that would introduce noisy patterns and are harmful for the final classification. Specially, GraphJigsaw can be incorporated at various stages in a top-down manner within the classification model, which facilitates propagating the learned shape patterns gradually. GraphJigsaw does not rely on any extra manual annotation during the training process and incorporates no extra computation burden at inference time. Both quantitative and qualitative experimental results have verified the feasibility of our proposed GraphJigsaw, which consistently outperforms other face recognition or jigsaw-based methods on two popular cartoon face datasets with considerable improvements.

12 Aug 2025

Current brain-computer interfaces primarily decode single motor variables, limiting their ability to support natural, high-bandwidth neural control that requires simultaneous extraction of multiple correlated motor dimensions. We introduce Multi-dimensional Neural Decoding (MND), a task formulation that simultaneously extracts multiple motor variables (direction, position, velocity, acceleration) from single neural population recordings. MND faces two key challenges: cross-task interference when decoding correlated motor dimensions from shared cortical representations, and generalization issues across sessions, subjects, and paradigms. To address these challenges, we propose OrthoSchema, a multi-task framework inspired by cortical orthogonal subspace organization and cognitive schema reuse. OrthoSchema enforces representation orthogonality to eliminate cross-task interference and employs selective feature reuse transfer for few-shot cross-session, subject and paradigm adaptation. Experiments on macaque motor cortex datasets demonstrate that OrthoSchema significantly improves decoding accuracy in cross-session, cross-subject and challenging cross-paradigm generalization tasks, with larger performance improvements when fine-tuning samples are limited. Ablation studies confirm the synergistic effects of all components are crucial, with OrthoSchema effectively modeling cross-task features and capturing session relationships for robust transfer. Our results provide new insights into scalable and robust neural decoding for real-world BCI applications.

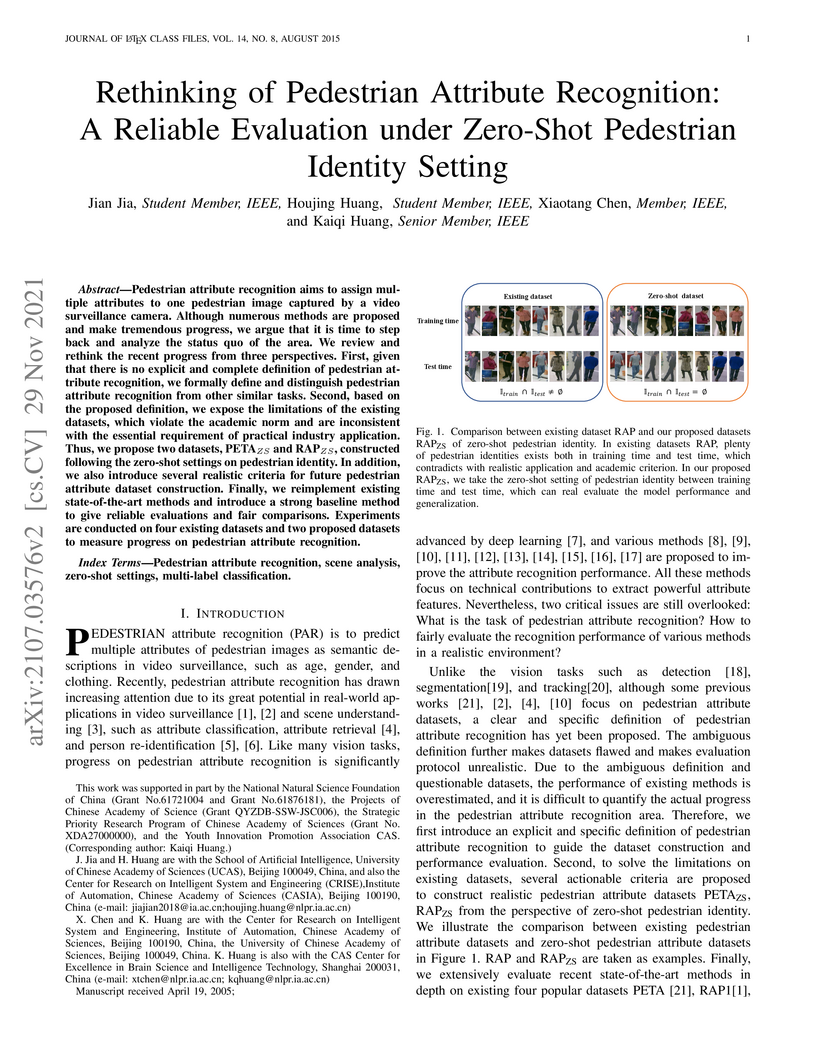

Pedestrian attribute recognition aims to assign multiple attributes to one pedestrian image captured by a video surveillance camera. Although numerous methods are proposed and make tremendous progress, we argue that it is time to step back and analyze the status quo of the area. We review and rethink the recent progress from three perspectives. First, given that there is no explicit and complete definition of pedestrian attribute recognition, we formally define and distinguish pedestrian attribute recognition from other similar tasks. Second, based on the proposed definition, we expose the limitations of the existing datasets, which violate the academic norm and are inconsistent with the essential requirement of practical industry application. Thus, we propose two datasets, PETA\textsubscript{ZS} and RAP\textsubscript{ZS}, constructed following the zero-shot settings on pedestrian identity. In addition, we also introduce several realistic criteria for future pedestrian attribute dataset construction. Finally, we reimplement existing state-of-the-art methods and introduce a strong baseline method to give reliable evaluations and fair comparisons. Experiments are conducted on four existing datasets and two proposed datasets to measure progress on pedestrian attribute recognition.

With the proliferation of user-generated online videos, Multimodal Sentiment Analysis (MSA) has attracted increasing attention recently. Despite significant progress, there are still two major challenges on the way towards robust MSA: 1) inefficiency when modeling cross-modal interactions in unaligned multimodal data; and 2) vulnerability to random modality feature missing which typically occurs in realistic settings. In this paper, we propose a generic and unified framework to address them, named Efficient Multimodal Transformer with Dual-Level Feature Restoration (EMT-DLFR). Concretely, EMT employs utterance-level representations from each modality as the global multimodal context to interact with local unimodal features and mutually promote each other. It not only avoids the quadratic scaling cost of previous local-local cross-modal interaction methods but also leads to better performance. To improve model robustness in the incomplete modality setting, on the one hand, DLFR performs low-level feature reconstruction to implicitly encourage the model to learn semantic information from incomplete data. On the other hand, it innovatively regards complete and incomplete data as two different views of one sample and utilizes siamese representation learning to explicitly attract their high-level representations. Comprehensive experiments on three popular datasets demonstrate that our method achieves superior performance in both complete and incomplete modality settings.

Cross-lingual summarization (CLS) is the task to produce a summary in one

particular language for a source document in a different language. Existing

methods simply divide this task into two steps: summarization and translation,

leading to the problem of error propagation. To handle that, we present an

end-to-end CLS framework, which we refer to as Neural Cross-Lingual

Summarization (NCLS), for the first time. Moreover, we propose to further

improve NCLS by incorporating two related tasks, monolingual summarization and

machine translation, into the training process of CLS under multi-task

learning. Due to the lack of supervised CLS data, we propose a round-trip

translation strategy to acquire two high-quality large-scale CLS datasets based

on existing monolingual summarization datasets. Experimental results have shown

that our NCLS achieves remarkable improvement over traditional pipeline methods

on both English-to-Chinese and Chinese-to-English CLS human-corrected test

sets. In addition, NCLS with multi-task learning can further significantly

improve the quality of generated summaries. We make our dataset and code

publicly available here: this http URL

Identity fraud detection is of great importance in many real-world scenarios

such as the financial industry. However, few studies addressed this problem

before. In this paper, we focus on identity fraud detection in loan

applications and propose to solve this problem with a novel interactive

dialogue system which consists of two modules. One is the knowledge graph (KG)

constructor organizing the personal information for each loan applicant. The

other is structured dialogue management that can dynamically generate a series

of questions based on the personal KG to ask the applicants and determine their

identity states. We also present a heuristic user simulator based on problem

analysis to evaluate our method. Experiments have shown that the trainable

dialogue system can effectively detect fraudsters, and achieve higher

recognition accuracy compared with rule-based systems. Furthermore, our learned

dialogue strategies are interpretable and flexible, which can help promote

real-world applications.

18 May 2021

Object grasping in cluttered scenes is a widely investigated field of robot

manipulation. Most of the current works focus on estimating grasp pose from

point clouds based on an efficient single-shot grasp detection network.

However, due to the lack of geometry awareness of the local grasping area, it

may cause severe collisions and unstable grasp configurations. In this paper,

we propose a two-stage grasp pose refinement network which detects grasps

globally while fine-tuning low-quality grasps and filtering noisy grasps

locally. Furthermore, we extend the 6-DoF grasp with an extra dimension as

grasp width which is critical for collisionless grasping in cluttered scenes.

It takes a single-view point cloud as input and predicts dense and precise

grasp configurations. To enhance the generalization ability, we build a

synthetic single-object grasp dataset including 150 commodities of various

shapes, and a multi-object cluttered scene dataset including 100k point clouds

with robust, dense grasp poses and mask annotations. Experiments conducted on

Yumi IRB-1400 Robot demonstrate that the model trained on our dataset performs

well in real environments and outperforms previous methods by a large margin.

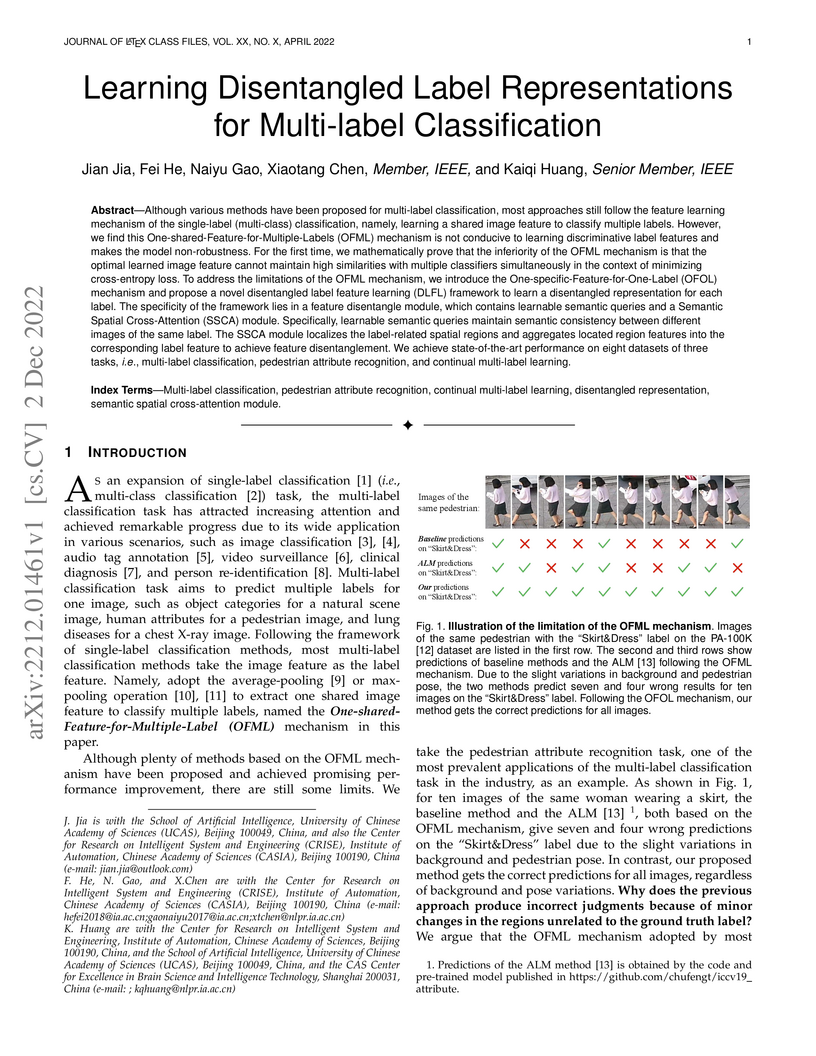

Although various methods have been proposed for multi-label classification, most approaches still follow the feature learning mechanism of the single-label (multi-class) classification, namely, learning a shared image feature to classify multiple labels. However, we find this One-shared-Feature-for-Multiple-Labels (OFML) mechanism is not conducive to learning discriminative label features and makes the model non-robustness. For the first time, we mathematically prove that the inferiority of the OFML mechanism is that the optimal learned image feature cannot maintain high similarities with multiple classifiers simultaneously in the context of minimizing cross-entropy loss. To address the limitations of the OFML mechanism, we introduce the One-specific-Feature-for-One-Label (OFOL) mechanism and propose a novel disentangled label feature learning (DLFL) framework to learn a disentangled representation for each label. The specificity of the framework lies in a feature disentangle module, which contains learnable semantic queries and a Semantic Spatial Cross-Attention (SSCA) module. Specifically, learnable semantic queries maintain semantic consistency between different images of the same label. The SSCA module localizes the label-related spatial regions and aggregates located region features into the corresponding label feature to achieve feature disentanglement. We achieve state-of-the-art performance on eight datasets of three tasks, \ie, multi-label classification, pedestrian attribute recognition, and continual multi-label learning.

Most of convolutional neural networks share the same characteristic: each

convolutional layer is followed by a nonlinear activation layer where Rectified

Linear Unit (ReLU) is the most widely used. In this paper, we argue that the

designed structure with the equal ratio between these two layers may not be the

best choice since it could result in the poor generalization ability. Thus, we

try to investigate a more suitable method on using ReLU to explore the better

network architectures. Specifically, we propose a proportional module to keep

the ratio between convolution and ReLU amount to be N:M (N>M). The proportional

module can be applied in almost all networks with no extra computational cost

to improve the performance. Comprehensive experimental results indicate that

the proposed method achieves better performance on different benchmarks with

different network architectures, thus verify the superiority of our work.

21 Nov 2022

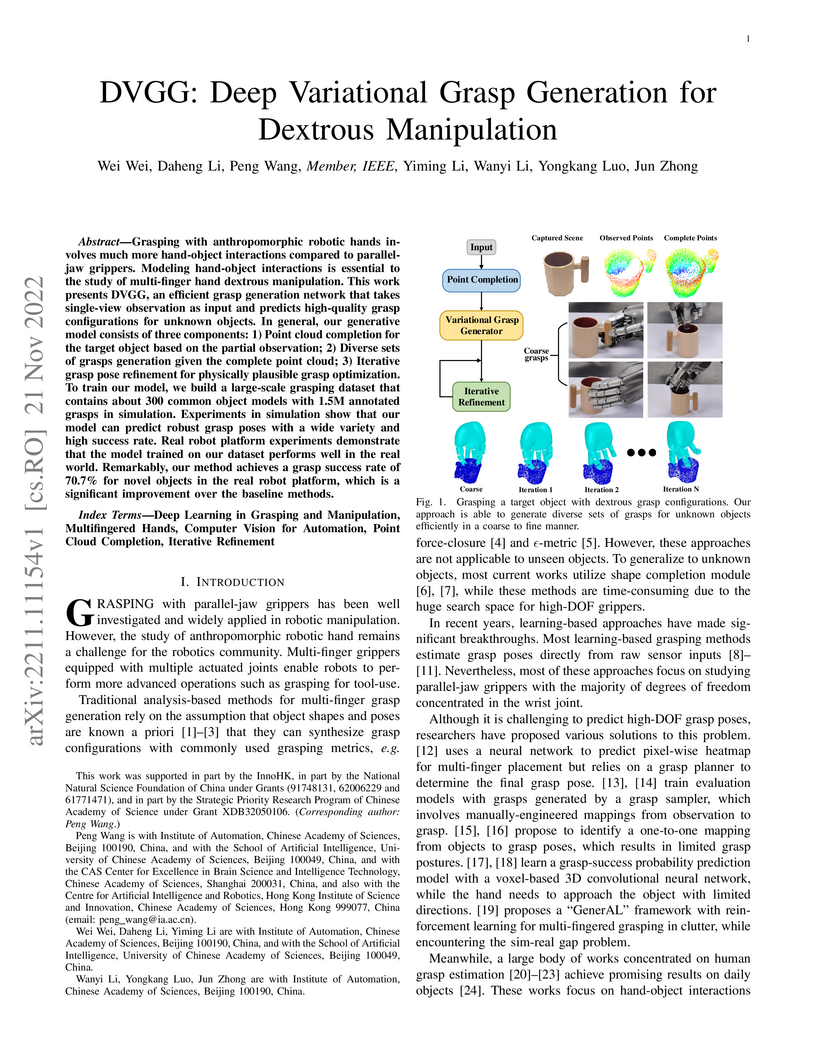

Grasping with anthropomorphic robotic hands involves much more hand-object interactions compared to parallel-jaw grippers. Modeling hand-object interactions is essential to the study of multi-finger hand dextrous manipulation. This work presents DVGG, an efficient grasp generation network that takes single-view observation as input and predicts high-quality grasp configurations for unknown objects. In general, our generative model consists of three components: 1) Point cloud completion for the target object based on the partial observation; 2) Diverse sets of grasps generation given the complete point cloud; 3) Iterative grasp pose refinement for physically plausible grasp optimization. To train our model, we build a large-scale grasping dataset that contains about 300 common object models with 1.5M annotated grasps in simulation. Experiments in simulation show that our model can predict robust grasp poses with a wide variety and high success rate. Real robot platform experiments demonstrate that the model trained on our dataset performs well in the real world. Remarkably, our method achieves a grasp success rate of 70.7\% for novel objects in the real robot platform, which is a significant improvement over the baseline methods.

Few-shot class-incremental learning (FSCIL) poses significant challenges for

artificial neural networks due to the need to efficiently learn from limited

data while retaining knowledge of previously learned tasks. Inspired by the

brain's mechanisms for categorization and analogical learning, we propose a

novel approach called Brain-inspired Analogical Mixture Prototypes (BAMP). BAMP

has three components: mixed prototypical feature learning, statistical analogy,

and soft voting. Starting from a pre-trained Vision Transformer (ViT), mixed

prototypical feature learning represents each class using a mixture of

prototypes and fine-tunes these representations during the base session. The

statistical analogy calibrates the mean and covariance matrix of prototypes for

new classes according to similarity to the base classes, and computes

classification score with Mahalanobis distance. Soft voting combines both

merits of statistical analogy and an off-shelf FSCIL method. Our experiments on

benchmark datasets demonstrate that BAMP outperforms state-of-the-art on both

traditional big start FSCIL setting and challenging small start FSCIL setting.

The study suggests that brain-inspired analogical mixture prototypes can

alleviate catastrophic forgetting and over-fitting problems in FSCIL.

Learning-based hashing algorithms are ``hot topics" because they can greatly

increase the scale at which existing methods operate. In this paper, we propose

a new learning-based hashing method called ``fast supervised discrete hashing"

(FSDH) based on ``supervised discrete hashing" (SDH). Regressing the training

examples (or hash code) to the corresponding class labels is widely used in

ordinary least squares regression. Rather than adopting this method, FSDH uses

a very simple yet effective regression of the class labels of training examples

to the corresponding hash code to accelerate the algorithm. To the best of our

knowledge, this strategy has not previously been used for hashing. Traditional

SDH decomposes the optimization into three sub-problems, with the most critical

sub-problem - discrete optimization for binary hash codes - solved using

iterative discrete cyclic coordinate descent (DCC), which is time-consuming.

However, FSDH has a closed-form solution and only requires a single rather than

iterative hash code-solving step, which is highly efficient. Furthermore, FSDH

is usually faster than SDH for solving the projection matrix for least squares

regression, making FSDH generally faster than SDH. For example, our results

show that FSDH is about 12-times faster than SDH when the number of hashing

bits is 128 on the CIFAR-10 data base, and FSDH is about 151-times faster than

FastHash when the number of hashing bits is 64 on the MNIST data-base. Our

experimental results show that FSDH is not only fast, but also outperforms

other comparative methods.

17 Dec 2021

Knowledge Graphs (KGs) have shown great success in recommendation. This is attributed to the rich attribute information contained in KG to improve item and user representations as side information. However, existing knowledge-aware methods leverage attribute information at a coarse-grained level both in item and user side. In this paper, we proposed a novel attentive knowledge graph attribute network(AKGAN) to learn item attributes and user interests via attribute information in KG. Technically, AKGAN adopts a heterogeneous graph neural network framework, which has a different design between the first layer and the latter layer. With one attribute placed in the corresponding range of element-wise positions, AKGAN employs a novel interest-aware attention network, which releases the limitation that the sum of attention weight is 1, to model the complexity and personality of user interests towards attributes. Experimental results on three benchmark datasets show the effectiveness and explainability of AKGAN.

Target tracking, the essential ability of the human visual system, has been

simulated by computer vision tasks. However, existing trackers perform well in

austere experimental environments but fail in challenges like occlusion and

fast motion. The massive gap indicates that researches only measure tracking

performance rather than intelligence. How to scientifically judge the

intelligence level of trackers? Distinct from decision-making problems, lacking

three requirements (a challenging task, a fair environment, and a scientific

evaluation procedure) makes it strenuous to answer the question. In this

article, we first propose the global instance tracking (GIT) task, which is

supposed to search an arbitrary user-specified instance in a video without any

assumptions about camera or motion consistency, to model the human visual

tracking ability. Whereafter, we construct a high-quality and large-scale

benchmark VideoCube to create a challenging environment. Finally, we design a

scientific evaluation procedure using human capabilities as the baseline to

judge tracking intelligence. Additionally, we provide an online platform with

toolkit and an updated leaderboard. Although the experimental results indicate

a definite gap between trackers and humans, we expect to take a step forward to

generate authentic human-like trackers. The database, toolkit, evaluation

server, and baseline results are available at this http URL

Multi-channel deep clustering (MDC) has acquired a good performance for

speech separation. However, MDC only applies the spatial features as the

additional information. So it is difficult to learn mutual relationship between

spatial and spectral features. Besides, the training objective of MDC is

defined at embedding vectors, rather than real separated sources, which may

damage the separation performance. In this work, we propose a deep attention

fusion method to dynamically control the weights of the spectral and spatial

features and combine them deeply. In addition, to solve the training objective

problem of MDC, the real separated sources are used as the training objectives.

Specifically, we apply the deep clustering network to extract deep embedding

features. Instead of using the unsupervised K-means clustering to estimate

binary masks, another supervised network is utilized to learn soft masks from

these deep embedding features. Our experiments are conducted on a spatialized

reverberant version of WSJ0-2mix dataset. Experimental results show that the

proposed method outperforms MDC baseline and even better than the oracle ideal

binary mask (IBM).

There are no more papers matching your filters at the moment.