CU Boulder

09 Jul 2025

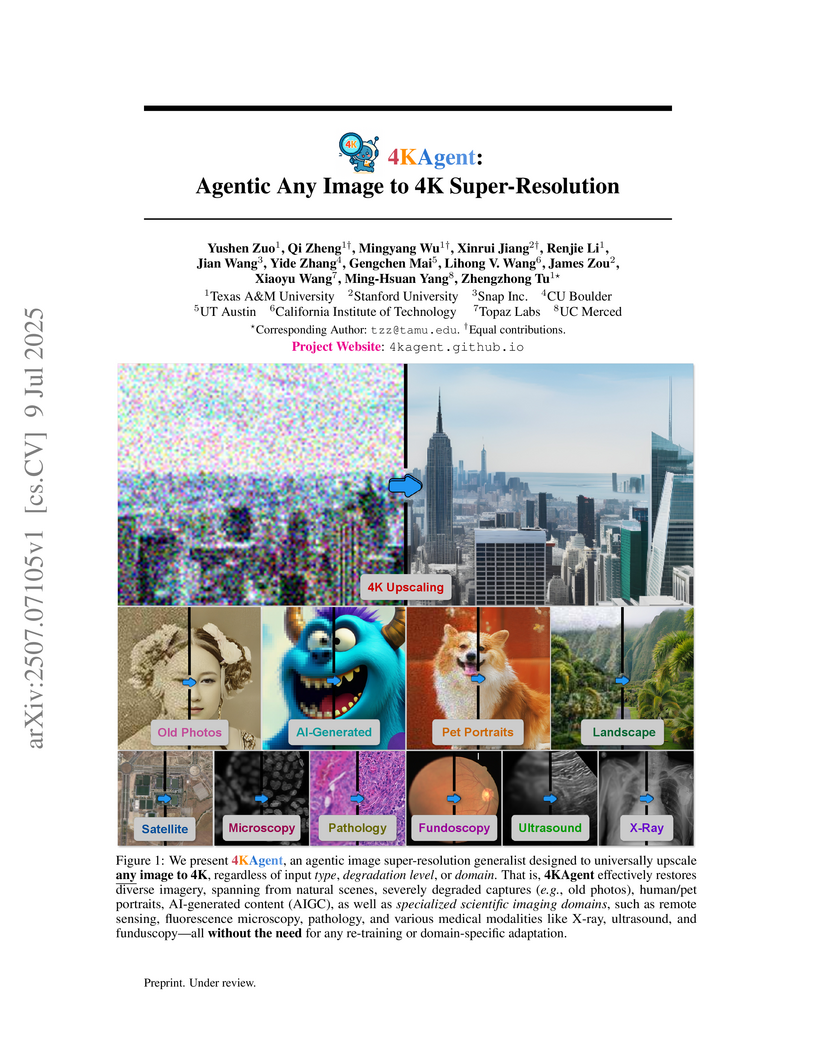

Researchers from Texas A&M University, Stanford University, and others developed 4KAgent, a multi-agent AI system that universally upscales any image to 4K resolution, adapting to diverse degradations and domains without retraining. The agentic framework achieved state-of-the-art perceptual and fidelity metrics across 26 distinct benchmarks, including natural, AI-generated, and various scientific imaging types.

The 2D Quon language offers a unified graphical framework for quantum states and processes, providing a systematic way to characterize known classically simulable systems like Clifford and matchgate circuits and to discover new tractable classes with controlled computational costs. This framework leverages Majorana worldlines and spacetime topology to represent quantum information, expanding the boundaries of classical simulability.

Competitions are widely used to identify top performers in judgmental forecasting and machine learning, and the standard competition design ranks competitors based on their cumulative scores against a set of realized outcomes or held-out labels. However, this standard design is neither incentive-compatible nor particularly statistically efficient. The main culprit is noise in the outcomes/labels that experts are scored against: it often allows weaker competitors to win by chance, and the winner-take-all nature of the design incentivizes misreporting that improves win probability even if it decreases expected score. Existing attempts to achieve incentive compatibility rely on randomized mechanisms that add even more noise to winner selection and come at the cost of determinism and practical adoption. To tackle these issues, we introduce a novel deterministic mechanism: WOMAC (Wisdom of the Most Accurate Crowd). Instead of scoring experts against noisy outcomes, as is standard, WOMAC scores experts against the best ex-post aggregate of peer experts' predictions given the noisy outcomes. WOMAC is also more efficient than the standard competition design in typical settings. While the increased complexity of WOMAC makes it challenging to analyze incentives directly, we provide a clear theoretical foundation to justify the mechanism. We also provide an efficient vectorized implementation and demonstrate empirically on real-world forecasting datasets that WOMAC is a more reliable predictor of experts' out-of-sample performance than the standard mechanism. WOMAC is useful in any competition where there is substantial noise in the outcomes/labels.
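A minimal sketch of the scoring idea, assuming binary events, probability forecasts, and squared-error (Brier) scoring. The "best ex-post aggregate" is approximated here by a hypothetical one-parameter family: the leave-one-out mean of the peers' forecasts with its log-odds scaled by `a`, where `a` is picked from a small grid to best fit the realized outcomes. The mechanism described above may use a different aggregator family and scoring rule; `womac_scores`, `extremize`, and the grid are illustrative names and values.

```python
import math
from typing import List, Sequence

def logit(p: float) -> float:
    p = min(max(p, 1e-6), 1 - 1e-6)  # clip to keep the log-odds finite
    return math.log(p / (1 - p))

def extremize(p: float, a: float) -> float:
    """Hypothetical aggregator family: scale the log-odds of p by a."""
    return 1.0 / (1.0 + math.exp(-a * logit(p)))

def brier(preds: Sequence[float], targets: Sequence[float]) -> float:
    return sum((p - y) ** 2 for p, y in zip(preds, targets)) / len(targets)

def womac_scores(
    forecasts: List[List[float]],
    outcomes: List[int],
    grid: Sequence[float] = (0.5, 1.0, 1.5, 2.0, 2.5, 3.0),
) -> List[float]:
    """forecasts[i][t] is expert i's probability for event t; outcomes[t] is 0 or 1.

    Returns one score per expert; lower is better.
    """
    n_experts, n_events = len(forecasts), len(outcomes)
    scores = []
    for i in range(n_experts):
        peers = [forecasts[j] for j in range(n_experts) if j != i]
        # Leave-one-out mean of the peers' forecasts for each event.
        loo = [sum(f[t] for f in peers) / len(peers) for t in range(n_events)]
        # Ex-post step: choose the aggregate in the family that best fits the outcomes.
        best_a = min(grid, key=lambda a: brier([extremize(p, a) for p in loo], outcomes))
        target = [extremize(p, best_a) for p in loo]
        # Score expert i against that aggregate instead of the noisy outcomes.
        scores.append(brier(forecasts[i], target))
    return scores
```

Because expert i's own forecast is excluded from the aggregate it is scored against, no expert can move their own benchmark; the realized outcomes enter only through the choice of aggregate.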

Surface water dynamics play a critical role in Earth's climate system, influencing ecosystems, agriculture, disaster resilience, and sustainable development. Yet monitoring rivers and surface water at fine spatial and temporal scales remains challenging -- especially for narrow or sediment-rich rivers that are poorly captured by low-resolution satellite data. To address this, we introduce RiverScope, a high-resolution dataset developed through collaboration between computer science and hydrology experts. RiverScope comprises 1,145 high-resolution images (covering 2,577 square kilometers) with expert-labeled river and surface water masks, requiring over 100 hours of manual annotation. Each image is co-registered with Sentinel-2, SWOT, and the SWOT River Database (SWORD), enabling the evaluation of cost-accuracy trade-offs across sensors -- a key consideration for operational water monitoring. We also establish the first global, high-resolution benchmark for river width estimation, achieving a median error of 7.2 meters -- significantly outperforming existing satellite-derived methods. We extensively evaluate deep networks across multiple architectures (e.g., CNNs and transformers), pretraining strategies (e.g., supervised and self-supervised), and training datasets (e.g., ImageNet and satellite imagery). Our best-performing models combine the benefits of transfer learning with the use of all the multispectral PlanetScope channels via learned adaptors. RiverScope provides a valuable resource for fine-scale and multi-sensor hydrological modeling, supporting climate adaptation and sustainable water management.
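One common way to feed more than three spectral bands into an encoder pretrained on RGB imagery is a per-pixel linear map, equivalent to a 1x1 convolution, from the input bands to the encoder's three input channels. The sketch below assumes the "learned adaptor" takes that form; during training, `weights` and `bias` would be learned jointly with the network, whereas here they are set by hand. The function name and the four-band example are hypothetical.

```python
from typing import List, Sequence

Image = List[List[List[float]]]  # indexed [row][col][band]

def channel_adaptor(
    image: Image,
    weights: Sequence[Sequence[float]],
    bias: Sequence[float],
) -> Image:
    """Per-pixel linear band mixing, equivalent to a 1x1 convolution.

    weights[o][c] maps input band c to output channel o; in a real model these
    values would be learned together with the pretrained encoder.
    """
    n_in = len(weights[0])
    out: Image = []
    for row in image:
        out_row = []
        for pixel in row:
            if len(pixel) != n_in:
                raise ValueError("pixel band count does not match adaptor weights")
            # Each output channel is a weighted sum of all input bands plus a bias.
            out_row.append([sum(w * x for w, x in zip(w_o, pixel)) + b for w_o, b in zip(weights, bias)])
        out.append(out_row)
    return out
```

For example, a hand-set adaptor could pass two visible bands through and route a near-infrared band into the third channel; a learned adaptor would instead discover whichever band mixture helps the downstream water segmentation.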

Recent theoretical results in quantum machine learning have demonstrated a general trade-off between the expressive power of quantum neural networks (QNNs) and their trainability; as a corollary of these results, practical exponential separations in expressive power over classical machine learning models are believed to be infeasible, as such QNNs take a time to train that is exponential in the model size. We here circumvent these negative results by constructing a hierarchy of efficiently trainable QNNs that exhibit unconditionally provable, polynomial memory separations of arbitrary constant degree over classical neural networks -- including state-of-the-art models, such as Transformers -- in performing a classical sequence modeling task. This construction is also computationally efficient, as each unit cell of the introduced class of QNNs only has constant gate complexity. We show that contextuality -- informally, a quantitative notion of semantic ambiguity -- is the source of the expressivity separation, suggesting that other learning tasks with this property may be a natural setting for the use of quantum learning algorithms.

We present the processing of an observation of Sagittarius A (Sgr A) with the Tomographic Ionized-carbon Mapping Experiment (TIME), part of the 2021-2022 commissioning run to verify TIME's hyperspectral imaging capabilities for future line-intensity mapping. Using an observation of Jupiter to calibrate detector gains and pointing offsets, we process the Sgr A observation in a purpose-built pipeline that removes correlated noise through common-mode subtraction with correlation-weighted scaling, and uses map-domain principal component analysis to identify further systematic errors. The resulting frequency-resolved maps recover strong ¹²CO(2-1) and ¹³CO(2-1) emission, and a continuum component whose spectral index discriminates free-free emission in the circumnuclear disk (CND) versus thermal dust emission in the 20 km s⁻¹ and 50 km s⁻¹ molecular clouds. Broadband continuum flux comparisons with the Bolocam Galactic Plane Survey (BGPS) show agreement to within ∼5% in high-SNR molecular clouds in the Sgr A region. From the CO line detections, we estimate a molecular hydrogen mass of between 5.4 × 10⁵ M⊙ and 5.7 × 10⁵ M⊙, consistent with prior studies. These results demonstrate TIME's ability to recover both continuum and spectral-line signals in complex Galactic fields, validating its readiness for upcoming extragalactic CO and [C II] surveys.
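A minimal sketch of common-mode subtraction, assuming gain-calibrated timestreams stored as nested lists and interpreting "correlation-weighted scaling" as a per-detector least-squares coefficient: the covariance of each detector with the common mode divided by the common mode's variance, so strongly correlated detectors receive a larger subtraction. The actual TIME pipeline may define the common mode and its weights differently; `common_mode_subtract` is a hypothetical name.

```python
from typing import List

def common_mode_subtract(timestreams: List[List[float]]) -> List[List[float]]:
    """Remove a per-detector scaled common mode from each timestream.

    timestreams[d][t] is detector d's (gain-calibrated) sample at time t.
    """
    n_det, n_t = len(timestreams), len(timestreams[0])
    # Common mode: mean across detectors at each time sample.
    common = [sum(ts[t] for ts in timestreams) / n_det for t in range(n_t)]
    c_mean = sum(common) / n_t
    c_centered = [c - c_mean for c in common]
    c_var = sum(c * c for c in c_centered)
    cleaned = []
    for ts in timestreams:
        d_mean = sum(ts) / n_t
        # Least-squares coefficient of this detector on the common mode.
        cov = sum((x - d_mean) * c for x, c in zip(ts, c_centered))
        scale = cov / c_var if c_var > 0 else 0.0
        cleaned.append([x - scale * c for x, c in zip(ts, common)])
    return cleaned
```

Any sky signal shared by all detectors is removed along with the correlated noise, which is one reason a pipeline would follow this step with map-domain checks such as the PCA described above.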

The goal of object-centric representation learning is to decompose visual scenes into a structured representation that isolates the entities. Recent successes have shown that object-centric representation learning can be scaled to real-world scenes by utilizing pre-trained self-supervised features. However, so far, object-centric methods have mostly been applied in-distribution, with models trained and evaluated on the same dataset. This is in contrast to the wider trend in machine learning towards general-purpose models directly applicable to unseen data and tasks. Thus, in this work, we study current object-centric methods through the lens of zero-shot generalization by introducing a benchmark comprising eight different synthetic and real-world datasets. We analyze the factors influencing zero-shot performance and find that training on diverse real-world images improves transferability to unseen scenarios. Furthermore, inspired by the success of task-specific fine-tuning in foundation models, we introduce a novel fine-tuning strategy to adapt pre-trained vision encoders for the task of object discovery. We find that the proposed approach results in state-of-the-art performance for unsupervised object discovery, exhibiting strong zero-shot transfer to unseen datasets.

09 Jul 2025

We present 4KAgent, a unified agentic super-resolution generalist system designed to universally upscale any image to 4K resolution (and even higher, if applied iteratively). Our system can transform images from extremely low resolutions with severe degradations, for example, highly distorted inputs at 256x256, into crystal-clear, photorealistic 4K outputs. 4KAgent comprises three core components: (1) Profiling, a module that customizes the 4KAgent pipeline based on bespoke use cases; (2) a Perception Agent, which leverages vision-language models alongside image quality assessment experts to analyze the input image and make a tailored restoration plan; and (3) a Restoration Agent, which executes the plan following a recursive execution-reflection paradigm, guided by a quality-driven mixture-of-experts policy that selects the optimal output for each step. Additionally, 4KAgent embeds a specialized face restoration pipeline, significantly enhancing facial details in portrait and selfie photos. We rigorously evaluate 4KAgent across 11 distinct task categories encompassing a total of 26 diverse benchmarks, setting a new state of the art across a broad spectrum of imaging domains. Our evaluations cover natural images, portrait photos, AI-generated content, satellite imagery, fluorescence microscopy, and medical imaging such as fundoscopy, ultrasound, and X-ray, demonstrating superior performance in terms of both perceptual (e.g., NIQE, MUSIQ) and fidelity (e.g., PSNR) metrics. By establishing a novel agentic paradigm for low-level vision tasks, we aim to catalyze broader interest and innovation in vision-centric autonomous agents across diverse research communities. We will release all the code, models, and results at: this https URL.
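The execution-reflection loop with quality-driven expert selection can be sketched as follows, assuming each plan step maps to several candidate expert tools and that a scalar no-reference quality score is available. Images are stood in for by lists of floats, and the reflection rule is the simplest possible one (reject a step whose best output does not improve the score). 4KAgent's actual tools, quality metrics, and reflection policy are not reproduced; all names are hypothetical.

```python
from typing import Callable, Dict, List, Tuple

Image = List[float]  # toy stand-in for image data
Tool = Callable[[Image], Image]

def execute_plan(
    image: Image,
    plan: List[str],
    experts: Dict[str, List[Tool]],
    quality: Callable[[Image], float],
) -> Tuple[Image, List[str]]:
    """Run each planned step, keep the best-scoring expert output, and
    reject (reflect on) steps that do not improve quality."""
    current, log = image, []
    current_q = quality(current)
    for step in plan:
        candidates = [tool(current) for tool in experts[step]]
        best = max(candidates, key=quality)  # quality-driven expert selection
        best_q = quality(best)
        if best_q > current_q:
            current, current_q = best, best_q
            log.append(f"{step}: accepted (q={best_q:.3f})")
        else:
            # Reflection: the step made things worse, so keep the previous result.
            log.append(f"{step}: rejected")
    return current, log
```

Keeping the previous result on a failed step means the loop's quality score never decreases, which is the property this simple reflection rule is meant to show.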

15 Jul 2025

Logistics operators, from battlefield coordinators rerouting airlifts ahead of a storm to warehouse managers juggling late trucks, often face life-critical decisions that demand both domain expertise and rapid and continuous replanning. While popular methods like integer programming yield logistics plans that satisfy user-defined logical constraints, they are slow and assume an idealized mathematical model of the environment that does not account for uncertainty. On the other hand, large language models (LLMs) can handle uncertainty and promise to accelerate replanning while lowering the barrier to entry by translating free-form utterances into executable plans, yet they remain prone to misinterpretations and hallucinations that jeopardize safety and cost. We introduce a neurosymbolic framework that pairs the accessibility of natural-language dialogue with verifiable guarantees on goal interpretation. It converts user requests into structured planning specifications, quantifies its own uncertainty at the field and token level, and invokes an interactive clarification loop whenever confidence falls below an adaptive threshold. A lightweight model, fine-tuned on just 100 uncertainty-filtered examples, surpasses the zero-shot performance of GPT-4.1 while cutting inference latency by nearly 50%. These preliminary results highlight a practical path toward certifiable, real-time, and user-aligned decision-making for complex logistics.
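A minimal sketch of field-level confidence with an adaptive clarification trigger, assuming the parser exposes per-token log-probabilities for each extracted field of the planning specification. Field confidence is taken as the geometric mean of the field's token probabilities, and the threshold adapts per request as `max(floor, mean - k * std)` over that request's field confidences. Both rules, and the function and field names, are illustrative assumptions rather than the framework's published definitions.

```python
import math
from statistics import mean, pstdev
from typing import Dict, List

def field_confidence(token_logprobs: List[float]) -> float:
    """Geometric mean of a field's token probabilities."""
    return math.exp(sum(token_logprobs) / len(token_logprobs))

def fields_to_clarify(
    fields: Dict[str, List[float]], floor: float = 0.5, k: float = 1.0
) -> List[str]:
    """Names of fields whose confidence falls below an adaptive threshold.

    Illustrative rule: threshold = max(floor, mean - k * std) over this request.
    """
    conf = {name: field_confidence(lps) for name, lps in fields.items()}
    values = list(conf.values())
    threshold = max(floor, mean(values) - k * pstdev(values))
    return [name for name, c in conf.items() if c < threshold]
```

In a clarification loop, each returned field would become a targeted question to the user, and the request would be re-parsed with the answer until no field falls below the threshold.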

01 Aug 2025

Shanghai Jiao Tong University; University of Michigan; Peking University; Korea Astronomy and Space Science Institute; University of Queensland; University of Manitoba; Georgia State University; Washington State University; National Research Council of Canada; University of Calgary; International Centre for Radio Astronomy Research, Curtin University; NSF’s National Optical-Infrared Astronomy Research Laboratory; CU Boulder

We present a new stellar dynamical measurement of the supermassive black hole (SMBH) in the compact elliptical galaxy NGC 4486B, based on integral field spectroscopy with JWST/NIRSpec. The two-dimensional kinematic maps reveal a resolved double nucleus and a velocity dispersion peak offset from the photometric center. Using two independent methods, Schwarzschild orbit superposition and Jeans Anisotropic Modeling, we tightly constrain the black hole mass by fitting the full line-of-sight velocity distribution. Our axisymmetric Schwarzschild models yield a best-fit black hole mass of $M_{\rm BH} = 3.6^{+0.7}_{-0.7} \times 10^{8}\,M_{\odot}$, slightly lower than, but significantly more precise than, previous estimates. However, since our models do not account for the non-equilibrium nature of the double nucleus, this value may represent a lower limit. Across all tested dynamical models, the inferred $M_{\rm BH}/M_{*}$ ratio ranges from ~4% to 13%, providing robust evidence for an overmassive SMBH in NGC 4486B. Combined with the galaxy's location deep within the Virgo Cluster, our results support the interpretation that NGC 4486B is the tidally stripped remnant core of a formerly massive galaxy. As the JWST/NIRSpec field of view is insufficient to constrain the dark matter halo, we incorporate archival ground-based long-slit kinematics extending to 5 arcsec. While this provides some leverage on the dark matter content, the constraints remain relatively weak. We place only an upper limit on the dark matter fraction, $M_{\rm DM}/M_{*} < 0.5$ within 1 kpc, well beyond the effective radius. The inferred black hole mass remains unchanged with or without a dark matter halo.

19 Sep 2023

Verifying the performance of safety-critical, stochastic systems with complex noise distributions is difficult. We introduce a general procedure for the finite abstraction of nonlinear stochastic systems with non-standard (e.g., non-affine, non-symmetric, non-unimodal) noise distributions for verification purposes. The method uses a finite partitioning of the noise domain to construct an interval Markov chain (IMC) abstraction of the system via transition probability intervals. Noise partitioning allows for a general class of distributions and structures, including multiplicative and mixture models, and admits both known and data-driven systems. The partitions required for optimal transition bounds are specified for systems that are monotonic with respect to the noise, and explicit partitions are provided for affine and multiplicative structures. By the soundness of the abstraction procedure, verification on the IMC provides guarantees on the stochastic system against a temporal logic specification. In addition, we present a novel refinement-free algorithm that improves the verification results. Case studies on linear and nonlinear systems with non-Gaussian noise, including a data-driven example, demonstrate the generality and effectiveness of the method without introducing excessive conservatism.
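For a scalar system that is increasing in both the state and the noise, noise partitioning can be sketched directly. Each noise cell, with probability mass p_j, is pushed through the map together with the whole state region: if the resulting interval lies entirely inside the target, p_j counts toward the lower transition bound; if it merely intersects the target, p_j counts toward the upper bound. The sketch assumes the affine form x' = a x + w with a > 0, an equal mixture of N(-1, 0.3²) and N(1, 0.3²) as an example of a non-unimodal noise distribution, and a uniform grid of cuts rather than the optimal partitions described above; all names are hypothetical.

```python
import math
from typing import Callable, List, Tuple

def gaussian_cdf(x: float, mu: float, sigma: float) -> float:
    return 0.5 * (1.0 + math.erf((x - mu) / (sigma * math.sqrt(2.0))))

def mixture_cdf(x: float) -> float:
    """Bimodal (non-unimodal) noise: equal mixture of N(-1, 0.3^2) and N(1, 0.3^2)."""
    return 0.5 * gaussian_cdf(x, -1.0, 0.3) + 0.5 * gaussian_cdf(x, 1.0, 0.3)

def transition_interval(
    a: float,
    region: Tuple[float, float],
    target: Tuple[float, float],
    cuts: List[float],
    cdf: Callable[[float], float],
) -> Tuple[float, float]:
    """Bounds on P(a*x + w in target) that hold for every x in region (a > 0).

    cuts are the noise-partition points; the two unbounded tail cells are added.
    """
    assert a > 0, "this sketch assumes the map is increasing in x and w"
    xl, xu = region
    tl, tu = target
    edges = [-math.inf] + sorted(cuts) + [math.inf]
    lower = upper = 0.0
    for w_lo, w_hi in zip(edges[:-1], edges[1:]):
        mass = cdf(w_hi) - cdf(w_lo)
        # Image of (state region x noise cell) under the increasing map.
        reach_lo, reach_hi = a * xl + w_lo, a * xu + w_hi
        if tl <= reach_lo and reach_hi <= tu:
            lower += mass  # every successor from this cell is in the target
        if reach_hi >= tl and reach_lo <= tu:
            upper += mass  # some successor from this cell may be in the target
    return lower, min(upper, 1.0)
```

Refining the cuts tightens the interval, and the resulting [lower, upper] pairs are exactly the kind of transition probability intervals an IMC abstraction stores for each pair of regions.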

08 Sep 2021

We report on the effect of an applied external mechanical load on a periodic structure exhibiting band gaps induced by an inertial amplification mechanism. Compared with Bragg scattering and ordinary locally resonant metamaterials, we observe a more pronounced shift of the dispersion curves, modulated through large but fully reversible compression (stretch) of the unit cell, which eventually triggers a significant (up to twofold) enlargement (reduction) of the width of a specific band gap. A notable upward (downward) shift of some dispersion branches over specific wavenumber values is also observed, showing that this selective variation may lead to negative group velocities over larger (smaller) wavenumber ranges. The possibility of a non-monotonic trend in the lower limit of the first band gap (BG) under the same type of applied prestrain is found and explained through an analytical model, which unequivocally proves that this behavior derives from the different variations of the unit cell's effective mass and stiffness as the prestrain level increases. The effect of the prestress on the dispersion diagram is investigated through a two-step calculation method: first, an Updated Lagrangian scheme is derived that includes a static, geometrically nonlinear analysis of a representative unit cell under the applied external load; then, the Floquet-Bloch decomposition is applied to the linearized equations of acousto-elasticity for the unit cell in the deformed configuration. The results presented herein provide insights into the behavior of band gaps induced by inertial amplification and suggest new opportunities for real-time tunable wave manipulation.
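The second step of the method, Floquet-Bloch analysis of a linearized unit cell, can be illustrated on a far simpler system. The sketch below uses a 1D diatomic mass-spring chain (a Bragg-type lattice, not the inertial amplification cell studied here) with masses m1 and m2 and identical springs of stiffness k in a cell of length a. Substituting Bloch waves gives the closed-form dispersion ω² = k(1/m1 + 1/m2) ± k √((1/m1 + 1/m2)² − 4 sin²(qa/2)/(m1 m2)). Representing a prestrain as a change in the tangent stiffness k is an assumption made only to show how an updated stiffness moves the band edges; the function names are hypothetical.

```python
import math
from typing import Tuple

def diatomic_branches(k: float, m1: float, m2: float, qa: float) -> Tuple[float, float]:
    """Acoustic and optical angular frequencies at normalized wavenumber qa."""
    s = 1.0 / m1 + 1.0 / m2
    disc = math.sqrt(max(s * s - 4.0 * math.sin(qa / 2.0) ** 2 / (m1 * m2), 0.0))
    # max(..., 0.0) guards against tiny negative values from rounding at qa = 0.
    acoustic = math.sqrt(max(k * (s - disc), 0.0))
    optical = math.sqrt(k * (s + disc))
    return acoustic, optical

def band_gap(k: float, m1: float, m2: float, n: int = 200) -> Tuple[float, float]:
    """Sweep qa over [0, pi]; return (top of acoustic band, bottom of optical band)."""
    samples = [diatomic_branches(k, m1, m2, math.pi * i / n) for i in range(n + 1)]
    return max(a for a, _ in samples), min(o for _, o in samples)
```

For this chain the gap edges sit at qa = π, at √(2k/m_heavy) and √(2k/m_light), so a stiffer tangent stiffness widens the gap in proportion to √k; the inertial amplification cell above adds effective-mass effects that this toy model cannot capture.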

The Partially Observable Markov Decision Process (POMDP) is a powerful framework for capturing decision-making problems that involve state and transition uncertainty. However, most current POMDP planners cannot effectively handle the high-dimensional image observations prevalent in real-world applications, and they often require lengthy online training that involves interaction with the environment. In this work, we propose Visual Tree Search (VTS), a compositional learning and planning procedure that combines generative models learned offline with online model-based POMDP planning. The deep generative observation models evaluate the likelihood of, and predict, future image observations in a Monte Carlo tree search planner. We show that VTS is robust to different types of image noise that were not present during training and can adapt to different reward structures without the need to retrain. This new approach significantly and stably outperforms several state-of-the-art vision POMDP baseline algorithms while using a fraction of the training time.
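One common way an observation likelihood model is used inside a belief-space tree search is to reweight a particle belief. The sketch below shows a single particle-filter belief update, assuming a scalar state, a hand-written Gaussian `gaussian_likelihood` as a stand-in for the learned image likelihood p(o | s), and multinomial resampling. VTS's actual networks and planner are not reproduced, and `update_belief` is a hypothetical name.

```python
import math
import random
from typing import Callable, List, Sequence

def gaussian_likelihood(observation: float, state: float, sigma: float = 0.5) -> float:
    """Stand-in for a learned image likelihood: Gaussian in a scalar feature."""
    return math.exp(-((observation - state) ** 2) / (2 * sigma ** 2))

def update_belief(
    particles: Sequence[float],
    observation: float,
    transition: Callable[[float, random.Random], float],
    likelihood: Callable[[float, float], float],
    rng: random.Random,
) -> List[float]:
    """One particle-filter step: propagate each particle, weight it by
    p(observation | state), and resample in proportion to the weights."""
    propagated = [transition(s, rng) for s in particles]
    weights = [likelihood(observation, s) for s in propagated]
    if sum(weights) == 0.0:
        return propagated  # no particle explains the observation; keep the prediction
    return rng.choices(propagated, weights=weights, k=len(propagated))
```

Because the likelihood model is queried rather than retrained, swapping the reward function leaves this update untouched, which is consistent with the adaptability to new reward structures claimed above.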

10 May 2023

Galactic bars, made up of elongated and aligned stellar orbits, can lose angular momentum via resonant torques with dark matter particles in the halo and slow down. Here we show that if a stellar bar is decelerated to zero rotation speed, it can flip the sign of its angular momentum and reverse its rotation direction. We demonstrate this in a collisionless N-body simulation of a galaxy in a live counter-rotating halo. Reversal begins at small radii and propagates outward. The flip generates a kinematically decoupled core both in the visible galaxy and in the dark matter halo, and counter-rotation generates a large-scale warp of the outer disk with respect to the bar.

26 Sep 2016

By building on a recently introduced genetic-inspired, attribute-based conceptual framework for safety risk analysis, we propose a novel methodology to compute univariate and bivariate construction safety risk at a situational level. Our fully data-driven approach provides construction practitioners and academics with an easy, automated way to extract valuable empirical insights from databases of unstructured textual injury reports. By applying our methodology to an attribute and outcome dataset obtained directly from 814 injury reports, we show that the frequency-magnitude distribution of construction safety risk is very similar to that of natural phenomena such as precipitation or earthquakes. Motivated by this observation, and drawing on state-of-the-art techniques in hydroclimatology and insurance, we introduce univariate and bivariate nonparametric stochastic safety risk generators based on kernel density estimators and copulas. These generators enable the user to produce large numbers of synthetic safety risk values faithful to the original data, allowing safety-related decision-making under uncertainty to be grounded in extensive empirical evidence. Just as accurate modeling and simulation of natural phenomena such as wind or streamflow are indispensable to successful structure dimensioning or water reservoir management, we posit that improving construction safety calls for the accurate modeling, simulation, and assessment of safety risk. The underlying assumption is that, like natural phenomena, construction safety may benefit from being studied in an empirical and quantitative way rather than qualitatively, which is the current industry standard. Finally, an interesting side finding is that attributes related to high energy levels and to human error emerge as strong risk shapers in the dataset we used to illustrate our methodology.
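Sampling from a kernel density estimator is simple: pick an observed value uniformly at random and add kernel noise whose scale is the bandwidth. The sketch below assumes a Gaussian kernel with Silverman's rule-of-thumb bandwidth and reflects samples at zero on the assumption that safety risk values are non-negative (taking the absolute value of a sample is equivalent to sampling the KDE reflected about zero). The bivariate, copula-based generator is not shown, and the function names are illustrative.

```python
import random
import statistics
from typing import List, Sequence

def silverman_bandwidth(data: Sequence[float]) -> float:
    """Silverman's rule of thumb for a Gaussian kernel."""
    n = len(data)
    sd = statistics.stdev(data)
    q1, _, q3 = statistics.quantiles(data, n=4)
    iqr = q3 - q1
    spread = min(sd, iqr / 1.34) if iqr > 0 else sd
    return 0.9 * spread * n ** (-0.2)

def kde_sample(data: Sequence[float], n_samples: int, rng: random.Random) -> List[float]:
    """Draw synthetic values from a Gaussian KDE fitted to data."""
    h = silverman_bandwidth(data)
    out = []
    for _ in range(n_samples):
        # Pick an observed value, then perturb it with Gaussian kernel noise.
        x = rng.choice(data) + rng.gauss(0.0, h)
        out.append(abs(x))  # reflection at zero for non-negative risk values
    return out
```

Because the generator resamples observed values, synthetic risk values stay close to the empirical frequency-magnitude distribution while still filling in values between and slightly beyond the observed ones.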

26 May 2020

Axisymmetric disks of high-eccentricity, low-mass bodies on near-Keplerian orbits are unstable to out-of-plane buckling. This "inclination instability" exponentially grows the orbital inclinations, raises perihelion distances, and clusters the orbits in argument of perihelion. Here we examine the instability in a massive primordial scattered disk, including the orbit-averaged gravitational influence of the giant planets. We show that differential apsidal precession induced by the giant planets will suppress the inclination instability unless the primordial mass is ≳20 Earth masses. We also show that the instability should produce a "perihelion gap" at semi-major axes of hundreds of AU, as the orbits of the remnant population are more likely to have extremely large perihelion distances (O(100 AU)) than intermediate values.

09 Aug 2022

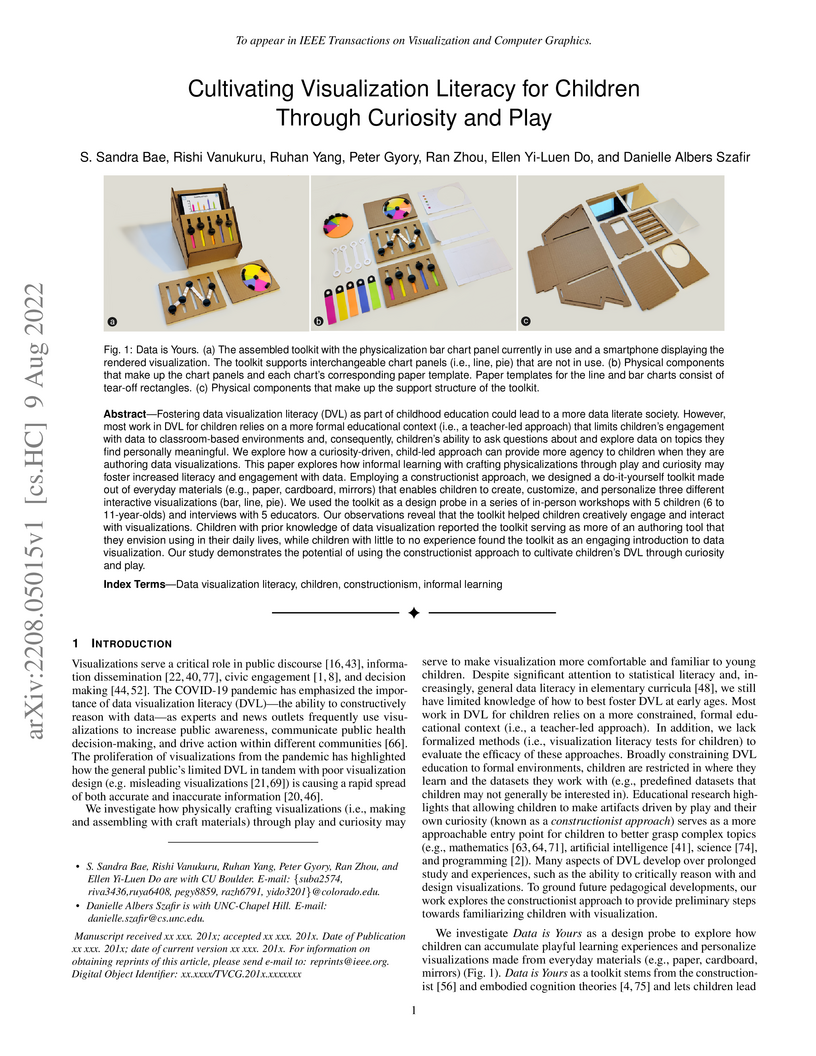

Fostering data visualization literacy (DVL) as part of childhood education could lead to a more data-literate society. However, most work in DVL for children relies on a more formal educational context (i.e., a teacher-led approach) that limits children's engagement with data to classroom-based environments and, consequently, children's ability to ask questions about and explore data on topics they find personally meaningful. We explore how a curiosity-driven, child-led approach can give children more agency when they author data visualizations. This paper explores how informal learning through crafting physicalizations with play and curiosity may foster increased literacy and engagement with data. Employing a constructionist approach, we designed a do-it-yourself toolkit made of everyday materials (e.g., paper, cardboard, mirrors) that enables children to create, customize, and personalize three different interactive visualizations (bar, line, pie). We used the toolkit as a design probe in a series of in-person workshops with five children (aged 6 to 11) and interviews with five educators. Our observations reveal that the toolkit helped children creatively engage and interact with visualizations. Children with prior knowledge of data visualization reported the toolkit serving more as an authoring tool that they envision using in their daily lives, while children with little to no experience found the toolkit an engaging introduction to data visualization. Our study demonstrates the potential of using the constructionist approach to cultivate children's DVL through curiosity and play.

In this paper, we present a novel framework to synthesize robust strategies for discrete-time nonlinear systems with unknown random disturbances against temporal logic specifications. The proposed framework is data-driven and abstraction-based: leveraging observations of the system, our approach learns a high-confidence abstraction of the system in the form of an uncertain Markov decision process (UMDP). The uncertainty in the resulting UMDP is used to formally account for both the error in abstracting the system and the uncertainty coming from the data. Critically, we show that for any given state-action pair in the resulting UMDP, the uncertainty in the transition probabilities can be represented as a convex polytope obtained by a two-layer state discretization and concentration inequalities. This allows us to obtain tighter uncertainty estimates than existing approaches and guarantees efficiency, as we tailor a synthesis algorithm that exploits the structure of this UMDP. We empirically validate our approach on several case studies, showing substantially improved performance compared to the state of the art.
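A simplified sketch of turning transition samples into an uncertainty set and using it for pessimistic evaluation. It assumes per-successor Hoeffding intervals combined with a union bound, which yields an interval set (one particular convex polytope) rather than the two-layer construction described above. The worst-case expectation over such an interval set is found greedily by starting from the lower bounds and assigning the remaining probability mass to the lowest-value successors first, the standard inner step of interval-MDP value iteration. Function names are illustrative.

```python
import math
from typing import List, Tuple

def hoeffding_intervals(counts: List[int], delta: float) -> List[Tuple[float, float]]:
    """Per-successor probability intervals that hold jointly with probability
    at least 1 - delta (Hoeffding bound plus a union bound over successors)."""
    n = sum(counts)
    eps = math.sqrt(math.log(2 * len(counts) / delta) / (2 * n))
    return [(max(0.0, c / n - eps), min(1.0, c / n + eps)) for c in counts]

def worst_case_expectation(intervals: List[Tuple[float, float]], values: List[float]) -> float:
    """min over {p : lo <= p <= hi, sum(p) = 1} of sum(p * values)."""
    probs = [lo for lo, _ in intervals]
    remaining = 1.0 - sum(probs)
    # Pour the free mass into the lowest-value successors first.
    for i in sorted(range(len(values)), key=lambda j: values[j]):
        add = min(intervals[i][1] - probs[i], remaining)
        probs[i] += add
        remaining -= add
    return sum(p * v for p, v in zip(probs, values))
```

Plugging `worst_case_expectation` into value iteration gives a lower bound on the probability of satisfying the specification that holds, with confidence 1 - delta, for every transition distribution consistent with the data.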

Translating knowledge-intensive and entity-rich text between English and Korean requires transcreation to preserve language-specific and cultural nuances beyond literal, phonetic, or word-for-word conversion. We evaluate 13 models (LLMs and MT models) using automatic metrics and human assessment by bilingual annotators. Our findings show that LLMs outperform traditional MT systems but struggle with entity translation requiring cultural adaptation. By constructing an error taxonomy, we identify incorrect responses and entity name errors as key issues, with performance varying by entity type and popularity level. This work exposes gaps in automatic evaluation metrics, and we hope it enables future work toward culturally nuanced machine translation.

CNRS; University of Oslo; SLAC National Accelerator Laboratory; DESY; University of Michigan; INFN; Fermilab; CERN; Argonne National Laboratory; Arizona State University; Brookhaven National Laboratory; Lawrence Berkeley National Laboratory; TRIUMF; Technical University Munich; Lawrence Livermore National Laboratory; University of Lisbon; Northern Illinois University; Karlsruhe Institute of Technology; Jefferson Laboratory; STFC; CU Boulder; University of Manchester

This document outlines a community-driven Design Study for a 10 TeV pCM Wakefield Accelerator Collider. The 2020 ESPP Report emphasized the need for Advanced Accelerator R&D, and the 2023 P5 Report calls for the "delivery of an end-to-end design concept, including cost scales, with self-consistent parameters throughout." This Design Study leverages recent experimental and theoretical progress resulting from a global R&D program in order to deliver a unified 10 TeV Wakefield Collider concept. Wakefield accelerators provide ultra-high accelerating gradients, which enable an upgrade path that will extend the reach of linear colliders beyond the electroweak scale. Here, we describe the organization of the Design Study, including its timeline and deliverables, and we detail the requirements and challenges on the path to a 10 TeV Wakefield Collider.
