Argo AI and university researchers introduce Argoverse 2, a collection of three datasets designed to advance self-driving perception and forecasting by providing richer taxonomies for long-tail objects, the largest unlabeled lidar collection to date, and a focus on challenging, diverse scenarios with comprehensive 3D HD maps. Baseline experiments demonstrate that existing models face difficulties with the expanded object categories and the increased complexity of motion forecasting in this new dataset.

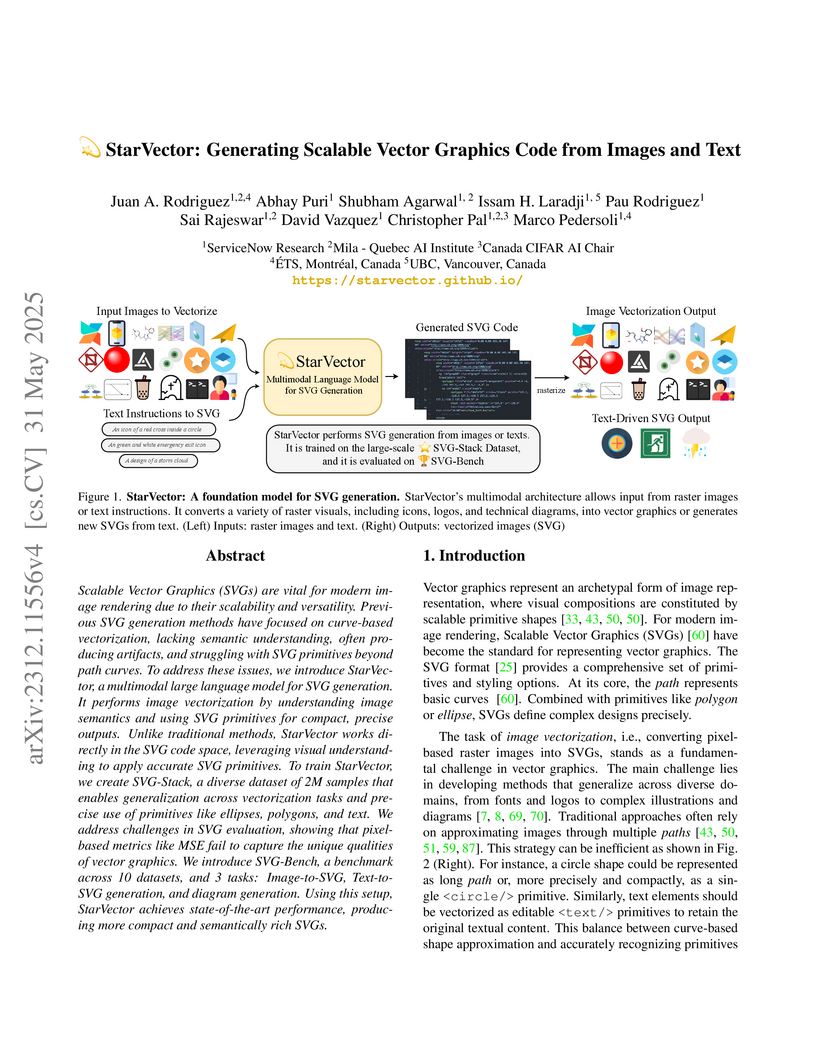

Scalable Vector Graphics (SVGs) are vital for modern image rendering due to

their scalability and versatility. Previous SVG generation methods have focused

on curve-based vectorization, lacking semantic understanding, often producing

artifacts, and struggling with SVG primitives beyond path curves. To address

these issues, we introduce StarVector, a multimodal large language model for

SVG generation. It performs image vectorization by understanding image

semantics and using SVG primitives for compact, precise outputs. Unlike

traditional methods, StarVector works directly in the SVG code space,

leveraging visual understanding to apply accurate SVG primitives. To train

StarVector, we create SVG-Stack, a diverse dataset of 2M samples that enables

generalization across vectorization tasks and precise use of primitives like

ellipses, polygons, and text. We address challenges in SVG evaluation, showing

that pixel-based metrics like MSE fail to capture the unique qualities of

vector graphics. We introduce SVG-Bench, a benchmark across 10 datasets, and 3

tasks: Image-to-SVG, Text-to-SVG generation, and diagram generation. Using this

setup, StarVector achieves state-of-the-art performance, producing more compact

and semantically rich SVGs.

Observing the temporal evolution of neural network predictions, termed "learning paths," reveals how models process noisy labels. This analysis leads to Filter-KD, a knowledge distillation method that averages teacher predictions over time to provide more stable supervisory signals, resulting in improved accuracy and calibration, particularly with label noise.

While deep learning methods have achieved strong performance in time series

prediction, their black-box nature and inability to explicitly model underlying

stochastic processes often limit their generalization to non-stationary data,

especially in the presence of abrupt changes. In this work, we introduce Neural

MJD, a neural network based non-stationary Merton jump diffusion (MJD) model.

Our model explicitly formulates forecasting as a stochastic differential

equation (SDE) simulation problem, combining a time-inhomogeneous It\^o

diffusion to capture non-stationary stochastic dynamics with a

time-inhomogeneous compound Poisson process to model abrupt jumps. To enable

tractable learning, we introduce a likelihood truncation mechanism that caps

the number of jumps within small time intervals and provide a theoretical error

bound for this approximation. Additionally, we propose an Euler-Maruyama with

restart solver, which achieves a provably lower error bound in estimating

expected states and reduced variance compared to the standard solver.

Experiments on both synthetic and real-world datasets demonstrate that Neural

MJD consistently outperforms state-of-the-art deep learning and statistical

learning methods.

LitLLM introduces a toolkit for scientific literature review that leverages a modular Retrieval Augmented Generation pipeline to efficiently produce related work sections. The system grounds LLM outputs in up-to-date scientific literature, mitigating hallucination and outdated knowledge, and provides both informative zero-shot and controllable plan-based generation options.

A fundamental problem in organic chemistry is identifying and predicting the

series of reactions that synthesize a desired target product molecule. Due to

the combinatorial nature of the chemical search space, single-step reactant

prediction -- i.e. single-step retrosynthesis -- remains challenging even for

existing state-of-the-art template-free generative approaches to produce an

accurate yet diverse set of feasible reactions. In this paper, we model

single-step retrosynthesis planning and introduce RETRO SYNFLOW (RSF) a

discrete flow-matching framework that builds a Markov bridge between the

prescribed target product molecule and the reactant molecule. In contrast to

past approaches, RSF employs a reaction center identification step to produce

intermediate structures known as synthons as a more informative source

distribution for the discrete flow. To further enhance diversity and

feasibility of generated samples, we employ Feynman-Kac steering with

Sequential Monte Carlo based resampling to steer promising generations at

inference using a new reward oracle that relies on a forward-synthesis model.

Empirically, we demonstrate \nameshort achieves 60.0% top-1 accuracy, which

outperforms the previous SOTA by 20%. We also substantiate the benefits of

steering at inference and demonstrate that FK-steering improves top-5

round-trip accuracy by 19% over prior template-free SOTA methods, all while

preserving competitive top-k accuracy results.

This paper introduces ReservoirTTA, a novel plug-in framework designed for prolonged test-time adaptation (TTA) in scenarios where the test domain continuously shifts over time, including cases where domains recur or evolve gradually. At its core, ReservoirTTA maintains a reservoir of domain-specialized models -- an adaptive test-time model ensemble -- that both detects new domains via online clustering over style features of incoming samples and routes each sample to the appropriate specialized model, and thereby enables domain-specific adaptation. This multi-model strategy overcomes key limitations of single model adaptation, such as catastrophic forgetting, inter-domain interference, and error accumulation, ensuring robust and stable performance on sustained non-stationary test distributions. Our theoretical analysis reveals key components that bound parameter variance and prevent model collapse, while our plug-in TTA module mitigates catastrophic forgetting of previously encountered domains. Extensive experiments on scene-level corruption benchmarks (ImageNet-C, CIFAR-10/100-C), object-level style shifts (DomainNet-126, PACS), and semantic segmentation (Cityscapes->ACDC) covering recurring and continuously evolving domain shifts -- show that ReservoirTTA substantially improves adaptation accuracy and maintains stable performance across prolonged, recurring shifts, outperforming state-of-the-art methods. Our code is publicly available at this https URL.

While learning to align Large Language Models (LLMs) with human preferences has shown remarkable success, aligning these models to meet the diverse user preferences presents further challenges in preserving previous knowledge. This paper examines the impact of personalized preference optimization on LLMs, revealing that the extent of knowledge loss varies significantly with preference heterogeneity. Although previous approaches have utilized the KL constraint between the reference model and the policy model, we observe that they fail to maintain general knowledge and alignment when facing personalized preferences. To this end, we introduce Base-Anchored Preference Optimization (BAPO), a simple yet effective approach that utilizes the initial responses of reference model to mitigate forgetting while accommodating personalized alignment. BAPO effectively adapts to diverse user preferences while minimally affecting global knowledge or general alignment. Our experiments demonstrate the efficacy of BAPO in various setups.

We approach self-supervised learning of image representations from a statistical dependence perspective, proposing Self-Supervised Learning with the Hilbert-Schmidt Independence Criterion (SSL-HSIC). SSL-HSIC maximizes dependence between representations of transformations of an image and the image identity, while minimizing the kernelized variance of those representations. This framework yields a new understanding of InfoNCE, a variational lower bound on the mutual information (MI) between different transformations. While the MI itself is known to have pathologies which can result in learning meaningless representations, its bound is much better behaved: we show that it implicitly approximates SSL-HSIC (with a slightly different regularizer). Our approach also gives us insight into BYOL, a negative-free SSL method, since SSL-HSIC similarly learns local neighborhoods of samples. SSL-HSIC allows us to directly optimize statistical dependence in time linear in the batch size, without restrictive data assumptions or indirect mutual information estimators. Trained with or without a target network, SSL-HSIC matches the current state-of-the-art for standard linear evaluation on ImageNet, semi-supervised learning and transfer to other classification and vision tasks such as semantic segmentation, depth estimation and object recognition. Code is available at this https URL .

Enhancing cryogenic electron microscopy (cryo-EM) 3D density maps at

intermediate resolution (4-8 {\AA}) is crucial in protein structure

determination. Recent advances in deep learning have led to the development of

automated approaches for enhancing experimental cryo-EM density maps. Yet,

these methods are not optimized for intermediate-resolution maps and rely on

map density features alone. To address this, we propose CryoSAMU, a novel

method designed to enhance 3D cryo-EM density maps of protein structures using

structure-aware multimodal U-Nets and trained on curated

intermediate-resolution density maps. We comprehensively evaluate CryoSAMU

across various metrics and demonstrate its competitive performance compared to

state-of-the-art methods. Notably, CryoSAMU achieves significantly faster

processing speed, showing promise for future practical applications. Our code

is available at this https URL

We introduce the Conditional Independence Regression CovariancE (CIRCE), a

measure of conditional independence for multivariate continuous-valued

variables. CIRCE applies as a regularizer in settings where we wish to learn

neural features φ(X) of data X to estimate a target Y, while being

conditionally independent of a distractor Z given Y. Both Z and Y are

assumed to be continuous-valued but relatively low dimensional, whereas X and

its features may be complex and high dimensional. Relevant settings include

domain-invariant learning, fairness, and causal learning. The procedure

requires just a single ridge regression from Y to kernelized features of Z,

which can be done in advance. It is then only necessary to enforce independence

of φ(X) from residuals of this regression, which is possible with

attractive estimation properties and consistency guarantees. By contrast,

earlier measures of conditional feature dependence require multiple regressions

for each step of feature learning, resulting in more severe bias and variance,

and greater computational cost. When sufficiently rich features are used, we

establish that CIRCE is zero if and only if $\varphi(X) \perp \!\!\! \perp Z

\mid Y$. In experiments, we show superior performance to previous methods on

challenging benchmarks, including learning conditionally invariant image

features.

In this work, we utilize Large Language Models (LLMs) for a novel use case: constructing Performance Predictors (PP) that estimate the performance of specific deep neural network architectures on downstream tasks. We create PP prompts for LLMs, comprising (i) role descriptions, (ii) instructions for the LLM, (iii) hyperparameter definitions, and (iv) demonstrations presenting sample architectures with efficiency metrics and `training from scratch' performance. In machine translation (MT) tasks, GPT-4 with our PP prompts (LLM-PP) achieves a SoTA mean absolute error and a slight degradation in rank correlation coefficient compared to baseline predictors. Additionally, we demonstrate that predictions from LLM-PP can be distilled to a compact regression model (LLM-Distill-PP), which surprisingly retains much of the performance of LLM-PP. This presents a cost-effective alternative for resource-intensive performance estimation. Specifically, for Neural Architecture Search (NAS), we introduce a Hybrid-Search algorithm (HS-NAS) employing LLM-Distill-PP for the initial search stages and reverting to the baseline predictor later. HS-NAS performs similarly to SoTA NAS, reducing search hours by approximately 50%, and in some cases, improving latency, GFLOPs, and model size. The code can be found at: this https URL.

The advent of foundation models (FMs) in healthcare offers unprecedented opportunities to enhance medical diagnostics through automated classification and segmentation tasks. However, these models also raise significant concerns about their fairness, especially when applied to diverse and underrepresented populations in healthcare applications. Currently, there is a lack of comprehensive benchmarks, standardized pipelines, and easily adaptable libraries to evaluate and understand the fairness performance of FMs in medical imaging, leading to considerable challenges in formulating and implementing solutions that ensure equitable outcomes across diverse patient populations. To fill this gap, we introduce FairMedFM, a fairness benchmark for FM research in medical this http URL integrates with 17 popular medical imaging datasets, encompassing different modalities, dimensionalities, and sensitive attributes. It explores 20 widely used FMs, with various usages such as zero-shot learning, linear probing, parameter-efficient fine-tuning, and prompting in various downstream tasks -- classification and segmentation. Our exhaustive analysis evaluates the fairness performance over different evaluation metrics from multiple perspectives, revealing the existence of bias, varied utility-fairness trade-offs on different FMs, consistent disparities on the same datasets regardless FMs, and limited effectiveness of existing unfairness mitigation methods. Checkout FairMedFM's project page and open-sourced codebase, which supports extendible functionalities and applications as well as inclusive for studies on FMs in medical imaging over the long term.

We introduce a technique for pairwise registration of neural fields that extends classical optimization-based local registration (i.e. ICP) to operate on Neural Radiance Fields (NeRF) -- neural 3D scene representations trained from collections of calibrated images. NeRF does not decompose illumination and color, so to make registration invariant to illumination, we introduce the concept of a ''surface field'' -- a field distilled from a pre-trained NeRF model that measures the likelihood of a point being on the surface of an object. We then cast nerf2nerf registration as a robust optimization that iteratively seeks a rigid transformation that aligns the surface fields of the two scenes. We evaluate the effectiveness of our technique by introducing a dataset of pre-trained NeRF scenes -- our synthetic scenes enable quantitative evaluations and comparisons to classical registration techniques, while our real scenes demonstrate the validity of our technique in real-world scenarios. Additional results available at: this https URL

To adapt kernel two-sample and independence testing to complex structured data, aggregation of multiple kernels is frequently employed to boost testing power compared to single-kernel tests. However, we observe a phenomenon that directly maximizing multiple kernel-based statistics may result in highly similar kernels that capture highly overlapping information, limiting the effectiveness of aggregation. To address this, we propose an aggregated statistic that explicitly incorporates kernel diversity based on the covariance between different kernels. Moreover, we identify a fundamental challenge: a trade-off between the diversity among kernels and the test power of individual kernels, i.e., the selected kernels should be both effective and diverse. This motivates a testing framework with selection inference, which leverages information from the training phase to select kernels with strong individual performance from the learned diverse kernel pool. We provide rigorous theoretical statements and proofs to show the consistency on the test power and control of Type-I error, along with asymptotic analysis of the proposed statistics. Lastly, we conducted extensive empirical experiments demonstrating the superior performance of our proposed approach across various benchmarks for both two-sample and independence testing.

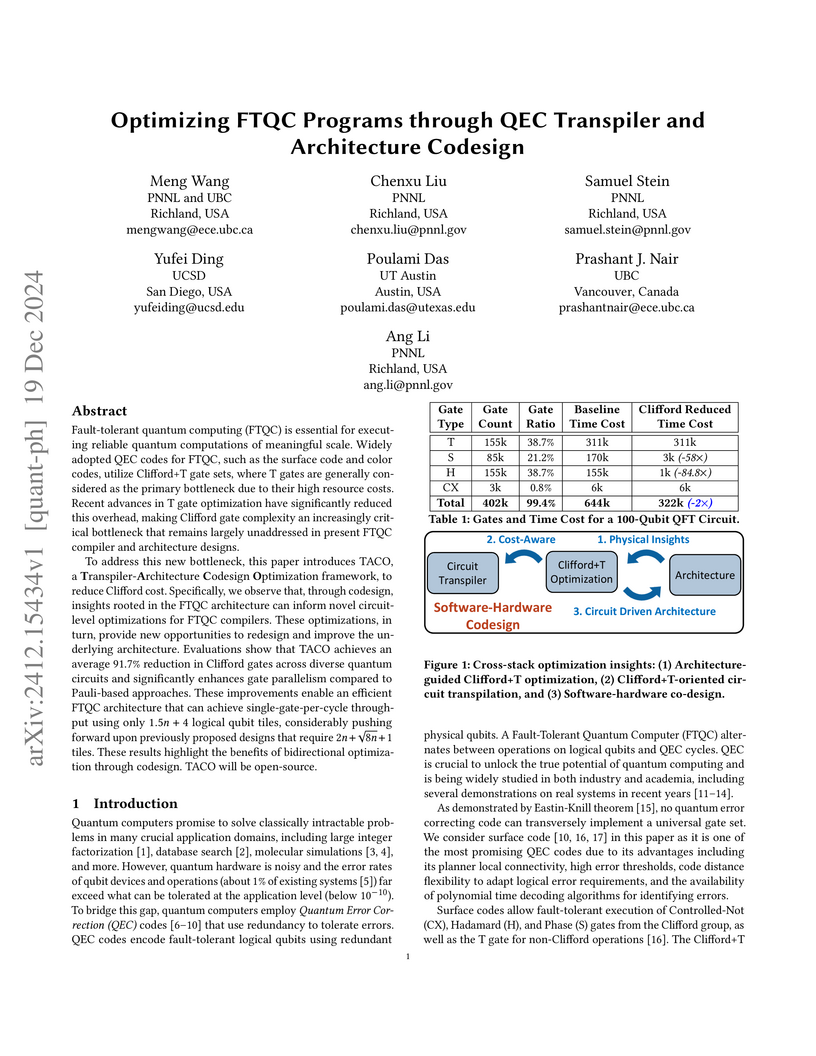

Fault-tolerant quantum computing (FTQC) is essential for executing reliable quantum computations of meaningful scale. Widely adopted QEC codes for FTQC, such as the surface code and color codes, utilize Clifford+T gate sets, where T gates are generally considered as the primary bottleneck due to their high resource costs. Recent advances in T gate optimization have significantly reduced this overhead, making Clifford gate complexity an increasingly critical bottleneck that remains largely unaddressed in present FTQC compiler and architecture designs. To address this new bottleneck, this paper introduces TACO, a \textbf{T}ranspiler-\textbf{A}rchitecture \textbf{C}odesign \textbf{O}ptimization framework, to reduce Clifford cost. Specifically, we observe that, through codesign, insights rooted in the FTQC architecture can inform novel circuit-level optimizations for FTQC compilers. These optimizations, in turn, provide new opportunities to redesign and improve the underlying architecture. Evaluations show that TACO achieves an average 91.7% reduction in Clifford gates across diverse quantum circuits and significantly enhances gate parallelism compared to Pauli-based approaches. These improvements enable an efficient FTQC architecture that can achieve single-gate-per-cycle throughput using only 1.5n+4 logical qubit tiles, considerably pushing forward upon previously proposed designs that require 2n+8n+1 tiles. These results highlight the benefits of bidirectional optimization through codesign. TACO will be open-source.

09 Apr 2024

We consider inexact policy iteration methods for large-scale infinite-horizon

discounted MDPs with finite spaces, a variant of policy iteration where the

policy evaluation step is implemented inexactly using an iterative solver for

linear systems. In the classical dynamic programming literature, a similar

principle is deployed in optimistic policy iteration, where an a-priori

fixed-number of iterations of value iteration is used to inexactly solve the

policy evaluation step. Inspired by the connection between policy iteration and

semismooth Newton's method, we investigate a class of iPI methods that mimic

the inexact variants of semismooth Newton's method by adopting a parametric

stopping condition to regulate the level of inexactness of the policy

evaluation step. For this class of methods we discuss local and global

convergence properties and derive a practical range of values for the

stopping-condition parameter that provide contraction guarantees. Our analysis

is general and therefore encompasses a variety of iterative solvers for policy

evaluation, including the standard value iteration as well as more

sophisticated ones such as GMRES. As underlined by our analysis, the selection

of the inner solver is of fundamental importance for the performance of the

overall method. We therefore consider different iterative methods to solve the

policy evaluation step and analyze their applicability and contraction

properties when used for policy evaluation. We show that the contraction

properties of these methods tend to be enhanced by the specific structure of

policy evaluation and that there is margin for substantial improvement in terms

of convergence rate. Finally, we study the numerical performance of different

instances of inexact policy iteration on large-scale MDPs for the design of

health policies to control the spread of infectious diseases in epidemiology.

04 Jan 2022

We consider interpolation learning in high-dimensional linear regression with

Gaussian data, and prove a generic uniform convergence guarantee on the

generalization error of interpolators in an arbitrary hypothesis class in terms

of the class's Gaussian width. Applying the generic bound to Euclidean norm

balls recovers the consistency result of Bartlett et al. (2020) for

minimum-norm interpolators, and confirms a prediction of Zhou et al. (2020) for

near-minimal-norm interpolators in the special case of Gaussian data. We

demonstrate the generality of the bound by applying it to the simplex,

obtaining a novel consistency result for minimum l1-norm interpolators (basis

pursuit). Our results show how norm-based generalization bounds can explain and

be used to analyze benign overfitting, at least in some settings.

Hybrid refractive-diffractive lenses combine the light efficiency of refractive lenses with the information encoding power of diffractive optical elements (DOE), showing great potential as the next generation of imaging systems. However, accurately simulating such hybrid designs is generally difficult, and in particular, there are no existing differentiable image formation models for hybrid lenses with sufficient accuracy.

In this work, we propose a new hybrid ray-tracing and wave-propagation (ray-wave) model for accurate simulation of both optical aberrations and diffractive phase modulation, where the DOE is placed between the last refractive surface and the image sensor, i.e. away from the Fourier plane that is often used as a DOE position. The proposed ray-wave model is fully differentiable, enabling gradient back-propagation for end-to-end co-design of refractive-diffractive lens optimization and the image reconstruction network. We validate the accuracy of the proposed model by comparing the simulated point spread functions (PSFs) with theoretical results, as well as simulation experiments that show our model to be more accurate than solutions implemented in commercial software packages like Zemax. We demonstrate the effectiveness of the proposed model through real-world experiments and show significant improvements in both aberration correction and extended depth-of-field (EDoF) imaging. We believe the proposed model will motivate further investigation into a wide range of applications in computational imaging, computational photography, and advanced optical design. Code will be released upon publication.

Real-time tracking of small unmanned aerial vehicles (UAVs) on edge devices faces a fundamental resolution-speed conflict. Downsampling high-resolution imagery to standard detector input sizes causes small target features to collapse below detectable thresholds. Yet processing native 1080p frames on resource-constrained platforms yields insufficient throughput for smooth gimbal control. We propose SDG-Track, a Sparse Detection-Guided Tracker that adopts an Observer-Follower architecture to reconcile this conflict. The Observer stream runs a high-capacity detector at low frequency on the GPU to provide accurate position anchors from 1920x1080 frames. The Follower stream performs high-frequency trajectory interpolation via ROI-constrained sparse optical flow on the CPU. To handle tracking failures from occlusion or model drift caused by spectrally similar distractors, we introduce Dual-Space Recovery, a training-free re-acquisition mechanism combining color histogram matching with geometric consistency constraints. Experiments on a ground-to-air tracking station demonstrate that SDG-Track achieves 35.1 FPS system throughput while retaining 97.2\% of the frame-by-frame detection precision. The system successfully tracks agile FPV drones under real-world operational conditions on an NVIDIA Jetson Orin Nano. Our paper code is publicly available at this https URL

There are no more papers matching your filters at the moment.