De La Salle University

Researchers at De La Salle University empirically evaluated Fitts' Law within a 3D tangible mixed reality environment using the PointARs system, finding optimal tangible cube dimensions between 1.5 and 2 inches and distances of 2 inches for efficient interaction. The study revealed that larger target cubes paradoxically increased completion times, likely due to system tracking limitations rather than theoretical difficulty.

Researchers at De La Salle University developed and evaluated "Point n Move," a glove-based pointing device designed as an alternative input for in-person presentations. While the prototypes demonstrated basic usability and an improved second iteration, they did not match the performance or intuitiveness of a commercial benchmark, revealing challenges with precision and sensor drift.

As Large Language Models become integral to software development, with substantial portions of AI-suggested code entering production, understanding their internal correctness mechanisms becomes critical for safe deployment. We apply sparse autoencoders to decompose LLM representations, identifying directions that correspond to code correctness. We select predictor directions using t-statistics and steering directions through separation scores from base model representations, then analyze their mechanistic properties through steering, attention analysis, and weight orthogonalization. We find that code correctness directions in LLMs reliably predict incorrect code, while correction capabilities, though statistically significant, involve tradeoffs between fixing errors and preserving correct code. Mechanistically, successful code generation depends on attending to test cases rather than problem descriptions. Moreover, directions identified in base models retain their effectiveness after instruction-tuning, suggesting code correctness mechanisms learned during pre-training are repurposed during fine-tuning. Our mechanistic insights suggest three practical applications: prompting strategies should prioritize test examples over elaborate problem descriptions, predictor directions can serve as error alarms for developer review, and these same predictors can guide selective steering, intervening only when errors are anticipated to prevent the code corruption from constant steering.

The exploration of brain networks has reached an important milestone as

relatively large and reliable information has been gathered for connectomes of

different species. Analyses of connectome data sets reveal that the structural

length and the distributions of in- and out-node strengths follow heavy-tailed

lognormal statistics, while the functional network properties exhibit powerlaw

tails, suggesting that the brain operates close to a critical point where

computational capabilities and sensitivity to stimulus is optimal. Because

these universal network features emerge from bottom-up (self-)organization, one

can pose the question of whether they can be modeled via a common framework,

particularly through the lens of criticality of statistical physical systems.

Here, we simultaneously reproduce the powerlaw statistics of connectome edge

weights and the lognormal distributions of node strengths from an

avalanche-type model with learning that operates on baseline networks that

mimic the neuronal circuitry. We observe that the avalanches created by a

sandpile-like model on simulated neurons connected by a hierarchical modular

network (HMN) produce robust powerlaw avalanche size distributions with

critical exponents of 3/2 characteristic of neuronal systems. Introducing

Hebbian learning, wherein neurons that `fire together, wire together,' recovers

the powerlaw distribution of edge weights and the lognormal distributions of

node degrees, comparable to those obtained from connectome data. Our results

strengthen the notion of a critical brain, one whose local interactions drive

connectivity and learning without a need for external intervention and precise

tuning.

There had been recent advancement toward the detection of ultra-faint dwarf galaxies, which may serve as a useful laboratory for dark matter exploration since some of them contains almost 99% of pure dark matter. The majority of these galaxies contain no black hole that inhabits them. Recently, there had been reports that some dwarf galaxies may have a black hole within. In this study, we construct a black hole solution combined with the Dehnen dark matter halo profile, which is commonly used for dwarf galaxies. We aim to find out whether there would be deviations relative to the standard black hole properties, which might allow determining whether the dark matter profile in an ultra-faint dwarf galaxy is cored or cuspy. To make the model more realistic, we applied the modified Newman-Janis prescription to obtain the rotating metric. We analyzed the black hole properties such as the event horizon, ergoregion, geodesics of time-like and null particles, and the black hole shadow. Using these observables, the results indicate the difficulty of distinguishing whether the dark matter is cored or cuspy. To find an observable that can potentially distinguish these two profiles, we also calculated the weak deflection angle to examine the effect of the Dehnen profile in finite distance and far approximation. Our results indicate that using the weak deflection angle is far better, in many orders of magnitude, in potentially differentiating these profiles. We conclude that although dwarf galaxies are dark matter-dominated places, the effect on the Dehnen profile is still dependent on the mass of the black hole, considering the method used herein.

Deep clustering algorithms combine representation learning and clustering by

jointly optimizing a clustering loss and a non-clustering loss. In such

methods, a deep neural network is used for representation learning together

with a clustering network. Instead of following this framework to improve

clustering performance, we propose a simpler approach of optimizing the

entanglement of the learned latent code representation of an autoencoder. We

define entanglement as how close pairs of points from the same class or

structure are, relative to pairs of points from different classes or

structures. To measure the entanglement of data points, we use the soft nearest

neighbor loss, and expand it by introducing an annealing temperature factor.

Using our proposed approach, the test clustering accuracy was 96.2% on the

MNIST dataset, 85.6% on the Fashion-MNIST dataset, and 79.2% on the EMNIST

Balanced dataset, outperforming our baseline models.

24 Dec 2021

A complex balanced kinetic system is absolutely complex balanced (ACB) if every positive equilibrium is complex balanced. Two results on absolute complex balancing were foundational for modern chemical reaction network theory (CRNT): in 1972, M. Feinberg proved that any deficiency zero complex balanced system is absolutely complex balanced. In the same year, F. Horn and R. Jackson showed that the (full) converse of the result is not true: any complex balanced mass action system, regardless of its deficiency, is absolutely complex balanced. In this paper, we present initial results on the extension of the Horn and Jackson ACB Theorem. In particular, we focus on other kinetic systems with positive deficiency where complex balancing implies absolute complex balancing. While doing so, we found out that complex balanced power law reactant determined kinetic systems (PL-RDK) systems are not ACB. In our search for necessary and sufficient conditions for complex balanced systems to be absolutely complex balanced, we came across the so-called CLP systems (complex balanced systems with a desired "log parametrization" property). It is shown that complex balanced systems with bi-LP property are absolutely complex balanced. For non-CLP systems, we discuss novel methods for finding sufficient conditions for ACB in kinetic systems containing non-CLP systems: decompositions, the Positive Function Factor (PFF) and the Coset Intersection Count (CIC) and their application to poly-PL and Hill-type systems.

The use of the internet as a fast medium of spreading fake news reinforces

the need for computational tools that combat it. Techniques that train fake

news classifiers exist, but they all assume an abundance of resources including

large labeled datasets and expert-curated corpora, which low-resource languages

may not have. In this work, we make two main contributions: First, we alleviate

resource scarcity by constructing the first expertly-curated benchmark dataset

for fake news detection in Filipino, which we call "Fake News Filipino."

Second, we benchmark Transfer Learning (TL) techniques and show that they can

be used to train robust fake news classifiers from little data, achieving 91%

accuracy on our fake news dataset, reducing the error by 14% compared to

established few-shot baselines. Furthermore, lifting ideas from multitask

learning, we show that augmenting transformer-based transfer techniques with

auxiliary language modeling losses improves their performance by adapting to

writing style. Using this, we improve TL performance by 4-6%, achieving an

accuracy of 96% on our best model. Lastly, we show that our method generalizes

well to different types of news articles, including political news,

entertainment news, and opinion articles.

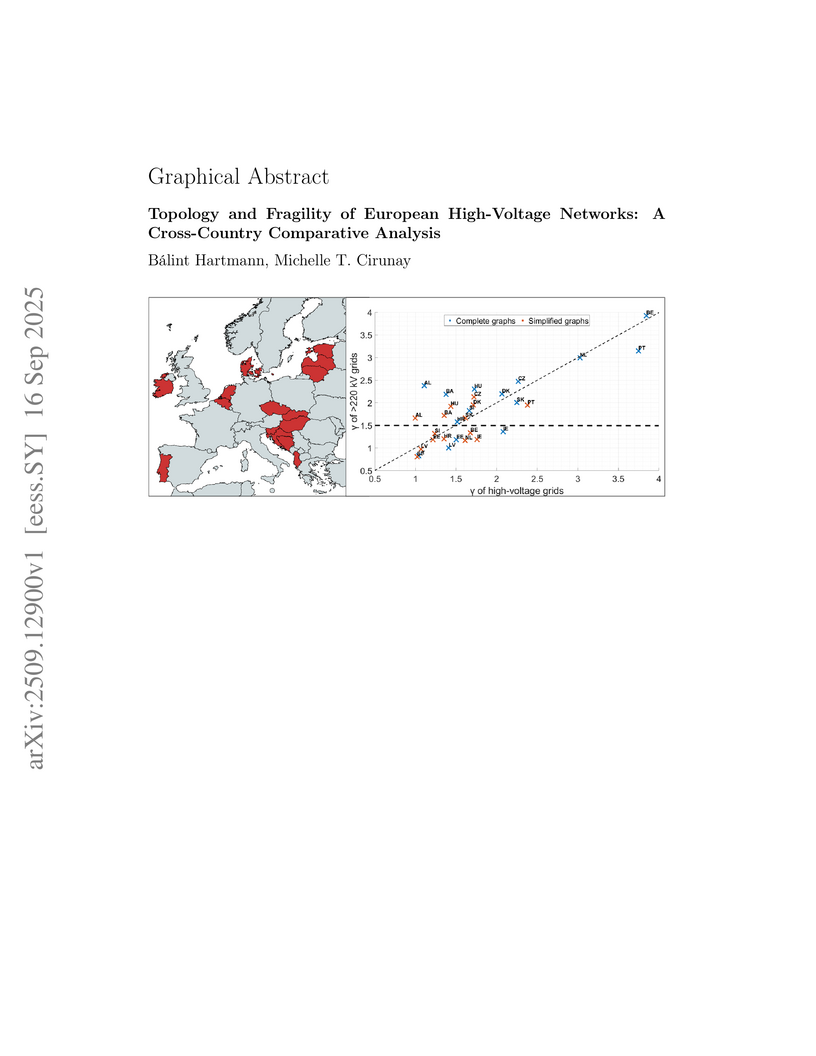

Reliable electricity supply depends on the seamless operation of high-voltage grid infrastructure spanning both transmission and sub-transmission levels. Beneath this apparent uniformity lies a striking structural diversity, which leaves a clear imprint on system vulnerability. In this paper, we present harmonized topological models of the high-voltage grids of 15 European countries, integrating all elements at voltage levels above 110 kV. Topological analysis of these networks reveals a simple yet robust pattern: node degree distributions consistently follow an exponential decay, but the rate of decay varies significantly across countries. Through a detailed and systematic evaluation of network tolerance to node and edge removals, we show that the decay rate delineates the boundary between systems that are more resilient to failures and those that are prone to large-scale disruptions. Furthermore, we demonstrate that this numerical boundary is highly sensitive to which layers of the infrastructure are included in the models. To our knowledge, this study provides the first quantitative cross-country comparison of 15 European high-voltage networks, linking topological properties with vulnerability characteristics.

Creative coding is an experimentation-heavy activity that requires

translating high-level visual ideas into code. However, most languages and

libraries for creative coding may not be adequately intuitive for beginners. In

this paper, we present AniFrame, a domain-specific language for drawing and

animation. Designed for novice programmers, it (i) features animation-specific

data types, operations, and built-in functions to simplify the creation and

animation of composite objects, (ii) allows for fine-grained control over

animation sequences through explicit specification of the target object and the

start and end frames, (iii) reduces the learning curve through a Python-like

syntax, type inferencing, and a minimal set of control structures and keywords

that map closely to their semantic intent, and (iv) promotes computational

expressivity through support for common mathematical operations, built-in

trigonometric functions, and user-defined recursion. Our usability test

demonstrates AniFrame's potential to enhance readability and writability for

multiple creative coding use cases. AniFrame is open-source, and its

implementation and reference are available at

this https URL

Poincaré Gauge's theory of gravity is the most noteworthy alternative extension of general relativity that has a correspondence between spin and spacetime geometry. In this paper, we use Reissner-Nordstrom-de Sitter and anti-de Sitter solutions, where torsion τ is added as an independent field, to analyze the weak deflection angles α^ of massive and null particles in finite distance regime. We then apply α^ to determine the Einstein ring formation in M87* and Sgr. A* and determine that relative to Earth's location from these black holes, massive torsion effects can provide considerable deviation, while the cosmological constant's effect remains negligible. Furthermore, we also explore how the torsion parameter affects the shadow radius perceived by both static and co-moving (with cosmic expansion) observers in a Universe dominated by dark energy, matter, and radiation. Our findings indicate that torsion and cosmological constant parameters affect the shadow radius differently between observers in static and co-moving states. We also show how the torsion parameter affects the luminosity of the photonsphere by studying the shadow with infalling accretion. The calculation of the quasinormal modes, greybody bounds, and high-energy absorption cross-section are also affected by the torsion parameter considerably.

27 Apr 2023

The objective of this study is to determine Education in the Digital World

from the lens of millennial learners. This also identifies the cybergogical

implications of the issue with digital education as seen through the lens of

the outlier.

This study uses a mixed methods sequential explanatory design. A quantitative

method was employed during the first phase and the instruments of the study

were distributed using google forms. The survey received a total of 85

responses and the results were analyzed using descriptive methods. Following up

with a qualitative method, during the second phase the outliers were

interviewed,and the results were analyzed using thematic analysis. The results

of the mixed methods were interpreted in the form of cybergogical implications.

The digital education from the lens of millennial learners in terms of the

Benefits of E-Learning and Students Perceptions of E Learning received an

overall mean of 3.68 which was verbally interpreted as Highly acceptable. The

results reveal that millennial learners perceptions of digital education are

influenced by the convenience in time and location, the fruit of collaboration

using online interaction, the skills and knowledge they will acquire using

digital resources, and the capability of improving themselves for the future.

Millennial learners were able to adopt and learned how to use e learning.

Also, since it is self paced learning,it allows them to study on their own time

and schedule since e learning can be accessed anytime and anywhere. However,

the technological resources of the learners should be considered in the

implementation of e learning.

Community-level bans are a common tool against groups that enable online harassment and harmful speech. Unfortunately, the efficacy of community bans has only been partially studied and with mixed results. Here, we provide a flexible unsupervised methodology to identify in-group language and track user activity on Reddit both before and after the ban of a community (subreddit). We use a simple word frequency divergence to identify uncommon words overrepresented in a given community, not as a proxy for harmful speech but as a linguistic signature of the community. We apply our method to 15 banned subreddits, and find that community response is heterogeneous between subreddits and between users of a subreddit. Top users were more likely to become less active overall, while random users often reduced use of in-group language without decreasing activity. Finally, we find some evidence that the effectiveness of bans aligns with the content of a community. Users of dark humor communities were largely unaffected by bans while users of communities organized around white supremacy and fascism were the most affected. Altogether, our results show that bans do not affect all groups or users equally, and pave the way to understanding the effect of bans across communities.

This study aims to determine a predictive model to learn students probability to pass their courses taken at the earliest stage of the semester. To successfully discover a good predictive model with high acceptability, accurate, and precision rate which delivers a useful outcome for decision making in education systems, in improving the processes of conveying knowledge and uplifting students academic performance, the proponent applies and strictly followed the CRISP-DM (Cross-Industry Standard Process for Data Mining) methodology. This study employs classification for data mining techniques, and decision tree for algorithm. With the utilization of the newly discovered predictive model, the prediction of students probabilities to pass the current courses they take gives 0.7619 accuracy, 0.8333 precision, 0.8823 recall, and 0.8571 f1 score, which shows that the model used in the prediction is reliable, accurate, and recommendable. Considering the indicators and the results, it can be noted that the prediction model used in this study is highly acceptable. The data mining techniques provides effective and efficient innovative tools in analyzing and predicting student performances. The model used in this study will greatly affect the way educators understand and identify the weakness of their students in the class, the way they improved the effectiveness of their learning processes gearing to their students, bring down academic failure rates, and help institution administrators modify their learning system outcomes. Further study for the inclusion of some students demographic information, vast amount of data within the dataset, automated and manual process of predictive criteria indicators where the students can regulate to which criteria, they must improve more for them to pass their courses taken at the end of the semester as early as midterm period are highly needed.

A graph operator is a function Γ defined on some set of graphs such that whenever two graphs G and H are isomorphic, written G≃H, then Γ(G)≃Γ(H). For a graph G not in the domain of Γ, we put Γ(G)=∅. Also, let us define Γ0(G)=G, and for any integr k≥1, Γk(G)=Γ(Γk−1(G))

We prove that if Γ is a graph operator, then the sequence ⟨Γk(G)⟩k=0∞ has only three possible types of behaviour. Either Γk(G)=∅ for some integer k>0, or k→∞lim∣V(Γk(G))∣=∞, or there exist integers m≥0, p>0 such that the graphs Γj(G) are non-isomorphic (0≤j≤m), and Γn+p≃Γn(G) for all integers n≥m. We illustrate this using two new graph operators, namely, the path graph operator and the claw graph operator.

03 Jul 2023

Let q be a nonzero complex number that is not a root of unity. In the q-oscillator with commutation relation aa+−qa+a=1, it is known that the smallest commutator algebra of operators containing the creation and annihilation operators a+ and a is the linear span of a+ and a, together with all operators of the form a+l[a,a+]k, and [a,a+]kal, where l is a nonnegative integer and k is a positive integer. That is, linear combinations of operators of the form ah or (a+)h with h≥2 or h=0 are outside the commutator algebra generated by a and a+. This is a solution to the Lie polynomial characterization problem for the associative algebra generated by a+ and a. In this work, we extend the Lie polynomial characterization into the associative algebra P=P(q) generated by a, a+, and the operator eωN for some nonzero real parameter ω, where N is the number operator, and we relate this to a q-oscillator representation of the Askey-Wilson algebra AW(3).

30 Nov 2024

Smart Home Assistants (SHAs) have become ubiquitous in modern households, offering convenience and efficiency through its voice interface. However, for Deaf and Hard-of-Hearing (DHH) individuals, the reliance on auditory and textual feedback through a screen poses significant challenges. Existing solutions primarily focus on sign language input but overlook the need for seamless interaction and feedback modalities. This paper envisions SHAs designed specifically for DHH users, focusing on accessibility and inclusion. We discuss integrating augmented reality (AR) for visual feedback, support for multimodal input, including sign language and gestural commands, and context awareness through sound detection. Our vision highlights the importance of considering the diverse communication needs of the DHH community in developing SHA to ensure equitable access to smart home technology.

Push notifications are brief messages that users frequently encounter in their daily lives. However, the volume of notifications can lead to information overload, making it challenging for users to engage effectively. This study investigates how notification behavior and color influence user interaction and perception. To explore this, we developed an app prototype that tracks user interactions with notifications, categorizing them as accepted, dismissed, or ignored. After each interaction, users were asked to complete a survey regarding their perception of the notifications. The study focused on how different notification colors might affect the likelihood of acceptance and perceived importance. The results reveal that certain colors were more likely to be accepted and were perceived as more important compared to others, suggesting that both color and behavior play significant roles in shaping user engagement with notifications.

03 Jul 2025

This paper examines a critical yet unexplored dimension of the AI alignment problem: the potential for Large Language Models (LLMs) to inherit and amplify existing misalignments between human espoused theories and theories-in-use. Drawing on action science research, we argue that LLMs trained on human-generated text likely absorb and reproduce Model 1 theories-in-use - a defensive reasoning pattern that both inhibits learning and creates ongoing anti-learning dynamics at the dyad, group, and organisational levels. Through a detailed case study of an LLM acting as an HR consultant, we show how its advice, while superficially professional, systematically reinforces unproductive problem-solving approaches and blocks pathways to deeper organisational learning. This represents a specific instance of the alignment problem where the AI system successfully mirrors human behaviour but inherits our cognitive blind spots. This poses particular risks if LLMs are integrated into organisational decision-making processes, potentially entrenching anti-learning practices while lending authority to them. The paper concludes by exploring the possibility of developing LLMs capable of facilitating Model 2 learning - a more productive theory-in-use - and suggests this effort could advance both AI alignment research and action science practice. This analysis reveals an unexpected symmetry in the alignment challenge: the process of developing AI systems properly aligned with human values could yield tools that help humans themselves better embody those same values.

Wordnets are indispensable tools for various natural language processing applications. Unfortunately, wordnets get outdated, and producing or updating wordnets can be slow and costly in terms of time and resources. This problem intensifies for low-resource languages. This study proposes a method for word sense induction and synset induction using only two linguistic resources, namely, an unlabeled corpus and a sentence embeddings-based language model. The resulting sense inventory and synonym sets can be used in automatically creating a wordnet. We applied this method on a corpus of Filipino text. The sense inventory and synsets were evaluated by matching them with the sense inventory of the machine translated Princeton WordNet, as well as comparing the synsets to the Filipino WordNet. This study empirically shows that the 30% of the induced word senses are valid and 40% of the induced synsets are valid in which 20% are novel synsets.

There are no more papers matching your filters at the moment.