ETSUniversity of Quebec

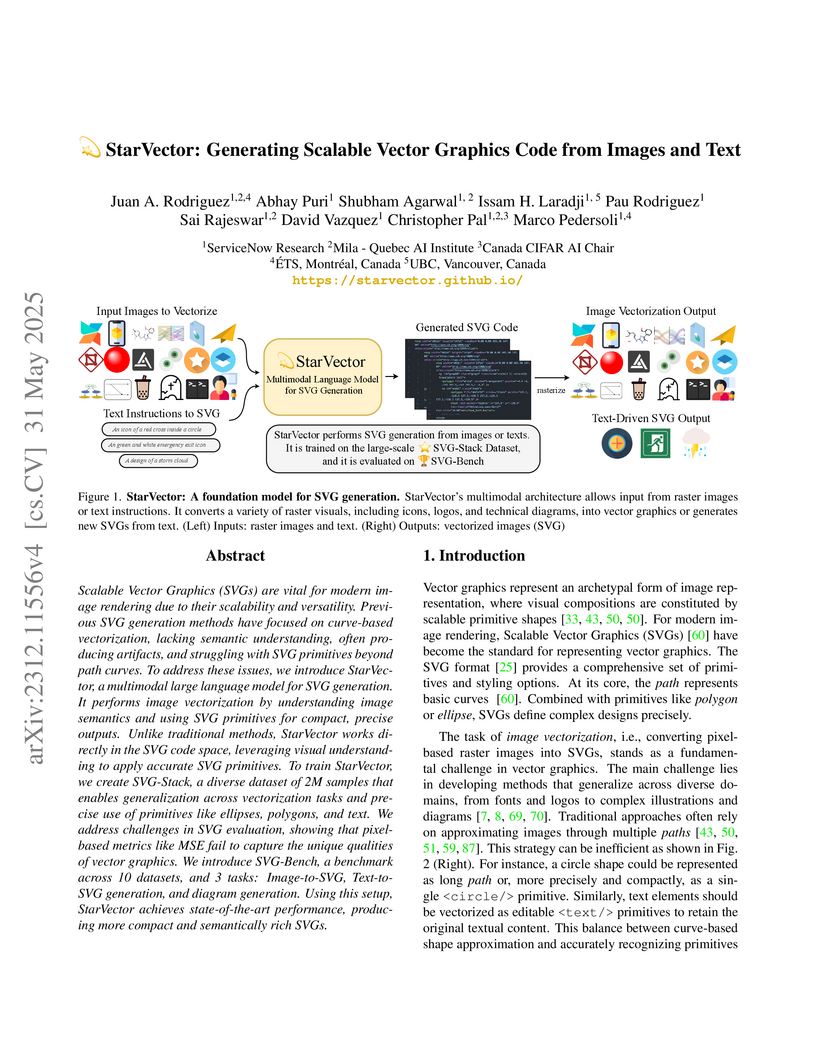

Scalable Vector Graphics (SVGs) are vital for modern image rendering due to

their scalability and versatility. Previous SVG generation methods have focused

on curve-based vectorization, lacking semantic understanding, often producing

artifacts, and struggling with SVG primitives beyond path curves. To address

these issues, we introduce StarVector, a multimodal large language model for

SVG generation. It performs image vectorization by understanding image

semantics and using SVG primitives for compact, precise outputs. Unlike

traditional methods, StarVector works directly in the SVG code space,

leveraging visual understanding to apply accurate SVG primitives. To train

StarVector, we create SVG-Stack, a diverse dataset of 2M samples that enables

generalization across vectorization tasks and precise use of primitives like

ellipses, polygons, and text. We address challenges in SVG evaluation, showing

that pixel-based metrics like MSE fail to capture the unique qualities of

vector graphics. We introduce SVG-Bench, a benchmark across 10 datasets, and 3

tasks: Image-to-SVG, Text-to-SVG generation, and diagram generation. Using this

setup, StarVector achieves state-of-the-art performance, producing more compact

and semantically rich SVGs.

The generative modeling landscape has experienced tremendous growth in recent years, particularly in generating natural images and art. Recent techniques have shown impressive potential in creating complex visual compositions while delivering impressive realism and quality. However, state-of-the-art methods have been focusing on the narrow domain of natural images, while other distributions remain unexplored. In this paper, we introduce the problem of text-to-figure generation, that is creating scientific figures of papers from text descriptions. We present FigGen, a diffusion-based approach for text-to-figure as well as the main challenges of the proposed task. Code and models are available at this https URL

We present a novel longitudinal multimodal corpus of physiological and

behavioral data collected from direct clinical providers in a hospital

workplace. We designed the study to investigate the use of off-the-shelf

wearable and environmental sensors to understand individual-specific constructs

such as job performance, interpersonal interaction, and well-being of hospital

workers over time in their natural day-to-day job settings. We collected

behavioral and physiological data from n=212 participants through

Internet-of-Things Bluetooth data hubs, wearable sensors (including a

wristband, a biometrics-tracking garment, a smartphone, and an audio-feature

recorder), together with a battery of surveys to assess personality traits,

behavioral states, job performance, and well-being over time. Besides the

default use of the data set, we envision several novel research opportunities

and potential applications, including multi-modal and multi-task behavioral

modeling, authentication through biometrics, and privacy-aware and

privacy-preserving machine learning.

This paper reviews pioneering works in microphone array processing and

multichannel speech enhancement, highlighting historical achievements,

technological evolution, commercialization aspects, and key challenges. It

provides valuable insights into the progression and future direction of these

areas. The paper examines foundational developments in microphone array design

and optimization, showcasing innovations that improved sound acquisition and

enhanced speech intelligibility in noisy and reverberant environments. It then

introduces recent advancements and cutting-edge research in the field,

particularly the integration of deep learning techniques such as all-neural

beamformers. The paper also explores critical applications, discussing their

evolution and current state-of-the-art technologies that significantly impact

user experience. Finally, the paper outlines future research directions,

identifying challenges and potential solutions that could drive further

innovation in these fields. By providing a comprehensive overview and

forward-looking perspective, this paper aims to inspire ongoing research and

contribute to the sustained growth and development of microphone arrays and

multichannel speech enhancement.

Consecutive frames in a video contain redundancy, but they may also contain

relevant complementary information for the detection task. The objective of our

work is to leverage this complementary information to improve detection.

Therefore, we propose a spatio-temporal fusion framework (STF). We first

introduce multi-frame and single-frame attention modules that allow a neural

network to share feature maps between nearby frames to obtain more robust

object representations. Second, we introduce a dual-frame fusion module that

merges feature maps in a learnable manner to improve them. Our evaluation is

conducted on three different benchmarks including video sequences of moving

road users. The performed experiments demonstrate that the proposed

spatio-temporal fusion module leads to improved detection performance compared

to baseline object detectors. Code is available at

this https URL

15 Oct 2015

Reverberation, especially in large rooms, severely degrades speech recognition performance and speech intelligibility. Since direct measurement of room characteristics is usually not possible, blind estimation of reverberation-related metrics such as the reverberation time (RT) and the direct-to-reverberant energy ratio (DRR) can be valuable information to speech recognition and enhancement algorithms operating in enclosed environments. The objective of this work is to evaluate the performance of five variants of blind RT and DRR estimators based on a modulation spectrum representation of reverberant speech with single- and multi-channel speech data. These models are all based on variants of the so-called Speech-to-Reverberation Modulation Energy Ratio (SRMR). We show that these measures outperform a state-of-the-art baseline based on maximum-likelihood estimation of sound decay rates in terms of root-mean square error (RMSE), as well as Pearson correlation. Compared to the baseline, the best proposed measure, called NSRMR_k , achieves a 23% relative improvement in terms of RMSE and allows for relative correlation improvements ranging from 13% to 47% for RT prediction.

The rapid advancements in large language models and generative artificial intelligence (AI) capabilities are making their broad application in the high-stakes testing context more likely. Use of generative AI in the scoring of constructed responses is particularly appealing because it reduces the effort required for handcrafting features in traditional AI scoring and might even outperform those methods. The purpose of this paper is to highlight the differences in the feature-based and generative AI applications in constructed response scoring systems and propose a set of best practices for the collection of validity evidence to support the use and interpretation of constructed response scores from scoring systems using generative AI. We compare the validity evidence needed in scoring systems using human ratings, feature-based natural language processing AI scoring engines, and generative AI. The evidence needed in the generative AI context is more extensive than in the feature-based NLP scoring context because of the lack of transparency and other concerns unique to generative AI such as consistency. Constructed response score data from standardized tests demonstrate the collection of validity evidence for different types of scoring systems and highlights the numerous complexities and considerations when making a validity argument for these scores. In addition, we discuss how the evaluation of AI scores might include a consideration of how a contributory scoring approach combining multiple AI scores (from different sources) will cover more of the construct in the absence of human ratings.

Research found that pre-trained LLM embeddings for student responses inherently group correct answers tightly while scattering incorrect answers, which often appear more similar to correct responses than to other errors of the same type. This phenomenon, termed the "Anna Karenina principle," results in unsupervised clustering methods failing to reliably identify distinct pedagogical error profiles and showing a bias towards high-performing students.

23 Mar 2022

The use of personal data for training machine learning systems comes with a

privacy threat and measuring the level of privacy of a model is one of the

major challenges in machine learning today. Identifying training data based on

a trained model is a standard way of measuring the privacy risks induced by the

model. We develop a novel approach to address the problem of membership

inference in pattern recognition models, relying on information provided by

adversarial examples. The strategy we propose consists of measuring the

magnitude of a perturbation necessary to build an adversarial example. Indeed,

we argue that this quantity reflects the likelihood of belonging to the

training data. Extensive numerical experiments on multivariate data and an

array of state-of-the-art target models show that our method performs

comparable or even outperforms state-of-the-art strategies, but without

requiring any additional training samples.

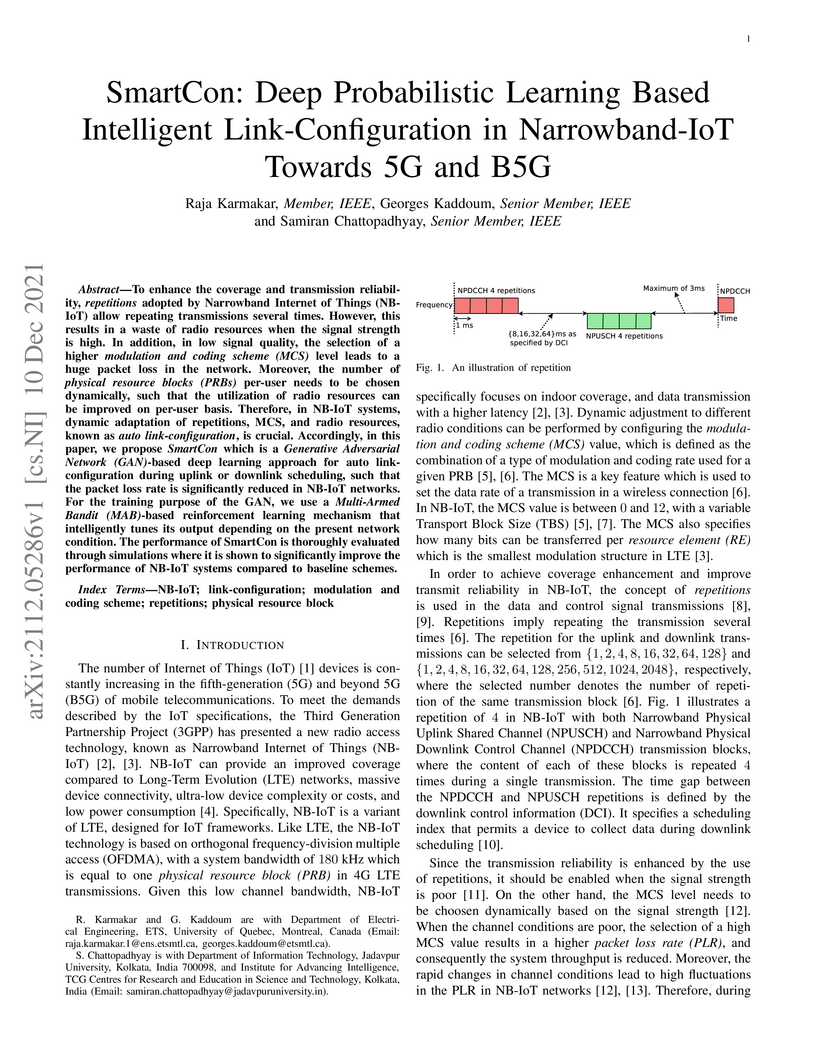

To enhance the coverage and transmission reliability, repetitions adopted by Narrowband Internet of Things (NB-IoT) allow repeating transmissions several times. However, this results in a waste of radio resources when the signal strength is high. In addition, in low signal quality, the selection of a higher modulation and coding scheme (MCS) level leads to a huge packet loss in the network. Moreover, the number of physical resource blocks (PRBs) per-user needs to be chosen dynamically, such that the utilization of radio resources can be improved on per-user basis. Therefore, in NB-IoT systems, dynamic adaptation of repetitions, MCS, and radio resources, known as auto link-configuration, is crucial. Accordingly, in this paper, we propose SmartCon which is a Generative Adversarial Network (GAN)-based deep learning approach for auto link-configuration during uplink or downlink scheduling, such that the packet loss rate is significantly reduced in NB-IoT networks. For the training purpose of the GAN, we use a Multi-Armed Bandit (MAB)-based reinforcement learning mechanism that intelligently tunes its output depending on the present network condition. The performance of SmartCon is thoroughly evaluated through simulations where it is shown to significantly improve the performance of NB-IoT systems compared to baseline schemes.

Implementing effective control mechanisms to ensure the proper functioning

and security of deployed NLP models, from translation to chatbots, is

essential. A key ingredient to ensure safe system behaviour is

Out-Of-Distribution (OOD) detection, which aims to detect whether an input

sample is statistically far from the training distribution. Although OOD

detection is a widely covered topic in classification tasks, most methods rely

on hidden features output by the encoder. In this work, we focus on leveraging

soft-probabilities in a black-box framework, i.e. we can access the

soft-predictions but not the internal states of the model. Our contributions

include: (i) RAINPROOF a Relative informAItioN Projection OOD detection

framework; and (ii) a more operational evaluation setting for OOD detection.

Surprisingly, we find that OOD detection is not necessarily aligned with

task-specific measures. The OOD detector may filter out samples well processed

by the model and keep samples that are not, leading to weaker performance. Our

results show that RAINPROOF provides OOD detection methods more aligned with

task-specific performance metrics than traditional OOD detectors.

In Federated Learning (FL), training is conducted on client devices,

typically with limited computational resources and storage capacity. To address

these constraints, we propose an automatic pruning scheme tailored for FL

systems. Our solution improves computation efficiency on client devices, while

minimizing communication costs. One of the challenges of tuning pruning

hyper-parameters in FL systems is the restricted access to local data. Thus, we

introduce an automatic pruning paradigm that dynamically determines pruning

boundaries. Additionally, we utilized a structured pruning algorithm optimized

for mobile devices that lack hardware support for sparse computations.

Experimental results demonstrate the effectiveness of our approach, achieving

accuracy comparable to existing methods. Our method notably reduces the number

of parameters by 89% and FLOPS by 90%, with minimal impact on the accuracy of

the FEMNIST and CelebFaces datasets. Furthermore, our pruning method decreases

communication overhead by up to 5x and halves inference time when deployed on

Android devices.

In this paper, an adjustment to the original differentially private

stochastic gradient descent (DPSGD) algorithm for deep learning models is

proposed. As a matter of motivation, to date, almost no state-of-the-art

machine learning algorithm hires the existing privacy protecting components due

to otherwise serious compromise in their utility despite the vital necessity.

The idea in this study is natural and interpretable, contributing to improve

the utility with respect to the state-of-the-art. Another property of the

proposed technique is its simplicity which makes it again more natural and also

more appropriate for real world and specially commercial applications. The

intuition is to trim and balance out wild individual discrepancies for privacy

reasons, and at the same time, to preserve relative individual differences for

seeking performance. The idea proposed here can also be applied to the

recurrent neural networks (RNN) to solve the gradient exploding problem. The

algorithm is applied to benchmark datasets MNIST and CIFAR-10 for a

classification task and the utility measure is calculated. The results

outperformed the original work.

Recent advances in electronic devices and communication infrastructure have revolutionized the traditional healthcare system into a smart healthcare system by using IoMT devices. However, due to the centralized training approach of artificial intelligence (AI), the use of mobile and wearable IoMT devices raises privacy concerns with respect to the information that has been communicated between hospitals and end users. The information conveyed by the IoMT devices is highly confidential and can be exposed to adversaries. In this regard, federated learning (FL), a distributive AI paradigm has opened up new opportunities for privacy-preservation in IoMT without accessing the confidential data of the participants. Further, FL provides privacy to end users as only gradients are shared during training. For these specific properties of FL, in this paper we present privacy related issues in IoMT. Afterwards, we present the role of FL in IoMT networks for privacy preservation and introduce some advanced FL architectures incorporating deep reinforcement learning (DRL), digital twin, and generative adversarial networks (GANs) for detecting privacy threats. Subsequently, we present some practical opportunities of FL in smart healthcare systems. At the end, we conclude this survey by providing open research challenges for FL that can be used in future smart healthcare systems

This research from Ullah and Mohanta addresses the problem of hallucination in video captioning by enhancing visual feature representation and improving multi-modal fusion. The system leverages auxiliary heads to refine visual semantics and uses dynamic context gates for balanced feature integration, alongside introducing the COAHA metric to specifically measure hallucination.

02 Apr 2014

A global agreement on how to reduce and cap human footprint, especially their

GHG emissions, is very unlikely in near future. At the same time, bilateral

agreements would be inefficient because of their neural and balanced nature.

Therefore, unilateral actions would have attracted attention as a practical

option. However, any unilateral action would most likely fail if it is not fair

and also if it is not consistent with the world trade organization's (WTO's)

rules, considering highly heterogeneity of the global economy. The modified GHG

intensity (MGHGINT) indicator, hereafter called Inequality-adjusted

Production-based GHGINT (IPGHGINT), was put forward to address this need in the

form of a universal indicator applicable to every region regardless of its

economic and social status. Nonetheless, the original MGHGINT indicator ignores

hidden consumption-related emissions, and therefore it could be unfair to some

production-oriented regions in the current bipolar production/consumption

world. Here, we propose two generalizations, called Inequality-adjusted

Consumption-based GHGINT (ICGHGINT) and Inequality-adjusted

Production/Consumption-Insensitive GHGINT (IIGHGINT), to the IPGHGINT in order

to combine both production and consumption emissions in a unified and balanced

manner. The impact of this generalizations on the associated border carbon tax

rates is evaluated in order to validate their practicality.

06 Jun 2025

Atomic force microscopy (AFM) enables high-resolution imaging and quantitative force measurement, which is critical for understanding nanoscale mechanical, chemical, and biological interactions. In dynamic AFM modes, however, interaction forces are not directly measured; they must be mathematically reconstructed from observables such as amplitude, phase, or frequency shift. Many reconstruction techniques have been proposed over the last two decades, but they rely on different assumptions and have been applied inconsistently, limiting reproducibility and cross-study comparison. Here, we systematically evaluate major force reconstruction methods in both frequency- and amplitude-modulation AFM, detailing their theoretical foundations, performance regimes, and sources of error. To support benchmarking and reproducibility, we introduce an open-source software package that unifies all widely used methods, enabling side-by-side comparisons across different formulations. This work represents a critical step toward achieving consistent and interpretable AFM force spectroscopy, thereby supporting the more reliable application of AFM in fields ranging from materials science to biophysics.

The 'Small Contributions, Small Networks' approach to neural network pruning effectively identifies redundant weights by utilizing statistical properties of neuron activations and the concept of an 'activation blind range'. This method achieved up to a 75% reduction in model size on MNIST while boosting the original unpruned model's accuracy by up to 1.13 percentage points.

Precise 3D segmentation of infant brain tissues is an essential step towards comprehensive volumetric studies and quantitative analysis of early brain developement. However, computing such segmentations is very challenging, especially for 6-month infant brain, due to the poor image quality, among other difficulties inherent to infant brain MRI, e.g., the isointense contrast between white and gray matter and the severe partial volume effect due to small brain sizes. This study investigates the problem with an ensemble of semi-dense fully convolutional neural networks (CNNs), which employs T1-weighted and T2-weighted MR images as input. We demonstrate that the ensemble agreement is highly correlated with the segmentation errors. Therefore, our method provides measures that can guide local user corrections. To the best of our knowledge, this work is the first ensemble of 3D CNNs for suggesting annotations within images. Furthermore, inspired by the very recent success of dense networks, we propose a novel architecture, SemiDenseNet, which connects all convolutional layers directly to the end of the network. Our architecture allows the efficient propagation of gradients during training, while limiting the number of parameters, requiring one order of magnitude less parameters than popular medical image segmentation networks such as 3D U-Net. Another contribution of our work is the study of the impact that early or late fusions of multiple image modalities might have on the performances of deep architectures. We report evaluations of our method on the public data of the MICCAI iSEG-2017 Challenge on 6-month infant brain MRI segmentation, and show very competitive results among 21 teams, ranking first or second in most metrics.

30 Sep 2017

In this work, we analyze the performance of full-duplex relay selection (FDRS) in spectrum-sharing networks. Contrary to half-duplex relaying, full-duplex relaying (FDR) enables simultaneous listening/forwarding at the secondary relay(s), thereby allowing for a higher spectral efficiency. However, since the source and relay simultaneously transmit in FDR, their superimposed signal at the primary receiver should now satisfy the existing interference constraint, which can considerably limit the secondary network throughput. In this regard, relay selection can offer an adequate solution to boost the secondary throughput while satisfying the imposed interference limit. We first analyze the performance of opportunistic FDRS with residual self-interference (RSI) by deriving the exact cumulative distribution function of its end-to-end signal-to-interference-plus-noise ratio under Nakagami-m fading. We also evaluate the offered diversity gain of relay selection for different full-duplex cooperation schemes in the presence/absence of a direct source-destination link. When the adopted RSI link gain model is sublinear in the relay power, which agrees with recent research findings, we show that remarkable diversity gain can be recovered even in the presence of an interfering direct link. Second, we evaluate the end-to-end performance of FDRS with interference constraints due to the presence of a primary receiver. Finally, the presented exact theoretical findings are verified by numerical simulations.

There are no more papers matching your filters at the moment.