ISM-CNR, National Research Council

06 Oct 2025

From the onset of fundamental statistical mechanical constructs formulated in the late 19th century, alchemical free-energy methods slowly emerged and became operational tools of biomolecular simulation applicable to a wide range of problems, including protein-ligand binding for drug discovery research. This article reconstructs how statistical mechanical approaches such as thermodynamic integration and free-energy perturbation were reconfigured in the early 1980s to address the complexities of increasingly heterogeneous biomolecular systems. Drawing on oral history interviews and primary literature, the study examines the technical, institutional, theoretical, and infrastructural conditions under which these methods were implemented and became progressively operational. These conditions encompassed the consolidation of lab-specific software infrastructures, the formulation of practical simulation protocols, as well as essential statistical mechanical clarifications. From this perspective, the progress of free-energy methods proceeded less from a unified convergence than from an iterative troubleshooting process of alignment involving practical and theoretical considerations. The aim of the present article is to offer a historically grounded account of how free-energy techniques acquired practical and functional reliability.

A central challenge in cognitive neuroscience is to explain how semantic and episodic memory, two major forms of declarative memory, typically associated with cortical and hippocampal processing, interact to support learning, recall, and imagination. Despite significant advances, we still lack a unified computational framework that jointly accounts for core empirical phenomena across both semantic and episodic processing domains. Here, we introduce the Generative Episodic-Semantic Integration System (GENESIS), a computational model that formalizes memory as the interaction between two limited-capacity generative systems: a Cortical-VAE, supporting semantic learning and generalization, and a Hippocampal-VAE, supporting episodic encoding and retrieval within a retrieval-augmented generation (RAG) architecture. GENESIS reproduces hallmark behavioral findings, including generalization in semantic memory, recognition, serial recall effects and gist-based distortions in episodic memory, and constructive episodic simulation, while capturing their dynamic interactions. The model elucidates how capacity constraints shape the fidelity and memorability of experiences, how semantic processing introduces systematic distortions in episodic recall, and how episodic replay can recombine previous experiences. Together, these results provide a principled account of memory as an active, constructive, and resource-bounded process. GENESIS thus advances a unified theoretical framework that bridges semantic and episodic memory, offering new insights into the generative foundations of human cognition.

This study from the National Research Council of Canada and McGill University comprehensively evaluates text classification methods in the LLM era, focusing on data scarcity and multilingual performance. It demonstrates that zero-shot LLMs excel in sentiment analysis, few-shot fine-tuning improves performance for complex tasks, and synthetic data can serve as an effective alternative to labeled data across eight languages.

06 Oct 2025

This paper develops a risk-adjusted alternative to standard optimal policy learning (OPL) for observational data by importing Roy's (1952) safety-first principle into the treatment assignment problem. We formalize a welfare functional that maximizes the probability that outcomes exceed a socially required threshold and show that the associated pointwise optimal rule ranks treatments by the ratio of conditional means to conditional standard deviations. We implement the framework using microdata from the Italian Farm Accountancy Data Network to evaluate the allocation of subsidies under the EU Common Agricultural Policy. Empirically, risk-adjusted optimal policies systematically dominate the realized allocation across specifications, while risk aversion lowers overall welfare relative to the risk-neutral benchmark, making transparent the social cost of insurance against uncertainty. The results illustrate how safety-first OPL provides an implementable, interpretable tool for risk-sensitive policy design, quantifying the efficiency-insurance trade-off that policymakers face when outcomes are volatile.
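The ranking rule described above can be illustrated with a minimal sketch. It assumes outcomes are approximately normal given the covariates, so that the probability of exceeding a threshold c is Φ((μ − c)/σ); with c = 0 this reduces to ranking by the mean-to-standard-deviation ratio mentioned in the abstract. The function name, the treatment labels, and the numbers are hypothetical and not taken from the paper.

```python
from statistics import NormalDist


def safety_first_choice(means, stds, threshold=0.0):
    """Return the treatment maximizing P(Y > threshold) and that probability.

    Assumption (not from the paper): outcomes are normal with the given
    conditional means and standard deviations.
    """
    best, best_score = None, float("-inf")
    for treatment in means:
        # Standardized distance of the mean above the threshold.
        score = (means[treatment] - threshold) / stds[treatment]
        if score > best_score:
            best, best_score = treatment, score
    # Under normality, P(Y > threshold) = Phi((mu - threshold) / sigma).
    return best, NormalDist().cdf(best_score)


# Hypothetical conditional moments for one unit.
means = {"subsidy": 10.0, "no_subsidy": 8.0}
stds = {"subsidy": 10.0, "no_subsidy": 2.0}

risk_neutral = max(means, key=means.get)            # ranks by mean only
risk_adjusted, prob = safety_first_choice(means, stds)
```

In this toy case the risk-neutral rule selects the higher-mean option while the safety-first rule selects the lower-variance one, which is the efficiency-insurance trade-off the abstract refers to.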

15 Jul 2024

The Industrial Internet of Things (IIoT) is a transformative paradigm that integrates smart sensors, advanced analytics, and robust connectivity within industrial processes, enabling real-time data-driven decision-making and enhancing operational efficiency across diverse sectors, including manufacturing, energy, and logistics. IIoT is susceptible to various attack vectors, with Advanced Persistent Threats (APTs) posing a particularly grave concern due to their stealthy, prolonged, and targeted nature. The effectiveness of machine learning-based intrusion detection systems in APT detection has been documented in the literature. However, existing cybersecurity datasets often lack crucial attributes for APT detection in IIoT environments. Incorporating insights from prior research on APT detection using provenance data and intrusion detection within IoT systems, we present the CICAPT-IIoT dataset. The main goal of this paper is to propose a novel APT dataset in the IIoT setting that includes essential information for the APT detection task. To achieve this, a testbed for IIoT is developed, and over 20 attack techniques frequently used in APT campaigns are included. The performed attacks create some of the invariant phases of the APT cycle, including Data Collection and Exfiltration, Discovery and Lateral Movement, Defense Evasion, and Persistence. By integrating network logs and provenance logs with detailed attack information, the CICAPT-IIoT dataset presents a foundation for developing holistic cybersecurity measures. Additionally, a comprehensive dataset analysis is provided, presenting cybersecurity experts with a strong basis on which to build innovative and efficient security solutions.

Automatic Readability Assessment (ARA), the task of assigning a reading level to a text, is traditionally treated as a classification problem in NLP research. In this paper, we propose the first neural, pairwise ranking approach to ARA and compare it with existing classification, regression, and (non-neural) ranking methods. We establish the performance of our model by conducting experiments with three English, one French and one Spanish datasets. We demonstrate that our approach performs well in monolingual single/cross corpus testing scenarios and achieves a zero-shot cross-lingual ranking accuracy of over 80% for both French and Spanish when trained on English data. Additionally, we also release a new parallel bilingual readability dataset in English and French. To our knowledge, this paper proposes the first neural pairwise ranking model for ARA, and shows the first results of cross-lingual, zero-shot evaluation of ARA with neural models.
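To make the evaluation idea concrete, here is a minimal sketch of one common way to compute a pairwise ranking accuracy. It assumes the metric is the fraction of text pairs with different gold reading levels whose predicted scores are ordered the same way; the abstract does not define the metric, so this definition, the function name, and the sample data are assumptions for illustration only.

```python
from itertools import combinations


def pairwise_ranking_accuracy(scores, levels):
    """Fraction of pairs with different gold levels that the scores order correctly."""
    correct = total = 0
    for i, j in combinations(range(len(scores)), 2):
        if levels[i] == levels[j]:
            continue  # pairs at the same level carry no ordering information
        total += 1
        # Same sign means the predicted order agrees with the gold order.
        if (scores[i] - scores[j]) * (levels[i] - levels[j]) > 0:
            correct += 1
    return correct / total if total else 0.0


# Hypothetical model scores and gold reading levels for four texts.
accuracy = pairwise_ranking_accuracy([0.1, 0.4, 0.35, 0.9], [1, 2, 3, 4])
```

Only one of the six pairs (the second and third texts) is misordered here, so the sketch yields 5/6.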

16 Jan 2025

CNRS, Sun Yat-Sen University, University of Southern California, Ghent University, Tsinghua University, Stanford University, Université Paris-Saclay, CEA, Princeton University, National Institute of Standards and Technology, The University of British Columbia, North Carolina State University, University of Trento, IMEC, École Polytechnique Fédérale de Lausanne, National Research Council, NTT Corporation, Leibniz-Institute of Photonic Technology, Leti, Hewlett Packard Labs, Hewlett Packard Enterprise, Sapienza University, Institut FEMTO-ST, Institut National de la Recherche Scientifique-Énergie Matériaux Télécommunications (IN), Université Franche Comte CNRS, Université Grenoble Alpes, “Enrico Fermi” Research Center, Queens’ University

This roadmap consolidates recent advances while exploring emerging applications, reflecting the remarkable diversity of hardware platforms, neuromorphic concepts, and implementation philosophies reported in the field. It emphasizes the critical role of cross-disciplinary collaboration in this rapidly evolving field.

05 Sep 2024

The modern technological landscape has trended towards increased precision and greater digitization of information. However, the methods used to record and communicate scientific procedures have remained largely unchanged over the last century. Written text as the primary means for communicating scientific protocols poses notable limitations in human and machine information transfer. In this work, we present the Universal Workflow Language (UWL) and the open-source Universal Workflow Language interface (UWLi). UWL is a graph-based data architecture that can capture arbitrary scientific procedures through workflow representation of protocol steps and embedded procedure metadata. It is machine readable, discipline agnostic, and compatible with FAIR reporting standards. UWLi is an accompanying software package for building and manipulating UWL files into tabular and plain text representations in a controlled, detailed, and multilingual format. UWL transcription of protocols from three high-impact publications revealed substantial deficiencies in the detail of the reported procedures: seventeen procedural ambiguities and thirty missing parameters for every one hundred words in published procedures. In addition to preventing and identifying procedural omission, UWL files were found to be compatible with geometric learning techniques for representing scientific protocols. In a surrogate function designed to represent an arbitrary multi-step experimental process, graph transformer networks were able to predict outcomes in approximately 6,000 fewer experiments than equivalent linear models. Implementation of UWL and UWLi into the scientific reporting process will result in higher reproducibility between both experimentalists and machines, thus providing an avenue to more effective modeling and control of complex systems.

03 Dec 2024

The mdCATH dataset provides extensive all-atom molecular dynamics simulations for over 5,000 protein domains from the CATH classification system, capturing protein dynamics across five temperatures and five independent replicas. This unique resource includes instantaneous atomic forces alongside coordinates, enabling the training of next-generation machine learning potentials for computational biophysics and facilitating proteome-wide statistical analyses of protein unfolding.

24 Apr 2024

Children with autism spectrum disorder (ASD) experience challenges in grasping social-emotional cues, which can result in difficulties in recognizing emotions and understanding and responding to social interactions. Social-emotional intervention is an effective method to improve emotional understanding and facial expression recognition among individuals with ASD. Existing work emphasizes the importance of personalizing interventions to meet individual needs and motivate engagement for optimal outcomes in daily settings. We design a social-emotional game for ASD children, which generates personalized stories by leveraging the current advancement of artificial intelligence. Via a co-design process with five domain experts, this work offers several design insights into developing future AI-enabled gamified systems for families with autistic children. We also propose a fine-tuned AI model and a dataset of social stories for different basic emotions.

The aging population is growing rapidly, and so is the danger of falls in older adults. Falls are a major cause of injury, and timely detection can substantially reduce medical expenses and recovery time. However, to provide timely intervention and avoid unnecessary alarms, detection systems must be effective and reliable while addressing privacy concerns regarding the user. In this work, we propose a framework for detecting falls using several complementary systems: a semi-supervised federated learning-based fall detection system (SF2D), an indoor localization and navigation system, and a vision-based human fall recognition system. In the first system, a wearable device and an edge device identify a fall scenario. The second system then uses an indoor localization technique to locate the fall and navigates a robot to inspect the scene. A vision-based detection system, running on an edge device with a camera mounted on the robot, is used to recognize fallen people. Each system in the proposed framework achieves a different accuracy rate. Specifically, the SF2D has a 0.81% failure rate, equivalent to 99.19% accuracy, while the vision-based fallen people detection achieves 96.3% accuracy. However, when we combine the accuracy of these two systems with the accuracy of the navigation system (95% success rate), our proposed framework achieves highly reliable fall detection, with an overall accuracy of 99.99%. Not only is the proposed framework safe for older adults, but it is also a privacy-preserving solution for detecting falls.

The recent advancements in artificial intelligence (AI), combined with the extensive amount of data generated by today's clinical systems, have led to the development of imaging AI solutions across the whole value chain of medical imaging, including image reconstruction, medical image segmentation, image-based diagnosis and treatment planning. Notwithstanding the successes and future potential of AI in medical imaging, many stakeholders are concerned about the potential risks and ethical implications of imaging AI solutions, which are perceived as complex, opaque, and difficult to comprehend, utilise, and trust in critical clinical applications. Addressing these concerns and risks, the FUTURE-AI framework has been proposed, which, sourced from a global multi-domain expert consensus, comprises guiding principles for increased trust, safety, and adoption for AI in healthcare. In this paper, we transform the general FUTURE-AI healthcare principles to a concise and specific AI implementation guide tailored to the needs of the medical imaging community. To this end, we carefully assess each building block of the FUTURE-AI framework consisting of (i) Fairness, (ii) Universality, (iii) Traceability, (iv) Usability, (v) Robustness and (vi) Explainability, and respectively define concrete best practices based on accumulated AI implementation experiences from five large European projects on AI in Health Imaging. We accompany our concrete step-by-step medical imaging development guide with a practical AI solution maturity checklist, thus enabling AI development teams to design, evaluate, maintain, and deploy technically, clinically and ethically trustworthy imaging AI solutions into clinical practice.

A generalized understanding of protein dynamics is an unsolved scientific problem, the solution of which is critical to the interpretation of the structure-function relationships that govern essential biological processes. Here, we approach this problem by constructing coarse-grained molecular potentials based on artificial neural networks and grounded in statistical mechanics. For training, we build a unique dataset of unbiased all-atom molecular dynamics simulations of approximately 9 ms for twelve different proteins with multiple secondary structure arrangements. The coarse-grained models are capable of accelerating the dynamics by more than three orders of magnitude while preserving the thermodynamics of the systems. Coarse-grained simulations identify relevant structural states in the ensemble with comparable energetics to the all-atom systems. Furthermore, we show that a single coarse-grained potential can integrate all twelve proteins and can capture experimental structural features of mutated proteins. These results indicate that machine learning coarse-grained potentials could provide a feasible approach to simulate and understand protein dynamics.

03 Aug 2025

Quantum metrology aims to maximize measurement precision on quantum systems, with a wide range of applications in quantum sensing. Achieving the Heisenberg limit (HL) - the fundamental precision bound set by quantum mechanics - is often hindered by noise-induced decoherence, which typically reduces achievable precision to the standard quantum limit (SQL). While quantum error correction (QEC) can recover the HL under Markovian noise, its applicability to non-Markovian noise remains less explored. In this work, we analyze a hidden Markov model in which a quantum probe, coupled to an inaccessible environment, undergoes joint evolution described by Lindbladian dynamics, with the inaccessible degrees of freedom serving as a memory. We derive generalized Knill-Laflamme conditions for the hidden Markov model and establish three types of sufficient conditions for achieving the HL under non-Markovian noise using QEC. Additionally, we demonstrate the attainability of the SQL when these sufficient conditions are violated, by analytical solutions for special cases and numerical methods for general scenarios. Our results not only extend prior QEC frameworks for metrology but also provide new insights into precision limits under realistic noise conditions.

Readability assessment is the task of evaluating the reading difficulty of a given piece of text. Although research on computational approaches to readability assessment is now two decades old, there is not much work on synthesizing this research. This article is a brief survey of contemporary research on developing computational models for readability assessment. We identify the common approaches, discuss their shortcomings, and identify some challenges for the future. Where possible, we also connect computational research with insights from related work in other disciplines such as education and psychology.

Molecular dynamics simulations provide a mechanistic description of molecules by relying on empirical potentials. The quality and transferability of such potentials can be improved by leveraging data-driven models derived with machine learning approaches. Here, we present TorchMD, a framework for molecular simulations with mixed classical and machine learning potentials. All force computations, including bond, angle, dihedral, Lennard-Jones and Coulomb interactions, are expressed as PyTorch arrays and operations. Moreover, TorchMD enables learning and simulating neural network potentials. We validate it using standard Amber all-atom simulations, learning an ab-initio potential, performing an end-to-end training and finally learning and simulating a coarse-grained model for protein folding. We believe that TorchMD provides a useful tool-set to support molecular simulations of machine learning potentials. Code and data are freely available at \url{this http URL}.

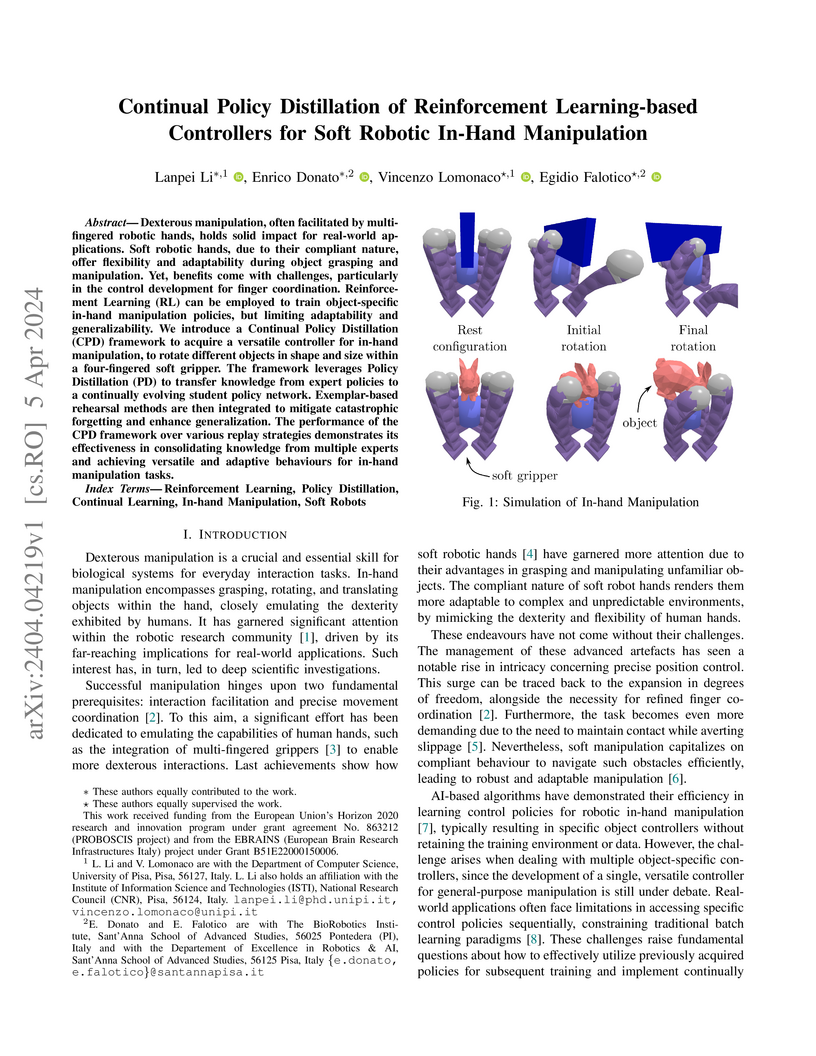

This research from the University of Pisa and Sant’Anna School of Advanced Studies introduces a Continual Policy Distillation framework that enables a single soft robotic controller to learn dexterous in-hand manipulation of various objects sequentially. The framework effectively consolidates knowledge from multiple object-specific expert policies, mitigating catastrophic forgetting and achieving high manipulation performance on diverse objects, with replay-based strategies like Reward Prioritized Experience Replay showing performance comparable to training on all data simultaneously.

A deep learning approach for outdoor environment reconstruction, developed at Yerevan State University and CNR Pisa, exclusively leverages ambient radio frequency signals, demonstrating a viable alternative to vision-based methods. The transformer-based model achieved an IoU of 42.2% and a Chamfer distance of 18.3m on a synthetic dataset, showing improved resilience and scalability for mapping solutions.

The Projected Gradient Descent (PGD) algorithm is a widely used and efficient first-order method for solving constrained optimization problems due to its simplicity and scalability in large design spaces. Building on recent advancements in the PGD algorithm, in which an inertial step component was introduced to improve efficiency in solving constrained optimization problems, this study introduces two key enhancements to further improve the algorithm's performance and adaptability in large-scale design spaces. First, univariate constraints (such as design variable bound constraints) are directly incorporated into the projection step via the Schur complement and an improved active set algorithm with bulk constraint manipulation, avoiding issues with min-max clipping. Second, the update step is decomposed relative to the constraint vector space, enabling a post-projection adjustment based on the state of the constraints and an approximation of the Lagrangian, significantly improving the algorithm's robustness for problems with nonlinear constraints. Applied to a topology optimization problem for heat sink design, the proposed PGD algorithm demonstrates performance comparable to or exceeding that of the Method of Moving Asymptotes (MMA), with minimal parameter tuning. These results position the enhanced PGD as a robust tool for complex optimization problems with large variable spaces, such as topology optimization problems.
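For readers unfamiliar with the baseline, the following is a minimal textbook sketch of projected gradient descent with bound constraints. It uses plain min-max clipping as the projection, which is exactly the simple approach the abstract says the enhanced method avoids; it does not implement the Schur complement projection, the active set algorithm, or the inertial step. The objective, step size, and all names are hypothetical choices for illustration.

```python
def project_box(x, lower, upper):
    """Euclidean projection onto the box [lower, upper]^n (min-max clipping)."""
    return [min(max(xi, lower), upper) for xi in x]


def pgd(grad, x0, lower, upper, step=0.1, iters=200):
    """Basic PGD: take a gradient step, then project back onto the feasible set."""
    x = project_box(x0, lower, upper)
    for _ in range(iters):
        g = grad(x)
        x = project_box([xi - step * gi for xi, gi in zip(x, g)], lower, upper)
    return x


# Toy problem: minimize sum((x_i - t_i)^2) subject to 0 <= x_i <= 1.
target = [1.5, 0.3, -0.7]


def grad(x):
    return [2.0 * (xi - ti) for xi, ti in zip(x, target)]


x_star = pgd(grad, [0.5, 0.5, 0.5], lower=0.0, upper=1.0)
```

For this separable quadratic the constrained minimizer is the target clipped to the box, so the iterates settle near [1.0, 0.3, 0.0]; components whose unconstrained optimum lies outside the bounds end up pinned at the nearest bound.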

This paper introduces an innovative drone carrier concept for maritime port security and offshore rescue. The carrier operates within a heterogeneous system consisting of multiple Unmanned Aerial Vehicles (UAVs) and Unmanned Surface Vehicles (USVs) to perform inspection and intervention tasks in GNSS-denied or interrupted environments. The carrier, an electric catamaran measuring 4 m by 7 m, features a 4 m by 6 m deck supporting automated takeoff and landing for four DJI M300 drones, along with a 10 kg-payload manipulator operable in up to level 3 sea conditions. Utilizing an offshore gimbal camera for navigation, the carrier can autonomously navigate, approach and dock with non-cooperative vessels, guided by an onboard camera, LiDAR, and Doppler Velocity Log (DVL) over a 3 km² area. UAVs equipped with onboard Ultra-Wideband (UWB) technology execute mapping, detection, and manipulation tasks using a versatile gripper designed for wet, saline conditions. Additionally, two UAVs can coordinate to transport large objects to the manipulator or interact directly with them. These procedures are fully automated and were successfully demonstrated at the Mohammed Bin Zayed International Robotic Competition (MBZIRC2024), where the drone carrier, equipped with four UAVs and one manipulator, automatically accomplished the intervention tasks in level 3 sea conditions (wave height 1.25 m) based on rough target information.
