Information Technology University

Compositional Zero-Shot Learning (CZSL) is a critical task in computer vision that enables models to recognize unseen combinations of known attributes and objects during inference, addressing the combinatorial challenge of requiring training data for every possible composition. This is particularly challenging because the visual appearance of primitives is highly contextual; for example, ``small'' cats appear visually distinct from ``older'' ones, and ``wet'' cars differ significantly from ``wet'' cats. Effectively modeling this contextuality and the inherent compositionality is crucial for robust compositional zero-shot recognition. This paper presents, to our knowledge, the first comprehensive survey specifically focused on Compositional Zero-Shot Learning. We systematically review the state-of-the-art CZSL methods, introducing a taxonomy grounded in disentanglement, with four families of approaches: no explicit disentanglement, textual disentanglement, visual disentanglement, and cross-modal disentanglement. We provide a detailed comparative analysis of these methods, highlighting their core advantages and limitations in different problem settings, such as closed-world and open-world CZSL. Finally, we identify the most significant open challenges and outline promising future research directions. This survey aims to serve as a foundational resource to guide and inspire further advancements in this fascinating and important field. Papers studied in this survey with their official code are available on our github: this https URL

The preservation of aquatic biodiversity is critical in mitigating the effects of climate change. Aquatic scene understanding plays a pivotal role in aiding marine scientists in their decision-making processes. In this paper, we introduce AquaticCLIP, a novel contrastive language-image pre-training model tailored for aquatic scene understanding. AquaticCLIP presents a new unsupervised learning framework that aligns images and texts in aquatic environments, enabling tasks such as segmentation, classification, detection, and object counting. By leveraging our large-scale underwater image-text paired dataset without the need for ground-truth annotations, our model enriches existing vision-language models in the aquatic domain. For this purpose, we construct a 2 million underwater image-text paired dataset using heterogeneous resources, including YouTube, Netflix, NatGeo, etc. To fine-tune AquaticCLIP, we propose a prompt-guided vision encoder that progressively aggregates patch features via learnable prompts, while a vision-guided mechanism enhances the language encoder by incorporating visual context. The model is optimized through a contrastive pretraining loss to align visual and textual modalities. AquaticCLIP achieves notable performance improvements in zero-shot settings across multiple underwater computer vision tasks, outperforming existing methods in both robustness and interpretability. Our model sets a new benchmark for vision-language applications in underwater environments. The code and dataset for AquaticCLIP are publicly available on GitHub at xxx.

The remarkable success of transformers in the field of natural language

processing has sparked the interest of the speech-processing community, leading

to an exploration of their potential for modeling long-range dependencies

within speech sequences. Recently, transformers have gained prominence across

various speech-related domains, including automatic speech recognition, speech

synthesis, speech translation, speech para-linguistics, speech enhancement,

spoken dialogue systems, and numerous multimodal applications. In this paper,

we present a comprehensive survey that aims to bridge research studies from

diverse subfields within speech technology. By consolidating findings from

across the speech technology landscape, we provide a valuable resource for

researchers interested in harnessing the power of transformers to advance the

field. We identify the challenges encountered by transformers in speech

processing while also offering insights into potential solutions to address

these issues.

21 May 2021

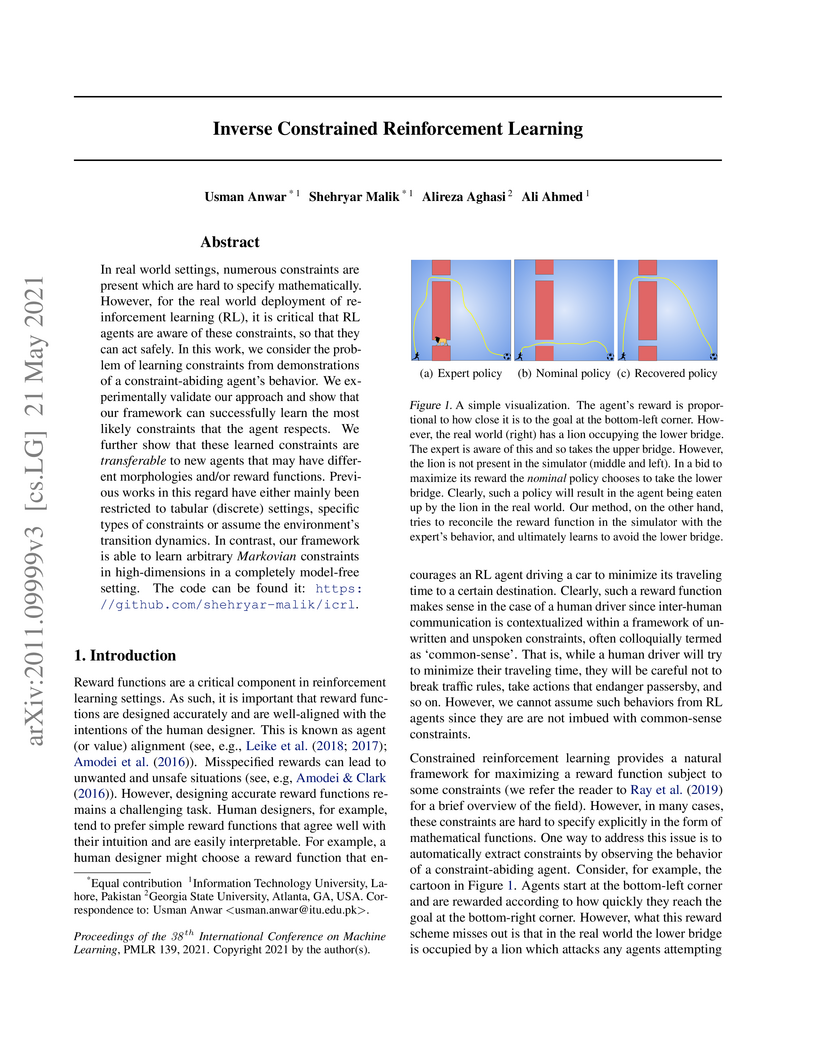

In real world settings, numerous constraints are present which are hard to specify mathematically. However, for the real world deployment of reinforcement learning (RL), it is critical that RL agents are aware of these constraints, so that they can act safely. In this work, we consider the problem of learning constraints from demonstrations of a constraint-abiding agent's behavior. We experimentally validate our approach and show that our framework can successfully learn the most likely constraints that the agent respects. We further show that these learned constraints are \textit{transferable} to new agents that may have different morphologies and/or reward functions. Previous works in this regard have either mainly been restricted to tabular (discrete) settings, specific types of constraints or assume the environment's transition dynamics. In contrast, our framework is able to learn arbitrary \textit{Markovian} constraints in high-dimensions in a completely model-free setting. The code can be found it: \url{this https URL}.

Indexing endoscopic surgical videos is vital in surgical data science,

forming the basis for systematic retrospective analysis and clinical

performance evaluation. Despite its significance, current video analytics rely

on manual indexing, a time-consuming process. Advances in computer vision,

particularly deep learning, offer automation potential, yet progress is limited

by the lack of publicly available, densely annotated surgical datasets. To

address this, we present TEMSET-24K, an open-source dataset comprising 24,306

trans-anal endoscopic microsurgery (TEMS) video micro-clips. Each clip is

meticulously annotated by clinical experts using a novel hierarchical labeling

taxonomy encompassing phase, task, and action triplets, capturing intricate

surgical workflows. To validate this dataset, we benchmarked deep learning

models, including transformer-based architectures. Our in silico evaluation

demonstrates high accuracy (up to 0.99) and F1 scores (up to 0.99) for key

phases like Setup and Suturing. The STALNet model, tested with ConvNeXt, ViT,

and SWIN V2 encoders, consistently segmented well-represented phases.

TEMSET-24K provides a critical benchmark, propelling state-of-the-art solutions

in surgical data science.

Data augmentation is widely used to enhance generalization in visual classification tasks. However, traditional methods struggle when source and target domains differ, as in domain adaptation, due to their inability to address domain gaps. This paper introduces GenMix, a generalizable prompt-guided generative data augmentation approach that enhances both in-domain and cross-domain image classification. Our technique leverages image editing to generate augmented images based on custom conditional prompts, designed specifically for each problem type. By blending portions of the input image with its edited generative counterpart and incorporating fractal patterns, our approach mitigates unrealistic images and label ambiguity, improving the performance and adversarial robustness of the resulting models. Efficacy of our method is established with extensive experiments on eight public datasets for general and fine-grained classification, in both in-domain and cross-domain settings. Additionally, we demonstrate performance improvements for self-supervised learning, learning with data scarcity, and adversarial robustness. As compared to the existing state-of-the-art methods, our technique achieves stronger performance across the board.

This paper presents a framework using Multi-Agent Large Language Models to enhance complex problem-solving in engineering senior design projects

14 May 2025

Large Language Models (LLMs) are predominantly trained and aligned in ways

that reinforce Western-centric epistemologies and socio-cultural norms, leading

to cultural homogenization and limiting their ability to reflect global

civilizational plurality. Existing benchmarking frameworks fail to adequately

capture this bias, as they rely on rigid, closed-form assessments that overlook

the complexity of cultural inclusivity. To address this, we introduce

WorldView-Bench, a benchmark designed to evaluate Global Cultural Inclusivity

(GCI) in LLMs by analyzing their ability to accommodate diverse worldviews. Our

approach is grounded in the Multiplex Worldview proposed by Senturk et al.,

which distinguishes between Uniplex models, reinforcing cultural

homogenization, and Multiplex models, which integrate diverse perspectives.

WorldView-Bench measures Cultural Polarization, the exclusion of alternative

perspectives, through free-form generative evaluation rather than conventional

categorical benchmarks. We implement applied multiplexity through two

intervention strategies: (1) Contextually-Implemented Multiplex LLMs, where

system prompts embed multiplexity principles, and (2) Multi-Agent System

(MAS)-Implemented Multiplex LLMs, where multiple LLM agents representing

distinct cultural perspectives collaboratively generate responses. Our results

demonstrate a significant increase in Perspectives Distribution Score (PDS)

entropy from 13% at baseline to 94% with MAS-Implemented Multiplex LLMs,

alongside a shift toward positive sentiment (67.7%) and enhanced cultural

balance. These findings highlight the potential of multiplex-aware AI

evaluation in mitigating cultural bias in LLMs, paving the way for more

inclusive and ethically aligned AI systems.

Contrastive Language-Image Pretraining (CLIP) has shown impressive zero-shot

performance on image classification. However, state-of-the-art methods often

rely on fine-tuning techniques like prompt learning and adapter-based tuning to

optimize CLIP's performance. The necessity for fine-tuning significantly limits

CLIP's adaptability to novel datasets and domains. This requirement mandates

substantial time and computational resources for each new dataset. To overcome

this limitation, we introduce simple yet effective training-free approaches,

Single-stage LMM Augmented CLIP (SLAC) and Two-stage LMM Augmented CLIP (TLAC),

that leverages powerful Large Multimodal Models (LMMs), such as Gemini, for

image classification. The proposed methods leverages the capabilities of

pre-trained LMMs, allowing for seamless adaptation to diverse datasets and

domains without the need for additional training. Our approaches involve

prompting the LMM to identify objects within an image. Subsequently, the CLIP

text encoder determines the image class by identifying the dataset class with

the highest semantic similarity to the LLM predicted object. Our models

achieved superior accuracy on 9 of 11 base-to-novel datasets, including

ImageNet, SUN397, and Caltech101, while maintaining a strictly training-free

paradigm. Our TLAC model achieved an overall accuracy of 83.44%, surpassing the

previous state-of-the-art few-shot methods by a margin of 6.75%. Compared to

other training-free approaches, our TLAC method achieved 83.6% average accuracy

across 13 datasets, a 9.7% improvement over the previous methods. Our Code is

available at this https URL

Aerial imagery analysis is critical for many research fields. However, obtaining frequent high-quality aerial images is not always accessible due to its high effort and cost requirements. One solution is to use the Ground-to-Aerial (G2A) technique to synthesize aerial images from easily collectible ground images. However, G2A is rarely studied, because of its challenges, including but not limited to, the drastic view changes, occlusion, and range of visibility. In this paper, we present a novel Geometric Preserving Ground-to-Aerial (G2A) image synthesis (GPG2A) model that can generate realistic aerial images from ground images. GPG2A consists of two stages. The first stage predicts the Bird's Eye View (BEV) segmentation (referred to as the BEV layout map) from the ground image. The second stage synthesizes the aerial image from the predicted BEV layout map and text descriptions of the ground image. To train our model, we present a new multi-modal cross-view dataset, namely VIGORv2 which is built upon VIGOR with newly collected aerial images, maps, and text descriptions. Our extensive experiments illustrate that GPG2A synthesizes better geometry-preserved aerial images than existing models. We also present two applications, data augmentation for cross-view geo-localization and sketch-based region search, to further verify the effectiveness of our GPG2A. The code and data will be publicly available.

We develop and evaluate two novel purpose-built deep learning (DL) models for synthesis of the arterial blood pressure (ABP) waveform in a cuff-less manner, using a single-site photoplethysmography (PPG) signal. We train and evaluate our DL models on the data of 209 subjects from the public UCI dataset on cuff-less blood pressure (CLBP) estimation. Our transformer model consists of an encoder-decoder pair that incorporates positional encoding, multi-head attention, layer normalization, and dropout techniques for ABP waveform synthesis. Secondly, under our frequency-domain (FD) learning approach, we first obtain the discrete cosine transform (DCT) coefficients of the PPG and ABP signals, and then learn a linear/non-linear (L/NL) regression between them. The transformer model (FD L/NL model) synthesizes the ABP waveform with a mean absolute error (MAE) of 3.01 (4.23). Further, the synthesis of ABP waveform also allows us to estimate the systolic blood pressure (SBP) and diastolic blood pressure (DBP) values. To this end, the transformer model reports an MAE of 3.77 mmHg and 2.69 mmHg, for SBP and DBP, respectively. On the other hand, the FD L/NL method reports an MAE of 4.37 mmHg and 3.91 mmHg, for SBP and DBP, respectively. Both methods fulfill the AAMI criterion. As for the BHS criterion, our transformer model (FD L/NL regression model) achieves grade A (grade B).

In recent years, numerous domain adaptive strategies have been proposed to help deep learning models overcome the challenges posed by domain shift. However, even unsupervised domain adaptive strategies still require a large amount of target data. Medical imaging datasets are often characterized by class imbalance and scarcity of labeled and unlabeled data. Few-shot domain adaptive object detection (FSDAOD) addresses the challenge of adapting object detectors to target domains with limited labeled data. Existing works struggle with randomly selected target domain images that may not accurately represent the real population, resulting in overfitting to small validation sets and poor generalization to larger test sets. Medical datasets exhibit high class imbalance and background similarity, leading to increased false positives and lower mean Average Precision (map) in target domains. To overcome these challenges, we propose a novel FSDAOD strategy for microscopic imaging. Our contributions include a domain adaptive class balancing strategy for few-shot scenarios, multi-layer instance-level inter and intra-domain alignment to enhance similarity between class instances regardless of domain, and an instance-level classification loss applied in the middle layers of the object detector to enforce feature retention necessary for correct classification across domains. Extensive experimental results with competitive baselines demonstrate the effectiveness of our approach, achieving state-of-the-art results on two public microscopic datasets. Code available at this https URL

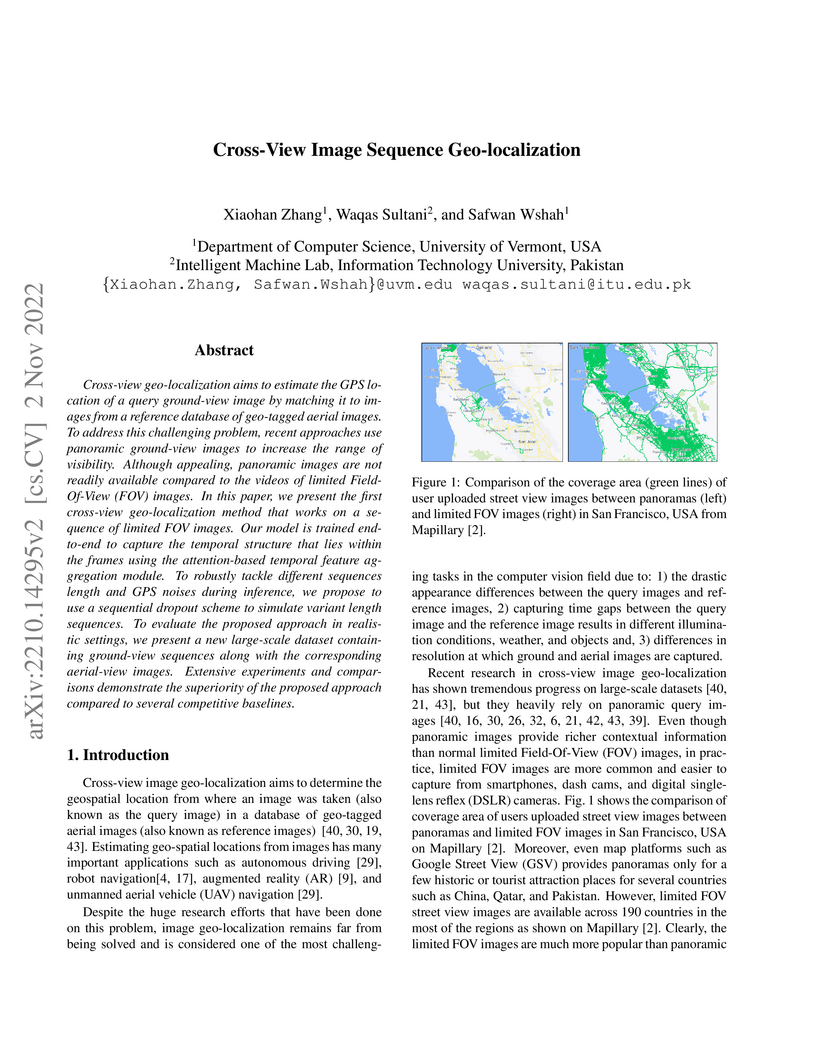

The concept of geo-localization refers to the process of determining where on earth some `entity' is located, typically using Global Positioning System (GPS) coordinates. The entity of interest may be an image, sequence of images, a video, satellite image, or even objects visible within the image. As massive datasets of GPS tagged media have rapidly become available due to smartphones and the internet, and deep learning has risen to enhance the performance capabilities of machine learning models, the fields of visual and object geo-localization have emerged due to its significant impact on a wide range of applications such as augmented reality, robotics, self-driving vehicles, road maintenance, and 3D reconstruction. This paper provides a comprehensive survey of geo-localization involving images, which involves either determining from where an image has been captured (Image geo-localization) or geo-locating objects within an image (Object geo-localization). We will provide an in-depth study, including a summary of popular algorithms, a description of proposed datasets, and an analysis of performance results to illustrate the current state of each field.

A method for cross-view geo-localization utilizes sequences of limited field-of-view ground images by introducing a transformer-based temporal feature aggregation module. The approach, also featuring sequential dropout for variable sequence length robustness, demonstrated improved retrieval accuracy (R@1 up to 2.07% with ResNet50) compared to adapted baselines and includes a newly collected large-scale dataset.

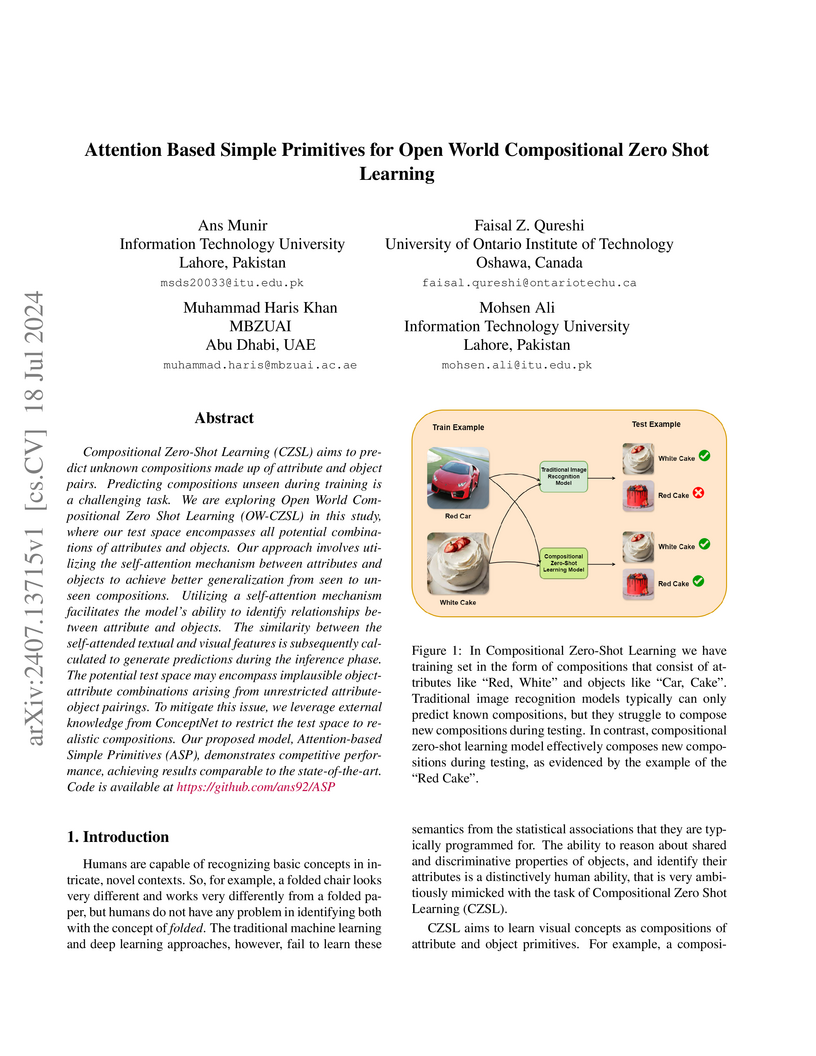

Compositional Zero-Shot Learning (CZSL) aims to predict unknown compositions

made up of attribute and object pairs. Predicting compositions unseen during

training is a challenging task. We are exploring Open World Compositional

Zero-Shot Learning (OW-CZSL) in this study, where our test space encompasses

all potential combinations of attributes and objects. Our approach involves

utilizing the self-attention mechanism between attributes and objects to

achieve better generalization from seen to unseen compositions. Utilizing a

self-attention mechanism facilitates the model's ability to identify

relationships between attribute and objects. The similarity between the

self-attended textual and visual features is subsequently calculated to

generate predictions during the inference phase. The potential test space may

encompass implausible object-attribute combinations arising from unrestricted

attribute-object pairings. To mitigate this issue, we leverage external

knowledge from ConceptNet to restrict the test space to realistic compositions.

Our proposed model, Attention-based Simple Primitives (ASP), demonstrates

competitive performance, achieving results comparable to the state-of-the-art.

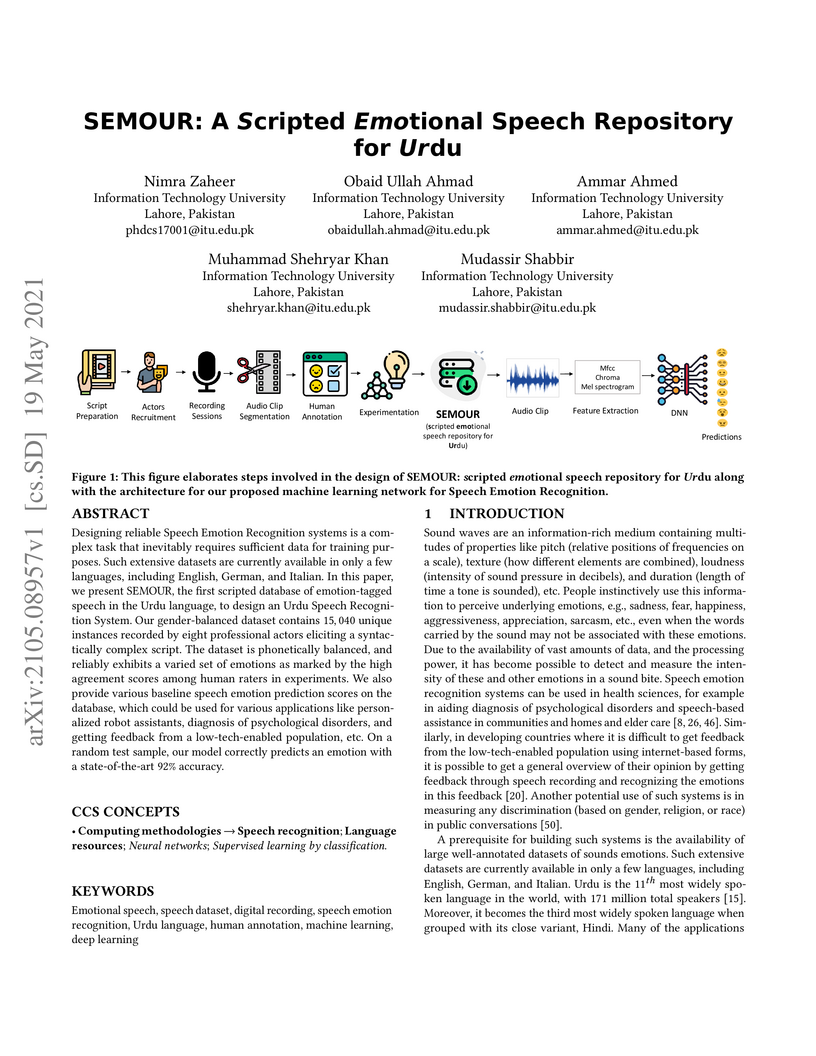

Designing reliable Speech Emotion Recognition systems is a complex task that inevitably requires sufficient data for training purposes. Such extensive datasets are currently available in only a few languages, including English, German, and Italian. In this paper, we present SEMOUR, the first scripted database of emotion-tagged speech in the Urdu language, to design an Urdu Speech Recognition System. Our gender-balanced dataset contains 15,040 unique instances recorded by eight professional actors eliciting a syntactically complex script. The dataset is phonetically balanced, and reliably exhibits a varied set of emotions as marked by the high agreement scores among human raters in experiments. We also provide various baseline speech emotion prediction scores on the database, which could be used for various applications like personalized robot assistants, diagnosis of psychological disorders, and getting feedback from a low-tech-enabled population, etc. On a random test sample, our model correctly predicts an emotion with a state-of-the-art 92% accuracy.

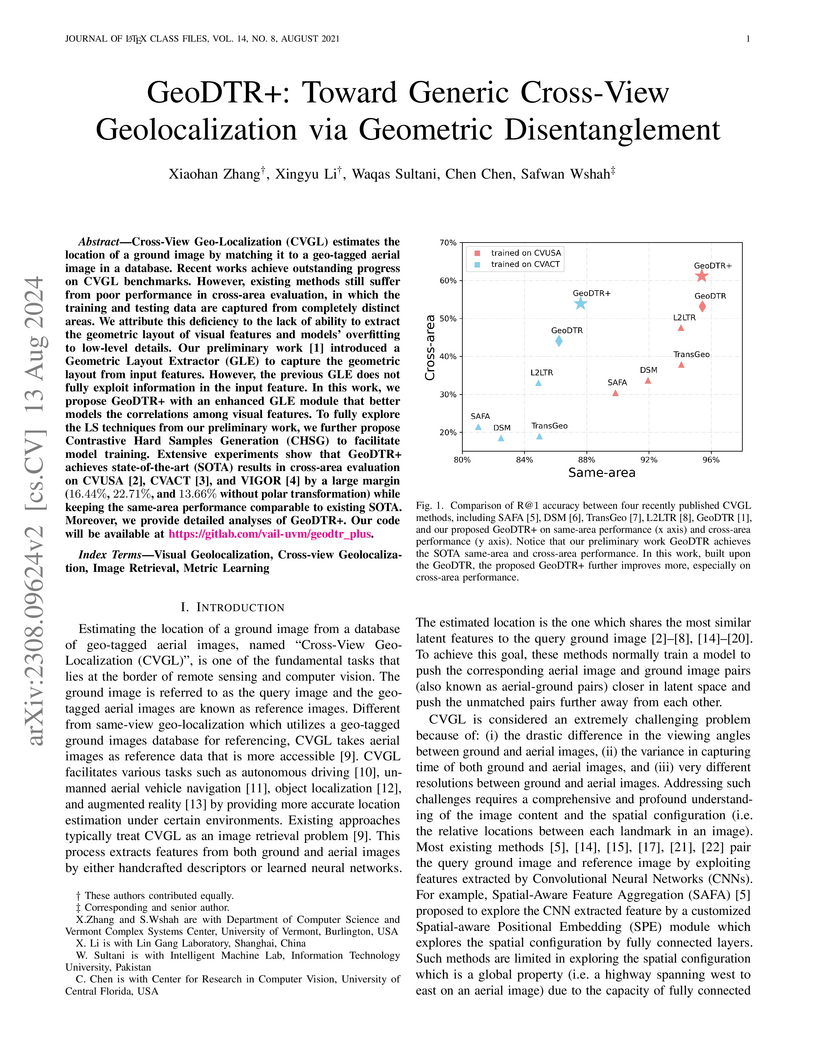

Cross-View Geo-Localization (CVGL) estimates the location of a ground image

by matching it to a geo-tagged aerial image in a database. Recent works achieve

outstanding progress on CVGL benchmarks. However, existing methods still suffer

from poor performance in cross-area evaluation, in which the training and

testing data are captured from completely distinct areas. We attribute this

deficiency to the lack of ability to extract the geometric layout of visual

features and models' overfitting to low-level details. Our preliminary work

introduced a Geometric Layout Extractor (GLE) to capture the geometric layout

from input features. However, the previous GLE does not fully exploit

information in the input feature. In this work, we propose GeoDTR+ with an

enhanced GLE module that better models the correlations among visual features.

To fully explore the LS techniques from our preliminary work, we further

propose Contrastive Hard Samples Generation (CHSG) to facilitate model

training. Extensive experiments show that GeoDTR+ achieves state-of-the-art

(SOTA) results in cross-area evaluation on CVUSA, CVACT, and VIGOR by a large

margin (16.44%, 22.71%, and 13.66% without polar transformation) while

keeping the same-area performance comparable to existing SOTA. Moreover, we

provide detailed analyses of GeoDTR+. Our code will be available at

https://gitlab.com/vail-uvm/geodtr plus.

Trained generative models have shown remarkable performance as priors for inverse problems in imaging -- for example, Generative Adversarial Network priors permit recovery of test images from 5-10x fewer measurements than sparsity priors. Unfortunately, these models may be unable to represent any particular image because of architectural choices, mode collapse, and bias in the training dataset. In this paper, we demonstrate that invertible neural networks, which have zero representation error by design, can be effective natural signal priors at inverse problems such as denoising, compressive sensing, and inpainting. Given a trained generative model, we study the empirical risk formulation of the desired inverse problem under a regularization that promotes high likelihood images, either directly by penalization or algorithmically by initialization. For compressive sensing, invertible priors can yield higher accuracy than sparsity priors across almost all undersampling ratios, and due to their lack of representation error, invertible priors can yield better reconstructions than GAN priors for images that have rare features of variation within the biased training set, including out-of-distribution natural images. We additionally compare performance for compressive sensing to unlearned methods, such as the deep decoder, and we establish theoretical bounds on expected recovery error in the case of a linear invertible model.

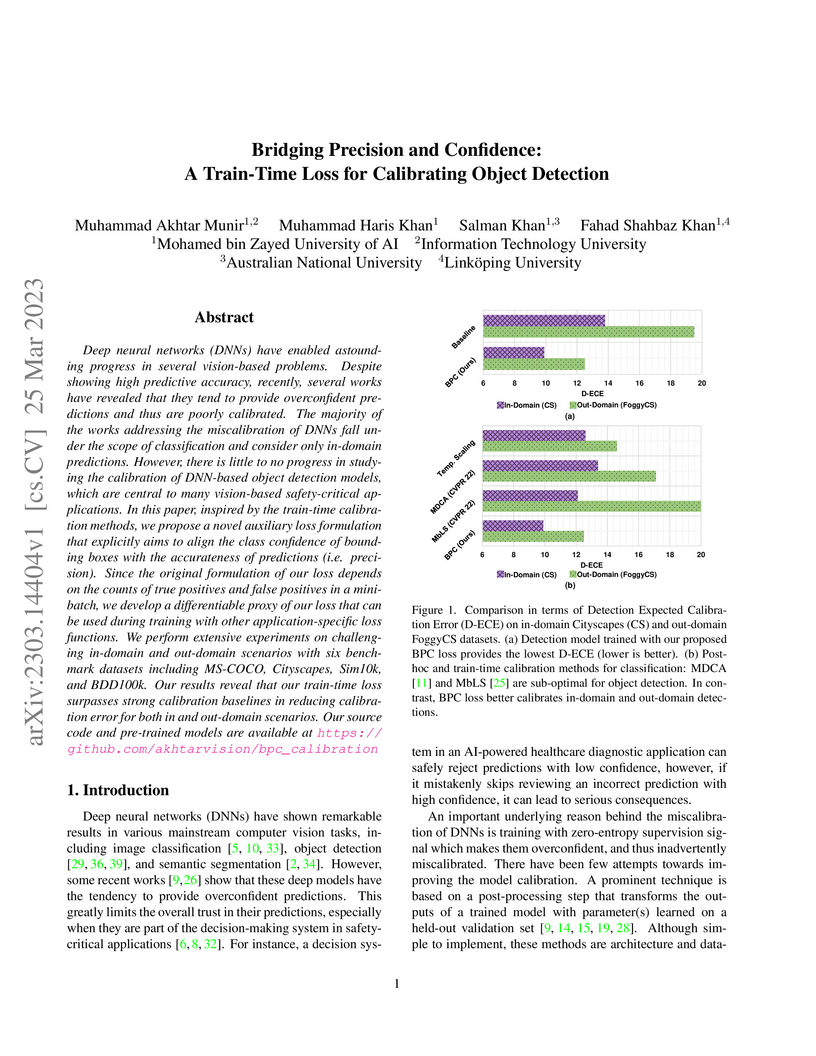

Deep neural networks (DNNs) have enabled astounding progress in several vision-based problems. Despite showing high predictive accuracy, recently, several works have revealed that they tend to provide overconfident predictions and thus are poorly calibrated. The majority of the works addressing the miscalibration of DNNs fall under the scope of classification and consider only in-domain predictions. However, there is little to no progress in studying the calibration of DNN-based object detection models, which are central to many vision-based safety-critical applications. In this paper, inspired by the train-time calibration methods, we propose a novel auxiliary loss formulation that explicitly aims to align the class confidence of bounding boxes with the accurateness of predictions (i.e. precision). Since the original formulation of our loss depends on the counts of true positives and false positives in a minibatch, we develop a differentiable proxy of our loss that can be used during training with other application-specific loss functions. We perform extensive experiments on challenging in-domain and out-domain scenarios with six benchmark datasets including MS-COCO, Cityscapes, Sim10k, and BDD100k. Our results reveal that our train-time loss surpasses strong calibration baselines in reducing calibration error for both in and out-domain scenarios. Our source code and pre-trained models are available at this https URL

17 Aug 2017

In higher educational institutes, many students have to struggle hard to

complete different courses since there is no dedicated support offered to

students who need special attention in the registered courses. Machine learning

techniques can be utilized for students' grades prediction in different

courses. Such techniques would help students to improve their performance based

on predicted grades and would enable instructors to identify such individuals

who might need assistance in the courses. In this paper, we use Collaborative

Filtering (CF), Matrix Factorization (MF), and Restricted Boltzmann Machines

(RBM) techniques to systematically analyze a real-world data collected from

Information Technology University (ITU), Lahore, Pakistan. We evaluate the

academic performance of ITU students who got admission in the bachelor's degree

program in ITU's Electrical Engineering department. The RBM technique is found

to be better than the other techniques used in predicting the students'

performance in the particular course.

There are no more papers matching your filters at the moment.