Monash University

Monash UniversityByteDance Seed introduced BAGEL, an open-source unified multimodal foundation model trained on trillions of interleaved text, image, and video tokens. This model demonstrates emergent reasoning abilities and achieves state-of-the-art performance among open-source alternatives, narrowing the capability gap with leading proprietary systems.

Researchers from Monash University, VinUniversity, and the University of Cambridge developed PiVe (Prompting with Iterative Verification), a framework that uses a specialized verifier module to iteratively correct semantic graphs generated by Large Language Models (LLMs). This method improved graph generation quality by an average of 26% across multiple datasets and enabled the creation of a high-quality text-graph dataset, GenWiki-HIQ.

06 Dec 2025

Monash UniversityCSIRO

Monash UniversityCSIRO Chinese Academy of SciencesSichuan University

Chinese Academy of SciencesSichuan University University of Manchester

University of Manchester Beihang University

Beihang University Nanjing University

Nanjing University Zhejiang University

Zhejiang University ByteDanceShanghai AI LabHarbin Institute of Technology

ByteDanceShanghai AI LabHarbin Institute of Technology Beijing Jiaotong University

Beijing Jiaotong University Huawei

Huawei Nanyang Technological UniversityNTUBeijing University of Posts and TelecommunicationsUniversity of Sheffield

Nanyang Technological UniversityNTUBeijing University of Posts and TelecommunicationsUniversity of Sheffield TencentAlibabaHuawei CloudStepFunTeleAIOPPOHong Kong University of Science and Technology (Guangzhou)KuaiShouM-A-PChinese Academy of Sciences, Institute of AutomationUOM

TencentAlibabaHuawei CloudStepFunTeleAIOPPOHong Kong University of Science and Technology (Guangzhou)KuaiShouM-A-PChinese Academy of Sciences, Institute of AutomationUOMA comprehensive synthesis of Large Language Models for automated software development covers the entire model lifecycle, from data curation to autonomous agents, and offers practical guidance derived from empirical experiments on pre-training, fine-tuning, and reinforcement learning, alongside a detailed analysis of challenges and future directions.

18 Oct 2025

South China University of Technology California Institute of Technology

California Institute of Technology University of Cambridge

University of Cambridge Monash UniversityShanghai Artificial Intelligence Laboratory

Monash UniversityShanghai Artificial Intelligence Laboratory Chinese Academy of Sciences

Chinese Academy of Sciences University College London

University College London Fudan University

Fudan University Shanghai Jiao Tong University

Shanghai Jiao Tong University Nanjing University

Nanjing University Stanford University

Stanford University The Chinese University of Hong Kong

The Chinese University of Hong Kong The Hong Kong Polytechnic UniversityThe Chinese University of Hong Kong, Shenzhen

The Hong Kong Polytechnic UniversityThe Chinese University of Hong Kong, Shenzhen The University of Hong KongMBZUAI

The University of Hong KongMBZUAI Purdue University

Purdue University Virginia Tech

Virginia Tech HKUSTUniversity College DublinUNC-Chapel HillFuzhou UniversityNorth University of ChinaChina Pharmaceutical UniversityBeijing Institute of Heart, Lung and Blood Vessel Diseases

HKUSTUniversity College DublinUNC-Chapel HillFuzhou UniversityNorth University of ChinaChina Pharmaceutical UniversityBeijing Institute of Heart, Lung and Blood Vessel Diseases

California Institute of Technology

California Institute of Technology University of Cambridge

University of Cambridge Monash UniversityShanghai Artificial Intelligence Laboratory

Monash UniversityShanghai Artificial Intelligence Laboratory Chinese Academy of Sciences

Chinese Academy of Sciences University College London

University College London Fudan University

Fudan University Shanghai Jiao Tong University

Shanghai Jiao Tong University Nanjing University

Nanjing University Stanford University

Stanford University The Chinese University of Hong Kong

The Chinese University of Hong Kong The Hong Kong Polytechnic UniversityThe Chinese University of Hong Kong, Shenzhen

The Hong Kong Polytechnic UniversityThe Chinese University of Hong Kong, Shenzhen The University of Hong KongMBZUAI

The University of Hong KongMBZUAI Purdue University

Purdue University Virginia Tech

Virginia Tech HKUSTUniversity College DublinUNC-Chapel HillFuzhou UniversityNorth University of ChinaChina Pharmaceutical UniversityBeijing Institute of Heart, Lung and Blood Vessel Diseases

HKUSTUniversity College DublinUNC-Chapel HillFuzhou UniversityNorth University of ChinaChina Pharmaceutical UniversityBeijing Institute of Heart, Lung and Blood Vessel DiseasesA comprehensive survey by researchers from Shanghai AI Lab and various global institutions outlines the intricate relationship between scientific large language models (Sci-LLMs) and their data foundations, tracing their evolution towards autonomous agents for scientific discovery. The paper establishes a taxonomy for scientific data and knowledge, meticulously reviews over 270 datasets and 190 benchmarks, and identifies critical data challenges alongside future paradigms.

TIME-LLM introduces a reprogramming framework that adapts large language models for general time series forecasting by keeping the LLM backbone frozen. The approach achieves state-of-the-art performance across various benchmarks, excelling particularly in data-scarce few-shot and zero-shot settings.

Multi-Agent Path Finding (MAPF) is a fundamental problem in robotics that asks us to compute collision-free paths for a team of agents, all moving across a shared map. Although many works appear on this topic, all current algorithms struggle as the number of agents grows. The principal reason is that existing approaches typically plan free-flow optimal paths, which creates congestion. To tackle this issue, we propose a new approach for MAPF where agents are guided to their destination by following congestion-avoiding paths. We evaluate the idea in two large-scale settings: one-shot MAPF, where each agent has a single destination, and lifelong MAPF, where agents are continuously assigned new destinations. Empirically, we report large improvements in solution quality for one-short MAPF and in overall throughput for lifelong MAPF.

GFM-RAG introduces the first graph foundation model specifically designed for Retrieval Augmented Generation (RAG), leveraging a query-dependent Graph Neural Network to capture complex, multi-hop knowledge relationships. This model achieves state-of-the-art retrieval and question answering performance on diverse datasets and generalizes to unseen domains without fine-tuning, significantly enhancing LLM reasoning capabilities.

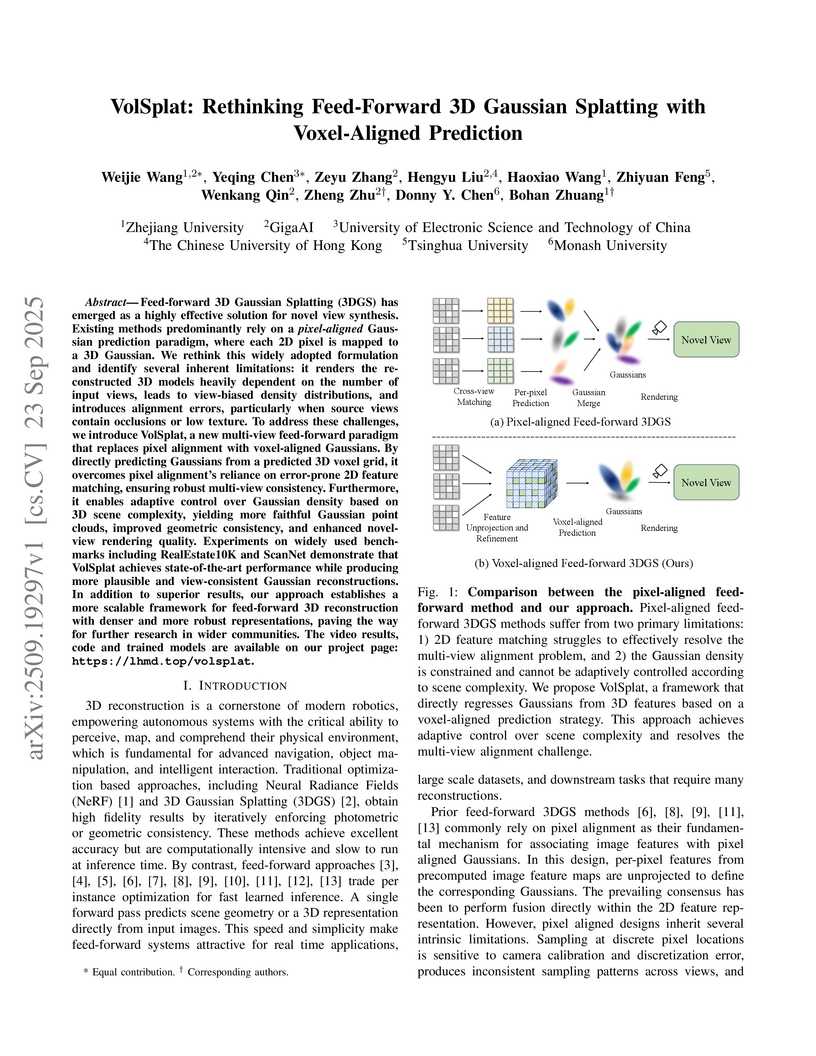

VolSplat introduces a voxel-aligned prediction paradigm for feed-forward 3D Gaussian Splatting, aggregating 2D features into a 3D voxel grid to predict Gaussian parameters. This approach significantly enhances geometric consistency, robustness, and rendering quality, outperforming prior pixel-aligned methods on benchmarks like RealEstate10K and ScanNet.

LIGHTFUSION introduces a double fusion framework that integrates pre-trained understanding and generation models to achieve unified multimodal capabilities. This approach delivers competitive performance across multimodal understanding, text-to-image generation, and image editing tasks using significantly fewer training tokens (approximately 35 billion) compared to existing large-scale unified models.

Researchers developed UniMedVL, a unified medical foundation model capable of simultaneously performing both understanding and generation tasks within a single architecture, leveraging the UniMed-5M multimodal dataset and a progressive curriculum learning strategy. The model achieves superior performance across diverse medical visual understanding benchmarks and demonstrates high-fidelity generation and seamless execution of complex interleaved multimodal tasks.

03 Sep 2025

Youtu-GraphRAG introduces a vertically unified agentic paradigm that jointly optimizes graph construction and retrieval for large language models, significantly enhancing complex reasoning accuracy and reducing token consumption by up to 90.71% across various benchmarks while mitigating knowledge leaking through novel evaluation datasets.

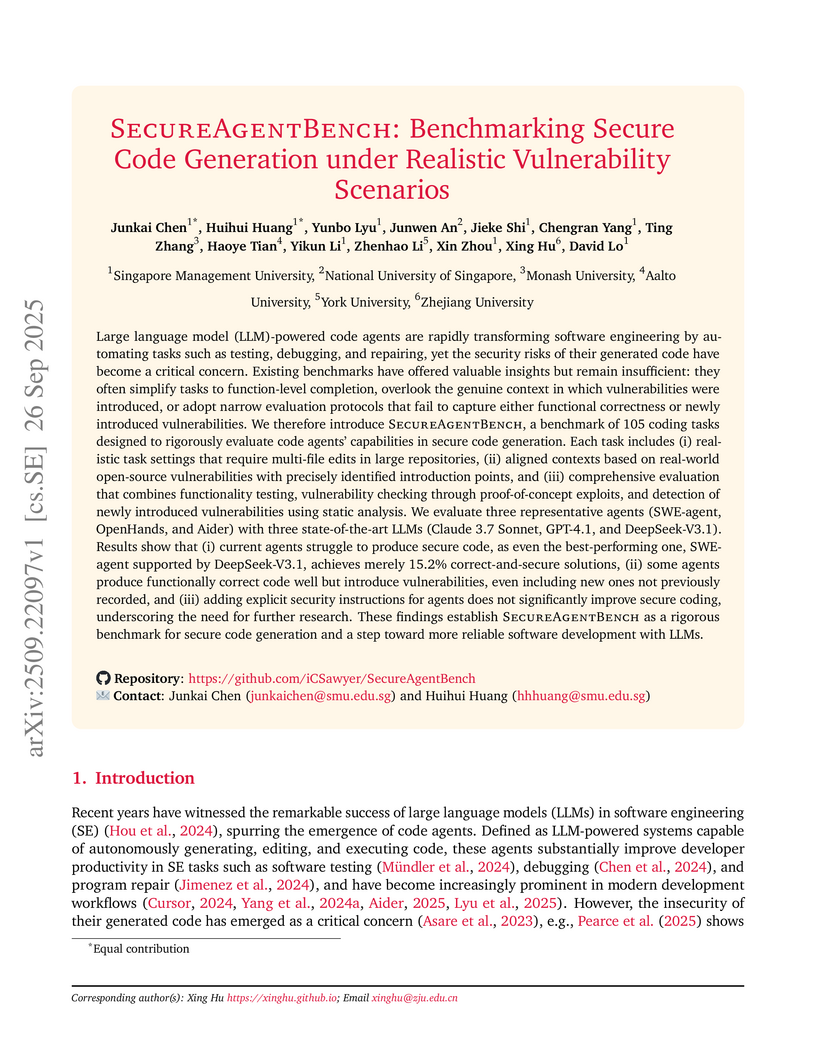

26 Sep 2025

Researchers developed SecureAgentBench, a benchmark with 105 real-world, repository-level tasks, to evaluate LLM-powered code agents' ability to generate secure code. Evaluations show that current agents achieve a mere 9.2% success rate in producing functionally correct and secure solutions, frequently introducing novel vulnerabilities and struggling even with explicit security guidance.

27 Aug 2025

A survey charts the recent trajectory of Compositional Visual Reasoning (CVR) from 2023 to 2025, introducing a five-stage taxonomy to explain its evolution and distinct advantages over monolithic approaches. The work systematically reviews over 260 papers, identifying key benefits such as enhanced interpretability and robustness, while also outlining persistent open challenges and future research directions for the field.

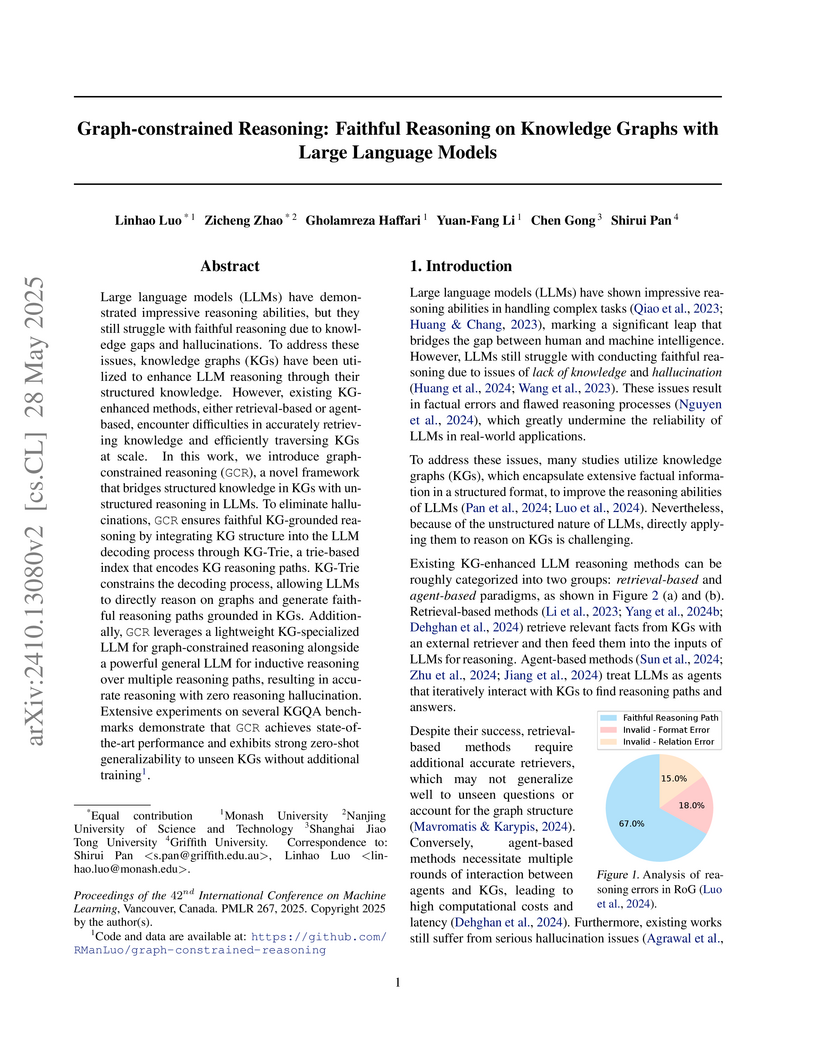

The Graph-constrained Reasoning (GCR) framework integrates Knowledge Graph (KG) structure directly into Large Language Model (LLM) decoding, achieving 100% faithful reasoning without hallucinations on KGQA tasks. This approach consistently outperforms state-of-the-art methods on benchmarks like WebQuestionSP and Complex WebQuestions by up to 9.1% while being significantly more efficient than agent-based approaches.

Meituan's LongCat-Flash-Omni is a 560-billion-parameter open-source omni-modal model that processes text, image, video, and audio to enable real-time audio-visual interaction. It achieves state-of-the-art performance on various multimodal benchmarks and shows highly competitive results against leading proprietary models.

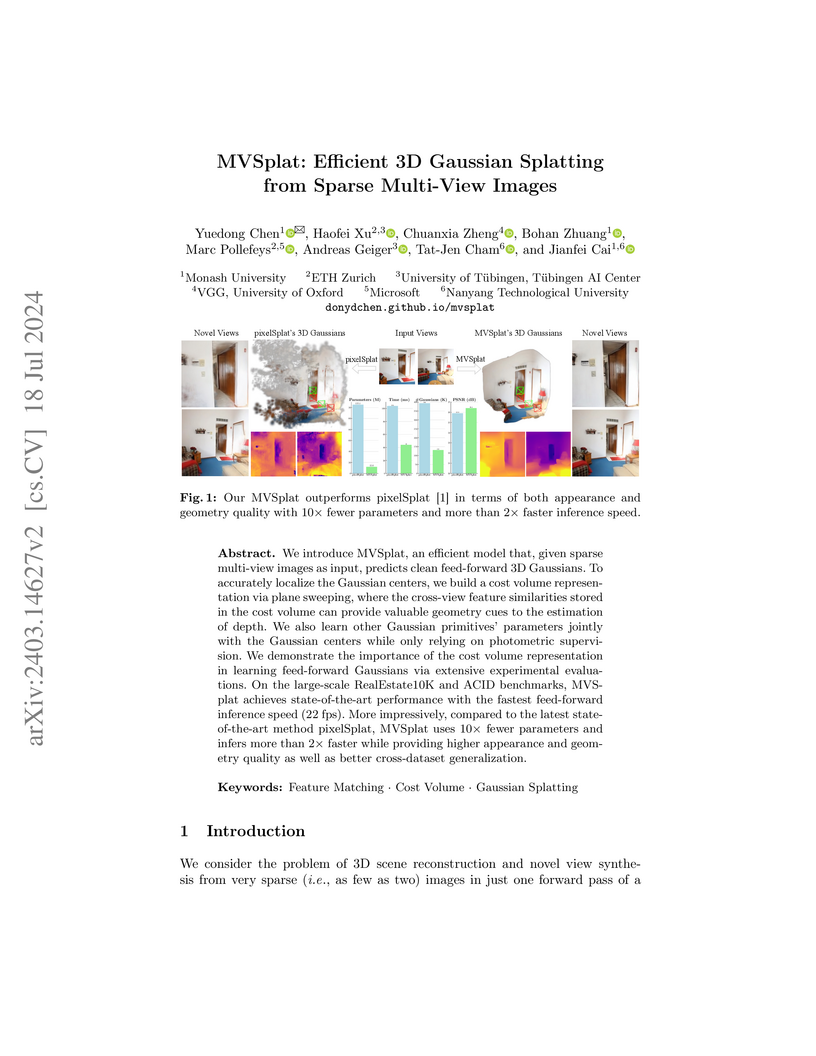

MVSplat presents an efficient, generalizable feed-forward model that generates high-quality 3D Gaussian Splatting representations from sparse multi-view images. It achieves state-of-the-art visual quality with over 2x faster inference (22 fps) and a 10x smaller model size (12M parameters) than prior methods by integrating multi-view stereo cost volumes for robust 3D geometry estimation.

University of Illinois at Urbana-Champaign

University of Illinois at Urbana-Champaign Monash University

Monash University Carnegie Mellon University

Carnegie Mellon University University of Notre Dame

University of Notre Dame UC Berkeley

UC Berkeley University College London

University College London Cornell UniversityCSIRO’s Data61

Cornell UniversityCSIRO’s Data61 Hugging FaceTU Darmstadt

Hugging FaceTU Darmstadt InriaSingapore Management UniversitySea AI Lab

InriaSingapore Management UniversitySea AI Lab MITIntelAWS AI Labs

MITIntelAWS AI Labs Shanghai Jiaotong University

Shanghai Jiaotong University Queen Mary University of London

Queen Mary University of London University of VirginiaUNC-Chapel Hill

University of VirginiaUNC-Chapel Hill ServiceNowContextual AIDetomo Inc

ServiceNowContextual AIDetomo IncBigCodeBench is a new benchmark that evaluates Large Language Models on their ability to generate Python code requiring diverse function calls and complex instructions, revealing that current models like GPT-4o achieve a maximum of 60% accuracy on these challenging tasks, significantly lagging human performance.

The Reasoning on Graphs (RoG) framework enhances Large Language Model (LLM) reasoning by integrating Knowledge Graph (KG) structural information as explicit reasoning plans. It achieves state-of-the-art performance on KGQA benchmarks, improving Hits@1 by 22.3% and F1 by 14.4% on CWQ, while providing faithful and interpretable explanations grounded in KG paths.

University of Illinois at Urbana-Champaign

University of Illinois at Urbana-Champaign Monash UniversityLeipzig University

Monash UniversityLeipzig University Northeastern University

Northeastern University University of Notre Dame

University of Notre Dame UC Berkeley

UC Berkeley University College London

University College London Cohere

Cohere Cornell University

Cornell University University of California, San Diego

University of California, San Diego University of British ColumbiaCSIRO’s Data61

University of British ColumbiaCSIRO’s Data61 NVIDIAIBM Research

NVIDIAIBM Research Hugging Face

Hugging Face Johns Hopkins University

Johns Hopkins University Technical University of MunichSea AI Lab

Technical University of MunichSea AI Lab MIT

MIT Princeton UniversityTechnical University of DarmstadtBaidu

Princeton UniversityTechnical University of DarmstadtBaidu ServiceNowKaggleWellesley CollegeContextual AIRobloxSalesforceIndependentMazzumaTechnion

Israel Institute of Technology

ServiceNowKaggleWellesley CollegeContextual AIRobloxSalesforceIndependentMazzumaTechnion

Israel Institute of TechnologyThe BigCode project releases StarCoder 2 models and The Stack v2 dataset, setting a new standard for open and ethically sourced Code LLM development. StarCoder 2 models, particularly the 15B variant, demonstrate competitive performance across code generation, completion, and reasoning tasks, often outperforming larger, closed-source alternatives, by prioritizing data quality and efficient architecture over sheer data quantity.

27 Oct 2025

VIDEO-THINKER, a new framework, empowers Multimodal Large Language Models to reason with videos by intrinsically developing temporal grounding and captioning abilities. The model establishes new state-of-the-art performance on various video reasoning benchmarks, achieving up to an 11.44% improvement on the VRBench out-of-domain dataset, while showcasing enhanced temporal localization (48.22% mIoU) and descriptive captioning.

There are no more papers matching your filters at the moment.