Ozyegin University

Evidential Deep Learning (EDL) enables deep neural networks to directly quantify classification uncertainty by modeling evidence using Dirichlet distributions. This approach yields comparable classification accuracy while providing superior uncertainty estimates for out-of-distribution inputs and improved robustness against adversarial examples, allowing models to express when they lack sufficient confidence.

State Reconstruction for Diffusion Policies (SRDP) improves out-of-distribution generalization in offline reinforcement learning by integrating an auxiliary state reconstruction objective into diffusion policies. This method demonstrates a 167% performance improvement over Diffusion-QL in maze navigation with missing data and achieves a Chamfer distance of 0.071

0.02 against ground truth in real-world UR10 robot experiments.

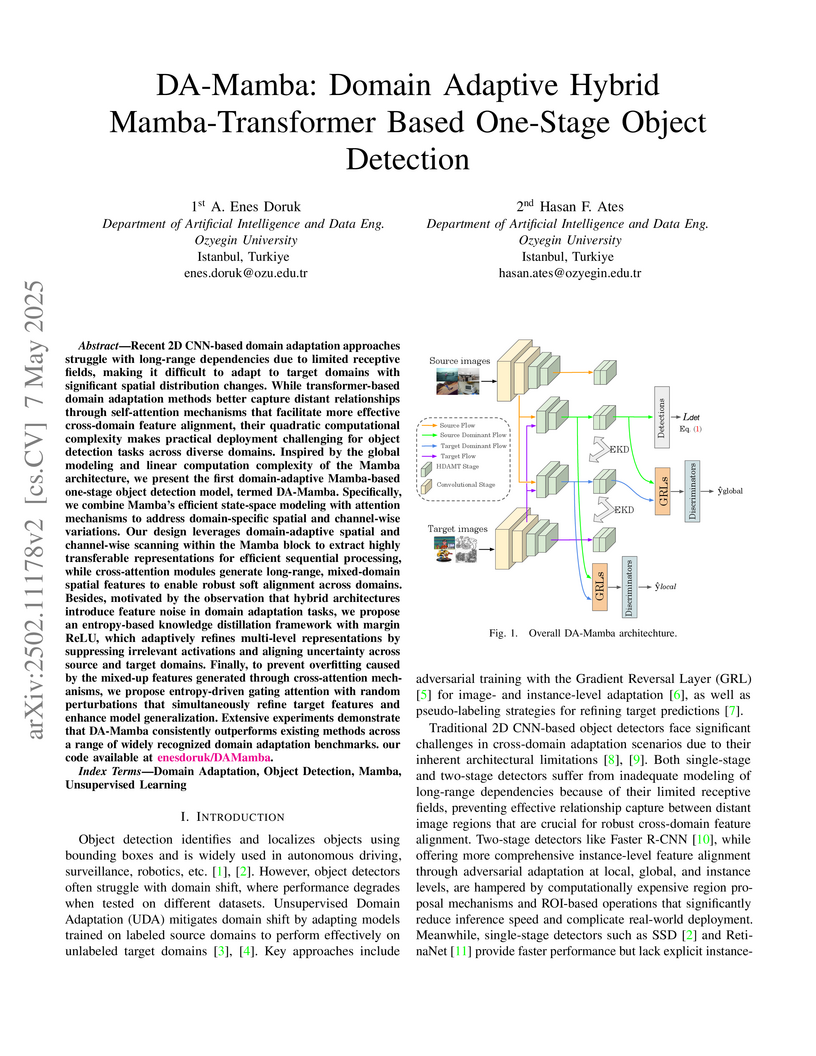

Recent 2D CNN-based domain adaptation approaches struggle with long-range

dependencies due to limited receptive fields, making it difficult to adapt to

target domains with significant spatial distribution changes. While

transformer-based domain adaptation methods better capture distant

relationships through self-attention mechanisms that facilitate more effective

cross-domain feature alignment, their quadratic computational complexity makes

practical deployment challenging for object detection tasks across diverse

domains. Inspired by the global modeling and linear computation complexity of

the Mamba architecture, we present the first domain-adaptive Mamba-based

one-stage object detection model, termed DA-Mamba. Specifically, we combine

Mamba's efficient state-space modeling with attention mechanisms to address

domain-specific spatial and channel-wise variations. Our design leverages

domain-adaptive spatial and channel-wise scanning within the Mamba block to

extract highly transferable representations for efficient sequential

processing, while cross-attention modules generate long-range, mixed-domain

spatial features to enable robust soft alignment across domains. Besides,

motivated by the observation that hybrid architectures introduce feature noise

in domain adaptation tasks, we propose an entropy-based knowledge distillation

framework with margin ReLU, which adaptively refines multi-level

representations by suppressing irrelevant activations and aligning uncertainty

across source and target domains. Finally, to prevent overfitting caused by the

mixed-up features generated through cross-attention mechanisms, we propose

entropy-driven gating attention with random perturbations that simultaneously

refine target features and enhance model generalization.

This survey presents the evolution of live media streaming and the technological developments behind today's IP-based low-latency live streaming systems. Live streaming primarily involves capturing, encoding, packaging and delivering real-time events such as live sports, live news, personal broadcasts and surveillance videos. Live streaming also involves concurrent streaming of linear TV programming off the satellite, cable, over-the-air or IPTV broadcast, where the programming is not necessarily a real-time event.

The survey starts with a discussion on the latency and latency continuum in streaming applications. Then, it lays out the existing live streaming workflows and protocols, followed by an in-depth analysis of the latency sources in these workflows and protocols. The survey continues with the technology enablers, low-latency extensions for the popular HTTP adaptive streaming methods and enhancements for robust low-latency playback. An entire section is dedicated to the detailed summary and findings of Twitch's grand challenge on low-latency live streaming. The survey concludes with a discussion of ongoing research problems in this space. We expect this survey to be the one-stop reference for those who would like to learn how low-latency live streaming has evolved and works today, and what further developments could happen in the future.

Researchers from Ozyegin University developed X2BR, a hybrid neural implicit framework that reconstructs high-fidelity 3D bone models from a single planar X-ray image, achieving high geometric accuracy while ensuring anatomical consistency via template-guided refinement. The system provides quantitative improvements in anatomical realism, making it suitable for surgical planning and orthopedic assessment, and also introduced a large dataset of paired 3D bone meshes and X-ray images.

Deep Reinforcement Learning (DRL) algorithms can scale to previously

intractable problems. The automation of profit generation in the stock market

is possible using DRL, by combining the financial assets price "prediction"

step and the "allocation" step of the portfolio in one unified process to

produce fully autonomous systems capable of interacting with their environment

to make optimal decisions through trial and error. This work represents a DRL

model to generate profitable trades in the stock market, effectively overcoming

the limitations of supervised learning approaches. We formulate the trading

problem as a Partially Observed Markov Decision Process (POMDP) model,

considering the constraints imposed by the stock market, such as liquidity and

transaction costs. We then solve the formulated POMDP problem using the Twin

Delayed Deep Deterministic Policy Gradient (TD3) algorithm reporting a 2.68

Sharpe Ratio on unseen data set (test data). From the point of view of stock

market forecasting and the intelligent decision-making mechanism, this paper

demonstrates the superiority of DRL in financial markets over other types of

machine learning and proves its credibility and advantages of strategic

decision-making.

Wide Area Motion Imagery (WAMI) yields high-resolution images with a large number of extremely small objects. Target objects have large spatial displacements throughout consecutive frames. This nature of WAMI images makes object tracking and detection challenging. In this paper, we present our deep neural network-based combined object detection and tracking model, namely, Heat Map Network (HM-Net). HM-Net is significantly faster than state-of-the-art frame differencing and background subtraction-based methods, without compromising detection and tracking performances. HM-Net follows the object center-based joint detection and tracking paradigm. Simple heat map-based predictions support an unlimited number of simultaneous detections. The proposed method uses two consecutive frames and the object detection heat map obtained from the previous frame as input, which helps HM-Net monitor spatio-temporal changes between frames and keeps track of previously predicted objects. Although reuse of prior object detection heat map acts as a vital feedback-based memory element, it can lead to an unintended surge of false-positive detections. To increase the robustness of the method against false positives and to eliminate low confidence detections, HM-Net employs novel feedback filters and advanced data augmentations. HM-Net outperforms state-of-the-art WAMI moving object detection and tracking methods on the WPAFB dataset with its 96.2% F1 and 94.4% mAP detection scores while achieving a 61.8% mAP tracking score on the same dataset. This performance corresponds to an improvement of 2.1% for F1, 6.1% for mAP scores on detection, and 9.5% for mAP score on tracking over the state-of-the-art.

Affordances represent the inherent effect and action possibilities that

objects offer to the agents within a given context. From a theoretical

viewpoint, affordances bridge the gap between effect and action, providing a

functional understanding of the connections between the actions of an agent and

its environment in terms of the effects it can cause. In this study, we propose

a deep neural network model that unifies objects, actions, and effects into a

single latent vector in a common latent space that we call the affordance

space. Using the affordance space, our system can generate effect trajectories

when action and object are given and can generate action trajectories when

effect trajectories and objects are given. Our model does not learn the

behavior of individual objects acted upon by a single agent. Still, rather, it

forms a `shared affordance representation' spanning multiple agents and

objects, which we call Affordance Equivalence. Affordance Equivalence

facilitates not only action generalization over objects but also Cross

Embodiment transfer linking actions of different robots. In addition to the

simulation experiments that demonstrate the proposed model's range of

capabilities, we also showcase that our model can be used for direct imitation

in real-world settings.

13 Jan 2025

Biosensing based on optically trapped fluorescent nanodiamonds is an intriguing research direction potentially allowing to resolve biochemical processes inside living cells. Towards this goal, we investigate infrared near (NIR) laser irradiation at 1064 nm on fluorescent nanodiamonds (FNDs) containing nitrogen-vacancy (NV) centers. By conducting comprehensive experiments, we aim to understand how NIR exposure influences the fluorescence and sensing properties of FNDs and to determine the potential implications for the use of FNDs in various sensing applications. The experimental setup involved exposing FNDs to varying intensities of NIR laser light and analyzing the resultant changes in their optical and physical properties. Key measurements included T1 relaxation times, optical spectroscopy, and optically detected magnetic resonance (ODMR) spectra. The findings reveal how increased NIR laser power correlates with alterations in ODMR central frequency but also that charge state dynamics under NIR irradiation of NV centers plays a role. We suggest protocols with NIR and green light that mitigate the effect of NIR, and demonstrate that FND biosensing works well with such a protocol.

Chiba University Queen Mary University of LondonThe University of Electro-CommunicationsRitsumeikan UniversityCzech Technical University in PragueUniversity of the WitwatersrandUniversität Innsbruck

Queen Mary University of LondonThe University of Electro-CommunicationsRitsumeikan UniversityCzech Technical University in PragueUniversity of the WitwatersrandUniversität Innsbruck University of GöttingenBogazici UniversityOzyegin UniversityCouncil for Scientific and Industrial ResearchOkayama Prefectural University

University of GöttingenBogazici UniversityOzyegin UniversityCouncil for Scientific and Industrial ResearchOkayama Prefectural University

Queen Mary University of LondonThe University of Electro-CommunicationsRitsumeikan UniversityCzech Technical University in PragueUniversity of the WitwatersrandUniversität Innsbruck

Queen Mary University of LondonThe University of Electro-CommunicationsRitsumeikan UniversityCzech Technical University in PragueUniversity of the WitwatersrandUniversität Innsbruck University of GöttingenBogazici UniversityOzyegin UniversityCouncil for Scientific and Industrial ResearchOkayama Prefectural University

University of GöttingenBogazici UniversityOzyegin UniversityCouncil for Scientific and Industrial ResearchOkayama Prefectural UniversityHumans use signs, e.g., sentences in a spoken language, for communication and

thought. Hence, symbol systems like language are crucial for our communication

with other agents and adaptation to our real-world environment. The symbol

systems we use in our human society adaptively and dynamically change over

time. In the context of artificial intelligence (AI) and cognitive systems, the

symbol grounding problem has been regarded as one of the central problems

related to {\it symbols}. However, the symbol grounding problem was originally

posed to connect symbolic AI and sensorimotor information and did not consider

many interdisciplinary phenomena in human communication and dynamic symbol

systems in our society, which semiotics considered. In this paper, we focus on

the symbol emergence problem, addressing not only cognitive dynamics but also

the dynamics of symbol systems in society, rather than the symbol grounding

problem. We first introduce the notion of a symbol in semiotics from the

humanities, to leave the very narrow idea of symbols in symbolic AI.

Furthermore, over the years, it became more and more clear that symbol

emergence has to be regarded as a multifaceted problem. Therefore, secondly, we

review the history of the symbol emergence problem in different fields,

including both biological and artificial systems, showing their mutual

relations. We summarize the discussion and provide an integrative viewpoint and

comprehensive overview of symbol emergence in cognitive systems. Additionally,

we describe the challenges facing the creation of cognitive systems that can be

part of symbol emergence systems.

Federated learning (FL) enables multiple clients to collaboratively train a shared model without disclosing their local datasets. This is achieved by exchanging local model updates with the help of a parameter server (PS). However, due to the increasing size of the trained models, the communication load due to the iterative exchanges between the clients and the PS often becomes a bottleneck in the performance. Sparse communication is often employed to reduce the communication load, where only a small subset of the model updates are communicated from the clients to the PS. In this paper, we introduce a novel time-correlated sparsification (TCS) scheme, which builds upon the notion that sparse communication framework can be considered as identifying the most significant elements of the underlying model. Hence, TCS seeks a certain correlation between the sparse representations used at consecutive iterations in FL, so that the overhead due to encoding and transmission of the sparse representation can be significantly reduced without compromising the test accuracy. Through extensive simulations on the CIFAR-10 dataset, we show that TCS can achieve centralized training accuracy with 100 times sparsification, and up to 2000 times reduction in the communication load when employed together with quantization.

Unsupervised Domain Adaptation (UDA) aims to utilize labeled data from a source domain to solve tasks in an unlabeled target domain, often hindered by significant domain gaps. Traditional CNN-based methods struggle to fully capture complex domain relationships, motivating the shift to vision transformers like the Swin Transformer, which excel in modeling both local and global dependencies. In this work, we propose a novel UDA approach leveraging the Swin Transformer with three key modules. A Graph Domain Discriminator enhances domain alignment by capturing inter-pixel correlations through graph convolutions and entropy-based attention differentiation. An Adaptive Double Attention module combines Windows and Shifted Windows attention with dynamic reweighting to align long-range and local features effectively. Finally, a Cross-Feature Transform modifies Swin Transformer blocks to improve generalization across domains. Extensive benchmarks confirm the state-of-the-art performance of our versatile method, which requires no task-specific alignment modules, establishing its adaptability to diverse applications.

XPoint: A Self-Supervised Visual-State-Space based Architecture for Multispectral Image Registration

XPoint: A Self-Supervised Visual-State-Space based Architecture for Multispectral Image Registration

Accurate multispectral image matching presents significant challenges due to non-linear intensity variations across spectral modalities, extreme viewpoint changes, and the scarcity of labeled datasets. Current state-of-the-art methods are typically specialized for a single spectral difference, such as visibleinfrared, and struggle to adapt to other modalities due to their reliance on expensive supervision, such as depth maps or camera poses. To address the need for rapid adaptation across modalities, we introduce XPoint, a self-supervised, modular image-matching framework designed for adaptive training and fine-tuning on aligned multispectral datasets, allowing users to customize key components based on their specific tasks. XPoint employs modularity and self-supervision to allow for the adjustment of elements such as the base detector, which generates pseudoground truth keypoints invariant to viewpoint and spectrum variations. The framework integrates a VMamba encoder, pretrained on segmentation tasks, for robust feature extraction, and includes three joint decoder heads: two are dedicated to interest point and descriptor extraction; and a task-specific homography regression head imposes geometric constraints for superior performance in tasks like image registration. This flexible architecture enables quick adaptation to a wide range of modalities, demonstrated by training on Optical-Thermal data and fine-tuning on settings such as visual-near infrared, visual-infrared, visual-longwave infrared, and visual-synthetic aperture radar. Experimental results show that XPoint consistently outperforms or matches state-ofthe-art methods in feature matching and image registration tasks across five distinct multispectral datasets. Our source code is available at this https URL.

19 Sep 2025

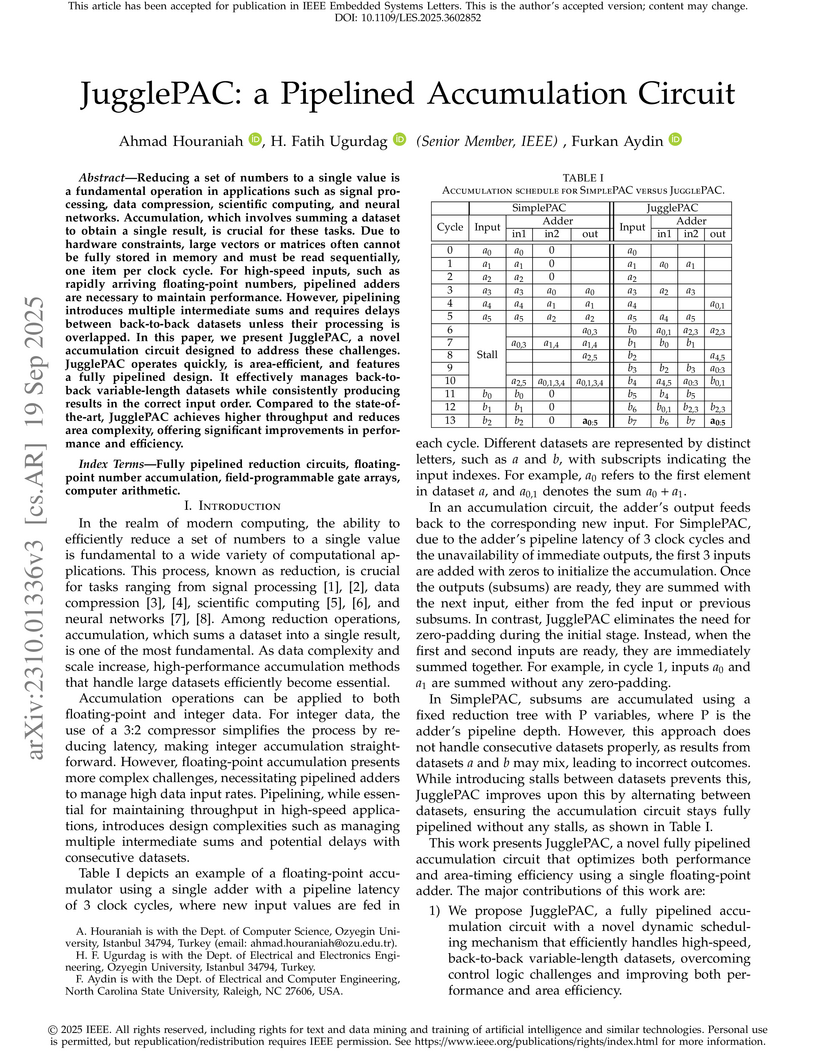

Reducing a set of numbers to a single value is a fundamental operation in applications such as signal processing, data compression, scientific computing, and neural networks. Accumulation, which involves summing a dataset to obtain a single result, is crucial for these tasks. Due to hardware constraints, large vectors or matrices often cannot be fully stored in memory and must be read sequentially, one item per clock cycle. For high-speed inputs, such as rapidly arriving floating-point numbers, pipelined adders are necessary to maintain performance. However, pipelining introduces multiple intermediate sums and requires delays between back-to-back datasets unless their processing is overlapped. In this paper, we present JugglePAC, a novel accumulation circuit designed to address these challenges. JugglePAC operates quickly, is area-efficient, and features a fully pipelined design. It effectively manages back-to-back variable-length datasets while consistently producing results in the correct input order. Compared to the state-of-the-art, JugglePAC achieves higher throughput and reduces area complexity, offering significant improvements in performance and efficiency.

As humans learn new skills and apply their existing knowledge while maintaining previously learned information, "continual learning" in machine learning aims to incorporate new data while retaining and utilizing past knowledge. However, existing machine learning methods often does not mimic human learning where tasks are intermixed due to individual preferences and environmental conditions. Humans typically switch between tasks instead of completely mastering one task before proceeding to the next. To explore how human-like task switching can enhance learning efficiency, we propose a multi task learning architecture that alternates tasks based on task-agnostic measures such as "learning progress" and "neural computational energy expenditure". To evaluate the efficacy of our method, we run several systematic experiments by using a set of effect-prediction tasks executed by a simulated manipulator robot. The experiments show that our approach surpasses random interleaved and sequential task learning in terms of average learning accuracy. Moreover, by including energy expenditure in the task switching logic, our approach can still perform favorably while reducing neural energy expenditure.

22 Nov 2023

Exploratoration and self-observation are key mechanisms of infant

sensorimotor development. These processes are further guided by parental

scaffolding accelerating skill and knowledge acquisition. In developmental

robotics, this approach has been adopted often by having a human acting as the

source of scaffolding. In this study, we investigate whether Large Language

Models (LLMs) can act as a scaffolding agent for a robotic system that aims to

learn to predict the effects of its actions. To this end, an object

manipulation setup is considered where one object can be picked and placed on

top of or in the vicinity of another object. The adopted LLM is asked to guide

the action selection process through algorithmically generated state

descriptions and action selection alternatives in natural language. The

simulation experiments that include cubes in this setup show that LLM-guided

(GPT3.5-guided) learning yields significantly faster discovery of novel

structures compared to random exploration. However, we observed that GPT3.5

fails to effectively guide the robot in generating structures with different

affordances such as cubes and spheres. Overall, we conclude that even without

fine-tuning, LLMs may serve as a moderate scaffolding agent for improving robot

learning, however, they still lack affordance understanding which limits the

applicability of the current LLMs in robotic scaffolding tasks.

Predicting the stock market trend has always been challenging since its

movement is affected by many factors. Here, we approach the future trend

prediction problem as a machine learning classification problem by creating

tomorrow_trend feature as our label to be predicted. Different features are

given to help the machine learning model predict the label of a given day;

whether it is an uptrend or downtrend, those features are technical indicators

generated from the stock's price history. In addition, as financial news plays

a vital role in changing the investor's behavior, the overall sentiment score

on a given day is created from all news released on that day and added to the

model as another feature. Three different machine learning models are tested in

Spark (big-data computing platform), Logistic Regression, Random Forest, and

Gradient Boosting Machine. Random Forest was the best performing model with a

63.58% test accuracy.

RAW image datasets are more suitable than the standard RGB image datasets for the ill-posed inverse problems in low-level vision, but not common in the literature. There are also a few studies to focus on mapping sRGB images to RAW format. Mapping from sRGB to RAW format could be a relevant domain for reverse style transferring since the task is an ill-posed reversing problem. In this study, we seek an answer to the question: Can the ISP operations be modeled as the style factor in an end-to-end learning pipeline? To investigate this idea, we propose a novel architecture, namely RST-ISP-Net, for learning to reverse the ISP operations with the help of adaptive feature normalization. We formulate this problem as a reverse style transferring and mostly follow the practice used in the prior work. We have participated in the AIM Reversed ISP challenge with our proposed architecture. Results indicate that the idea of modeling disruptive or modifying factors as style is still valid, but further improvements are required to be competitive in such a challenge.

In the realm of online privacy, privacy assistants play a pivotal role in empowering users to manage their privacy effectively. Although recent studies have shown promising progress in tackling tasks such as privacy violation detection and personalized privacy recommendations, a crucial aspect for widespread user adoption is the capability of these systems to provide explanations for their decision-making processes. This paper presents a privacy assistant for generating explanations for privacy decisions. The privacy assistant focuses on discovering latent topics, identifying explanation categories, establishing explanation schemes, and generating automated explanations. The generated explanations can be used by users to understand the recommendations of the privacy assistant. Our user study of real-world privacy dataset of images shows that users find the generated explanations useful and easy to understand. Additionally, the generated explanations can be used by privacy assistants themselves to improve their decision-making. We show how this can be realized by incorporating the generated explanations into a state-of-the-art privacy assistant.

Eddy detection is a critical task for ocean scientists to understand and

analyze ocean circulation. In this paper, we introduce a hybrid eddy detection

approach that combines sea surface height (SSH) and velocity fields with

geometric criteria defining eddy behavior. Our approach searches for SSH minima

and maxima, which oceanographers expect to find at the center of eddies.

Geometric criteria are used to verify expected velocity field properties, such

as net rotation and symmetry, by tracing velocity components along a circular

path surrounding each eddy center. Progressive searches outward and into deeper

layers yield each eddy's 3D region of influence. Isolation of each eddy

structure from the dataset, using it's cylindrical footprint, facilitates

visualization of internal eddy structures using horizontal velocity, vertical

velocity, temperature and salinity. A quantitative comparison of Okubo-Weiss

vorticity (OW) thresholding, the standard winding angle, and this new

SSH-velocity hybrid methods of eddy detection as applied to the Red Sea dataset

suggests that detection results are highly dependent on the choices of method,

thresholds, and criteria. Our new SSH-velocity hybrid detection approach has

the advantages of providing eddy structures with verified rotation properties,

3D visualization of the internal structure of physical properties, and rapid

efficient estimations of eddy footprints without calculating streamlines. Our

approach combines visualization of internal structure and tracking overall

movement to support the study of the transport mechanisms key to understanding

the interaction of nutrient distribution and ocean circulation. Our method is

applied to three different datasets to showcase the generality of its

application.

There are no more papers matching your filters at the moment.