Southwestern University of Finance and Economics

This survey paper provides a comprehensive review of Continual Reinforcement Learning (CRL), introducing a novel taxonomy that categorizes methods based on whether they primarily store and transfer knowledge related to policies, experiences, environment dynamics, or reward signals. It identifies the unique challenges of CRL, such as the stability-plasticity-scalability dilemma, and outlines future research directions and applications across various domains.

Open-set perception in complex traffic environments poses a critical challenge for autonomous driving systems, particularly in identifying previously unseen object categories, which is vital for ensuring safety. Visual Language Models (VLMs), with their rich world knowledge and strong semantic reasoning capabilities, offer new possibilities for addressing this task. However, existing approaches typically leverage VLMs to extract visual features and couple them with traditional object detectors, resulting in multi-stage error propagation that hinders perception accuracy. To overcome this limitation, we propose VLM-3D, the first end-to-end framework that enables VLMs to perform 3D geometric perception in autonomous driving scenarios. VLM-3D incorporates Low-Rank Adaptation (LoRA) to efficiently adapt VLMs to driving tasks with minimal computational overhead, and introduces a joint semantic-geometric loss design: token-level semantic loss is applied during early training to ensure stable convergence, while 3D IoU loss is introduced in later stages to refine the accuracy of 3D bounding box predictions. Evaluations on the nuScenes dataset demonstrate that the proposed joint semantic-geometric loss in VLM-3D leads to a 12.8% improvement in perception accuracy, fully validating the effectiveness and advancement of our method.

11 Jun 2025

In many complex scenarios, robotic manipulation relies on generative models

to estimate the distribution of multiple successful actions. As the diffusion

model has better training robustness than other generative models, it performs

well in imitation learning through successful robot demonstrations. However,

the diffusion-based policy methods typically require significant time to

iteratively denoise robot actions, which hinders real-time responses in robotic

manipulation. Moreover, existing diffusion policies model a time-varying action

denoising process, whose temporal complexity increases the difficulty of model

training and leads to suboptimal action accuracy. To generate robot actions

efficiently and accurately, we present the Time-Unified Diffusion Policy

(TUDP), which utilizes action recognition capabilities to build a time-unified

denoising process. On the one hand, we build a time-unified velocity field in

action space with additional action discrimination information. By unifying all

timesteps of action denoising, our velocity field reduces the difficulty of

policy learning and speeds up action generation. On the other hand, we propose

an action-wise training method, which introduces an action discrimination

branch to supply additional action discrimination information. Through

action-wise training, the TUDP implicitly learns the ability to discern

successful actions to better denoising accuracy. Our method achieves

state-of-the-art performance on RLBench with the highest success rate of 82.6%

on a multi-view setup and 83.8% on a single-view setup. In particular, when

using fewer denoising iterations, TUDP achieves a more significant improvement

in success rate. Additionally, TUDP can produce accurate actions for a wide

range of real-world tasks.

Automatic Text Summarization (ATS), utilizing Natural Language Processing (NLP) algorithms, aims to create concise and accurate summaries, thereby significantly reducing the human effort required in processing large volumes of text. ATS has drawn considerable interest in both academic and industrial circles. Many studies have been conducted in the past to survey ATS methods; however, they generally lack practicality for real-world implementations, as they often categorize previous methods from a theoretical standpoint. Moreover, the advent of Large Language Models (LLMs) has altered conventional ATS methods. In this survey, we aim to 1) provide a comprehensive overview of ATS from a ``Process-Oriented Schema'' perspective, which is best aligned with real-world implementations; 2) comprehensively review the latest LLM-based ATS works; and 3) deliver an up-to-date survey of ATS, bridging the two-year gap in the literature. To the best of our knowledge, this is the first survey to specifically investigate LLM-based ATS methods.

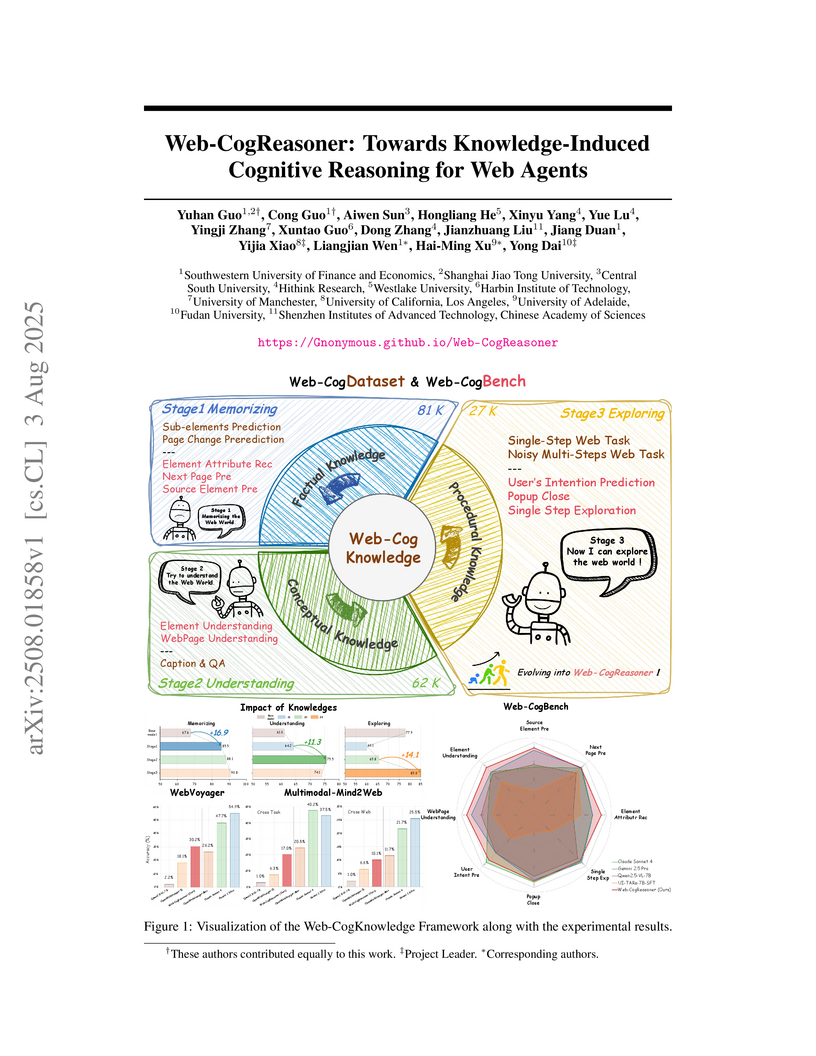

03 Aug 2025

A framework called Web-CogReasoner enables web agents to learn and apply factual, conceptual, and procedural knowledge through a human-inspired curriculum. This structured approach, using the Qwen2.5-VL-7B LMM, leads to an 84.4% accuracy on cognitive reasoning tasks and establishes a new state-of-the-art for open-source agents on the WebVoyager benchmark with a 30.2% success rate.

X-Humanoid Chinese Academy of SciencesUniversity of Electronic Science and Technology of ChinaSouthwestern University of Finance and EconomicsShanghai Academy of AI for ScienceEngineering Research Center of Intelligent Finance, Ministry of EducationArtificial Intelligence Innovation and Incubation Institute, Fudan University

Chinese Academy of SciencesUniversity of Electronic Science and Technology of ChinaSouthwestern University of Finance and EconomicsShanghai Academy of AI for ScienceEngineering Research Center of Intelligent Finance, Ministry of EducationArtificial Intelligence Innovation and Incubation Institute, Fudan University

Chinese Academy of SciencesUniversity of Electronic Science and Technology of ChinaSouthwestern University of Finance and EconomicsShanghai Academy of AI for ScienceEngineering Research Center of Intelligent Finance, Ministry of EducationArtificial Intelligence Innovation and Incubation Institute, Fudan University

Chinese Academy of SciencesUniversity of Electronic Science and Technology of ChinaSouthwestern University of Finance and EconomicsShanghai Academy of AI for ScienceEngineering Research Center of Intelligent Finance, Ministry of EducationArtificial Intelligence Innovation and Incubation Institute, Fudan UniversityIn multimodal representation learning, synergistic interactions between modalities not only provide complementary information but also create unique outcomes through specific interaction patterns that no single modality could achieve alone. Existing methods may struggle to effectively capture the full spectrum of synergistic information, leading to suboptimal performance in tasks where such interactions are critical. This is particularly problematic because synergistic information constitutes the fundamental value proposition of multimodal representation. To address this challenge, we introduce InfMasking, a contrastive synergistic information extraction method designed to enhance synergistic information through an Infinite Masking strategy. InfMasking stochastically occludes most features from each modality during fusion, preserving only partial information to create representations with varied synergistic patterns. Unmasked fused representations are then aligned with masked ones through mutual information maximization to encode comprehensive synergistic information. This infinite masking strategy enables capturing richer interactions by exposing the model to diverse partial modality combinations during training. As computing mutual information estimates with infinite masking is computationally prohibitive, we derive an InfMasking loss to approximate this calculation. Through controlled experiments, we demonstrate that InfMasking effectively enhances synergistic information between modalities. In evaluations on large-scale real-world datasets, InfMasking achieves state-of-the-art performance across seven benchmarks. Code is released at this https URL.

26 Jul 2025

We propose a new method for identifying and estimating the CP-factor models for matrix time series. Unlike the generalized eigenanalysis-based method of Chang et al. (2023) for which the convergence rates of the associated estimators may suffer from small eigengaps as the asymptotic theory is based on some matrix perturbation analysis, the proposed new method enjoys faster convergence rates which are free from any eigengaps. It achieves this by turning the problem into a joint diagonalization of several matrices whose elements are determined by a basis of a linear system, and by choosing the basis carefully to avoid near co-linearity (see Proposition 5 and Section 4.3). Furthermore, unlike Chang et al. (2023) which requires the two factor loading matrices to be full-ranked, the proposed new method can handle rank-deficient factor loading matrices. Illustration with both simulated and real matrix time series data shows the advantages of the proposed new method.

Forecasting the trend of stock prices is an enduring topic at the intersection of finance and computer science. Periodical updates to forecasters have proven effective in handling concept drifts arising from non-stationary markets. However, the existing methods neglect either emerging patterns in recent data or recurring patterns in historical data, both of which are empirically advantageous for future forecasting. To address this issue, we propose meta-learning with dynamic adaptation (MetaDA) for the incremental learning of stock trends, which periodically performs dynamic model adaptation utilizing the emerging and recurring patterns simultaneously. We initially organize the stock trend forecasting into meta-learning tasks and train a forecasting model following meta-learning protocols. During model adaptation, MetaDA efficiently adapts the forecasting model with the latest data and a selected portion of historical data, which is dynamically identified by a task inference module. The task inference module first extracts task-level embeddings from the historical tasks, and then identifies the informative data with a task inference network. MetaDA has been evaluated on real-world stock datasets, achieving state-of-the-art performance with satisfactory efficiency.

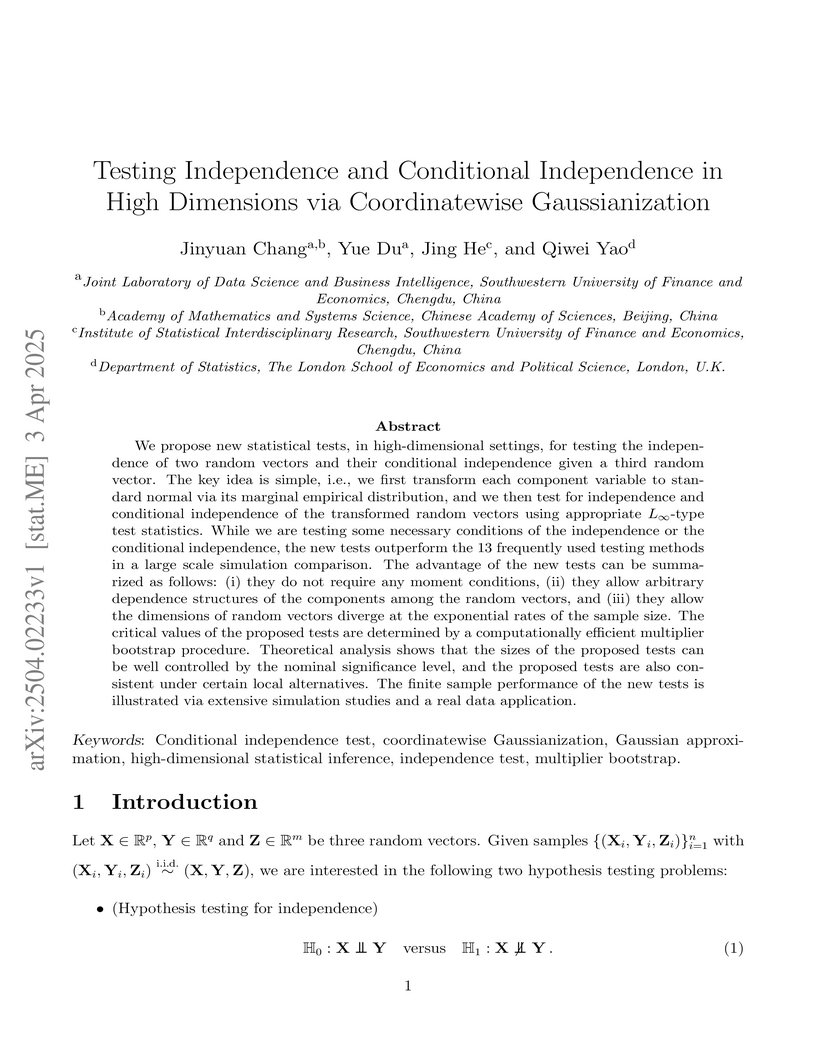

03 Apr 2025

We propose new statistical tests, in high-dimensional settings, for testing

the independence of two random vectors and their conditional independence given

a third random vector. The key idea is simple, i.e., we first transform each

component variable to standard normal via its marginal empirical distribution,

and we then test for independence and conditional independence of the

transformed random vectors using appropriate L∞-type test statistics.

While we are testing some necessary conditions of the independence or the

conditional independence, the new tests outperform the 13 frequently used

testing methods in a large scale simulation comparison. The advantage of the

new tests can be summarized as follows: (i) they do not require any moment

conditions, (ii) they allow arbitrary dependence structures of the components

among the random vectors, and (iii) they allow the dimensions of random vectors

diverge at the exponential rates of the sample size. The critical values of the

proposed tests are determined by a computationally efficient multiplier

bootstrap procedure. Theoretical analysis shows that the sizes of the proposed

tests can be well controlled by the nominal significance level, and the

proposed tests are also consistent under certain local alternatives. The finite

sample performance of the new tests is illustrated via extensive simulation

studies and a real data application.

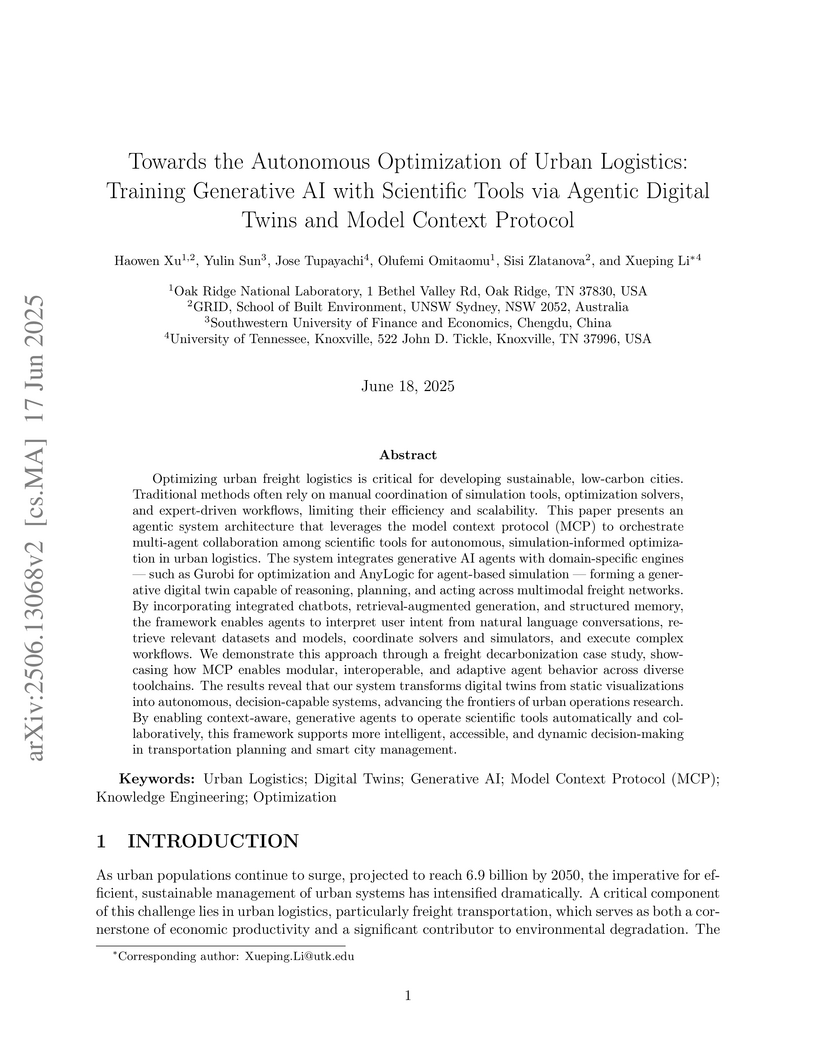

17 Jun 2025

The research from Oak Ridge National Laboratory, University of Tennessee, and UNSW Sydney introduces an agentic system that transforms urban digital twins into autonomous cognitive platforms for optimizing urban freight logistics. It leverages generative AI, multi-agent systems, and a Model Context Protocol to orchestrate scientific tools, achieving optimal intermodal delivery plans for 250 containers in 0.06 seconds and completing the full workflow in under 15 seconds.

Despite their success, Large Vision-Language Models (LVLMs) remain vulnerable

to hallucinations. While existing studies attribute the cause of hallucinations

to insufficient visual attention to image tokens, our findings indicate that

hallucinations also arise from interference from instruction tokens during

decoding. Intuitively, certain instruction tokens continuously distort LVLMs'

visual perception during decoding, hijacking their visual attention toward less

discriminative visual regions. This distortion prevents them integrating

broader contextual information from images, ultimately leading to

hallucinations. We term this phenomenon 'Attention Hijacking', where disruptive

instruction tokens act as 'Attention Hijackers'. To address this, we propose a

novel, training-free strategy namely Attention HIjackers Detection and

Disentanglement (AID), designed to isolate the influence of Hijackers, enabling

LVLMs to rely on their context-aware intrinsic attention map. Specifically, AID

consists of three components: First, Attention Hijackers Detection identifies

Attention Hijackers by calculating instruction-driven visual salience. Next,

Attention Disentanglement mechanism is proposed to mask the visual attention of

these identified Hijackers, and thereby mitigate their disruptive influence on

subsequent tokens. Finally, Re-Disentanglement recalculates the balance between

instruction-driven and image-driven visual salience to avoid over-masking

effects. Extensive experiments demonstrate that AID significantly reduces

hallucination across various LVLMs on several benchmarks.

By incorporating visual inputs, Multimodal Large Language Models (MLLMs) extend LLMs to support visual reasoning. However, this integration also introduces new vulnerabilities, making MLLMs susceptible to multimodal jailbreak attacks and hindering their safe this http URL defense methods, including Image-to-Text Translation, Safe Prompting, and Multimodal Safety Tuning, attempt to address this by aligning multimodal inputs with LLMs' built-in this http URL, they fall short in uncovering root causes of multimodal vulnerabilities, particularly how harmful multimodal tokens trigger jailbreak in MLLMs? Consequently, they remain vulnerable to text-driven multimodal jailbreaks, often exhibiting overdefensive behaviors and imposing heavy training this http URL bridge this gap, we present an comprehensive analysis of where, how and which harmful multimodal tokens bypass safeguards in MLLMs. Surprisingly, we find that less than 1% tokens in early-middle layers are responsible for inducing unsafe behaviors, highlighting the potential of precisely removing a small subset of harmful tokens, without requiring safety tuning, can still effectively improve safety against jailbreaks. Motivated by this, we propose Safe Prune-then-Restore (SafePTR), an training-free defense framework that selectively prunes harmful tokens at vulnerable layers while restoring benign features at subsequent this http URL incurring additional computational overhead, SafePTR significantly enhances the safety of MLLMs while preserving efficiency. Extensive evaluations across three MLLMs and five benchmarks demonstrate SafePTR's state-of-the-art performance in mitigating jailbreak risks without compromising utility.

Continual reinforcement learning (CRL) empowers RL agents with the ability to learn a sequence of tasks, accumulating knowledge learned in the past and using the knowledge for problemsolving or future task learning. However, existing methods often focus on transferring fine-grained knowledge across similar tasks, which neglects the multi-granularity structure of human cognitive control, resulting in insufficient knowledge transfer across diverse tasks. To enhance coarse-grained knowledge transfer, we propose a novel framework called MT-Core (as shorthand for Multi-granularity knowledge Transfer for Continual reinforcement learning). MT-Core has a key characteristic of multi-granularity policy learning: 1) a coarsegrained policy formulation for utilizing the powerful reasoning ability of the large language model (LLM) to set goals, and 2) a fine-grained policy learning through RL which is oriented by the goals. We also construct a new policy library (knowledge base) to store policies that can be retrieved for multi-granularity knowledge transfer. Experimental results demonstrate the superiority of the proposed MT-Core in handling diverse CRL tasks versus popular baselines.

This paper introduces Provable Diffusion Posterior Sampling (PDPS), a method for Bayesian inverse problems that integrates pre-trained diffusion models as data-driven priors. The approach offers the first non-asymptotic error bounds for diffusion-based posterior score estimation and demonstrates superior performance with reliable uncertainty quantification across various imaging tasks.

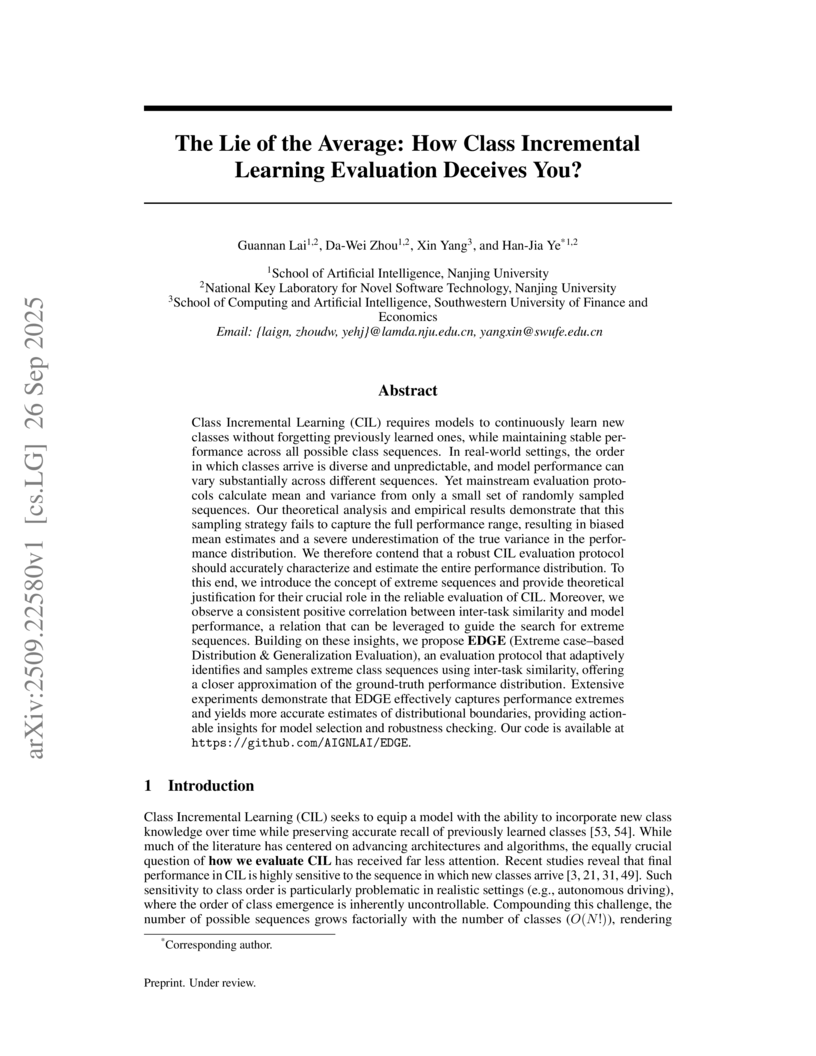

26 Sep 2025

Class Incremental Learning (CIL) requires models to continuously learn new classes without forgetting previously learned ones, while maintaining stable performance across all possible class sequences. In real-world settings, the order in which classes arrive is diverse and unpredictable, and model performance can vary substantially across different sequences. Yet mainstream evaluation protocols calculate mean and variance from only a small set of randomly sampled sequences. Our theoretical analysis and empirical results demonstrate that this sampling strategy fails to capture the full performance range, resulting in biased mean estimates and a severe underestimation of the true variance in the performance distribution. We therefore contend that a robust CIL evaluation protocol should accurately characterize and estimate the entire performance distribution. To this end, we introduce the concept of extreme sequences and provide theoretical justification for their crucial role in the reliable evaluation of CIL. Moreover, we observe a consistent positive correlation between inter-task similarity and model performance, a relation that can be leveraged to guide the search for extreme sequences. Building on these insights, we propose EDGE (Extreme case-based Distribution and Generalization Evaluation), an evaluation protocol that adaptively identifies and samples extreme class sequences using inter-task similarity, offering a closer approximation of the ground-truth performance distribution. Extensive experiments demonstrate that EDGE effectively captures performance extremes and yields more accurate estimates of distributional boundaries, providing actionable insights for model selection and robustness checking. Our code is available at this https URL.

03 Jan 2024

The recent breakthroughs in large language models (LLMs) are positioned to

transition many areas of software. Database technologies particularly have an

important entanglement with LLMs as efficient and intuitive database

interactions are paramount. In this paper, we present DB-GPT, a revolutionary

and production-ready project that integrates LLMs with traditional database

systems to enhance user experience and accessibility. DB-GPT is designed to

understand natural language queries, provide context-aware responses, and

generate complex SQL queries with high accuracy, making it an indispensable

tool for users ranging from novice to expert. The core innovation in DB-GPT

lies in its private LLM technology, which is fine-tuned on domain-specific

corpora to maintain user privacy and ensure data security while offering the

benefits of state-of-the-art LLMs. We detail the architecture of DB-GPT, which

includes a novel retrieval augmented generation (RAG) knowledge system, an

adaptive learning mechanism to continuously improve performance based on user

feedback and a service-oriented multi-model framework (SMMF) with powerful

data-driven agents. Our extensive experiments and user studies confirm that

DB-GPT represents a paradigm shift in database interactions, offering a more

natural, efficient, and secure way to engage with data repositories. The paper

concludes with a discussion of the implications of DB-GPT framework on the

future of human-database interaction and outlines potential avenues for further

enhancements and applications in the field. The project code is available at

https://github.com/eosphoros-ai/DB-GPT. Experience DB-GPT for yourself by

installing it with the instructions

https://github.com/eosphoros-ai/DB-GPT#install and view a concise 10-minute

video at https://www.youtube.com/watch?v=KYs4nTDzEhk.

A comprehensive survey details the theoretical underpinnings of diffusion models and their diverse applications across Natural Language Processing tasks. The analysis categorizes applications such as text generation, text-to-image synthesis, and text-to-speech, quantitatively showing that text-driven image generation is the most impactful area, with a mean citation of about 147 compared to ~15 for pure text generation.

Quantization and fine-tuning are crucial for deploying large language models (LLMs) on resource-constrained edge devices. However, fine-tuning quantized models presents significant challenges, primarily stemming from: First, the mismatch in data types between the low-precision quantized weights (e.g., 4-bit) and the high-precision adaptation weights (e.g., 16-bit). This mismatch limits the computational efficiency advantage offered by quantized weights during inference. Second, potential accuracy degradation when merging these high-precision adaptation weights into the low-precision quantized weights, as the adaptation weights often necessitate approximation or truncation. Third, as far as we know, no existing methods support the lossless merging of adaptation while adjusting all quantized weights. To address these challenges, we introduce lossless ternary adaptation for quantization-aware fine-tuning (LoTA-QAF). This is a novel fine-tuning method specifically designed for quantized LLMs, enabling the lossless merging of ternary adaptation weights into quantized weights and the adjustment of all quantized weights. LoTA-QAF operates through a combination of: i) A custom-designed ternary adaptation (TA) that aligns ternary weights with the quantization grid and uses these ternary weights to adjust quantized weights. ii) A TA-based mechanism that enables the lossless merging of adaptation weights. iii) Ternary signed gradient descent (t-SignSGD) for updating the TA weights. We apply LoTA-QAF to Llama-3.1/3.3 and Qwen-2.5 model families and validate its effectiveness on several downstream tasks. On the MMLU benchmark, our method effectively recovers performance for quantized models, surpassing 16-bit LoRA by up to 5.14\%. For task-specific fine-tuning, 16-bit LoRA achieves superior results, but LoTA-QAF still outperforms other methods. Code: this http URL.

Accurately forecasting the impact of macroeconomic events is critical for investors and policymakers. Salient events like monetary policy decisions and employment reports often trigger market movements by shaping expectations of economic growth and risk, thereby establishing causal relationships between events and market behavior. Existing forecasting methods typically focus either on textual analysis or time-series modeling, but fail to capture the multi-modal nature of financial markets and the causal relationship between events and price movements. To address these gaps, we propose CAMEF (Causal-Augmented Multi-Modality Event-Driven Financial Forecasting), a multi-modality framework that effectively integrates textual and time-series data with a causal learning mechanism and an LLM-based counterfactual event augmentation technique for causal-enhanced financial forecasting. Our contributions include: (1) a multi-modal framework that captures causal relationships between policy texts and historical price data; (2) a new financial dataset with six types of macroeconomic releases from 2008 to April 2024, and high-frequency real trading data for five key U.S. financial assets; and (3) an LLM-based counterfactual event augmentation strategy. We compare CAMEF to state-of-the-art transformer-based time-series and multi-modal baselines, and perform ablation studies to validate the effectiveness of the causal learning mechanism and event types.

07 Mar 2025

Economic and financial models -- such as vector autoregressions, local

projections, and multivariate volatility models -- feature complex dynamic

interactions and spillovers across many time series. These models can be

integrated into a unified framework, with high-dimensional parameters

identified by moment conditions. As the number of parameters and moment

conditions may surpass the sample size, we propose adding a double penalty to

the empirical likelihood criterion to induce sparsity and facilitate dimension

reduction. Notably, we utilize a marginal empirical likelihood approach despite

temporal dependence in the data. Under regularity conditions, we provide

asymptotic guarantees for our method, making it an attractive option for

estimating large-scale multivariate time series models. We demonstrate the

versatility of our procedure through extensive Monte Carlo simulations and

three empirical applications, including analyses of US sectoral inflation

rates, fiscal multipliers, and volatility spillover in China's banking sector.

There are no more papers matching your filters at the moment.