Thapar Institute of Engineering and Technology

Reward-model training is the cost bottleneck in modern Reinforcement Learning

Human Feedback (RLHF) pipelines, often requiring tens of billions of parameters

and an offline preference-tuning phase. In the proposed method, a frozen,

instruction-tuned 7B LLM is augmented with only a one line JSON rubric and a

rank-16 LoRA adapter (affecting just 0.8% of the model's parameters), enabling

it to serve as a complete substitute for the previously used heavyweight

evaluation models. The plug-and-play judge achieves 96.2% accuracy on

RewardBench, outperforming specialized reward networks ranging from 27B to 70B

parameters. Additionally, it allows a 7B actor to outperform the top 70B DPO

baseline, which scores 61.8%, by achieving 92% exact match accuracy on GSM-8K

utilizing online PPO. Thorough ablations indicate that (i) six in context

demonstrations deliver the majority of the zero-to-few-shot improvements

(+2pp), and (ii) the LoRA effectively addresses the remaining disparity,

particularly in the safety and adversarial Chat-Hard segments. The proposed

model introduces HH-Rationales, a subset of 10,000 pairs from Anthropic

HH-RLHF, to examine interpretability, accompanied by human generated

justifications. GPT-4 scoring indicates that our LoRA judge attains

approximately = 9/10 in similarity to human explanations, while zero-shot

judges score around =5/10. These results indicate that the combination of

prompt engineering and tiny LoRA produces a cost effective, transparent, and

easily adjustable reward function, removing the offline phase while achieving

new state-of-the-art outcomes for both static evaluation and online RLHF.

Researchers from the University of Waterloo and Thapar Institute developed a real-time phone call fraud detection system leveraging Retrieval-Augmented Generation (RAG) based Large Language Models. This system achieved an accuracy of 97.98% and an F1-score of 97.44% on synthetic datasets for identifying fraudulent calls and impersonations, demonstrating adaptable policy compliance without requiring model retraining.

The challenges of solving complex university-level mathematics problems, particularly those from MIT, and Columbia University courses, and selected tasks from the MATH dataset, remain a significant obstacle in the field of artificial intelligence. Conventional methods have consistently fallen short in this domain, highlighting the need for more advanced approaches. In this paper, we introduce a language-based solution that leverages zero-shot learning and mathematical reasoning to effectively solve, explain, and generate solutions for these advanced math problems. By integrating program synthesis, our method reduces reliance on large-scale training data while significantly improving problem-solving accuracy. Our approach achieves an accuracy of 90.15%, representing a substantial improvement over the previous benchmark of 81% and setting a new standard in automated mathematical problem-solving. These findings highlight the significant potential of advanced AI methodologies to address and overcome the challenges presented by some of the most complex mathematical courses and datasets.

11 Dec 2024

SPACE-SUIT: An Artificial Intelligence based chromospheric feature extractor and classifier for SUIT

SPACE-SUIT: An Artificial Intelligence based chromospheric feature extractor and classifier for SUIT

Indian Association for the Cultivation of ScienceUniversity of DelhiTezpur UniversityManipal Academy of Higher EducationInter-University Centre for Astronomy and AstrophysicsBay Area Environmental Research InstituteThapar Institute of Engineering and TechnologyLockheed Martin Solar and Astrophysics LaboratoryRamakrishna Mission Residential College

The Solar Ultraviolet Imaging Telescope(SUIT) onboard Aditya-L1 is an imager that observes the solar photosphere and chromosphere through observations in the wavelength range of 200-400 nm. A comprehensive understanding of the plasma and thermodynamic properties of chromospheric and photospheric morphological structures requires a large sample statistical study, necessitating the development of automatic feature detection methods. To this end, we develop the feature detection algorithm SPACE-SUIT: Solar Phenomena Analysis and Classification using Enhanced vision techniques for SUIT, to detect and classify the solar chromospheric features to be observed from SUIT's Mg II k filter. Specifically, we target plage regions, sunspots, filaments, and off-limb structures. SPACE uses You Only Look Once(YOLO), a neural network-based model to identify regions of interest. We train and validate SPACE using mock-SUIT images developed from Interface Region Imaging Spectrometer(IRIS) full-disk mosaic images in Mg II k line, while we also perform detection on Level-1 SUIT data. SPACE achieves an approximate precision of 0.788, recall 0.863 and MAP of 0.874 on the validation mock SUIT FITS dataset. Given the manual labeling of our dataset, we perform "self-validation" by applying statistical measures and Tamura features on the ground truth and predicted bounding boxes. We find the distributions of entropy, contrast, dissimilarity, and energy to show differences in the features. These differences are qualitatively captured by the detected regions predicted by SPACE and validated with the observed SUIT images, even in the absence of labeled ground truth. This work not only develops a chromospheric feature extractor but also demonstrates the effectiveness of statistical metrics and Tamura features for distinguishing chromospheric features, offering independent validation for future detection schemes.

Armoured vehicles are specialized and complex pieces of machinery designed to operate in high-stress environments, often in combat or tactical situations. This study proposes a predictive maintenance-based ensemble system that aids in predicting potential maintenance needs based on sensor data collected from these vehicles. The proposed model's architecture involves various models such as Light Gradient Boosting, Random Forest, Decision Tree, Extra Tree Classifier and Gradient Boosting to predict the maintenance requirements of the vehicles accurately. In addition, K-fold cross validation, along with TOPSIS analysis, is employed to evaluate the proposed ensemble model's stability. The results indicate that the proposed system achieves an accuracy of 98.93%, precision of 99.80% and recall of 99.03%. The algorithm can effectively predict maintenance needs, thereby reducing vehicle downtime and improving operational efficiency. Through comparisons between various algorithms and the suggested ensemble, this study highlights the potential of machine learning-based predictive maintenance solutions.

Tic Tac Toe is amongst the most well-known games. It has already been shown that it is a biased game, giving more chances to win for the first player leaving only a draw or a loss as possibilities for the opponent, assuming both the players play optimally. Thus on average majority of the games played result in a draw. The majority of the latest research on how to solve a tic tac toe board state employs strategies such as Genetic Algorithms, Neural Networks, Co-Evolution, and Evolutionary Programming. But these approaches deal with a trivial board state of 3X3 and very little research has been done for a generalized algorithm to solve 4X4,5X5,6X6 and many higher states. Even though an algorithm exists which is Min-Max but it takes a lot of time in coming up with an ideal move due to its recursive nature of implementation. A Sample has been created on this link \url{this https URL} to prove this fact. This is the main problem that this study is aimed at solving i.e providing a generalized algorithm(Approximate method, Learning-Based) for higher board states of tic tac toe to make precise moves in a short period. Also, the code changes needed to accommodate higher board states will be nominal. The idea is to pose the tic tac toe game as a well-posed learning problem. The study and its results are promising, giving a high win to draw ratio with each epoch of training. This study could also be encouraging for other researchers to apply the same algorithm to other similar board games like Minesweeper, Chess, and GO for finding efficient strategies and comparing the results.

Foundation models (FMs) for computer vision learn rich and robust

representations, enabling their adaptation to task/domain-specific deployments

with little to no fine-tuning. However, we posit that the very same strength

can make applications based on FMs vulnerable to model stealing attacks.

Through empirical analysis, we reveal that models fine-tuned from FMs harbor

heightened susceptibility to model stealing, compared to conventional vision

architectures like ResNets. We hypothesize that this behavior is due to the

comprehensive encoding of visual patterns and features learned by FMs during

pre-training, which are accessible to both the attacker and the victim. We

report that an attacker is able to obtain 94.28% agreement (matched predictions

with victim) for a Vision Transformer based victim model (ViT-L/16) trained on

CIFAR-10 dataset, compared to only 73.20% agreement for a ResNet-18 victim,

when using ViT-L/16 as the thief model. We arguably show, for the first time,

that utilizing FMs for downstream tasks may not be the best choice for

deployment in commercial APIs due to their susceptibility to model theft. We

thereby alert model owners towards the associated security risks, and highlight

the need for robust security measures to safeguard such models against theft.

Code is available at this https URL

The classification of harmful brain activities, such as seizures and periodic discharges, play a vital role in neurocritical care, enabling timely diagnosis and intervention. Electroencephalography (EEG) provides a non-invasive method for monitoring brain activity, but the manual interpretation of EEG signals are time-consuming and rely heavily on expert judgment. This study presents a comparative analysis of deep learning architectures, including Convolutional Neural Networks (CNNs), Vision Transformers (ViTs), and EEGNet, applied to the classification of harmful brain activities using both raw EEG data and time-frequency representations generated through Continuous Wavelet Transform (CWT). We evaluate the performance of these models use multimodal data representations, including high-resolution spectrograms and waveform data, and introduce a multi-stage training strategy to improve model robustness. Our results show that training strategies, data preprocessing, and augmentation techniques are as critical to model success as architecture choice, with multi-stage TinyViT and EfficientNet demonstrating superior performance. The findings underscore the importance of robust training regimes in achieving accurate and efficient EEG classification, providing valuable insights for deploying AI models in clinical practice.

Urban autonomous driving is an open and challenging problem to solve as the decision-making system has to account for several dynamic factors like multi-agent interactions, diverse scene perceptions, complex road geometries, and other rarely occurring real-world events. On the other side, with deep reinforcement learning (DRL) techniques, agents have learned many complex policies. They have even achieved super-human-level performances in various Atari Games and Deepmind's AlphaGo. However, current DRL techniques do not generalize well on complex urban driving scenarios. This paper introduces the DRL driven Watch and Drive (WAD) agent for end-to-end urban autonomous driving. Motivated by recent advancements, the study aims to detect important objects/states in high dimensional spaces of CARLA and extract the latent state from them. Further, passing on the latent state information to WAD agents based on TD3 and SAC methods to learn the optimal driving policy. Our novel approach utilizing fewer resources, step-by-step learning of different driving tasks, hard episode termination policy, and reward mechanism has led our agents to achieve a 100% success rate on all driving tasks in the original CARLA benchmark and set a new record of 82% on further complex NoCrash benchmark, outperforming the state-of-the-art model by more than +30% on NoCrash benchmark.

Investors make investment decisions depending on several factors such as fundamental analysis, technical analysis, and quantitative analysis. Another factor on which investors can make investment decisions is through sentiment analysis of news headlines, the sole purpose of this study. Natural Language Processing techniques are typically used to deal with such a large amount of data and get valuable information out of it. NLP algorithms convert raw text into numerical representations that machines can easily understand and interpret. This conversion can be done using various embedding techniques. In this research, embedding techniques used are BoW, TF-IDF, Word2Vec, BERT, GloVe, and FastText, and then fed to deep learning models such as RNN and LSTM. This work aims to evaluate these model's performance to choose the robust model in identifying the significant factors influencing the prediction. During this research, it was expected that Deep Leaming would be applied to get the desired results or achieve better accuracy than the state-of-the-art. The models are compared to check their outputs to know which one has performed better.

The COVID-19 pandemic and the internet's availability have recently boosted

online learning. However, monitoring engagement in online learning is a

difficult task for teachers. In this context, timely automatic student

engagement classification can help teachers in making adaptive adjustments to

meet students' needs. This paper proposes EngageFormer, a transformer based

architecture with sequence pooling using video modality for engagement

classification. The proposed architecture computes three views from the input

video and processes them in parallel using transformer encoders; the global

encoder then processes the representation from each encoder, and finally, multi

layer perceptron (MLP) predicts the engagement level. A learning centered

affective state dataset is curated from existing open source databases. The

proposed method achieved an accuracy of 63.9%, 56.73%, 99.16%, 65.67%, and

74.89% on Dataset for Affective States in E-Environments (DAiSEE), Bahcesehir

University Multimodal Affective Database-1 (BAUM-1), Yawning Detection Dataset

(YawDD), University of Texas at Arlington Real-Life Drowsiness Dataset

(UTA-RLDD), and curated learning-centered affective state dataset respectively.

The achieved results on the BAUM-1, DAiSEE, and YawDD datasets demonstrate

state-of-the-art performance, indicating the superiority of the proposed model

in accurately classifying affective states on these datasets. Additionally, the

results obtained on the UTA-RLDD dataset, which involves two-class

classification, serve as a baseline for future research. These results provide

a foundation for further investigations and serve as a point of reference for

future works to compare and improve upon.

18 Jun 2024

Inter-University Center for Astronomy and AstrophysicsNational Institute of TechnologyInstitute of PhysicsIndian Institute of AstrophysicsNational Centre for Radio AstrophysicsCentral University of Himachal PradeshThapar Institute of Engineering and TechnologyMaulana Azad National Urdu UniversityHomi Bhabha Center for Science EducationAryabhatta Research Institute of observational-sciences

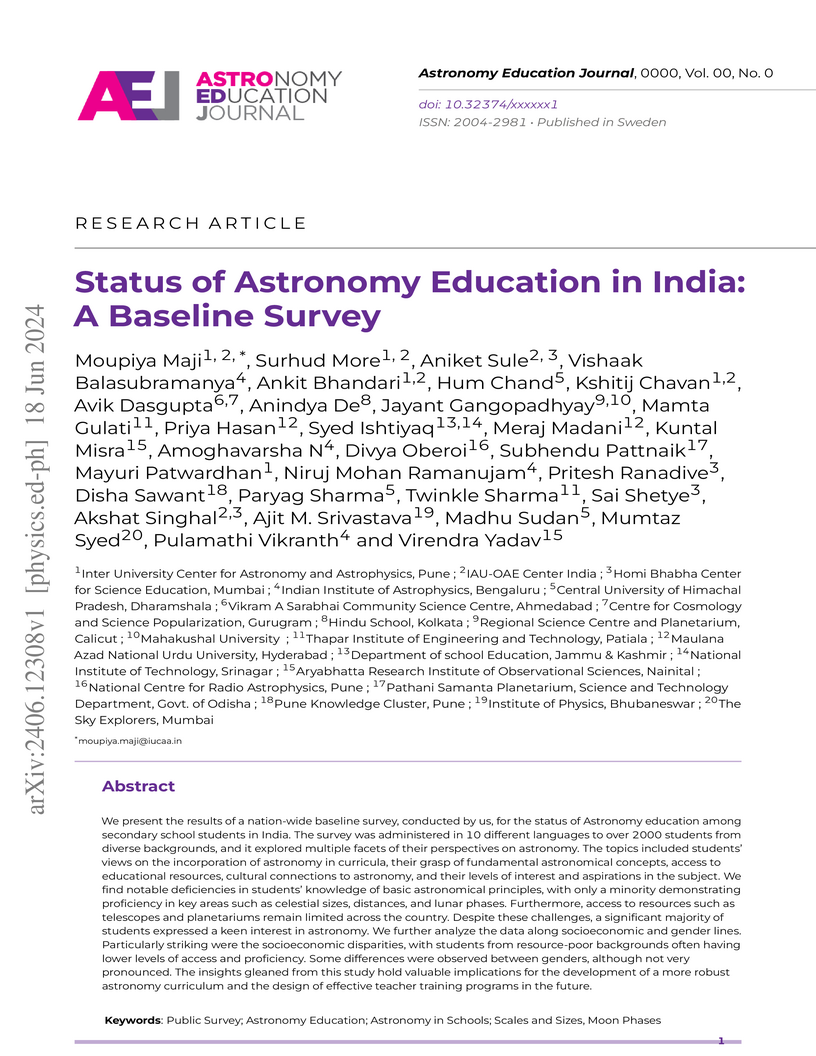

We present the results of a nation-wide baseline survey, conducted by us, for

the status of Astronomy education among secondary school students in India. The

survey was administered in 10 different languages to over 2000 students from

diverse backgrounds, and it explored multiple facets of their perspectives on

astronomy. The topics included students' views on the incorporation of

astronomy in curricula, their grasp of fundamental astronomical concepts,

access to educational resources, cultural connections to astronomy, and their

levels of interest and aspirations in the subject. We find notable deficiencies

in students' knowledge of basic astronomical principles, with only a minority

demonstrating proficiency in key areas such as celestial sizes, distances, and

lunar phases. Furthermore, access to resources such as telescopes and

planetariums remain limited across the country. Despite these challenges, a

significant majority of students expressed a keen interest in astronomy. We

further analyze the data along socioeconomic and gender lines. Particularly

striking were the socioeconomic disparities, with students from resource-poor

backgrounds often having lower levels of access and proficiency. Some

differences were observed between genders, although not very pronounced. The

insights gleaned from this study hold valuable implications for the development

of a more robust astronomy curriculum and the design of effective teacher

training programs in the future.

Electroencephalography (EEG)-based Brain-Computer Interfaces (BCIs) have emerged as a transformative technology with applications spanning robotics, virtual reality, medicine, and rehabilitation. However, existing BCI frameworks face several limitations, including a lack of stage-wise flexibility essential for experimental research, steep learning curves for researchers without programming expertise, elevated costs due to reliance on proprietary software, and a lack of all-inclusive features leading to the use of multiple external tools affecting research outcomes. To address these challenges, we present PyNoetic, a modular BCI framework designed to cater to the diverse needs of BCI research. PyNoetic is one of the very few frameworks in Python that encompasses the entire BCI design pipeline, from stimulus presentation and data acquisition to channel selection, filtering, feature extraction, artifact removal, and finally simulation and visualization. Notably, PyNoetic introduces an intuitive and end-to-end GUI coupled with a unique pick-and-place configurable flowchart for no-code BCI design, making it accessible to researchers with minimal programming experience. For advanced users, it facilitates the seamless integration of custom functionalities and novel algorithms with minimal coding, ensuring adaptability at each design stage. PyNoetic also includes a rich array of analytical tools such as machine learning models, brain-connectivity indices, systematic testing functionalities via simulation, and evaluation methods of novel paradigms. PyNoetic's strengths lie in its versatility for both offline and real-time BCI development, which streamlines the design process, allowing researchers to focus on more intricate aspects of BCI development and thus accelerate their research endeavors. Project Website: this https URL

The challenges of solving complex university-level mathematics problems, particularly those from MIT, and Columbia University courses, and selected tasks from the MATH dataset, remain a significant obstacle in the field of artificial intelligence. Conventional methods have consistently fallen short in this domain, highlighting the need for more advanced approaches. In this paper, we introduce a language-based solution that leverages zero-shot learning and mathematical reasoning to effectively solve, explain, and generate solutions for these advanced math problems. By integrating program synthesis, our method reduces reliance on large-scale training data while significantly improving problem-solving accuracy. Our approach achieves an accuracy of 90.15%, representing a substantial improvement over the previous benchmark of 81% and setting a new standard in automated mathematical problem-solving. These findings highlight the significant potential of advanced AI methodologies to address and overcome the challenges presented by some of the most complex mathematical courses and datasets.

Source camera model identification (SCMI) plays a pivotal role in image

forensics with applications including authenticity verification and copyright

protection. For identifying the camera model used to capture a given image, we

propose SPAIR-Swin, a novel model combining a modified spatial attention

mechanism and inverted residual block (SPAIR) with a Swin Transformer.

SPAIR-Swin effectively captures both global and local features, enabling robust

identification of artifacts such as noise patterns that are particularly

effective for SCMI. Additionally, unlike conventional methods focusing on

homogeneous patches, we propose a patch selection strategy for SCMI that

emphasizes high-entropy regions rich in patterns and textures. Extensive

evaluations on four benchmark SCMI datasets demonstrate that SPAIR-Swin

outperforms existing methods, achieving patch-level accuracies of 99.45%,

98.39%, 99.45%, and 97.46% and image-level accuracies of 99.87%, 99.32%, 100%,

and 98.61% on the Dresden, Vision, Forchheim, and Socrates datasets,

respectively. Our findings highlight that high-entropy patches, which contain

high-frequency information such as edge sharpness, noise, and compression

artifacts, are more favorable in improving SCMI accuracy. Code will be made

available upon request.

13 Mar 2023

In this new digital era, social media has created a severe impact on the

lives of people. In recent times, fake news content on social media has become

one of the major challenging problems for society. The dissemination of

fabricated and false news articles includes multimodal data in the form of text

and images. The previous methods have mainly focused on unimodal analysis.

Moreover, for multimodal analysis, researchers fail to keep the unique

characteristics corresponding to each modality. This paper aims to overcome

these limitations by proposing an Efficient Transformer based Multilevel

Attention (ETMA) framework for multimodal fake news detection, which comprises

the following components: visual attention-based encoder, textual

attention-based encoder, and joint attention-based learning. Each component

utilizes the different forms of attention mechanism and uniquely deals with

multimodal data to detect fraudulent content. The efficacy of the proposed

network is validated by conducting several experiments on four real-world fake

news datasets: Twitter, Jruvika Fake News Dataset, Pontes Fake News Dataset,

and Risdal Fake News Dataset using multiple evaluation metrics. The results

show that the proposed method outperforms the baseline methods on all four

datasets. Further, the computation time of the model is also lower than the

state-of-the-art methods.

11 Jun 2025

The magnetic octupole moment of JP=23+ decuplet baryons are

discussed in the statistical framework, treating baryons as an ensembles of

quark-gluon Fock states. The probabilities associated with multiple strange and

non-strange Fock states depict the importance of sea in spin, flavor & color

space, which are further merged into statistical parameters. The individual

contribution of valence and sea (scalar, vector and tensor) to the magnetic

octupole moment is calculated. The symmetry breaking in both sea and valence is

experienced by a suppression factor k(1−Cl)n−1 and a mass correction

parameter 'r', respectively. The factor k(1−Cl)n−1 systematically reduces

the probabilities of Fock states containing multiple strange quark pairs. The

octupole moment value is obtained -ve for Δ++,Δ+,Σ∗+

and +ve for Δ−,Σ∗−,Ξ∗−,Ω− baryons with the

domination of scalar (spin-0) sea. The computed results are compared with

existing theoretical predictions, demonstrating good consistency. These

predictions may serve as valuable inputs for future high-precision experiments

and theoretical explorations in hadron structure.

15 Feb 2025

This paper proposes a novel set of trigonometric implementations which are 5x

faster than the inbuilt C++ functions. The proposed implementation is also

highly memory efficient requiring no precomputations of any kind. Benchmark

comparisons are done versus inbuilt functions and an optimized taylor

implementation. Further, device usage estimates are also obtained, showing

significant hardware usage reduction compared to inbuilt functions. This

improvement could be particularly useful for low-end FPGAs or other

resource-constrained devices.

Quasiparticle interference has been used frequently for the purpose of

unraveling the electronic states in the vicinity of the Fermi level as well as

the nature of superconducting gap in the unconventional superconductors. Using

the metallic spin-density wave state of iron pnictides as an example, we

demonstrate that the quasiparticle interference can also be used as a probe to

provide crucial insight into the interplay of the electronic bandstructure and

correlation effects in addition to bringing forth the essential features of

electronic states in the vicinity of the Fermi level. Our study reveals that

the features of quasiparticle interference pattern can help us narrowing down

the interaction parameter window and choose a more realistic tight-binding

model.

In recent times, online education and the usage of video-conferencing platforms have experienced massive growth. Due to the limited scope of a virtual classroom, it may become difficult for instructors to analyze learners' attention and comprehension in real time while teaching. In the digital mode of education, it would be beneficial for instructors to have an automated feedback mechanism to be informed regarding learners' attentiveness at any given time. This research presents a novel computer vision-based approach to analyze and quantify learners' attentiveness, engagement, and other affective states within online learning scenarios. This work presents the development of a multiclass multioutput classification method using convolutional neural networks on a publicly available dataset - DAiSEE. A machine learning-based algorithm is developed on top of the classification model that outputs a comprehensive attentiveness index of the learners. Furthermore, an end-to-end pipeline is proposed through which learners' live video feed is processed, providing detailed attentiveness analytics of the learners to the instructors. By comparing the experimental outcomes of the proposed method against those of previous methods, it is demonstrated that the proposed method exhibits better attentiveness detection than state-of-the-art methods. The proposed system is a comprehensive, practical, and real-time solution that is deployable and easy to use. The experimental results also demonstrate the system's efficiency in gauging learners' attentiveness.

There are no more papers matching your filters at the moment.