UC Riverside

The paper scrutinizes the long-standing belief in unbounded Large Language Model (LLM) scaling, establishing a proof-informed framework that identifies intrinsic theoretical limits on their capabilities. It synthesizes empirical failures like hallucination and reasoning degradation with foundational concepts from computability theory, information theory, and statistical learning, showing that these issues are inherent rather than transient engineering challenges.

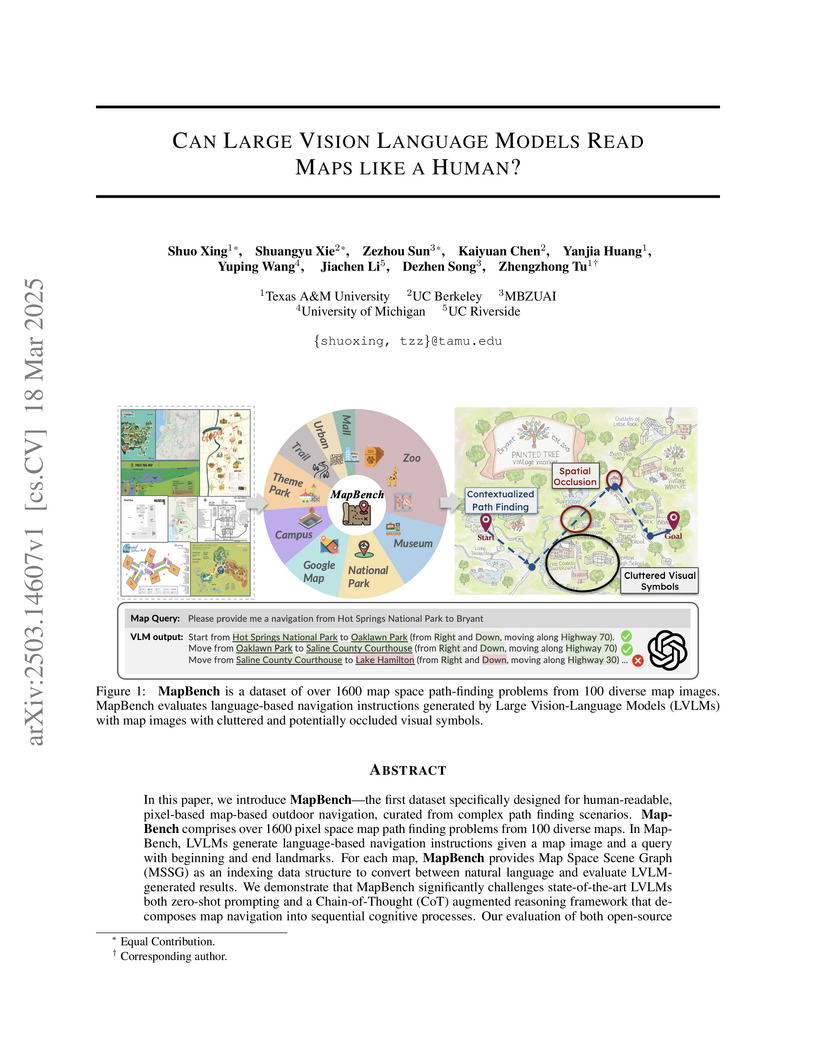

Researchers from Texas A&M University and collaborators introduce MapBench, a dataset of 100 diverse maps with 1649 path-finding queries, to evaluate how well Large Vision Language Models (LVLMs) can understand and navigate maps compared to humans, revealing significant limitations in spatial reasoning and route planning capabilities even with Chain-of-Thought prompting.

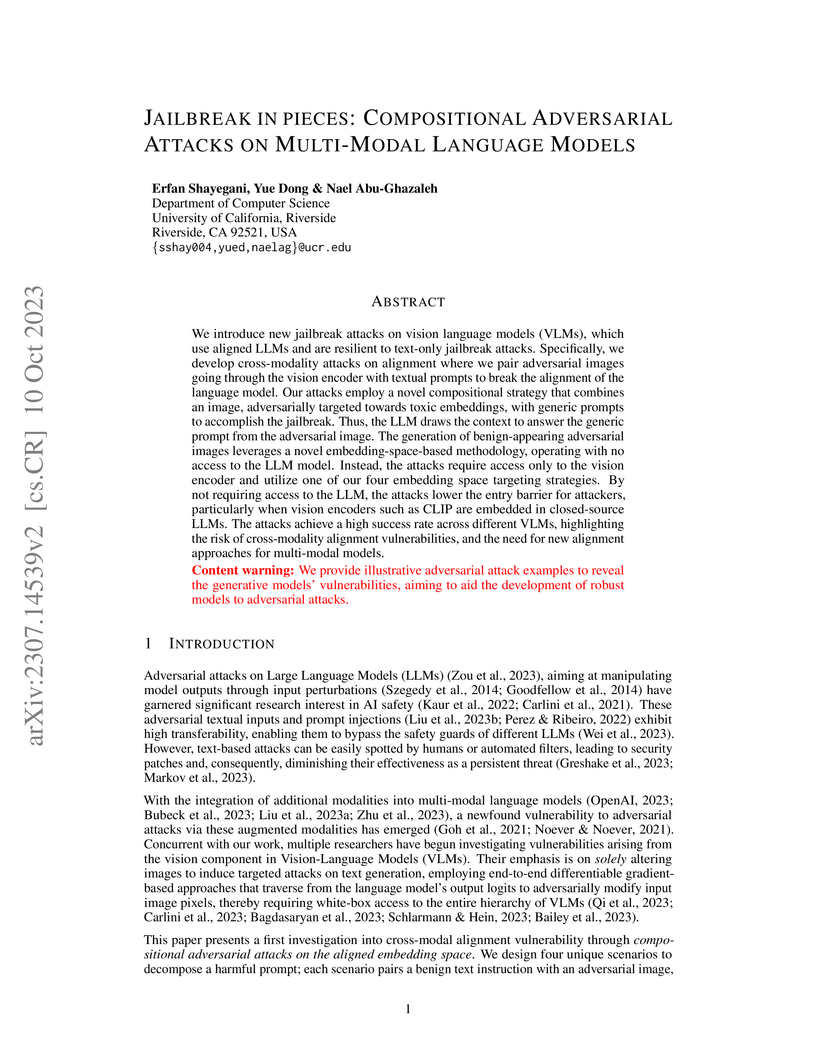

Researchers developed "Jailbreak in Pieces," a compositional cross-modal adversarial attack method for Vision-Language Models (VLMs) that manipulates image inputs in the aligned embedding space. This approach achieves high attack success rates, compelling VLMs to generate harmful content while requiring only black-box access to the language model component.

The growing carbon footprint of artificial intelligence (AI) has been

undergoing public scrutiny. Nonetheless, the equally important water

(withdrawal and consumption) footprint of AI has largely remained under the

radar. For example, training the GPT-3 language model in Microsoft's

state-of-the-art U.S. data centers can directly evaporate 700,000 liters of

clean freshwater, but such information has been kept a secret. More critically,

the global AI demand is projected to account for 4.2-6.6 billion cubic meters

of water withdrawal in 2027, which is more than the total annual water

withdrawal of 4-6 Denmark or half of the United Kingdom. This is concerning, as

freshwater scarcity has become one of the most pressing challenges. To respond

to the global water challenges, AI can, and also must, take social

responsibility and lead by example by addressing its own water footprint. In

this paper, we provide a principled methodology to estimate the water footprint

of AI, and also discuss the unique spatial-temporal diversities of AI's runtime

water efficiency. Finally, we highlight the necessity of holistically

addressing water footprint along with carbon footprint to enable truly

sustainable AI.

A framework combining large language models with evolutionary algorithms automates the design of trajectory prediction heuristics, producing computationally efficient models that achieve competitive accuracy with deep learning approaches while maintaining interpretability and demonstrating superior cross-dataset generalization on the Stanford Drone Dataset.

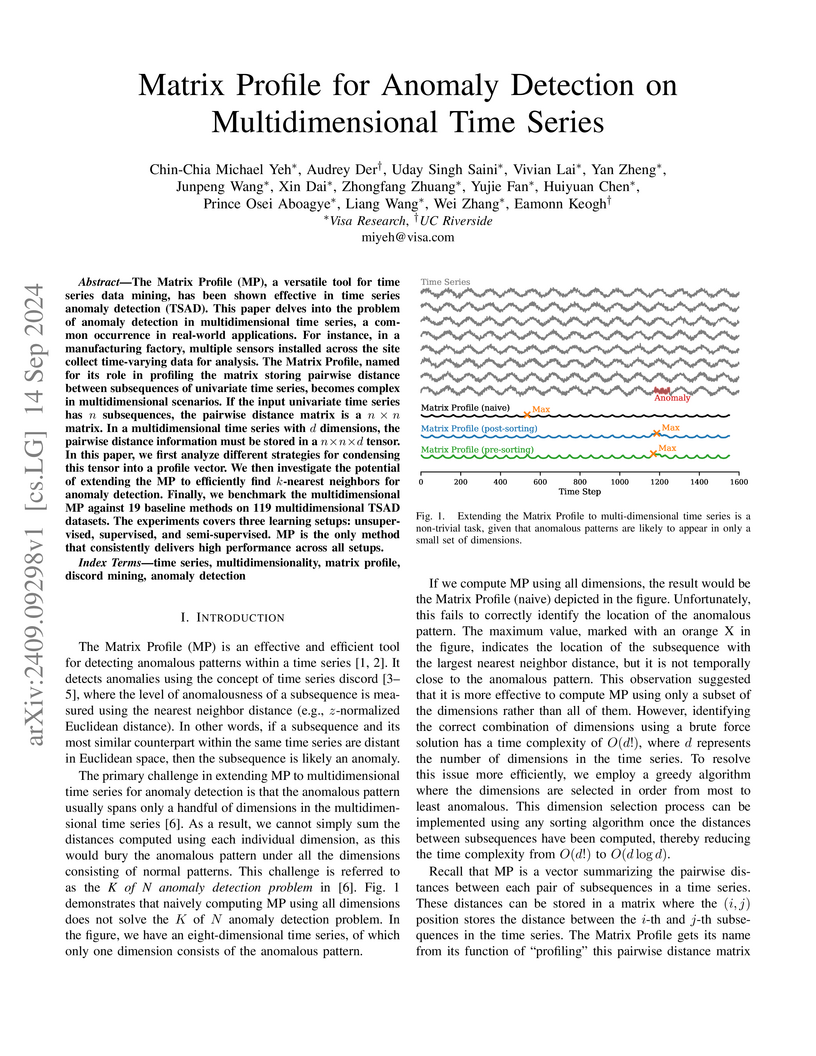

This paper extends the Matrix Profile framework to multidimensional time series for anomaly detection by introducing pre-sorting and post-sorting strategies to handle anomalies manifesting in subsets of dimensions. The approach demonstrates consistent high performance across unsupervised, supervised, and semi-supervised learning setups and maintains computational efficiency on large datasets.

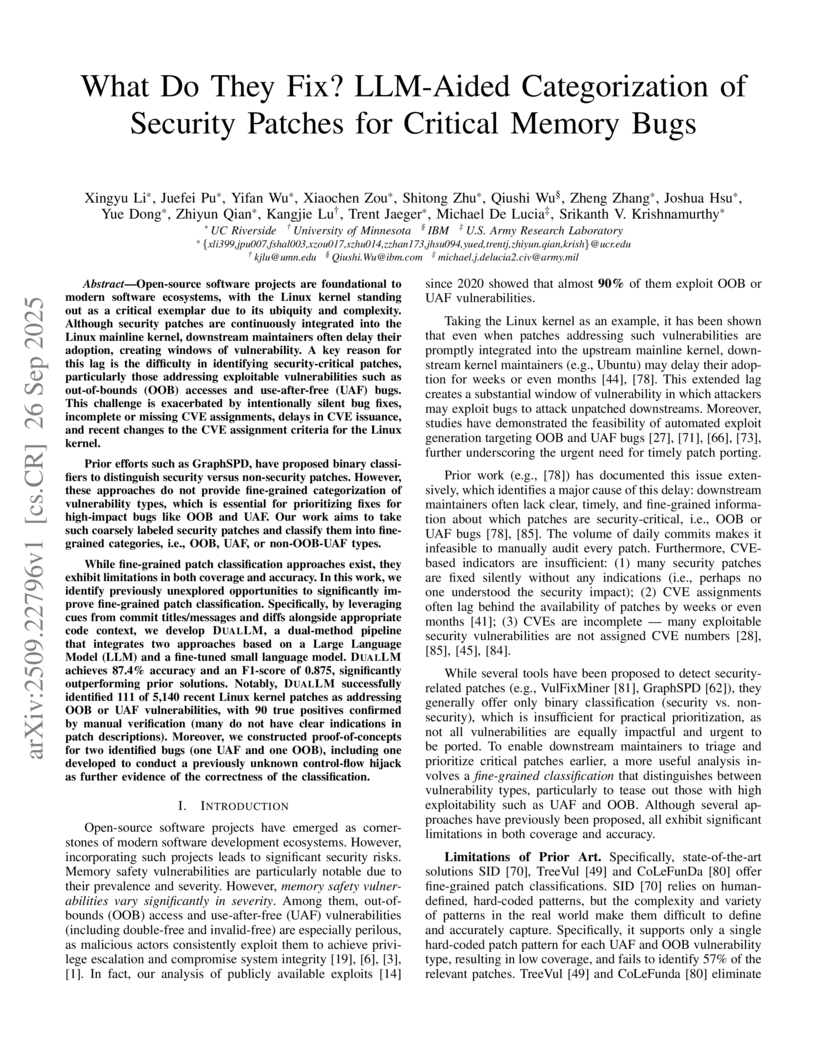

Open-source software projects are foundational to modern software ecosystems, with the Linux kernel standing out as a critical exemplar due to its ubiquity and complexity. Although security patches are continuously integrated into the Linux mainline kernel, downstream maintainers often delay their adoption, creating windows of vulnerability. A key reason for this lag is the difficulty in identifying security-critical patches, particularly those addressing exploitable vulnerabilities such as out-of-bounds (OOB) accesses and use-after-free (UAF) bugs. This challenge is exacerbated by intentionally silent bug fixes, incomplete or missing CVE assignments, delays in CVE issuance, and recent changes to the CVE assignment criteria for the Linux kernel. While fine-grained patch classification approaches exist, they exhibit limitations in both coverage and accuracy. In this work, we identify previously unexplored opportunities to significantly improve fine-grained patch classification. Specifically, by leveraging cues from commit titles/messages and diffs alongside appropriate code context, we develop DUALLM, a dual-method pipeline that integrates two approaches based on a Large Language Model (LLM) and a fine-tuned small language model. DUALLM achieves 87.4% accuracy and an F1-score of 0.875, significantly outperforming prior solutions. Notably, DUALLM successfully identified 111 of 5,140 recent Linux kernel patches as addressing OOB or UAF vulnerabilities, with 90 true positives confirmed by manual verification (many do not have clear indications in patch descriptions). Moreover, we constructed proof-of-concepts for two identified bugs (one UAF and one OOB), including one developed to conduct a previously unknown control-flow hijack as further evidence of the correctness of the classification.

BugLens, a framework developed at UC Riverside, Indiana University, and the University of Chicago, integrates Large Language Models into a structured workflow to refine static analysis results for vulnerability detection in complex codebases like the Linux kernel. The system increases precision by up to 7-fold and uncovered four previously unreported vulnerabilities by guiding LLMs through rigorous program analysis reasoning.

LLift, a framework from UC Riverside, integrates large language models with traditional static analysis to enhance use-before-initialization bug detection in large-scale software like the Linux kernel. This approach specifically addresses complex cases where conventional tools fail, successfully identifying 13 previously unknown UBI bugs in the Linux kernel and achieving 100% recall on known bugs.

The surging demand for AI has led to a rapid expansion of energy-intensive data centers, impacting the environment through escalating carbon emissions and water consumption. While significant attention has been paid to AI's growing environmental footprint, the public health burden, a hidden toll of AI, has been largely overlooked. Specifically, AI's lifecycle, from chip manufacturing to data center operation, significantly degrades air quality through emissions of criteria air pollutants such as fine particulate matter, substantially impacting public health. This paper introduces a methodology to model pollutant emissions across AI's lifecycle, quantifying the public health impacts. Our findings reveal that training an AI model of the Llama3.1 scale can produce air pollutants equivalent to more than 10,000 round trips by car between Los Angeles and New York City. The total public health burden of U.S. data centers in 2030 is valued at up to more than $20 billion per year, double that of U.S. coal-based steelmaking and comparable to that of on-road emissions of California. Further, the public health costs unevenly impact economically disadvantaged communities, where the per-household health burden could be 200x more than that in less-impacted communities. We recommend adopting a standard reporting protocol for criteria air pollutants and the public health costs of AI, paying attention to all impacted communities, and implementing health-informed AI to mitigate adverse effects while promoting public health equity.

STAMP (Scalable Task- and Model-Agnostic Collaborative Perception) presents a framework for robust multi-agent collaboration in autonomous driving by enabling heterogeneous agents to share information efficiently and securely. This approach achieves scalable and task-agnostic fusion, demonstrating superior performance and efficiency on both simulated and real-world datasets for tasks like 3D object detection and BEV segmentation.

A framework for domain-adaptive semantic segmentation is introduced, leveraging weak labels from the target domain to guide image-level classification and category-wise adversarial feature alignment. The method achieves state-of-the-art performance in Unsupervised Domain Adaptation (UDA) and significantly boosts performance in the newly proposed Weakly-supervised Domain Adaptation (WDA) setting, effectively bridging the gap between UDA and fully-supervised segmentation with minimal annotation cost.

This paper provides a theoretical analysis of prompt-tuning, demonstrating that its attention mechanism is more expressive than self-attention in certain contexts by selectively extracting relevant information via the softmax non-linearity. It establishes optimization and generalization guarantees, showing prompt-attention can achieve low test error rates and sometimes outperform fine-tuning in data-limited scenarios.

The rapid growth of digital and AI-generated images has amplified the need for secure and verifiable methods of image attribution. While digital watermarking offers more robust protection than metadata-based approaches--which can be easily stripped--current watermarking techniques remain vulnerable to forgery, creating risks of misattribution that can damage the reputations of AI model developers and the rights of digital artists. These vulnerabilities arise from two key issues: (1) content-agnostic watermarks, which, once learned or leaked, can be transferred across images to fake attribution, and (2) reliance on detector-based verification, which is unreliable since detectors can be tricked. We present MetaSeal, a novel framework for content-dependent watermarking with cryptographic security guarantees to safeguard image attribution. Our design provides (1) forgery resistance, preventing unauthorized replication and enforcing cryptographic verification; (2) robust, self-contained protection, embedding attribution directly into images while maintaining resilience against benign transformations; and (3) evidence of tampering, making malicious alterations visually detectable. Experiments demonstrate that MetaSeal effectively mitigates forgery attempts and applies to both natural and AI-generated images, establishing a new standard for secure image attribution.

Processing graphs with temporal information (the temporal graphs) has become increasingly important in the real world. In this paper, we study efficient solutions to temporal graph applications using new algorithms for Incremental Minimum Spanning Trees (MST). The first contribution of this work is to formally discuss how a broad set of setting-problem combinations of temporal graph processing can be solved using incremental MST, along with their theoretical guarantees. Despite the importance of the problem, we observe a gap between theory and practice for efficient incremental MST algorithms. While many classic data structures, such as the link-cut tree, provide strong bounds for incremental MST, their performance is limited in practice. Meanwhile, existing practical solutions used in applications do not have any non-trivial theoretical guarantees. Our second and main contribution includes new algorithms for incremental MST that are efficient both in theory and in practice. Our new data structure, the AM-tree, achieves the same theoretical bound as the link-cut tree for temporal graph processing and shows strong performance in practice. In our experiments, the AM-tree has competitive or better performance than existing practical solutions due to theoretical guarantees, and can be significantly faster than the link-cut tree (7.8-11x in updates and 7.7-13.7x in queries).

This paper studies decentralized online convex optimization in a networked

multi-agent system and proposes a novel algorithm, Learning-Augmented

Decentralized Online optimization (LADO), for individual agents to select

actions only based on local online information. LADO leverages a baseline

policy to safeguard online actions for worst-case robustness guarantees, while

staying close to the machine learning (ML) policy for average performance

improvement. In stark contrast with the existing learning-augmented online

algorithms that focus on centralized settings, LADO achieves strong robustness

guarantees in a decentralized setting. We also prove the average cost bound for

LADO, revealing the tradeoff between average performance and worst-case

robustness and demonstrating the advantage of training the ML policy by

explicitly considering the robustness requirement.

Rapid advancements in GPU computational power has outpaced memory capacity and bandwidth growth, creating bottlenecks in Large Language Model (LLM) inference. Post-training quantization is the leading method for addressing memory-related bottlenecks in LLM inference, but it suffers from significant performance degradation below 4-bit precision. This paper addresses these challenges by investigating the pretraining of low-bitwidth models specifically Ternary Language Models (TriLMs) as an alternative to traditional floating-point models (FloatLMs) and their post-training quantized versions (QuantLMs). We present Spectra LLM suite, the first open suite of LLMs spanning multiple bit-widths, including FloatLMs, QuantLMs, and TriLMs, ranging from 99M to 3.9B parameters trained on 300B tokens. Our comprehensive evaluation demonstrates that TriLMs offer superior scaling behavior in terms of model size (in bits). Surprisingly, at scales exceeding one billion parameters, TriLMs consistently outperform their QuantLM and FloatLM counterparts for a given bit size across various benchmarks. Notably, the 3.9B parameter TriLM matches the performance of the FloatLM 3.9B across all benchmarks, despite having fewer bits than FloatLM 830M. Overall, this research provides valuable insights into the feasibility and scalability of low-bitwidth language models, paving the way for the development of more efficient LLMs.

To enhance understanding of low-bitwidth models, we are releasing 500+ intermediate checkpoints of the Spectra suite at this https URL.

The paper introduces Bileve, a bi-level signature scheme designed to secure text provenance in Large Language Models against spoofing attacks. It achieves reliable attribution, tamper-evidence, and unforgeability by combining fine-grained cryptographic signatures with robust coarse-grained statistical signals.

As a type of valuable intellectual property (IP), deep neural network (DNN)

models have been protected by techniques like watermarking. However, such

passive model protection cannot fully prevent model abuse. In this work, we

propose an active model IP protection scheme, namely NNSplitter, which actively

protects the model by splitting it into two parts: the obfuscated model that

performs poorly due to weight obfuscation, and the model secrets consisting of

the indexes and original values of the obfuscated weights, which can only be

accessed by authorized users with the support of the trusted execution

environment. Experimental results demonstrate the effectiveness of NNSplitter,

e.g., by only modifying 275 out of over 11 million (i.e., 0.002%) weights, the

accuracy of the obfuscated ResNet-18 model on CIFAR-10 can drop to 10%.

Moreover, NNSplitter is stealthy and resilient against norm clipping and

fine-tuning attacks, making it an appealing solution for DNN model protection.

The code is available at: this https URL

Approximate nearest-neighbor search (ANNS) algorithms are a key part of the modern deep learning stack due to enabling efficient similarity search over high-dimensional vector space representations (i.e., embeddings) of data. Among various ANNS algorithms, graph-based algorithms are known to achieve the best throughput-recall tradeoffs. Despite the large scale of modern ANNS datasets, existing parallel graph based implementations suffer from significant challenges to scale to large datasets due to heavy use of locks and other sequential bottlenecks, which 1) prevents them from efficiently scaling to a large number of processors, and 2) results in nondeterminism that is undesirable in certain applications.

In this paper, we introduce ParlayANN, a library of deterministic and parallel graph-based approximate nearest neighbor search algorithms, along with a set of useful tools for developing such algorithms. In this library, we develop novel parallel implementations for four state-of-the-art graph-based ANNS algorithms that scale to billion-scale datasets. Our algorithms are deterministic and achieve high scalability across a diverse set of challenging datasets. In addition to the new algorithmic ideas, we also conduct a detailed experimental study of our new algorithms as well as two existing non-graph approaches. Our experimental results both validate the effectiveness of our new techniques, and lead to a comprehensive comparison among ANNS algorithms on large scale datasets with a list of interesting findings.

There are no more papers matching your filters at the moment.